Tensorizing Neural Network

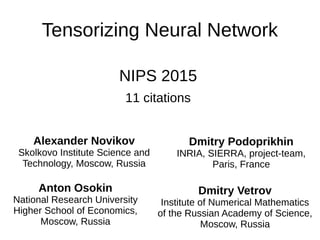

- 1. Tensorizing Neural Network NIPS 2015 11 citations Alexander Novikov Skolkovo Institute Science and Technology, Moscow, Russia Dmitry Podoprikhin INRIA, SIERRA, project-team, Paris, France Anton Osokin National Research University Higher School of Economics, Moscow, Russia Dmitry Vetrov Institute of Numerical Mathematics of the Russian Academy of Science, Moscow, Russia

- 2. Outline ● Background – Back-propagation ● Motivation ● Problem Formulation – Naïve method(related work) – Solution ● Experiment ● Conclusion

- 5. Background: Training Neural Network ● Back-propagation – an efficient way to compute gradient of objective function wrt all the parameters ● Gradient descent: ● Objective:

- 6. Background: Back-propagation ● Notation: layer output [Figure: network with input, layer1, layer2, layer3, output]

- 7. Background: Back-propagation ● want to calculate the gradient of the objective wrt all parameters [Figure: same network]

- 13. Background: Back-propagation ● should be calculated at layer 3 [Figure: same network]

- 17. – Each layer calculates the 3 gradients: ● objective wrt parameter ● output wrt input ● output wrt parameter Background: Back-propagation

- 18. ● output wrt input ● output wrt parameter – Each layer calculates the 3 gradients: propagate to prev layer Background: Back-propagation ● objective wrt parameter

- 20. Motivation ● What if neural network is too large to fit into memory?

- 21. Motivation ● What if neural network is too large to fit into memory? – distributed neural network ● distribute parameters ● challenge: training ● Approaches [Elastic Averaging SGD (NIPS'15)] – Model compression ● reduce required space

- 23. Problem Formulation ● Given: weight matrix of a fully-connected layer ● Goal: reduce space complexity ● Requirement: compatible with back-propagation

- 25. Naïve Method: Low-Rank SVD ● Reducing #parameters ● SVD decomposition: $W_{M \times N} = U_{M \times M}\, \Sigma_{M \times N}\, V^T_{N \times N}$

- 26. Naïve Method: Low-Rank SVD ● Reducing #parameters ● Take the R largest singular values and the corresponding singular vectors: $W_{M \times N} \approx U_{[:,1:R]}\, \Sigma_{[1:R,1:R]}\, V^T_{[1:R,:]}$

- 27. Naïve Method: Low-Rank SVD ● Reducing #parameters ● Group the first two factors: $W_{M \times N} \approx (U_{[:,1:R]}\, \Sigma_{[1:R,1:R]})\, V^T_{[1:R,:]}$

- 28. Naïve Method: Low-Rank SVD ● Reducing #parameters ● $W_{M \times N} \approx A B^T$, with $A = U_{[:,1:R]}\, \Sigma_{[1:R,1:R]}$ of size $M \times R$ and $B = V_{[:,1:R]}$ of size $N \times R$

- 29. Naïve Method: Low-Rank SVD ● Reducing #parameters ● $W_{M \times N} \approx A B^T$ ● space complexity: $MN \to R(M+N)$

- 30. Instead of updating W, update components Naïve Method: Low-Rank SVD By low-rank SVD

- 31. Naïve Method: Low-Rank SVD ● To integrate with back-propagation, we have to calculate 3 gradients: ● objective wrt parameter ● output wrt input ● output wrt parameter

- 32. Naïve Method: Low-Rank SVD ● To integrate with back-propagation, we have to calculate 3 gradients: ● objective wrt parameter ● output wrt input (these two don't change) ● output wrt parameter

- 33. Naïve Method: Low-Rank SVD ● To integrate with back-propagation, we have to calculate 3 gradients: ● objective wrt parameter ● output wrt input ● output wrt parameter

- 34. It works, but weight matrix might be very large → want to do more compression Naïve Method: Low-Rank SVD

- 36. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape

- 37. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● $W_{M \times N} \approx A_{M \times r}\, B_{r \times N}$

- 38. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape [Figure: $B_{r \times N}$ is factored again by low-rank SVD]

- 39. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape [Figure: the second factor of $B$ is factored again]

- 40. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape [Figure: the remaining factor gets thinner and thinner]

- 41. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● balanced vs. thin: given 2 matrices with the same #elements, which can be compressed more?

- 42. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● balanced (10 × 12) vs. thin (2 × 60): given 2 matrices with the same #elements, which can be compressed more?

- 43. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● tensor (4 × 6 × 5) vs. thin (2 × 60): how about reshaping into a higher-dimensional tensor?

- 44. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape => Tensor-Train Decomposition

- 45. Tensor-Train Decomposition ● If we want to TT-decompose a matrix W (8 × 10), we first need to reshape it into a tensor

- 46. Tensor-Train Decomposition ● unfold by the 1st dimension and apply low-rank SVD

- 48. Tensor-Train Decomposition ● apply low-rank SVD again: the first factor is saved, the remainder is reshaped

- 49. Tensor-Train Decomposition ● apply low-rank SVD again: the first factor is saved, the remainder is reshaped

- 50. Tensor-Train Decomposition ● apply low-rank SVD again: both factors are saved

- 53. Tensor-Train Decomposition ● fold into core tensors

- 54. Tensor-Train Decomposition ● Tensor-Train format ● TT-rank = rank used in the low-rank SVDs

- 55. Tensor-Train Decomposition ● Tensor-Train format ● [Oseledets et al., 2010] show that for an arbitrary tensor, its TT-format exists but is not unique

- 56. Tensor-Train Decomposition ● Space complexity: original $n^d$ vs. Tensor-Train format $n d r^2$

- 57. Tensor-Train Decomposition ● Space: TT-format $n d r^2$, canonical $n d r$, Tucker $n d r + r^d$ ● TT-format is robust compared to other tensor decomposition methods

- 58. Tensor-Train Layer represent as d-dimensional tensors by simple MATLAB reshape

- 59. Tensor-Train Layer represent as d-dimensional tensors TT-decompose

- 60. Tensor-Train Layer represent as d-dimensional tensors TT-decompose forward pass

- 61. Tensor-Train Layer Integrated into back-propagation

- 62. Tensor-Train Layer X Integrated into back-propagation

- 63. Tensor-Train Layer calculus rule X Integrated into back-propagation

- 64. Tensor-Train Layer X Integrated into back-propagation

- 65. Tensor-Train Layer X Integrated into back-propagation backward pass

- 66. Tensor-Train Layer ● Lower space requirement ● Faster operation

- 68. Experiment ● Small: MNIST FC 1024 x 10 FC 1024 x 1024 compress 1st layer

- 69. Experiment ● Small: MNIST FC 1024 x 10 FC 1024 x 1024 compress 1st layer better than naïve method

- 70. Experiment ● Small: MNIST FC 1024 x 10 FC 1024 x 1024 compress 1st layer balanced reshape works better

- 71. Experiment ● Large: ImageNet ILSVRC-2012, 1000 classes, 1.2 million training and 50,000 validation images ● compress the FC layers of a large CNN (VGG-16): 13 conv layers followed by FC layers 4096 x 25088, 4096 x 4096, 4096 x 1000

- 72. Experiment ● Large: ImageNet: replace the 3 FC layers of a large CNN (VGG-16)

- 73. Experiment ● Large: ImageNet: replace the 3 FC layers of a large CNN (VGG-16)

- 75. Conclusion ● An interesting approach to model compression that works very well ● Applying reshape adaptively in recursive low-rank SVD may work better?

- 76. Tensorizing Neural Network. The paper I am presenting today is Tensorizing Neural Network, published at NIPS 2015, with 11 citations.

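- 79. Background: Neural Network [Figure: network with input, layer1, layer2, output] A node in a neural network is called a neuron, and it is simply a function. Connecting one neuron after another is function composition, and composing many times builds a network with a complex output.

- 80. Background: Training Neural Network ● Back-propagation: an efficient way to compute the gradient of the objective function wrt all the parameters ● Gradient descent: ● Objective: To use a neural network, first define an objective function, for example making the sum of squared errors on the training set as small as possible. To find a good set of parameters, start by giving every parameter an arbitrary value, then adjust them a little at a time with gradient descent. Computing the gradient brings in the well-known algorithm back-propagation. Because a neural network is a composition of functions, it can be differentiated with the chain rule. Back-propagation analyzes which terms of the chain rule repeat and finds an order of computation in which each gradient reuses results that were already computed, saving the time of recomputing them. That order runs from the output layer toward the input, hence the name back-propagation.

- 81. Background: Back-propagation ● Notation: layer output [Figure: network with input, layer1, layer2, layer3, output] Before back-propagation, a word on notation: I write the output of layer l in this form; it is a vector.

- 82. Background: Back-propagation ● want to calculate all [Figure: same network] The goal is to compute the gradient of the objective function wrt every parameter. W_ij with superscript l denotes the j-th parameter of the i-th neuron in layer l.

- 83. Background: Back-propagation [Figure: same network] Start from the last layer. Layer 3 has only one neuron, so compute the gradient of its first parameter. By the chain rule, the gradient of the objective function wrt this parameter equals the gradient of the objective function wrt the neuron's output times the gradient of the neuron's output wrt this parameter. Since this is the last layer, there is no later term to reuse. The first factor is easy, because the h inside the objective function E is exactly this neuron's output: differentiate by bringing down the exponent 2. The second factor, the neuron's output wrt its parameter, is trivial, so I will not go through it.

- 84. Background: Back-propagation [Figure: same network] Now compute the gradient of the first parameter of the first neuron in layer 2. Again by the chain rule, it is the derivative of the objective function wrt this neuron's output times the derivative of the neuron's output wrt the parameter.

- 85. Background: Back-propagation [Figure: same network] The first factor can be expanded once more with the chain rule. Because layer 3 takes the layer-2 neuron as input, it can be written as the derivative of the objective function wrt the layer-3 neuron times the derivative of the layer-3 neuron wrt the layer-2 neuron.

- 88. Background: Back-propagation ● should be calculated at layer 3 [Figure: same network] The second factor, the derivative of the layer-3 neuron wrt this neuron, is just the derivative of the layer-3 neuron function wrt its input. So it is best computed at layer 3, together with the first factor, and then propagated to this layer. Once this layer receives them, it only needs to compute the third factor to assemble the gradient of the objective function wrt its own parameters.

- 89. Background: Back-propagation [Figure: same network] That example was deliberately simplified: the later layer had only one neuron. What does back-propagation pass along when the later layer has more than one neuron? Again compute the gradient of the first parameter of the first neuron in layer 1 and expand it with the chain rule.

- 90. Background: Back-propagation [Figure: same network] Expanding the first factor with the chain rule again gives something very similar to the layer-2 expansion, but with an extra summation over all neurons in the next layer. Where does this summation come from?

- 91. Background: Back-propagation ● calculus rule [Figure: same network] Looking one layer further, the next-layer neurons go through a linear transformation inside the layer-3 neuron, so they are summed. Imagine expanding the objective function down to that summation and then taking the gradient: by the basic calculus rule that the derivative of A+B is the sum of the derivatives of A and B, we get this formula.

- 92. Background: Back-propagation ● Each layer calculates the 3 gradients: ● objective wrt parameter ● output wrt input ● output wrt parameter. To summarize back-propagation: computing each layer's parameter gradients reuses the results of the next layer. Each layer needs three kinds of gradient: first, the objective function wrt the neuron output; second, the neuron wrt its input; and third, the neuron wrt its parameters.

- 93. Background: Back-propagation ● Each layer calculates the 3 gradients: ● objective wrt parameter ● output wrt input ● output wrt parameter ● propagate to prev layer. Once they are computed, propagate the first two to the previous layer, and the gradients of all parameters can be assembled efficiently.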
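The two chain-rule expansions described in the notes for slides 84 to 91 can be written out as follows. The slide formulas did not survive extraction, so the symbols here are assumptions: $E$ is the objective, $h^{(l)}_i$ is the output of neuron $i$ in layer $l$, and $W^{(l)}_{ij}$ is its $j$-th parameter. The first line is the single-neuron case (layer 3 has one neuron); the second line is the case with several neurons in the next layer, where the sum over $k$ appears.

```latex
\frac{\partial E}{\partial W^{(2)}_{1j}}
  = \frac{\partial E}{\partial h^{(3)}_{1}}
    \cdot \frac{\partial h^{(3)}_{1}}{\partial h^{(2)}_{1}}
    \cdot \frac{\partial h^{(2)}_{1}}{\partial W^{(2)}_{1j}}
\qquad
\frac{\partial E}{\partial W^{(1)}_{1j}}
  = \left( \sum_{k} \frac{\partial E}{\partial h^{(2)}_{k}}
    \cdot \frac{\partial h^{(2)}_{k}}{\partial h^{(1)}_{1}} \right)
    \cdot \frac{\partial h^{(1)}_{1}}{\partial W^{(1)}_{1j}}
```

In both lines the factors to the left of the last one are available at the later layer, which is why they are computed there and propagated backward.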

- 95. Motivation ● What if neural network is too large to fit into memory? What if a neural network has so many parameters that it does not fit in one machine's memory?

- 96. Motivation ● What if neural network is too large to fit into memory? ● distributed neural network: distribute parameters; challenge: training; approaches [Elastic Averaging SGD (NIPS'15)] ● Model compression: reduce required space. There are two directions, and they are complementary and can be used together. If the parameters do not fit on one machine, distribute them across several machines. The difficulty with this approach is that training must be distributed too; quite a lot of work studies distributed stochastic gradient descent, for example Elastic Averaging SGD, which 康軍 will present later. This paper belongs to the other approach, model compression: reduce the parameter space the neural network needs.

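- 98. Problem Formulation ● Given: weight matrix of a fully-connected layer ● Goal: reduce space complexity ● Requirement: compatible with back-propagation. This paper compresses the fully-connected layer, and mainly its weight matrix, because that takes the most space. The goal is a method that reduces the space W needs, under one condition: the method must preserve what the layer originally does. The layer output must still be computable, and when weights are updated during training, the layer must still take part in back-propagation with the layers before and after it.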

- 100. Naïve Method: Low-Rank SVD ● Reducing #parameters ● SVD decomposition: $W_{M \times N} = U_{M \times M}\, \Sigma_{M \times N}\, V^T_{N \times N}$. Low-rank SVD: first use SVD to factor the M-by-N matrix into three terms.

- 101. Naïve Method: Low-Rank SVD ● Reducing #parameters ● Take the R largest singular values and the corresponding singular vectors: $W_{M \times N} \approx U_{[:,1:R]}\, \Sigma_{[1:R,1:R]}\, V^T_{[1:R,:]}$. Each singular value has corresponding singular vectors; keep the components with the R largest singular values.

- 102. Naïve Method: Low-Rank SVD ● Reducing #parameters ● $W_{M \times N} \approx (U_{[:,1:R]}\, \Sigma_{[1:R,1:R]})\, V^T_{[1:R,:]}$. Merge the first two factors.

- 103. Naïve Method: Low-Rank SVD ● Reducing #parameters ● $W_{M \times N} \approx A B^T$, with $A$ of size $M \times R$ and $B$ of size $N \times R$. The result is a product of two matrices.

- 104. Naïve Method: Low-Rank SVD ● Reducing #parameters ● space complexity: $MN \to R(M+N)$. The original W needs M*N storage; if we store only the components A and B, we need only R(M+N), so the required space shrinks.
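A minimal NumPy sketch of the truncation on slides 100 to 104, assuming the naming $W \approx A B^T$ with $A$ of size $M \times R$ and $B$ of size $N \times R$. The helper name `low_rank_factors` and the matrix sizes are illustrative choices, not something taken from the talk or the paper.

```python
import numpy as np

def low_rank_factors(W, R):
    """Return A (M x R) and B (N x R) such that W is approximated by A @ B.T."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :R] * s[:R]   # fold the R largest singular values into U
    B = Vt[:R, :].T        # first R right singular vectors, stored as columns
    return A, B

rng = np.random.default_rng(0)
M, N, R = 64, 48, 8
# Build a matrix of exact rank R so that the truncation loses nothing.
W = rng.standard_normal((M, R)) @ rng.standard_normal((R, N))

A, B = low_rank_factors(W, R)
params_full = M * N                 # storage for W itself
params_low_rank = A.size + B.size   # R * (M + N)
max_err = float(np.max(np.abs(W - A @ B.T)))
```

For a general W the truncation is only an approximation; the exact-rank test matrix is used here just to make the reconstruction check unambiguous.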

- 105. Naïve Method: Low-Rank SVD ● Instead of updating W, update the components obtained by low-rank SVD. How does this combine with training? Gradient descent originally updates W, but W is no longer stored, so we need the gradient of the objective function wrt the components.

- 106. Naïve Method: Low-Rank SVD ● To integrate with back-propagation, we have to calculate 3 gradients: ● objective wrt parameter ● output wrt input ● output wrt parameter. Compute it with backprop; recall that each layer computes three kinds of gradient.

- 107. Naïve Method: Low-Rank SVD ● To integrate with back-propagation, we have to calculate 3 gradients: ● objective wrt parameter ● output wrt input ● output wrt parameter ● doesn't change. The first two stay the same.

- 108. Naïve Method: Low-Rank SVD ● To integrate with back-propagation, we have to calculate 3 gradients: ● objective wrt parameter ● output wrt input ● output wrt parameter. Only the third, the gradient of the output wrt the components A and B, has to be derived; my derivation looks like this.
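The formula shown on slide 108 did not survive extraction. As a stand-in, here is one standard way to write the gradients for a factored layer, under stated assumptions: the layer computes $y = A B^T x + b$ with $A \in \mathbb{R}^{M \times R}$ and $B \in \mathbb{R}^{N \times R}$, and $\delta = \partial E / \partial y$ is the gradient arriving from the next layer. This is a sketch and may not match the speaker's own derivation.

```latex
z = B^{T} x, \qquad y = A z + b, \qquad \delta = \frac{\partial E}{\partial y}
\\[4pt]
\frac{\partial E}{\partial A} = \delta\, z^{T}, \qquad
\frac{\partial E}{\partial B} = x\, (A^{T} \delta)^{T}, \qquad
\frac{\partial E}{\partial x} = B\, A^{T} \delta
```

The shapes are $M \times R$, $N \times R$, and $N$ respectively, matching $A$, $B$, and $x$, and the dense $M \times N$ matrix $W$ is never formed.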

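- 109. Naïve Method: Low-Rank SVD ● It works, but the weight matrix might be very large → want to do more compression. So low-rank SVD achieves compression: it reduces the parameters while preserving what the layer does. This paper wants a more efficient compression that saves even more space.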

- 111. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape. The main idea is to apply low-rank SVD recursively.

- 112. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● $W_{M \times N} \approx A_{M \times r}\, B_{r \times N}$. W originally needs M*N storage; after decomposing it into two matrices, it needs only (M+N) times the rank. Decomposing A or B further saves even more space.

- 113. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape [Figure: $B_{r \times N}$ is factored again]. For example, apply low-rank SVD once more to B.

- 114. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape [Figure: the second factor is factored again]. Then apply low-rank SVD to the second matrix of that decomposition, and keep going.

- 115. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● gets thinner and thinner. You will notice that the second matrix gets thinner and thinner. What happens when it does?

- 116. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● balanced vs. thin: given 2 matrices with the same #elements, which can be compressed more? A question for everyone: take two matrices of the same size, one roughly square like the one on the left and one very thin like the one on the right. If we apply low-rank SVD with the same rank to both, which one saves more space?

- 117. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● balanced (10 × 12) vs. thin (2 × 60): given 2 matrices with the same #elements, which can be compressed more? For example, with rank 1 for both 10*12 and 2*60, the one on the left ends up much smaller. So everyone agrees intuitively that the balanced one compresses more? This motivates the idea of reshaping during recursive low-rank SVD whenever the matrix gets too thin.
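The comparison on slide 117 can be checked with a few lines of arithmetic. The sketch below counts stored entries for a rank-R factorization using the R(M+N) rule from the naïve method; the function name is an illustrative choice.

```python
def low_rank_params(rows, cols, rank):
    """Entries stored by a rank-`rank` factorization of a rows x cols matrix."""
    return rank * (rows + cols)

original = 10 * 12                      # both shapes hold 120 entries
balanced = low_rank_params(10, 12, 1)   # 10 + 12 = 22 entries
thin = low_rank_params(2, 60, 1)        # 2 + 60 = 62 entries
```

At the same rank, the balanced shape stores 22 entries and the thin one 62, out of 120 in both cases.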

- 118. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape ● tensor (4 × 6 × 5) vs. thin (2 × 60): how about reshaping into a higher-dimensional tensor? Another question: if reshaping is not restricted to the 2-D plane and we allow reshaping into a tensor with 3 or more dimensions, is that even more balanced, and can it compress even more?

- 119. Main Idea 1) recursively apply low-rank SVD 2) if the matrix is too thin => reshape => Tensor-Train Decomposition. So, as we will see, this paper first reshapes the matrix into a tensor and then uses a method called Tensor-Train decomposition, which is essentially recursive low-rank SVD.

- 120. Tensor-Train Decomposition ● If we want to TT-decompose a matrix W (8 × 10), we first need to reshape it into a tensor. To decompose a matrix, first reshape it into a high-dimensional tensor, for example reshape 8*10 into a 5-dimensional tensor of size 2*2*2*2*5. Then Tensor-Train decomposition can be applied.

- 121. Tensor-Train Decomposition ● unfold by the 1st dimension and apply low-rank SVD. Tensor-Train decomposition first unfolds W along mode 1 into a matrix and applies low-rank SVD to that matrix.

- 122. Tensor-Train Decomposition ● first factor saved, remainder reshaped. Save the first component. We want to continue with low-rank SVD on the second one, but it has become thin, so reshape it first. The reshape in Tensor-Train decomposition has a fixed form: it pulls the dimension of the next mode down into the rows.

- 123. Tensor-Train Decomposition ● apply low-rank SVD again: first factor saved, remainder reshaped. After the reshape, apply low-rank SVD once more, save the first factor, then pull the next dimension down and reshape.

- 124. Tensor-Train Decomposition ● apply low-rank SVD again: first factor saved, remainder reshaped. Repeat.

- 125. Tensor-Train Decomposition ● apply low-rank SVD again: both factors saved. Stop once the dimension of every mode has been pulled down once.

- 126. Tensor-Train Decomposition ● can approximate. The 5 core matrices obtained this way can reassemble the original W. Looking closely at these 5 matrices, you will notice that

- 127. Tensor-Train Decomposition ● can approximate. the dimension of every mode of the original tensor is preserved in the corresponding matrix.

- 128. Tensor-Train Decomposition ● fold into core tensors. So each matrix can be folded into a 3-mode tensor whose third mode has the size of the tensor's corresponding dimension; the first and the last are special and stay matrices. An element of the tensor then has a concise expression: a row of the first matrix, times the corresponding slice of the 2nd core tensor, times the corresponding slice of the 3rd core tensor, times the corresponding slice of the 4th, times the corresponding column of the last.

- 129. Tensor-Train Decomposition ● Tensor-Train format ● TT-rank = rank used in the low-rank SVDs. This is the Tensor-Train format: every element factors into a product of d matrices. Note that the rank of the Tensor-Train format is defined as the ranks chosen in the low-rank SVDs, that is r1, r2, r3, r4, r5.
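The procedure on slides 120 to 129 (unfold, low-rank SVD, save the first factor, pull the next mode down, repeat) can be sketched in NumPy as below. This is a minimal illustration, not the paper's or the TT-Toolbox's implementation. Assumptions: the names `tt_svd` and `tt_element` are made up for this sketch, cores are stored with shape `(r_prev, n_k, r_next)`, and reshapes use NumPy's row-major order, which differs from MATLAB's column-major order.

```python
import numpy as np

def tt_svd(tensor, max_rank):
    """Split `tensor` into TT cores of shape (r_prev, n_k, r_next)."""
    shape = tensor.shape
    cores, r_prev = [], 1
    mat = np.asarray(tensor, dtype=float)
    for n_k in shape[:-1]:
        # Pull the next mode into the rows: (r_prev * n_k) x (all remaining modes).
        mat = mat.reshape(r_prev * n_k, -1)
        U, s, Vt = np.linalg.svd(mat, full_matrices=False)
        r = min(max_rank, len(s))
        cores.append(U[:, :r].reshape(r_prev, n_k, r))  # the "saved" factor
        mat = s[:r, None] * Vt[:r, :]                   # remainder, split next round
        r_prev = r
    cores.append(mat.reshape(r_prev, shape[-1], 1))     # last core
    return cores

def tt_element(cores, index):
    """One tensor entry as a product of core slices G1[i1] @ G2[i2] @ ... @ Gd[id]."""
    out = np.ones((1, 1))
    for core, i in zip(cores, index):
        out = out @ core[:, i, :]
    return float(out[0, 0])

rng = np.random.default_rng(0)
W = rng.standard_normal((8, 10))
T = W.reshape(2, 2, 2, 2, 5)       # the 8 x 10 -> 2*2*2*2*5 example from slide 120
cores = tt_svd(T, max_rank=16)     # rank cap large enough that nothing is truncated
core_shapes = [c.shape for c in cores]
```

With the rank cap this loose the decomposition is exact, so `tt_element(cores, idx)` matches `T[idx]` up to rounding; lowering `max_rank` turns it into an approximation with fewer stored entries.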

- 130. Tensor-Train Decomposition ● Tensor-Train format ● [Oseledets et al., 2010] show that for an arbitrary tensor, its TT-format exists but is not unique. A natural question: given a tensor and a desired set of ranks, can we find a corresponding Tensor-Train format? Oseledets et al., who invented Tensor-Train, proved that one can always be found, but it is not unique.

- 131. Tensor-Train Decomposition ● Space complexity: original $n^d$ vs. Tensor-Train format $n d r^2$. Now the space complexity. If mode k of a tensor has dimension n_k (take every n_k equal to n for simplicity), the original storage is the product of the d mode dimensions, n^d. Written in TT-format, only the core tensors are stored: each core needs the dimension n times the rank squared, r^2, and there are d cores, so the total is ndr^2. Storage drops from n^d to ndr^2.
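To get a feel for the two counts on slide 131, the sketch below evaluates $n^d$ and $n d r^2$ for one illustrative setting. The function names and the values n = 4, d = 5, r = 2 are arbitrary choices for this example, and $n d r^2$ is an upper bound because the first and last cores have a boundary rank of 1.

```python
def dense_entries(n, d):
    """Entries of a d-mode tensor with every mode of size n."""
    return n ** d

def tt_entries(n, d, r):
    """Upper bound on TT storage: d cores of at most r * n * r entries each."""
    return d * n * r * r

dense = dense_entries(4, 5)   # 4**5 = 1024
tt = tt_entries(4, 5, 2)      # 5 * 4 * 2 * 2 = 80
```

The dense count grows exponentially in d, while the TT count grows linearly in d for fixed n and r.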

- 132. Tensor-Train Decomposition ● Space: TT-format $n d r^2$, canonical $n d r$, Tucker $n d r + r^d$ ● TT-format is robust compared to other tensor decomposition methods. Compare with other common tensor decompositions. Canonical (CP) takes the outer product of d vectors, each as long as the mode dimension, to form a rank-1 tensor, and sums r of them, so it needs only ndr entries, fewer than TT-format. But CP is not SVD-based and is not robust: for a desired rank r, CP is not guaranteed to find the best low-rank tensor, so it does not suit this paper. Tucker is SVD-based like TT and looks similar to CP, but it has an extra d-mode core tensor that adds r^d entries. When d is large, the space it needs grows exponentially, which is why this paper does not use Tucker even though it is SVD-based.

- 133. Tensor-Train Layer ● represent as d-dimensional tensors by a simple MATLAB reshape. Now for what the paper actually does: compress the fully-connected layer y=Wx+b. First reshape W, x, and b into d-mode tensors with the MATLAB reshape command.

- 134. Tensor-Train Layer ● represent as d-dimensional tensors ● TT-decompose. Then express W, X, and B in TT-format, so that each element of y can be written like this.

- 135. Tensor-Train Layer ● represent as d-dimensional tensors ● TT-decompose ● forward pass. The forward pass computes all elements of y. The original time complexity is M*N; with TT-format there is a fast matrix-by-vector algorithm with complexity $O(d r^2 m \max\{M, N\})$.
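A minimal sketch of a matrix-by-vector product that never builds the dense W, to illustrate the forward pass on slide 135. It is written from the general TT-matrix definition and is not the paper's or the TT-Toolbox's code, and no claim is made that it attains the complexity quoted on the slide. Assumptions: each core has shape `(r_prev, m_k, n_k, r_next)`, indices are flattened in row-major order, and `tt_to_dense` exists only to check the result.

```python
import numpy as np

def tt_matvec(cores, x):
    """Compute y = W x with W given by TT-matrix cores (r_prev, m_k, n_k, r_next)."""
    state = np.asarray(x, dtype=float).reshape(1, -1)
    for core in cores:
        r_prev, m_k, n_k, r_next = core.shape
        state = state.reshape(r_prev, n_k, -1)
        # Contract the incoming rank and input mode n_k; append output mode m_k.
        state = np.einsum('amnb,anc->bcm', core, state)
        state = state.reshape(r_next, -1)
    return state.reshape(-1)

def tt_to_dense(cores):
    """Build the dense M x N matrix, used here only for verification."""
    full = np.ones((1, 1, 1))  # (rows so far, cols so far, rank)
    for core in cores:
        full = np.einsum('MNa,amnb->MmNnb', full, core)
        M, m, N, n, b = full.shape
        full = full.reshape(M * m, N * n, b)
    return full[:, :, 0]

rng = np.random.default_rng(0)
# Output modes (2, 4, 2) give M = 16; input modes (3, 2, 5) give N = 30.
core_shapes = [(1, 2, 3, 2), (2, 4, 2, 3), (3, 2, 5, 1)]
cores = [rng.standard_normal(s) for s in core_shapes]
x = rng.standard_normal(30)

y_tt = tt_matvec(cores, x)
W_dense = tt_to_dense(cores)
tt_params = sum(c.size for c in cores)   # 12 + 48 + 30 = 90
dense_params = W_dense.size              # 16 * 30 = 480
```

Each loop iteration touches one core and a partially contracted copy of x, so the memory used stays far below the M*N entries of the dense matrix when the ranks are small.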

- 136. Tensor-Train Layer ● Integrated into back-propagation. How does this combine with back-propagation? Derive the gradient of one slice of a core tensor.

- 137. Tensor-Train Layer ● Integrated into back-propagation. Another benefit of the Tensor-Train format: the slices of the cores before the k-th one, starting from G1, multiply into a row vector, and the slices after the k-th multiply into a column vector.

- 138. Tensor-Train Layer ● Integrated into back-propagation ● calculus rule. A Jacobian matrix of this form can be computed with matrix calculus.

- 139. Tensor-Train Layer ● Integrated into back-propagation. So the derivative of one element of y wrt one slice looks like this.

- 140. Tensor-Train Layer ● Integrated into back-propagation ● backward pass. For the backward pass, computing the gradient of W originally takes MN operations. The time complexity the paper gives for computing the gradients of all core tensors is $O(d^2 r^4 m \max\{M, N\})$. I tried to reverse-engineer it and derive it myself, but my result differs from theirs.

- 141. Tensor-Train Layer ● Lower space requirement ● Faster operation. To summarize: if r is chosen small enough, the proposed TT-layer saves more space than the naïve low-rank SVD, and the forward and backward passes may also take less time than the original layer.

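- 143. Experiment ● Small: MNIST ● FC 1024 x 1024, FC 1024 x 10 ● compress 1st layer. The first experiment is a small one on the MNIST handwritten digits. The x-axis is the number of parameters after compression, which represents the compression rate, and the points in the plot come from choosing different ranks. The more we compress (the smaller x is), the higher the error.

- 144. Experiment ● Small: MNIST ● FC 1024 x 1024, FC 1024 x 10 ● compress 1st layer ● better than naïve method. How does it compare with the naïve method? At the same compression rate the TT-layer performs much better than naïve, by about one order of magnitude.

- 145. Experiment ● Small: MNIST ● FC 1024 x 1024, FC 1024 x 10 ● compress 1st layer ● balanced reshape works better. The different colors are different reshape strategies; TT-layers reshaped to be more balanced perform better.

- 146. Experiment ● Large: ImageNet ILSVRC-2012, 1000 classes, 1.2 million training and 50,000 validation images ● compress the FC layers of a large CNN (VGG-16): 13 conv layers followed by FC layers 4096 x 25088, 4096 x 4096, 4096 x 1000. The next experiment asks whether the benefits of the TT-layer, for example in layer operations, become more visible at a larger scale. The dataset is ImageNet, and the architecture, VGG-16, was the best-performing CNN on this dataset when the paper was written in 2015. The experiment replaces VGG's fully-connected layers with TT-layers and looks at the compression rate and the running time.

- 147. Experiment ● Large: ImageNet: replace the 3 FC layers of a large CNN (VGG-16). The result: replacing the first layer with a TT-layer whose Tensor-Train ranks are all 4 gives the error rate closest to the uncompressed network. As for compression, the second column is the compression rate of that TT-layer: it is compressed about 50,000 times, which is striking, and the parameters of the whole CNN are reduced by a factor of 3.9.

- 148. Experiment ● Large: ImageNet: replace the 3 FC layers of a large CNN (VGG-16). Finally, is the TT-layer faster to compute? We measure the forward-pass time of the replaced layer. The first column is the time for a forward pass of 1 image through this layer: on CPU the original layer takes 16.1 ms and the TT-layer only 1.2 ms. What seems odd is that the TT-layer is much faster than FC for one image but about the same for 100 images. I looked up the NIPS reviews of this paper; a reviewer asked about this, and the authors replied that the TT-toolbox implementation they used is quite inefficient.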

- 150. Conclusion ● An interesting approach to model compression that works very well ● Applying reshape adaptively in recursive low-rank SVD may work better? We learned an interesting model compression method. Perhaps, when applying low-rank SVD recursively, reshaping adaptively instead of in a fixed way could compress even more.