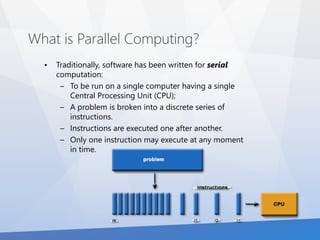

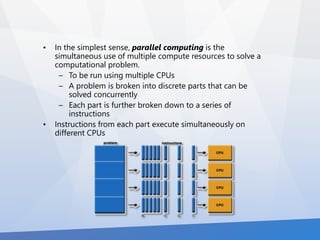

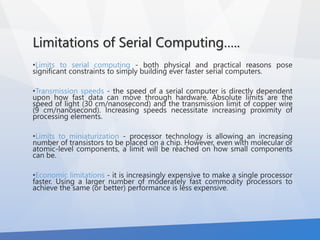

The document provides an overview of parallel computing, explaining its definition, advantages over serial computing, and its applications across various domains. It discusses limitations of serial computing, such as transmission speeds and economic challenges, alongside the benefits of using multiple CPUs to save time and solve larger problems. The document also highlights Moore's Law and the importance of parallelism in enhancing computational power, memory access, and data communication.

Moore's Law

Moore's law states [1965]:

> "The complexity for minimum component costs has increased at a rate of roughly a factor of two per year. Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years. That means by 1975, the number of components per integrated circuit for minimum cost will be 65,000."

![Moore's Law slide](https://image.slidesharecdn.com/lastfinished-141214114447-conversion-gate02/85/Introduction-to-Parallel-Computing-10-320.jpg)
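The arithmetic behind the 65,000 figure in the quote can be checked with a short sketch. It assumes a starting point of about 64 components per integrated circuit in 1965; that number does not appear in the slide and is used here only for illustration. The function name `projected_components` and its parameters are likewise hypothetical.

```python
def projected_components(start_count, start_year, target_year,
                         doubling_period_years=1.0):
    """Project a component count forward under steady doubling.

    start_count is an assumed baseline, not a value from the slide.
    """
    # Number of doublings that fit between the two years.
    doublings = (target_year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


if __name__ == "__main__":
    # Assumed baseline: roughly 64 components per chip in 1965.
    estimate = projected_components(64, 1965, 1975)
    print(f"Projected components in 1975: {estimate:,.0f}")
```

Ten doublings multiply the baseline by 2^10 = 1024, so an assumed 64 components grows to 65,536, in line with the rounded 65,000 quoted above. Changing `doubling_period_years` shows how sensitive the projection is to the doubling rate.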