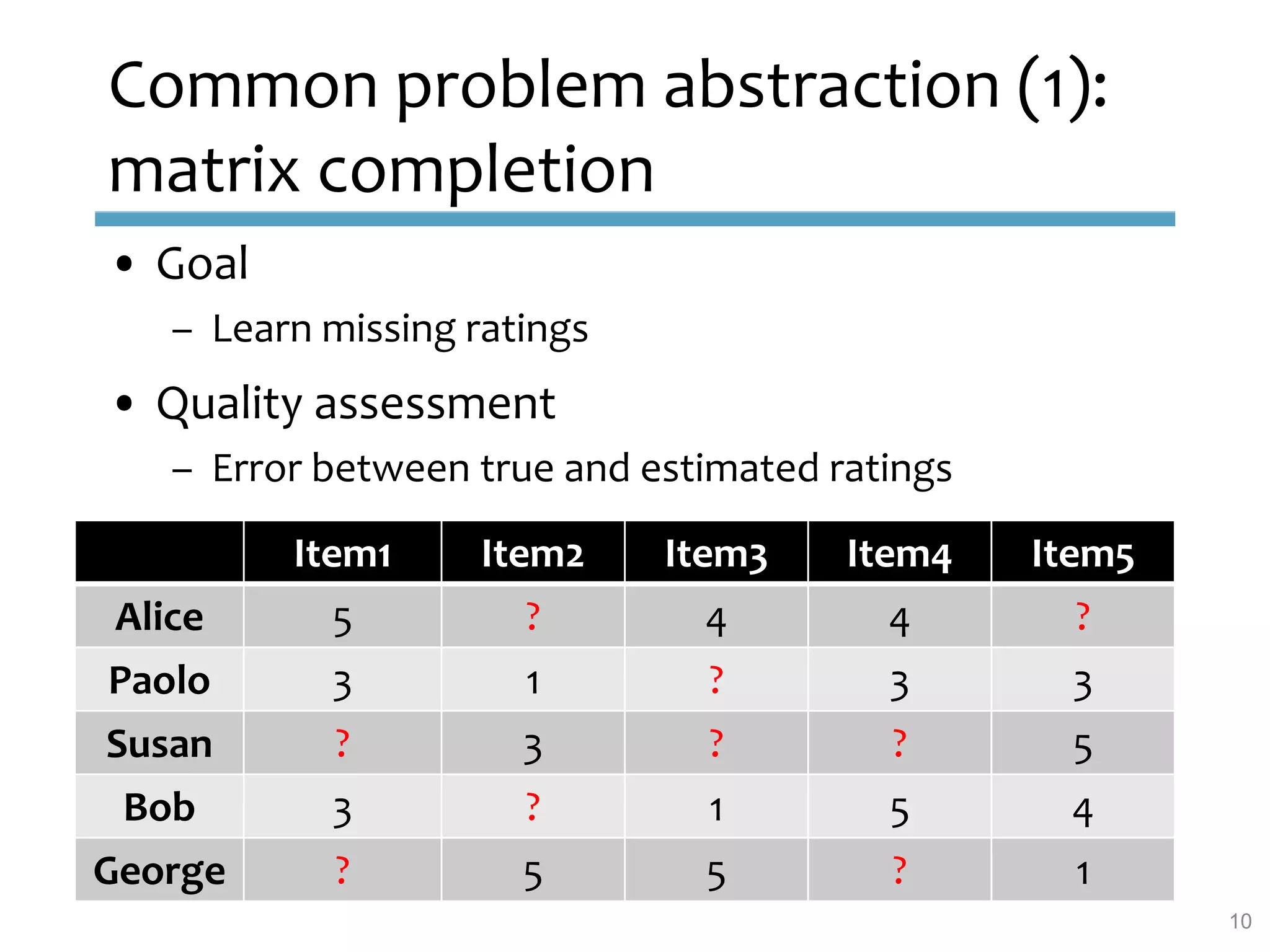

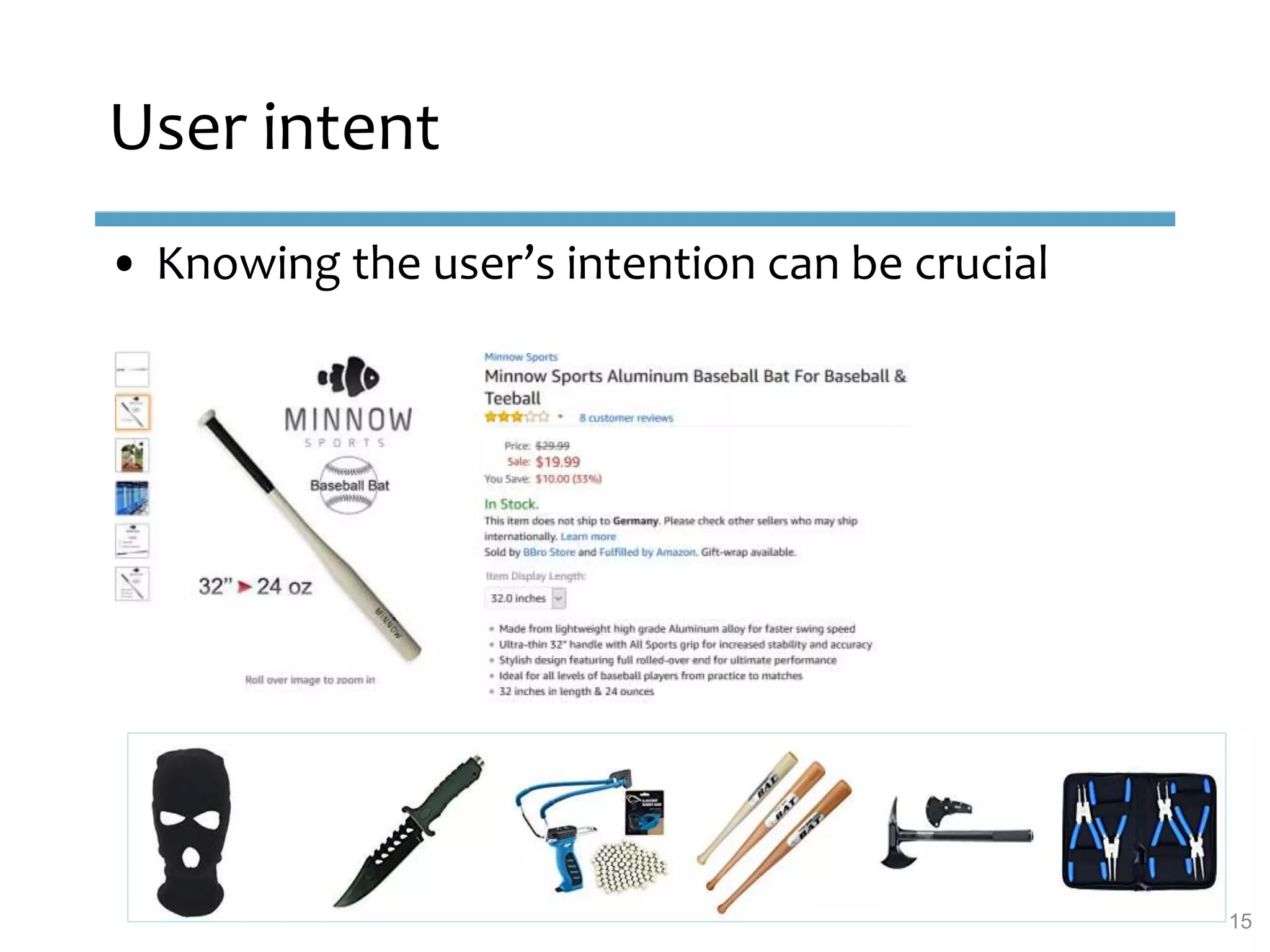

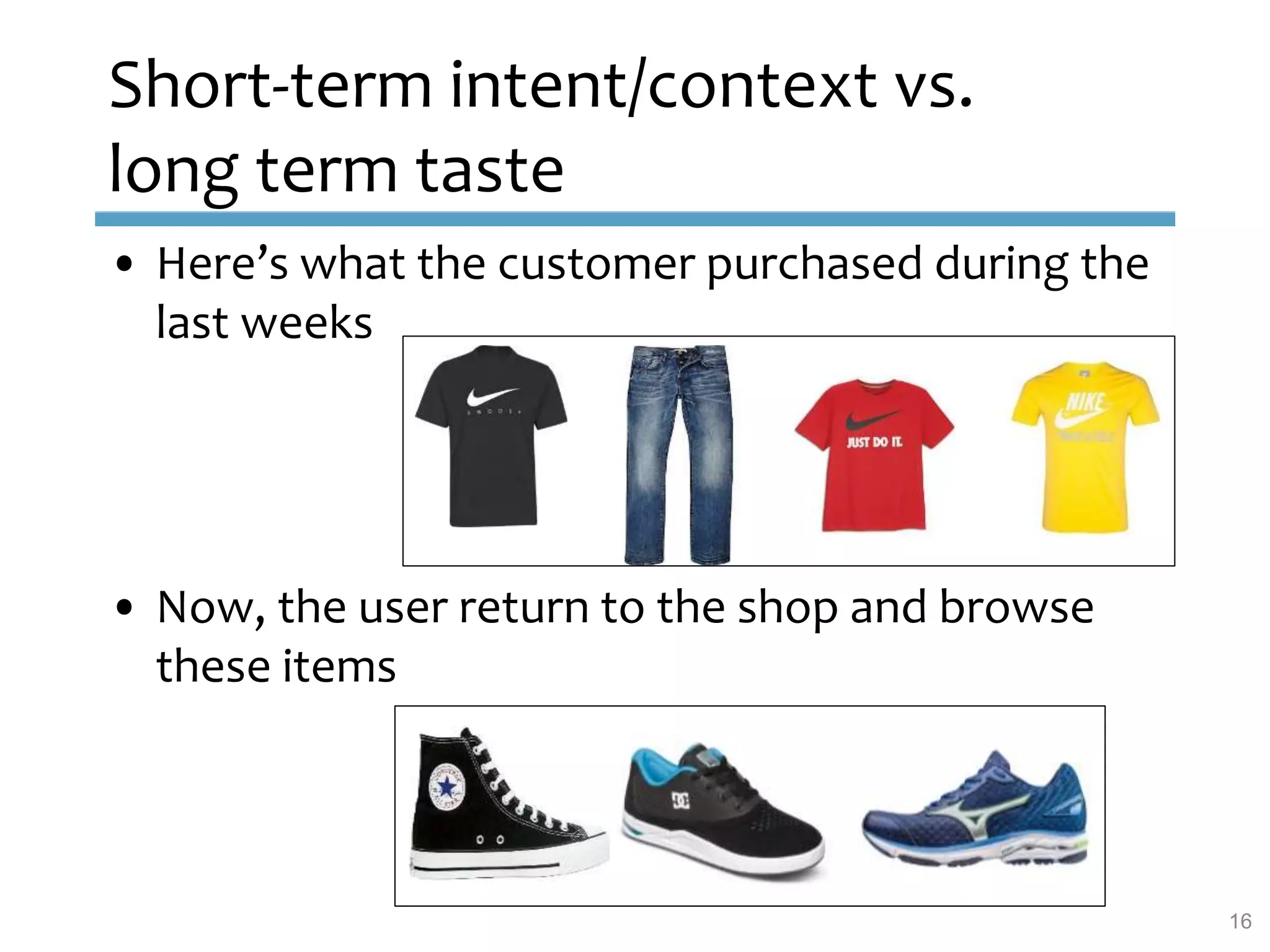

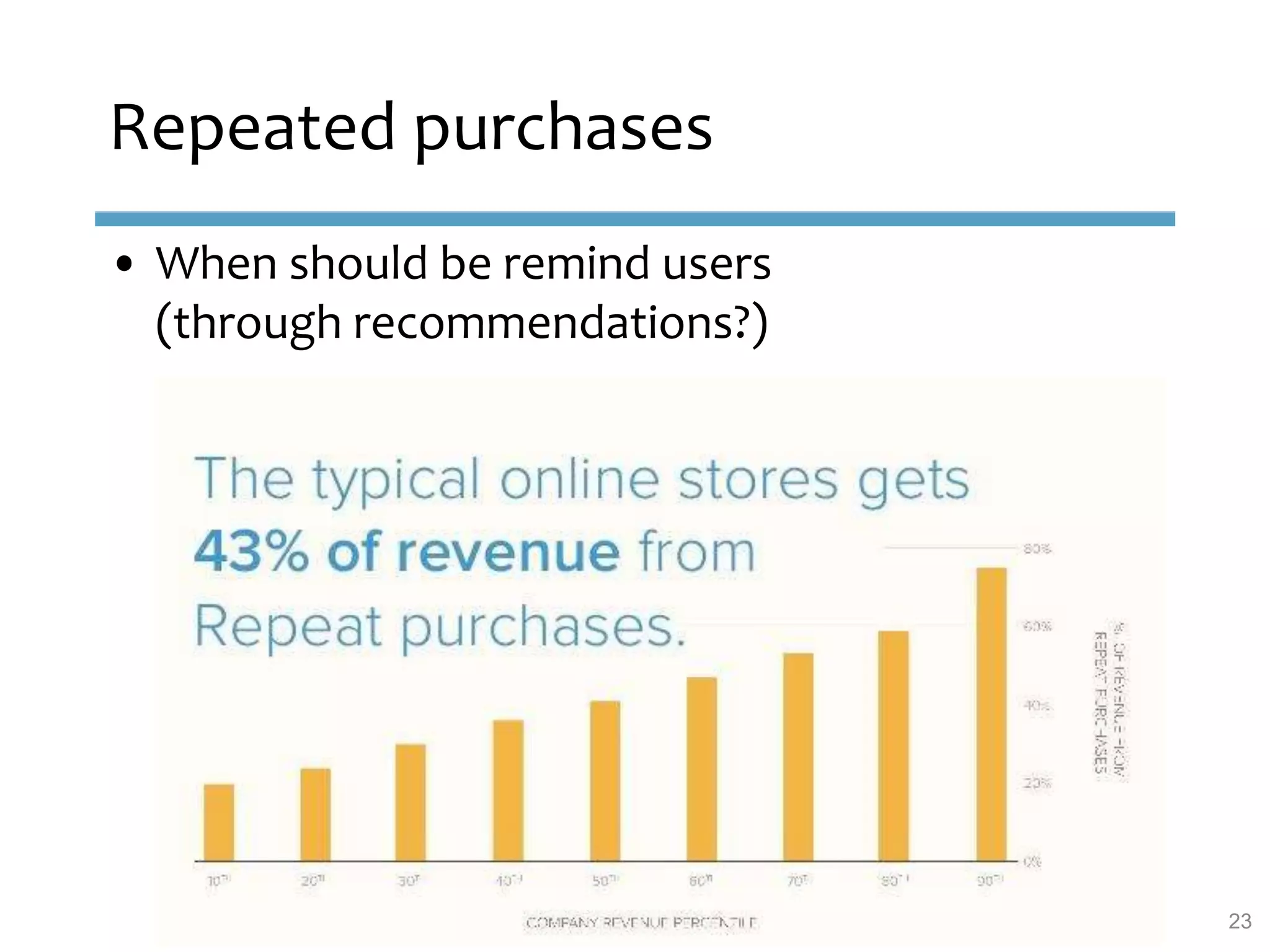

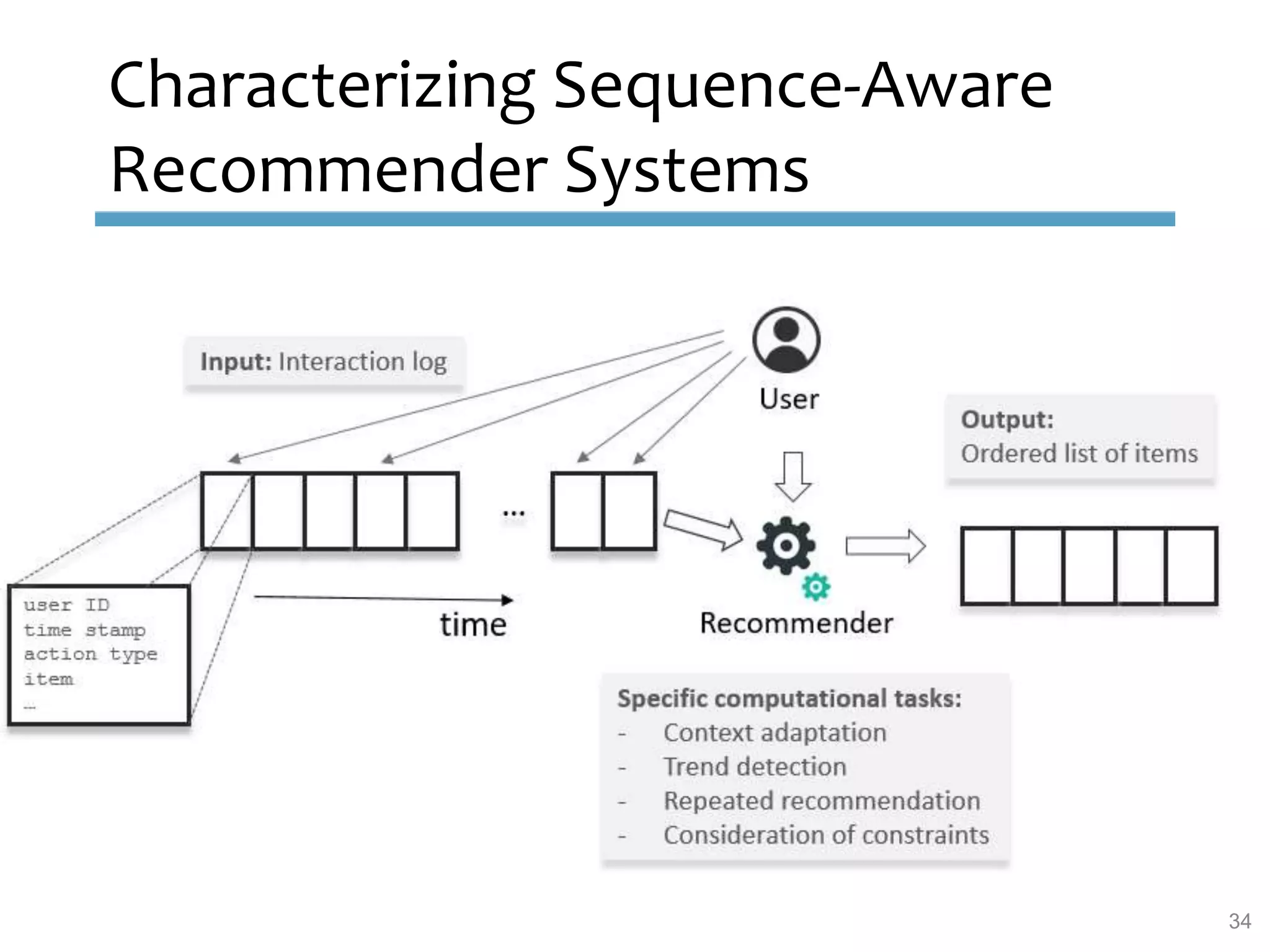

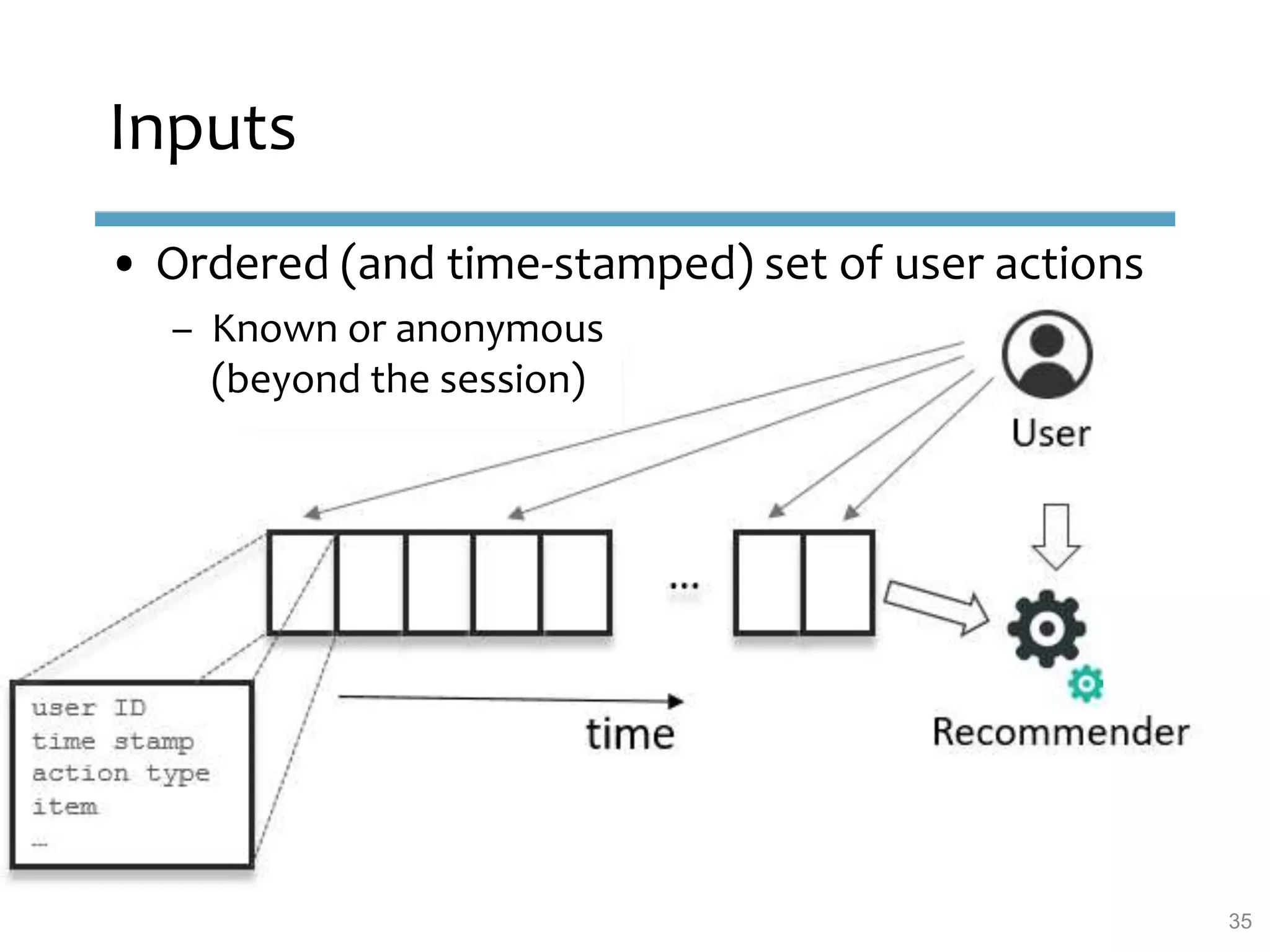

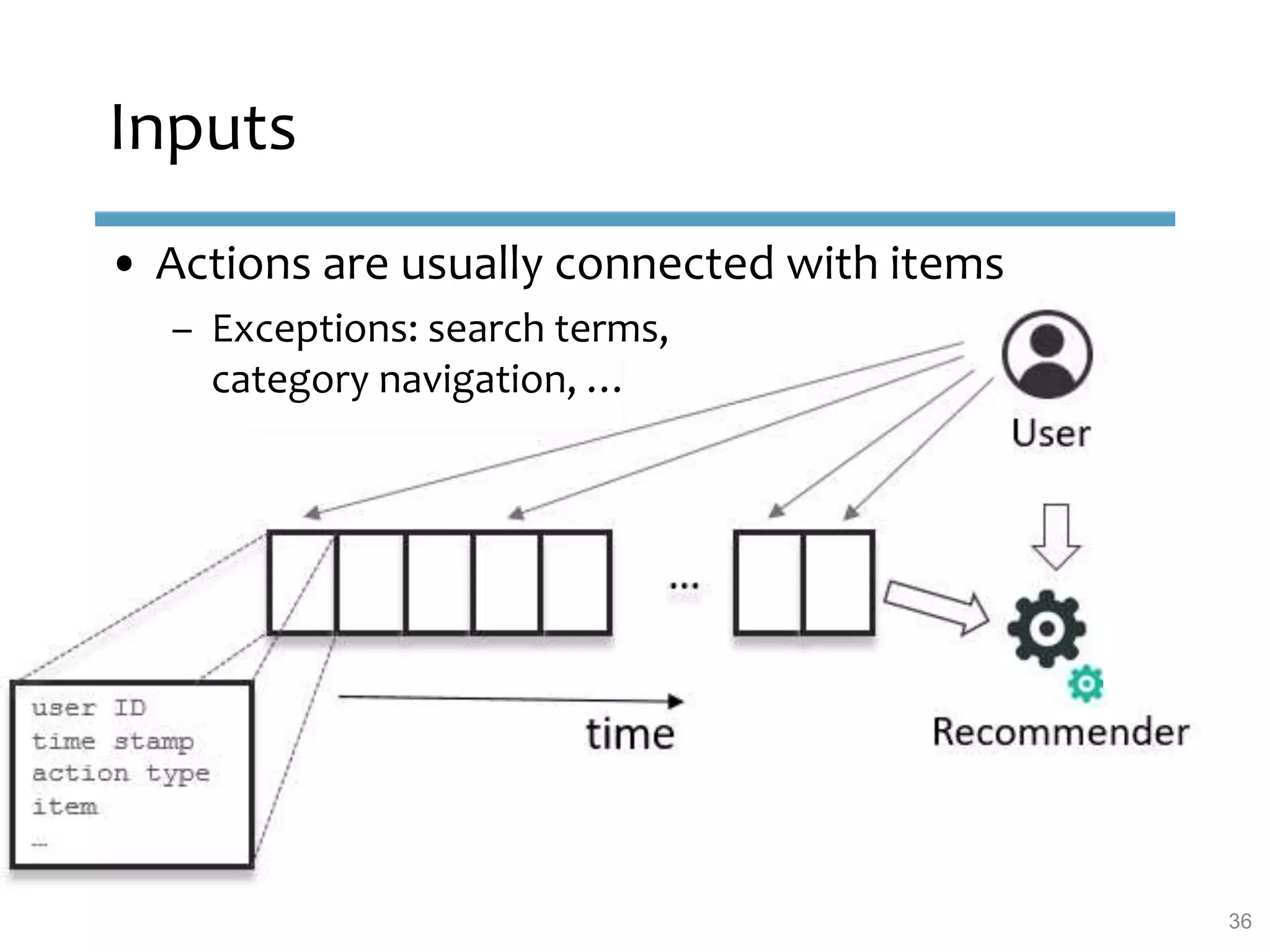

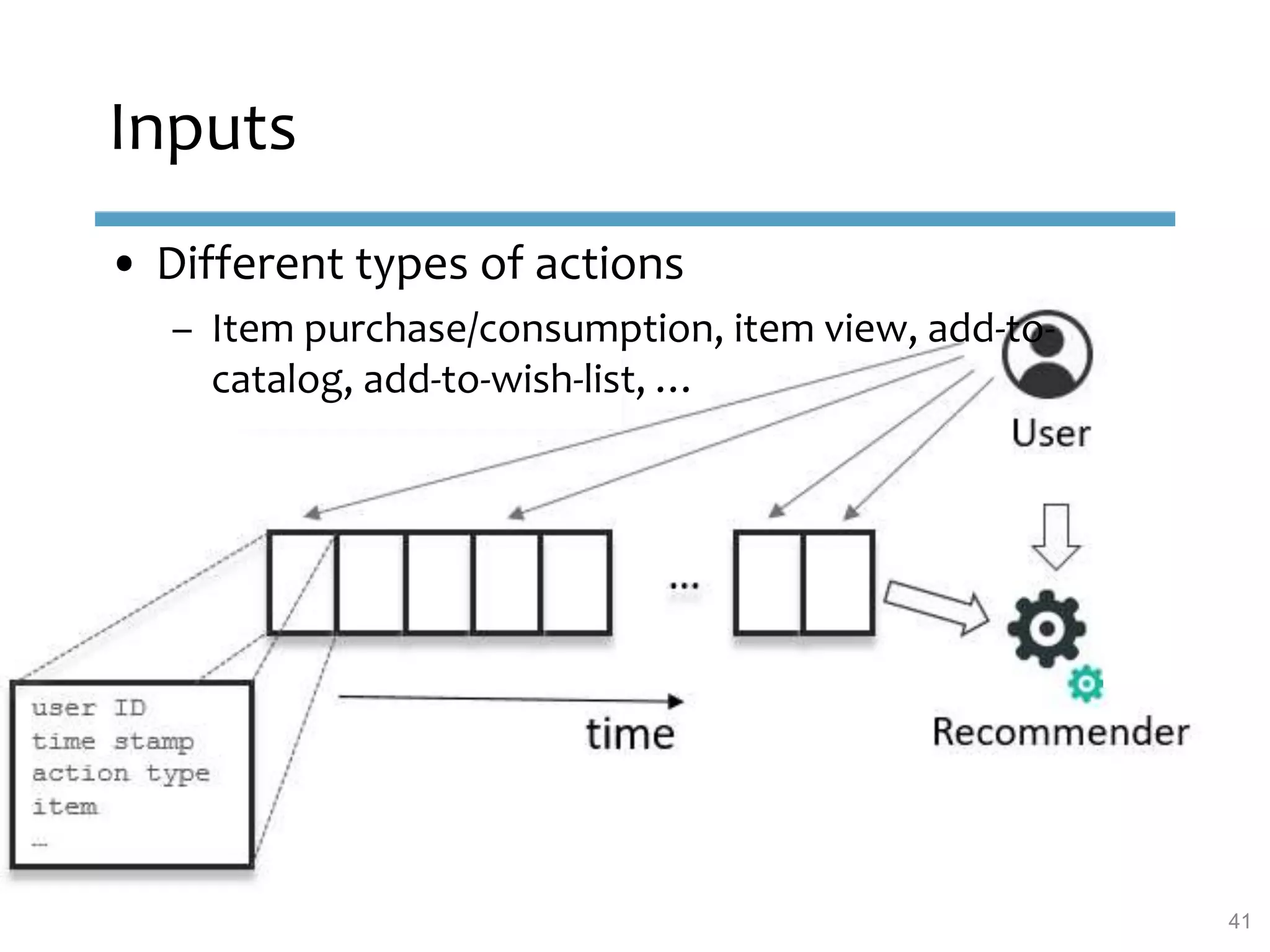

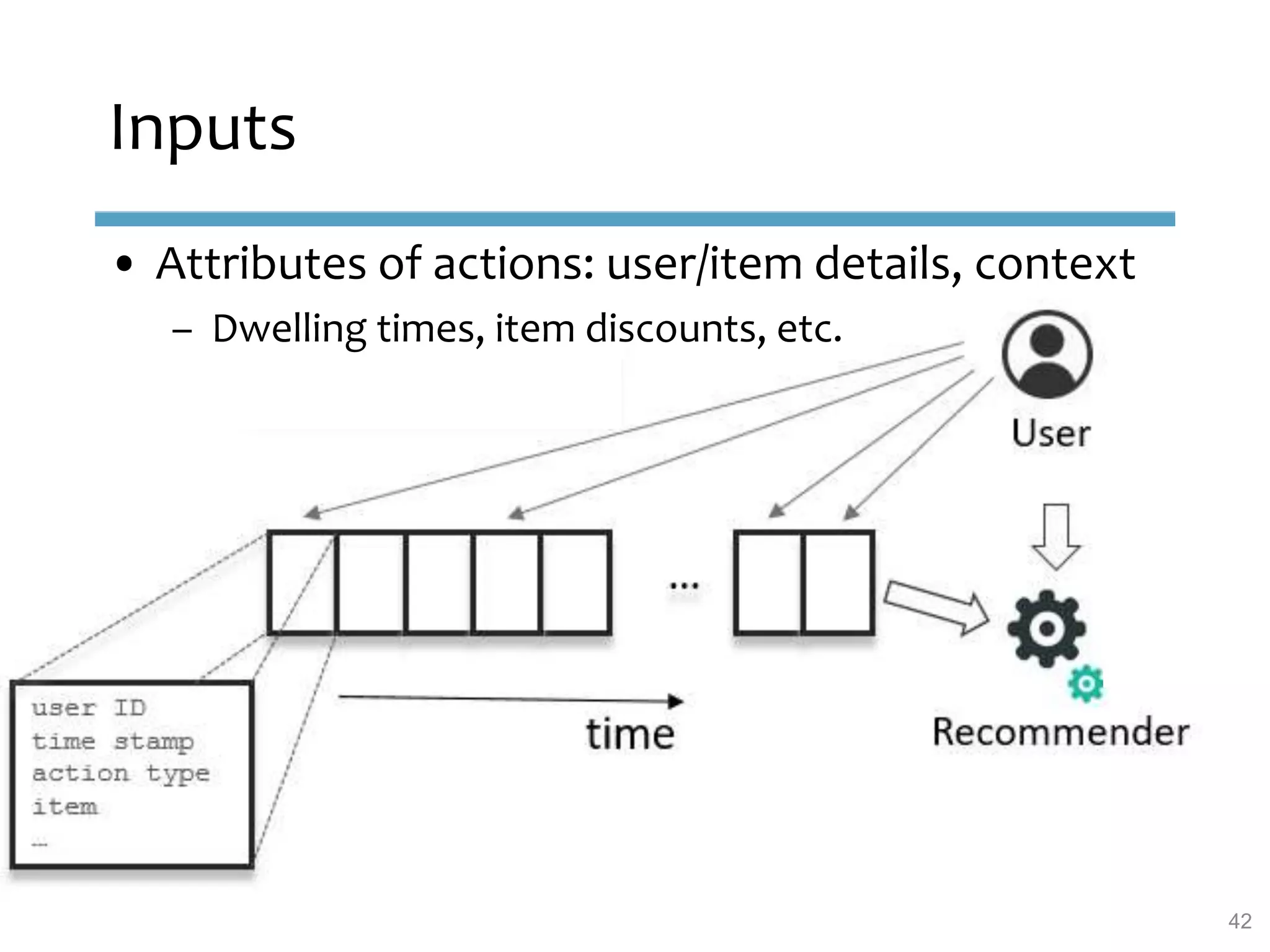

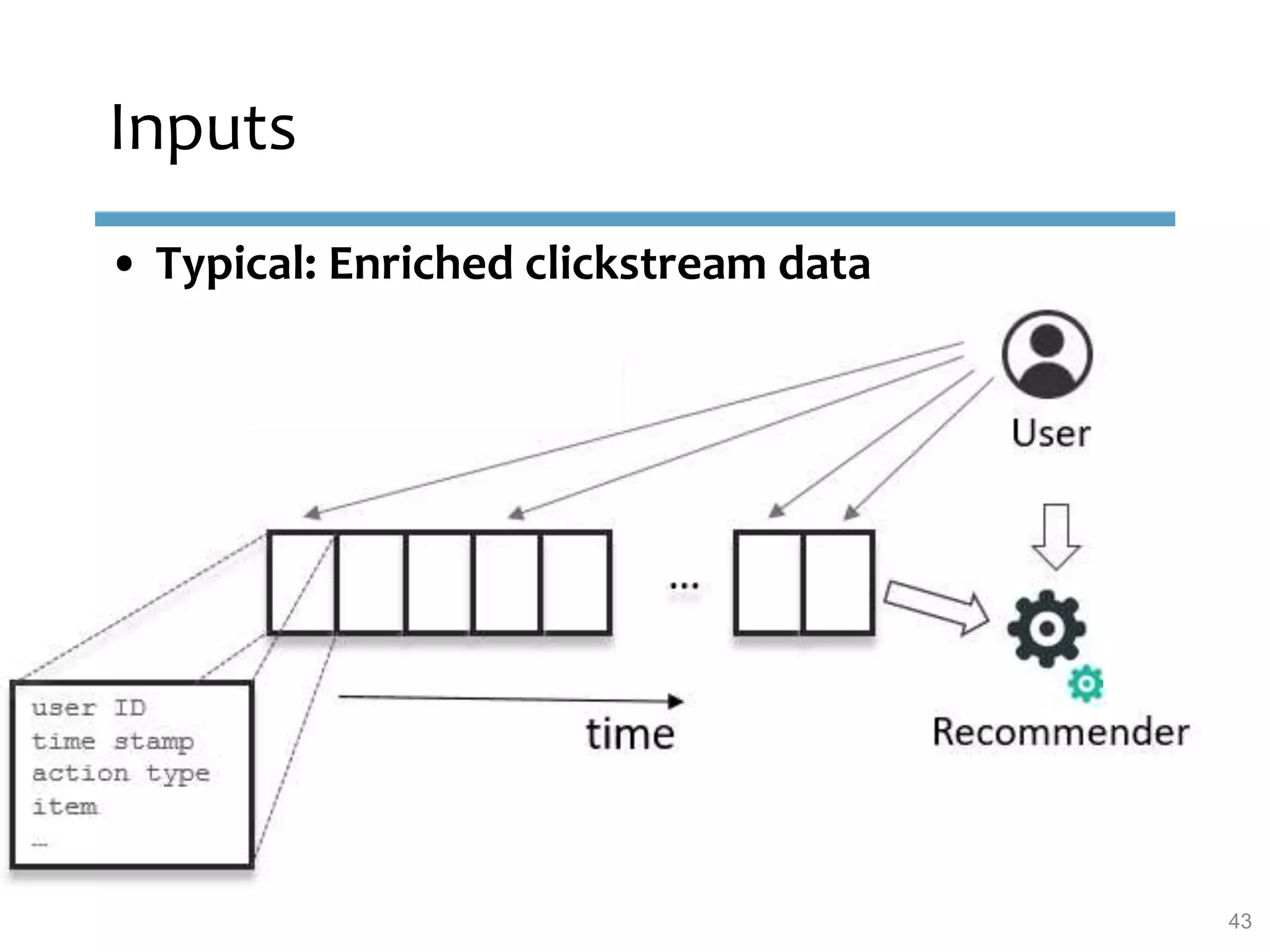

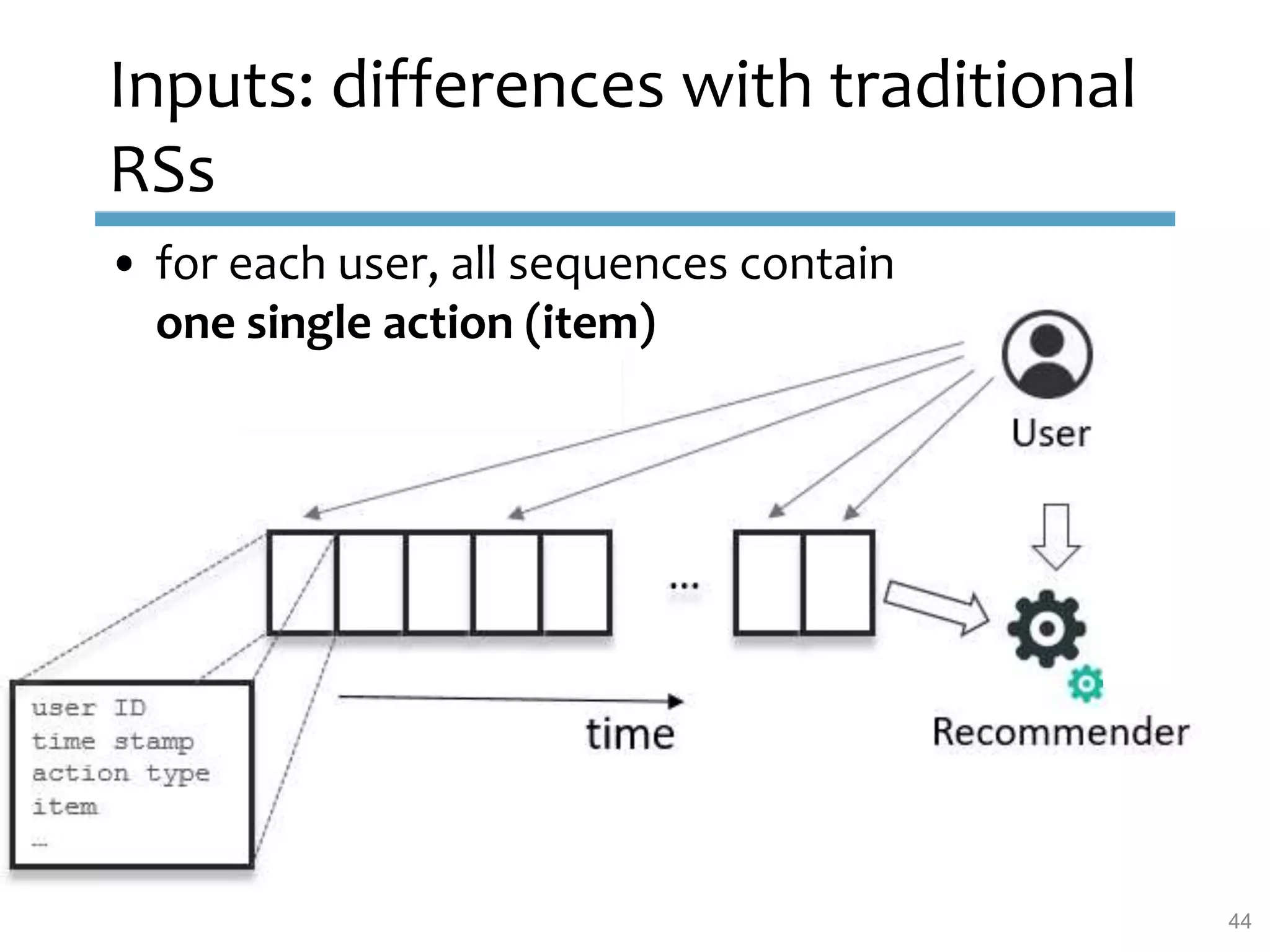

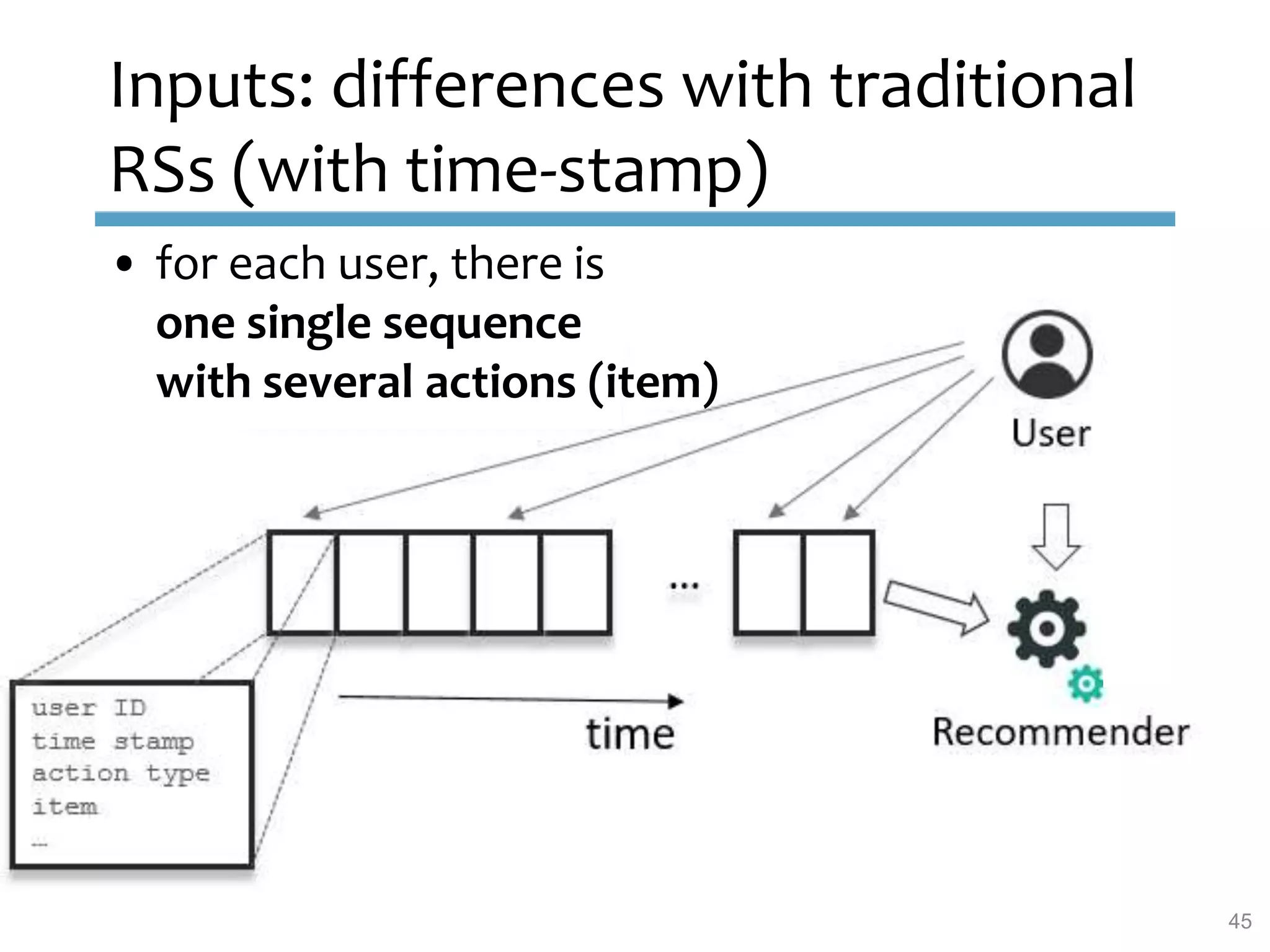

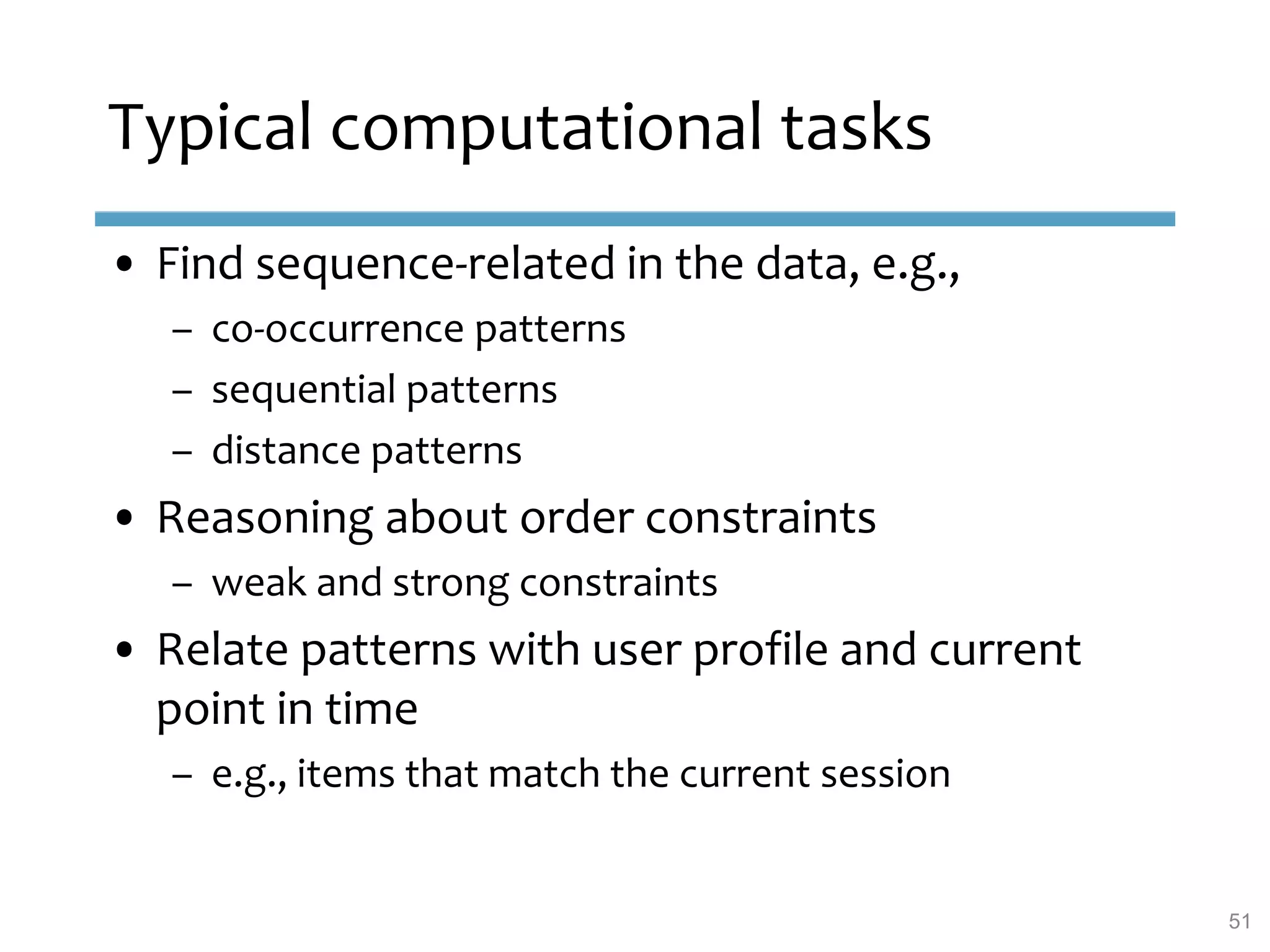

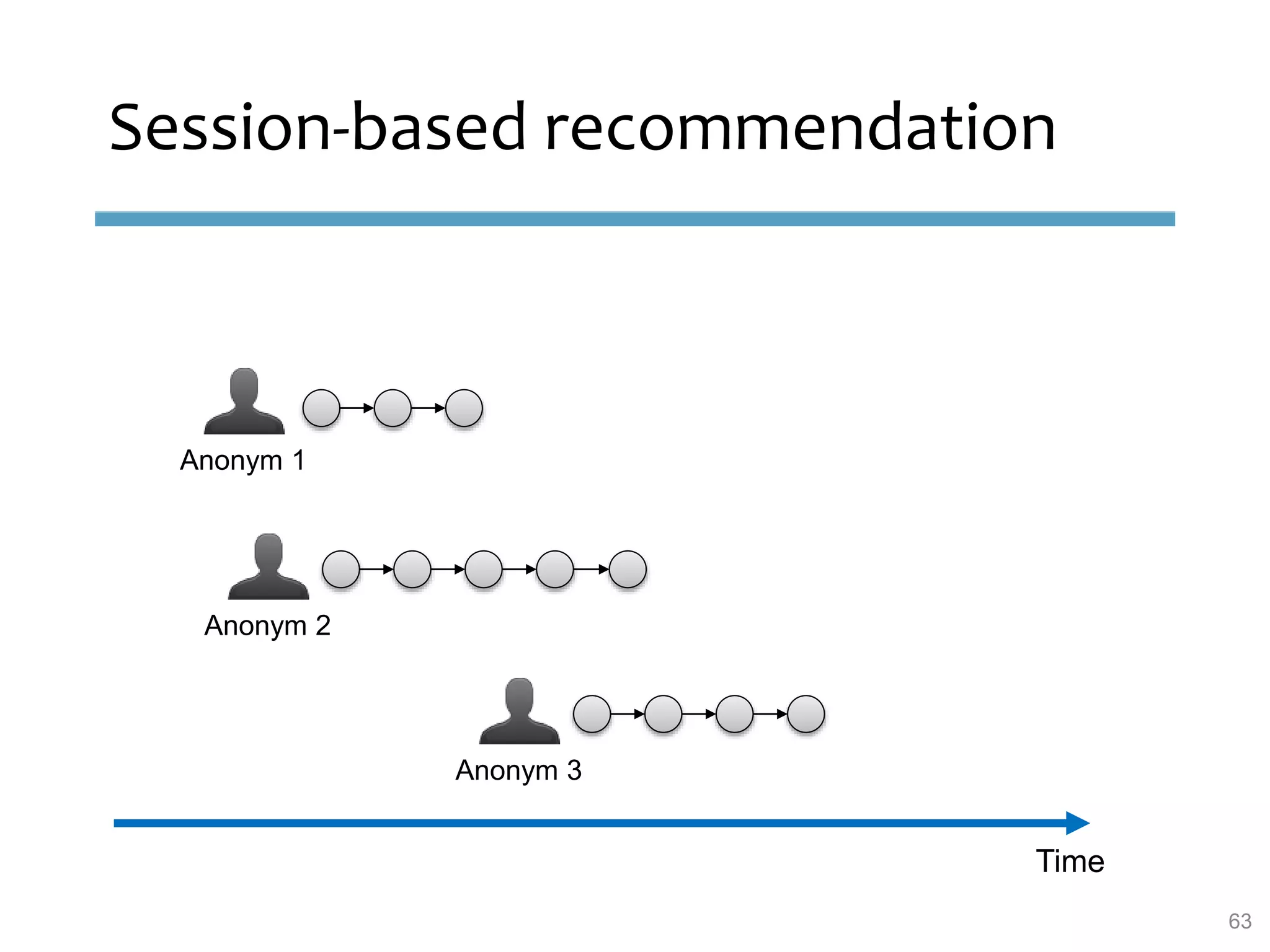

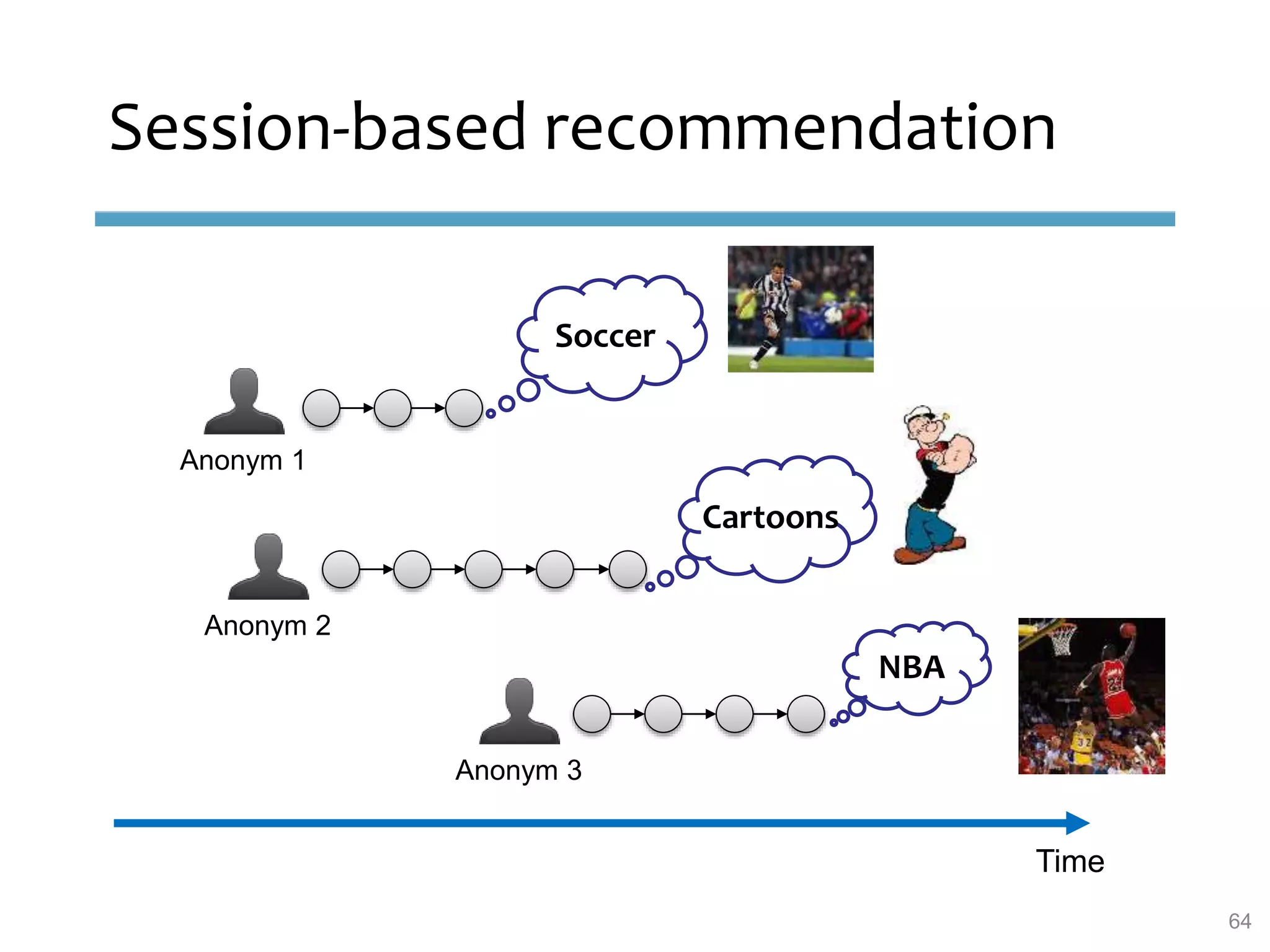

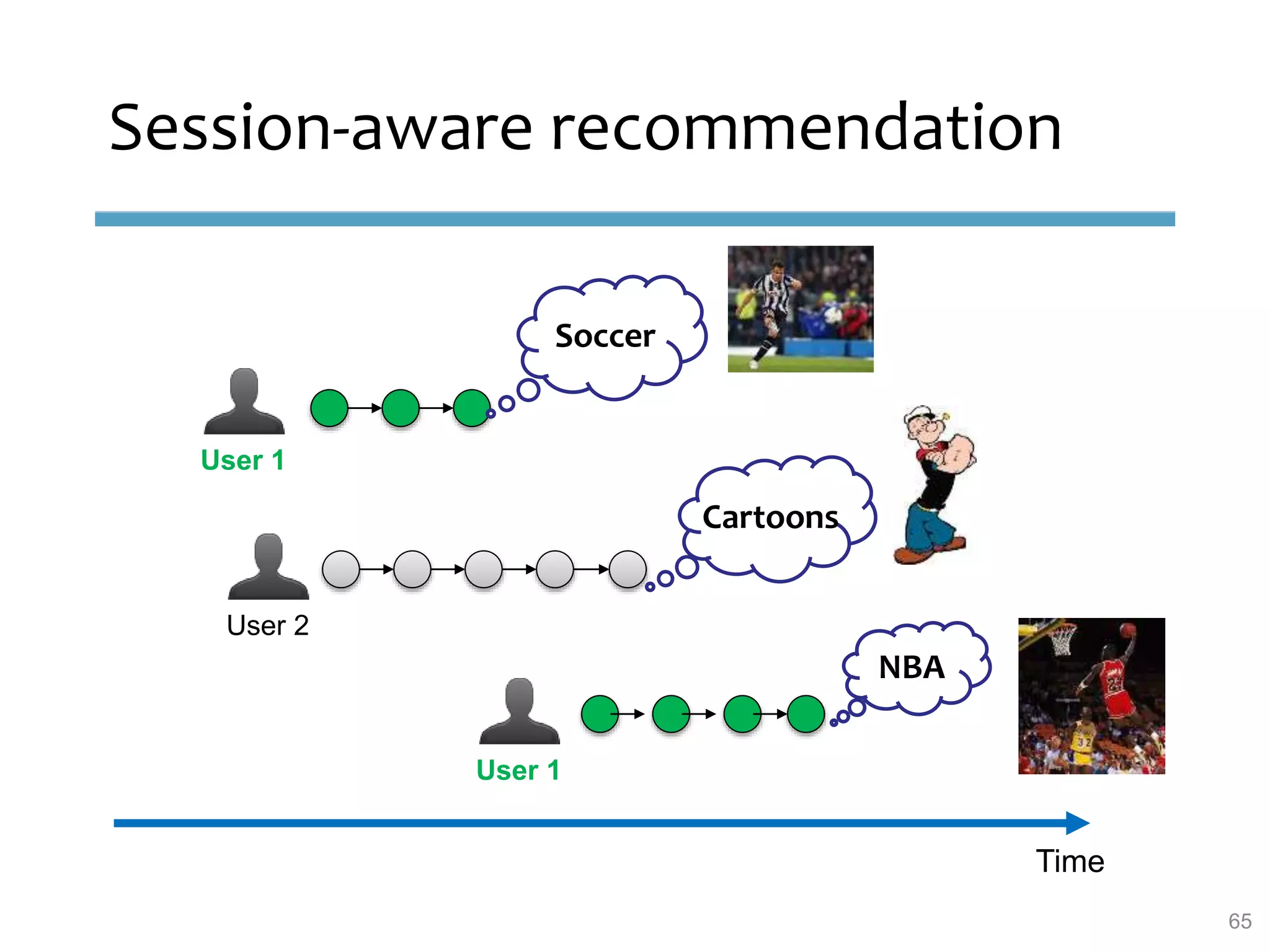

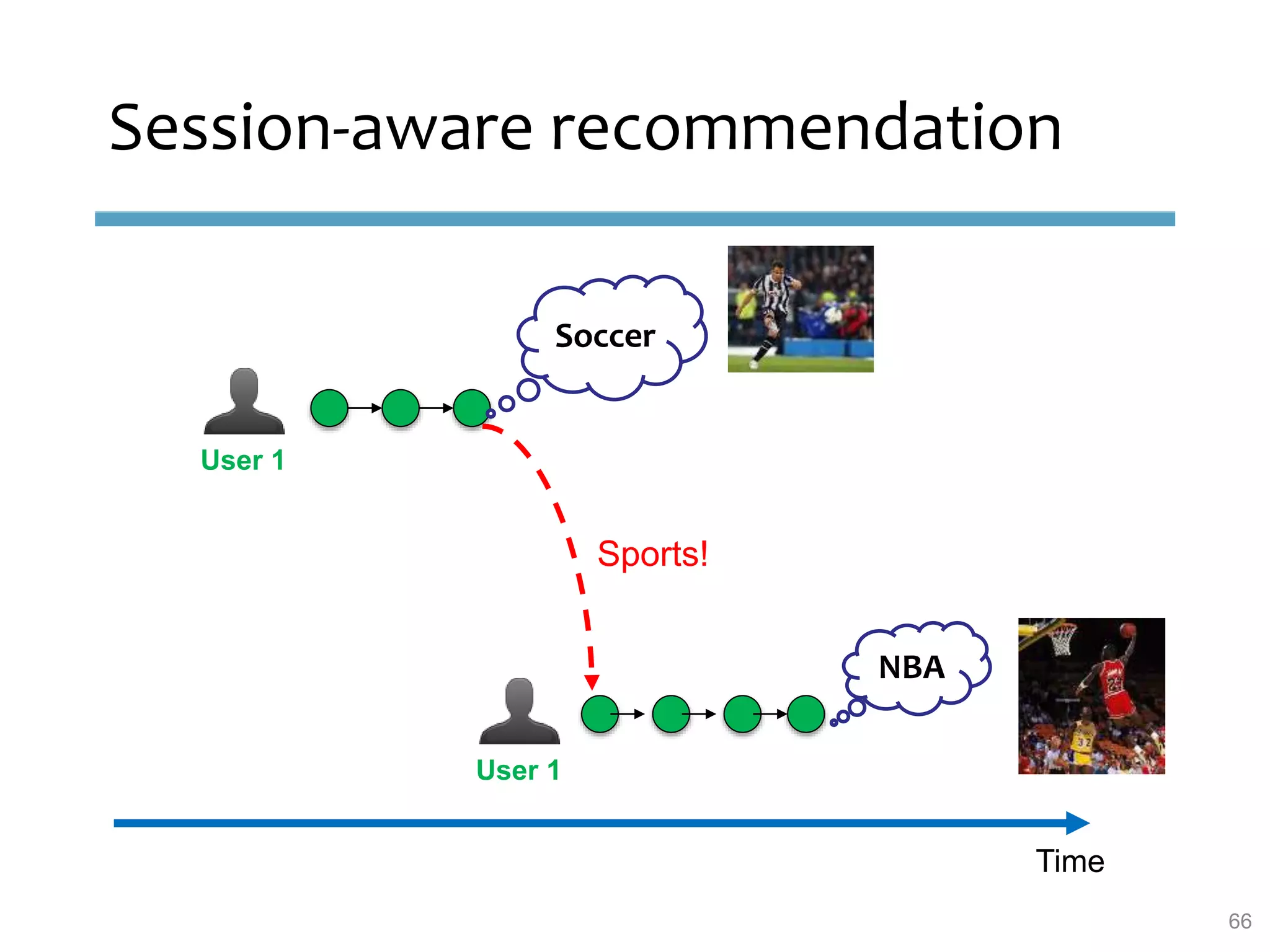

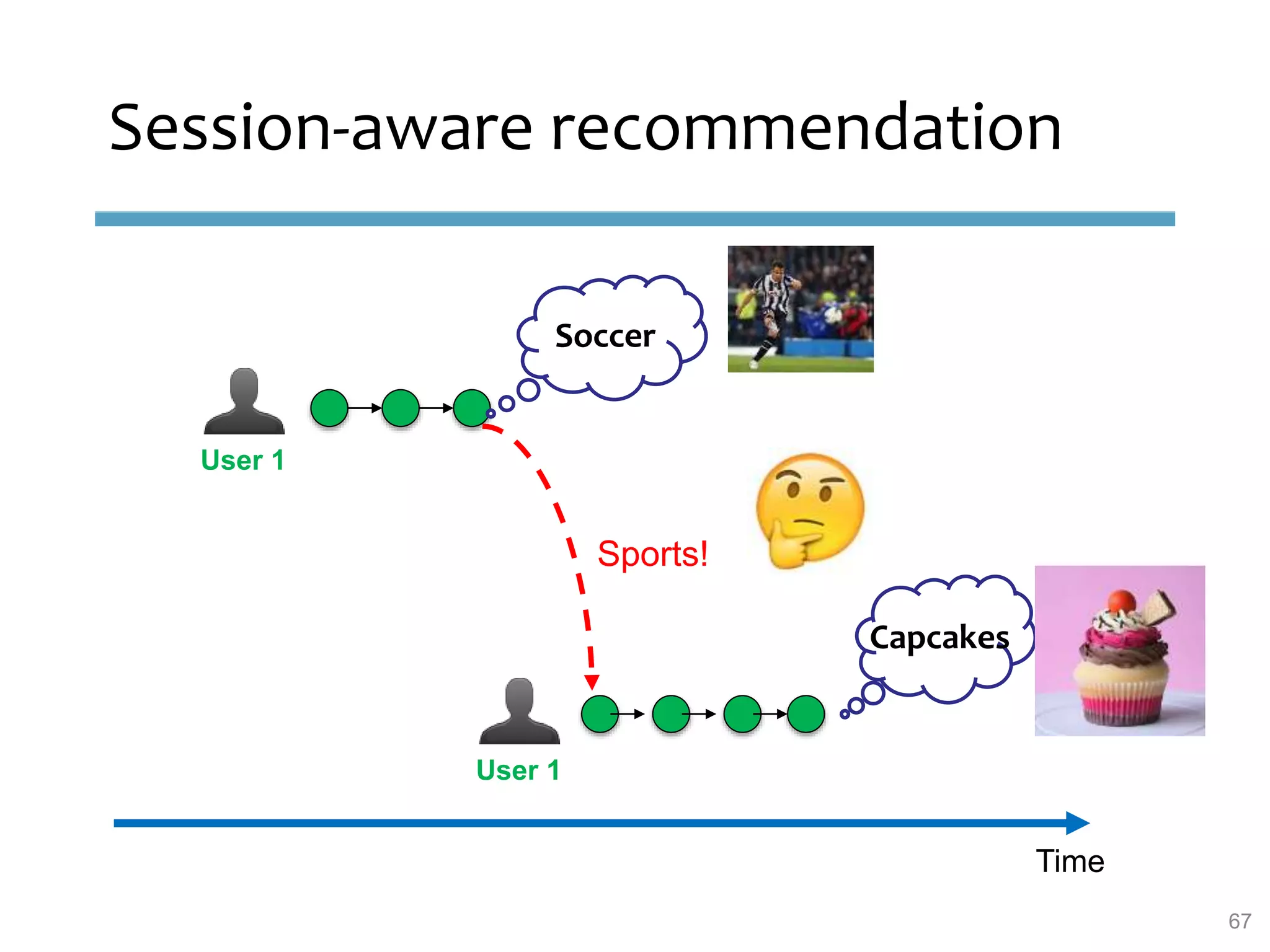

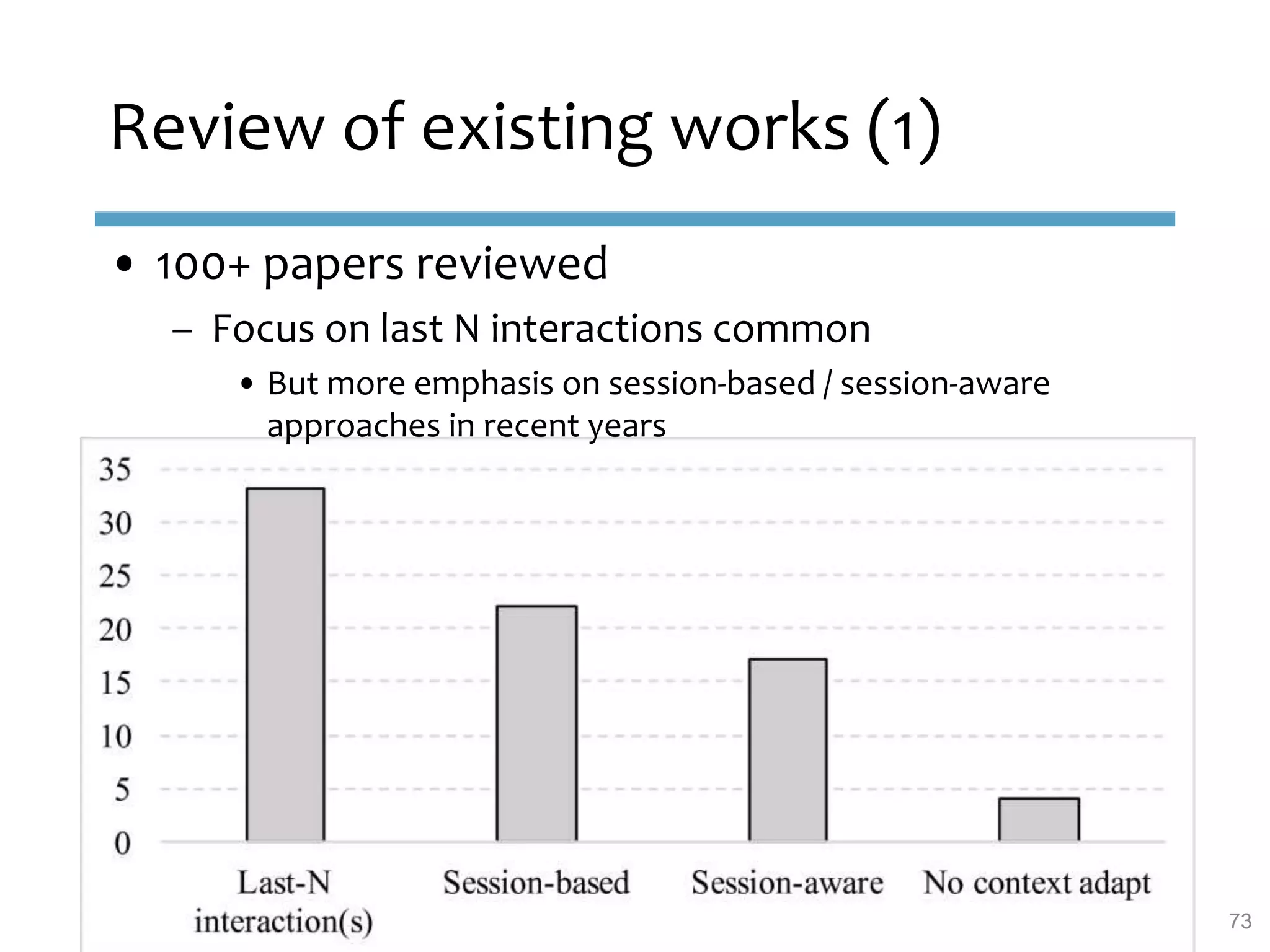

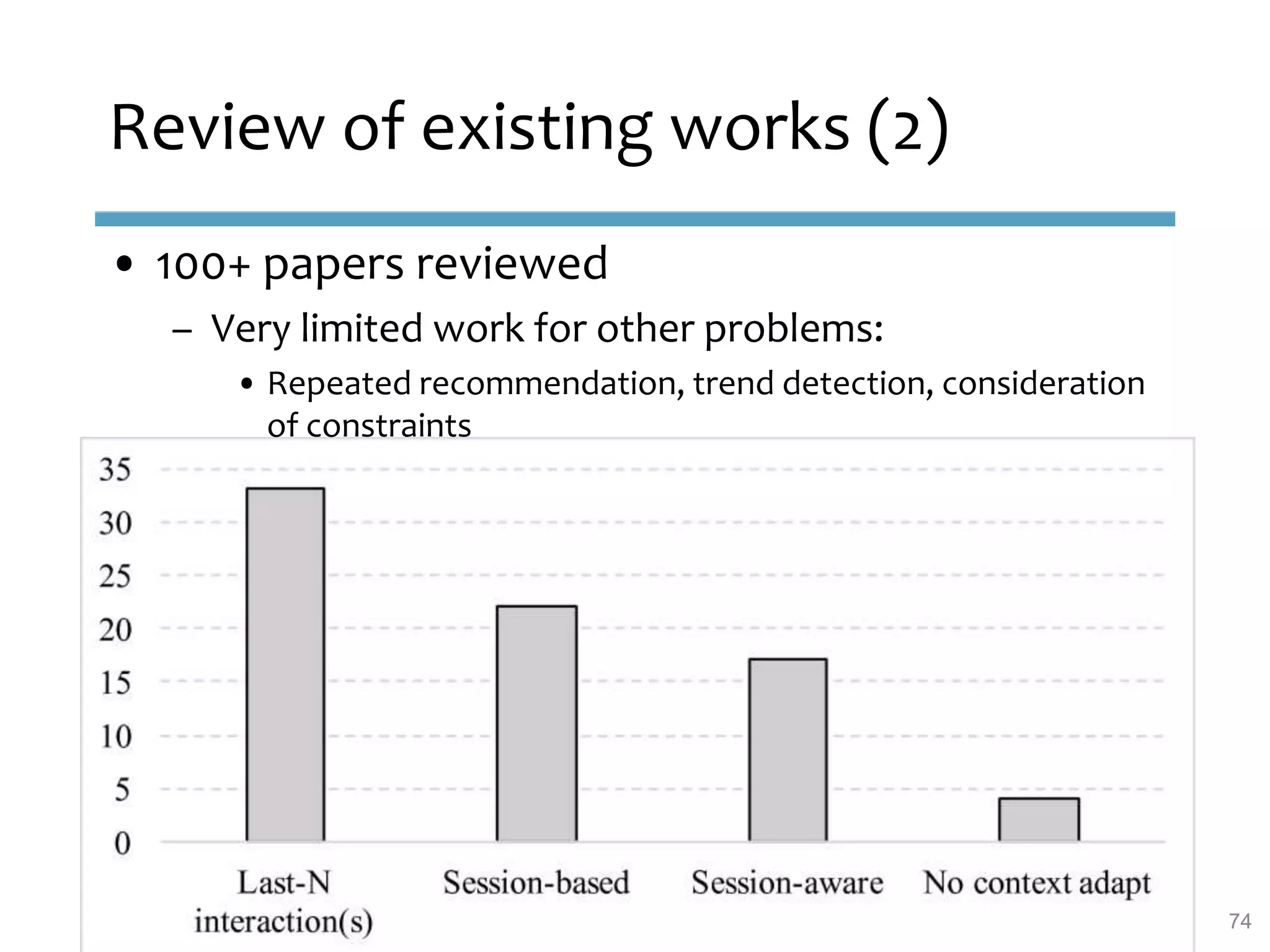

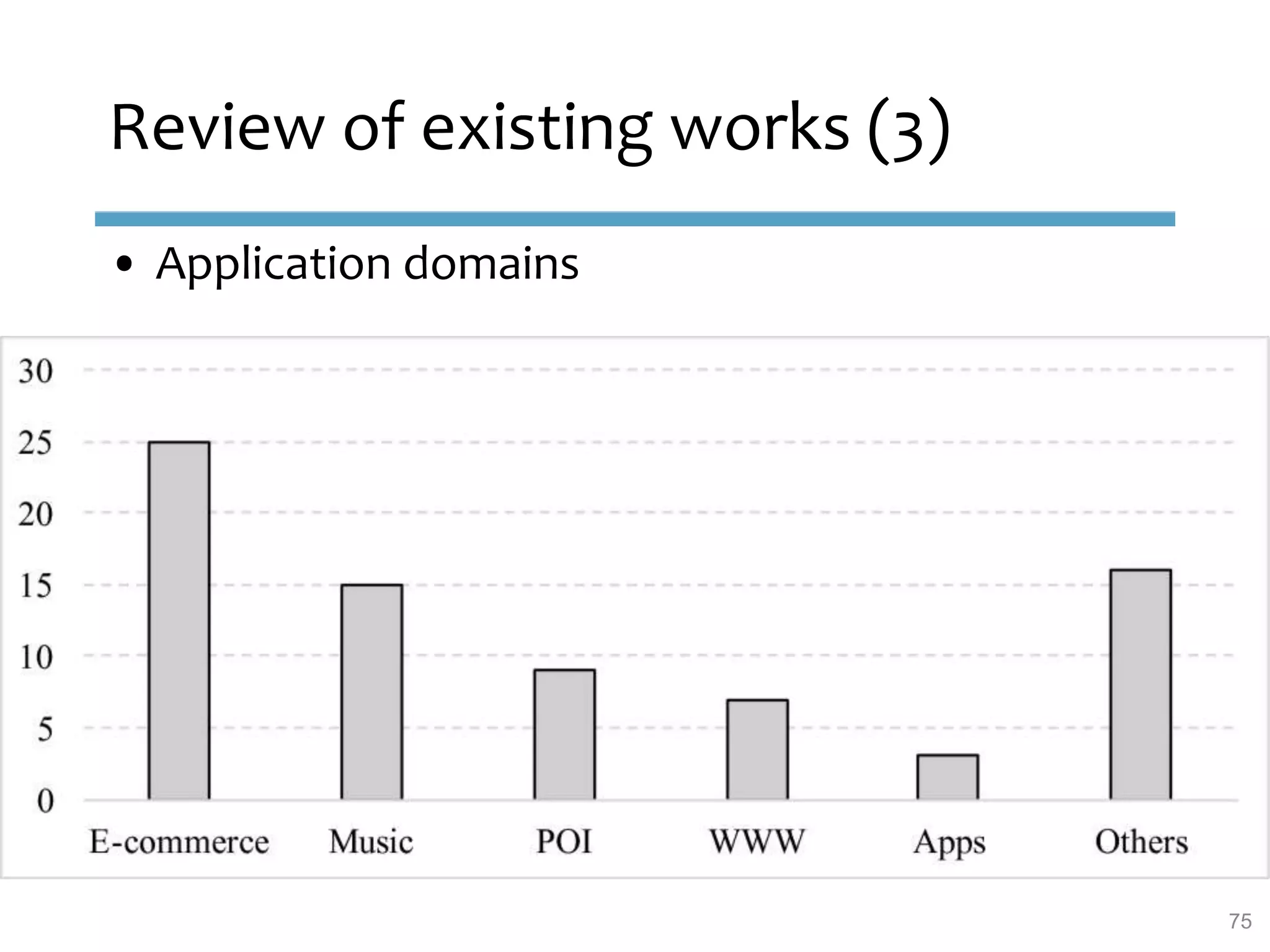

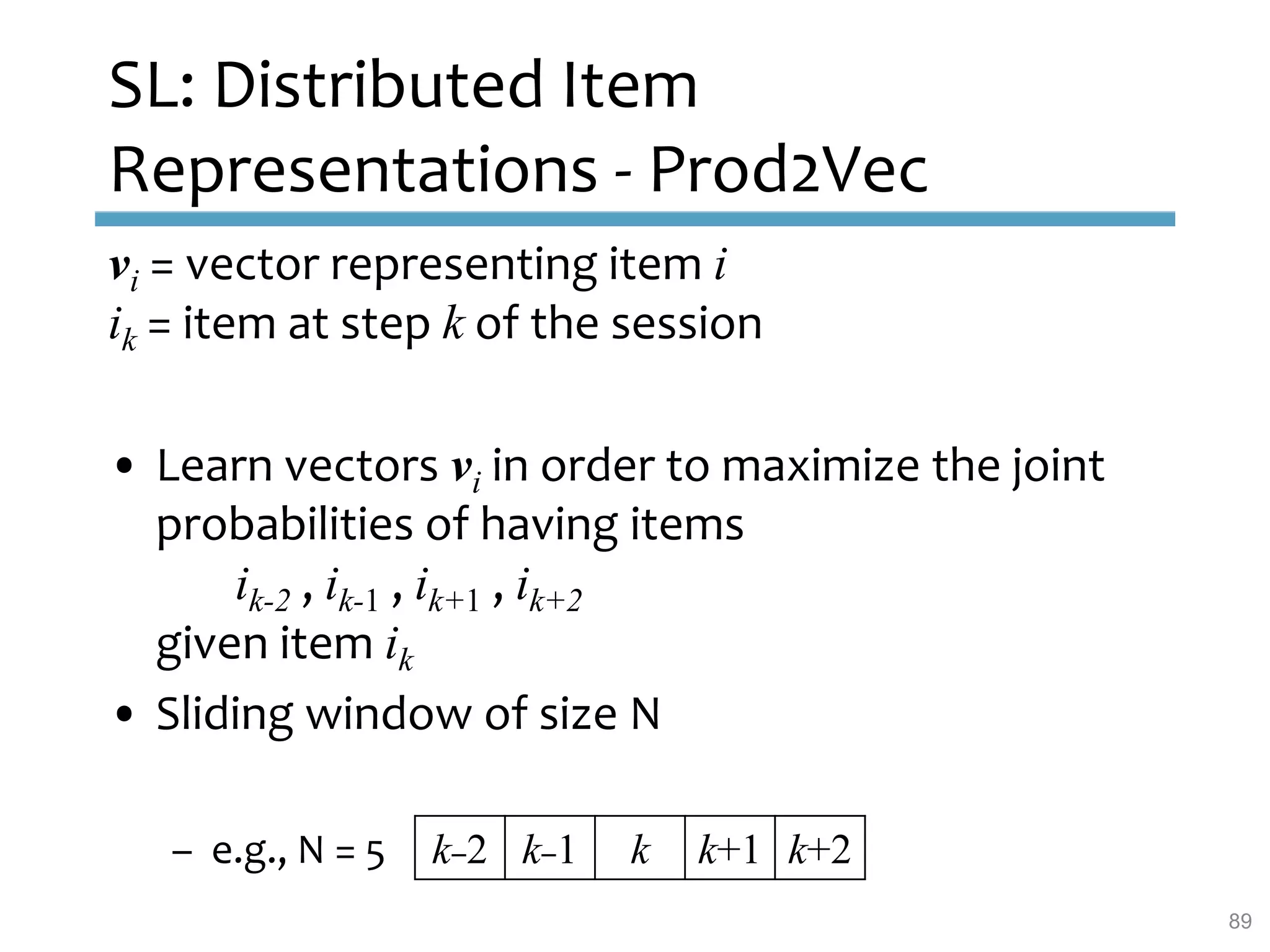

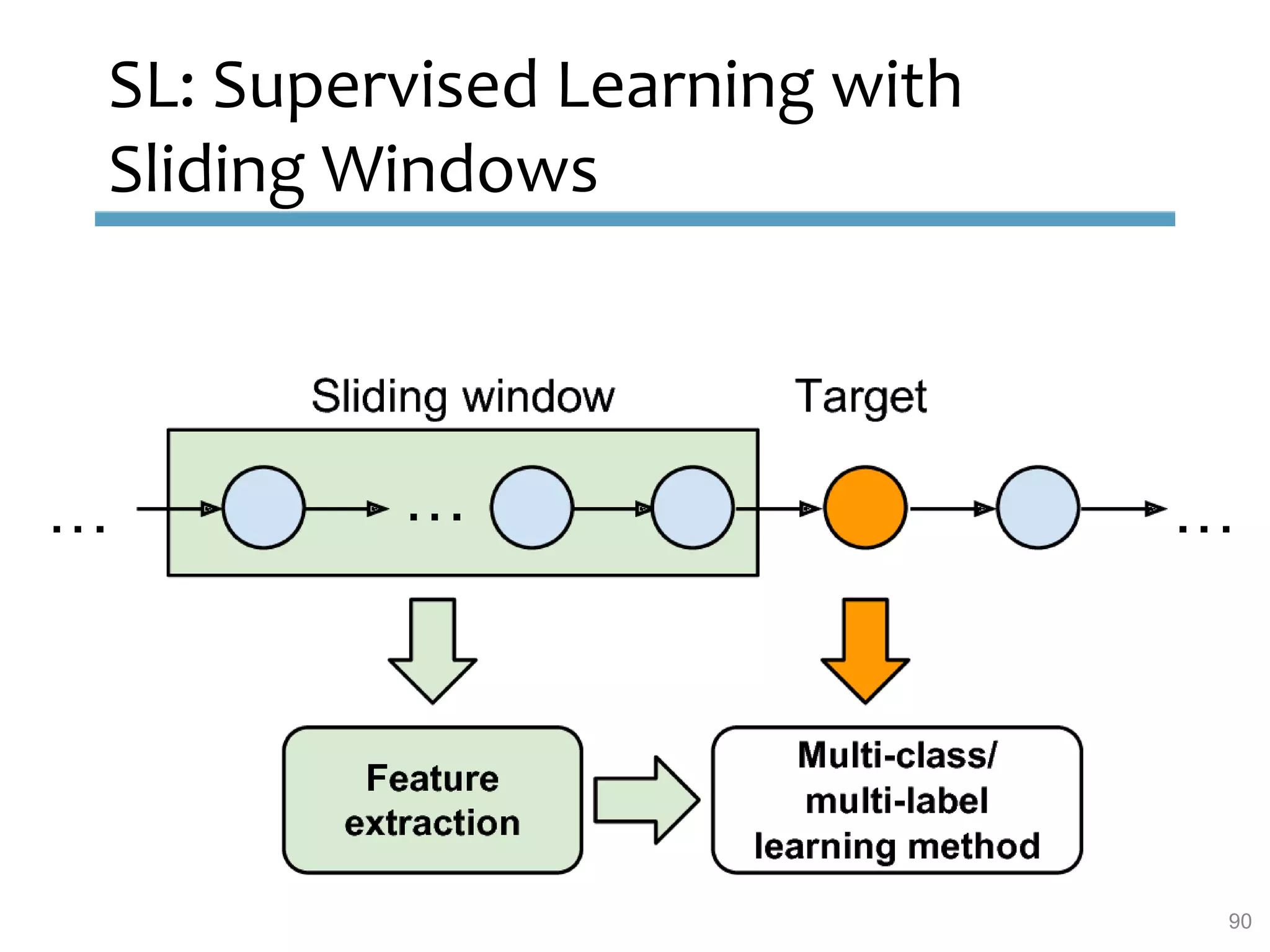

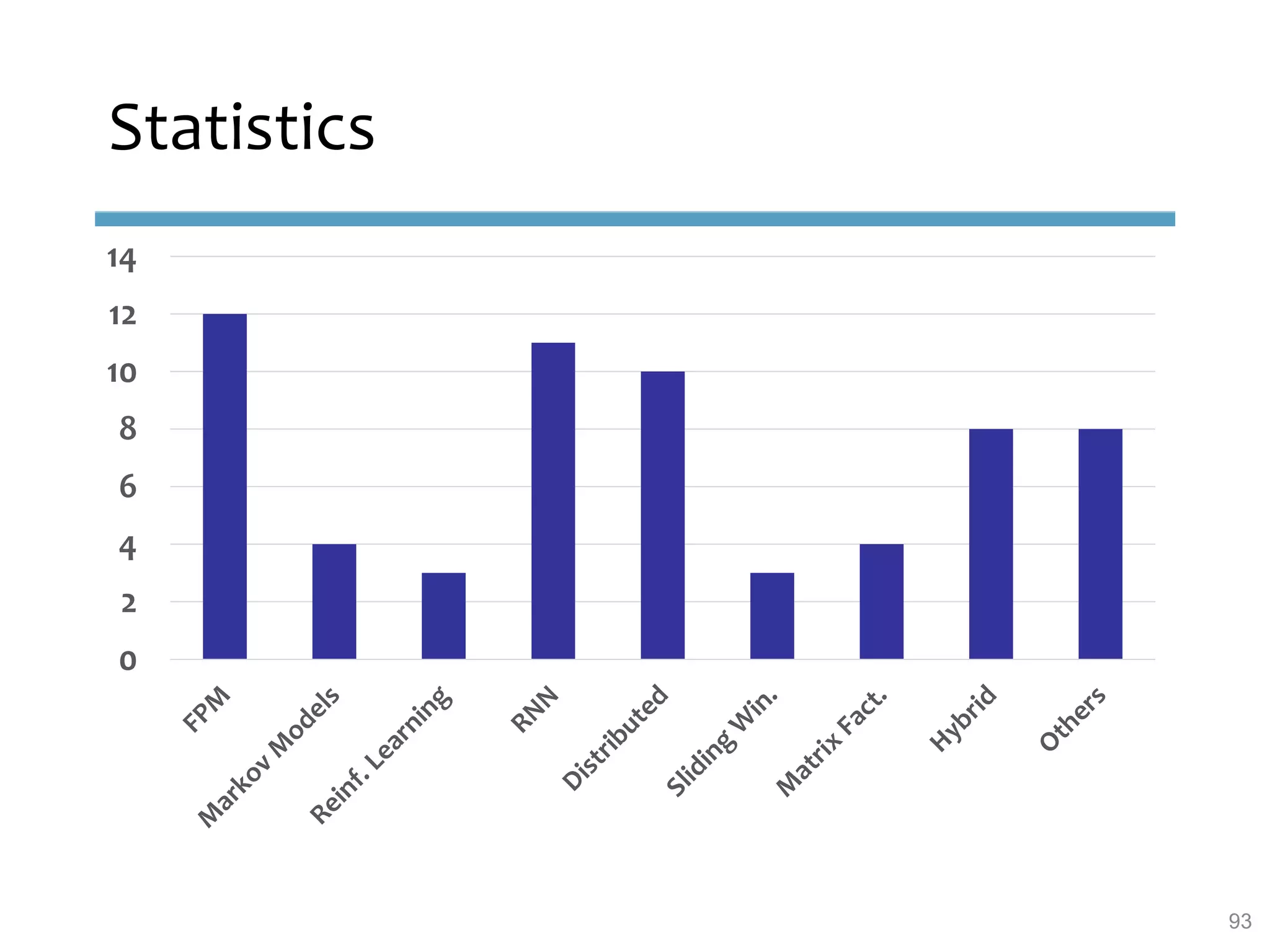

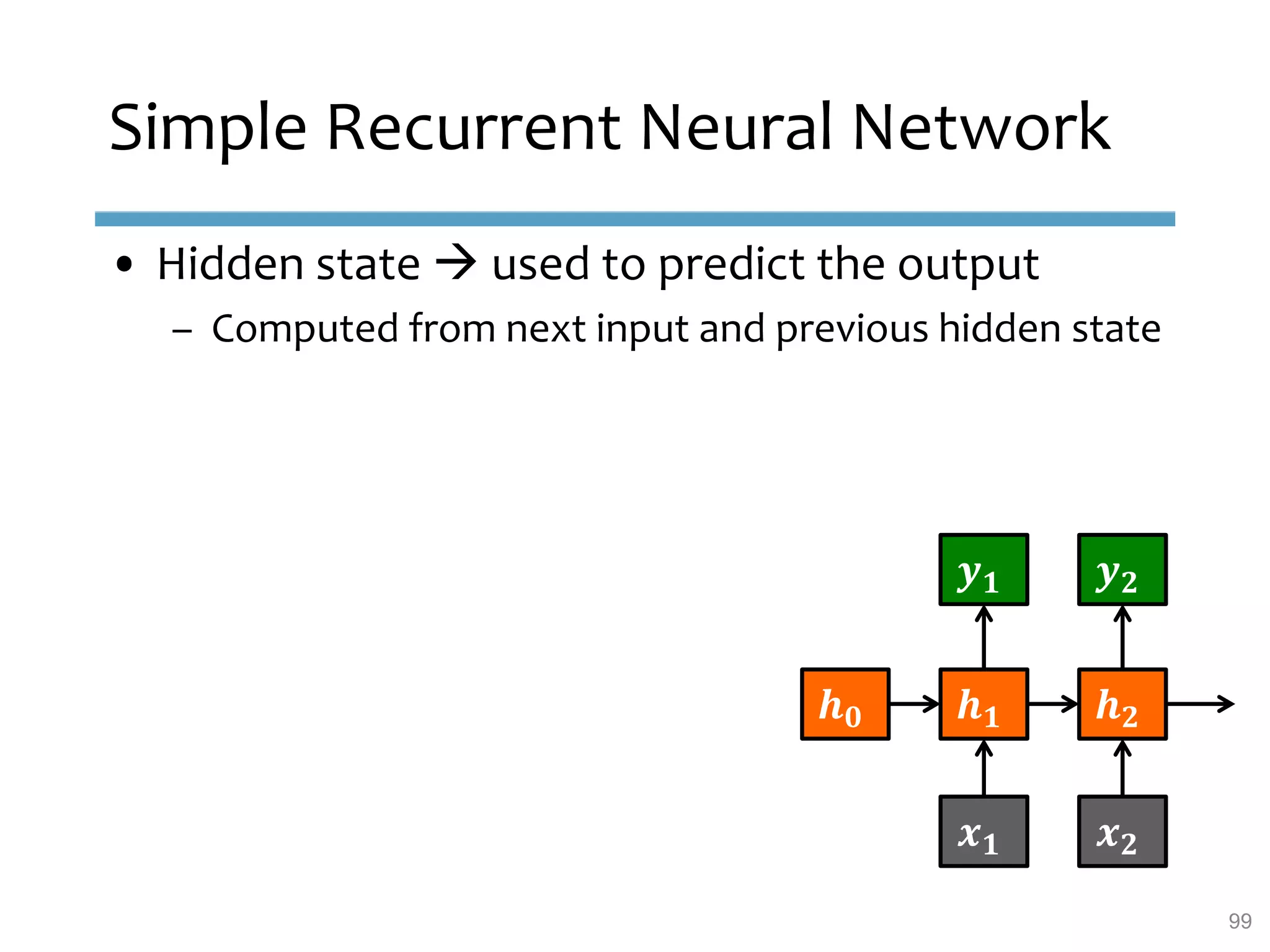

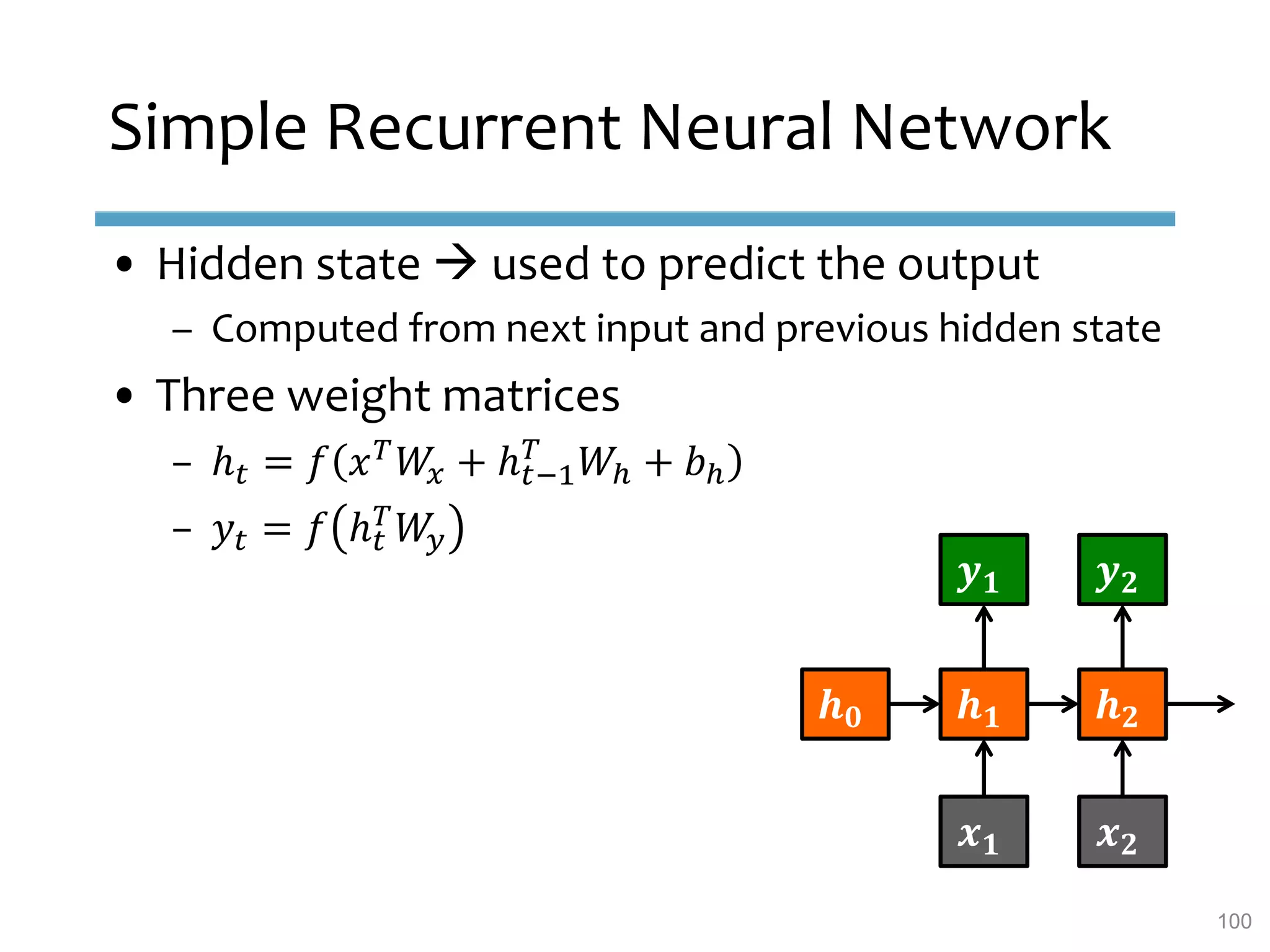

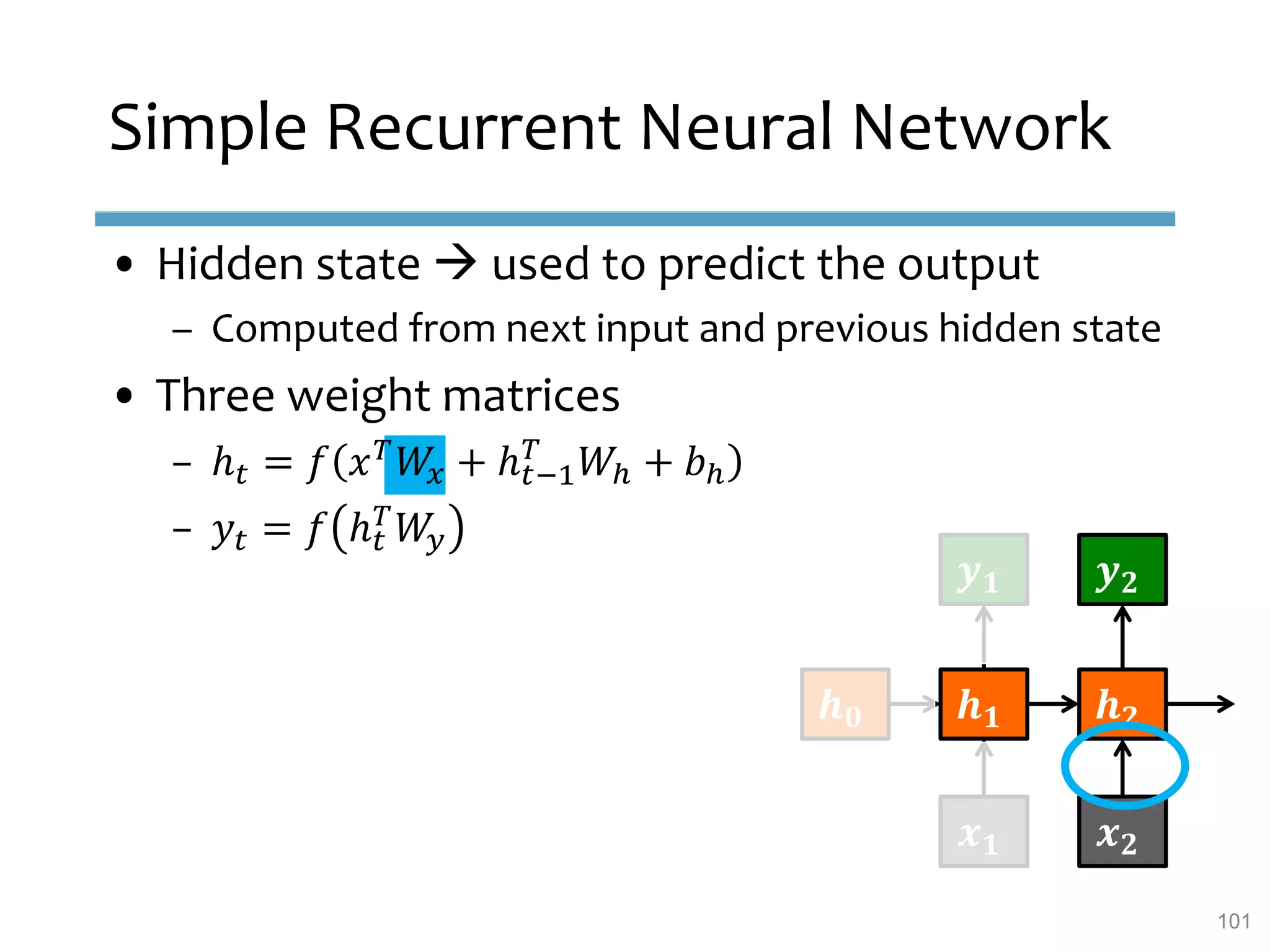

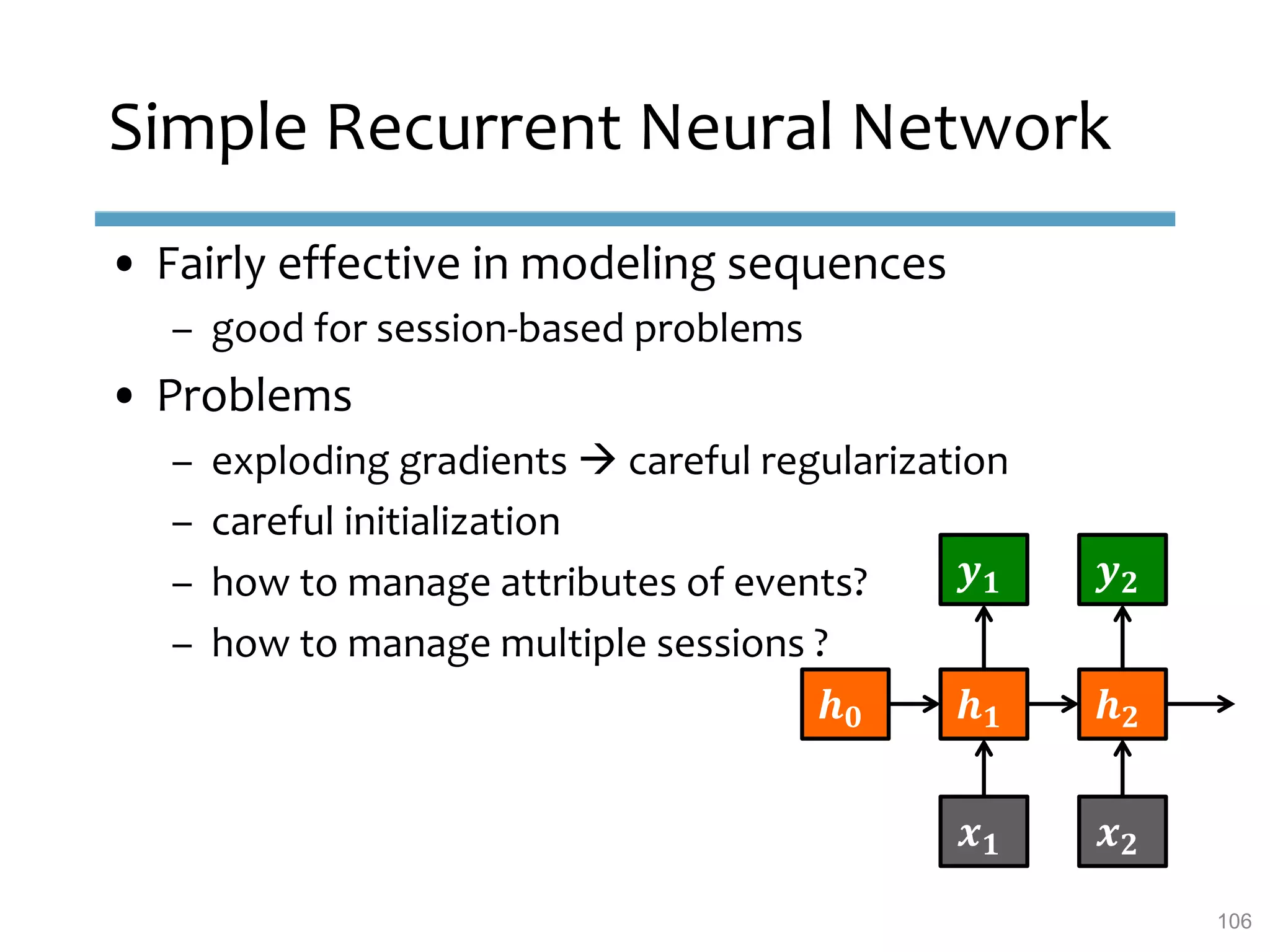

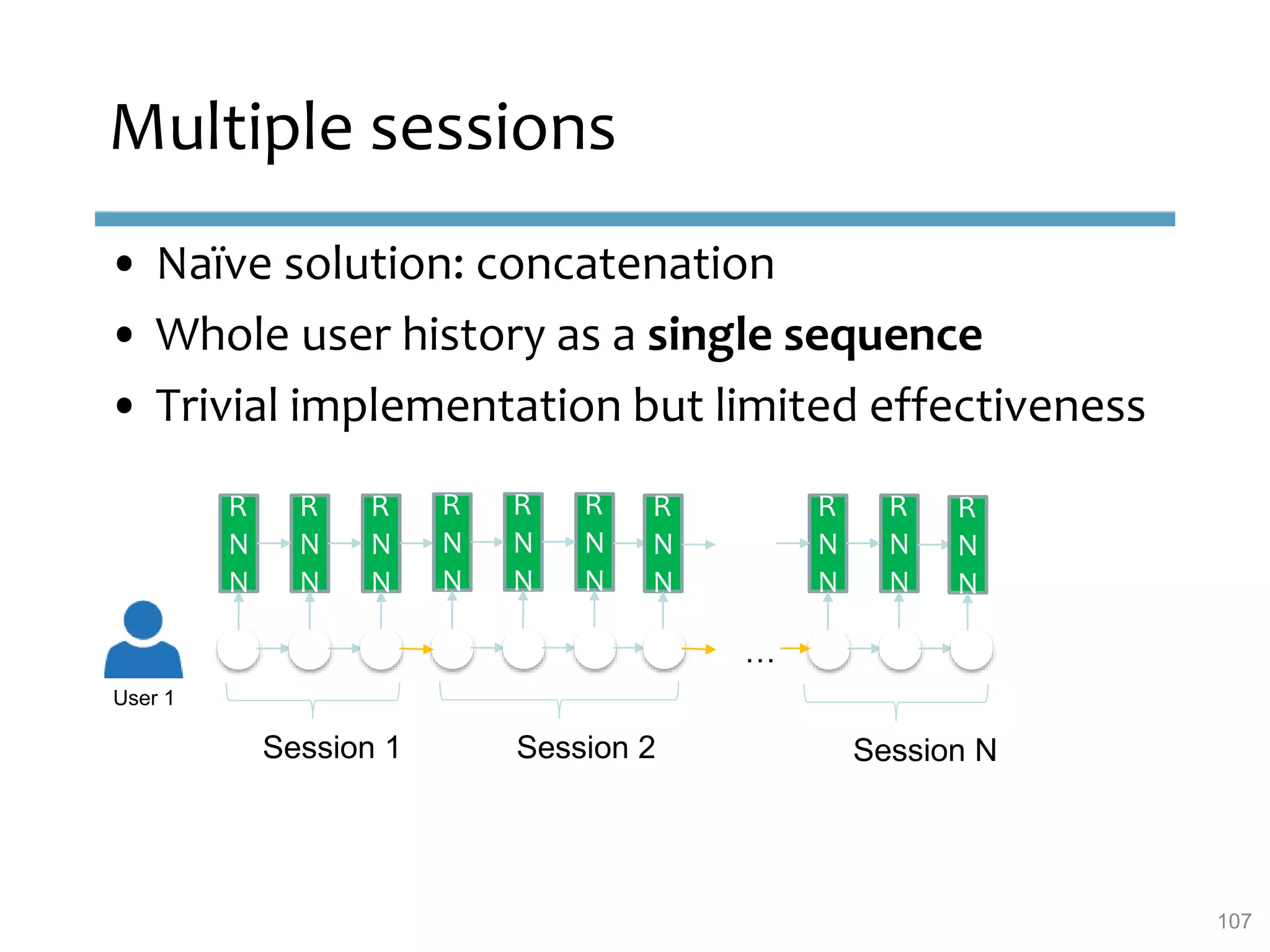

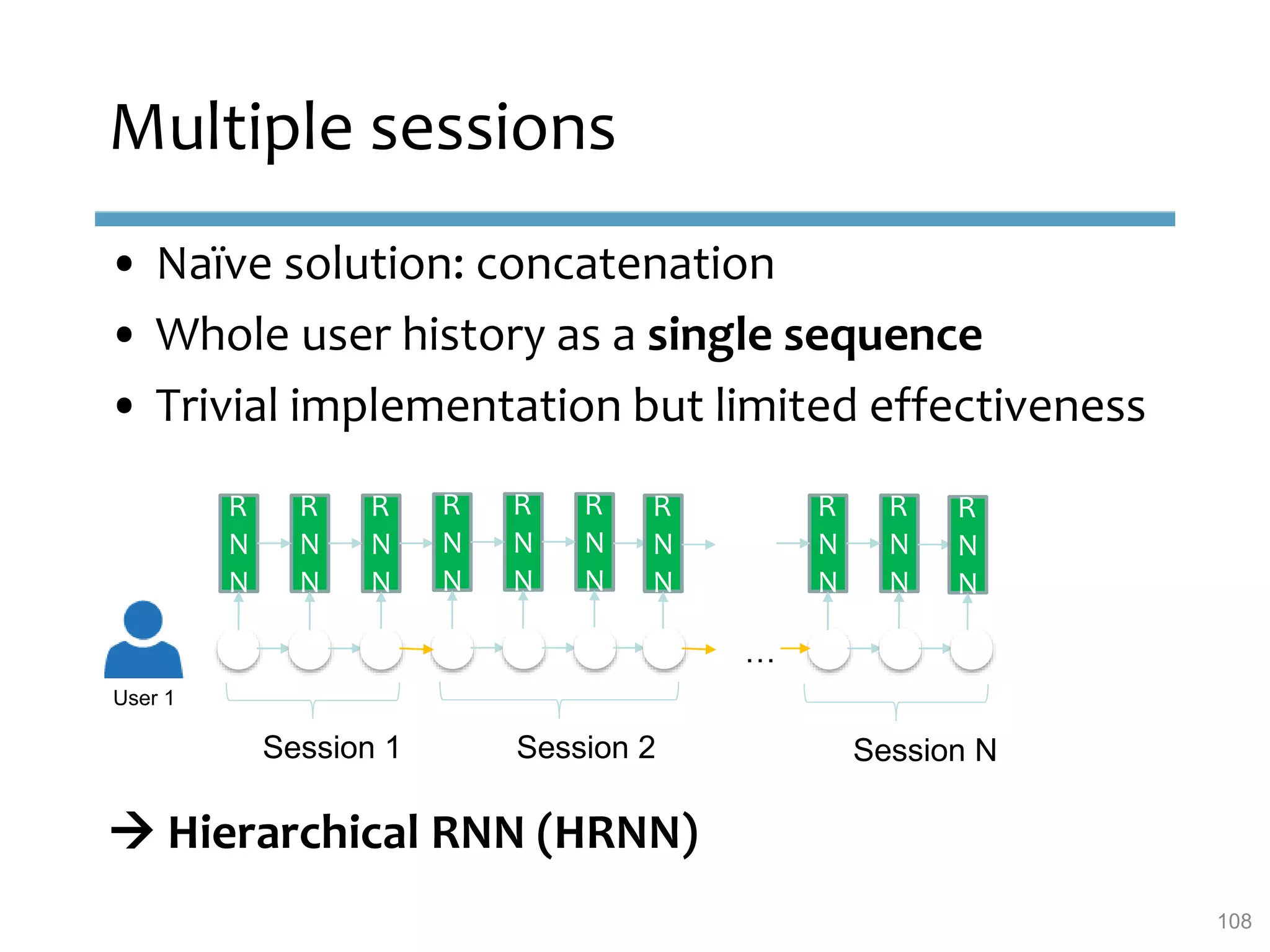

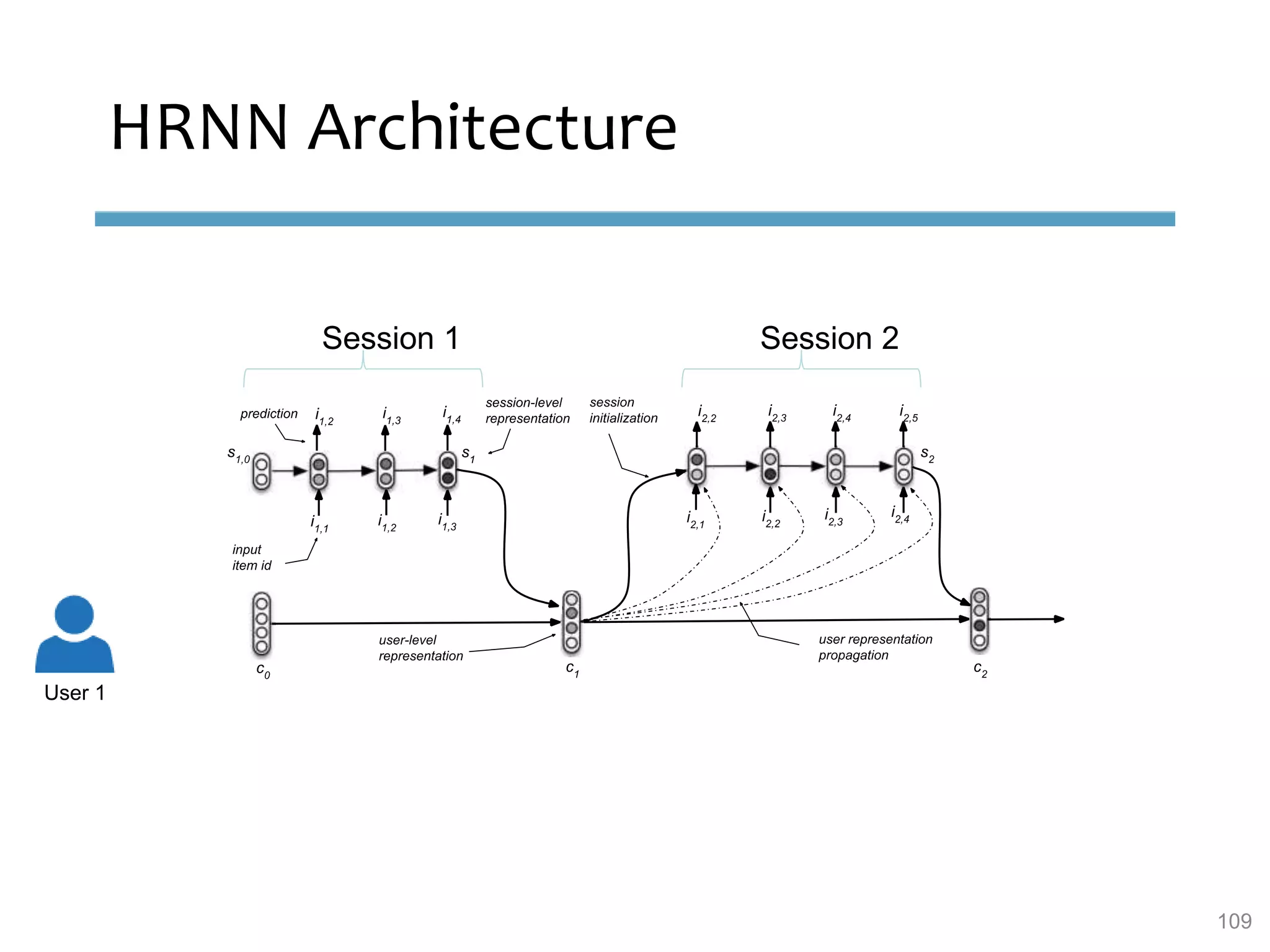

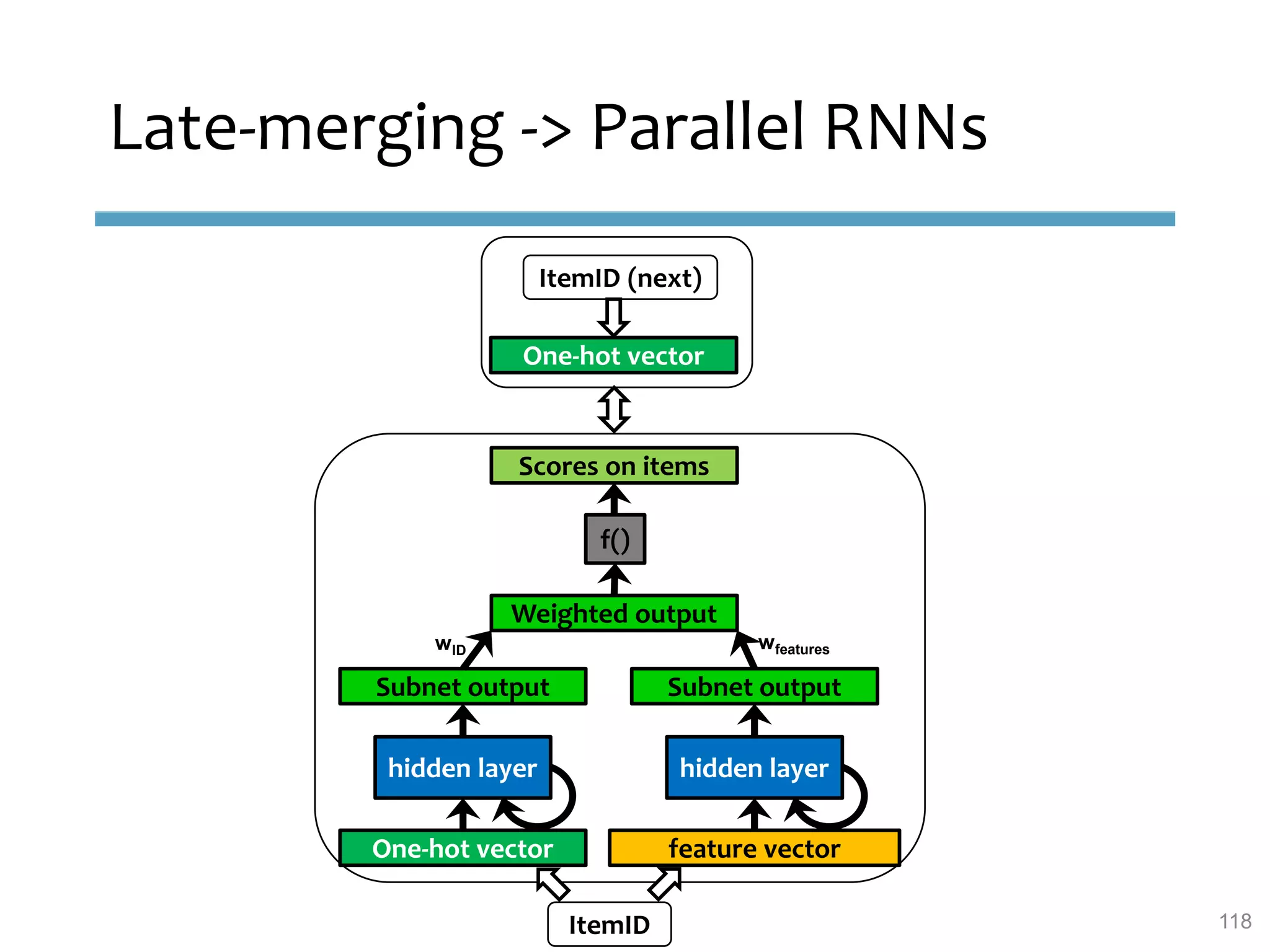

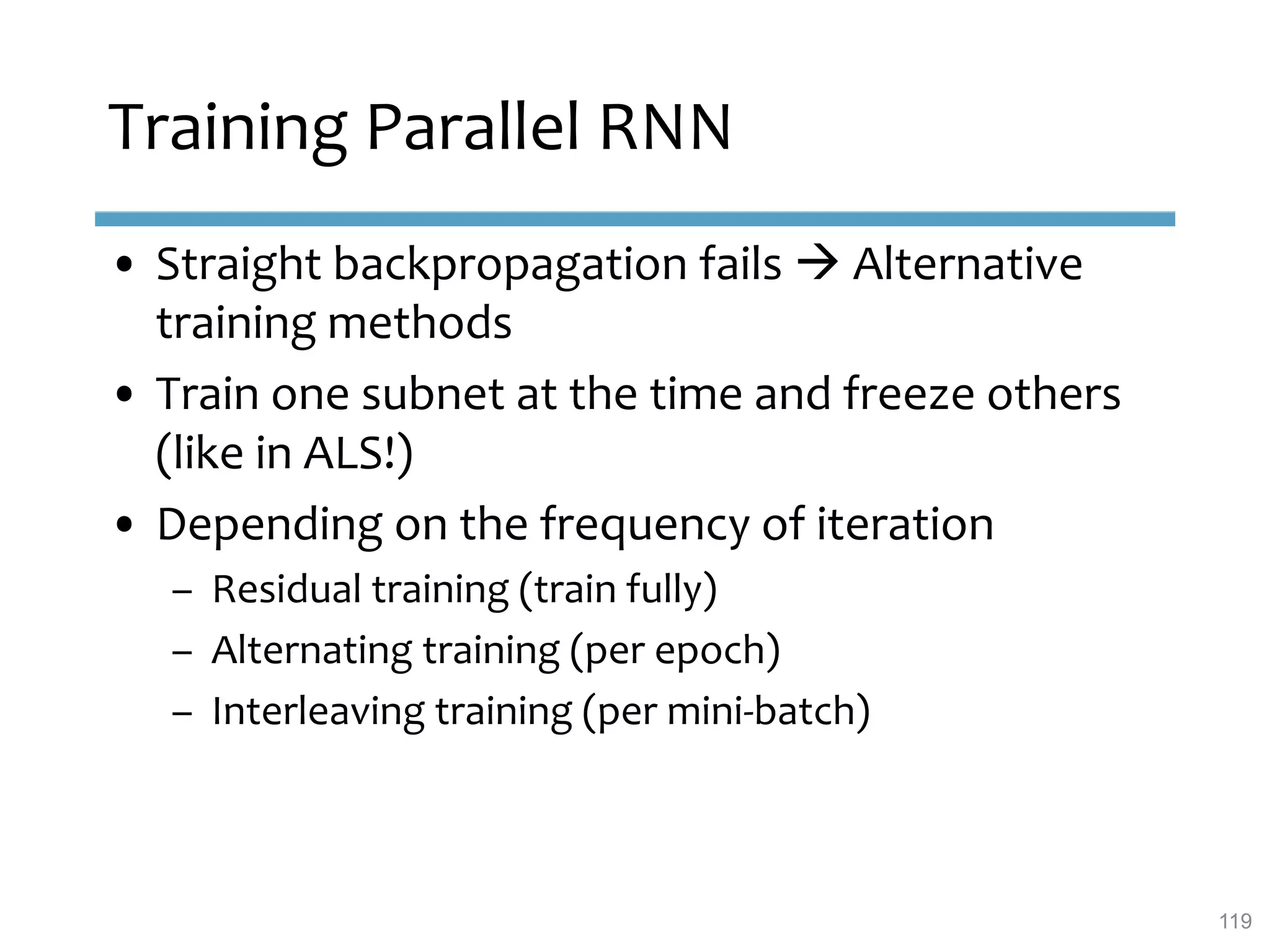

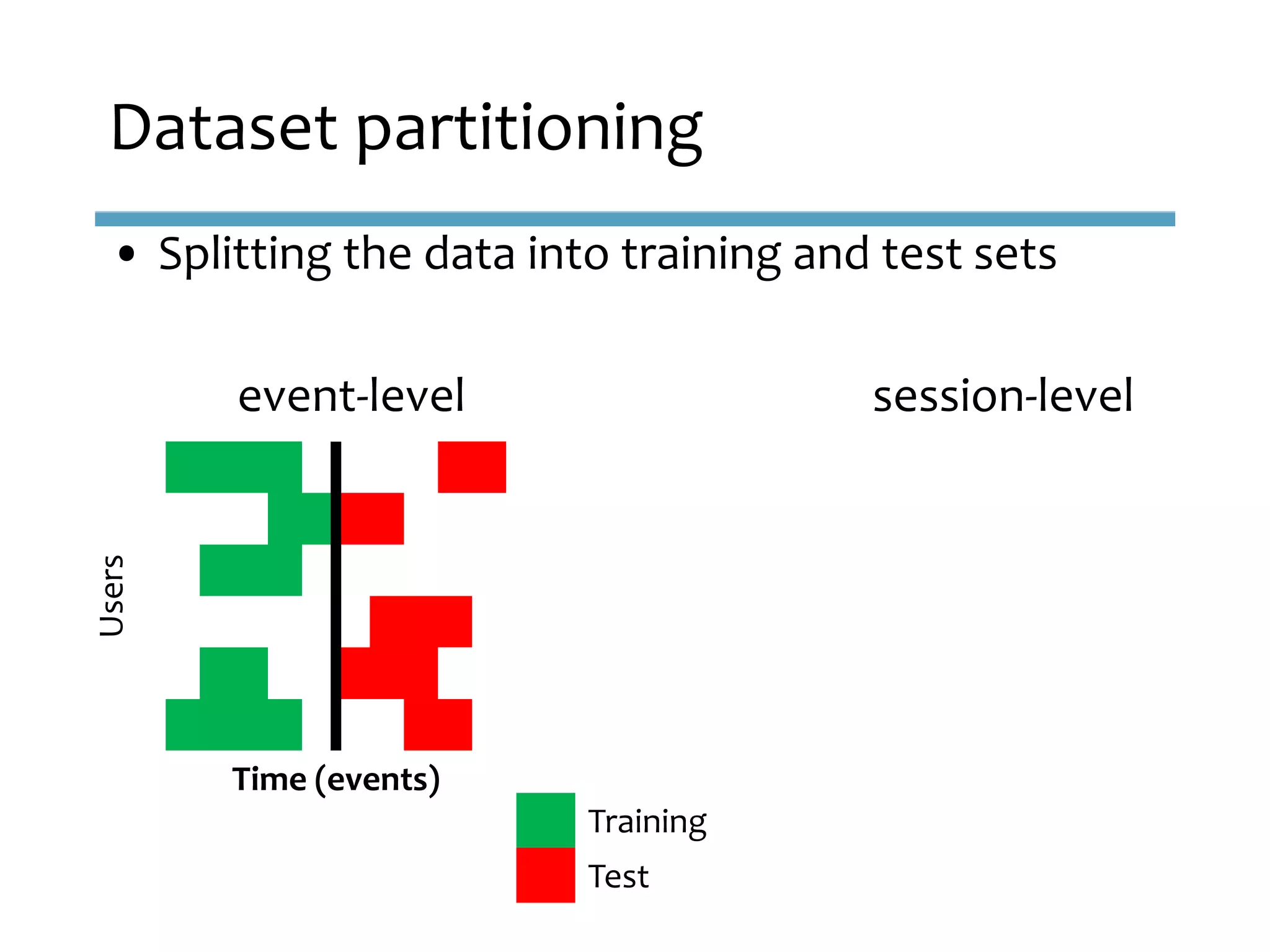

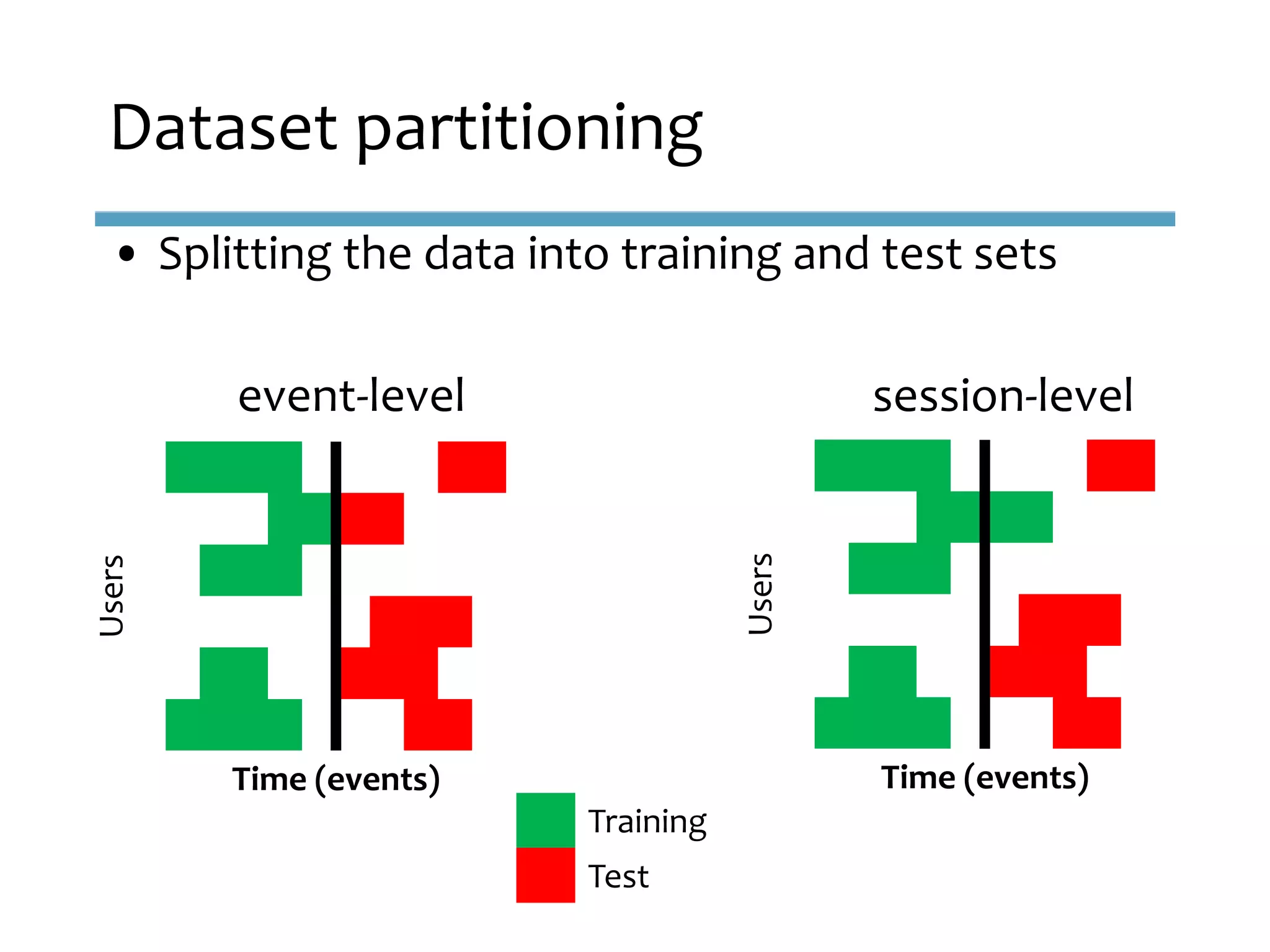

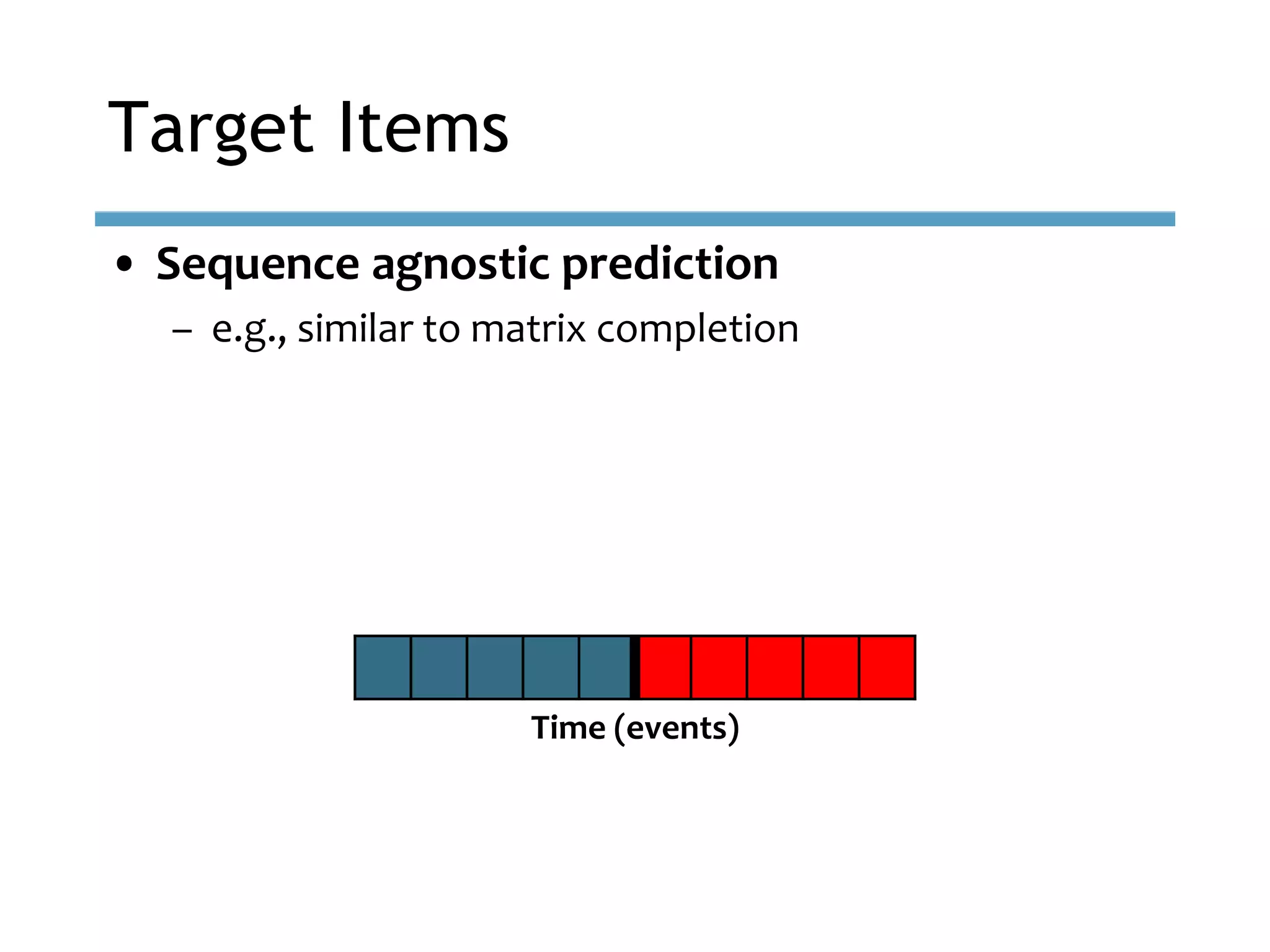

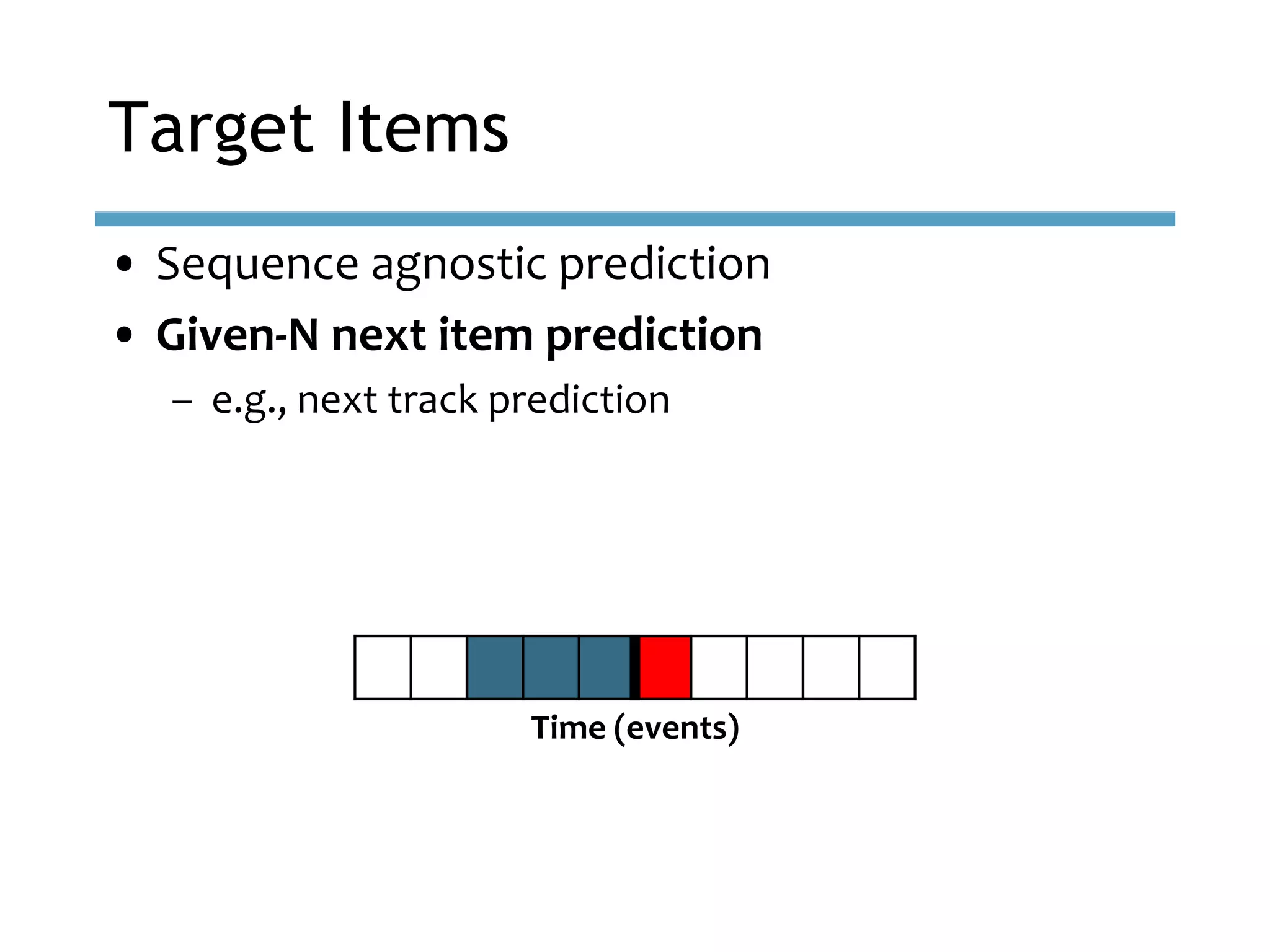

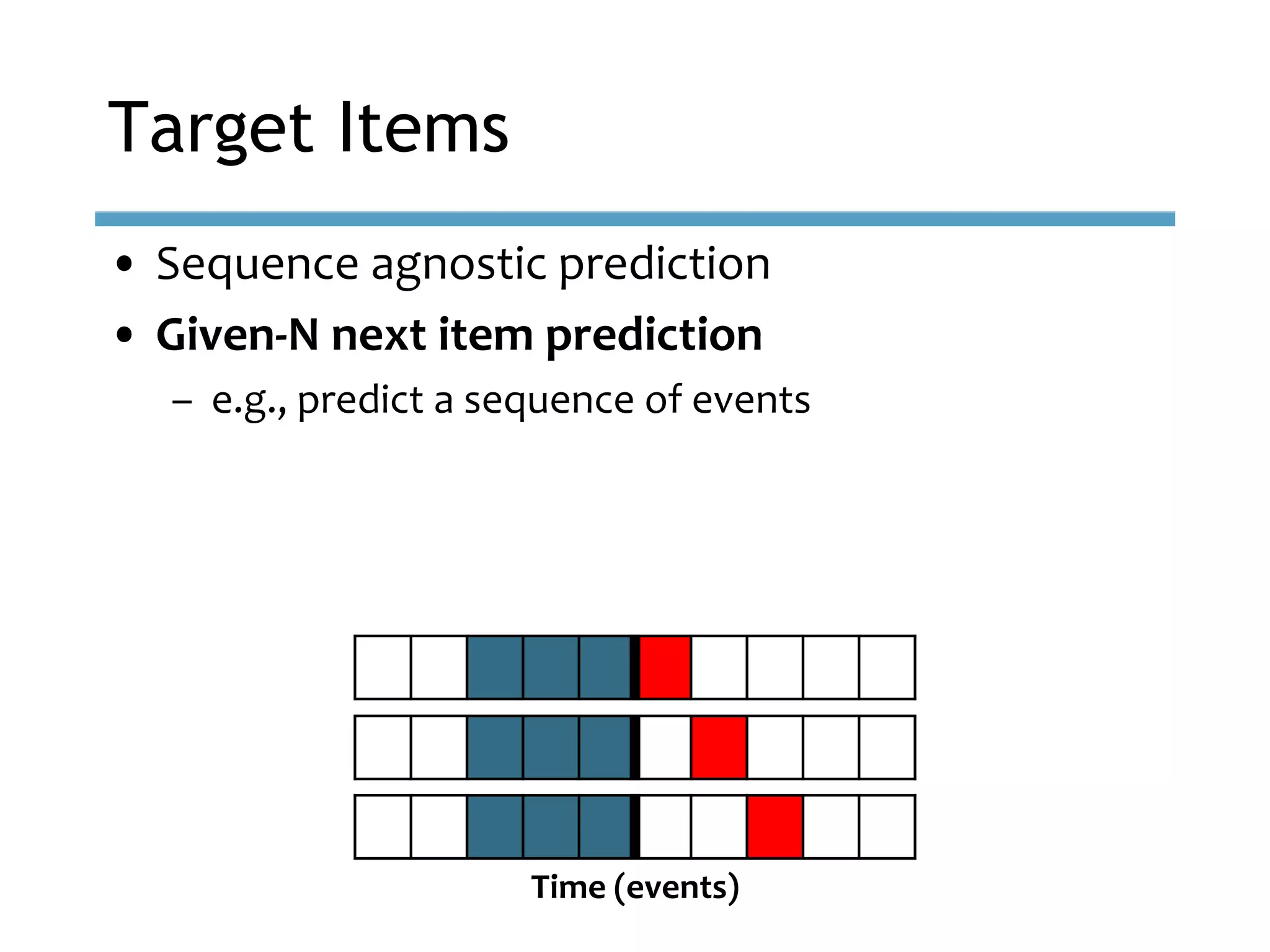

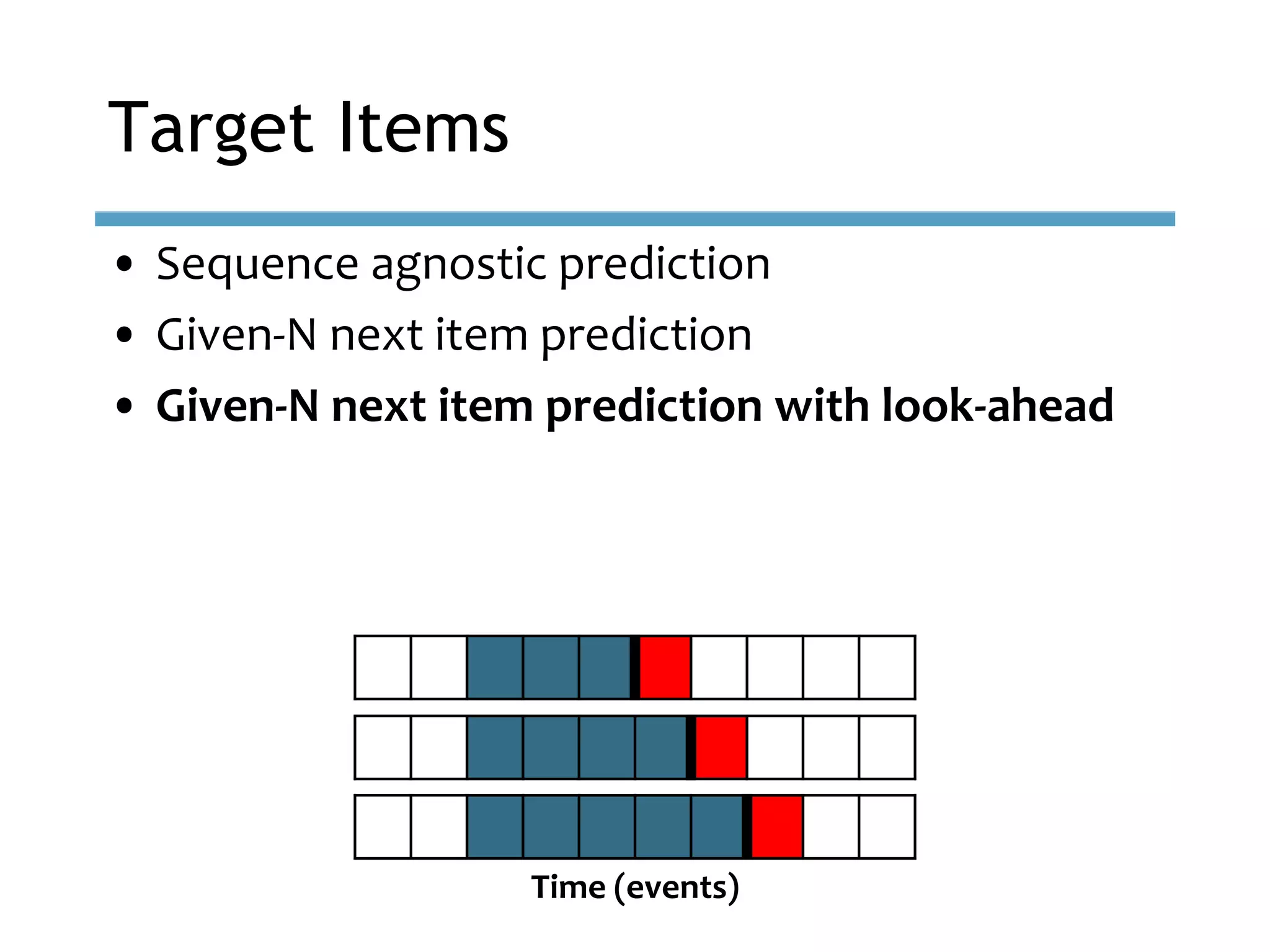

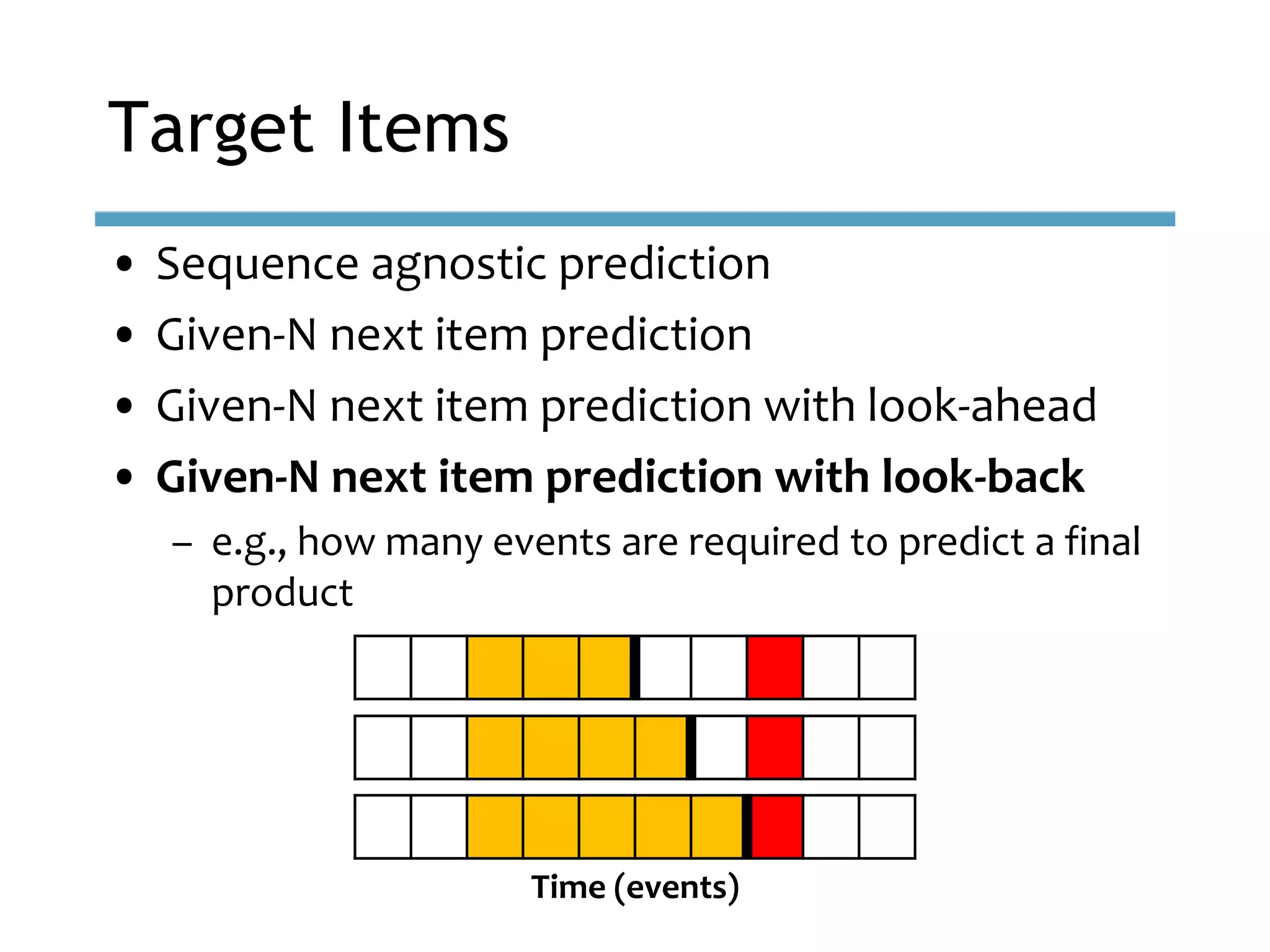

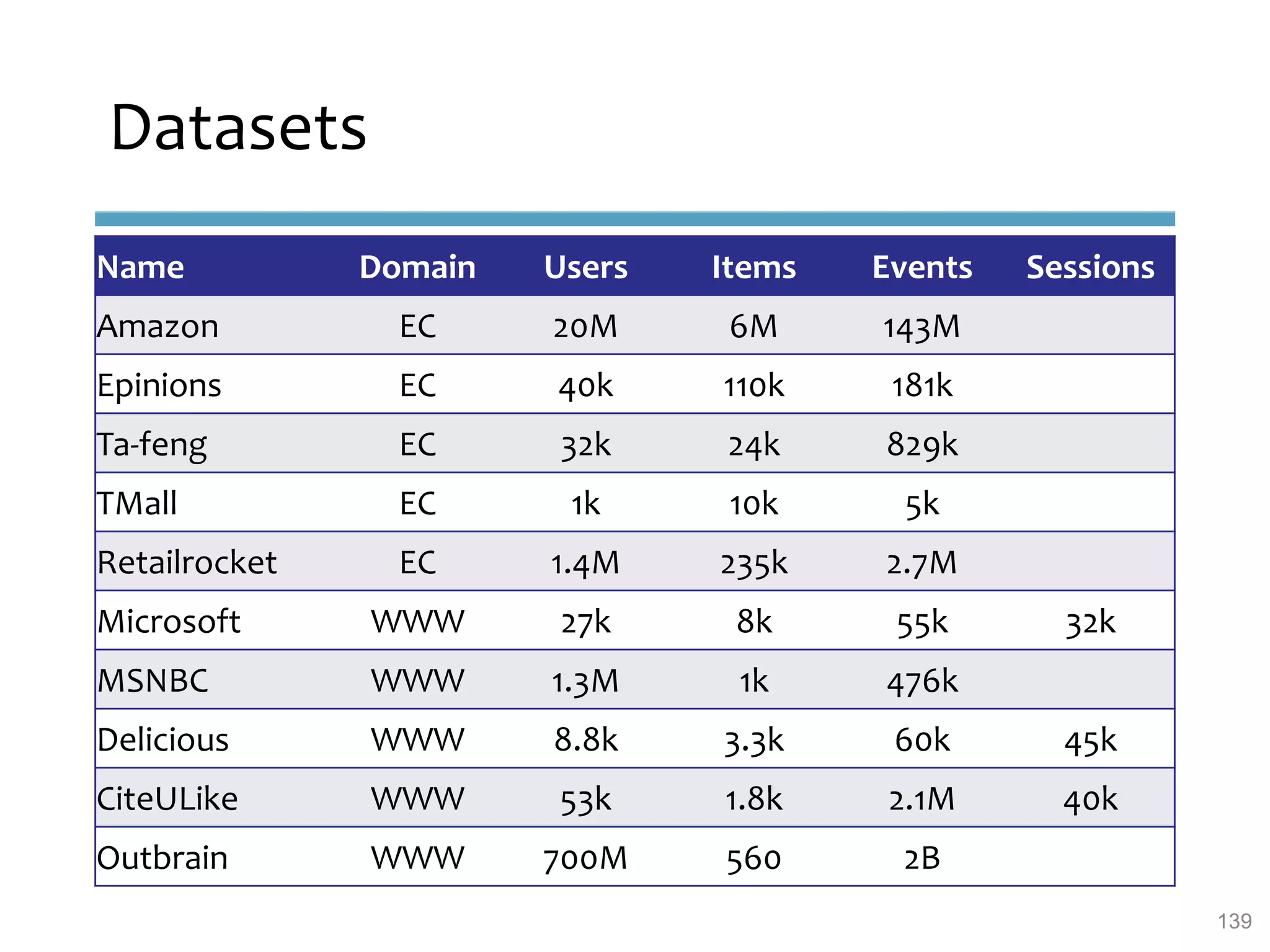

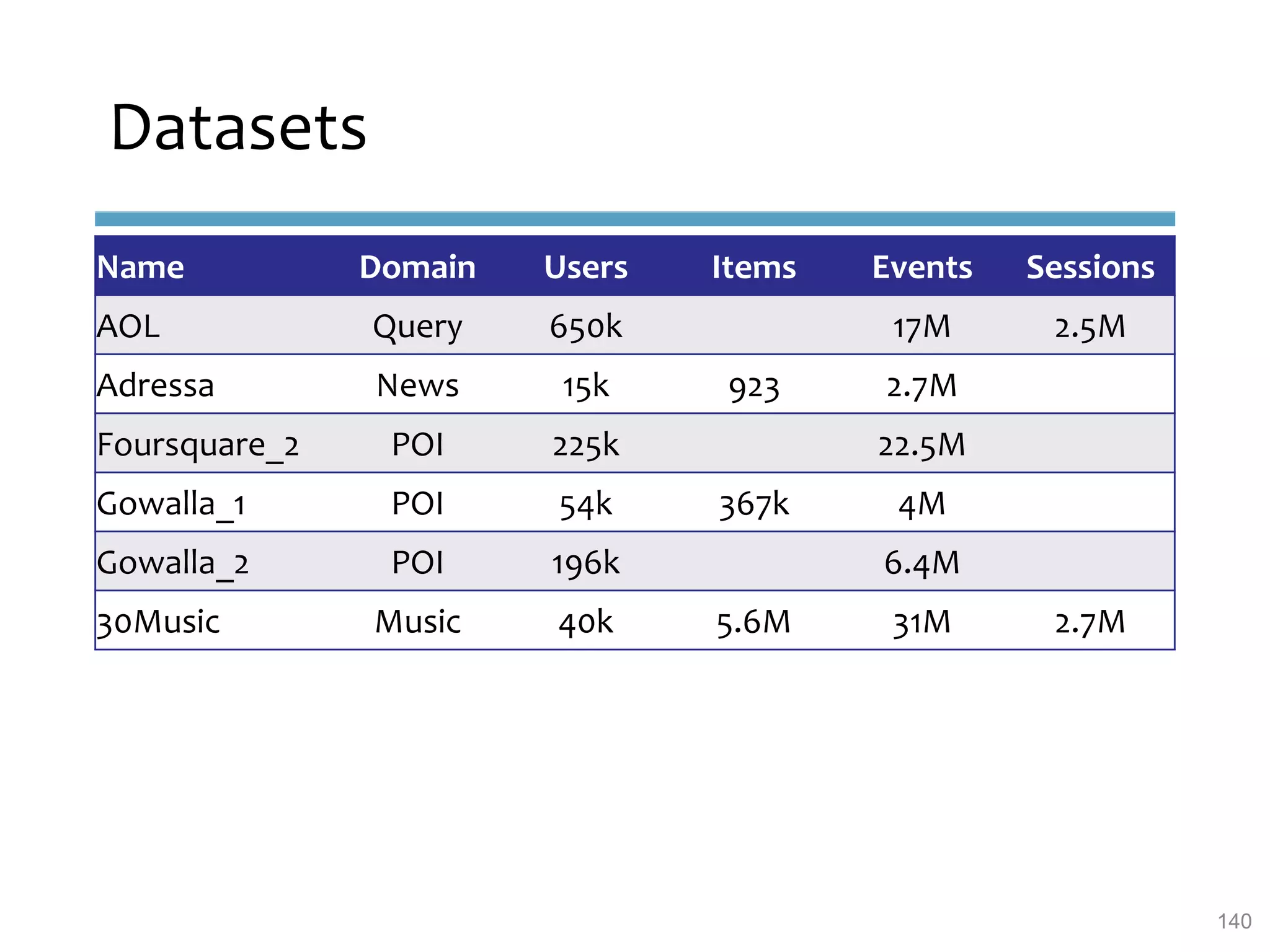

This document gives an overview of sequence-aware recommender systems. Unlike traditional recommenders, which learn from individual user-item pairs, sequence-aware recommenders work with ordered, timestamped sequences of user actions and interactions. The document outlines several types of sequence-aware recommendation problems, such as context adaptation, trend detection, repeated recommendation, and the handling of sequential patterns and order constraints. It also reviews existing algorithmic approaches to sequence-aware recommendation, which include sequence learning techniques such as frequent pattern mining and sequence modeling, as well as hybrid methods.
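To make the frequent-pattern idea concrete, here is a minimal sketch of a next-item recommender built on simple sequential rules of the form "item a was directly followed by item b". It is an illustration under stated assumptions, not a method taken from the document: the function names `fit_sequential_rules` and `recommend_next`, the toy sessions, and the choice to condition only on the last item of a session are all hypothetical.

```python
from collections import Counter, defaultdict


def fit_sequential_rules(sessions):
    """Count how often item b directly follows item a across all sessions."""
    rules = defaultdict(Counter)
    for session in sessions:
        # Pair each item with its immediate successor in the same session.
        for current_item, next_item in zip(session, session[1:]):
            rules[current_item][next_item] += 1
    return rules


def recommend_next(rules, session, k=3):
    """Rank candidates by how often they followed the session's last item."""
    if not session:
        return []
    followers = rules.get(session[-1], Counter())
    return [item for item, _ in followers.most_common(k)]


# Toy interaction logs (hypothetical data): each list is one ordered session.
sessions = [
    ["phone", "case", "charger"],
    ["phone", "case", "screen_protector"],
    ["laptop", "mouse"],
    ["phone", "charger"],
]

rules = fit_sequential_rules(sessions)
print(recommend_next(rules, ["laptop", "phone"]))  # ['case', 'charger']
```

Unlike a recommender trained on unordered user-item pairs, this sketch depends on the order of actions: reversing a session changes the rules that are learned. A sequence-modeling approach would typically condition on more of the session history than the last item alone.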