The Universe on a Tee Shirt

The Universe on a Tee Shirt (A TOE According to One Amateur Scientist) by John Winders

Note to my readers: You can access and download this essay and my other essays through the Amateur Scientist Essays website under Direct Downloads at the following URL: https://sites.google.com/site/amateurscientistessays/ You are free to download and share all of my essays without any restrictions, although it would be very nice to credit my work when quoting directly from them.

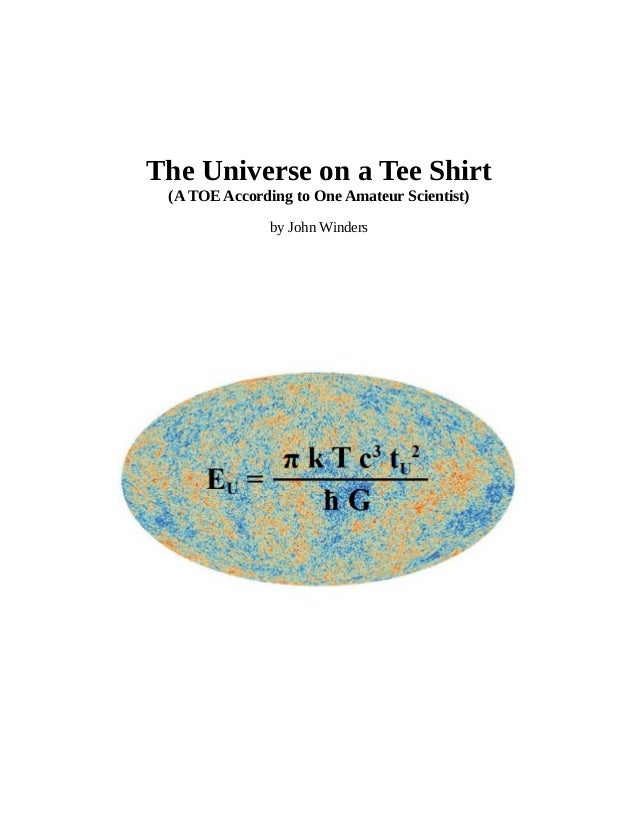

For a long time, theoretical physicists have dreamed of the day when the general theory of relativity and quantum mechanics would be combined to create the Theory of Everything. It is often stated that such a theory would be so simple and concise that the whole thing could be condensed into a single equation that would fit on a T-shirt. It was clear to me that classic material reductionism could not provide a path to that laudable goal, so I undertook an investigation to see what could replace it. That investigation spanned almost 4½ years, and it was documented step-by-step in my essay Order, Chaos and the End of Reductionism, which you can access from my Amateur Scientist Web Page. This research led me to several dead ends, blind alleys, and self-contradictions; however, I never deleted or changed any of my mistakes, in order to preserve and document the evolution of my thinking along each step of the way. The essay presented here is just a condensation of that much longer essay.

The equation on the cover would easily fit on a T-shirt, and I think it really does capture the essence of the Theory of Everything:

$$E_U = \frac{\pi k T c^3 t_U^2}{\hbar G}$$

You'll note that the four fundamental constants of nature are here: Boltzmann's constant, k, the speed of light, c, the reduced Planck constant, ħ, and Newton's gravitational constant, G. Astute readers who are familiar with the Bekenstein-Hawking theory will notice that a piece of the Bekenstein equation is found in it. I believe this is the equation of state of the universe, describing expansion in terms of increasing mass-energy, $E_U$, with respect to a universal time parameter, $t_U$. You might object, “Wait a minute. I thought mass-energy is conserved.” Well, mass-energy is conserved over short time intervals where time-displacement symmetry is valid. What I discovered, however, is that there is no time-displacement symmetry over cosmological time scales, and there's a good reason for that.
The other thing I discovered is that the gravitational constant, G, is not really constant after all, and there's a good reason for that too. So come with me on a short journey to find out what those reasons are. Because this essay is fairly brief, you may have a bit of trouble following the derivation of my theory; therefore, I urge you to go over to the web site and review Order, Chaos and the End of Reductionism, especially Appendices W, X and Y, which go into much more detail.

In its most basic form, reductionism is an approach to understanding the nature of complex things by reducing them to the interactions of their parts, or to simpler or more fundamental things. Engineers and physicists use reductionism to explain reality. I came to the conclusion that there are three different classes of interactions in nature:

1. Deterministic, linear, reversible, certain
2. Deterministic, non-linear, irreversible, predictable in the forward direction
3. Non-deterministic, irreversible, unpredictable (probabilistic)

Reductionism is concerned mainly with the first class of interactions; however, these apply only to the most trivial of situations, such as two bodies orbiting each other and simple harmonic motion. The vast majority of interactions in nature are in the second class, commonly referred to as chaotic interactions. Ironically, it seems that the highly complex order we observe in the universe emerges essentially from chaos. Take, for example, weather patterns like a hurricane, born from chaos and yet having an identity and a quasi-stable structure. The Great Red Spot on Jupiter is a permanent hurricane that has persisted for at least 187 years. Since reductionism is only capable of examining the simplest and most trivial examples of order, I chose the title Order, Chaos and the End of Reductionism to reflect the fact that order and chaos begin where reductionism ends. Another interpretation is that reductionism is at an end as a viable scientific philosophy going forward. As long as you examine nature through linear, deterministic and reversible interactions, you are only seeing reality through a tiny keyhole. How sad it is that a majority of scientists still consider reductionism the preferred default method of doing science. String theory is touted as the whiz-bang cutting edge of theoretical physics, but I perceive old-fashioned reductionism at its core.

Interactions in the third class are stochastic, random, and completely unpredictable. These interactions lie at the heart of quantum mechanics. Oddly enough, some extremely brilliant theoretical physicists (including Albert Einstein, up until his death) deny the very existence of stochastic interactions, believing that some underlying local hidden variables are involved instead. I confess to being guilty of thinking that chaotic interactions might be used as substitutes for stochastic processes, but I was definitely wrong. Experimental violations of Bell's inequality put that idea to rest, and in the face of such incontrovertible evidence I'm amazed there are theoretical physicists who still cling to determinism.

The core of my thesis is this: Entropy equals information. Entropy has been completely misunderstood by many leading scientists, who try to label it as “missing information” or “hidden information” or even “negative information.” This misconception stems from the fact that order and entropy are indeed opposites. People tend to prefer order over disorder, so they equate entropy with something very negative and undesirable. On the other hand, people love information: the more the better. After all, we live in the “information age,” with the Internet offering us cool things like Wikipedia, Facebook, Twitter, and Instagram.
So how can something “good” like information possibly be the same as something so obviously “bad” like entropy? First off, you need to know how information is defined, which unfortunately most physicists do not. Claude Shannon figured it out in the 1940s, and it has everything to do with probability and uncertainty. Suppose there are N possible outcomes of some interaction, each with a certain probability, $p_r$. Shannon concluded that the amount of information, S, contained in that set of outcomes is as follows:

$$S = -\sum_{r=1}^{N} p_r \log_2 p_r$$

If an outcome is certain, i.e., if any of the probabilities in the set equals one, then there is zero information in that set. Suppose I call someone on the phone and tell him it's Saturday. How much information did I relate to that person if he already knew it was Saturday? The answer is zero, because there was no uncertainty on his part about the day of the week. But now suppose that person just woke up from a coma and had no idea what day it was, so all days are equally probable to him. For N equally probable outcomes, the above equation reduces to $S = \log_2 N$, so stating that it's Saturday provides $\log_2 7 \approx 2.81$ bits of information to that person. Notice that $S = \log_2 N$ is identical to Boltzmann's definition of thermodynamic entropy, except that Boltzmann used the natural logarithm instead of the base-2 logarithm and stuck a constant, $k_B$, in front of it: $S = k_B \ln N$.

Once you come to grips with the fact that entropy equals information, it's apparent that information cannot exist without uncertainty. So which class of interactions in nature involves uncertainty? Well, the first class clearly doesn't, because all outcomes can be uniquely solved in both the forward and reverse directions. A single planet revolving around a star will stay in that orbit forever unless it is perturbed by some outside force.
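A minimal Python sketch of Shannon's formula applied to the phone-call example. The function names are hypothetical, not from any library. It also converts bits to Boltzmann's thermodynamic units by multiplying by $k_B \ln 2$ per bit, which amounts to switching from $\log_2$ to $\ln$ and multiplying by $k_B$.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def shannon_entropy_bits(probs):
    """Shannon information S = -sum(p_r * log2 p_r), in bits.

    Outcomes with p_r = 0 are skipped, since p log p -> 0 as p -> 0.
    """
    if not math.isclose(sum(probs), 1.0, rel_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    return sum(-p * math.log2(p) for p in probs if p > 0)

def bits_to_joules_per_kelvin(bits):
    """Thermodynamic entropy of `bits` bits: each bit is worth k_B ln 2."""
    return K_B * math.log(2) * bits

# The caller already knows it is Saturday: a single certain outcome.
already_knew = shannon_entropy_bits([1.0])
# The caller just woke from a coma: seven equally likely days.
after_coma = shannon_entropy_bits([1 / 7] * 7)

print(already_knew)                # no uncertainty, so no information
print(after_coma, math.log2(7))    # both about 2.81 bits
print(bits_to_joules_per_kelvin(after_coma), K_B * math.log(7))  # k_B ln N
```

The zero-probability guard matters only for lists that include impossible outcomes; it mirrors the convention $0 \log 0 = 0$.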
You can determine the exact location of such a planet billions of years into the future or billions of years into the past using a simple formula that describes an ellipse with a time parameter, t.

Can information come from chaos? It might seem that chaos could provide randomness and uncertainty, but this is not the case. Chaotic processes are still deterministic because there is a unique relationship between the current state and subsequent states. Thus, every repetition of a chaotic process from the same starting state will produce exactly the same sequence of events. This is not true in the reverse direction, due to one-to-many relationships between the current state and previous states, rendering chaotic processes irreversible. Thus, irreversibility alone does not generate true uncertainty, at least going forward. Chaotic interactions can rearrange bits, and even make them unrecognizable, but they cannot create new bits. Only the third class, stochastic interactions, can introduce the uncertainty that information requires.

Chaos produces fractal patterns, and these patterns are widespread in nature. So at one point in this investigation, I thought the universe itself might be a colossal fractal. Fractal patterns have extremely high (one might even say infinite) levels of complexity that can be generated by very simple non-linear functions. Fractals have the properties of scale-invariance and self-similarity, where large-scale features are repeated over and over on smaller scales. Those features are not necessarily repeated exactly, however. The Mandelbrot set is one of the most widely known fractals, having a prominent circular feature that appears over and over again on smaller scales. On the smallest scales, this circular feature gradually gives way to different features. You can try this yourself using the interactive Mandelbrot Viewer.

I used to think the general relativity field equations could only be applied to small-scale systems, but I was very wrong. What I discovered is that Einstein had actually stumbled on a set of equations that provides an exact description of the entire universe, and that pattern is only repeated as an approximation on smaller scales involving weak-field interactions. In other words, the universe is a fractal having an exact overall solution given by the Schwarzschild equation, but this equation is not necessarily an exact solution on smaller scales.
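The points about chaos and fractals can both be illustrated with a simple quadratic map. This is an illustrative sketch, not code from the essay; the function names, the starting value 0.123, and the 500-iteration cutoff are arbitrary choices. The logistic map x → 4x(1 − x) is deterministic and chaotic: rerunning it from the same start reproduces the sequence exactly, yet every state has two possible predecessors, so the process cannot be uniquely run backward. The Mandelbrot set comes from the closely related map z → z² + c.

```python
import math

def logistic(x):
    """One step of the logistic map x -> 4x(1 - x), a simple non-linear, chaotic map on [0, 1]."""
    return 4.0 * x * (1.0 - x)

def trajectory(x0, steps):
    """Iterate forward from x0; each state has exactly one successor."""
    xs = [x0]
    for _ in range(steps):
        xs.append(logistic(xs[-1]))
    return xs

def preimages(y):
    """Return both states in [0, 1] that map to y: each state has two possible predecessors."""
    r = math.sqrt(1.0 - y)
    return (1.0 - r) / 2.0, (1.0 + r) / 2.0

def in_mandelbrot(c, max_iter=500):
    """Escape-time test: treat c as inside if z -> z*z + c (from z = 0) stays within |z| <= 2 for max_iter steps."""
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > 2.0:
            return False
    return True

run1 = trajectory(0.123, 50)
run2 = trajectory(0.123, 50)
print(run1 == run2)  # True: repeating the process reproduces it exactly

a, b = preimages(run1[10])
print(a, b, logistic(a), logistic(b), run1[10])  # two different pasts, one present

print([in_mandelbrot(c) for c in (0, -1, 1j, 0.5, 1)])
```

The finite iteration cutoff means points very close to the boundary of the Mandelbrot set can be misclassified; that is a limitation of the escape-time method, not of the set.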
A recurrent theme throughout my investigation is that the most important (and perhaps the only) law of nature is the statement that the entropy of isolated systems cannot decrease. This is the famous second law of thermodynamics, which really should be the zeroth law of the universe because it underlies causality itself. Since entropy and information are equivalent, this law means that information cannot be destroyed. Some scientists try to trivialize this law by saying that there's just a tendency for entropy to increase because it's more likely to increase than to decrease. They say that given enough time (and patience) you'll see an isolated system inevitably repeat some previous lower-entropy state. I state unequivocally that this is not just unlikely but impossible, because it would be tantamount to destroying information and causing the “unhappening” of previous events.

As a corollary to the second law of thermodynamics, I came up with what I call the “post-reductionist universal law,” stated as follows: “Every change maximizes the total degrees of freedom of the universe.” The phrase “total degrees of freedom” sounds kind of nice, which is why I chose it. But the logarithm of the total degrees of freedom equals the total entropy, so what this really means is that every change maximizes the entropy of the universe. Not only can entropy never decrease, it must always increase to the maximum extent possible. Taking this idea to the limit, I postulated that we live in a moment of maximally increasing entropy, which addresses (and maybe solves) the mystery of time. What clocks are actually measuring are increases in entropy, reflected as a reduction in the curvature of the universe unfolding around them, as explained in the following paragraphs. Solving the Schwarzschild equation yields $R = 2EG/c^2$, describing a sphere of radius R, where E is the mass-energy of the system, G is the gravitational parameter, and c is the speed of light.
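Here is a small worked example of the Schwarzschild relation $R = 2EG/c^2$, with E expressed in kilograms. It is a sketch with hypothetical function names, using today's measured G and an assumed $t_U$ of 13.8 billion years (the essay itself only says “13+ billion years”). The Sun is included purely as a familiar sanity check; the last lines anticipate the substitution $R = c\,t_U$ made below, which gives $EG = \tfrac{1}{2} c^3 t_U$.

```python
G_NOW = 6.67430e-11   # m^3 kg^-1 s^-2, today's measured value (CODATA 2018)
C = 2.99792458e8      # m/s, exact
YEAR = 3.15576e7      # s, Julian year

def schwarzschild_radius(mass_kg, g=G_NOW):
    """R = 2 E G / c^2, with the mass-energy E expressed in kilograms."""
    return 2.0 * mass_kg * g / C**2

def mass_for_radius(radius_m, g=G_NOW):
    """Inverse relation: E = R c^2 / (2 G)."""
    return radius_m * C**2 / (2.0 * g)

sun_mass = 1.989e30   # kg
r_sun = schwarzschild_radius(sun_mass)
print(f"Sun: R = {r_sun:.0f} m")

# Setting R = c * t_U (assumed t_U of 13.8 billion years) gives E G = c^3 t_U / 2.
t_u = 13.8e9 * YEAR
e_universe = mass_for_radius(C * t_u)
print(f"E = {e_universe:.2e} kg at t_U = {t_u:.3e} s")
```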
Maximizing the total degrees of freedom (entropy) of the universe means the universe is in a permanent state of maximal entropy, so the only way to further increase entropy is through expansion. The maximum rate of expansion is attained if R increases at the speed of light, which introduces the concept of universal time, $t_U$, where $R = c\,t_U$. The idea that there could be such a thing as universal time is anathema to physicists. After all, we are told space and time are relative, not absolute. However, $t_U$ isn't the Newtonian notion of simultaneity across space. Instead, $t_U$ marks the progress of universal expansion, and while R has the dimension of length, it should be thought of as an expanding radius of curvature around a temporal center, with a surface surrounding the center at a distance $R = c\,t_U$ marking the present moment. No clock can run ahead of $t_U$ because no clock can run ahead of the present moment. A free-falling body will keep up with $t_U$, except when a force acts on the body, causing acceleration and making its proper time lag behind $t_U$.

When we observe objects at some distance in any direction, we see them as they were when the universe had a radius $R' < c\,t_U$. Those objects will fall behind us in time and will appear to recede from us in space, resulting in the cosmological red shift. Objects at a distance $R = c\,t_U$ will be receding at the speed of light and will be at the edge of our horizon.

Substituting $c\,t_U$ for R in the Schwarzschild equation results in $EG = \tfrac{1}{2} c^3 t_U$. This means either the total mass-energy of the universe or the gravitational parameter must increase over time, or both. As it turns out, the gravitational parameter decreases over time, being proportional to $t_U^{-1}$, so E must increase in proportion to $t_U^2$.

If the universe is in a state of maximal entropy, we can apply the Bekenstein equation to it. By combining the Bekenstein and Szilárd equations, we get the following equation of state, where A is the expanding surface area of uniform curvature, $4\pi R^2$, and where $E_U$ is expressed in mass units (energy divided by $c^2$), just as E is in the Schwarzschild equation:

$$c^2\,dE_U = \frac{k T c^3}{4\hbar G}\,dA, \qquad A = 4\pi R^2$$

$$\frac{dA}{dt_U} = 8\pi R\,\frac{dR}{dt_U} = 8\pi c R$$

$$\frac{dE_U}{dt_U} = \frac{1}{c^2}\cdot\frac{k T c^3}{4\hbar G}\cdot 8\pi c R = \frac{2\pi k T c^2 R}{\hbar G} = \frac{2\pi k T c^3 t_U}{\hbar G}$$

Integrating, with the ratio T/G held constant:

$$E_U = \frac{\pi k T c^3 t_U^2}{\hbar G}$$

The above equation of state combines the four fundamental constants k, c, ħ, and G (although G is really a variable, being inversely proportional to $t_U$).
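Because it is easy to lose a factor of $c^2$ when mixing energy and mass units, a quick dimensional check of the equation of state is worthwhile. The sketch below (plain Python; the helper names are hypothetical) tracks the exponents of kg, m, s, and K. It checks that the Bekenstein factor $kTc^3/4\hbar G$ is an energy per unit area (J/m²), that $E_U = \pi k T c^3 t_U^2/\hbar G$ comes out in kilograms (mass-energy in mass units, as it must be if it plays the role of E in $R = 2EG/c^2$), and that $\rho = kT/2\hbar G t_U$ comes out in kg/m³. Dimensionless factors such as π, 2, and 4 are ignored.

```python
# Dimensions as exponent tuples over SI base units (kg, m, s, K).
def dim(kg=0, m=0, s=0, K=0):
    return (kg, m, s, K)

def mul(*dims):
    """Multiplying quantities adds the exponents of each base unit."""
    return tuple(sum(d[i] for d in dims) for i in range(4))

def power(d, n):
    """Raising a quantity to the n-th power multiplies its exponents by n."""
    return tuple(n * e for e in d)

k_B  = dim(kg=1, m=2, s=-2, K=-1)   # Boltzmann constant, J/K
T    = dim(K=1)                     # temperature, K
c    = dim(m=1, s=-1)               # speed of light, m/s
t_U  = dim(s=1)                     # universal time, s
hbar = dim(kg=1, m=2, s=-1)         # reduced Planck constant, J s
G    = dim(kg=-1, m=3, s=-2)        # gravitational constant, m^3 kg^-1 s^-2

# Bekenstein-Szilard factor k T c^3 / (4 hbar G): energy per unit area.
energy_per_area = mul(k_B, T, power(c, 3), power(hbar, -1), power(G, -1))
# The same factor divided by c^2: mass per unit area.
mass_per_area = mul(energy_per_area, power(c, -2))
# E_U = pi k T c^3 t_U^2 / (hbar G)
E_U = mul(k_B, T, power(c, 3), power(t_U, 2), power(hbar, -1), power(G, -1))
# rho = k T / (2 hbar G t_U)
rho = mul(k_B, T, power(hbar, -1), power(G, -1), power(t_U, -1))
# Planck area G hbar / c^3
planck_area = mul(hbar, G, power(c, -3))

print("k T c^3/(hbar G):", energy_per_area)  # exponents of (kg, m, s, K)
print("E_U:", E_U)
print("rho:", rho)
print("Planck area:", planck_area)
```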
The temperature of the universe, T, is also inversely proportional to $t_U$, so the ratio T/G equals a constant that can be evaluated using the current temperature of the universe and the measured value of the gravitational constant. According to the Bekenstein equation, the total entropy expressed in bits is the area of uniform curvature, $4\pi R^2$, divided by $4 \ln 2$ times the Planck area, $G\hbar/c^3$. Since $R^2$ is proportional to $t_U^2$ and the Planck area is proportional to $t_U^{-1}$, the total entropy is proportional to $t_U^3$. There must have been a time in the past when the total entropy of the universe was equal to one bit, which I would guess is the minimum amount of information that has meaning: the information associated with a coin toss. The value of $t_U$ corresponding to a single bit of entropy would be my idea of “The Beginning.”

Information from the past is encoded into the present moment. Linear and chaotic interactions transform those bits according to the laws of determinism without any loss of information, obeying the second law of thermodynamics, a.k.a. the zeroth law of the universe. Meanwhile, stochastic interactions are laying down new bits of information at an increasing rate across an ever-expanding surface of uniform curvature corresponding to the present moment.

One of the raging controversies in the scientific community is the “vacuum catastrophe,” referring to the huge discrepancy between the mass-energy density of the vacuum based on cosmological arguments (given the apparent flatness of space) and the vacuum density of virtual particle pairs based on quantum electrodynamics (QED). Using the model presented in this essay, the density, ρ, is found by dividing $dE_U/dt_U$ by $dV_U/dt_U = 4\pi R^2\,dR/dt_U$, with the assumption that $dR/dt_U$ is at the maximum rate, c:

$$\rho = \frac{dE_U/dt_U}{dV_U/dt_U} = \frac{2\pi k T c^3 t_U/\hbar G}{4\pi c^3 t_U^2} = \frac{k T}{2\hbar G\,t_U}$$

Since T/G is constant, the vacuum density decreases over time in proportion to $t_U^{-1}$.
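The bit count and “The Beginning” can be estimated numerically. This is a sketch under stated assumptions: the present $t_U$ is taken as 13.8 billion years (the essay says only “13+ billion years”), G is assumed to scale as $1/t_U$ normalized to today's measured value, and the function names are hypothetical. Treat the output as an order-of-magnitude illustration of the essay's scaling, not a measured result.

```python
import math

HBAR = 1.054571817e-34   # J s
C = 2.99792458e8         # m/s
G_NOW = 6.67430e-11      # m^3 kg^-1 s^-2, measured today
YEAR = 3.15576e7         # s (Julian year)
T_NOW = 13.8e9 * YEAR    # assumed present value of t_U, in seconds

def g_at(t):
    """Hypothetical G(t), proportional to 1/t_U and normalized to today's value."""
    return G_NOW * T_NOW / t

def entropy_bits(t):
    """Bekenstein count: area 4 pi R^2 over (4 ln 2) Planck areas, with R = c t."""
    area = 4.0 * math.pi * (C * t) ** 2
    planck_area = HBAR * g_at(t) / C**3
    return area / (4.0 * math.log(2) * planck_area)

bits_now = entropy_bits(T_NOW)
# Bits scale as t^3, so the one-bit time is T_NOW * bits_now ** (-1/3).
t_one_bit = T_NOW * bits_now ** (-1.0 / 3.0)
print(f"bits now ~ {bits_now:.2e}, one bit at t_U ~ {t_one_bit:.2e} s")
```

Doubling t multiplies the bit count by 8, which is the $t_U^3$ scaling in numerical form.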
Based on the known values of the parameters used in the formula for ρ, the vacuum density is currently 980 kg/m³, a surprisingly large value. However, it's not nearly as outlandish as the QED value for vacuum density of around $10^{106}$ kg/m³. I'll conclude this essay with some bullet items that capture the key points of my Theory of Everything.

SUMMARY

• There are three kinds of interactions: linear, deterministic, reversible; non-linear, deterministic, chaotic, irreversible; stochastic, probabilistic, irreversible.
• Entropy is equivalent to information.
• Information requires uncertainty; thus, only stochastic interactions are capable of producing information.
• Linear and chaotic deterministic interactions preserve and transform information in causal space according to the “laws of nature.”
• Causal space has one time dimension, requiring three spatial dimensions because they must match the number of rotational degrees of freedom.
• A free-falling observer is incapable of measuring any spatial curvature of three-dimensional space because of rotational symmetry.
• Due to the asymmetry of time, there is a radius of temporal curvature, R, expressed in units of length, centered on the beginning of time.
• Order emerges from chaotic interactions as fractal-like patterns that repeat on different spatial and temporal scales.
• The universe is a fractal with the properties of scale-invariance and self-similarity.
• Due to scale-invariance, solutions to the general relativity field equations are exact solutions for the entire universe and approximate solutions for its subparts.
• The Schwarzschild formula $R = 2EG/c^2$ is an exact formula for a closed system, e.g., the universe. However, the radius of the universal pseudosphere is exactly twice this value.
• The universe is in a permanent state of maximal entropy, so the Bekenstein equation can be applied to it. Thus, the universe must expand in order to accommodate more information.
• There exists a universal time parameter, $t_U$, which marks the expansion of the universe.
• The universe expands maximally at a rate $dR/dt_U$ that is bounded by the speed of light, c.
• Since $t_U$ corresponds to the present moment, the proper time of an observer cannot get ahead of $t_U$. The geodesic paths of free-falling bodies maximize proper time up to the limit of $t_U$.
• Time, having a radius of curvature equal to R, does not have time-translation symmetry over cosmological time periods. Thus, the law of conservation of mass-energy does not apply to the universe as a whole.
• The quantity of mass-energy in the universe increases in proportion to $t_U^2$.
• The quantity of entropy-information in the universe increases in proportion to $t_U^3$.
• There is an equivalency between mass-energy and entropy-information (“it equals bit”).
• Since mass-energy and entropy-information increase at different rates, they are linked by the Szilárd equation with a decreasing temperature, T, proportional to $t_U^{-1}$.
• The vacuum density of mass-energy is $\rho = kT/(2\hbar G\,t_U)$, with a present value of 980 kg/m³.

Appendix A – A Picture Is Worth 1,000 Words

The schematic diagram below explains the cosmology of the Theory of Everything. The universe expands from left to right beginning at a time t' = 0, which can be interpreted as the “big bang” or whatever initial state is appropriate. The magenta curves indicate “now” surfaces of uniform curvature having radii of curvature, R', centered on t' = 0. The present radius of curvature at Here and Now is $R = c\,t_U$, where $dR/dt_U = c$. Looking out into space in any direction, x, y, or z, we see the universe as it was when it was younger, when the radius R' of the “now” surface of uniform curvature was smaller. Because the universe was younger at these locations, their times appear to lag behind $t_U$ from the vantage point of Here and Now, creating time dilation proportional to the distance $\sqrt{x^2 + y^2 + z^2}$. This is the reason for the cosmological red shift. Temperatures, T', shown above the red thermometers, are inversely proportional to time t', so true temperatures are proportional to the distance $\sqrt{x^2 + y^2 + z^2}$. However, because of the cosmological red shift, all temperatures appear the same from our vantage point of Here and Now. The so-called cosmic microwave background (CMB) is actually the composite of the temperatures of every era after those temperatures have been red-shifted in proportion to distance. The CMB isn't just one red-shifted temperature from a particular era, but all temperatures after they've been red-shifted.

The radius of curvature always expands at the speed of light at a surface of uniform curvature: $dR'/dt' \equiv c$. However, cosmological time dilation slows distant expansion velocities from the vantage point of Here and Now. This makes objects from previous eras appear to recede from Here and Now, as shown by the green arrows. Thus, the true origin of the Hubble constant is the apparent slowing down of distant recessional velocities caused by cosmological time dilation.

Appendix B – The Point of Inflection

According to the standard cosmological model (SCM), the universe is currently on the precipice of something big. Since the big bang, gravity has been slowing the rate of universal expansion, until now. Dark energy (also known as the cosmological constant) has recently caused the rate of expansion to start picking up. In the future, the expansion will continue to accelerate, causing a number of cosmologists to fear that space itself will be torn apart. They call this future event “the big rip.” In calculus, the point on a curve where the slope stops decreasing and starts increasing is called a point of inflection. The size of the universe is now at a point of inflection. On the other hand, according to my Theory of Everything (TOE), the rate of expansion as expressed by the radius of temporal curvature is, was and always will be equal to the speed of light.

The graphs below compare the SCM and TOE models. “Time Since the Beginning” and “Radius” are on normalized scales, where 1.0 on the time axis corresponds to $t_U$ ≈ 13+ billion years and 1.0 on the radius axis corresponds to $R = c\,t_U$. The blue curve corresponds to the SCM and the red line corresponds to my TOE. The point of inflection of the blue curve is occurring at t' = 1.0.

The SCM rationale for a rate of expansion that decreases and then increases is as follows. Whereas gravity puts the brakes on expansion, it's becoming a non-factor as the universe expands because no additional matter is being added to the universe. On the other hand, since the density of dark energy is constant, there is more outward “pressure” to expand as the universe gets larger, and this is just now overwhelming the tendency for the universe to collapse under gravity. Doesn't it seem a bit odd that a once-in-a-universe event such as this would wait until just after the human species had a chance to evolve into intelligent creatures and begin to contemplate the universe? Or could it be that astronomers really don't know how big or how old the universe actually is? After all, if distances to the “standard candles” used by astronomers to judge distances are slightly off, then the calculated previous rates of expansion will be off too. The most reliable “standard candles” are in our immediate vicinity, so if the rate of expansion right around us seems to be X, then it just might be that the rate of expansion always has been X.

My TOE looks at things differently than the SCM does: Mass-energy is being added to the universe and it is proportional to $t_U^2$, while entropy (which drives expansion) is proportional to $t_U^3$. Temperature, being proportional to $t_U^{-1}$, balances the two, keeping the rate of expansion constant. It's not that the radius of curvature is forced to expand at the speed of light; instead, the speed of light is forced to equal the rate of expansion in order to prevent travel into the past, which would violate causality.
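The three exponents are mutually consistent through the Szilárd-style relation dE = T dS: with $S \propto t_U^3$ and $T \propto t_U^{-1}$, each increment is $dE \propto t_U^{-1} \cdot t_U^2\,dt_U = t_U\,dt_U$, which integrates to $E \propto t_U^2$. The numerical sketch below checks this in arbitrary units, with every proportionality constant set to 1 and a small nonzero starting time (both assumptions made only for illustration; the function names are hypothetical).

```python
import math

def temperature(t):
    """Assumed T proportional to 1/t (arbitrary units)."""
    return 1.0 / t

def entropy(t):
    """Assumed S proportional to t^3 (arbitrary units)."""
    return t ** 3

def energy(t, t_start=1e-3, steps=20000):
    """Accumulate dE = T dS from t_start to t using the trapezoidal rule."""
    h = (t - t_start) / steps
    total = 0.0
    for i in range(steps):
        a = t_start + i * h
        b = a + h
        d_s = entropy(b) - entropy(a)
        total += 0.5 * (temperature(a) + temperature(b)) * d_s
    return total

# Log-log slope between two times: a value of 2 means E grows as t^2.
t1, t2 = 10.0, 20.0
slope = math.log(energy(t2) / energy(t1)) / math.log(t2 / t1)
print(f"E ~ t^{slope:.3f}")
```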

Appendix C – Some Observations and Experiments

One of the most controversial claims of my TOE is that Newton's so-called constant, G, is a variable that decreases over cosmological time, and I think there is some evidence suggesting that G is not constant. Astronomers estimate distances by comparing the apparent luminosities of stars, which fall off with the square of their true distances from Earth, with their intrinsic luminosities. The intrinsic luminosity increases with the star's diameter and its surface gravity. If the value of G were greater in the past, the intrinsic luminosity of a distant star would be greater than that of an identical nearby star. In other words, if G were greater in the past, distant stars would actually be farther away from Earth than their apparent luminosities would indicate.

The following chart shows measurements of the cosmological red shift based on the apparent luminosities of distant stars. The blue circles depict stars with distances computed using “standard candles” based on the assumption that G is a constant. The red circles depict the same stars assuming greater values of G and higher intrinsic luminosities in the past, which shifts their true distances toward the right. Based on standard cosmology, the rate of expansion of the universe is slowing down. This is shown by the blue line, which bends upward with increasing distance. My TOE claims that the cosmological red shift must be linear over distance, since all observers recede from the Beginning at the same speed (c) in their own frames of reference. Based on their greater true intrinsic luminosities, distant stars are shifted from the curved blue line toward the straight red line.¹ This doesn't prove a thing; however, if G does decrease over cosmological time, it would explain why the observed rate of expansion seems to have slowed down even if the true rate were constant.
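The distance argument rests on the inverse-square law, $F = L/4\pi d^2$. A short sketch (hypothetical names and numbers, not taken from the chart) shows that if a star's intrinsic luminosity is underestimated by a factor f, the distance inferred from its measured flux is too small by a factor $\sqrt{f}$.

```python
import math

def distance_from_flux(luminosity, flux):
    """Inverse-square law F = L / (4 pi d^2), solved for d."""
    return math.sqrt(luminosity / (4.0 * math.pi * flux))

# Hypothetical values in arbitrary units, for illustration only.
observed_flux = 1.0e-12
standard_candle_l = 1.0   # luminosity assumed under a constant G
boost = 1.21              # hypothetical: the star was 21% brighter when G was larger

d_assumed = distance_from_flux(standard_candle_l, observed_flux)
d_true = distance_from_flux(standard_candle_l * boost, observed_flux)
print(d_true / d_assumed, math.sqrt(boost))  # distances scale as sqrt(L)
```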
Astronomers claim that observations of distant supernovae prove that G has remained constant for billions of years. But this assumes an average star in the past began with the same amount of material as an average star forming today. If G were significantly greater in the past, couldn't smaller stars then appear much the same as medium-sized Sun-like stars today?

Observations of Mars clearly indicate remnants of rivers, lakes, and possibly seas on the Martian surface. Although today's temperatures at the Martian equator can reach 20°C at high noon, the mean surface temperature is −60°C. These chilly temperatures are partly due to the fact that Mars doesn't have much of an atmosphere, but it's doubtful that a thicker atmosphere would make much […]

¹ This means that distances to faraway galaxies are greater than indicated by astronomical observations.


- 12. As depicted on the chart, most of the blue dots fall outside the instrument-error ranges of other blue dots and follow the length-of-day curve. Anderson and the other authors are quick to point out that they don’t believe a change in the Earth’s rotation itself affects the G measurements, nor do they believe G actually changes. Thus, they are at a loss to explain why measured values of G are correlated with LOD, although they suspect there is a common driver. Some have suggested periodic changes in the Earth’s core affect LOD, which is reasonable. But why would changes in the Earth’s core affect laboratory measurements of G at the surface? Well here’s my hypothesis: The drivers behind the variations in measurements of G and LOD are true variations in G itself. Gravitational mass and inertial mass are two sides of the same coin. If the true value of G increases, the Earth’s inertia will also increase. Then in order to conserve angular momentum, the Earth’s speed of rotation must slow down, thus increasing the LOD. At any rate, Cavendish torsion balance data strongly suggest that G is not a constant but a variable.4 The fact that the period of G correlates with the period of LOD is interesting, although it might just be a coincidence. But matching amplitudes would really support the hypothesis of a causal connection between them. From the Anderson et al paper, the measurements of G over the 5.9-year LOD cycle vary between 6.672 and 6.675 10 –11 m 3 s – 2 kg – 1. Unfortunately, different labs made these measurements using different instruments with different statistical errors. At any rate, the measurements deviated 0.0225% from the mean value. I looked up the LOD over a 5.9-year period, and the variations deviated about 1.5 ms from the mean sidereal day of 86,164 sec. These LOD variations are only 0.0000017% compared to the hypothetical 0.0225% variation of G. The angular momentum of a sphere having a mass M and radius R is given by the formula L = ⅘ ω M R2.. 
The speed of rotation, ω, is inversely proportional to M, so if angular momentum is conserved while G (along with the inertial mass M) truly varies by 0.0225%, the LOD should vary by about 19 seconds over a 5.9-year cycle. This is about 13,000 times larger than the observed LOD variations, and such a huge discrepancy would seem to show my TOE hypothesis is all wet; however, some relevant facts were left out. The angular momentum of a sphere depends on R², and the Earth is an oblate spheroid, with its radius bulging out at the equator. Since the Earth is not perfectly rigid, the equatorial bulge would move slightly inward as G increases and the rotation slows down. Such a slight decrease in R might almost, but not quite, cancel the slowing of the rotation due to an increase in inertial mass, M. In other words, a more detailed model of an elastic Earth might show that the observational data actually align with the hypothesis that G changes over time and causes LOD to change in sync with G. In my opinion, this warrants further research. 4 I still don’t have a clue why G would vary over a 5.9-year cycle, although it should be correlated with the local ambient cosmological temperature. Unfortunately, I don’t know of a way to measure that temperature directly, but I’d love to know if the LODs of other planets in our solar system also follow the same 5.9-year cycle.
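The amplitude comparison above can be reproduced with a short sketch. It uses only the figures quoted in the text. It assumes the same simplification the argument starts from: a rigid, uniform sphere whose radius does not change. Under that assumption, the day length changes by the same fraction as M, and therefore by the same fraction as G.

```python
# Reproduces the G-versus-LOD amplitude comparison using the figures quoted above.
G_MIN, G_MAX = 6.672e-11, 6.675e-11   # m^3 kg^-1 s^-2, range of G over the 5.9-year cycle
SIDEREAL_DAY = 86_164.0               # s, mean sidereal day
LOD_DEVIATION = 1.5e-3                # s, observed deviation of the LOD from the mean

g_mean = (G_MIN + G_MAX) / 2
g_frac = (G_MAX - G_MIN) / 2 / g_mean     # fractional deviation of G from its mean
lod_frac = LOD_DEVIATION / SIDEREAL_DAY   # fractional deviation of the LOD

# Rigid sphere, fixed R: L = (2/5) * omega * M * R**2 is conserved, so omega ∝ 1/M
# and the day length (2*pi/omega) changes by the same fraction as M (and hence G).
predicted_lod_change = g_frac * SIDEREAL_DAY
ratio = predicted_lod_change / LOD_DEVIATION

print(f"G deviation:          {g_frac:.4%}")
print(f"LOD deviation:        {lod_frac:.7%}")
print(f"Predicted LOD change: {predicted_lod_change:.1f} s")
print(f"Predicted/observed:   {ratio:,.0f}")
```

It should report a G deviation of about 0.0225%, a predicted LOD change of about 19.4 s, and a predicted-to-observed ratio of roughly 13,000. These results are consistent with the rounded figures in the text. An elastic-Earth correction to R, as suggested above, is not modeled here.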

- 13. Appendix C – Some Observations and Experiments One of the most controversial claims of my TOE is that Newton’s so-called constant, G, is a variable that decreases over cosmological time, and I think there is some evidence that suggests G is not constant. Astronomers estimate distances by comparing the apparent luminosities of stars, which fall off as the inverse square of their true distances from Earth, with their intrinsic luminosities. The intrinsic luminosity increases with the star’s diameter and its surface gravity. If the value of G were greater in the past, the intrinsic luminosity of a distant star would be greater than that of an identical nearby star. In other words, if G were greater in the past, distant stars would actually be farther away from Earth than their apparent luminosities would indicate. The following chart shows measurements of the cosmological red shift based on the apparent luminosities of distant stars. The blue circles depict stars with distances computed using “standard candles” based on the assumption that G is a constant. The red circles depict the same stars assuming greater values of G and higher intrinsic luminosities in the past, which shifts their true distances toward the right. Based on standard cosmology, the rate of expansion of the universe is slowing down. This is shown by the blue line, which bends upward with increasing distance. My TOE claims that the cosmological red shift must be linear over distance, since all observers recede from the Beginning at the same speed (c) in their own frames of reference. Based on their greater true intrinsic luminosities, distant stars are shifted from the curved blue line toward the straight red line.1 This doesn’t prove a thing; however, if G does decrease over cosmological time, then it would explain why the observed rate of expansion seems to have slowed down even if the true rate were constant.
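The inverse-square reasoning above can be illustrated with a minimal sketch. The observed flux and the 50% luminosity "boost" are purely hypothetical illustration values. They are not derived from any G-dependent stellar model and do not come from the chart. The sketch shows that if a star's true luminosity exceeds the standard-candle assumption by a factor f, the inferred distance is too small by a factor of √f.

```python
import math

def luminosity_distance(luminosity_w: float, flux_w_m2: float) -> float:
    """Distance implied by the inverse-square law F = L / (4*pi*d**2)."""
    return math.sqrt(luminosity_w / (4 * math.pi * flux_w_m2))

SUN_LUMINOSITY = 3.828e26   # W, IAU nominal solar luminosity
observed_flux = 1e-12       # W/m^2, hypothetical measurement
boost = 1.5                 # hypothetical: true luminosity 50% above the standard-candle value

d_standard = luminosity_distance(SUN_LUMINOSITY, observed_flux)
d_boosted = luminosity_distance(boost * SUN_LUMINOSITY, observed_flux)

# The true distance exceeds the standard-candle distance by sqrt(boost).
print(d_boosted / d_standard)
```

Because the ratio depends only on the boost factor, the choice of reference luminosity and flux does not affect the conclusion.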
Astronomers claim that observations of distant supernovae prove that G has remained constant for billions of years. But this assumes an average star in the past began with the same amount of material as an average star forming today. If G were significantly greater in the past, couldn’t smaller stars then appear much the same as medium-sized Sun-like stars today? Observations of Mars clearly indicate remnants of rivers, lakes, and possibly seas on the Martian surface. Although today’s temperatures at the Martian equator can reach 20°C at high noon, the mean surface temperature is −60°C. These chilly temperatures are partly due to the fact that Mars doesn’t have much of an atmosphere, but it’s doubtful that a thicker atmosphere would make much 1 This means that distances to far-away galaxies are greater than astronomical observations indicate.

- 14. Addendum – Revised Calculation of the Vacuum Energy Density The TOE is still a work in progress, and I want to preserve everything for the record, including my mistakes.7 My preliminary estimate of the vacuum mass-energy density, ρ, was based on the assumption that the cosmological temperature, T, is approximately equal to the CMB temperature of 2.73 K. In my essay The Hidden Secrets of General Relativity Revealed, the cosmological temperature was calculated as the temperature of the expanding Bekenstein-Hawking surface, which is strictly a function of the age of the universe: $T = \hbar / (4\pi t_U k)$. This value turned out to be much, much smaller than 2.73 K. Based on this calculation, the cosmological temperature is 1.37 × 10⁻³⁰ K, which reduces my original estimate of ρ (980 kg/m³) by 30 orders of magnitude! Combining $\rho = k T / (2 \hbar G t_U)$ with the above expression for T reduces ρ to the following simple formula: $\rho = 1 / (8\pi G t_U^2)$. Using the current measured value of G and assuming $t_U$ ≈ 14 billion years = 4.4 × 10¹⁷ seconds, we get the following result: ρ = 3.08 × 10⁻²⁷ kg/m³. Earlier I mentioned the so-called “vacuum catastrophe” producing wildly different estimates of vacuum energy density depending on which methodology is used. The Hubble-constant methodology places ρ between 6.4 × 10⁻²⁷ kg/m³ and 7.2 × 10⁻²⁷ kg/m³, and happily my new value falls below this range but within about a factor of two of it. It should be noted, however, that there is a significant amount of hand waving associated with all currently accepted cosmological models8, especially those models featuring a so-called “accelerating expansion of the universe.” In each case, astronomical distance measurements are off because they’re based on the assumption that universal expansion has been slowing down over time9 instead of maintaining a constant rate, c. In contrast, ρ derived from my TOE is based entirely on first principles and uses only a single astronomical measurement, $t_U$.
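The two formulas for ρ in the Addendum can be checked numerically with a short sketch. It assumes CODATA values for ħ, k and G and the text's approximation $t_U$ = 4.4 × 10¹⁷ s. It computes T, evaluates ρ both as $kT/(2\hbar G t_U)$ and as $1/(8\pi G t_U^2)$, and confirms that the two agree.

```python
import math

HBAR = 1.054571817e-34   # J s
K_B = 1.380649e-23       # J/K
G = 6.67430e-11          # m^3 kg^-1 s^-2 (present-day value)
T_U = 4.4e17             # s, the text's approximation for ~14 billion years

T = HBAR / (4 * math.pi * T_U * K_B)              # cosmological temperature
rho_general = K_B * T / (2 * HBAR * G * T_U)      # rho = k T / (2 hbar G tU)
rho_simple = 1 / (8 * math.pi * G * T_U**2)       # rho = 1 / (8 pi G tU^2)

print(f"T   = {T:.3e} K")
print(f"rho = {rho_simple:.3e} kg/m^3")
assert math.isclose(rho_general, rho_simple, rel_tol=1e-12)
```

With these inputs, ρ comes out at about 3.08 × 10⁻²⁷ kg/m³ and T at about 1.38 × 10⁻³⁰ K. Both are close to the quoted values; small differences depend on how $t_U$ is rounded.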
In conclusion, although I made a serious error in assuming T is the same as the CMB temperature, the huge discrepancy between 1.37 × 10⁻³⁰ K and 2.73 K might be explained if the latter value (the observed temperature of all radiating matter in the universe after all red shifts are taken into account) is not strongly coupled with the cosmological temperature. In other words, the two temperatures decrease over time at different rates and are “disconnected” from each other because the universe is in a perpetual state of disequilibrium due to expansion. It is possible, however, that the two temperatures could asymptotically converge over time if the universe were to attain a state of equilibrium. 7 The mistakes I made in this essay are shown as strike-through characters in the text. 8 A universal model having a contemporaneous volume with a fixed amount of mass-energy is fundamentally wrong. The “interior volume” of the universe is a mirage: reality is embedded in an asymmetric temporal surface with curvature diminishing over time and an implied radius of curvature, $R = c t_U$; however, R should never be interpreted as the radius of a contemporaneous spherical volume. Furthermore, the amount of mass-energy distributed throughout this fictitious volume is not constant, as assumed in most of the prevailing cosmological models. Instead, the total mass-energy is distributed across Bekenstein-Hawking surfaces of uniform temporal curvature while increasing in proportion to $t_U^2$. 9 To compound the problem, there’s a consensus among cosmologists that the universe has only recently “decided” to accelerate its expansion, requiring “dark energy” to fuel this acceleration. Then there’s the problem of spiral galaxies appearing to rotate much faster than they should according to Newton’s law of gravity, requiring “dark matter” to boost their rotational speeds. No wonder the field of cosmology is such a hopeless mess.
(By the way, Erik Verlinde’s thermodynamic theory of gravity solves the rotation problem naturally without including dark matter. Kudos to Verlinde for having the courage to promote a very unpopular idea.)

- 15. Appendix E – Cosmic Goldilocks Soon after Einstein completed his masterpiece, the General Theory of Relativity, he noticed that the GR field equations revealed a disturbing property of the universe; namely, that gravitating matter distributed throughout space would cause it to collapse. Einstein fixed that problem by inserting a so-called cosmological term, $\Lambda g_{\mu\nu}$, into the field equations, which exactly cancelled the gravitational pull of the matter that would otherwise cause collapse. Physically, Λ corresponds to a fixed quantity of energy per unit volume of empty space. In contrast, the density of gravitating matter decreases as the volume grows, because the total amount of matter in the bulk universe is assumed to be constant. This produces a highly unstable condition: if the volume of the universe should grow by just a smidgen, this would result in runaway expansion, and if it should shrink by just a smidgen, it would ultimately lead to the very collapse that Einstein feared. In order to keep things in balance, Λ would have to be ridiculously fine-tuned, just like the porridge in the story of Goldilocks: not too hot and not too cold, but just right. Eventually, Einstein gave up on his cosmological constant after it was discovered that the universe is indeed expanding. Once $\Lambda g_{\mu\nu}$ was removed from the GR field equations, gravitating matter would simply slow down the expansion. This raised another question: would gravitating matter lose its grip and allow the universe to expand forever, or would it be powerful enough to reverse the expansion and lead to a Big Crunch? Well, it turned out that the universe is very nearly flat, poised at the very edge between eternal expansion (an open universe with negative curvature) and ultimate collapse (a closed universe with positive curvature).
This led to another serious Goldilocks issue, called the “flatness problem.” Alan Guth came to the rescue with his inflation theory, which seemed to solve the problem, at least for the time being.10 Then a few more monkey wrenches were thrown in. Spiral galaxies appear to rotate much faster than they ought to, according to Newton’s law of gravity and the amount of visible matter these galaxies contain. To fix that problem, cosmologists concluded that there must be enormous spherical clouds of invisible “dark matter” embedded in those galaxies. The problem is that far more “dark matter” is needed to fix the anomalous rotations than there is ordinary matter.11 This would surely result in a very closed universe and a very rapid collapse, so an ad hoc solution popped up again: Einstein’s old cosmological constant, Λ, given a new name, “dark energy.” But in order to keep the universe nearly flat with all that “dark matter,” there had to be a lot of “dark energy.” Of course, now we’re right back to the Goldilocks problem of how Nature achieved such a delicate balance between so much “dark matter” and “dark energy.”12 Then in 1998 it was discovered that just about now, exactly 13.8 billion years after the big bang, “dark energy” took over, and the universal expansion is speeding up!13 In fact, it’s speeding up so much that the universe will be torn apart in a hypothetical “Big Rip” at some point, maybe in our not-too-distant future. 10 Guth’s inflation model solves the riddle of how the universe got so perfectly flat by postulating an inflation event that occurred just before the Big Bang. Here, the universe inexplicably underwent an exponential expansion, and then it stopped just as inexplicably as it started. This dramatic expansion conveniently “ironed out” all the wrinkles in space-time and flattened it out perfectly. 11 Estimates vary, but a rule of thumb is that the universe has five times as much “dark matter” as regular matter.
12 So here’s the latest rundown: 68% of the universe is “dark energy,” 27% is “dark matter,” and only 5% is ordinary matter. Of course none of this is true, but it illustrates how hopelessly messed up the cosmological model is. 13 One might ask why the expansion decided to accelerate just as the human species evolved, crawled down from the trees, walked out of the African savanna, invented agriculture, science, mathematics and telescopes, and finally formulated a general theory of relativity that explains all of this. Wouldn’t it have been much more plausible for the acceleration to start either much earlier or much later than this special moment in time? Is it possible that cosmological distances to remote objects, as estimated from astronomical observations, are way off due to a faulty model, and the Hubble expansion is actually perfectly linear?

- 16. Then the so-called Vacuum Catastrophe made matters even worse. According to quantum electrodynamics (QED), empty space is filled with energy in the form of virtual particles, and this form of “dark energy” produces the same effect as Einstein’s cosmological constant. The problem is that when all the virtual particles are added up, they amount to one ginormous amount of energy, 120 orders of magnitude more than is needed to balance out all the gravitating mass. The proponents of QED are unwilling to admit their theory is wrong, so they believe something must be canceling out all this energy, but they just don’t know what it might be. Here’s another way of looking at the balancing act between gravity and Λ. According to general relativity, there is a cosmological pressure, $P_c$, associated with space-time that is equal to minus the energy density of the vacuum, $\rho_e$. Contrary to intuition, a negative cosmological pressure pushes space apart whereas a positive pressure pulls it together. (Because gravitational energy is negative, it generates positive pressure by definition.) The universal expansion rate will slow down when net $P_c$ is positive and speed up when net $P_c$ is negative. According to current models of a bulk universe based on general relativity, vacuum energy density is constant, so if the initial expansion rate was great enough, at some point the universe will cross over into negative-pressure territory and lead to the “Big Rip.” Well, let’s see how my TOE balances positive and negative pressures. Thanu Padmanabhan published a paper in 2009 entitled “Thermodynamical Aspects of Gravity: New Insights” showing there is a deep connection between general relativity and thermodynamics. In particular, he shows that the Schwarzschild solution of the field equations at an event horizon is mathematically equivalent to the fundamental thermodynamic equation $T\,dS - dE = P\,dV$, where P is interpreted as a cosmological pressure, $P_c$.
If we are actually living on such an event horizon, this equation would describe our world. Dividing both sides of the equation by dV gives $P_c = T\,dS/dV - dE/dV = T\,dS/dV - \rho_e$. There are two components of $P_c$: a positive term, $P_m = T\,dS/dV$, corresponding to gravitating mass, and a negative term, $P_e = -\rho_e$, corresponding to vacuum energy density. In the preceding Addendum to this essay, the vacuum energy density, ρ, was expressed as a mass density in kg/m³: $\rho = 1/(8\pi G t_U^2)$. Using mass-energy equivalence, $E = mc^2$, we convert ρ into an energy density, $\rho_e = \rho c^2$. Since this term enters the equation as a negative quantity, it represents negative pressure that accelerates expansion: $P_e = -\rho_e = -c^2/(8\pi G t_U^2)$. The positive term in the thermodynamic equation, $P_m = T\,dS/dV$, represents positive pressure that decelerates expansion: $P_m = T\,(dS/dA)(dA/dV) = T\,\left(k c^3/4G\hbar\right)(2/R)$. Here $dS/dA = k c^3/(4G\hbar)$ is the Bekenstein-Hawking entropy per unit area, and $dA/dV = 2/R$ follows from $A = 4\pi R^2$ and $V = \tfrac{4}{3}\pi R^3$. In the Addendum, $T = \hbar/(4\pi t_U k)$, and of course $R = c t_U$, as usual. Substituting those values of T and R into the above expression for $P_m$, we get $P_m = c^2/(8\pi G t_U^2)$. ∴ $P_c = T\,dS/dV - dE/dV = c^2/(8\pi G t_U^2) - c^2/(8\pi G t_U^2) = 0$. Positive and negative pressures automatically cancel each other when space is treated as the surface of an expanding event horizon described by a thermodynamic model, instead of as a bulk volume. This eliminates Goldilocks problems requiring contrived solutions. As Einstein famously said, “We cannot solve our problems with the same thinking we used when we created them.” It’s time to abandon the kind of thinking that led to these problems in the first place.
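The cancellation of $P_m$ and $P_e$ can be checked numerically. The sketch below assumes CODATA constants and $t_U$ = 4.4 × 10¹⁷ s. It builds $P_m$ step by step from T, dS/dA and dA/dV exactly as in the derivation above, builds $P_e$ from $\rho_e = c^2/(8\pi G t_U^2)$, and confirms that the two sum to zero within floating-point rounding.

```python
import math

C = 299_792_458.0        # m/s
HBAR = 1.054571817e-34   # J s
K_B = 1.380649e-23       # J/K
G = 6.67430e-11          # m^3 kg^-1 s^-2
T_U = 4.4e17             # s

T = HBAR / (4 * math.pi * T_U * K_B)          # cosmological temperature (Addendum)
R = C * T_U                                   # radius of curvature
dS_dA = K_B * C**3 / (4 * G * HBAR)           # Bekenstein-Hawking entropy per unit area
dA_dV = 2 / R                                 # from A = 4 pi R^2 and V = (4/3) pi R^3

P_m = T * dS_dA * dA_dV                       # positive (decelerating) term
rho_e = C**2 / (8 * math.pi * G * T_U**2)     # vacuum energy density, rho * c^2
P_e = -rho_e                                  # negative (accelerating) term

print(f"P_m = {P_m:.4e} Pa, P_e = {P_e:.4e} Pa, P_c = {P_m + P_e:.1e} Pa")
assert math.isclose(P_m, rho_e, rel_tol=1e-12)
```

The cancellation is exact algebraically for any $t_U$. The numerical check only confirms that no factor was dropped in the substitutions.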

- 17. Appendix F – Natural Units of Measure Most objects with which we are familiar have a height, a width and a depth, otherwise known as volume, and have contemporaneous parts, meaning all parts coexist in the same moments of time. A contemporaneous object like a bowling ball has a mass that can be weighed on a scale, and a volume that can be found by measuring its circumference with a tape measure. People (including most cosmologists) tend to envision the entire volume of the universe as a contemporaneous object. But as I’ve shown, the only contemporaneous features of the universe are two-dimensional Bekenstein-Hawking event horizons, whose Schwarzschild solutions are equivalent to the fundamental thermodynamic equation. Space has three degrees of freedom, and volume is defined as height × width × depth. Any arbitrary direction chosen as “depth” automatically points toward a temporal Beginning. With one degree of freedom assigned to “depth,” the two other degrees of freedom are perpendicular to time and to each other, and lie on a contemporaneous, expanding Bekenstein-Hawking surface with a radius of curvature $R = c t_U$. Space has rotational symmetry, so all three dimensions are “depth” directions pointing back toward the past. In other words, a spatial volume is not a contemporaneous object but rather a container of residual information left over from the past. The larger the volume becomes, the less contemporaneous it is. Cosmological temperature, T, and Newton’s gravitational parameter, G, decrease over cosmological time while the quantity of information, H, per unit volume is constant. This is shown as follows, starting with the fundamental thermodynamic equation at the horizon with P = 0: $T\,dS = dE$, so $dS/dV = T^{-1}\,dE/dV = c^2/(8\pi T G t_U^2)$, recalling $\rho_e$ from Appendix E. Dividing the above expression by k converts entropy, S, into information, $H_N$, expressed in nats.
$dH_N/dV = c^2/(8\pi k T G t_U^2)$ The above expression has dimensions of m⁻³ (the nat being a pure number). Furthermore, since T and G both decrease in proportion to $1/t_U$, the product $G t_U^2 k T$ is constant, so $dH_N/dV$ indeed has a constant value, which can be calculated by substituting the present-day values of G, T, and $t_U$ into the above equation: $dH_N/dV$ = 1.44 × 10⁴³ nat/m³. The volume corresponding to one nat of information, $V_N$, is found by inverting $dH_N/dV$. The natural length, $l_N$, and natural time, $t_N$, follow directly from this value: $V_N = (dH_N/dV)^{-1}$ = 6.94 × 10⁻⁴⁴ m³, $l_N = V_N^{1/3}$ = 4.11 × 10⁻¹⁵ m, and $t_N = l_N/c$ = 1.37 × 10⁻²³ s. Finally, the natural unit of mass, $M_N$, is defined by the quantum of angular momentum, ħ, using the dimensions of angular momentum: L = mass × velocity × length. $M_N = \hbar/(c\,l_N)$ = 8.56 × 10⁻²⁹ kg. Conventional science considers Planck units fundamental, but in this TOE those units change over time because Newton’s parameter, G, decreases over time. The natural units of measure above were derived from the thermodynamic properties of Bekenstein-Hawking surfaces of uniform temporal curvature instead of from the GR equations of a non-contemporaneous bulk volume. The natural units of measure are fundamental because they do not change over time.
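The chain from $dH_N/dV$ to the natural units can be sketched in a few lines. The sketch assumes CODATA constants and $t_U$ = 4.4 × 10¹⁷ s, and uses $kT = \hbar/(4\pi t_U)$ from the Addendum. With that substitution, the information density simplifies to $c^2/(2\hbar G t_U)$, which the tests below check.

```python
import math

C = 299_792_458.0        # m/s
HBAR = 1.054571817e-34   # J s
G = 6.67430e-11          # m^3 kg^-1 s^-2
T_U = 4.4e17             # s

# With k T = hbar / (4 pi tU), c^2 / (8 pi k T G tU^2) simplifies to c^2 / (2 hbar G tU).
kT = HBAR / (4 * math.pi * T_U)
info_density = C**2 / (8 * math.pi * kT * G * T_U**2)   # nats per m^3

V_N = 1 / info_density        # m^3 per nat
l_N = V_N ** (1 / 3)          # natural length
t_N = l_N / C                 # natural time
M_N = HBAR / (C * l_N)        # natural mass, from hbar = mass * velocity * length

for name, value, unit in [("dH_N/dV", info_density, "nat/m^3"), ("V_N", V_N, "m^3"),
                          ("l_N", l_N, "m"), ("t_N", t_N, "s"), ("M_N", M_N, "kg")]:
    print(f"{name:8s} = {value:.3e} {unit}")
```

With $t_U$ = 4.4 × 10¹⁷ s, the results land within about 1% of the values quoted above. The exact figures shift slightly with the assumed age of the universe.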

- 18. Appendix G – The Impossible TOE Geometry The TOE presented in this essay is based on an expanding temporal surface having a decreasing uniform curvature, κ = 1/R, with a radius of curvature measured in spatial units, $R = c t_U$. Space is perfectly symmetrical with three orthogonal degrees of freedom (aka dimensions) per Noether’s theorems. This leads to a rather peculiar type of geometry. The Minkowski metric defines four-dimensional space-time as follows: $-c^2\,d\tau^2 = (dx^0)^2 + (dx^1)^2 + (dx^2)^2 + (dx^3)^2$. The variable τ is proper time as measured by a clock moving through space-time. The variable $x^0 = i c t$ is the radius of the time coordinate expressed in spatial units (where $i^2 \equiv -1$). The variables $x^1$, $x^2$, and $x^3$ are the customary three dimensions of space. The reciprocal of the radius $x^0$ squared is the Gaussian curvature, $K = 1/(i c t)^2 = -1/(c t)^2 < 0$, signifying that time has negative curvature.14 The significance of negative curvature is that it folds away from the center of curvature everywhere instead of folding inward and around it; this kind of curvature is impossible to visualize in ordinary Cartesian space. The figure below is a schematic diagram of the geometry I’m trying to describe. An arrow labeled (x) points away from an observer, O, toward the Beginning. The two remaining degrees of freedom, (y) and (z), lie in a flat space-like plane orthogonal to (x), which is tangent to the blue temporal surface at the point O (“Here” and “Now”). Due to its negative curvature, the temporal surface bends away from the center of curvature, separating the observer’s past, to the left of the surface, from the future, to the right. As $t_U$ increases, the temporal surface becomes flatter (its Gaussian curvature becoming less negative). It’s very important to remember, however, that the temporal surface is not an ordinary sphere embedded “within” three-dimensional space. It sits entirely outside space in an “imaginary dimension” owing to its imaginary radius, i c t.
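The $x^0 = i c t$ convention can be illustrated with Python's built-in complex numbers. The sketch below is illustrative only. The epoch t and the clock speed 0.6c are hypothetical values. It shows two things. First, $1/(ict)^2$ is a negative real number. Second, for a clock moving at constant speed, the sum of the squared coordinate increments equals $-c^2\,d\tau^2$.

```python
import math

C = 299_792_458.0          # m/s
t = 4.4e17                 # s, hypothetical epoch (roughly the present age)
x0 = 1j * C * t            # imaginary "radius" of the time coordinate

K = 1 / x0**2              # Gaussian curvature 1/(i c t)^2
print(K)                   # negative real part, zero imaginary part

# Interval check for a clock moving at v = 0.6c during dt = 1 s of coordinate time.
dt, v = 1.0, 0.6 * C
dx0, dx1 = 1j * C * dt, v * dt
dtau = dt * math.sqrt(1 - (v / C) ** 2)    # proper time from time dilation
print(dx0**2 + dx1**2, -(C * dtau) ** 2)   # the two values agree
```

The sign in the interval comes entirely from $i^2 = -1$ in the time coordinate. This is the same feature that makes the curvature K negative.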
Travelers within the red plane would trace paths to the left of the temporal surface. This is strictly forbidden, because those paths go back in time, which could only be achieved at speeds faster than light. Also, the observer cannot see, hear, touch, taste, or smell anything lying on that plane. Objects in the space-like plane will be accessible only in the observer’s future. Each observer experiences reality differently as it evolves over time, so this scheme is radically relativistic, observer-dependent, and 14 A 2-dimensional surface of uniform negative curvature is a pseudosphere, having a surface area equal to $4\pi |R|^2$, the same as an ordinary sphere.

- 19. Appendix D – What’s Below the Ontological Basement? The British physicist Paul Davies famously stated that information occupies the ontological basement of reality. From my own studies over the past decade, I gradually arrived at the same conclusion: entropy (information) underlies everything we call physical “reality” or “universe” (see two of my other essays, Order, Chaos and the End of Reductionism and Relativity in Easy Steps). This implies a hierarchical chain, as follows. Information → Time → Space → Energy → Matter The last four items in the chain (time, space, energy and matter) form what physicists define as the material universe in its entirety, but a growing number of physicists, like Davies, are beginning to suspect that these are just the four upper floors of an edifice with a basement below them consisting of information. In fact, some have concluded that the key to a unified theory of quantum physics and gravity lies in the language of information. The question that remains is whether information is ontologically complete in and of itself. I’ve struggled with that question, and lately have come to the conclusion that it must depend on something else which stands completely apart from time, space, energy and matter. My reasoning is as follows. Most of us intuitively think of information as something that must act on a physical object. For example, flash drives store data using electrical charges applied to billions of tiny transistors. Information (according to Claude Shannon’s definition) requires uncertainty, so if each of those transistors could exist only in one possible state, there would be zero uncertainty about the flash drive’s actual configuration, rendering it useless because it couldn’t store any information.
On the other hand, a functional 8 GB flash drive can store 6.8719 × 10¹⁰ bits of information, meaning the drive may be in any of $2^{6.8719 \times 10^{10}}$ unique configurations, an insane number of possibilities with a huge uncertainty. The flash drive’s information storage function depends entirely on uncertainty. We can envision physical computer hardware coming off the assembly lines without any firmware, operating system, software, or data. In other words, hardware can exist without containing any information, but we cannot imagine firmware, an operating system, software or data existing in empty space without the physical hardware. Thus, information truly requires a physical substrate to act upon. The aforementioned Szilárd equation reveals that energy equals information multiplied by the temperature-dependent proportionality factor $k_B T$, suggesting that a substrate having thermodynamic properties5 must exist for the energy ↔ information equivalence to be valid. Ontological information relies on a universal substrate I’ll refer to as “Q”. Q (the universal substrate) → Ontological Information (the physical universe) The problem is that Q cannot consist of either matter or energy, because mass-energy is just information in a condensed form. This suggests Q must be at least one additional level below Davies’ ontological basement, distinct from the physical universe itself and existing beyond it. The metaphor of physical reality represented by a building with information as its ontological basement is even more appropriate if we represent Q by the soil surrounding and supporting the basement. The soil can easily exist without the building, but not the other way around. Being outside the physical universe, Q seems similar to the description of the Deity.6 In any case, whatever Q is, it cannot be described or accessed as if it were a physical object.
5 In my essay Relativity in Easy Steps, I show how the general relativity field equations can be rearranged to describe a physical “substance” having energy, entropy and temperature, implying that space-time can be thought of as a “field” having physical and thermodynamic properties. Unfortunately, space-time cannot serve as the necessary substrate for information because space and time are both derived from information itself. 6 Except that the conventional western Deity is said to reside “above” the universe instead of “below” it.
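The flash-drive figures in Appendix D can be checked with a short sketch. It assumes that "8 GB" means 8 GiB (8 × 2³⁰ bytes), which reproduces the 6.8719 × 10¹⁰ bits quoted. The number of configurations, 2 raised to that power, is far too large to print. The sketch therefore counts its decimal digits and expresses the same capacity in nats (1 bit = ln 2 nats).

```python
import math

# "8 GB" taken as 8 GiB = 8 * 2**30 bytes, which reproduces the 6.8719e10 bits in the text.
n_bits = 8 * 2**30 * 8
print(f"{n_bits:,} bits")                         # 68,719,476,736 bits

# 2**n_bits is too large to print; count its decimal digits instead: floor(n * log10(2)) + 1.
digits = math.floor(n_bits * math.log10(2)) + 1
print(f"2**n_bits has about {digits:.3e} decimal digits")

# Shannon information when every configuration is equally likely, expressed in nats.
print(f"{n_bits * math.log(2):.4e} nats")
```

The number of configurations has roughly 2 × 10¹⁰ decimal digits, which gives a sense of the "insane number of possibilities" mentioned in the text.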

- 20. Appendix H – Empty Space Finally Weighs In Information is equivalent to mass-energy, and every cubic meter of space contains the same quantity of information, 1.44 × 10⁴³ nats. So one might reasonably ask how much a cubic meter of “empty” space weighs. The answer depends on the cosmological temperature, T, because the ratio of mass-energy to information is proportional to T according to the Szilárd equation. The Addendum to this essay determined the mass-energy density of space taking T into account: $\rho = 1/(8\pi G t_U^2)$ kg/m³. By inspection, ρ is proportional to $1/t_U$ because $G t_U$ is constant. As $t_U \to 0$, $\rho \to \infty$. The state of infinite density is the “singularity” in the Big Bang model.17 Incremental mass, dM, is equal to ρ times the incremental volume $dV = 4\pi R^2\,dR = 4\pi c^3 t_U^2\,dt_U$ (using $R = c t_U$): $dM = 4\pi c^3 t_U^2\,dt_U/(8\pi G t_U^2) = c^3 t_U\,dt_U/(2 G t_U)$. Since $2 G t_U$ is a constant, we can substitute the present-day values, $G_P$ and $t_P$, into the denominator. The total mass-energy of the universe, M, is then found by integrating dM over $0 \le t_U \le t_P$: $M = \frac{c^3}{2 G_P t_P}\int_0^{t_P} t_U\,dt_U = \frac{c^3 t_P^2}{4 G_P t_P} = \frac{c^2 R}{4 G_P}$. Solving for the radius, $R = 4 G_P M/c^2$. R is exactly twice the Schwarzschild radius, $R_S = 2 G_P M/c^2$, corresponding to the mass M. Equivalently, M is one half of the mass whose Schwarzschild radius would equal R.18 Another principle on which my TOE is based involves two information densities that are uniform: the information density per unit volume and the information density per unit surface area.19 According to the Bekenstein-Hawking principle, the information contained within a volume cannot exceed the information capacity of the surface surrounding it, and my TOE hinges on it. So let’s see if that principle holds when applying the relationships derived in this essay. Let $\rho_{HV}$ = uniform information density per unit volume = $c^2/(8\pi k T G t_U^2)$. Let $\rho_{HA}$ = uniform information density per unit surface area = $c^3/(4\hbar G)$. The Bekenstein-Hawking information limit can be expressed by the following inequality.
$\int_V \rho_{HV}\,dV \le \int_A \rho_{HA}\,dA$. Since $\rho_{HV}$ and $\rho_{HA}$ are uniform, applying the inequality to each incremental shell of volume dV bounded by the new area dA gives $dV/dA \le \rho_{HA}/\rho_{HV}$, that is, $dV/dA = \tfrac{1}{2}R = \tfrac{1}{2} c t_U \le \left[c^3/(4\hbar G)\right]\left[8\pi k T G t_U^2/c^2\right]$. From the Addendum we learned $T = \hbar/(4\pi t_U k)$. Making this substitution, everything on the right-hand side of the inequality reduces to $\tfrac{1}{2} c t_U$. 17 However, unlike other cosmological big-bang models, my TOE does not postulate that total mass-energy is constant. Instead, total mass-energy increases because information, the mass-energy equivalent, increases in proportion to total volume. This is allowed because time is asymmetric, making the law of mass-energy conservation invalid. 18 A sphere of constant density has a mass proportional to its volume, but a sphere saturated with information becomes a Schwarzschild sphere having a mass proportional to its radius: $M_S = c^2 R/(2G)$. A pseudosphere has half the volume and would weigh half as much as an ordinary sphere if both have the same density and the same radius. This might explain the 1:2 ratio of $M : M_S$. 19 The information density per unit area is constant across a B-H surface in “information saturation.”

- 21. The inequality $\tfrac{1}{2} c t_U \le \tfrac{1}{2} c t_U$ is true, and it also shows the volume is always in a state of “information saturation” limited by the information capacity of the B-H area. Therefore, the numerical relationships involving mass-energy, information, temperature, time, space, and gravitation in the TOE fit together perfectly, and they point back to the fundamental B-H theorem. One might reasonably ask the following questions: “If information is equivalent to mass-energy and empty space is already saturated with information, then how can planets, stars, galaxies, etc. be added to the vacuum without exceeding the B-H limit and causing information overflow? Must we invent some sort of ‘dark energy’ to cancel out the excess mass-energy?” Fortunately for the sake of science, my answer to the second question is “No.” Now I’ll answer the first question. According to Wheeler’s “it from bit” principle, mass-energy is information in condensed form. All the raw material needed to build the fundamental particles comprising the physical universe is already available from the vacuum itself. But you may object, “The amount of mass in a lump of clay is far greater than the equivalent mass of the information contained in the lump’s volume.” Your objection would certainly be true based on the current cosmological temperature, on the order of 10⁻³⁰ K. But according to the Szilárd equation, all we have to do to increase mass is to raise the temperature of the vacuum. This is similar to quantum field theory, which says mass-energy is an excitation of an underlying quantum field. In this case, the underlying quantum field is space and time itself with its thermodynamic properties, and it can be excited by raising its temperature. The lump of clay could be seen as a “hot spot” in the vacuum. The vacuum (cosmological) temperature is proportional to curvature: $T \propto 1/t_U \propto 1/R = \kappa$. In other words, a local “hot spot” increases local curvature.
You could even go as far as to say that mass and curvature are equivalent.20 It’s extremely important to make a distinction between information and data. Information measures the number of ways bits could be arranged, while data represent a particular arrangement of bits. Think of empty space as a digital thumb drive where all the bits are scrambled in a completely random arrangement with no recognizable patterns. When data are entered into this thumb drive, the bits are arranged into patterns that represent physical reality (“it from bit”). Arranging bits doesn’t reduce the number of ways they could still be arranged, so no information is actually lost by arranging them. However, the arrangement does remove some uncertainty, and information is thereby converted into the form of recognizable physical objects (mostly particles) with much higher temperatures and mass-energies than the random background vacuum information at prevailing cosmological temperatures. Because there’s a 1:1 correspondence between the number of bits on the temporal surface and the number of bits in the so-called volume it surrounds, this brings about the radical idea of a 1:1 correspondence between events on a 2-dimensional holographic surface and events unfolding in a 3-dimensional space (the hologram). This is the holographic principle, which is gaining some traction in the science community. Its best-known concrete realization is the AdS/CFT correspondence, where AdS stands for anti-de Sitter space and CFT stands for conformal field theory.21 It would be a serious mistake to interpret the holographic principle as being two equivalent versions 20 Albert Einstein and Nathan Rosen got the idea in 1935 that the elementary particles (only a couple of elementary particles were known at that time) are actually tiny knots in space-time geometry. Unfortunately, their idea didn’t go anywhere.
Today, theoretical physicists are shamelessly using the Einstein-Rosen paper to justify their own wild sci-fi fantasies involving “wormholes” and “time tunnels” reaching out into far-away regions of space. I’ve read their paper; they mentioned no such thing, and in fact they explicitly warned against drawing that kind of erroneous conclusion from their equations.

21 AdS is an n-dimensional manifold with constant negative curvature. Its conformal boundary is, up to a conformal rescaling, (n − 1)-dimensional Minkowski space-time. I have no way of describing what conformal field theory is, except to say it’s way over my head.

- 22. of reality occurring simultaneously in two very remote places. According to my TOE, the space surrounding the observer is an historical record of what has already happened on an earlier temporal surface. In other words, the correspondence isn’t taking place in real time at all. Events appearing close to the observer happened on recent temporal surfaces, while events appearing farther away happened on earlier temporal surfaces. Objects observed “out there” are just a data trail left behind by the temporal surface. The area of that surface increases in proportion to $t_U^2$, and the information density on its surface, $\rho_{HA}$, increases in proportion to $t_U$ owing to a decreasing gravitational parameter, $G$. The total information on the surface therefore grows as $t_U^3$, the same rate at which the enclosed volume grows, so the residue of information left behind in the vacuum has a constant density per unit volume.22 Seeing the hologram unfold in 3-D is like watching a movie, except that different images appearing simultaneously on the screen could have formed on different temporal surfaces at very different times, depending on their relative distances from the observer. The rules of special relativity link those non-contemporaneous images together causally. CAUTION: Please do not misinterpret the schematic diagram below as a three-dimensional representation of physical reality. It is impossible to imagine (or draw) actual physical reality in three dimensions. The spaghetti shapes emerging from the blue curved surface are high-energy hot-spot data forming on a cold temporal surface as it unfolds into the future at the speed of light. The temporal surface sheds those data, along with other information from its surface, into the volume it leaves behind on the left. The residual hot-spot data are then interpreted as “particles” observed moving and evolving in space-time. The colors correspond to temperatures, red being the coolest and yellow being the hottest. Hotter temperatures correspond to higher mass-energies per unit information.
The “particles” trace “world lines” that start and end in space-time. For example, the yellow line began at an earlier time than the red and orange lines in this diagram. As previously cautioned, the above diagram is a schematic representation only. I consider so-called “world lines” a poor representation of reality in general, because time is an imaginary distance ($ict$), so it shouldn’t be shown as part of a “block universe” or similar representation as if it were another spatial dimension with a real distance. Also, the hot spots should be thought of as spread out over an entire temporal surface instead of emerging from specific locations as shown above. Unlike other models based on the holographic principle, this TOE places the mind of the observer within the expanding holographic surface (the temporal surface) instead of in the middle of the hologram (space) with the holographic surface far away. The mind finds the hologram image so convincing that it projects itself into the middle of this image. Taking our experiences from a 2-dimensional holographic surface and projecting them onto a participatory 3-dimensional hologram is a truly amazing feat. It’s a cosmic magic show the mind performs for itself.23

22 The information density per unit volume can be considered a never-changing fundamental constant like the speed of light, c. As shown in Appendix F, fundamental units of length, time, and mass are based on this quantity.

23 The Sanskrit word māyā (माया) describes the illusory or false aspect of reality. It literally means “magic show” or “illusion.”
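The scaling argument for a constant volume density of information can be checked with a short Python sketch. It uses only the two proportionalities stated in the text, surface area $\propto t_U^2$ and surface information density $\rho_{HA} \propto t_U$, plus the assumption that the volume left behind is a ball whose radius grows linearly with $t_U$ (the surface unfolds at the speed of light). Proportionality constants are set to 1 because only ratios matter, and the function names are illustrative.

```python
def surface_area(t: float) -> float:
    # Area of the temporal surface, taken as proportional to t_U**2 (constant set to 1).
    return t**2

def surface_info_density(t: float) -> float:
    # rho_HA, taken as proportional to t_U because G decreases (constant set to 1).
    return t

def enclosed_volume(t: float) -> float:
    # Ball whose radius is proportional to t_U, so its volume goes as t_U**3 (constant set to 1).
    return t**3

def info_per_volume(t: float) -> float:
    # Total surface information (density times area) grows as t_U**3.
    total_info = surface_info_density(t) * surface_area(t)
    return total_info / enclosed_volume(t)

for t in (1.0, 10.0, 1e3, 1e17):
    print(t, info_per_volume(t))
```

The ratio comes out the same at every time, which is the constant volume density that footnote 22 treats as a fundamental constant.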

- 23. Appendix I – Life in the Hologram

The English physicist James Jeans famously wrote the following. “Today there is a wide measure of agreement, which on the physical side of science approaches almost to unanimity, that the stream of knowledge is heading towards a non-mechanical reality; the universe begins to look more like a great thought than like a great machine. Mind no longer appears as an accidental intruder into the realm of matter; we are beginning to suspect that we ought rather to hail it as a creator and governor of the realm of matter.” We have seen that the physical universe (aka space-time) we assume exists around us is just an historical record of information and data in an expanding 2-dimensional Now. Would it make any sense to be in an historical record instead of being in Now? How else could we be, other than being in Now? So the idea that we are really in Now but choose to mentally project ourselves into a space-time hologram of the past may not be too far-fetched. Chris Niebauer, a neuropsychologist and author, has extensively studied how the left and right hemispheres of the human brain operate. He made the following observation.24 “… the right brain is key in spatial processing. Rather than focusing on one thing at a time, the right brain senses the whole picture – both the things themselves and the spaces in between. One could say that the right brain understands that a figure is determined by its background; something the left brain tends to overlook. The truth is that no figure could exist without the background and the shape of the background is dependent upon the figure.” In other words, the brain’s left hemisphere25 focuses mostly on material objects while ignoring the “empty” spaces between them. But what would reality look like if there were no spaces between objects? And as we saw in Appendix H, space is filled to the brim with information.
In fact, even the tiny spaces between the atomic nucleus and the electron shells surrounding it are filled with “virtual particles” that affect the properties of atoms. So empty space matters even if it is ignored. The diagram below depicts how things are organized in an observer’s space-time hologram. Space runs horizontally and time runs vertically. An observer, O, sits atop a light cone, whose surface is defined by the light paths from distant objects to the observer, as shown by the dashed lines arranged in a circle 360° around the vertical axis of the cone. All visible objects lie along this ultra-thin surface.26 As far as the observer is concerned, nothing exists outside the cone. The only objects that exist are shown as colored spheres within the cone or along its surface. The spheres are embedded in a blue volume of “empty space,” which we know is filled with information. Objects visible along the

24 From Niebauer’s book No Self No Problem, page 97.

25 Some consider the left hemisphere the “seat of consciousness” or the “thinking” part of the brain.

26 Only two spatial dimensions can be shown. In actual space-time, the 3-dimensional cone is a 4-dimensional hypercone. All visible objects lie along the ultra-thin 3-dimensional surface of a hypercone.

- 24. cone’s surface include stars, galaxies, and everything else the observer can see with the naked eye or through telescopes. Objects inside the cone’s surface are not visible, but they do have causal impacts on the observer. For instance, the observer’s great-great-great-grandparents living inside the light cone (all now deceased) are not visible to the observer; however, the observer’s DNA came from all 32 of those individuals, so they are very much causally linked. The observer cannot see dinosaurs roaming the Earth inside the light cone, but the observer can study the fossils they left behind and imagine what those creatures may have looked like. The problem with the space-time version of reality is that light paths along the surface of the light cone mix space and time together. This is a consequence of the brain’s inability to envision 4-dimensional hyperbolic space-time, where three space axes are real and one time axis is imaginary. So in order to produce a working model the brain can process and the mind can comprehend, the time dimension had to be combined with one of the space dimensions, the one pointing away from the observer. This leads to the illusion that objects “out there” are contemporaneous, whereas they are really separated in both distance and time by in-between spaces filled with information. Objects in the space-time hologram are organized according to their relevance to the observer. Contemporaneous objects close to the observer are generally more relevant than objects separated from the observer by time and distance. Since the mind of the observer is attached to a physical brain with limited processing power, the most relevant objects appear large and sharp, with much higher resolution than the least relevant objects, which appear small and fuzzy, with poor resolution.
Objects inside your home are much more relevant to your survival than objects in the Andromeda galaxy, so the former are given much higher priority in your space-time hologram. The total information within the non-contemporaneous volume of space-time equals the information distributed across the expanding temporal surface, $H_S = \pi c^5 t_U^2 / \hbar G$. The hologram created by the observer significantly limits this information in a number of ways.

1. The observer is located at the apex of a “light cone” in the hologram (actually a 4-dimensional hypercone),27 which excludes all information outside the hypercone’s surface. The hologram of one observer excludes at least a part of every other observer’s hologram.
2. The “vastness of space” that seems so impressive to the observer is only the 3-dimensional outer surface of the hypercone. This surface is razor-thin in comparison to the interior of the hypercone, where everything else is causally linked to the observer yet completely invisible.
3. Objects in the hologram are organized according to their relevance to the observer. There are far fewer data bits available for observing distant objects than close-up objects.
4. Spaces between objects in the hologram are filled with much more information than the objects themselves, yet this space appears “empty” and is generally ignored by the observer. As Niebauer notes, figures are determined as much by their backgrounds as by themselves.

The observer can zoom in on distant objects by moving closer to them. This involves travel, which is restricted by the laws of special relativity, and of course travel alters the hologram. The reader might object to the statement that observers “create” their own holograms. The counterargument is that every hologram is uniquely observer-centric and can be altered by reorienting the observer. This would not be the case if there were only a single, universal hologram.
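As a numerical cross-check, the Python sketch below evaluates $H_S = \pi c^5 t_U^2 / \hbar G$ and compares it with the Bekenstein-Hawking count $A / 4\ell_P^2$ for a sphere of radius $c\,t_U$, where $\ell_P^2 = \hbar G / c^3$. The present-day value $t_U \approx 13.8$ billion years is an assumption supplied for illustration, as is the use of today's CODATA values for $\hbar$ and $G$ (the essay treats $G$ as slowly changing). The function names are hypothetical.

```python
import math

# CODATA 2018 values (SI units)
C = 299_792_458.0          # speed of light, m/s
HBAR = 1.054571817e-34     # reduced Planck constant, J*s
G = 6.67430e-11            # Newton's gravitational constant, m^3 kg^-1 s^-2

SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year
T_U = 13.8e9 * SECONDS_PER_YEAR         # assumed present age of the universe, s

def holographic_info(t_u: float) -> float:
    """H_S = pi c^5 t_U^2 / (hbar G), a dimensionless count."""
    return math.pi * C**5 * t_u**2 / (HBAR * G)

def bekenstein_hawking_count(radius_m: float) -> float:
    """A / (4 l_P^2) for a sphere of the given radius."""
    planck_area = HBAR * G / C**3
    area = 4 * math.pi * radius_m**2
    return area / (4 * planck_area)

h_s = holographic_info(T_U)
h_bh = bekenstein_hawking_count(C * T_U)
print(f"H_S           = {h_s:.3e}")
print(f"A / (4 l_P^2) = {h_bh:.3e}")
```

The two values agree up to floating-point rounding because $\pi c^5 t_U^2/\hbar G = 4\pi (c\,t_U)^2 / (4\hbar G/c^3)$; the result, of order $10^{122}$, is roughly the magnitude usually quoted for the entropy of the cosmic horizon.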
As James Jeans said, “Mind no longer appears as an accidental intruder into the realm of matter; we are beginning to suspect that we ought rather to hail it as a creator and governor of the realm of matter.”

27 The equation of a hypercone is $x^2 + y^2 + z^2 - w^2 = 0$. It is the same as the Minkowski metric for the path light follows, where $\tau = 0$ and $w = ct$.
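Footnote 27's hypercone equation also gives a simple test for where an event sits relative to the observer. The Python sketch below is an illustration, not part of the essay's formalism: it places the observer at the origin, works in units where $c = 1$ (light-years and years), computes $s^2 = x^2 + y^2 + z^2 - (ct)^2$ for a past event ($t \le 0$), and labels the event as lying on the hypercone surface ($s^2 = 0$, visible), inside it ($s^2 < 0$, causally linked but not seen), or outside it ($s^2 > 0$, excluded from the observer's hologram). It also checks that writing the time coordinate as the imaginary distance $ict$ gives the same $s^2$. The function names and tolerance are hypothetical choices.

```python
C = 1.0  # speed of light in light-years per year (natural units for this sketch)

def classify_event(x: float, y: float, z: float, t: float, tol: float = 1e-9) -> str:
    """Classify a past event (t <= 0, observer at the origin) against the
    observer's past light hypercone x^2 + y^2 + z^2 - (c t)^2 = 0."""
    if t > 0:
        raise ValueError("this sketch only handles past events (t <= 0)")
    s2 = x**2 + y**2 + z**2 - (C * t)**2
    if abs(s2) <= tol:
        return "on surface (visible)"
    if s2 < 0:
        return "inside (causally linked, not visible)"
    return "outside (excluded)"

def interval_imaginary_time(x: float, y: float, z: float, t: float) -> float:
    """Same interval, with the time coordinate written as an imaginary distance i c t."""
    w = 1j * C * t
    return (x**2 + y**2 + z**2 + w**2).real

# An Andromeda-like galaxy: light that left 2.5 million light-years away, 2.5 million years ago.
print(classify_event(2.5e6, 0.0, 0.0, -2.5e6))
# An ancestor who lived 150 years ago, essentially at the observer's location.
print(classify_event(0.0, 0.0, 0.0, -150.0))
# An event 10 light-years away only 1 year ago: its light has not arrived yet.
print(classify_event(10.0, 0.0, 0.0, -1.0))
```

Only events on the surface are seen; events inside are known only through their effects, such as inherited DNA or fossils.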