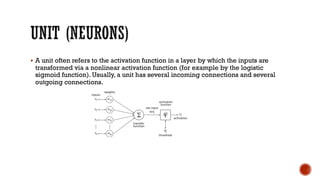

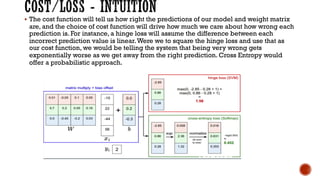

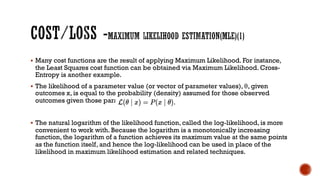

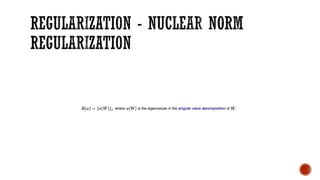

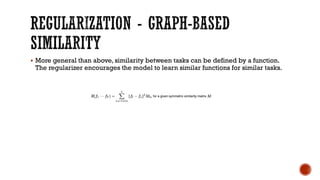

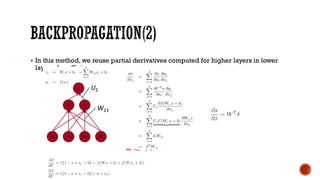

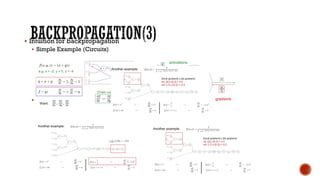

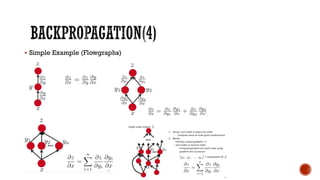

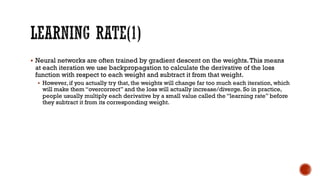

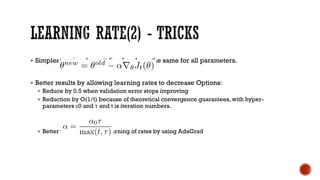

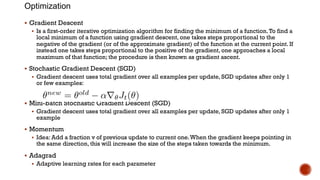

The document covers key concepts in neural networks: units, layers, batch normalization, cost/loss functions, regularization techniques, activation functions, backpropagation, learning rates, and optimization methods. It defines and explains each concept at a high level. For example, it defines a unit as the element that applies a nonlinear activation function to its inputs, and a hidden layer as any layer other than the input and output layers, which receives weighted input and passes transformed values to the next layer. It also summarizes common cost functions, regularization approaches such as dropout, and optimization methods such as gradient descent and stochastic gradient descent.
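To make the definitions above concrete, here is a minimal sketch in plain Python of a unit, a hidden layer, and one stochastic gradient descent update. It is an illustration only, not code from the document: the sigmoid activation, the squared-error loss, and the names `unit`, `hidden_layer`, and `sgd_step` are all assumptions chosen for brevity.

```python
import math

def sigmoid(z):
    """Nonlinear activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def unit(inputs, weights, bias):
    """One unit: weighted sum of the inputs plus a bias, then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def hidden_layer(inputs, weight_rows, biases):
    """A layer is a list of units that all receive the same inputs."""
    return [unit(inputs, w, b) for w, b in zip(weight_rows, biases)]

def sgd_step(inputs, target, weights, bias, learning_rate):
    """One SGD update for a single sigmoid unit on one training example,
    assuming squared-error loss L = 0.5 * (y - target) ** 2."""
    y = unit(inputs, weights, bias)
    # Chain rule: dL/dz = (y - target) * sigmoid'(z), where sigmoid'(z) = y * (1 - y)
    delta = (y - target) * y * (1.0 - y)
    new_weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * delta
    return new_weights, new_bias

# Hypothetical example values, chosen only to exercise the functions.
activations = hidden_layer([1.0, 2.0], [[0.1, -0.2], [0.3, 0.4]], [0.0, 0.1])
weights, bias = sgd_step([1.0, 2.0], 1.0, [0.0, 0.0], 0.0, learning_rate=0.1)
```

The learning rate scales how far each update moves the weights along the negative gradient. Full backpropagation applies the same chain-rule step layer by layer, which this single-unit sketch leaves out.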