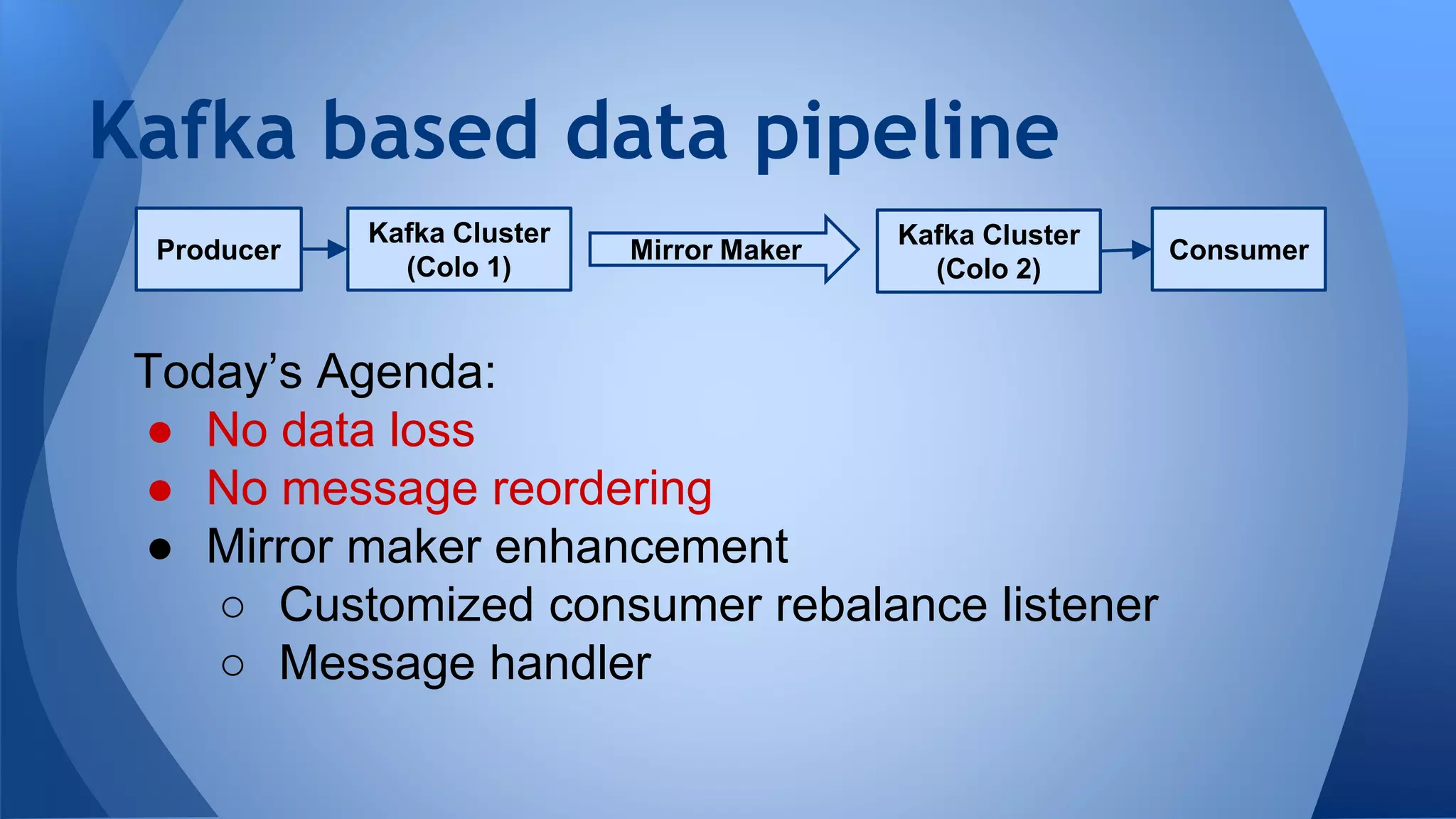

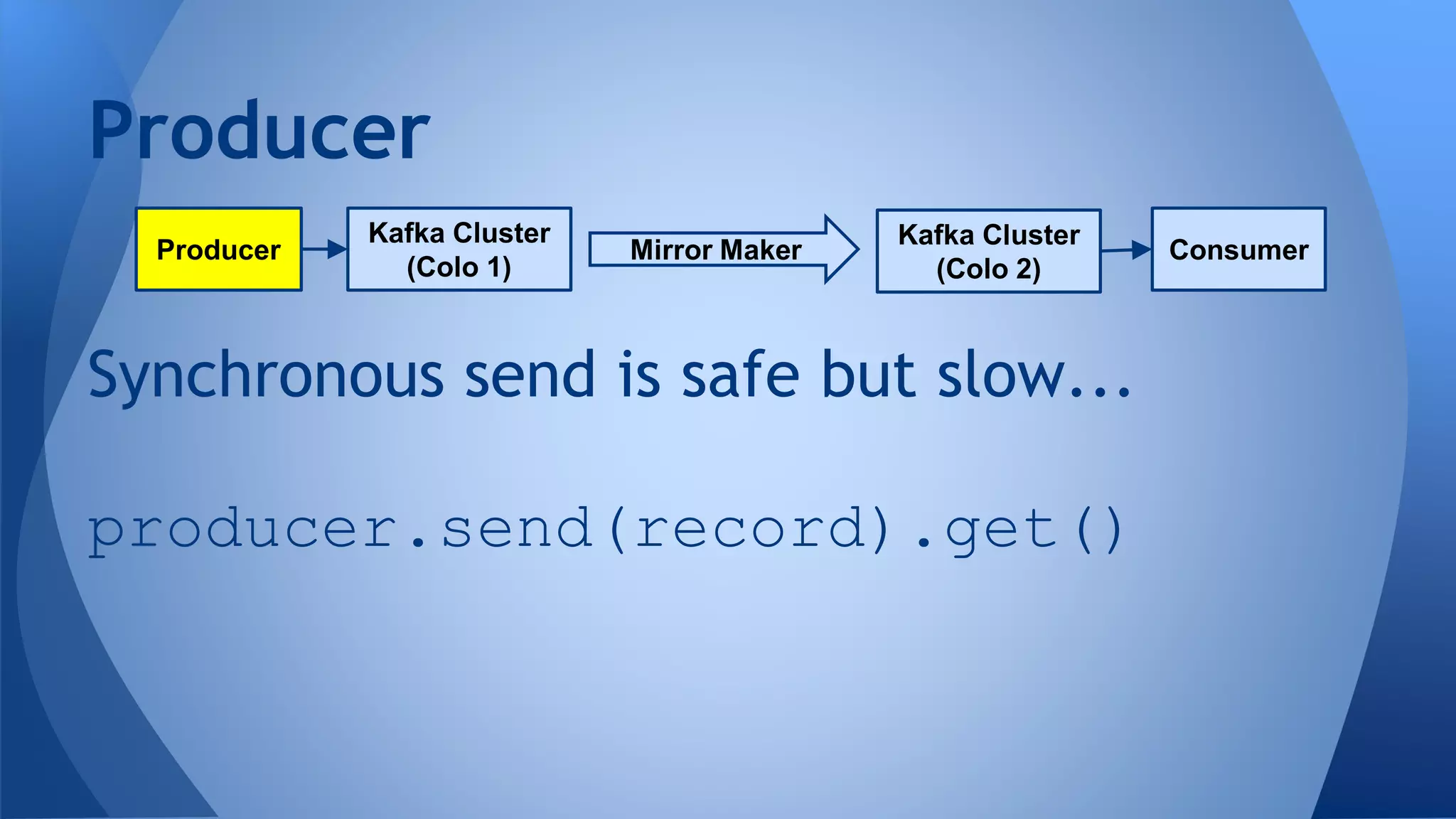

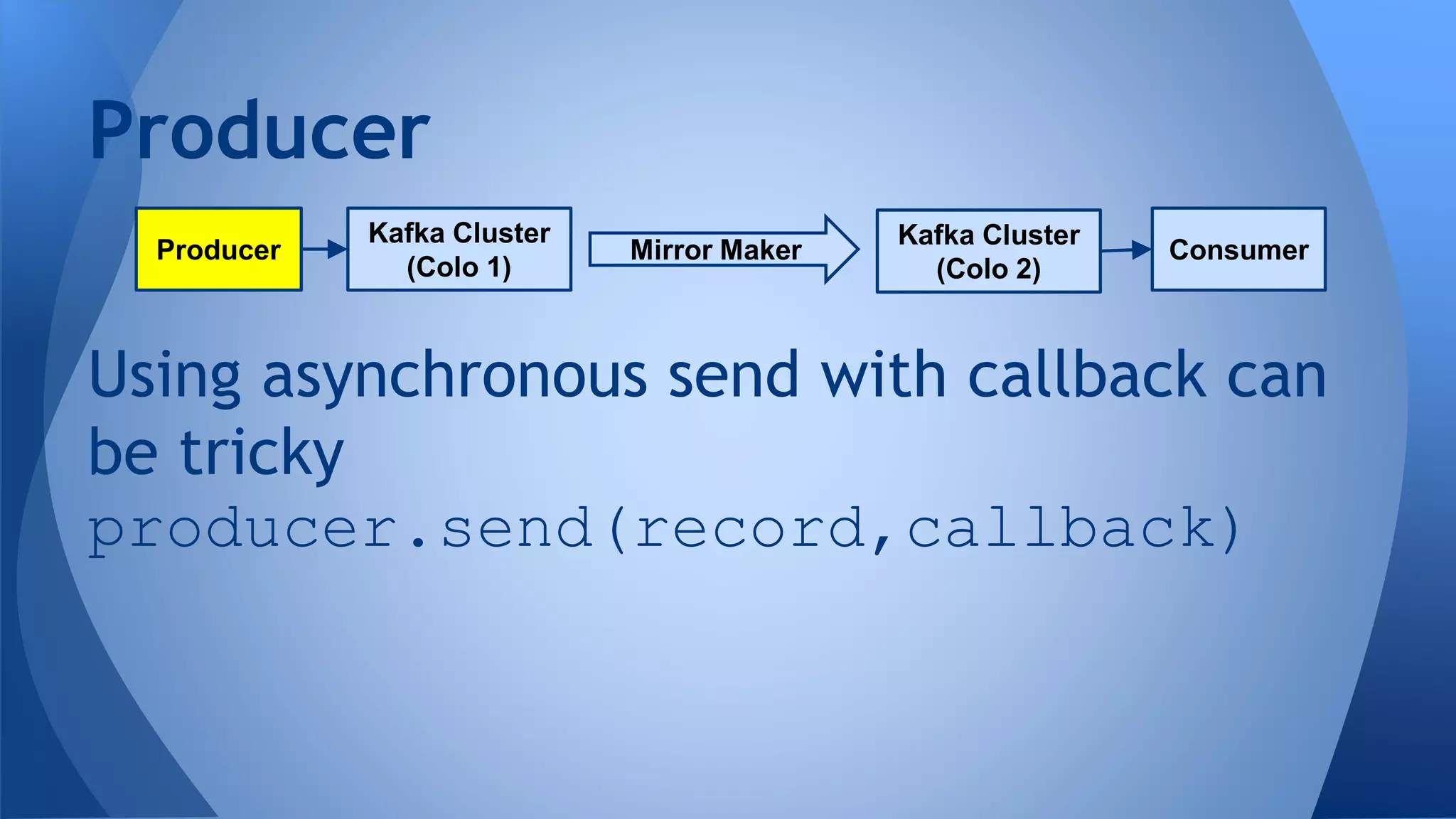

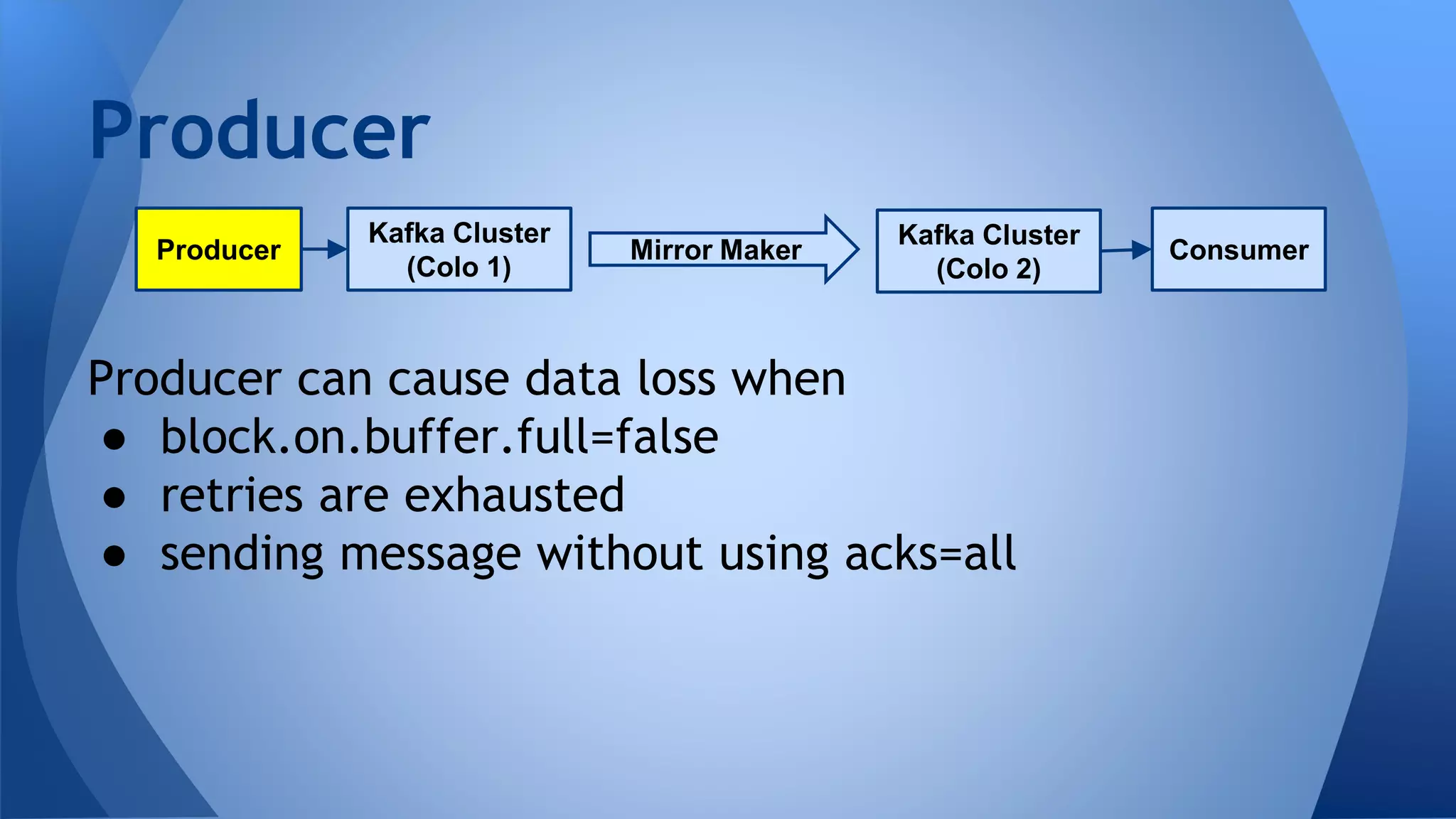

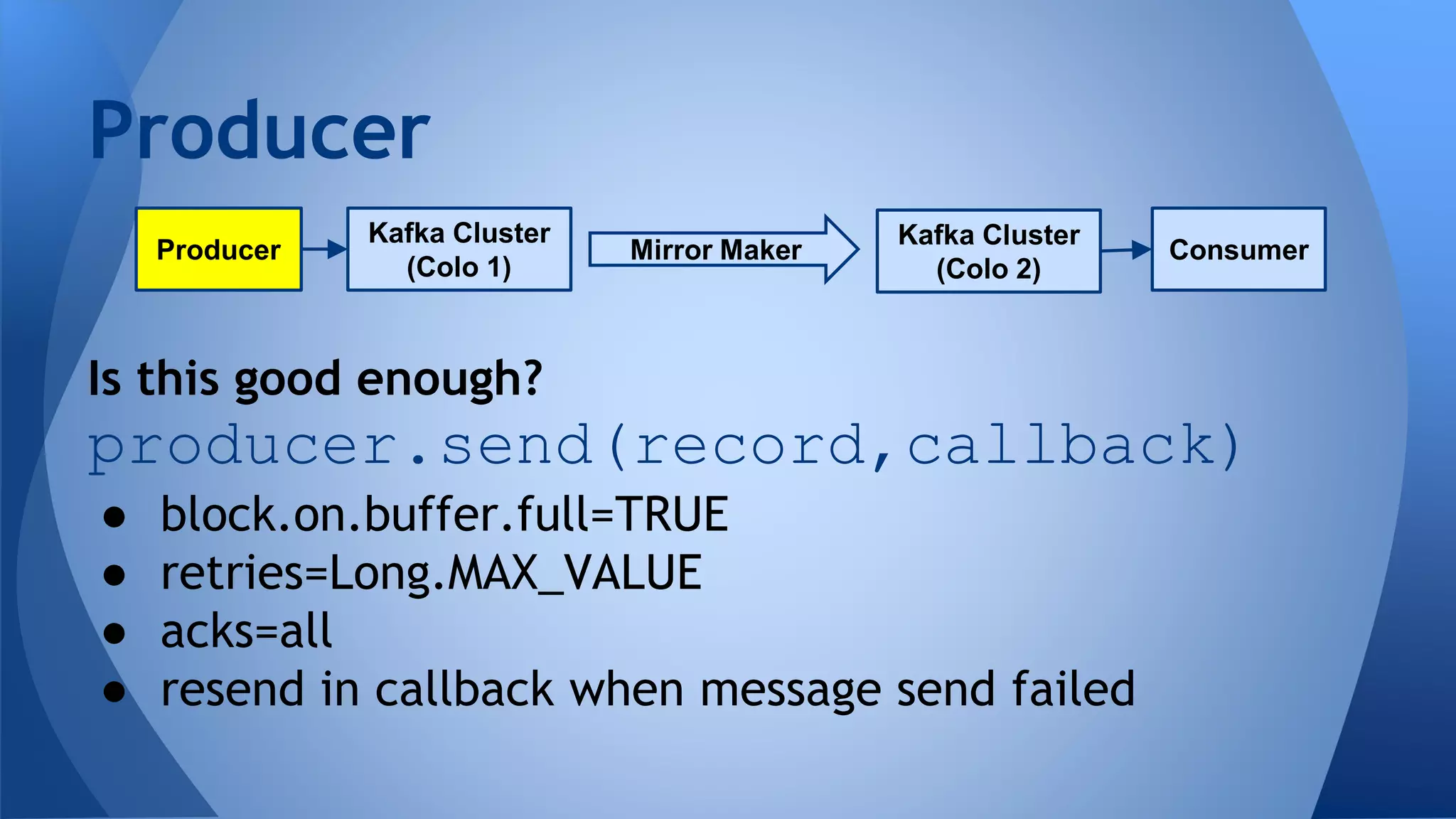

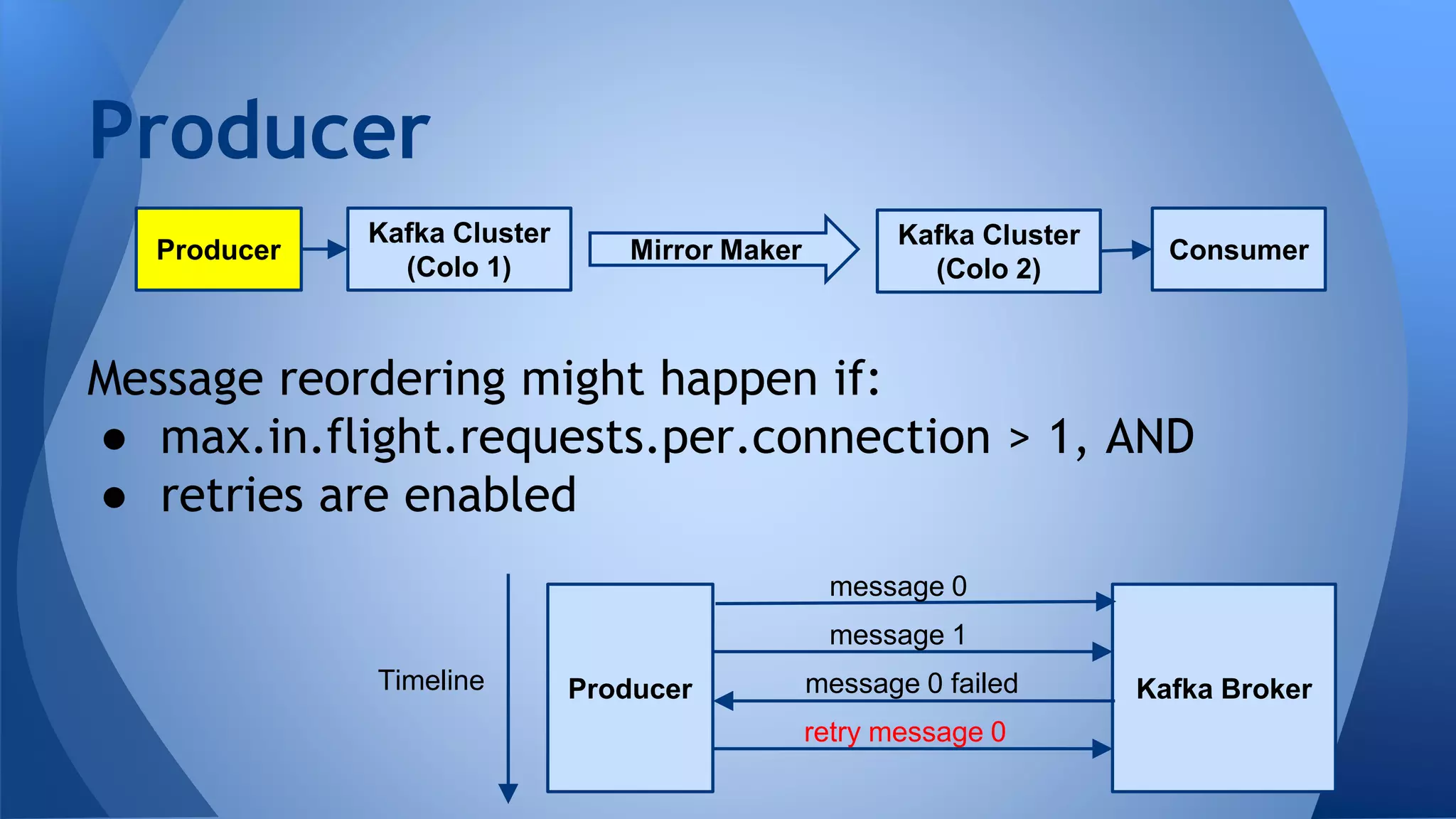

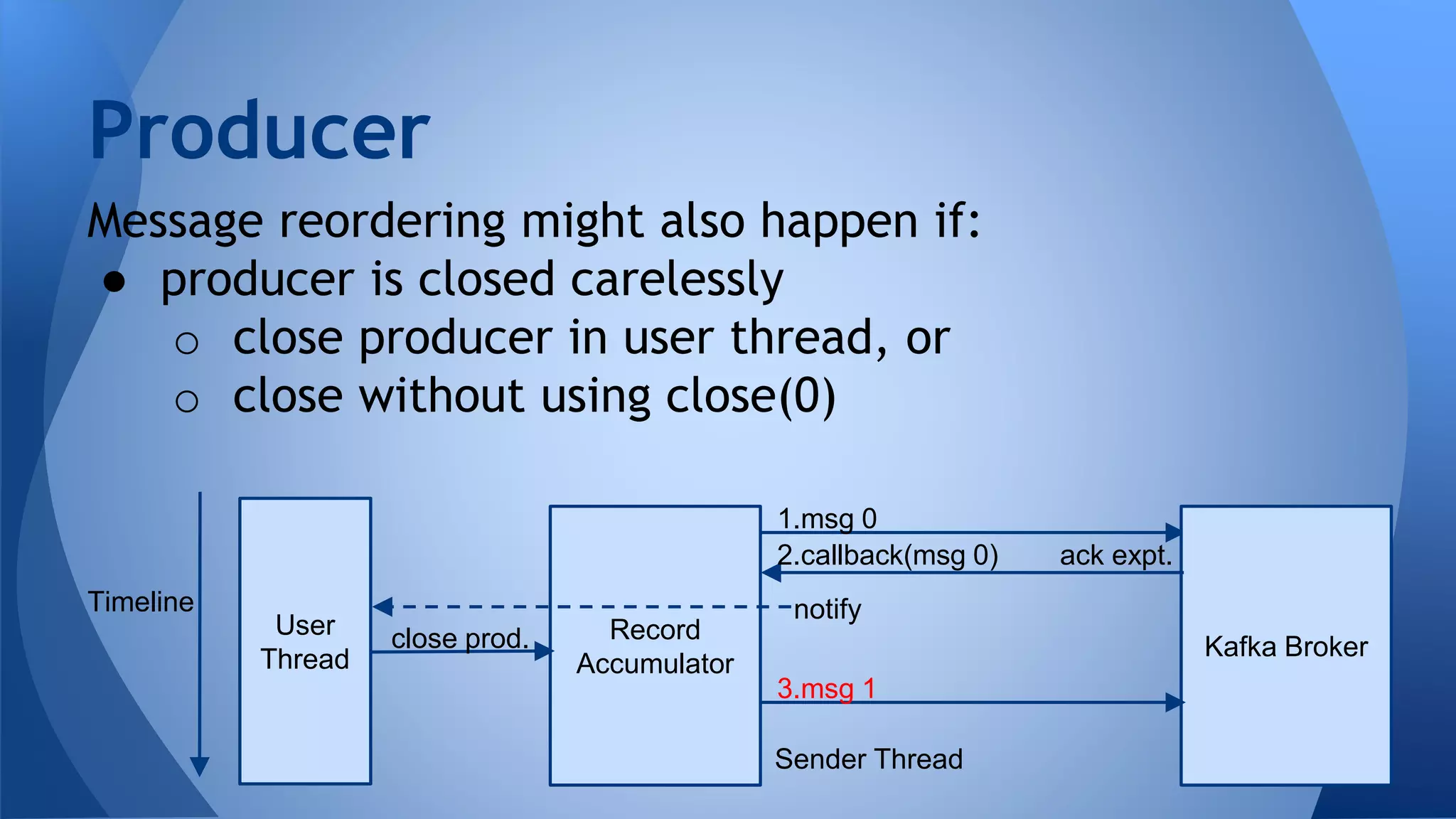

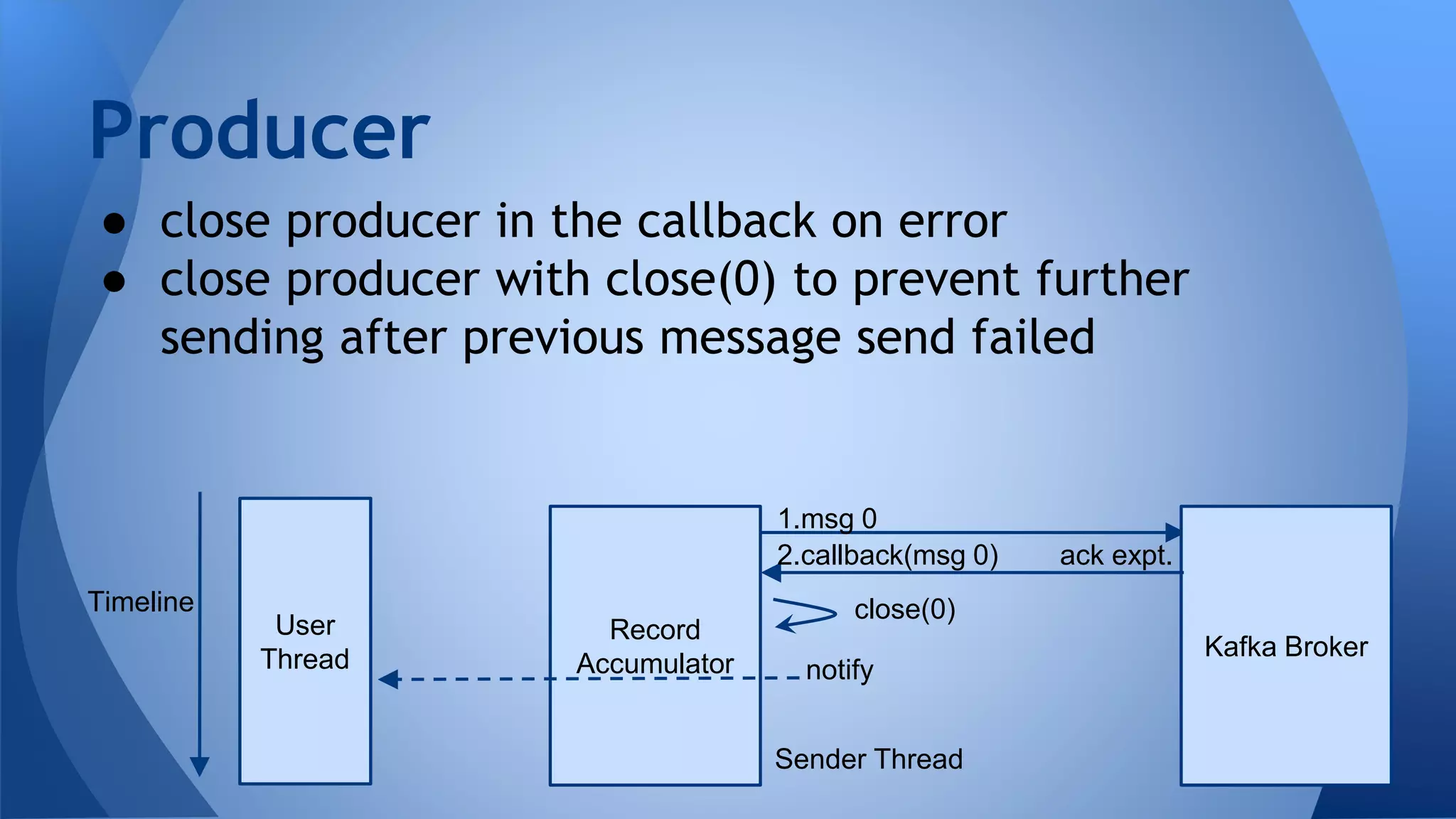

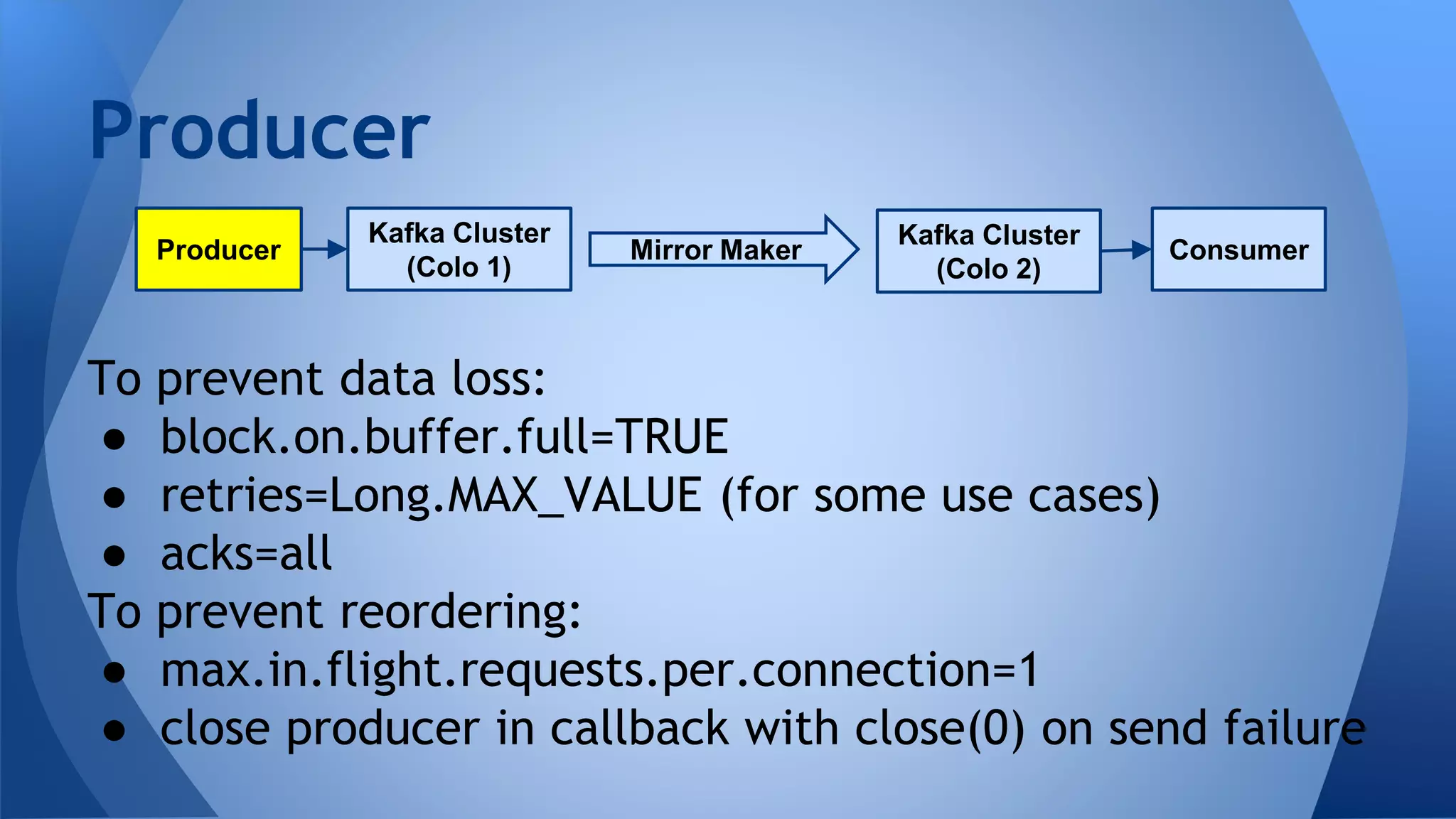

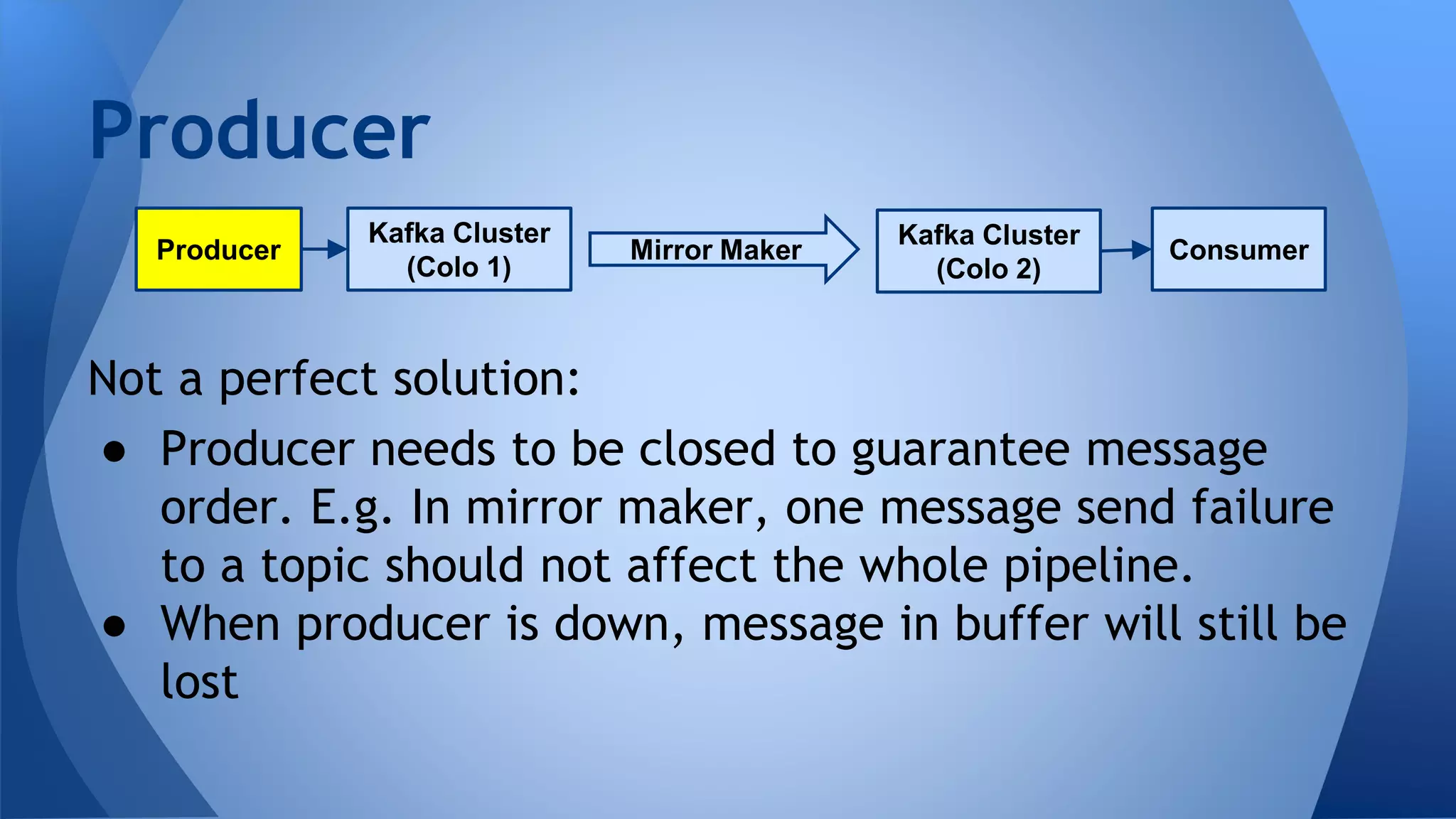

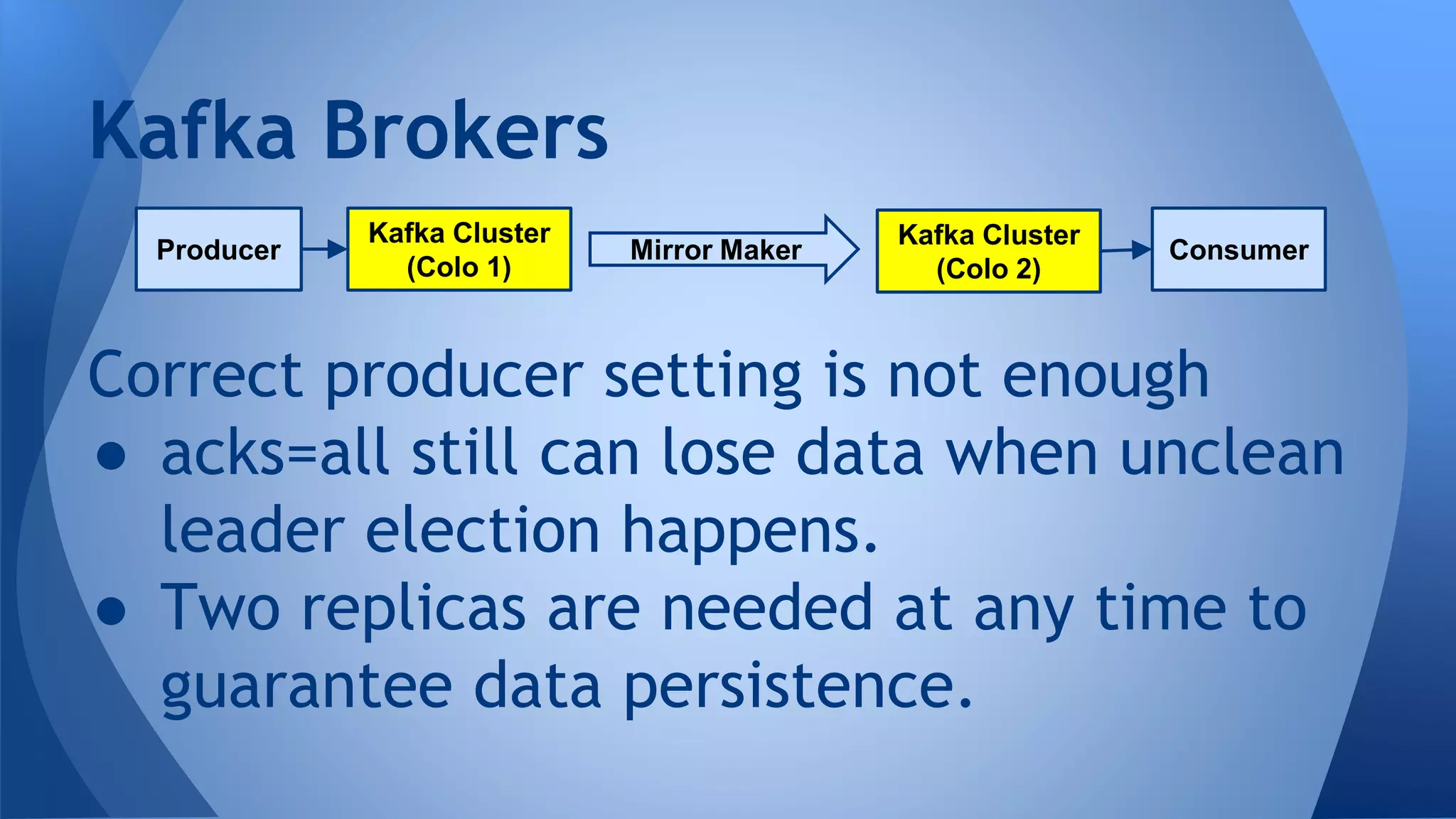

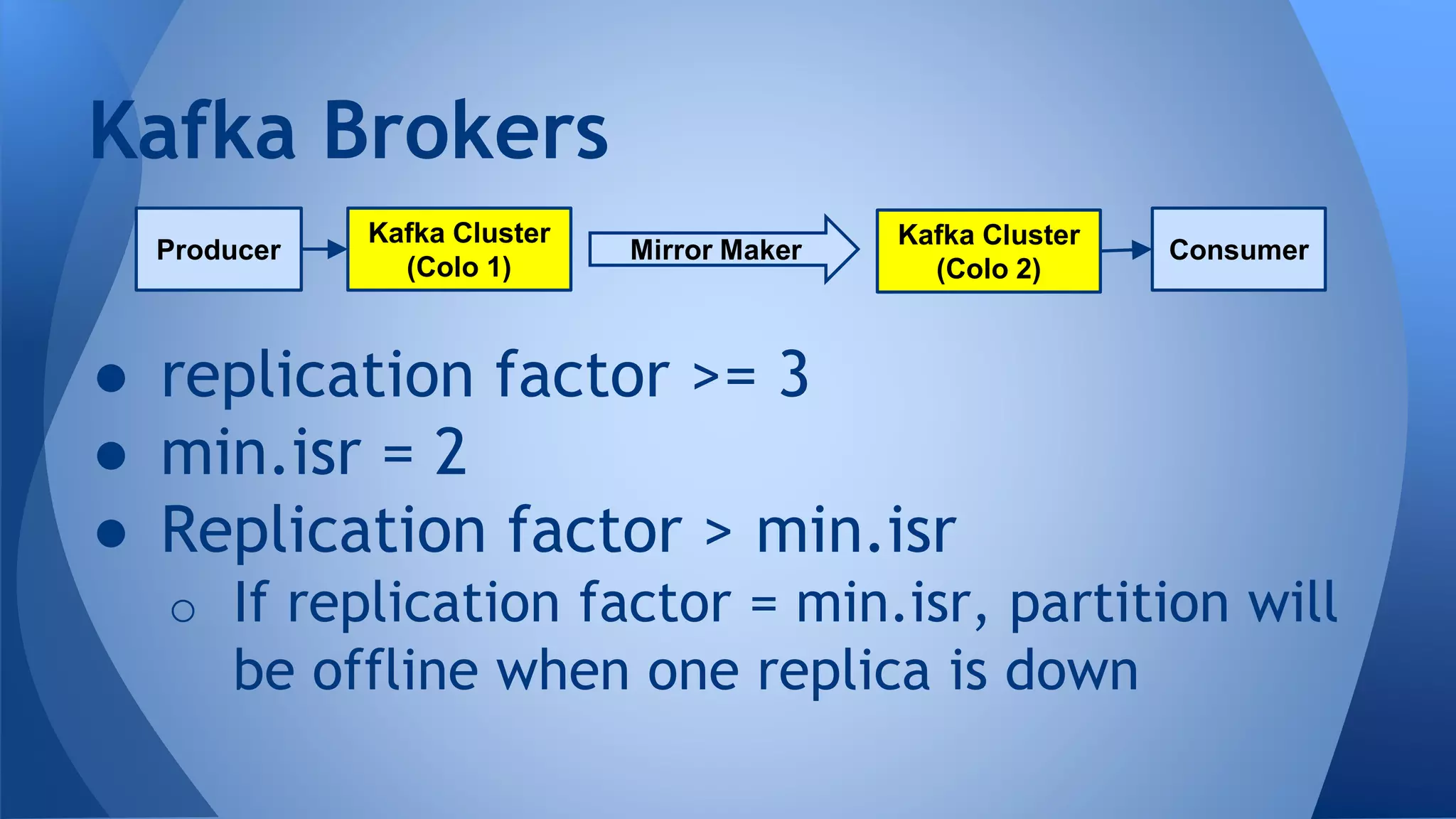

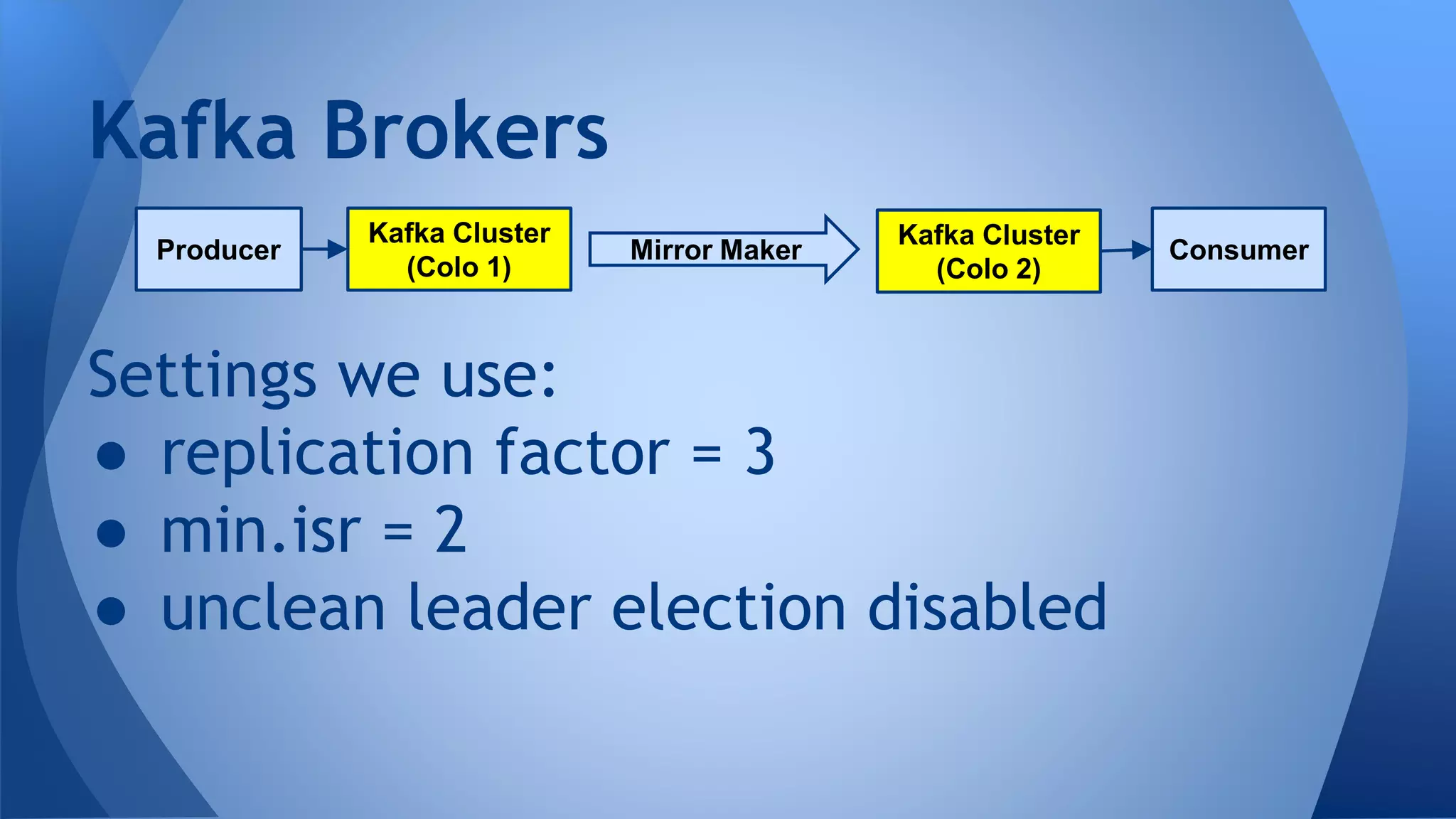

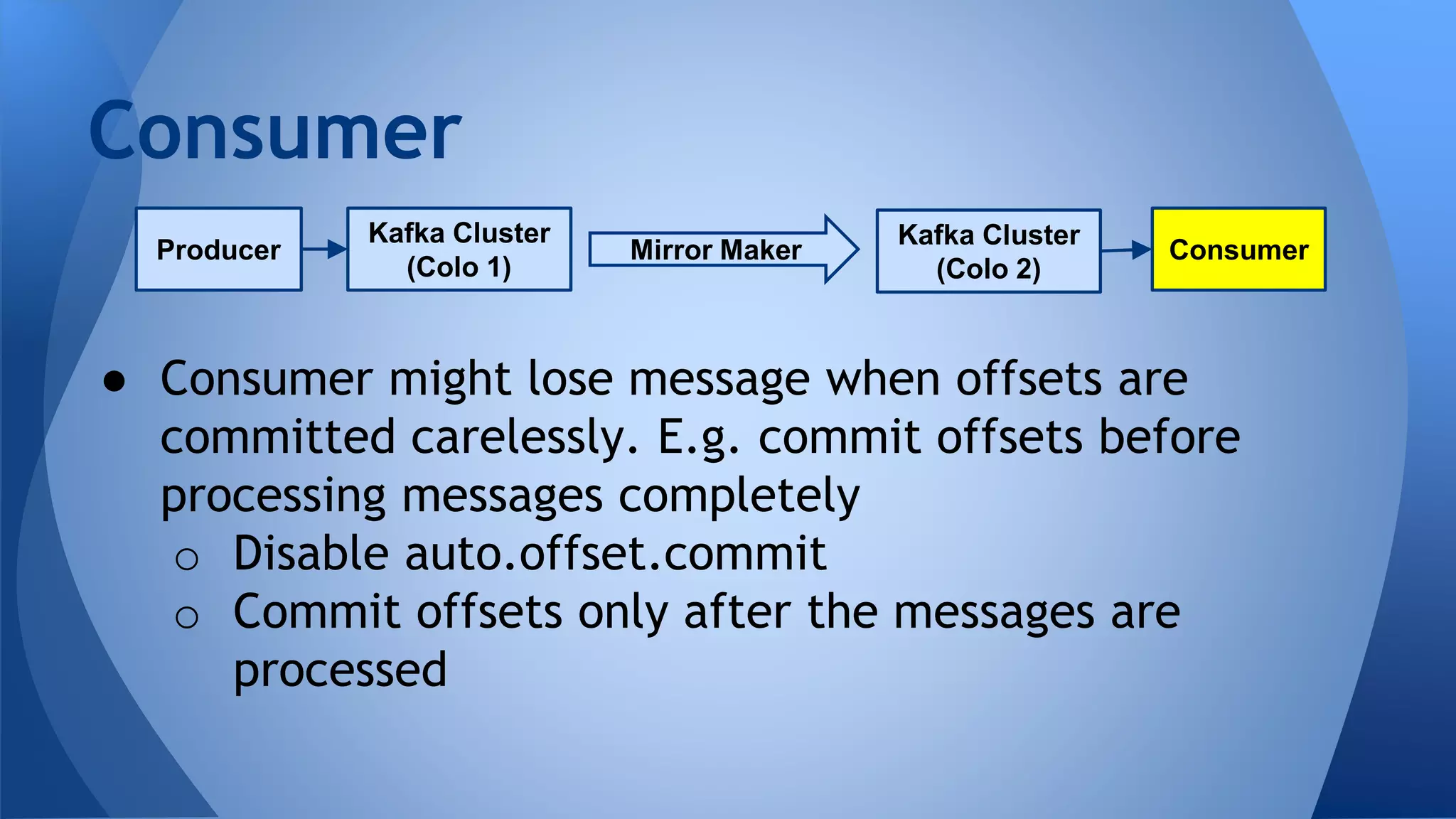

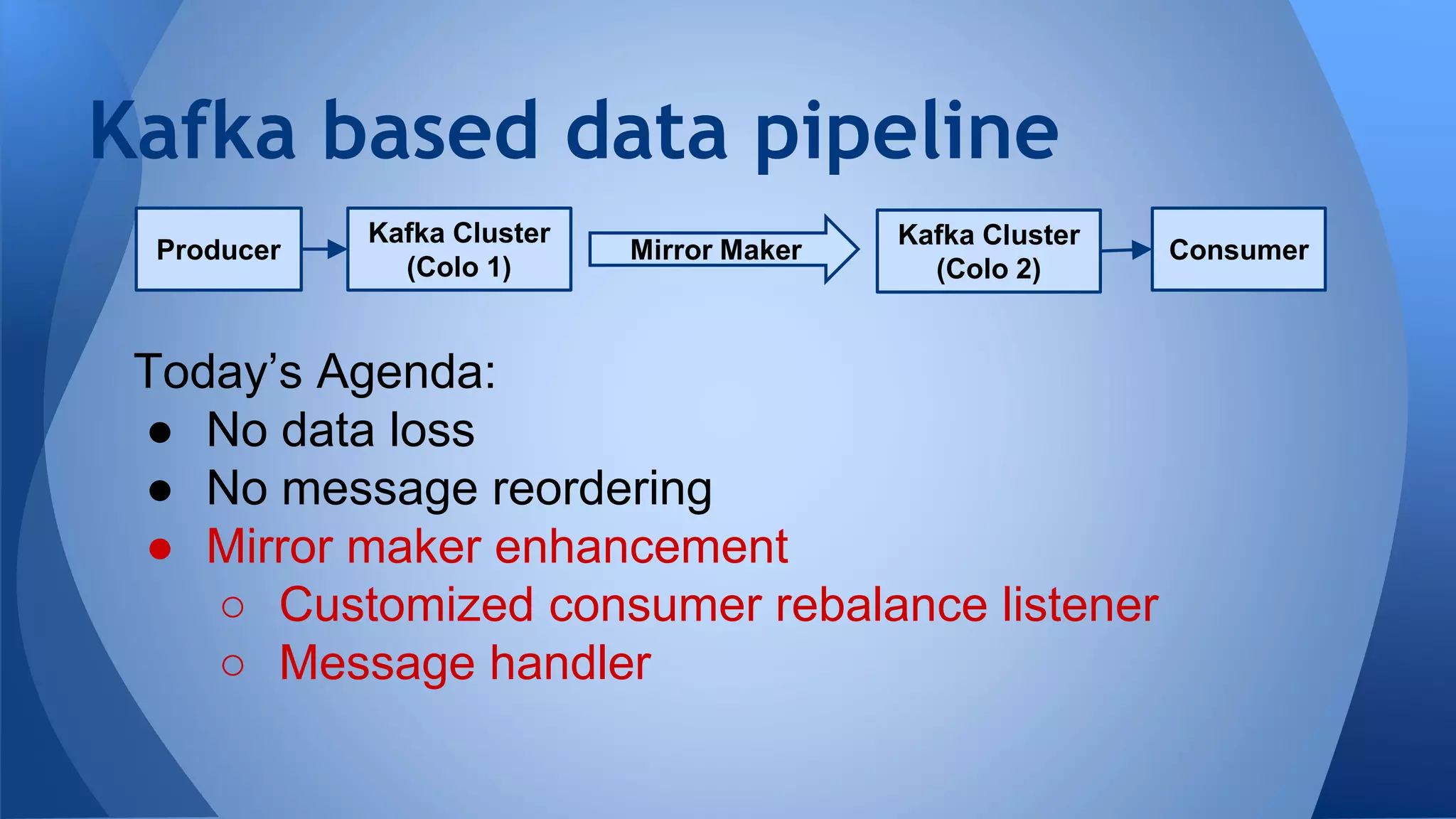

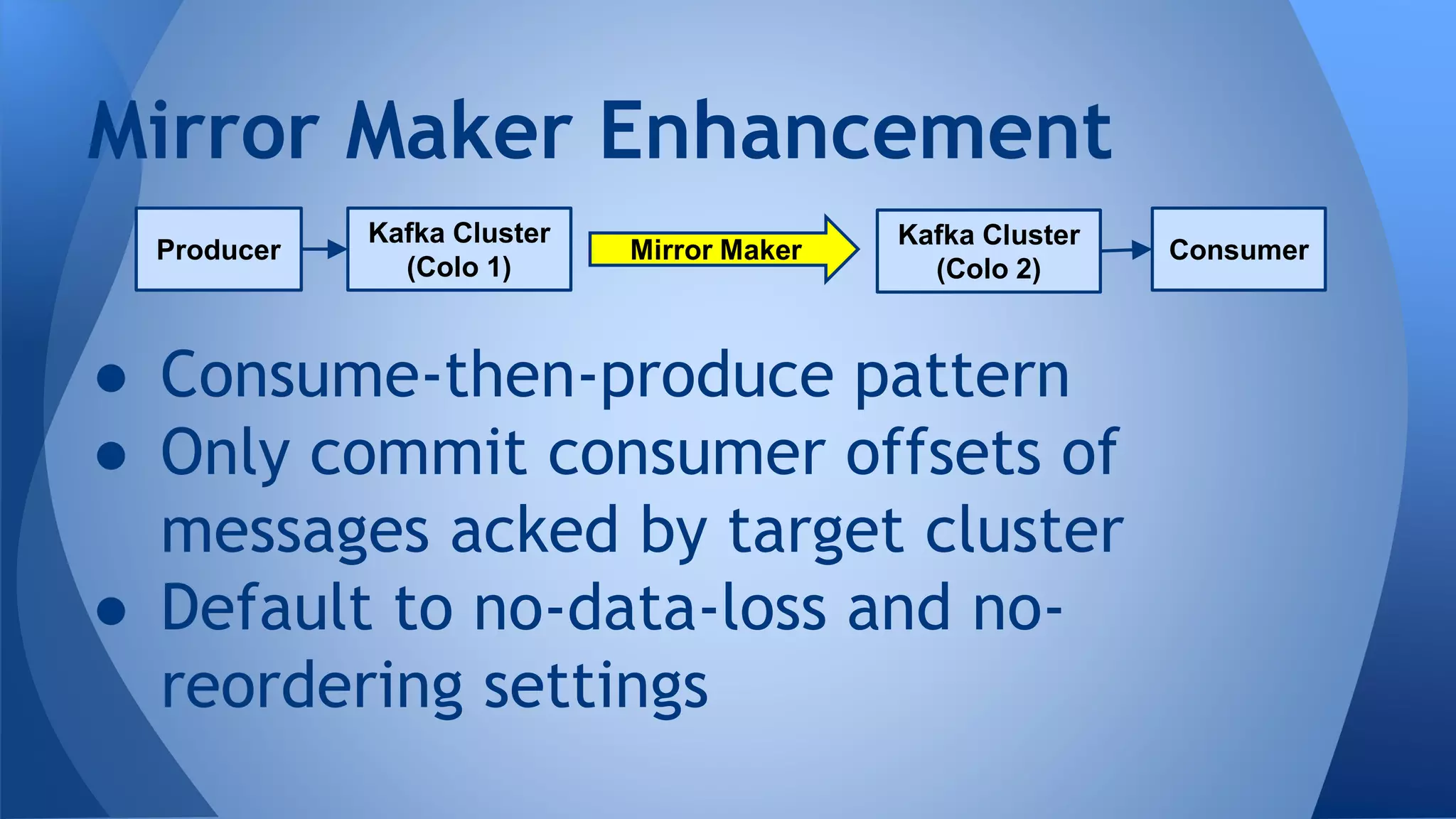

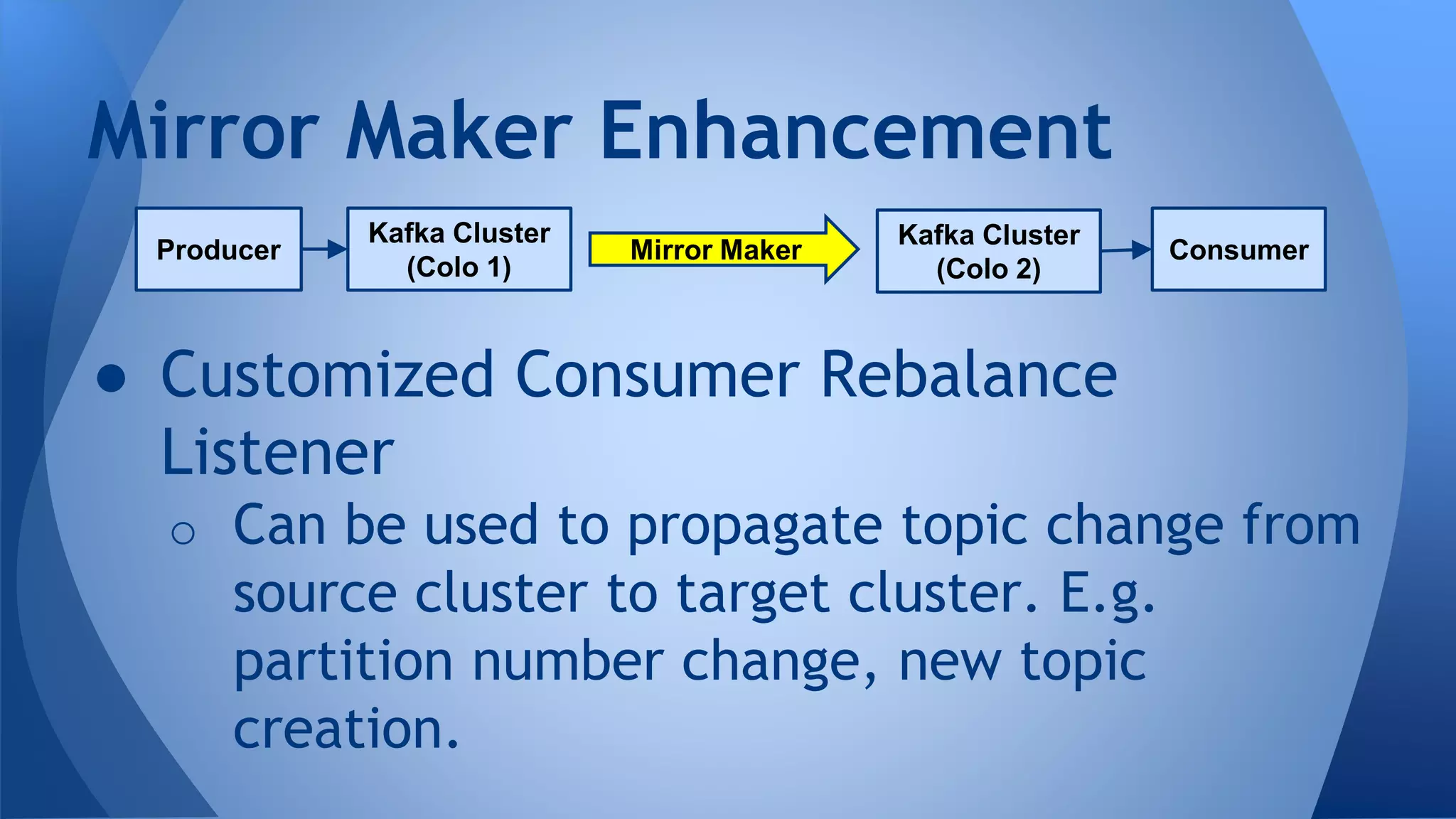

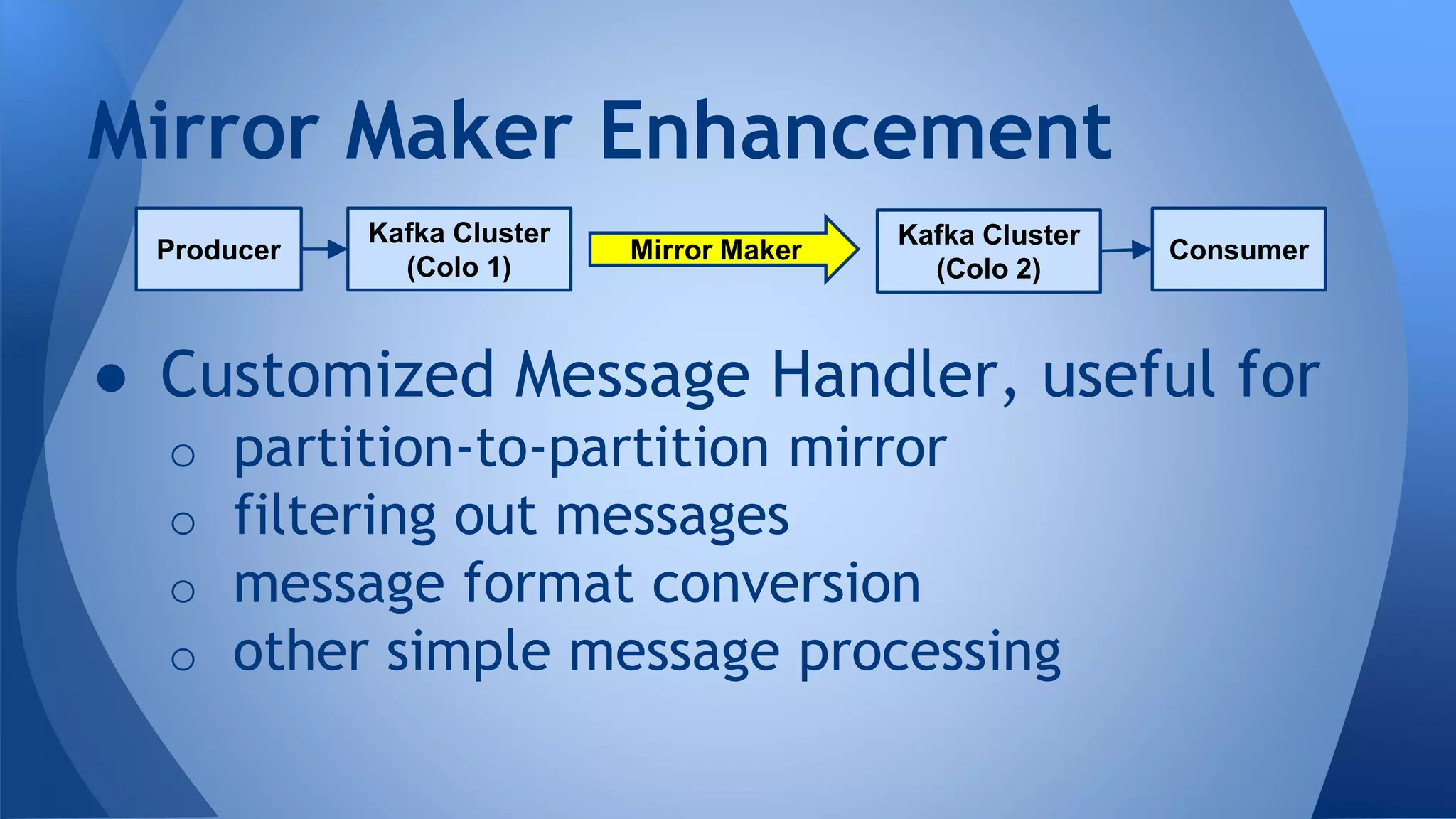

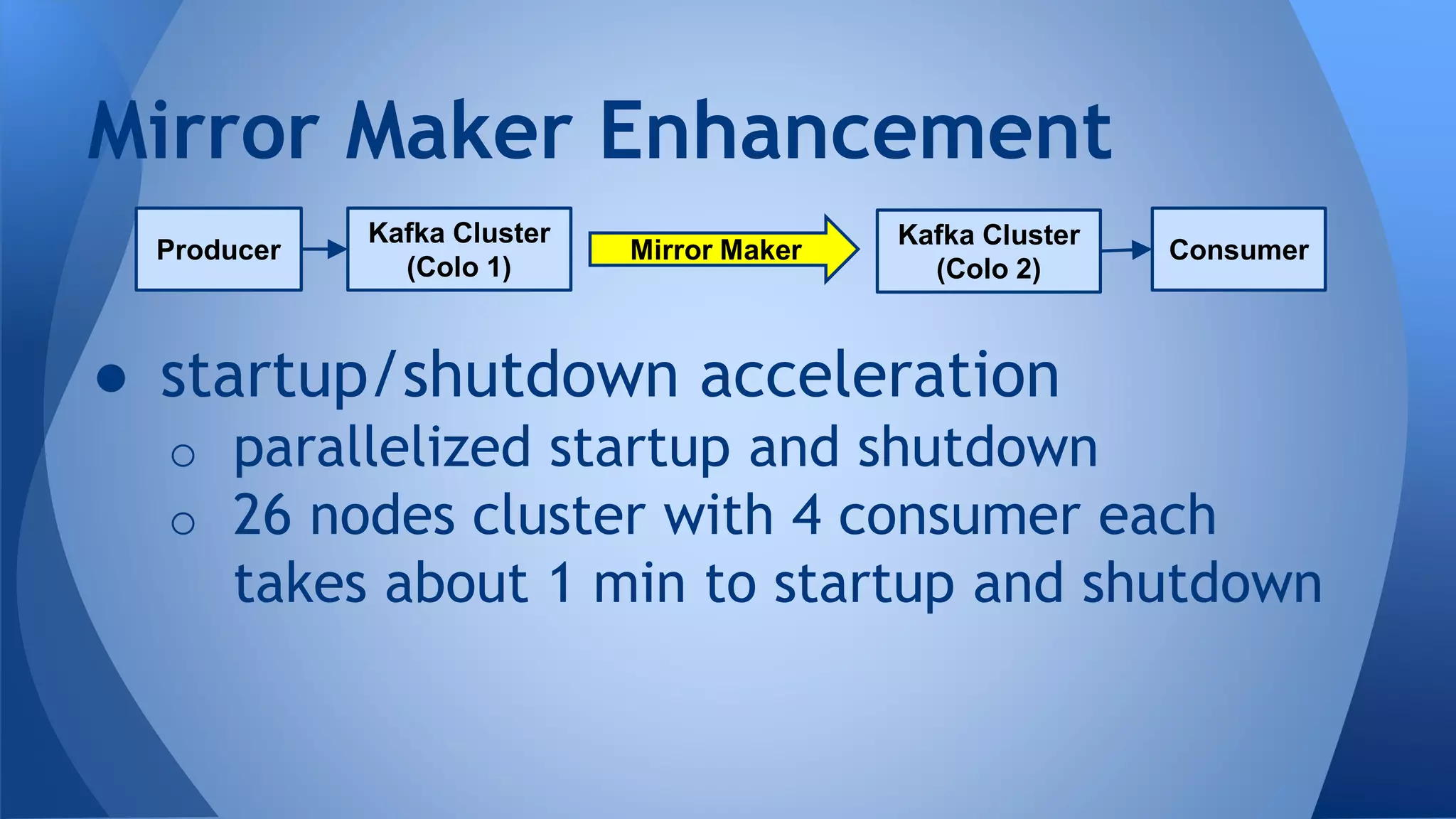

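The document discusses how to configure Apache Kafka to prevent data loss and message reordering in a data pipeline. On the producer, it recommends three settings:

- `block.on.buffer.full=true`, so that sending blocks instead of throwing an exception when the producer's buffer is full. Newer clients control this blocking with `max.block.ms`.
- `acks=all`, so that a write is acknowledged only after all in-sync replicas have it.
- `max.in.flight.requests.per.connection=1`, so that a retried request cannot overtake a later one.

On the consumer, it recommends committing offsets only after messages have been processed. On the brokers, it recommends a replication factor of at least 3 and `min.insync.replicas=2`.

The same principles apply to MirrorMaker, which consumes from one cluster and produces to another. To avoid loss and reordering, it should produce messages in order and commit source offsets only after the target cluster has acknowledged them. MirrorMaker also accepts a custom consumer rebalance listener and a custom message handler, which can be used to customize or optimize how messages are mirrored.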
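A minimal sketch of these recommendations follows. It assumes plain Python dicts as a stand-in for client configuration. The keys are real Kafka configuration names, but how they are passed depends on the client library. The consumer part is a hypothetical in-memory simulation, not a Kafka API: `run_consumer`, `run_consumer_commit_first`, `make_flaky`, `simulate`, and `Crash` are illustrative names. The simulation shows why committing after processing turns a crash into a re-read rather than a lost message.

```python
"""Sketch of the no-data-loss settings and the commit-after-processing rule.

Assumption: plain dicts stand in for client configuration; the keys are real
Kafka configuration names, but how they are passed depends on the client.
The consumer functions below are a hypothetical in-memory simulation.
"""

# Producer: no loss, no reordering.
PRODUCER_CONFIG = {
    "block.on.buffer.full": True,  # block rather than throw when the buffer is full (older Java clients)
    "acks": "all",                 # acknowledge only after all in-sync replicas have the write
    "max.in.flight.requests.per.connection": 1,  # a retried request cannot overtake a later one
}

# Consumer: turn off auto commit so offsets are committed explicitly.
CONSUMER_CONFIG = {"enable.auto.commit": False}

# Topic/broker: with acks=all, writes are rejected rather than acknowledged
# when fewer than min.insync.replicas replicas are in sync.
TOPIC_CONFIG = {"replication.factor": 3, "min.insync.replicas": 2}


class Crash(Exception):
    """Hypothetical failure raised while processing a record."""


def run_consumer(partition, store, process):
    """Commit each offset only after `process` has handled the record."""
    for offset in range(store["committed"], len(partition)):
        process(partition[offset])       # may raise before the commit below
        store["committed"] = offset + 1  # committed offset = next record to read


def run_consumer_commit_first(partition, store, process):
    """Anti-pattern for comparison: commit before processing."""
    for offset in range(store["committed"], len(partition)):
        store["committed"] = offset + 1  # committed even if processing then fails
        process(partition[offset])


def make_flaky(fail_on):
    """Return a processor that fails once on `fail_on`, plus the list of
    records it processed successfully."""
    seen, failed = [], set()

    def process(msg):
        if msg == fail_on and msg not in failed:
            failed.add(msg)
            raise Crash(msg)
        seen.append(msg)

    return process, seen


def simulate(consumer_fn, partition, fail_on):
    """Run once (crashing on `fail_on`), restart from the committed offset,
    and return every record that was processed successfully."""
    store = {"committed": 0}  # stands in for the committed-offset storage
    process, seen = make_flaky(fail_on)
    try:
        consumer_fn(partition, store, process)  # first run crashes mid-partition
    except Crash:
        pass
    consumer_fn(partition, store, process)      # restart from the committed offset
    return seen


if __name__ == "__main__":
    records = ["m0", "m1", "m2", "m3"]
    print(simulate(run_consumer, records, "m2"))               # ['m0', 'm1', 'm2', 'm3']
    print(simulate(run_consumer_commit_first, records, "m2"))  # ['m0', 'm1', 'm3']
```

Committing after processing gives at-least-once delivery. A record that was being processed when the consumer crashed is read again after restart. Committing first skips that record for good.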