Decision Transformers Model

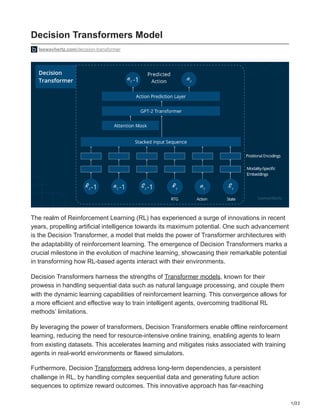

leewayhertz.com/decision-transformer

Reinforcement learning (RL) has seen a surge of innovation in recent years. One such advancement is the Decision Transformer, a model that combines the Transformer architecture with reinforcement learning and changes how RL-based agents can be trained to interact with their environments.

Decision Transformers build on Transformer models, which are known for handling sequential data such as natural language, and pair them with the learning framework of reinforcement learning. This allows intelligent agents to be trained more efficiently and helps overcome some limitations of traditional RL methods. In particular, Decision Transformers enable offline reinforcement learning: agents learn from existing datasets, which reduces the need for resource-intensive online training. This accelerates learning and mitigates the risks of training agents in real-world environments or flawed simulators.

Decision Transformers also address long-term dependencies, a persistent challenge in RL, by modeling long sequences and generating future actions aimed at a desired reward outcome. This approach has implications for a wide range of applications, from robotics and autonomous vehicles to strategic gameplay and personalized user experiences.

This article traces the evolution of the Transformer model and highlights the challenges of reinforcement learning. It introduces offline reinforcement learning and explains reinforcement learning via sequence modeling. It then presents the Decision Transformer model with an overview of its architecture and key components, explains how the model works, and outlines its applications and use cases. Lastly, it offers guidance on how to use a Decision Transformer.

- Evolution of Transformer-based models
- Challenges of reinforcement learning
- What is offline reinforcement learning?
- What is reinforcement learning via sequence modeling?
- What is the Decision Transformer model? Its architecture and key components
- How does the Decision Transformer work?
- Applications and use cases of the Decision Transformer
- How to use the Decision Transformer in a Transformer?

Evolution of Transformer-based models

This section briefly discusses the original Transformer architecture and four significant Transformer-based models that emerged shortly after its introduction. Before diving in, it helps to understand the kinds of tasks different model types perform:

- Encoder-only models: Suitable for tasks requiring input comprehension, such as sentiment analysis.
- Decoder-only models: Designed for tasks that involve text generation, like story-writing.
- Encoder-decoder models or sequence-to-sequence models: Used for tasks that require generating text from input, like summarization.

Transformer by Google

In 2017, Google researchers introduced a network architecture called the Transformer in their paper “Attention Is All You Need.” Designed primarily for natural language processing tasks, Transformers changed the field by addressing the limitations of Recurrent Neural Networks (RNNs).

Unlike RNNs, which process one token at a time, Transformers can process a complete sequence in parallel. This makes Transformers more efficient to train and better at capturing context. The attention mechanism lets Transformers access earlier information more directly than RNNs, which have difficulty retaining context beyond the previous state. Transformers also use positional embeddings to track word positions within sentences. These advantages have led Transformers to outperform RNNs in almost all language tasks.

This architecture led to the emergence of four significant Transformer-based models: OpenAI’s GPT, Google’s BERT, OpenAI’s GPT-2, and Facebook’s RoBERTa.

GPT by OpenAI

[Figure: GPT architecture. Input X, embedding matrix We with positional embeddings Wp, a Transformer block (masked multi-headed self-attention, add & layer norm, pointwise feed-forward, add & layer norm) repeated L = 12 times, transposed embedding We^T, and a softmax output giving probabilities over tokens. Source: LeewayHertz]

The evolution of GPT in recent years makes it a natural starting point for discussing Transformer-based models. GPT, short for Generative Pre-training, popularized unsupervised pre-training followed by supervised fine-tuning, a method now widely adopted by many Transformer models.
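As a rough illustration of the attention mechanism described above, the following sketch computes single-head scaled dot-product self-attention in plain Python. The names `softmax` and `self_attention` and the toy vectors are assumptions made for this example. Queries, keys, and values are taken to be the input vectors themselves, with no learned projections, which a real Transformer would not do. The optional `causal` flag hides positions to the right of the current token, as decoder-only models such as GPT do.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, causal=False):
    """Single-head scaled dot-product self-attention.

    x is a list of equal-length vectors, one per token. Queries, keys, and
    values are all x itself (a simplifying assumption of this sketch).
    """
    d = len(x[0])
    outputs, weights = [], []
    for i, query in enumerate(x):
        # With a causal mask, token i may only attend to positions 0..i.
        visible = x[: i + 1] if causal else x
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in visible]
        w = softmax(scores)
        # The output is the attention-weighted average of the visible vectors.
        out = [sum(wj * v[c] for wj, v in zip(w, visible)) for c in range(d)]
        # Masked positions get zero weight so every row has len(x) entries.
        weights.append(w + [0.0] * (len(x) - len(w)))
        outputs.append(out)
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
_, full = self_attention(tokens)
_, masked = self_attention(tokens, causal=True)
```

In `full`, every token attends to all three positions. In `masked`, the first token can only attend to itself, so its weight row is `[1.0, 0.0, 0.0]`.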

GPT was initially trained on a dataset of 7,000 unpublished books. Its architecture, composed of 12 stacked decoders, makes it a decoder-only model. With 117 million parameters, GPT-1 is small compared to the Transformer models developed since. GPT is unidirectional: tokens to the right of the current token are masked, so the model generates one token at a time and uses that output for the subsequent timestep.

GPT models have evolved over several iterations, each pushing the boundaries of what AI language models can achieve:

- GPT (GPT-1): The first iteration, also expanded as Generative Pre-trained Transformer, introduced unsupervised pre-training followed by supervised fine-tuning. It used 12 decoders in its architecture and was trained on a dataset of 7,000 unpublished books. With 117 million parameters, GPT-1 was a groundbreaking model for its time, though its successors have since eclipsed it.
- GPT-2: Building on GPT-1, GPT-2 featured 1.5 billion parameters, significantly increasing the model’s capabilities. It used 48 decoders in its architecture and was trained on a much larger dataset of 8 million web pages. Despite concerns about potential misuse, GPT-2 demonstrated a strong ability to generate coherent and contextually relevant text.
- GPT-3: With 175 billion parameters, GPT-3 has shown remarkable performance across a wide range of tasks, including translation, summarization, and even programming assistance. Its scale and capacity for contextual understanding positioned GPT-3 as a leader in the field of natural language processing.

- GPT-4: The most recent iteration in OpenAI’s continuing effort to scale up deep learning. As a multimodal model, GPT-4 accepts both image and text inputs and generates text outputs, and it demonstrates human-level performance on numerous professional and academic benchmarks.

GPT-4 builds on the advancements of its predecessors. Its ability to process both visual and textual data lets it tackle a wider range of tasks and adapt to diverse real-world applications more effectively. While GPT-4 does not surpass human performance in every scenario, it represents a significant step forward in artificial intelligence. With its release, OpenAI continues its work on models that improve on existing technology, and further developments can be expected as the AI community explores what GPT-4 makes possible.

Google’s BERT

[Figure: BERT masked language modeling. Input tokens W1 to W5, with W4 replaced by [MASK], pass through an embedding layer and a Transformer encoder to produce outputs O1 to O5; a classification layer (fully-connected layer + GELU + norm), followed by an embedding-to-vocabulary projection and softmax, yields predictions W’1 to W’5. Source: LeewayHertz]

BERT, short for Bidirectional Encoder Representations from Transformers, is bidirectional: its attention mechanism captures context from both the left and the right of the current token, which sets it apart from GPT. With an architecture of 12 stacked encoders, BERT is an encoder-only model that processes entire sentences as input and can reference any word in the sentence. With 110 million parameters, BERT shares the flexibility of GPT, as it can be pre-trained and then fine-tuned for a variety of applications. Its pre-training method is essentially a fill-in-the-blank task: some input tokens are masked, and the model learns to predict them.

Facebook’s RoBERTa

Facebook’s RoBERTa (Robustly Optimized BERT Pre-training Approach) shares BERT’s architecture but significantly optimizes how the model is trained. These enhancements have led to state-of-the-art results on NLP benchmarks including GLUE (which covers tasks such as MNLI, QNLI, RTE, and STS-B) and RACE. By eliminating BERT’s next-sentence pre-training objective, training with larger mini-batches, and altering the masking pattern, RoBERTa has surpassed its predecessor in performance. RoBERTa was also trained on a more extensive dataset, incorporating existing unannotated NLP datasets and CC-News, a novel collection of public news articles.

The rapid evolution of Transformer-based models has significantly impacted the field of natural language processing. By separating and stacking encoder and decoder architectures, researchers have created new models that perform specific NLP tasks more effectively than the original Transformer. The widespread adoption of unsupervised pre-training and supervised fine-tuning has furthered the development of these models.

Challenges of reinforcement learning

Reinforcement learning is a branch of machine learning where agents learn to make decisions in an environment by interacting with it and receiving feedback in the form of rewards. The goal is to maximize the cumulative reward over time by discovering the best sequence of actions. This learning process enables agents to adapt their behavior and improve their decision-making in various situations.

Although widely employed in applications such as autonomous driving, robot navigation, and game playing, RL has several significant limitations:

Sample efficiency

Sample efficiency is a challenge in reinforcement learning because learning an effective policy requires numerous interactions with the environment. In real-world scenarios like robotics or autonomous driving, these interactions can be time-consuming, costly, or risky. Efficient exploration strategies are vital for RL agents to gather valuable experiences while navigating their environment.
Complex environments with vast state and action spaces require a larger number of samples to find optimal policies. Additionally, limited sample efficiency can hinder generalization, leading to poor performance in novel situations.

Exploration vs. exploitation

The exploration-exploitation trade-off is a fundamental challenge that RL agents must navigate during learning. Exploration involves trying new actions in different states to gather information about the environment, while exploitation uses the agent’s current knowledge to make decisions that maximize reward. Striking the right balance is crucial: too much exploration may lead to suboptimal decisions and lower cumulative rewards, while excessive exploitation may cause the agent to miss potentially better strategies. Achieving this balance is particularly challenging in complex and dynamic environments where the optimal policy may change over time. Developing RL algorithms that manage this trade-off effectively is therefore essential for their applicability to real-world tasks.

Sparse rewards

Sparse rewards make it difficult for agents to identify and learn from informative feedback. When non-zero rewards are rare and provided only under particular circumstances, the agent may struggle to discern the causal relationship between its actions and the corresponding rewards, so learning becomes slow and inefficient. The agent might need to take a long sequence of actions before receiving any meaningful feedback, making it harder to pinpoint which actions contributed to the reward. This can lead to extended training times or suboptimal performance, as the agent may fail to discover the most effective strategies. Techniques such as reward shaping, hierarchical reinforcement learning, and intrinsic motivation address this challenge by providing more informative feedback or by structuring the learning process to guide the agent toward the optimal policy.

Long-term dependencies

Long-term dependencies are a significant challenge in reinforcement learning because they require agents to consider the consequences of their actions over extended time horizons. In tasks like chess or Go, an agent must plan multiple steps ahead, considering the potential outcomes of each move and their subsequent impact on the game’s state.
When dealing with long-term dependencies, it becomes difficult for the agent to attribute the final reward or outcome to specific actions taken earlier in the sequence. This issue, known as the credit assignment problem, can slow down learning and hinder the agent’s ability to discover optimal policies. Techniques such as temporal-difference learning, eligibility traces, and Monte Carlo tree search have been used to help the agent account for the long-term consequences of its actions in tasks with extended time horizons.

Stochastic environments

Stochastic environments pose a significant challenge for reinforcement learning because they introduce randomness and uncertainty into the dynamics of the system. In such environments, the outcomes of actions are not deterministic, meaning that the same action may lead to different results when applied in the same state. This unpredictability can make it difficult for RL agents to learn effective policies. The agent must cope with uncertainty and estimate the probability distributions of different outcomes, which adds complexity and can slow convergence to the optimal policy. RL algorithms therefore need to be robust and able to adapt to the inherent variability of the environment.

Techniques developed for stochastic environments include Bayesian reinforcement learning, which explicitly models environmental uncertainty, and algorithms that incorporate exploration strategies to gather more information about the environment’s dynamics. With these approaches, RL agents can better learn to make decisions despite the inherent randomness.

Transfer learning

Transfer learning is a challenge for reinforcement learning. It involves leveraging knowledge acquired from one task or environment and applying it to a different but related task or environment, with the goal of improving learning efficiency and performance in the target task. However, RL algorithms often struggle to achieve this effectively, which can limit their practical applications. Reasons for limited transfer in RL include:

- Domain differences: The source and target tasks may have distinct state and action spaces, making it challenging to directly apply the knowledge from one to the other.
- Task variations: The reward functions and dynamics of the source and target tasks may differ, complicating the process of transferring knowledge.
- Negative transfer: Knowledge transfer between tasks can sometimes decrease performance if the source and target tasks are not sufficiently similar.

Safety and reliability

Safety and reliability are critical concerns in reinforcement learning, particularly in high-stakes or real-world applications. Ensuring that an RL agent makes safe and reliable decisions is crucial to prevent unintended consequences, accidents, or failures. However, achieving safety and reliability presents several challenges:

- Exploration risks: RL agents explore different actions to discover the optimal policy during learning. This exploration can lead to actions with negative consequences, such as damaging equipment or causing harm in a physical environment.
- Lack of performance guarantees: Traditional RL algorithms often lack performance guarantees during the learning process, which makes it difficult to ensure that the agent will always behave safely and reliably.
- Partial observability: In many real-world environments, the agent may only have access to partial information about the system’s state, making it challenging to determine safe actions with certainty.
- Uncertainty and noise: Real-world environments often contain uncertainty and noise, such as sensor errors or unpredictable environmental changes. This can make it difficult to accurately estimate the true state of the environment and its dynamics, leading to potential safety and reliability issues.
- Distributional shift: When an RL agent is deployed in a new environment, it may encounter situations that were not present in its training data. This distributional shift can result in the agent making suboptimal or unsafe decisions based on its previous experience.

Overcoming these challenges is essential for advancing reinforcement learning and realizing its full potential across applications.

What is offline reinforcement learning?

Offline reinforcement learning, also known as batch reinforcement learning or data-driven reinforcement learning, is a method of training decision-making agents without direct interaction with the environment. Instead, agents learn from pre-collected data, which can originate from other agents or human demonstrations. This approach offers an alternative to online reinforcement learning, where agents gather data and learn from it through direct interaction with the environment or a simulator.

[Figure: Online versus offline reinforcement learning. With online interactions, the online agent sends actions to the environment and receives states and rewards. In offline reinforcement learning, the offline agent learns from logged interactions (states, actions, rewards) with the environment. Source: LeewayHertz]

Offline reinforcement learning involves the following process:

- Create a dataset using one or more policies and/or human interactions.
- Run offline RL on this dataset to learn a policy.

This method is particularly beneficial when building a simulator would be complex, expensive, or unsafe. However, offline reinforcement learning has a major drawback known as the counterfactual queries problem. This issue arises when an agent decides to perform an action for which no data is available in the pre-collected dataset. For example, if the agent wants to turn right at an intersection but the dataset contains no trajectory in which that happens, the agent may struggle to make an informed decision.

Despite this limitation, offline reinforcement learning offers a valuable alternative for training deep reinforcement learning agents, especially where real-world interaction or simulator construction is challenging or impractical.
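The two-step process above can be sketched in a few lines of Python. Everything in this example is hypothetical: the corridor environment, the random behavior policy, and the `learn_offline` function, which is a deliberately naive tabular stand-in (average observed return per state-action pair) rather than a real offline RL algorithm. The environment allows a "stay" action (`0`) that the behavior policy never takes, which illustrates the counterfactual queries problem: the dataset cannot say what staying would have yielded.

```python
import random
from collections import defaultdict

GOAL, START, MAX_STEPS = 4, 2, 10  # hypothetical corridor with cells 0..4

def step(state, action):
    """Toy dynamics: action is -1 (left), 0 (stay), or +1 (right)."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

def collect_dataset(num_episodes, seed=0):
    """Step 1: log (state, action, reward) trajectories from a behavior policy."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(num_episodes):
        state, trajectory = START, []
        for _ in range(MAX_STEPS):
            action = rng.choice([-1, 1])  # the behavior policy never stays
            next_state, reward, done = step(state, action)
            trajectory.append((state, action, reward))
            state = next_state
            if done:
                break
        dataset.append(trajectory)
    return dataset

def learn_offline(dataset):
    """Step 2: derive a policy from logged data only, with no environment calls."""
    returns = defaultdict(list)
    for trajectory in dataset:
        rewards = [r for _, _, r in trajectory]
        for t, (state, action, _) in enumerate(trajectory):
            returns[(state, action)].append(sum(rewards[t:]))
    best = {}
    for (state, action), values in returns.items():
        mean = sum(values) / len(values)
        if state not in best or mean > best[state][1]:
            best[state] = (action, mean)
    policy = {state: action for state, (action, _) in best.items()}
    return policy, set(returns)

dataset = collect_dataset(num_episodes=200)
policy, seen_pairs = learn_offline(dataset)
# Counterfactual query: the "stay" action was never logged in any state.
unanswerable = [(s, 0) for s in range(GOAL + 1) if (s, 0) not in seen_pairs]
```

Any state-action pair missing from `seen_pairs` has no data behind it, so a learner restricted to this dataset has no basis for judging it.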

What is reinforcement learning via sequence modeling?

Reinforcement learning via sequence modeling combines reinforcement learning with sequence modeling techniques to address problems where the agent’s decision-making involves a sequence of actions or states with temporal dependencies. This approach is useful when the agent needs to learn optimal strategies in complex environments where the relationships between actions and states evolve over time.

Sequence modeling is a technique in machine learning that focuses on analyzing and predicting sequences of data points. It deals with problems where the order of and relationships between data points are significant, such as time series forecasting, natural language processing, and protein sequence analysis. Common algorithms used in sequence modeling include recurrent neural networks (RNNs), long short-term memory (LSTM) networks, and Transformers, which can capture complex patterns and dependencies within data sequences. By learning these patterns, sequence modeling techniques can make predictions or generate entirely new sequences based on the learned context.

These techniques allow the agent to learn from the history of states, actions, and rewards, which in turn helps it generate a sequence of actions that maximizes the cumulative reward over time. Reinforcement learning via sequence modeling is relevant in various applications, including:

- Natural language understanding: In tasks like dialogue systems or machine translation, agents must generate appropriate responses based on the context provided by the input text, which often requires understanding the relationships between words and phrases over time.
- Time-series prediction: When predicting future values in time-series data, such as stock prices or weather patterns, it is crucial to learn the data’s temporal dependencies and underlying patterns, which can be addressed using sequence modeling techniques combined with reinforcement learning.
- Robot control: In robotics, agents often need to learn the optimal sequence of actions to complete specific tasks, such as object manipulation or navigation, based on the history of states and rewards. Reinforcement learning via sequence modeling provides a suitable framework for such problems.

What is the Decision Transformer model? Its architecture and key components

The Decision Transformer model, introduced by Chen L. et al. in “Decision Transformer: Reinforcement Learning via Sequence Modeling,” treats RL as a conditional sequence modeling problem. Instead of relying on traditional RL methods, like fitting a value function to guide action selection and maximize returns, the Decision Transformer uses a sequence modeling algorithm (i.e., the Transformer) to generate future actions that achieve a specified desired return.

This approach hinges on an autoregressive model conditioned on the desired return, past states, and actions. By using generative trajectory modeling, which models the joint patterns of states, actions, and rewards, the Decision Transformer sidesteps the conventional RL process of return maximization and directly generates a series of future actions that fulfill the desired return. This also simplifies the reinforcement learning process and makes it easier to understand.

[Figure: Decision Transformer architecture. Returns-to-go (R), states (s), and actions (a) for timesteps t-1 and t are embedded with positional encoding and passed through a causal Transformer; a linear decoder predicts the actions a(t-1) and a(t). Source: LeewayHertz]

The process is as follows:

- The Decision Transformer takes information from the most recent events (the last K timesteps) and uses three pieces of data for each timestep: the sum of future rewards (return-to-go), the current situation (state), and the action taken. Using this information, the model can learn to generate actions that help achieve a desired outcome.
- Tokens are embedded using a linear layer for vector states or a CNN encoder for frame-based states. In other words, the inputs are transformed into a format the model can work with: if the state is a simple list of numbers (vector state), a linear layer is used; if it is a series of images (frame-based state), a CNN encoder designed for image processing is used.
- These inputs are then processed by a GPT-2 model, which predicts future actions through autoregressive modeling, employing a causal self-attention mask.
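To make the input format concrete, here is a minimal sketch of how a logged trajectory could be turned into the sequence described above: a return-to-go is computed for every timestep, and the last K timesteps are interleaved as (return-to-go, state, action) tokens. The helper names `returns_to_go` and `build_context`, the tuple layout, and the toy trajectory are assumptions for illustration; a real implementation would embed these values as vectors rather than keep them as Python tuples.

```python
def returns_to_go(rewards):
    """Return-to-go at step t is the sum of rewards from step t to the end."""
    rtg, running = [], 0.0
    for reward in reversed(rewards):
        running += reward
        rtg.append(running)
    return rtg[::-1]

def build_context(states, actions, rewards, k):
    """Interleave (return-to-go, state, action) tokens for the last k timesteps."""
    rtg = returns_to_go(rewards)
    tokens = []
    for t in range(max(0, len(states) - k), len(states)):
        # Each token carries its timestep so position information is retained.
        tokens.append(("rtg", t, rtg[t]))
        tokens.append(("state", t, states[t]))
        tokens.append(("action", t, actions[t]))
    return tokens

# Toy trajectory with placeholder states and actions.
states = ["s0", "s1", "s2", "s3"]
actions = ["a0", "a1", "a2", "a3"]
rewards = [0.0, 1.0, 0.0, 2.0]

rtg = returns_to_go(rewards)  # [3.0, 3.0, 2.0, 2.0]
context = build_context(states, actions, rewards, k=2)
```

With `k=2`, `context` holds six tokens covering timesteps 2 and 3. The model would then be asked to predict each action token from everything that precedes it.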

By reframing the RL problem and building on Transformers, the Decision Transformer opens the way to new applications of reinforcement learning across diverse domains.

The key components of a Decision Transformer include:

- Transformer architecture: The Decision Transformer uses the Transformer architecture, which is known for effectively processing sequential data. Transformers consist of multi-headed self-attention mechanisms and feed-forward neural networks, which enable the model to capture complex dependencies within the input data.
- State-Action-Reward sequences: The Decision Transformer learns from a dataset of state-action-reward (SAR) sequences, where each sequence represents the agent’s interaction with the environment. These sequences are used to train the Transformer in an offline reinforcement learning setting.
- Reward modeling: The Decision Transformer uses the reward information in the SAR sequences, summarized as returns-to-go, to predict actions consistent with a given return. Conditioning on a high desired return steers the generated actions toward high cumulative reward.
- Context conditioning: To predict an action, the Decision Transformer conditions its predictions on the current state and the history of past states, actions, and rewards. This context conditioning allows the model to learn the relationships between past experiences and future actions, guiding the agent toward better decisions.
- Offline Reinforcement Learning: The Decision Transformer operates in an offline reinforcement learning setting, where the agent learns from a pre-collected dataset of SAR sequences without actively exploring the environment. This approach reduces computational requirements and enables learning from diverse sources, such as human demonstrations or other agents’ experiences.
- Sequence generation: Using the Transformer’s ability to generate sequences, the Decision Transformer predicts the actions expected to achieve the desired cumulative reward. This sequence generation capability is key to the model’s success in reinforcement learning tasks.

Combining these components, the Decision Transformer bridges the gap between Transformers and reinforcement learning, enabling more efficient and versatile learning in various domains.

The architecture of the Decision Transformer model

The architecture of a Decision Transformer is based on the original Transformer architecture, with modifications to adapt it for reinforcement learning tasks. The key components of the Decision Transformer architecture are:

- Input representation: The input for the Decision Transformer consists of state-action-reward (SAR) tuples from the agent’s interaction with the environment. These tuples are represented as a sequence of tokens, which are then embedded into continuous vectors using an embedding layer.
- Positional encoding: Positional encodings are added to the embedded input vectors to capture the position of each input token. This allows the model to recognize the order of the input tokens and the temporal relationships between them.
- Multi-head self-attention: The core of the Transformer architecture is the multi-head self-attention mechanism. This component computes attention scores for each input token, capturing the dependencies between different tokens in the input sequence. The self-attention mechanism is applied multiple times in parallel (multi-head) to learn different aspects of the input data.
- Feed-forward neural network: A feed-forward neural network is applied to each position independently after the multi-head self-attention layer. This component consists of two linear layers with an activation function (usually ReLU) in between, which helps the model learn more complex patterns within the input data.
- Residual connections and layer normalization: In each layer of the Transformer, residual connections combine the outputs of the self-attention and feed-forward layers with their inputs. Layer normalization is then applied to stabilize training and improve the model’s generalization capability.
- Stacking layers: The Decision Transformer’s architecture comprises multiple layers of self-attention and feed-forward components stacked on top of each other. This deep structure enables the model to learn complex hierarchical relationships within the input data.
- Output layer: The final layer of the Decision Transformer is a linear layer that maps the continuous output vectors back to the action space, generating the predicted action sequence.
- Training objective: The Decision Transformer is trained to predict the actions recorded in the dataset, given the preceding returns-to-go, states, and actions. This is achieved by minimizing the cross-entropy loss between the predicted and ground truth action sequences derived from the input SAR tuples (the original paper uses cross-entropy for discrete actions and mean-squared error for continuous ones). Conditioning on a high desired return at inference time then steers the model toward actions associated with high cumulative reward.

In the Decision Transformer architecture, reinforcement learning is integrated implicitly through the way the input data is represented and the training objective is defined. The reinforcement learning aspects can be found in the following components:

- Input representation: The input consists of state-action-reward (SAR) tuples collected from the agent’s interaction with the environment. This data directly links the architecture to the reinforcement learning problem, as it captures the agent’s experience and the corresponding rewards.

- Training objective: The training objective in the Decision Transformer is to predict the logged action sequence given the returns-to-go and states that accompany it. By minimizing the cross-entropy loss between the predicted action sequence and the ground truth action sequence, the model learns which actions go with which returns, so that asking for a high return yields decisions that result in higher rewards.

While the architecture itself is built on the Transformer structure, the reinforcement learning aspects are incorporated through the input representation and training objective. The Decision Transformer does not explicitly include traditional reinforcement learning components such as value functions or policy gradients. Instead, it uses the Transformer architecture to learn an effective policy for the given task by predicting future action sequences conditioned on the desired cumulative reward.

How does the Decision Transformer work?

The Decision Transformer uses the Transformer architecture to learn a policy for reinforcement learning tasks from collected trajectory data. Here is a more detailed explanation of its learning process.

Learning from collected trajectory data

The Decision Transformer uses offline reinforcement learning, meaning it learns from previously collected data consisting of sequences of states, actions, and rewards (called trajectories). These trajectories come from past experiences of other agents or even human demonstrations.

To understand how the Decision Transformer works, imagine you have a dataset of these trajectories representing different experiences and their corresponding rewards. The model takes in the current situation and information about past experiences and tries to predict the sequence of actions that would lead to the desired total reward.
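The cross-entropy objective mentioned above can be illustrated with a small standalone computation. The logits and logged actions below are made-up numbers standing in for the outputs of a model over three discrete actions, and the function names are assumptions of this sketch. Note that the original Decision Transformer paper uses cross-entropy for discrete actions and mean-squared error for continuous actions; only the discrete case is shown here.

```python
import math

def cross_entropy(logits, target):
    """Negative log-probability of the target action under softmax(logits)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def action_loss(predicted_logits, dataset_actions):
    """Mean cross-entropy between predicted and logged actions over timesteps."""
    losses = [cross_entropy(logits, action)
              for logits, action in zip(predicted_logits, dataset_actions)]
    return sum(losses) / len(losses)

# Hypothetical model outputs for three timesteps over three discrete actions.
predicted_logits = [[2.0, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 0.0]]
dataset_actions = [0, 1, 2]  # actions actually taken in the logged trajectory

loss = action_loss(predicted_logits, dataset_actions)
uniform = cross_entropy([0.0, 0.0, 0.0], 2)  # equals log(3) for a uniform guess
```

Training would adjust the model so that `loss` decreases, meaning the logged actions become more probable given the returns-to-go and states that preceded them.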
In simpler terms, the Decision Transformer looks at what has been done before in similar situations and uses this knowledge to decide what actions to take in the current situation to achieve the best outcome.

Handling long-term dependencies

One of the key challenges in reinforcement learning is handling long-term dependencies, where the agent must make decisions based on information that may not be immediately available or only becomes relevant after a series of steps. Transformers, by design, are well-suited to address this issue due to their ability to capture contextual information and dependencies across long sequences.
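To make the idea of capturing dependencies across a sequence more concrete, here is a minimal sketch of causal scaled dot-product attention in plain Python. It is a deliberate simplification (a single head, no learned projections, made-up 2-dimensional embeddings) and not the model's actual implementation; the function name `causal_self_attention` is hypothetical.

```python
import math


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position t only sees positions 0..t."""
    dim = len(queries[0])
    outputs, all_weights = [], []
    for t, q in enumerate(queries):
        # Scores against the current and earlier positions only (the causal mask).
        scores = [sum(qi * ki for qi, ki in zip(q, keys[j])) / math.sqrt(dim)
                  for j in range(t + 1)]
        # Numerically stable softmax over the visible positions.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Output is the attention-weighted average of the visible values.
        out = [sum(w * values[j][d] for j, w in enumerate(weights))
               for d in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional embeddings for four timesteps (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, 0.0]]
outputs, weights = causal_self_attention(x, x, x)

print(len(weights[0]), len(weights[3]))  # 1 4
print(outputs[0])  # [1.0, 0.0]
```

In this toy input the last timestep puts more weight on the first timestep than on the second, because their embeddings match. That direct link between distant positions is what allows a Transformer to relate an early event to a much later decision.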

The Decision Transformer benefits from the self-attention mechanism in Transformers, which allows the model to focus selectively on different parts of the input sequence and learn long-range dependencies between the states, actions, and rewards. As a result, the model can capture the relationships between past experiences and future decisions, effectively learning a policy that accounts for both immediate and long-term rewards.

By combining the powerful representation learning capabilities of Transformers with trajectory data from reinforcement learning tasks, the Decision Transformer is able to learn effective policies that consider both short-term and long-term dependencies. This results in an agent capable of making decisions that maximize the cumulative reward over time, addressing one of the major challenges in reinforcement learning.

Applications and use cases of the Decision Transformer

The Decision Transformer has a wide range of applications and use cases due to its ability to learn from trajectory data and handle long-term dependencies. Some possible applications and use cases include:

Robotics: Decision Transformers can be used to train robotic agents to perform complex tasks, such as navigation, manipulation, and interaction with their environment, by learning from collected demonstrations or past experiences.

Autonomous vehicles: In the context of self-driving cars, Decision Transformers can learn decision-making policies from collected driving data, enabling safer and more efficient navigation in various traffic conditions.

Games: Decision Transformers can be employed to train agents that excel in strategic games, like Go, chess, or poker, by learning from a dataset of expert gameplay and mastering long-term planning.

Finance: Decision Transformers can be applied to optimize trading strategies, portfolio management, and risk assessment by learning from historical financial data and taking into account long-term dependencies.
Healthcare: In medical decision-making, Decision Transformers can be used to optimize treatment plans, diagnose diseases, or predict patient outcomes by learning from historical patient data and understanding the long-term effects of different interventions.

Natural Language Processing (NLP): Although not a direct application of reinforcement learning, Decision Transformers can be adapted to solve NLP tasks that involve making decisions or generating responses based on long-term dependencies in text data, such as machine translation, text summarization, or dialogue systems.

Supply chain optimization: Decision Transformers can be used to optimize inventory management, demand forecasting, and routing decisions in complex supply chain networks by learning from historical data and considering long-term effects.

Energy management: Decision Transformers can help optimize energy consumption, demand response, and grid stability in smart grid applications by learning from historical usage data and considering long-term dependencies.

How to use the Decision Transformer in a Transformer?

Scenario: Here, we demonstrate a Decision Transformer model provided through the Hugging Face Transformers library. The checkpoint used below was trained with offline reinforcement learning on ‘expert’ data and is designed to operate in the Gym Hopper environment. The model learned how to make decisions for a one-legged hopping agent without directly interacting with the environment, using previously collected experience. It then applies that knowledge to control the agent in the Gym Hopper setting.

Step 1: Install the package

    pip install git+https://github.com/huggingface/transformers

Step 2: Initiating the model

Employing the Decision Transformer is fairly straightforward; however, due to its autoregressive nature, attention must be given to properly organizing the model’s inputs at every time step. Hugging Face has provided a Python script and a Colab notebook to illustrate the use of this model. Use the code below to load a pre-trained Decision Transformer model:

    from transformers import DecisionTransformerModel

    model_name = "edbeeching/decision-transformer-gym-hopper-expert"
    model = DecisionTransformerModel.from_pretrained(model_name)

Step 3: Establishing the environment

Hugging Face offers pre-trained checkpoints for the Gym Hopper, Walker2D, and HalfCheetah environments.

    import gym

    env = gym.make("Hopper-v3")
    state_dim = env.observation_space.shape[0]  # state size
    act_dim = env.action_space.shape[0]  # action size
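The input organization that Step 2 refers to largely comes down to keeping a fixed-length window of recent timesteps and left-padding it while the episode is still short. The sketch below shows that idea in plain Python on a flat list of numbers; the helper name `left_pad_window` and the window length of 5 are assumptions chosen for illustration, not part of the Transformers API.

```python
def left_pad_window(tokens, max_length, pad_value=0.0):
    """Keep the last `max_length` tokens and left-pad shorter histories.

    Returns (window, attention_mask); the mask is 0 over padding and 1 over real tokens.
    """
    recent = list(tokens[-max_length:])
    padding = max_length - len(recent)
    window = [pad_value] * padding + recent
    attention_mask = [0] * padding + [1] * len(recent)
    return window, attention_mask


# Three real timesteps in a window of five: two padding slots on the left.
window, mask = left_pad_window([0.7, 0.9, 1.1], max_length=5)
print(window)  # [0.0, 0.0, 0.7, 0.9, 1.1]
print(mask)    # [0, 0, 1, 1, 1]

# A long history is truncated to its most recent entries.
window, mask = left_pad_window(list(range(8)), max_length=5)
print(window)  # [3, 4, 5, 6, 7]
print(mask)    # [1, 1, 1, 1, 1]
```

Padding on the left keeps the most recent timestep in the last position, so the prediction for the current step can always be read from the end of the sequence.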

Step 4: Establishing the autoregressive prediction function

The model executes an autoregressive prediction process, where predictions made at the current time-step t rely on outputs from earlier time-steps. This function contains considerable detail, which the comments explain.

    import numpy as np
    import torch


    # Function that gets an action from the model using autoregressive prediction
    # with a window of the previous 20 timesteps.
    def get_action(model, states, actions, rewards, returns_to_go, timesteps):
        # This implementation does not condition on past rewards
        states = states.reshape(1, -1, model.config.state_dim)
        actions = actions.reshape(1, -1, model.config.act_dim)
        returns_to_go = returns_to_go.reshape(1, -1, 1)
        timesteps = timesteps.reshape(1, -1)

        # The prediction is conditioned on up to 20 previous time-steps
        states = states[:, -model.config.max_length :]
        actions = actions[:, -model.config.max_length :]
        returns_to_go = returns_to_go[:, -model.config.max_length :]
        timesteps = timesteps[:, -model.config.max_length :]

        # pad all tokens to sequence length, this is required if we process batches
        padding = model.config.max_length - states.shape[1]
        attention_mask = torch.cat([torch.zeros(padding), torch.ones(states.shape[1])])
        attention_mask = attention_mask.to(dtype=torch.long).reshape(1, -1)
        states = torch.cat([torch.zeros((1, padding, model.config.state_dim)), states], dim=1).float()
        actions = torch.cat([torch.zeros((1, padding, model.config.act_dim)), actions], dim=1).float()
        returns_to_go = torch.cat([torch.zeros((1, padding, 1)), returns_to_go], dim=1).float()
        timesteps = torch.cat([torch.zeros((1, padding), dtype=torch.long), timesteps], dim=1)

        # perform the prediction
        state_preds, action_preds, return_preds = model(
            states=states,
            actions=actions,
            rewards=rewards,
            returns_to_go=returns_to_go,
            timesteps=timesteps,
            attention_mask=attention_mask,
            return_dict=False,
        )
        # return the action predicted for the most recent time-step
        return action_preds[0, -1]

Step 5: Model evaluation

To assess the model, we require further information, specifically the mean and standard deviation of the states used during training. This data is available on each checkpoint’s model card on the Hugging Face Hub.

Additionally, we need a target return for the model. This highlights the strength of return-conditioned offline reinforcement learning: by adjusting the target return, we can control the performance of the policy. This capability could be valuable in a multiplayer scenario, where we might want to tune the difficulty of an opponent bot to match the player’s skill level.

    TARGET_RETURN = 3.6  # This was normalized during training
    MAX_EPISODE_LENGTH = 1000

    # Per-dimension statistics of the 11-dimensional Hopper state
    state_mean = np.array(
        [1.3490015, -0.11208222, -0.5506444, -0.13188992, -0.00378754, 2.6071432,
         0.02322114, -0.01626922, -0.06840388, -0.05183131, 0.04272673,]
    )
    state_std = np.array(
        [0.15980862, 0.0446214, 0.14307782, 0.17629202, 0.5912333, 0.5899924,
         1.5405099, 0.8152689, 2.0173461, 2.4107876, 5.8440027,]
    )
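The target return set above is not held fixed during an episode: after each step, the reward just received is subtracted from it, so the model is always conditioned on the return still to be collected. The following is a minimal sketch of that bookkeeping in plain Python. The normalization constant of 1000 is an assumption (it is consistent with a normalized target of 3.6 standing for a raw return of about 3600), and the rewards and the helper name `update_target_return` are made up for illustration.

```python
def update_target_return(target_return, reward, scale):
    """Subtract the (normalized) reward just received from the remaining target."""
    return target_return - reward / scale


target = 3.6    # normalized target return, as in TARGET_RETURN above
scale = 1000.0  # assumed normalization constant (3.6 ~ 3600 / 1000)
history = [target]
for reward in [1.0, 2.5, 0.5]:  # made-up per-step rewards
    target = update_target_return(target, reward, scale)
    history.append(target)

print([round(t, 4) for t in history])  # [3.6, 3.599, 3.5965, 3.596]
```

Starting from a lower target asks the model for a weaker policy, which is how the difficulty tuning mentioned above would work in practice.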

    state_mean = torch.from_numpy(state_mean)
    state_std = torch.from_numpy(state_std)

    # Normalization constant for rewards and returns (assumed value: a
    # TARGET_RETURN of 3.6 corresponds to a raw return of about 3600)
    scale = 1000.0

    # Assumes the classic Gym API (gym < 0.26): reset() returns the observation
    # and step() returns four values
    state = env.reset()
    target_return = torch.tensor(TARGET_RETURN).float().reshape(1, 1)
    states = torch.from_numpy(state).reshape(1, state_dim).float()
    actions = torch.zeros((0, act_dim)).float()
    rewards = torch.zeros(0).float()
    timesteps = torch.tensor(0).reshape(1, 1).long()

    # take steps in the environment
    for t in range(MAX_EPISODE_LENGTH):
        # add zeros for actions as input for the current time-step
        actions = torch.cat([actions, torch.zeros((1, act_dim))], dim=0)
        rewards = torch.cat([rewards, torch.zeros(1)])

        # predicting the action to take
        action = get_action(model, (states - state_mean) / state_std, actions, rewards, target_return, timesteps)
        actions[-1] = action
        action = action.detach().numpy()

        # interact with the environment based on this action
        state, reward, done, _ = env.step(action)

        cur_state = torch.from_numpy(state).reshape(1, state_dim).float()
        states = torch.cat([states, cur_state], dim=0)
        rewards[-1] = reward

        # the return still to be achieved shrinks by the reward just received
        pred_return = target_return[0, -1] - (reward / scale)
        target_return = torch.cat([target_return, pred_return.reshape(1, 1)], dim=1)
        timesteps = torch.cat([timesteps, torch.ones((1, 1)).long() * (t + 1)], dim=1)

        if done:
            break

This code block runs the interaction between the Decision Transformer model and the environment: at every step it appends the new state, action, reward, remaining target return, and timestep to the tensors that form the model’s input.

Endnote

The Decision Transformer model changes the way reinforcement learning agents are trained and interact with their environments. By combining the ability of Transformers to process sequential data with the learning framework of reinforcement learning, Decision Transformers open up new possibilities for a range of applications and industries. With their offline learning process and their handling of long-term dependencies, they address limitations of traditional RL approaches and create new potential for building advanced, intelligent systems.

The applications for Decision Transformers are far-reaching, from autonomous vehicles and robotics to strategic gameplay and personalized user experiences. As we look to the future of AI, the Decision Transformer model stands as a testament to the power of innovation and interdisciplinary research. By embracing the synergy between Transformer architectures and reinforcement learning, we can move toward a new era of AI-powered solutions, enhancing our capabilities and improving our understanding of the world around us.