Breaking down the AI magic of ChatGPT: A technologist's lens on its powerful components!

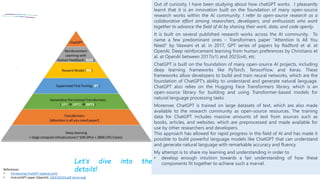

- 1. Out of curiosity, I have been studying how ChatGPT works, and I was pleased to learn that it is an innovation built on the foundation of many open research works within the AI community. By open-source research, I mean a collaborative effort among researchers, developers, and enthusiasts who advance the field of AI by openly sharing their work, data, and code. To name a few predominant works: the Transformer paper "Attention Is All You Need" by Vaswani et al. (2017), the GPT series of papers from OpenAI (Radford et al. for GPT and GPT-2, Brown et al. for GPT-3), and "Deep reinforcement learning from human preferences" by Christiano et al. (OpenAI and DeepMind; v1 posted in 2017, v4 in 2023). ChatGPT also builds on the open-source deep learning ecosystem: OpenAI announced in 2020 that it was standardizing on PyTorch, and frameworks like PyTorch, TensorFlow, and Keras let developers build and train the neural networks that give language models their ability to understand and generate natural language. Open-source libraries such as Hugging Face Transformers, used for building and applying Transformer-based models to natural language processing tasks, have made this family of models widely accessible. ChatGPT is trained on large amounts of text from sources such as books, articles, and websites. Some of these sources are publicly available (GPT-3, for example, used filtered Common Crawl and Wikipedia among others), although OpenAI has not released its curated training data. This culture of openness has enabled rapid progress in AI and made it possible to build powerful language models like ChatGPT that understand and generate natural language with remarkable fluency. My aim is to share my learning so that you can develop enough intuition for how these components fit together to achieve such a marvel.
References: Introducing ChatGPT (openai.com); InstructGPT paper (OpenAI): 2203.02155.pdf (arxiv.org). Let's dive into the details!

- 2. The significance of deep learning in contemporary AI lies in its ability to perform tasks that were previously difficult or impossible for traditional machine learning algorithms. Deep learning has improved image and speech recognition, natural language processing, and autonomous driving, among other applications. It has also enabled advanced AI systems such as AlphaGo, which beat human champions at the game of Go. Importantly, neural networks are universal function approximators: by the universal approximation theorem, a feedforward network with even a single hidden layer and enough units can approximate any continuous function on a bounded (compact) input domain, including highly nonlinear and complex ones, to arbitrary accuracy. The theorem guarantees that such a network exists; it does not say how to find its weights, which is the job of training. One of the key advantages of deep learning is its ability to learn features automatically from raw data, which saves time and effort in feature engineering. Deep learning models can also keep improving as they are exposed to more data, which makes them particularly useful where data is abundant. As a result, deep learning has become a powerful tool for solving complex problems and driving innovation in AI. Image source: Deep learning - Wikipedia
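
To make the universal approximation idea concrete, here is a minimal NumPy sketch. It assumes a single hidden layer of `tanh` units whose input weights are drawn at random, with only the output weights solved by least squares; this random-features shortcut is a stand-in for the backpropagation training real deep networks use. `fit_one_hidden_layer` and `max_error` are hypothetical helper names, and the sketch compares how well 2 and 200 hidden units approximate sin(x) on [-π, π].

```python
import numpy as np

def fit_one_hidden_layer(x, y, hidden_units, seed=0):
    """Fit y ~ tanh(x W + b) @ v using random W, b and least-squares output weights v."""
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, 2.0, size=(1, hidden_units))   # random input weights
    b = rng.uniform(-np.pi, np.pi, size=hidden_units)  # random hidden biases

    def features(x_):
        h = np.tanh(x_[:, None] @ W + b)                # hidden-layer activations, shape (n, H)
        return np.hstack([h, np.ones((len(x_), 1))])    # extra column acts as the output bias

    v, *_ = np.linalg.lstsq(features(x), y, rcond=None)  # solve only the output layer
    return lambda x_new: features(x_new) @ v

def max_error(hidden_units):
    """Worst-case error of the fitted network on points not used for fitting."""
    x_train = np.linspace(-np.pi, np.pi, 400)
    x_test = np.linspace(-3.0, 3.0, 101)                 # unseen points inside the training range
    model = fit_one_hidden_layer(x_train, np.sin(x_train), hidden_units)
    return float(np.max(np.abs(model(x_test) - np.sin(x_test))))

for units in (2, 200):
    print(units, "hidden units -> max |error| on sin(x):", max_error(units))
```

With more hidden units the error on unseen points should shrink sharply. That is the intuition behind the theorem: width buys approximation power, while finding good weights in practice is left to training.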

- 3. Paper: [1706.03762] Attention Is All You Need (arxiv.org). The Transformer paper is arguably one of the most impactful research papers of the last few years. The architecture has disrupted almost every subdomain of cognitive AI: natural language processing (NLP) tasks such as machine translation, question answering, and language understanding; computer vision tasks such as image classification and object detection; speech processing tasks like automatic speech recognition (ASR) and diarization; and even reinforcement learning, as in TransformRL. The Transformer is a type of neural network that uses self-attention to process sequential data such as natural language. Instead of recurrent or convolutional layers, the original Transformer consists of an encoder and a decoder, both built from stacked layers of self-attention and feedforward networks (the decoder additionally attends to the encoder's output). Intuitively, self-attention lets the network dynamically focus on different parts of the input by computing the importance of each element (such as a word in a sentence) based on its relationship with all the other elements. Because every position is compared with every other position directly rather than step by step, the network can process sequences effectively and adaptively without relying on a fixed processing order; word order is supplied separately through positional encodings.
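
The self-attention computation described above can be sketched in NumPy as single-head scaled dot-product attention, softmax(QKᵀ/√d_k)·V, the formula defined in the paper. The sizes, the random projection matrices, and the `self_attention` name are illustrative assumptions; a real Transformer adds multiple heads, learned weights, positional encodings, residual connections, and layer normalization.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over X of shape (n_tokens, d_model)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v        # project every token to a query, key, and value
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)            # (n, n): relevance of token j to token i
    weights = softmax(scores, axis=-1)         # each row is a distribution over all tokens
    return weights @ V, weights                # each output is a weighted mix of values

rng = np.random.default_rng(0)
n_tokens, d_model, d_k = 4, 8, 4
X = rng.normal(size=(n_tokens, d_model))       # stand-in embeddings for a 4-token sentence
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape)              # one d_k-sized output vector per token
print(weights.sum(axis=1))    # every row of attention weights sums to 1
```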

- 4. Papers: language_understanding_paper.pdf (openai.com), Language Models are Unsupervised Multitask Learners (openai.com), [2005.14165] Language Models are Few-Shot Learners (arxiv.org). Then comes the simple yet powerful and scalable idea of self-supervised learning. In this setup, the ML algorithm learns from unlabeled data by predicting certain aspects of the data, such as the next word in a sentence. Because the "labels" come from the text itself, the approach scales to huge corpora and yields models that generalize well to new domains and tasks without manually labeled data. GPT, GPT-2, and GPT-3 apply this technique to ever larger text corpora (books for GPT, web pages for GPT-2, and a mix including filtered Common Crawl for GPT-3, which was trained on roughly 300 billion tokens; loosely, a token is a sub-word) to create what is called a base language model (LM). Only the decoder stack of the Transformer is used, trained in an autoregressive manner: given a context of preceding tokens from the corpus, the model is asked to predict the next token, and this process repeats over the humongous training data of books, articles, and websites without any explicit human-provided labels. Importantly, the decoder uses masked (causal) self-attention: each token attends only to itself and the tokens before it, and future tokens are masked out during the attention calculation. Source: language_understanding_paper.pdf (openai.com)
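
Two ideas from this step, the causal mask and autoregressive next-token training pairs, fit in a short NumPy sketch. The function names are hypothetical, and whole words stand in for the sub-word tokens a real GPT tokenizer would produce.

```python
import numpy as np

def causal_attention_weights(scores):
    """Softmax over attention scores with future positions masked out (token i sees 0..i)."""
    n = scores.shape[0]
    future = np.triu(np.ones((n, n), dtype=bool), k=1)   # True strictly above the diagonal
    masked = np.where(future, -np.inf, scores)           # future positions get -inf
    masked = masked - masked.max(axis=-1, keepdims=True) # diagonal is never masked, so max is finite
    e = np.exp(masked)                                   # exp(-inf) == 0: no attention to the future
    return e / e.sum(axis=-1, keepdims=True)

def next_token_pairs(tokens):
    """Self-supervised training pairs: predict token t+1 from tokens 0..t."""
    return [(tokens[: t + 1], tokens[t + 1]) for t in range(len(tokens) - 1)]

rng = np.random.default_rng(0)
weights = causal_attention_weights(rng.normal(size=(4, 4)))
print(np.round(weights, 2))                # zeros above the diagonal

tokens = ["The", "cat", "sat", "down"]     # whole words stand in for sub-word tokens
for context, target in next_token_pairs(tokens):
    print(context, "->", target)
```

Note that the "labels" are just the next tokens of the same text, which is why no human annotation is needed at this stage.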

- 5. Papers: language_understanding_paper.pdf (openai.com), Language Models are Unsupervised Multitask Learners (openai.com), [2005.14165] Language Models are Few-Shot Learners (arxiv.org). As an astonishing result of this simple training approach, the model performs what is popularly known as representation learning, i.e., it learns high-quality text representations that capture the semantic and syntactic structure of natural language. This enables the model to perform well on a wide range of downstream NLP tasks with minimal additional training. All the GPT versions follow this basic approach, but each one scales up the number of layers and parameters (from about 117 million in GPT to 1.5 billion in GPT-2 and 175 billion in GPT-3), the size of the training data, and the training time. A critical insight into what GPT LMs learn is that they are excellent meta-learners and multi-task learners. As the authors of GPT-3 explain in their paper, the model demonstrates zero-shot, one-shot, and few-shot in-context learning at inference time, without any gradient updates: the task description and a few examples are simply placed in the prompt. This is truly mind-blowing! Source: [2005.14165] Language Models are Few-Shot Learners (arxiv.org)
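
In-context learning changes only the prompt, not the model's weights. The sketch below builds zero-, one-, and few-shot prompts in the style of the English-to-French translation example from the GPT-3 paper; `build_prompt` is a hypothetical helper, and sending the prompt to an actual model is deliberately left out.

```python
def build_prompt(task_description, examples, query):
    """Zero-, one-, or few-shot prompt: k demonstrations in the text make it k-shot."""
    lines = [task_description]
    for source, target in examples:        # each (input, output) pair is one demonstration
        lines.append(f"{source} => {target}")
    lines.append(f"{query} =>")            # the model is expected to continue from here
    return "\n".join(lines)

demos = [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")]
print(build_prompt("Translate English to French:", [], "cheese"))         # zero-shot
print(build_prompt("Translate English to French:", demos[:1], "cheese"))  # one-shot
print(build_prompt("Translate English to French:", demos, "cheese"))      # few-shot
```

The same frozen model receives all three prompts; any improvement from zero- to few-shot comes purely from the demonstrations in the context window.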

- 6. Paper: [2203.02155] Training language models to follow instructions with human feedback (arxiv.org). Next, human labelers from different domains are engaged to create labeled data for different tasks. As described in the paper for InstructGPT, which OpenAI calls a sibling model to ChatGPT, the labelers were hired after a screening test. During this process, a labeler is shown a prompt from the prompt dataset and writes a demonstration of the desired output. These prompt + labeler response pairs form a supervised dataset, of course at a much smaller scale than pretraining (the InstructGPT SFT dataset contains about 13k training prompts). The pretrained autoregressive model (GPT) is then used as the base and fine-tuned on this supervised dataset. This is referred to as supervised fine-tuning (SFT). Source: InstructGPT paper (OpenAI): [2203.02155] Training language models to follow instructions with human feedback (arxiv.org). Prompt: a piece of text or a question that a user inputs to initiate a conversation with the model. The prompt provides context for the model to generate a response that is relevant and useful to the user. The quality of ChatGPT's response depends heavily on the quality and specificity of the prompt, so a clear and concise prompt helps ensure that the response meets the user's needs. (Diagram: GPT + [Prompt, Response] pairs dataset → supervised fine-tuning → SFT model.)
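
SFT is ordinary next-token cross-entropy training on the [prompt, response] pairs. This NumPy sketch computes that loss for one pair. It assumes, as a common convention that is not stated above, that only response tokens contribute to the loss; `sft_loss`, the token ids, and the 10-token vocabulary are hypothetical.

```python
import numpy as np

def log_softmax(logits):
    shifted = logits - logits.max(axis=-1, keepdims=True)   # numerical stability
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def sft_loss(logits, targets, loss_mask):
    """Mean next-token cross-entropy over positions where loss_mask == 1.

    logits:    (seq_len, vocab_size) model scores for the next token at each position
    targets:   (seq_len,) id of the token that actually comes next in the pair
    loss_mask: (seq_len,) 1 where the target is a response token, 0 for prompt tokens
    """
    logp = log_softmax(logits)
    token_logp = logp[np.arange(len(targets)), targets]     # log-prob of each correct token
    return -(token_logp * loss_mask).sum() / loss_mask.sum()

targets = np.array([3, 1, 4, 1, 5, 9])            # hypothetical token ids
loss_mask = np.array([0, 0, 0, 1, 1, 1], float)    # first 3 targets in the prompt, last 3 in the response
uniform = np.zeros((6, 10))                         # a model with no preference among 10 tokens
confident = np.full((6, 10), -10.0)
confident[np.arange(6), targets] = 10.0             # a model that puts nearly all mass on the targets
print(sft_loss(uniform, targets, loss_mask))        # log(10), about 2.3026
print(sft_loss(confident, targets, loss_mask))      # close to 0
```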

- 7. Paper: [2203.02155] Training language models to follow instructions with human feedback (arxiv.org). Well, the model is now fairly capable, but its responses may still be misaligned with human values. Examples of human values include honesty, compassion, fairness, respect, freedom, responsibility, and loyalty. Ensuring that AI systems are aligned with human values and goals helps promote ethical and responsible use of AI and avoid negative consequences such as bias or unintended harm. As one can appreciate, this is a hard thing for an algorithm to learn. To approach this open-ended and challenging set of issues, reinforcement learning from human feedback (RLHF) is used. The idea is to give humans a way to tell the AI system whether its outputs align with their values and preferences, so that the system can adjust its behavior and improve its alignment over time. Concretely, labelers rank several model responses to the same prompt, and these comparisons are used to train a reward model (𝑟𝜃) that assigns a higher reward (a scalar value) to generated text that is better aligned with human preferences. The reward model (𝑟𝜃) is implemented by taking the SFT model and replacing its final unembedding layer with one that outputs a single number (the scalar reward), which can then be used to assess the quality of a response. Image source: InstructGPT paper (OpenAI): [2203.02155] Training language models to follow instructions with human feedback (arxiv.org), labeling interface. Loss function for the reward model:

$$\text{loss}(\theta) = -\frac{1}{\binom{K}{2}}\, E_{(x,\,y_w,\,y_l)\sim D}\left[\log\left(\sigma\left(r_\theta(x, y_w) - r_\theta(x, y_l)\right)\right)\right]$$

Here $x$ is the prompt, $y_w$ and $y_l$ are two responses of which labelers preferred $y_w$, $D$ is the dataset of comparisons, $\sigma$ is the sigmoid function, and $K$ (between 4 and 9 in InstructGPT) is the number of responses ranked per prompt, which yields $\binom{K}{2}$ pairs. The intuition is to compare two possible responses and push the one that labelers thought was better toward a higher score. The formula uses the comparisons that labelers have already ranked for each prompt to express which responses are best.
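
The reward-model loss above can be sketched for a single prompt whose K responses labelers have ranked. This NumPy version assumes the scalar rewards have already been produced by some reward model (here they are made-up numbers), and `reward_model_loss` is a hypothetical name. It averages -log σ(r_w - r_l) over every pair in which y_w was ranked above y_l.

```python
import itertools
import numpy as np

def log_sigmoid(z):
    return -np.logaddexp(0.0, -z)    # log(1 / (1 + e^(-z))), computed stably

def reward_model_loss(rewards_in_rank_order):
    """Pairwise ranking loss for one prompt.

    rewards_in_rank_order: scalar rewards r_theta(x, y) for K responses, sorted from the
    response labelers liked most to the one they liked least. Each of the C(K, 2) pairs
    (i, j) with i ranked above j contributes -log sigmoid(r_i - r_j).
    """
    r = np.asarray(rewards_in_rank_order, dtype=float)
    pairs = itertools.combinations(range(len(r)), 2)        # (i, j) with i < j, i.e. i preferred
    losses = [-log_sigmoid(r[i] - r[j]) for i, j in pairs]
    return float(np.mean(losses))                           # the 1 / C(K, 2) average

print(reward_model_loss([0.0, 0.0, 0.0, 0.0]))   # no preference expressed: log(2)
print(reward_model_loss([3.0, 2.0, 1.0, 0.0]))   # agrees with labelers: lower loss
print(reward_model_loss([0.0, 1.0, 2.0, 3.0]))   # contradicts labelers: higher loss
```

Minimizing this loss pushes the reward of every preferred response above the rewards of the responses ranked below it.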

- 8. To understand reinforcement learning from human feedback (RLHF), let's first cover the bare basics of a reinforcement learning (RL) system. In this type of machine learning, an agent learns from experience through trial and error. Let's take autonomous driving as an example to illustrate the different components of RL: Agent: the vehicle's driving system, which observes its situation and decides what to do. Environment: the world in which the autonomous vehicle operates, including the road, weather, other vehicles, pedestrians, and obstacles. State space: the set of possible states the vehicle can be in at any given time, including information about its speed, position, acceleration, and other relevant sensor data. Action space: the set of possible actions the vehicle can take, such as turning the steering wheel, braking, and accelerating. Reward function: the function that scores the vehicle's behavior against a predefined set of criteria, such as staying within the lane, keeping a safe distance from other vehicles, and reaching the destination as quickly and safely as possible. Policy: the agent's decision-making component, which maps the current state of the vehicle to the action to take (ideally the optimal one); it can be a neural network, a decision tree, or another machine learning model. Training data: the experience used to train the RL algorithm, such as real-world driving data, simulated driving data, and other sources. Image source: https://www.oreilly.com/library/view/ros-robotics-projects/9781783554713/ch10s02.html
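
The RL components listed above can be seen working together in a deliberately tiny simulation. This sketch replaces the car with a hypothetical 1-D "lane keeping" toy with five lateral positions and uses tabular Q-learning, a classic RL algorithm chosen here only for brevity (it is not what ChatGPT uses; that is PPO). The environment, reward, and hyperparameters are all assumptions for illustration, and the simulated episodes play the role of training data.

```python
import numpy as np

# Hypothetical toy task: 5 lateral positions, position 2 is the lane centre.
N_POSITIONS, CENTRE = 5, 2
ACTIONS = (-1, 0, +1)            # action space: steer left, keep straight, steer right

def step(position, action_index):
    """Environment: apply the action and return (next_state, reward)."""
    next_position = int(np.clip(position + ACTIONS[action_index], 0, N_POSITIONS - 1))
    reward = -abs(next_position - CENTRE)     # reward function: 0 at the centre, worse further away
    return next_position, reward

def train_q_learning(episodes=2000, steps=10, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a tabular Q-function by trial and error with epsilon-greedy exploration."""
    rng = np.random.default_rng(seed)
    q = np.zeros((N_POSITIONS, len(ACTIONS)))    # one value per (state, action)
    for _ in range(episodes):
        state = int(rng.integers(N_POSITIONS))   # random starting position
        for _ in range(steps):
            if rng.random() < epsilon:           # explore: try a random action
                action = int(rng.integers(len(ACTIONS)))
            else:                                # exploit: best action so far
                action = int(np.argmax(q[state]))
            next_state, reward = step(state, action)
            target = reward + gamma * q[next_state].max()
            q[state, action] += alpha * (target - q[state, action])
            state = next_state
    return q

q_table = train_q_learning()
# Policy: map each state to the action with the highest learned value.
policy = {s: ACTIONS[int(np.argmax(q_table[s]))] for s in range(N_POSITIONS)}
print(policy)
```

After training, the greedy policy should steer toward the centre from either edge and keep straight once it is there.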

- 9. With these RL basics in place, let's see how RL is used to factor in human preferences. The reward model we saw earlier now serves as the reward function of an RL setup, in which the SFT model is further fine-tuned with Proximal Policy Optimization (PPO), a policy gradient method. When a language model is trained with policy gradients, the action space is all the possible tokens in the vocabulary of the SFT model. The state space is all possible input token sequences, roughly (vocabulary size)^(maximum sequence length of the input x), which is a very large state space. The policy function takes the state from the environment and returns a probability distribution over actions. Here the policy (𝜋𝜙 𝑅𝐿) is a language model initialized from the SFT model. It takes a prompt (x) as input and returns a sequence of tokens along with their probability distributions (𝜋𝜙 𝑅𝐿 (𝑦|𝑥)). Intuitively, the objective function does the following: the reward model's score is penalized by how far the learned RL policy has drifted from the SFT model, measured with the KL divergence. This mitigates over-optimization of the reward model and keeps the generated text close to what the SFT model would produce, while adjusting it for human preferences. In the InstructGPT paper, the objective is:

$$\text{objective}(\phi) = E_{(x,y)\sim D_{\pi_\phi^{RL}}}\left[r_\theta(x,y) - \beta \log\left(\frac{\pi_\phi^{RL}(y \mid x)}{\pi^{SFT}(y \mid x)}\right)\right] + \gamma\, E_{x\sim D_{\text{pretrain}}}\left[\log\left(\pi_\phi^{RL}(x)\right)\right]$$

Here $\beta$ controls the strength of the KL penalty and $\gamma$ weights an optional term that mixes in pretraining gradients ($\gamma = 0$ gives plain "PPO"; $\gamma > 0$ is what the paper calls "PPO-ptx"). InstructGPT paper (OpenAI): 2203.02155.pdf (arxiv.org), labeling interface
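
The two pieces of this step can be sketched in NumPy: the reward-model score adjusted by a KL-style penalty toward the SFT model, and the clipped surrogate objective that gives PPO its name. Both are simplified single-sample sketches; the function names and the `beta` and `clip_eps` values are hypothetical, and a full PPO loop (sampling, advantage estimation, value function, minibatch updates) is omitted.

```python
import numpy as np

def kl_shaped_reward(rm_score, logp_rl, logp_sft, beta=0.02):
    """Reward-model score minus a penalty for drifting away from the SFT model.

    rm_score:          scalar r_theta(x, y) for the whole sampled response y
    logp_rl, logp_sft: per-token log-probabilities of y under the RL policy and the
                       frozen SFT model; their summed difference is log(pi_RL(y|x) / pi_SFT(y|x)),
                       a single-sample estimate of the KL divergence
    """
    log_ratio = np.asarray(logp_rl) - np.asarray(logp_sft)
    return rm_score - beta * log_ratio.sum()

def ppo_clipped_objective(ratio, advantage, clip_eps=0.2):
    """PPO clipped surrogate (to be maximised) for one action:
    min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A), where ratio = pi_new / pi_old."""
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps)
    return np.minimum(ratio * advantage, clipped * advantage)

# Same log-probs under both models: no penalty, the reward model's score passes through.
print(kl_shaped_reward(1.0, [-1.0, -2.0], [-1.0, -2.0]))
# RL policy is more confident than SFT on this sample: the reward is reduced.
print(kl_shaped_reward(1.0, [-0.5, -0.5], [-1.0, -1.0], beta=0.1))
# Clipping stops a single update from moving the policy too far in either direction.
print(ppo_clipped_objective(1.5, 1.0), ppo_clipped_objective(0.5, -1.0))
```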

- 10. As you have probably experienced, ChatGPT behaves like a Swiss Army knife. It can perform many types of tasks: brainstorming (e.g., create a 5-point strategy to start a company based on applied AI), classification (e.g., rate the sarcasm in the text on a scale of 1 = not at all to 10 = extremely sarcastic), information extraction (e.g., list all place names in the article below), generation (e.g., write a creative ad for the following product description, aimed at adults under 30, to run on Facebook), rewriting (e.g., rewrite the following text to be more light-hearted), open/closed QA (e.g., what shape is the Earth?), role play (e.g., imagine you are a leading astronaut and explain <followed by a specific question>), summarization (e.g., summarize the following information for an 8th-grade student), and more. In conclusion, the power comes from how the autoregressive model surprisingly exhibits meta-learning and multi-task learning capabilities, coupled with grounding in human values through the reward model (RM) in an RLHF setup, as we saw during our exploration. Given the pace of advancement in the AI space, so much has happened since ChatGPT. Exciting times ahead!