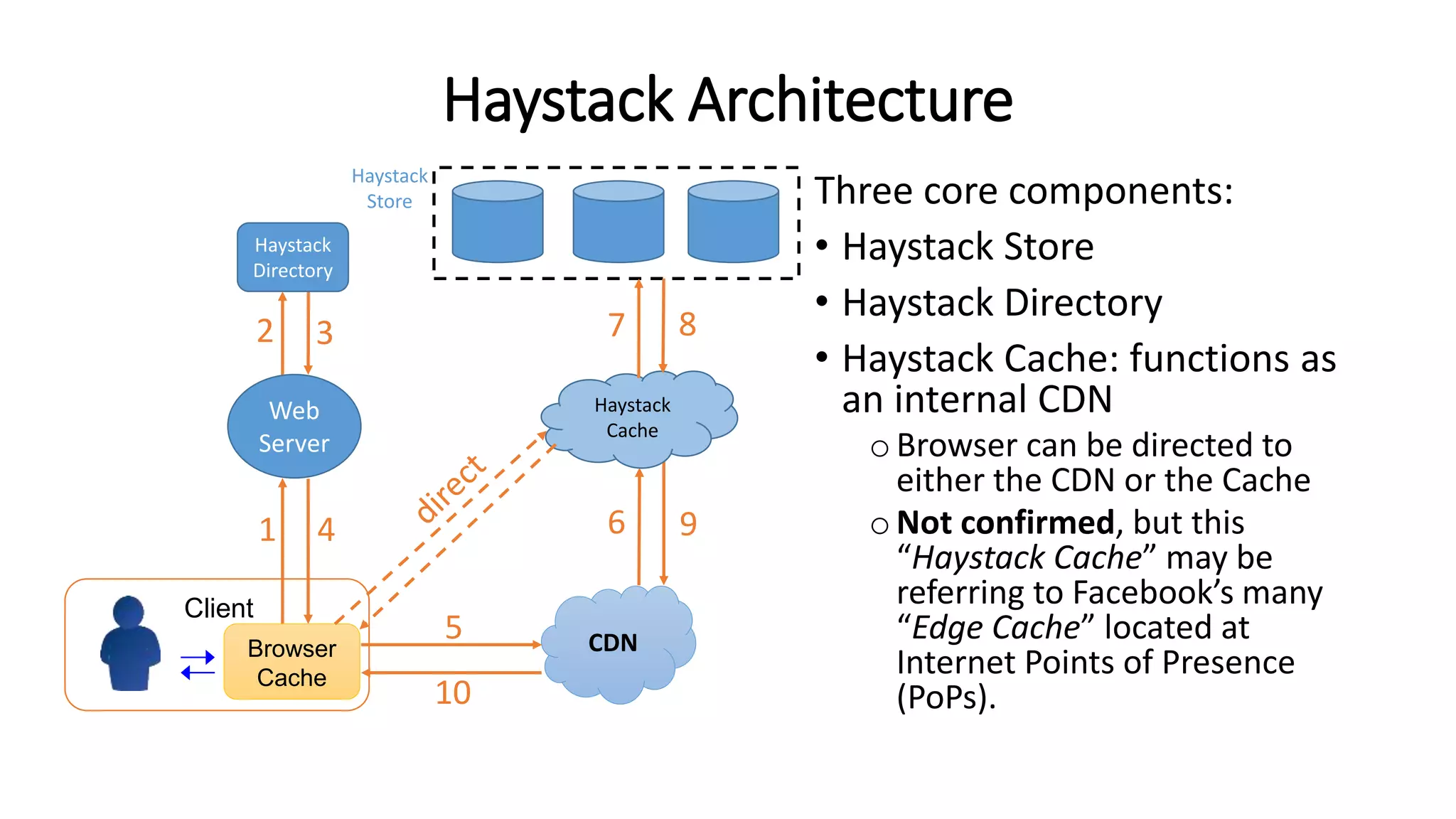

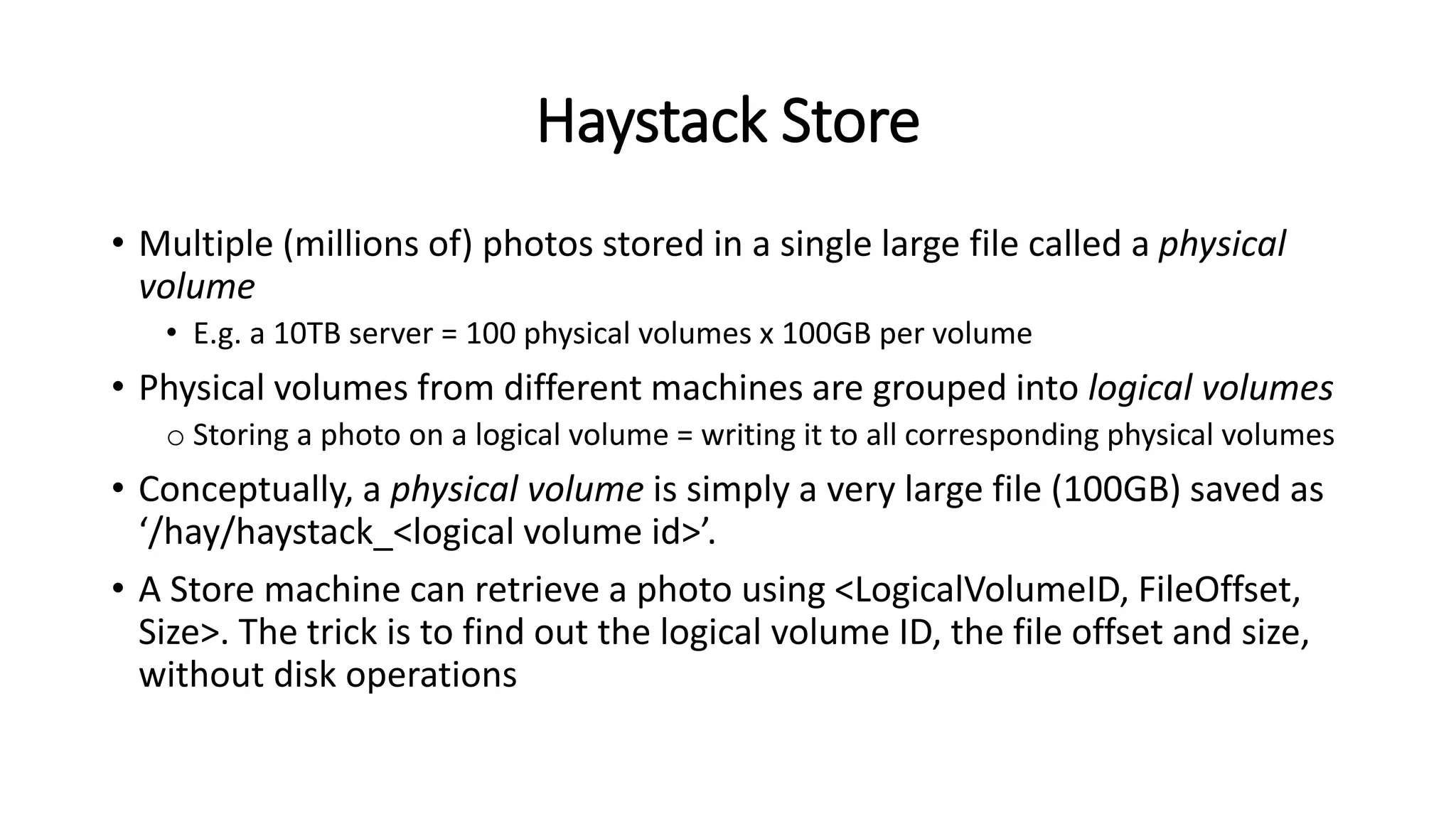

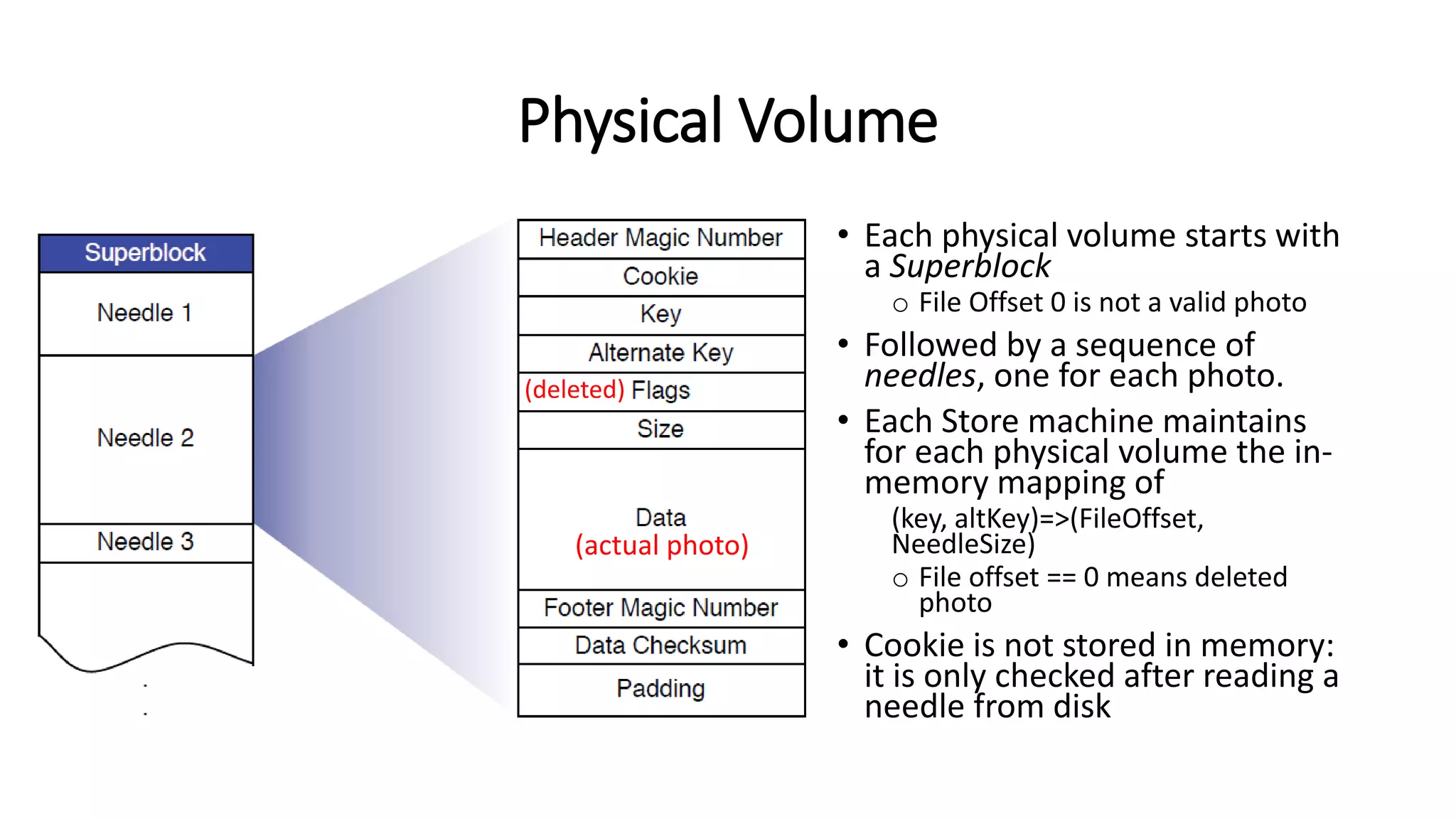

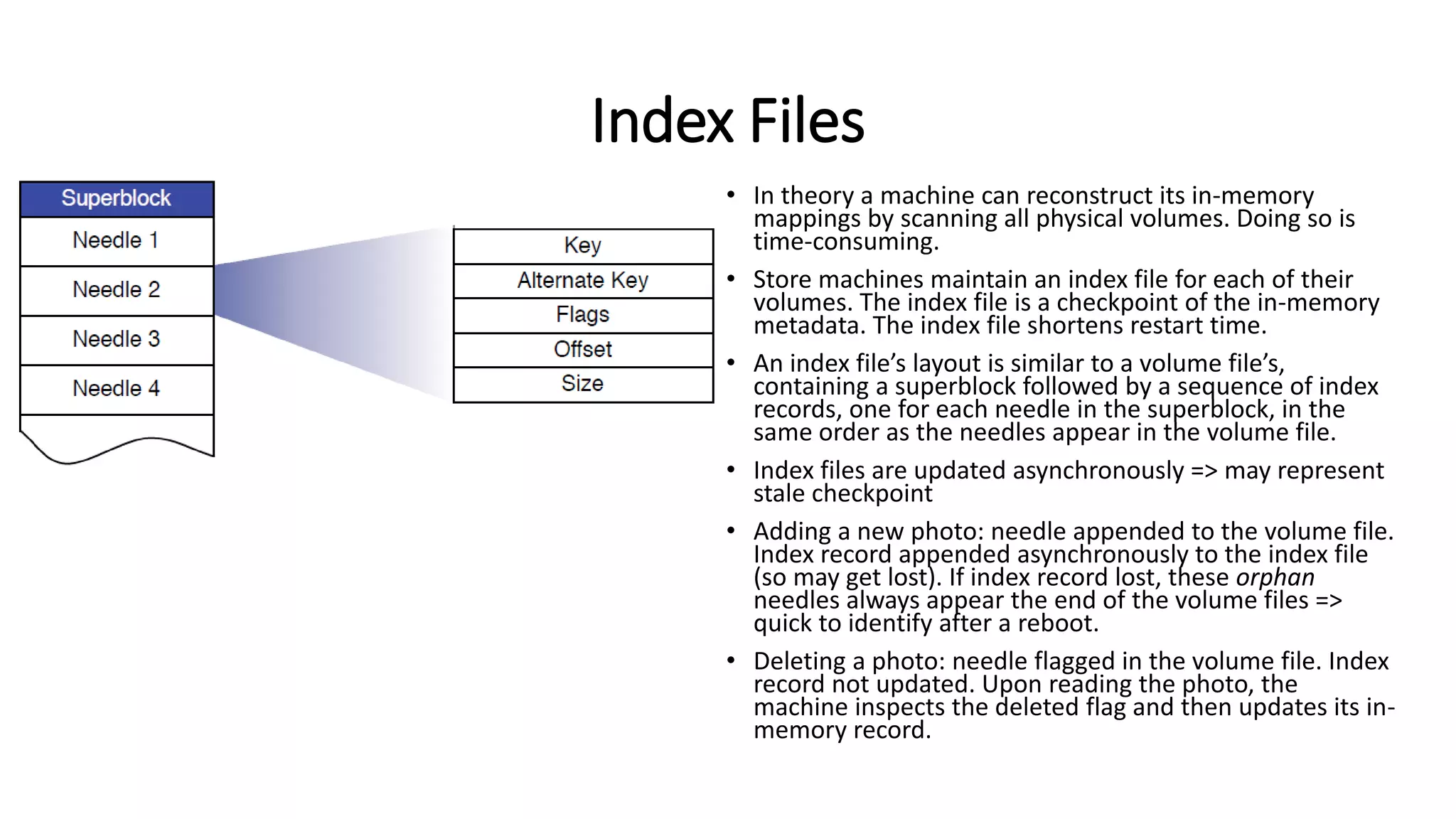

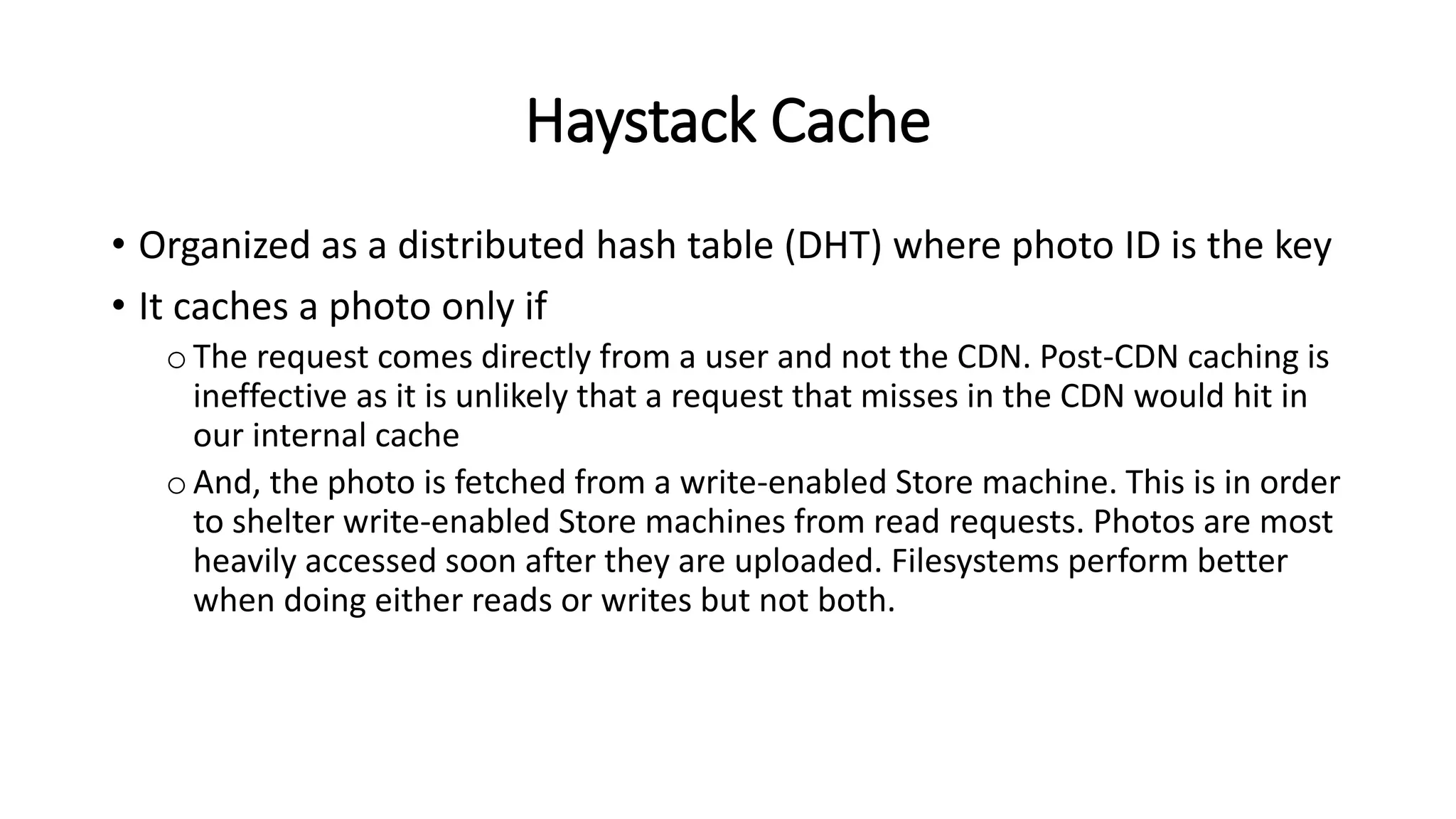

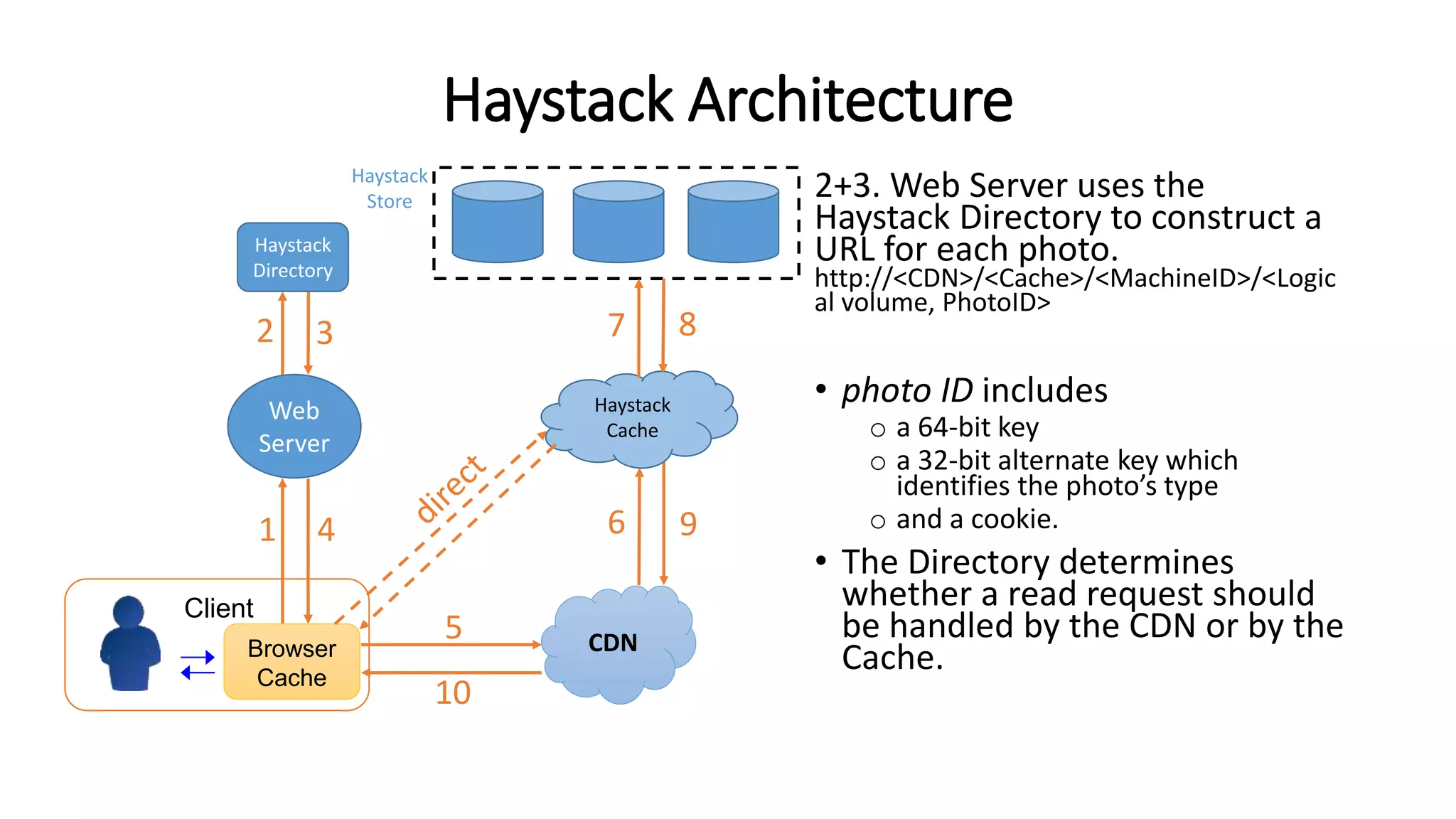

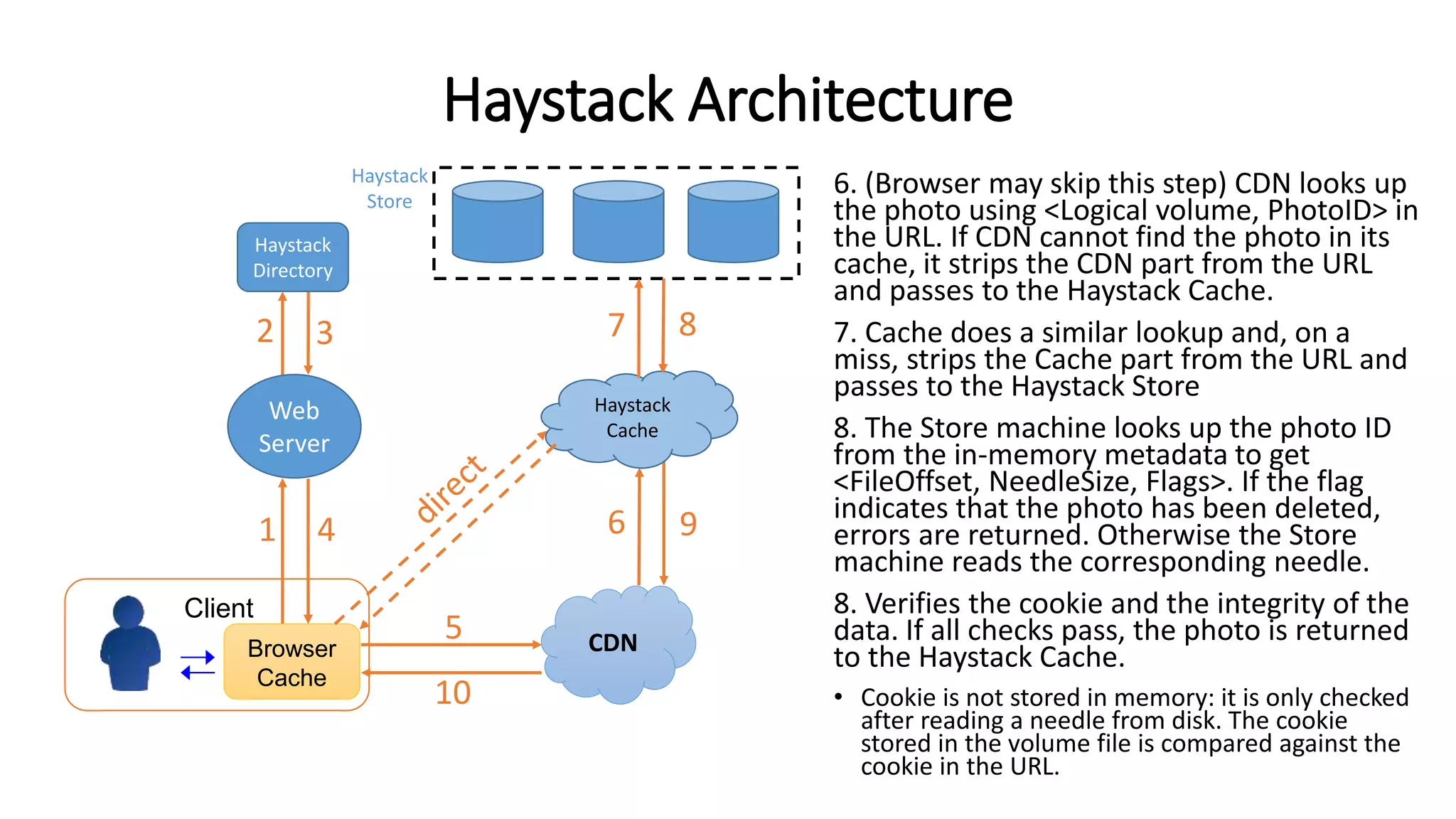

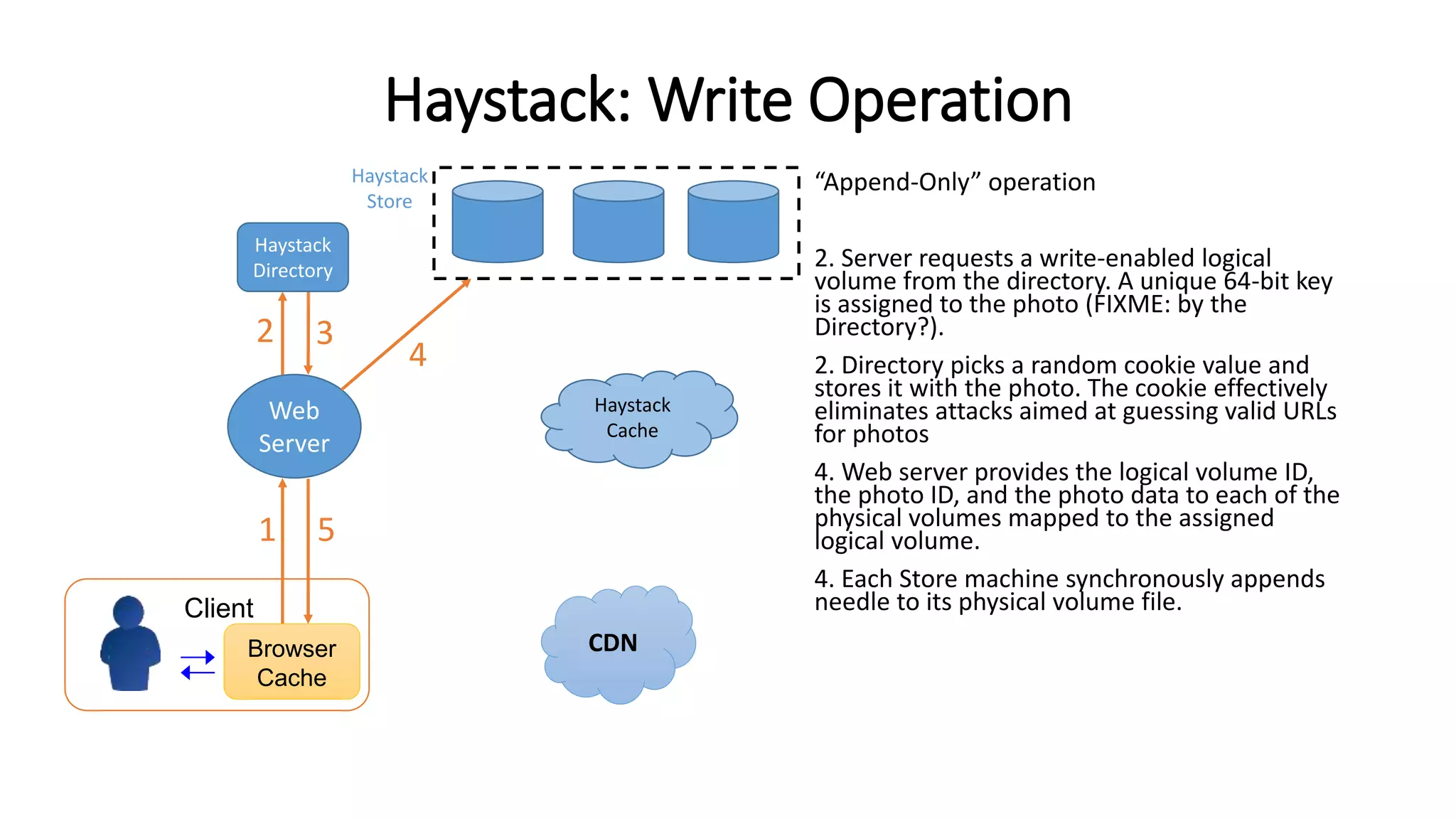

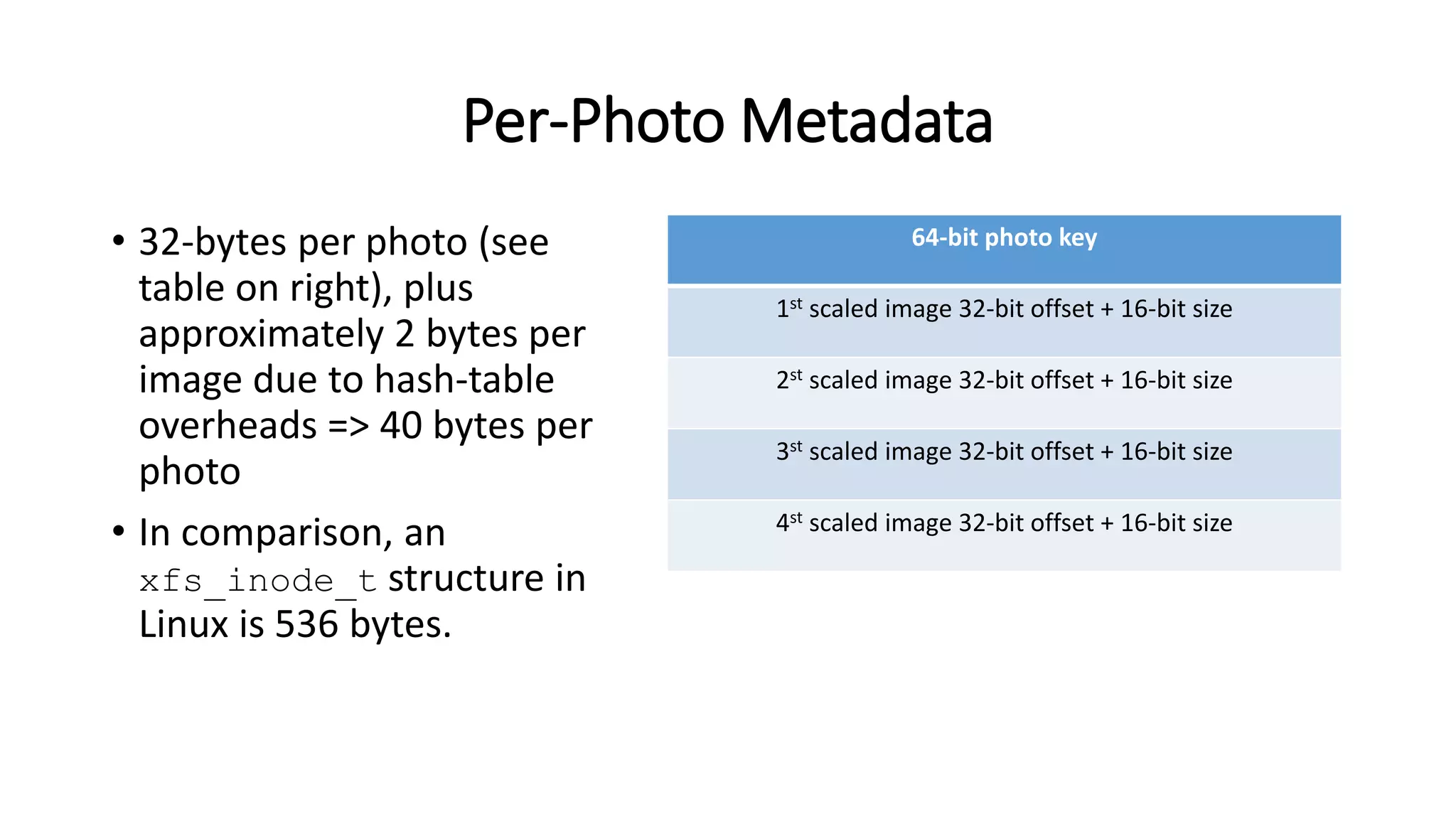

The document summarizes Facebook's Haystack photo storage system and contrasts its architecture and functionality with Google's GFS. Haystack packs many photos into large logical volumes and keeps their metadata compact, avoiding the per-file metadata overhead of storing each photo as a separate file. This suits Facebook's read-heavy photo workload, in which photos are written once, read often, and not modified afterwards. The key components are the Haystack Store, Directory, and Cache, which together provide fast, redundant photo storage and retrieval.
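The following is a minimal sketch of how the three components might fit together, not Facebook's implementation. All class and function names (`Volume`, `Directory`, `upload`, `fetch`) are hypothetical, the record layout is an assumption (a real record would carry more fields, such as checksums and flags), and replication is left out. It shows only the core idea: photos are appended to one large volume, and a small in-memory index replaces per-file metadata.

```python
import io
import struct


class Volume:
    """Append-only volume holding many photos in one file-like object (hypothetical layout)."""

    # Assumed record header: 8-byte photo id, 4-byte payload size, big-endian.
    HEADER = struct.Struct(">QI")

    def __init__(self):
        self._data = io.BytesIO()  # stands in for one large on-disk file
        self._index = {}           # photo_id -> (payload offset, payload size)

    def append(self, photo_id, payload):
        # Photos are only ever appended; existing bytes are never rewritten.
        offset = self._data.seek(0, io.SEEK_END)
        self._data.write(self.HEADER.pack(photo_id, len(payload)))
        self._data.write(payload)
        self._index[photo_id] = (offset + self.HEADER.size, len(payload))

    def read(self, photo_id):
        # One in-memory lookup, then one seek and one read.
        offset, size = self._index[photo_id]
        self._data.seek(offset)
        return self._data.read(size)


class Directory:
    """Maps each photo id to the volume that stores it."""

    def __init__(self, volume_ids):
        self._volume_ids = list(volume_ids)
        self._photo_to_volume = {}

    def assign(self, photo_id):
        # Naive placement policy, chosen only for illustration.
        volume_id = self._volume_ids[photo_id % len(self._volume_ids)]
        self._photo_to_volume[photo_id] = volume_id
        return volume_id

    def lookup(self, photo_id):
        return self._photo_to_volume[photo_id]


def upload(directory, store, photo_id, payload):
    """Write path: the Directory picks a volume, the Store appends the photo."""
    store[directory.assign(photo_id)].append(photo_id, payload)


def fetch(directory, store, cache, photo_id):
    """Read path: try the Cache first, then fall back to the Store."""
    if photo_id not in cache:
        cache[photo_id] = store[directory.lookup(photo_id)].read(photo_id)
    return cache[photo_id]


store = {0: Volume(), 1: Volume()}   # the Store: a set of volumes
directory = Directory(store.keys())
cache = {}                           # the Cache: a plain dict in this sketch

upload(directory, store, 7, b"cat-photo-bytes")
upload(directory, store, 8, b"dog-photo-bytes")
first = fetch(directory, store, cache, 7)
second = fetch(directory, store, cache, 8)
```

Because the index stays in memory and each volume is a single large file, a read costs one dictionary lookup plus one seek, rather than the several metadata lookups a file-per-photo layout would need.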