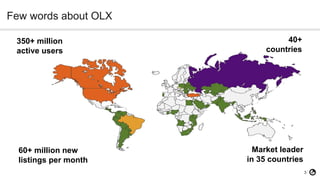

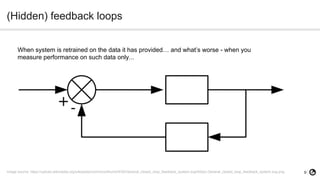

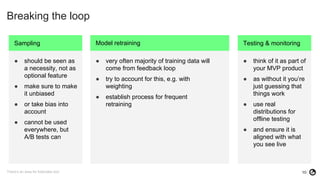

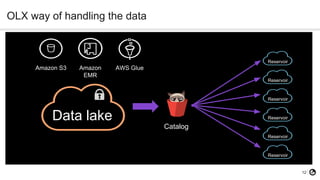

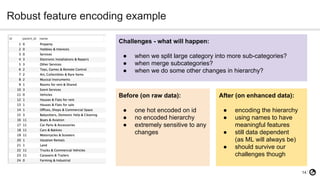

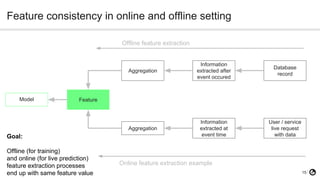

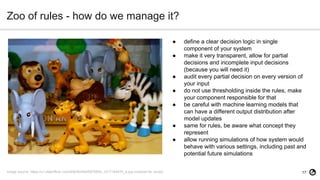

The document discusses technical debt in machine learning (ML). It explains what makes this kind of debt distinct and which factors cause it to grow, such as a lack of testing and inadequate monitoring. It emphasizes managing technical debt through frequent retraining, feature consistency, and clear decision-making processes. The author, a senior data scientist at OLX Group, highlights how quickly technical debt accumulates in ML systems because of challenges specific to model performance and data dependencies.