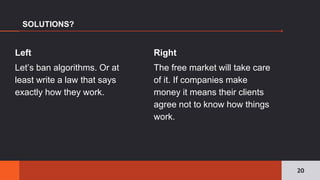

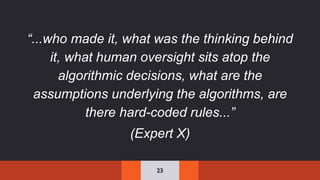

The document discusses the pervasive and often opaque nature of technology and algorithms, highlighting their potential to reinforce societal biases and create information security risks. It emphasizes the need for transparency in algorithmic decision-making, including insight into how algorithms function, the degree of human oversight, and the data sources they rely on. It explores several proposed solutions for enhancing transparency and suggests legislative action to clarify how algorithms operate and to ensure public accountability.

![Slide 17: “Man is a hackable animal [..] Computers are hacked through pre-existing faulty code lines. Humans are hacked through pre-existing fears, hatreds, biases and cravings” Yuval Harari](https://image.slidesharecdn.com/algorithmicandtechnologicaltransparency-181103204404/85/Algorithmic-and-technological-transparency-17-320.jpg)