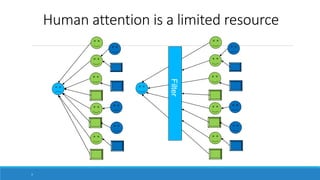

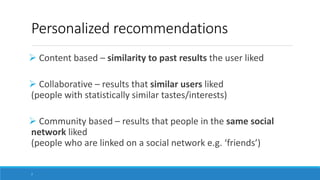

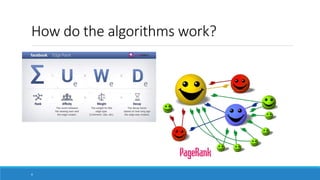

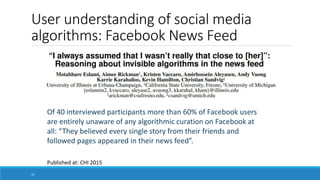

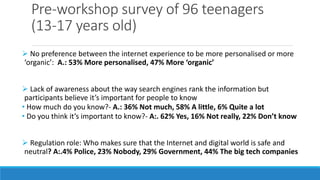

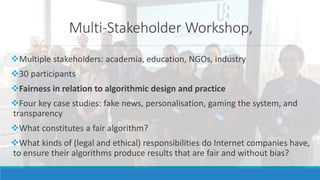

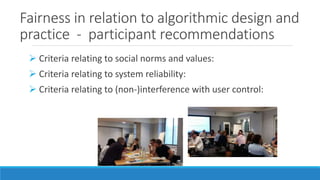

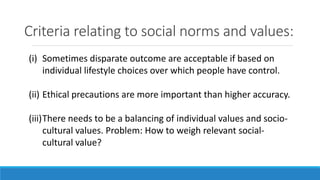

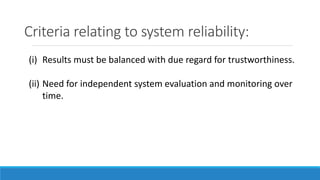

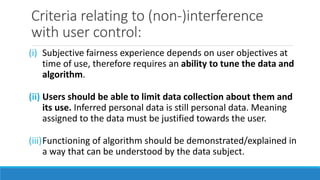

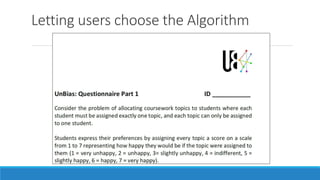

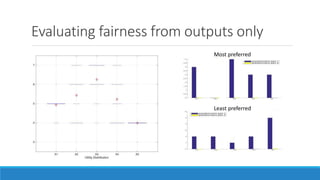

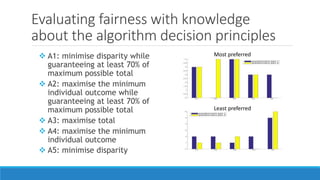

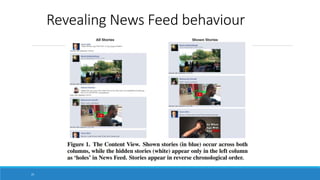

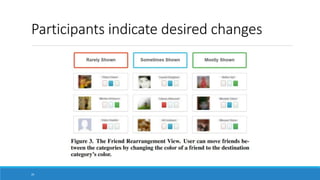

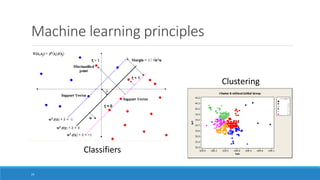

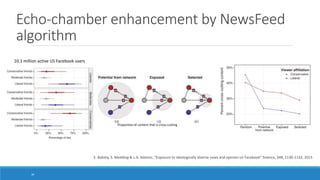

The document examines human agency in algorithmic systems, focusing on how users perceive and control social media algorithms such as those used by Facebook. It highlights a lack of user awareness of algorithmic curation and presents recommendations for building fair algorithms: balancing individual and cultural values, ensuring system reliability, and giving users greater control over their data. The findings come from workshops and surveys with a range of stakeholders and emphasize the need for transparency and for giving users meaningful choices in how they navigate algorithmically curated content.