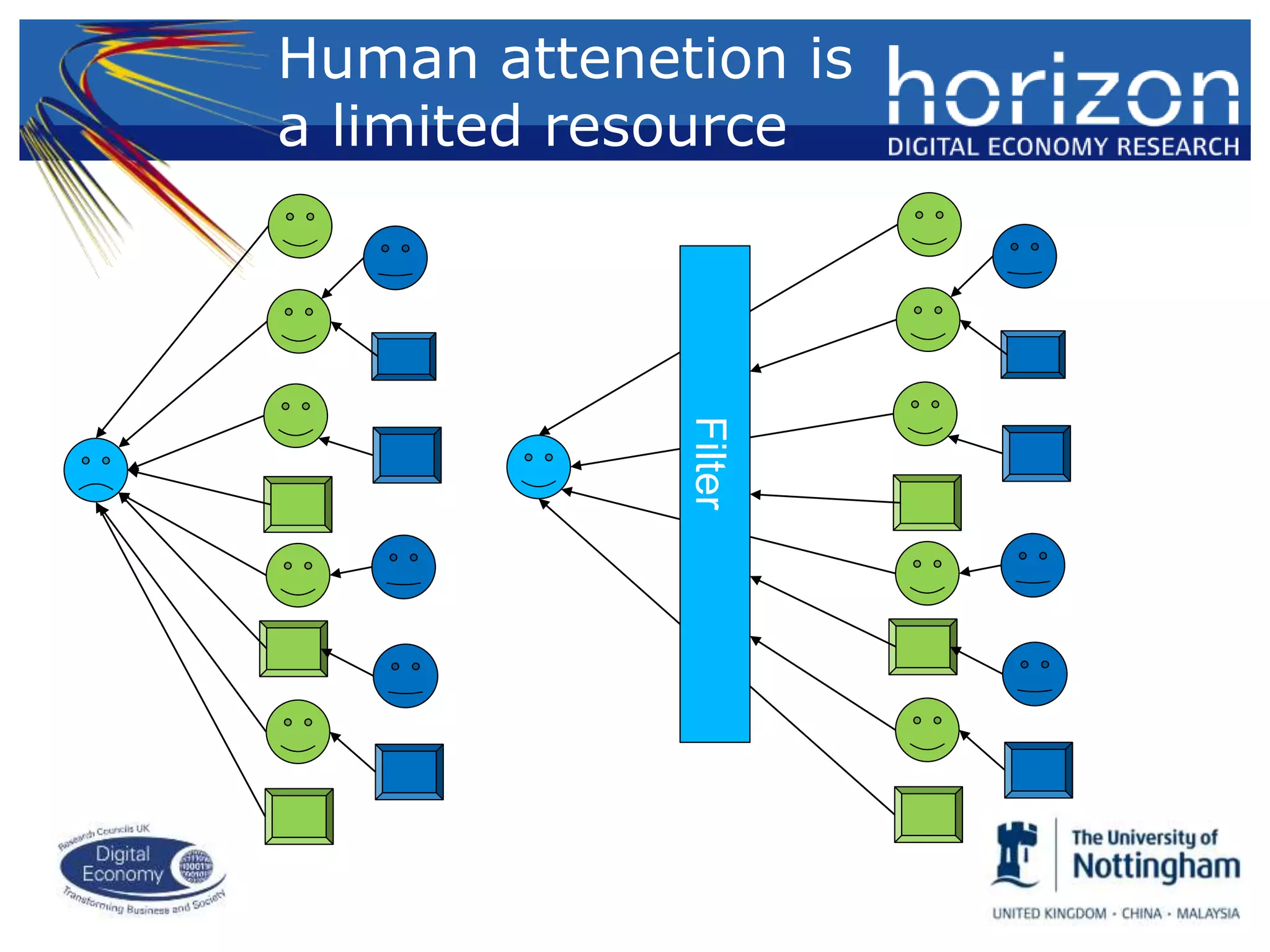

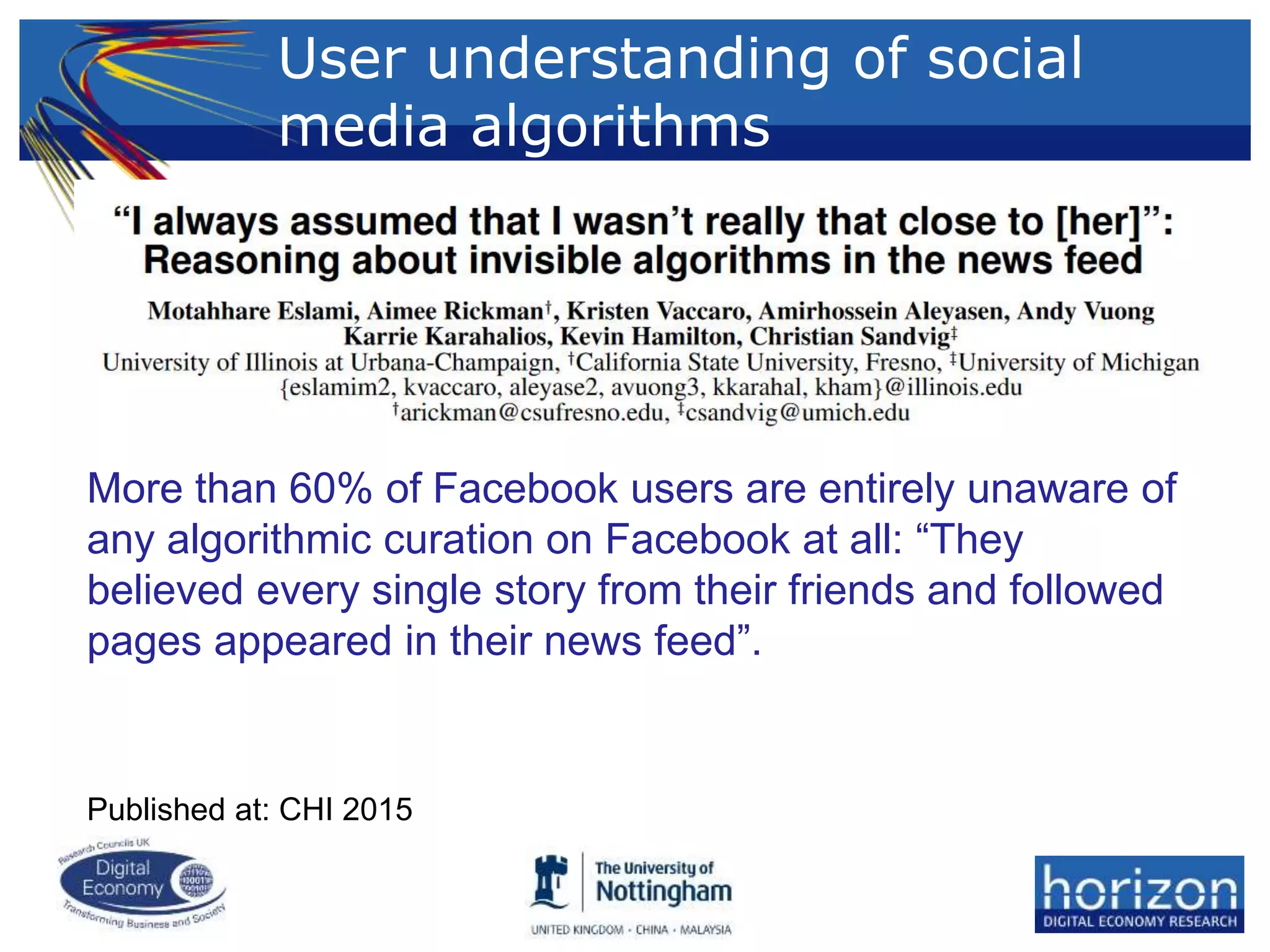

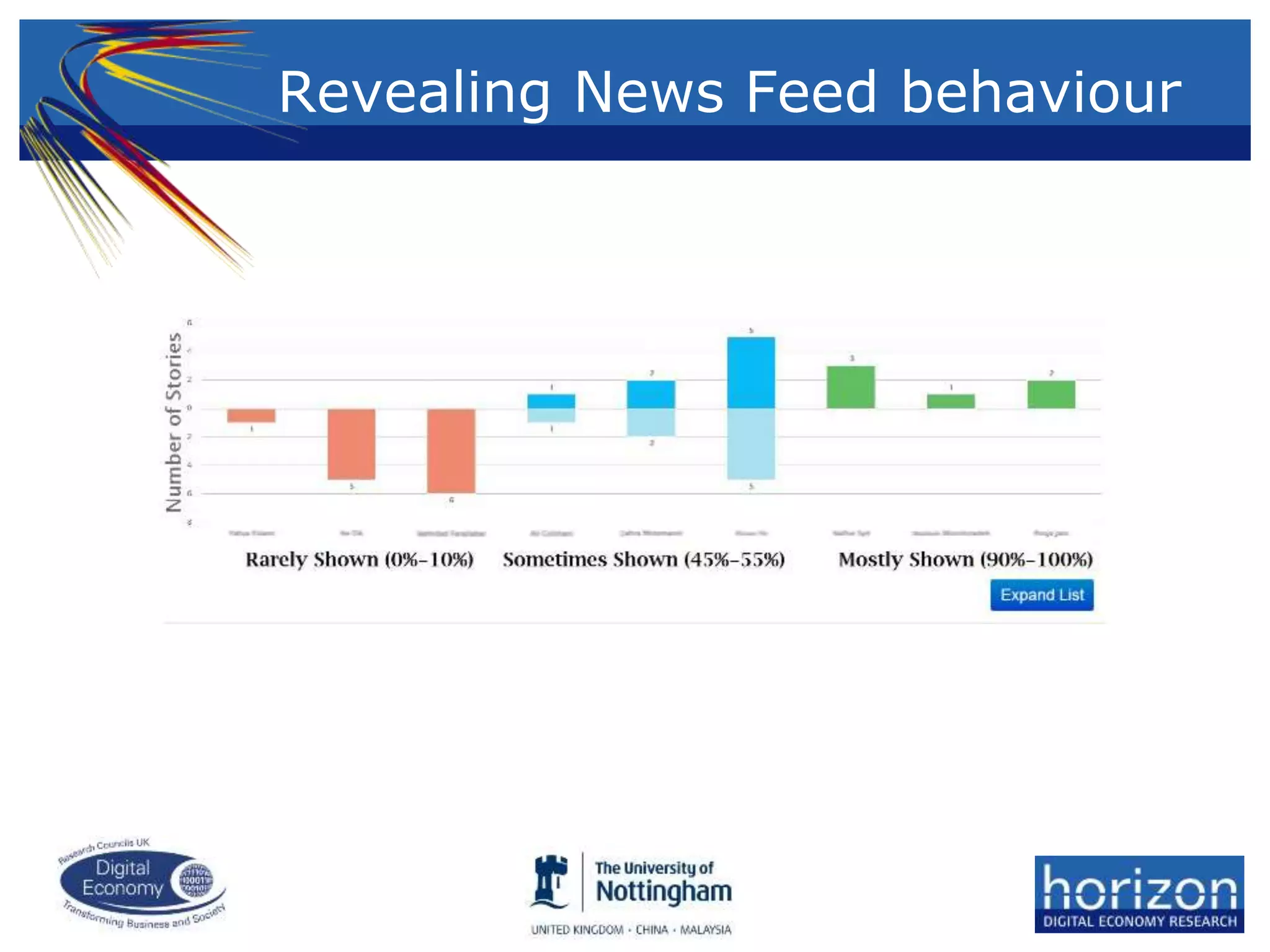

The document discusses user agency in algorithm-mediated social networks. It argues that user attention is a limited resource over which platforms compete, often through personalized recommendations. It highlights that many users are unaware of algorithmic filtering, and it raises the possibility that such systems can be used to manipulate behavior for advertising or political ends. The author proposes a framework for promoting fairness and transparency in algorithmic systems that combines user education, tool development, and policy recommendations.