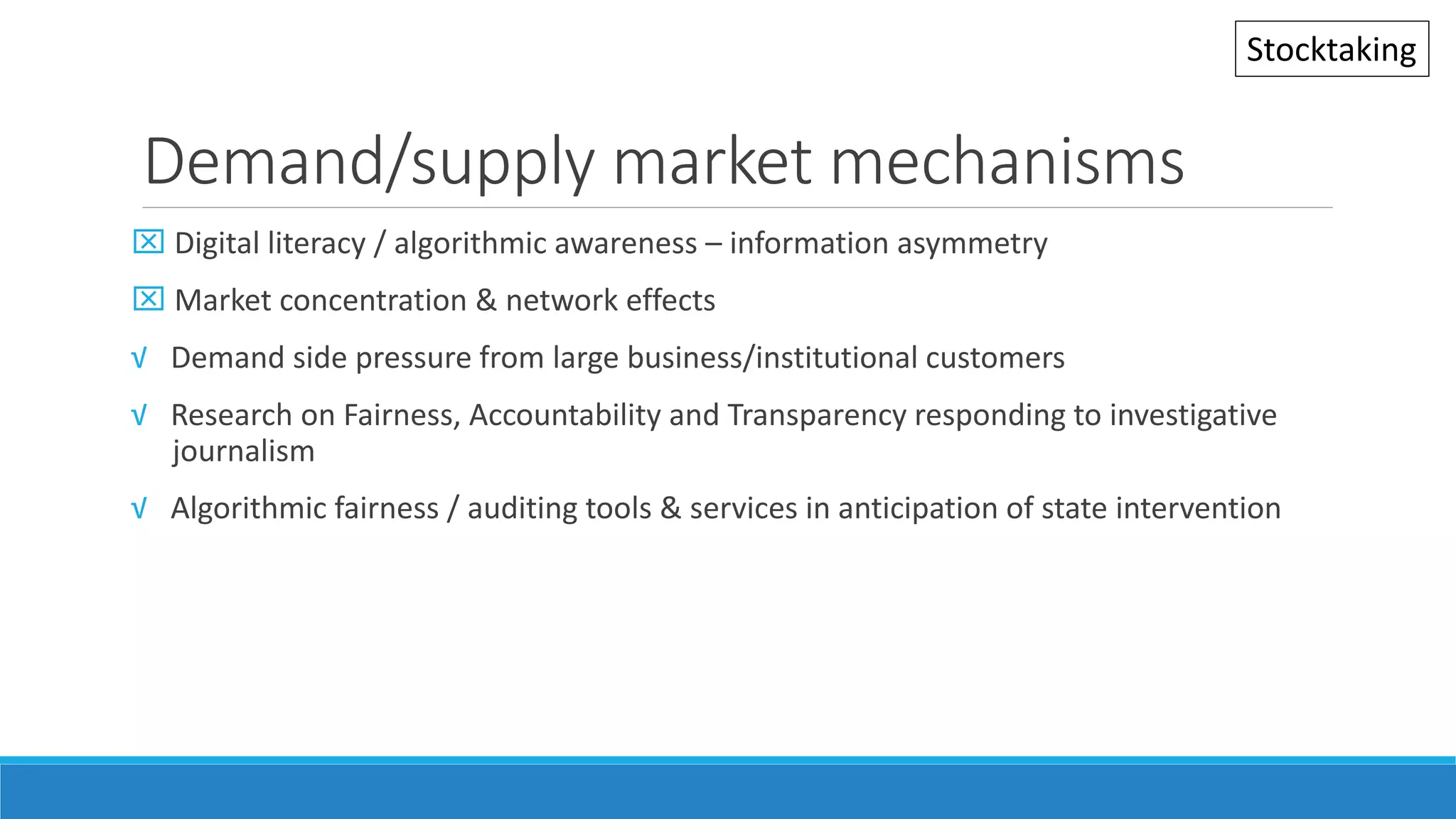

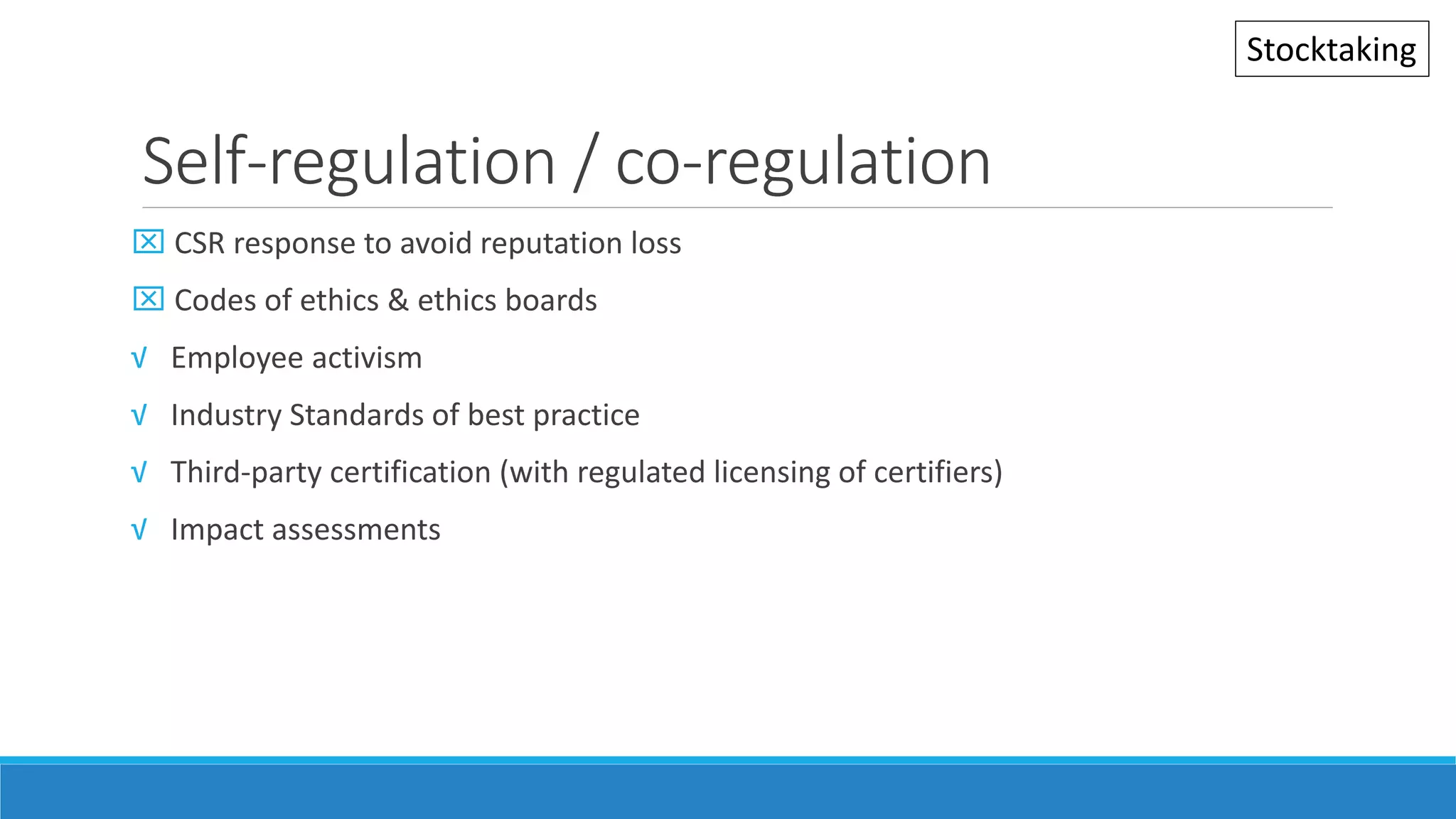

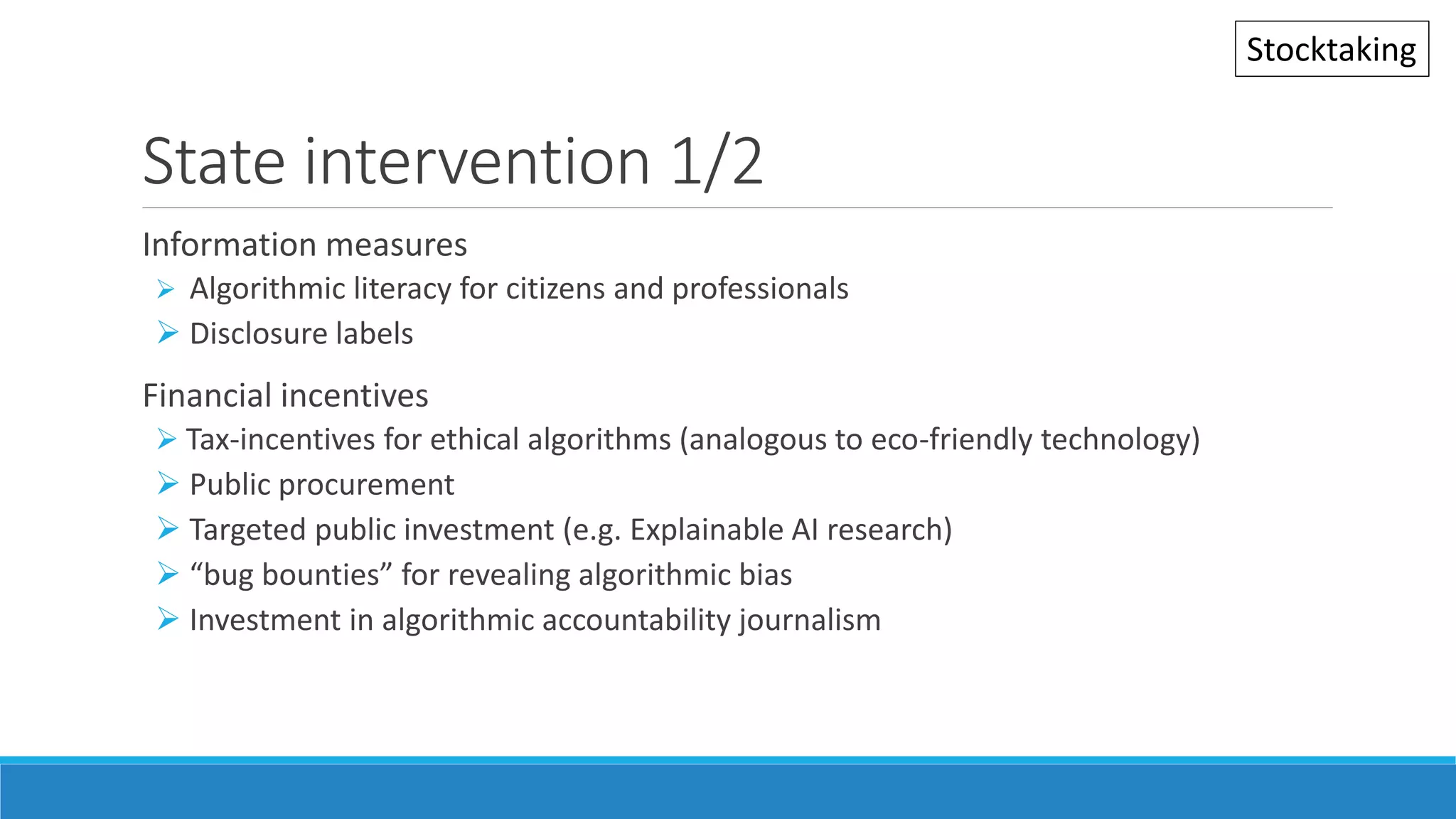

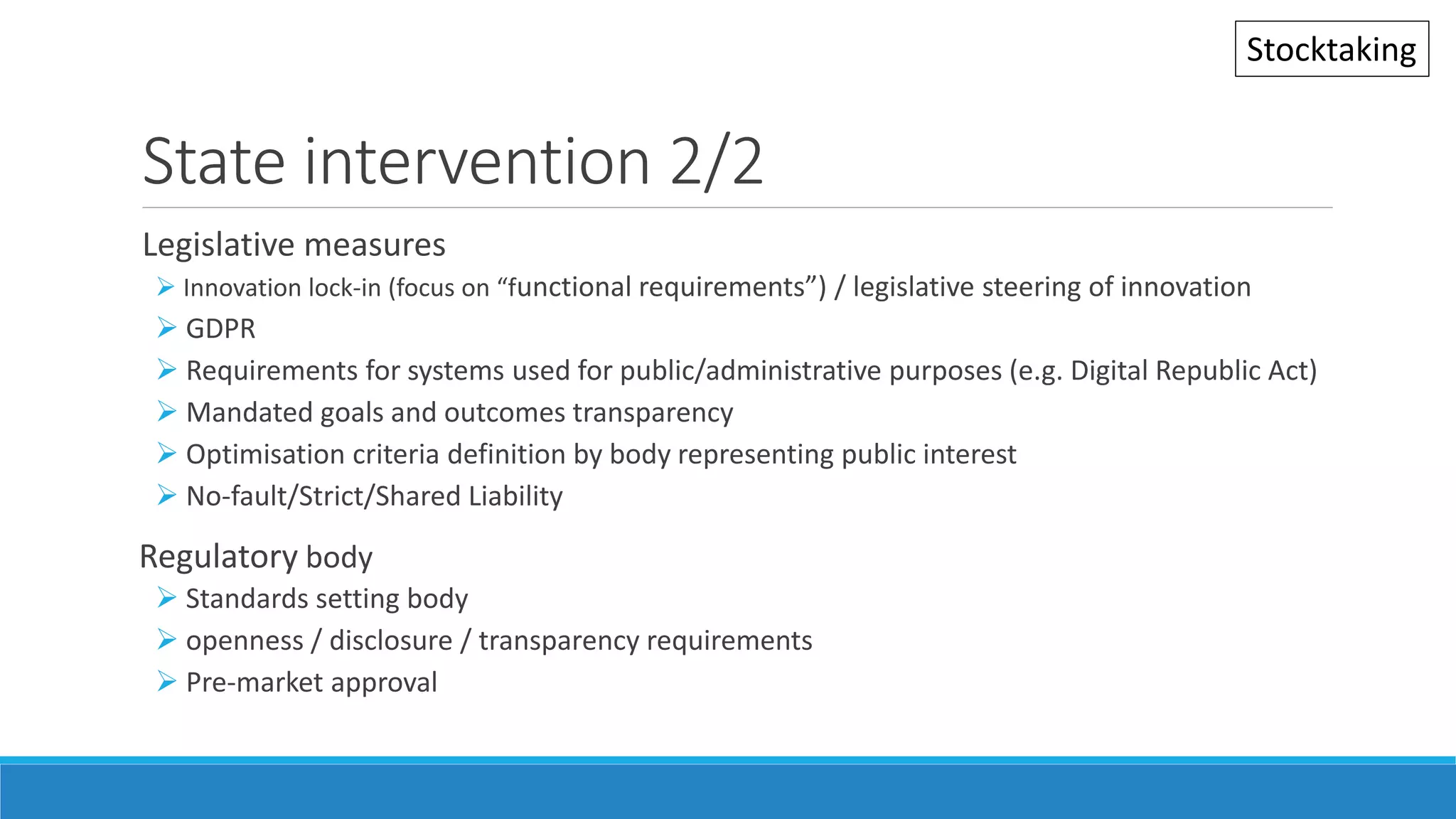

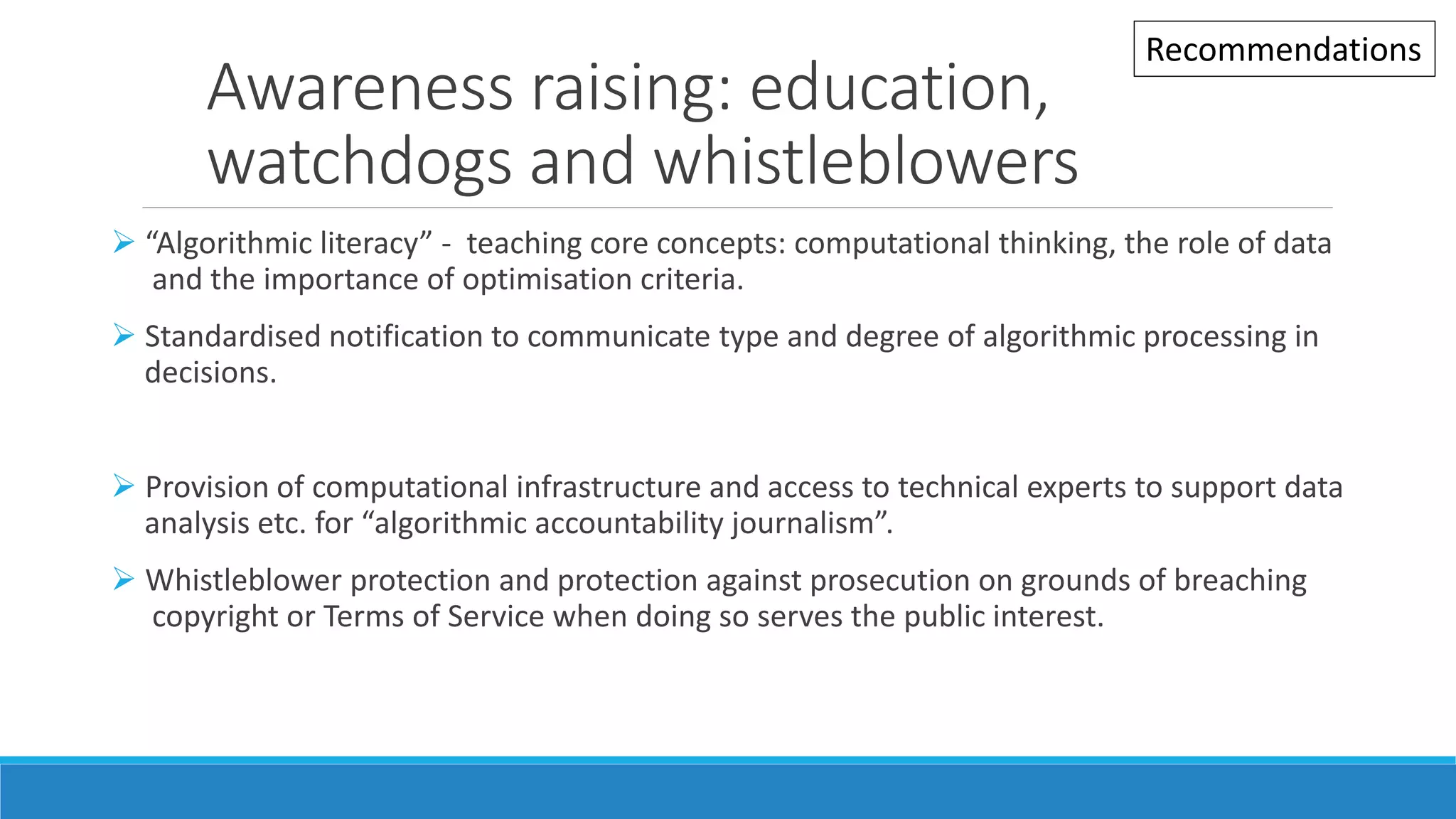

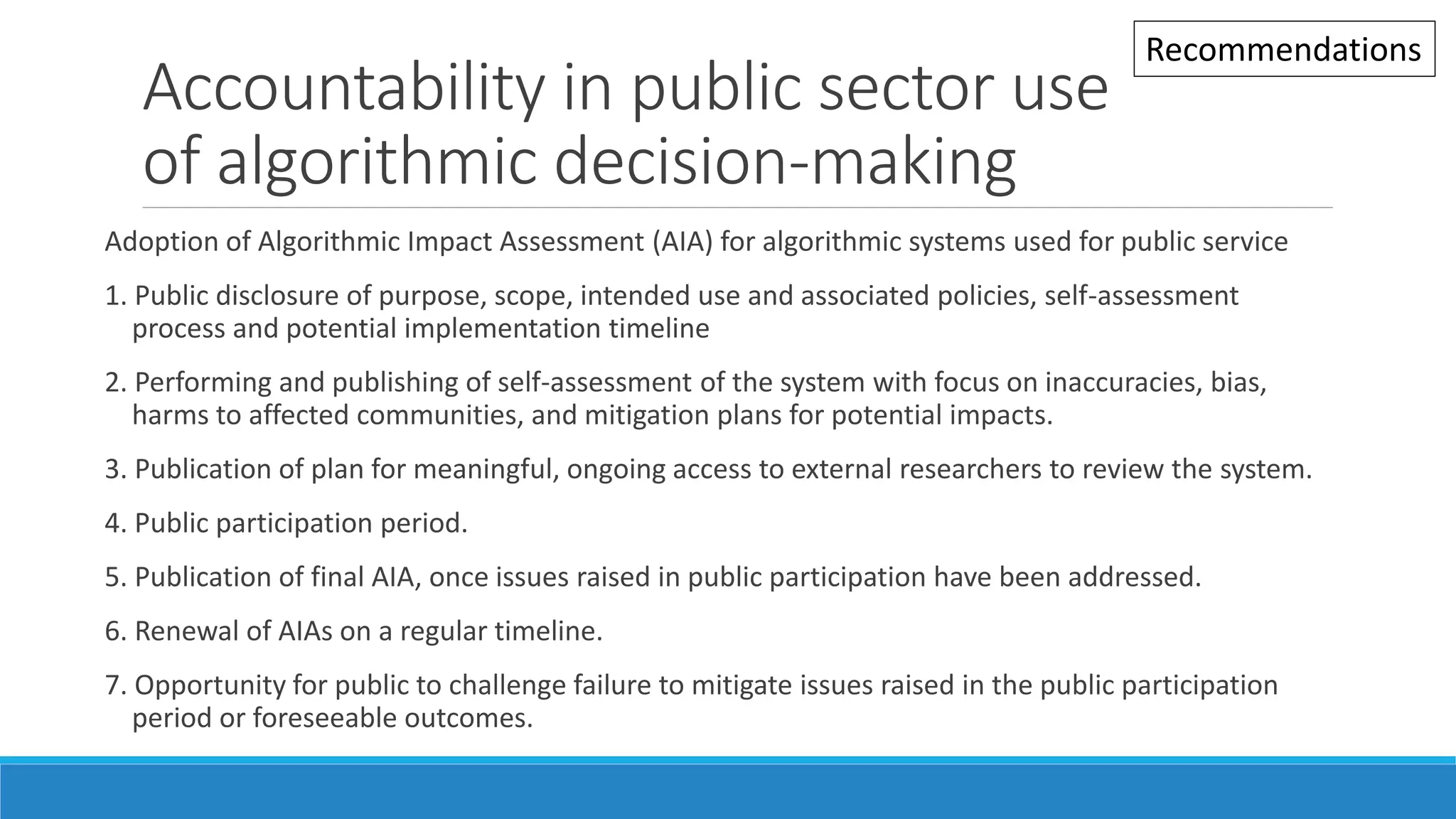

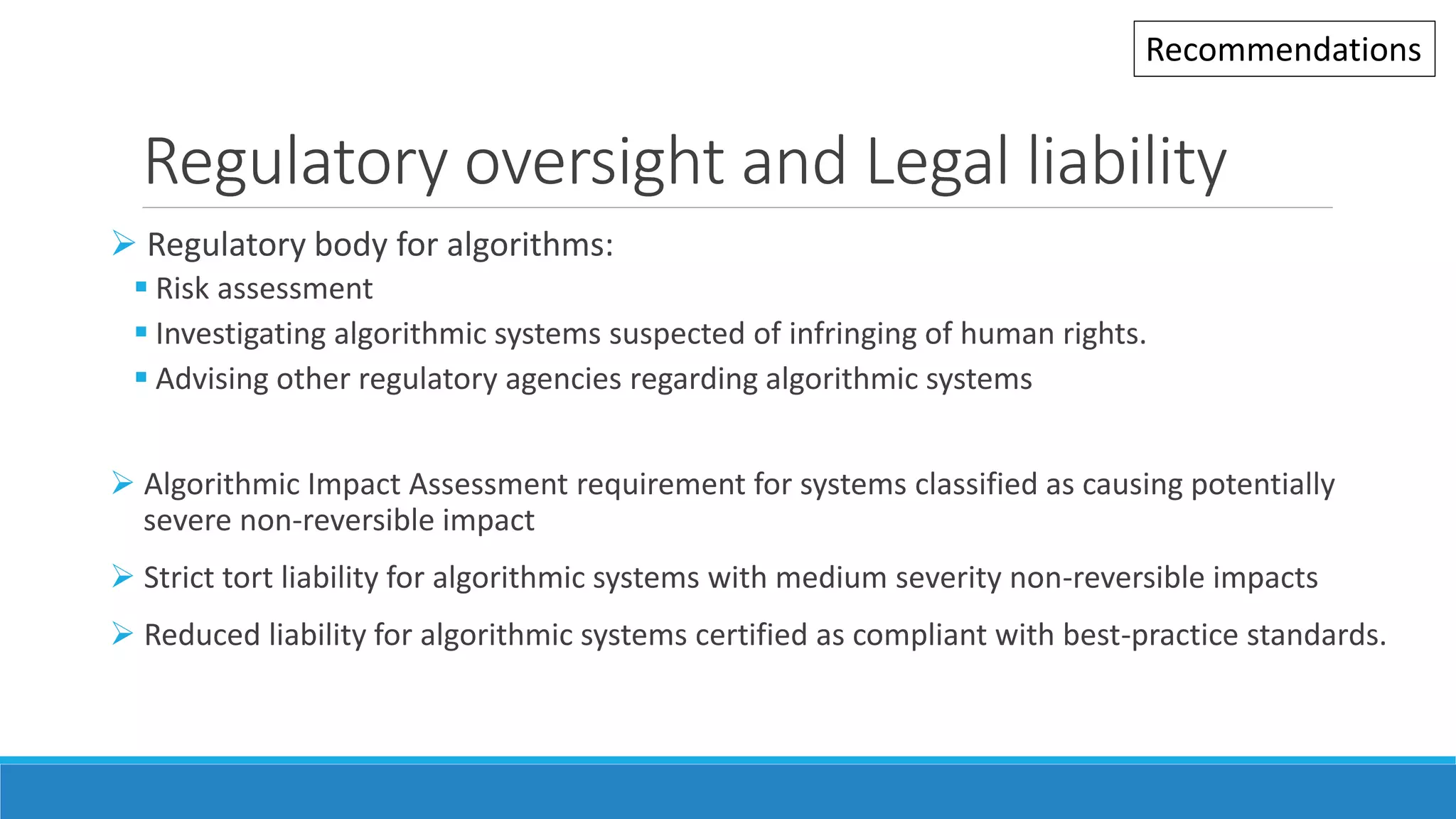

The document outlines a governance framework for algorithmic accountability and transparency. It reviews regulatory successes and failures and focuses on policy recommendations for improving digital literacy and algorithmic awareness. It stresses the need for algorithmic impact assessments wherever the public sector uses algorithmic systems, and it proposes a global algorithm governance forum to share best practices and coordinate policy. Key proposals include regulatory oversight, public participation in reviews of algorithmic systems, and legal liability for harmful algorithmic applications.