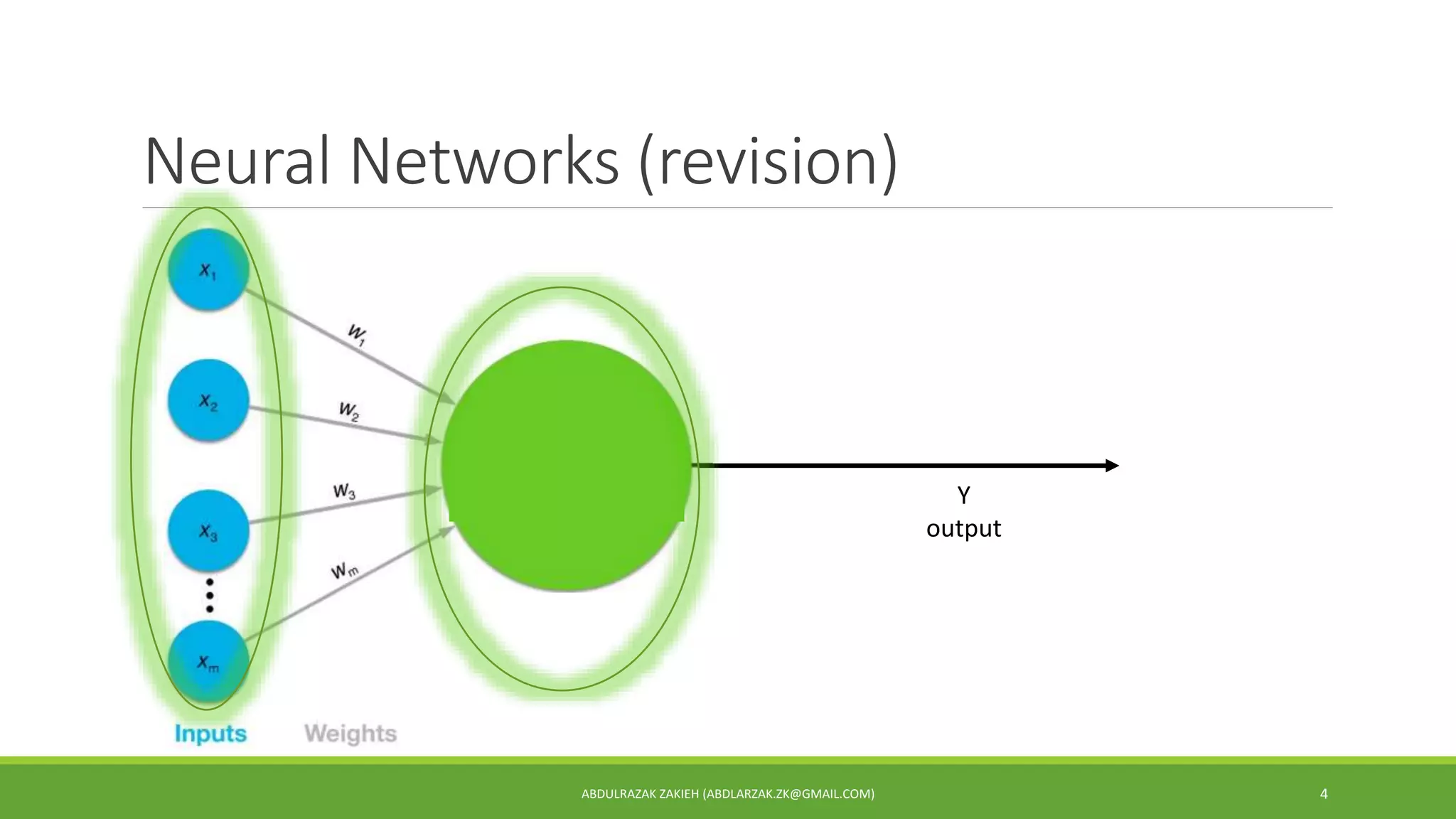

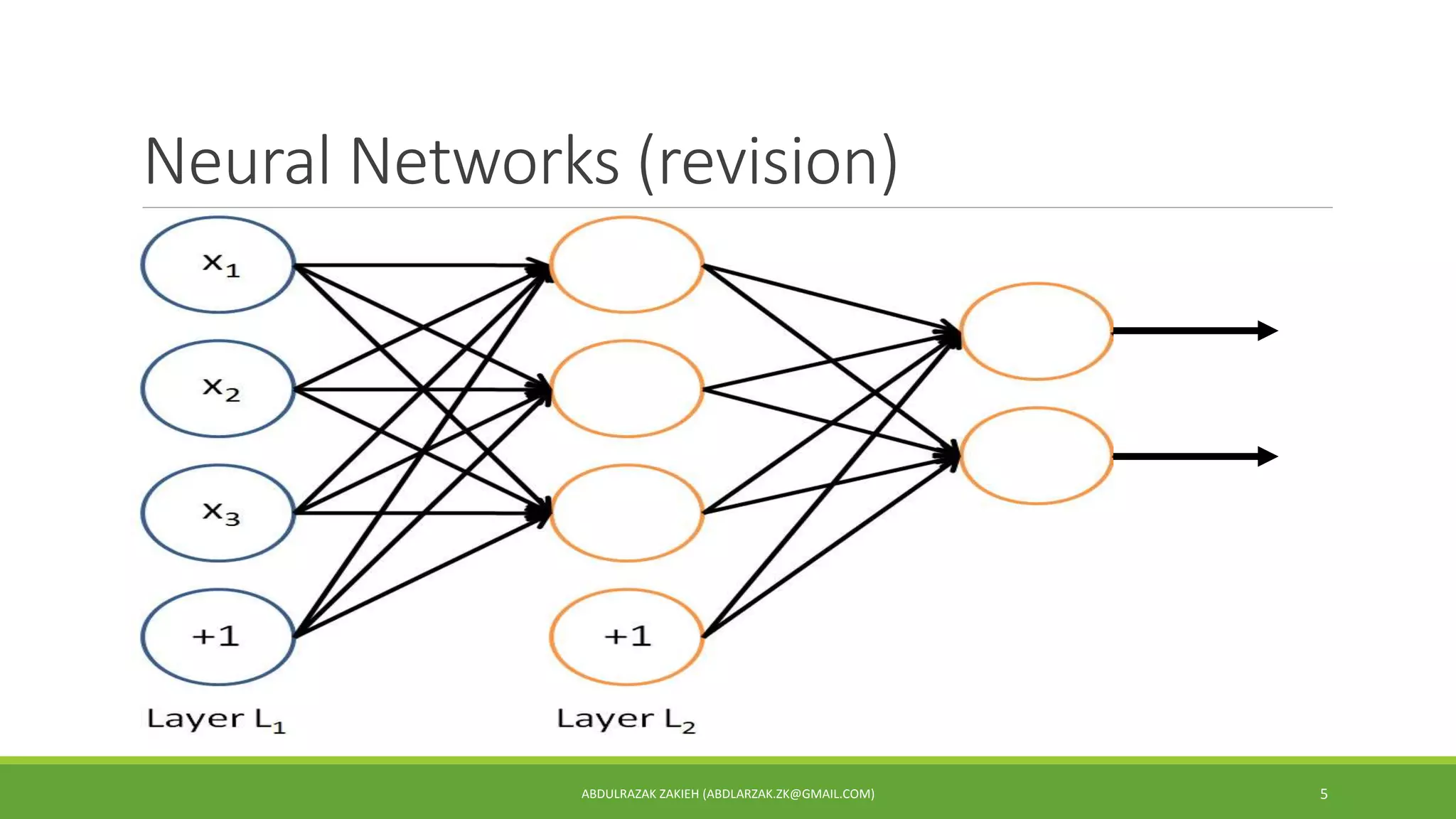

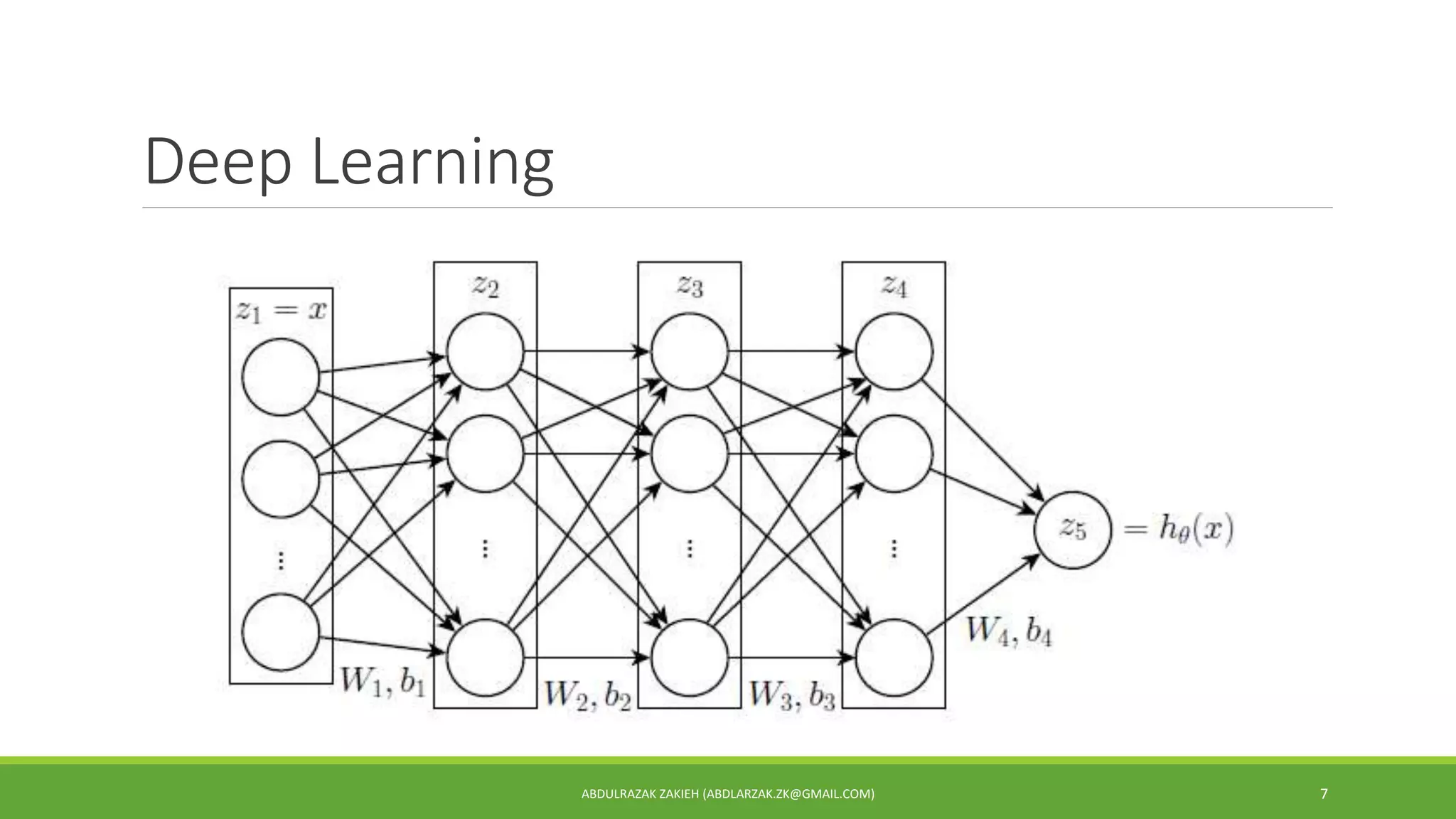

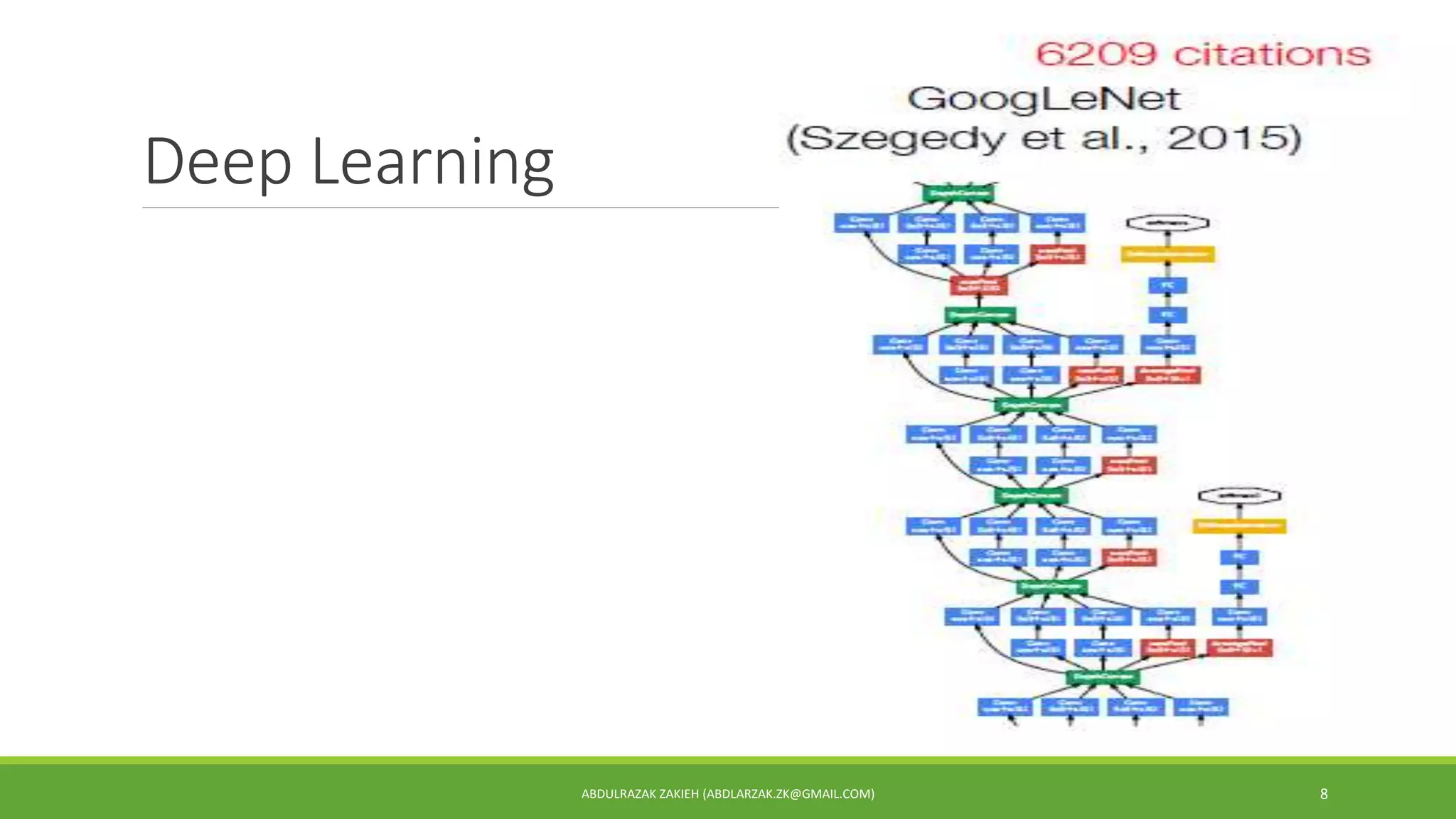

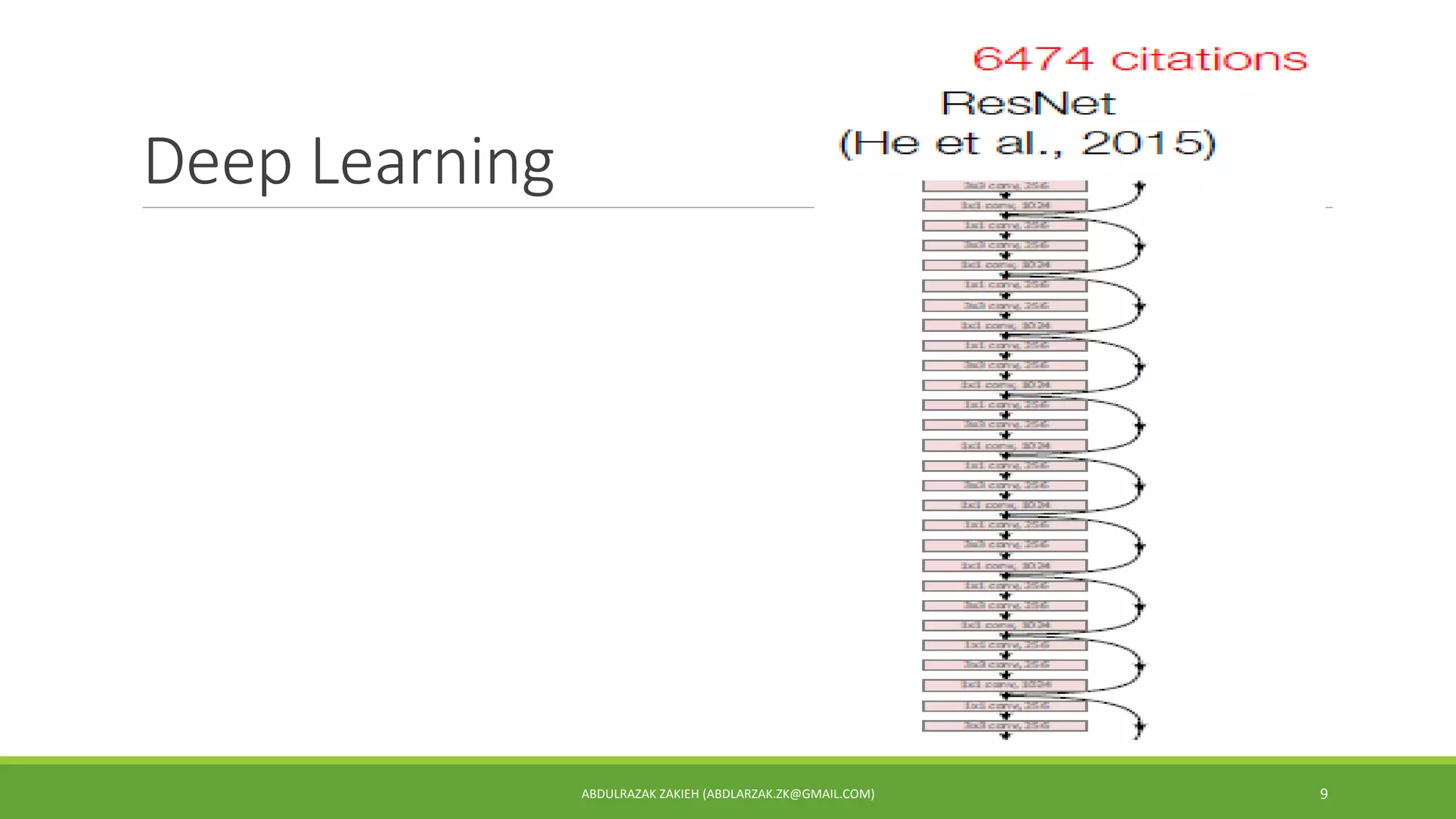

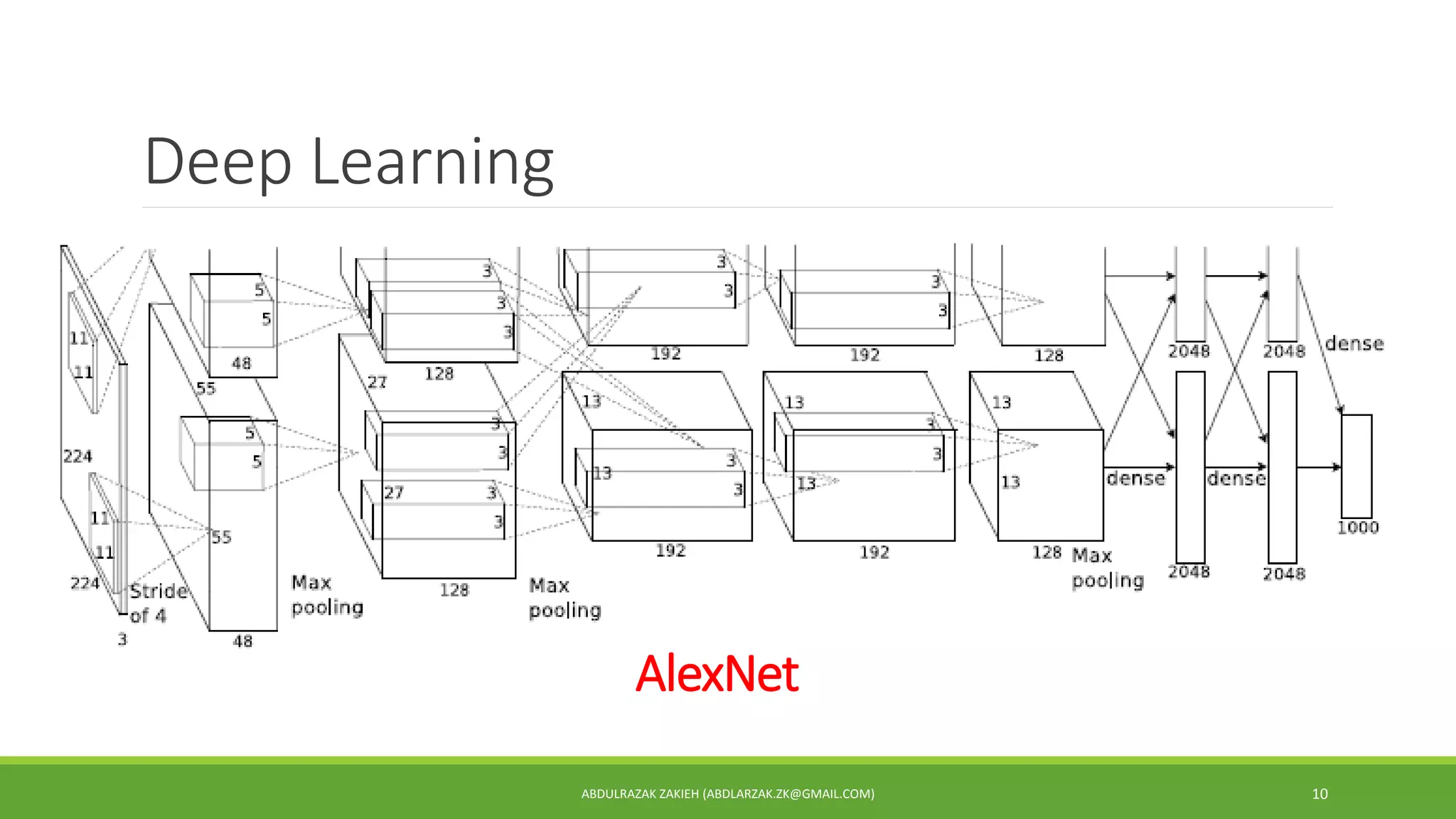

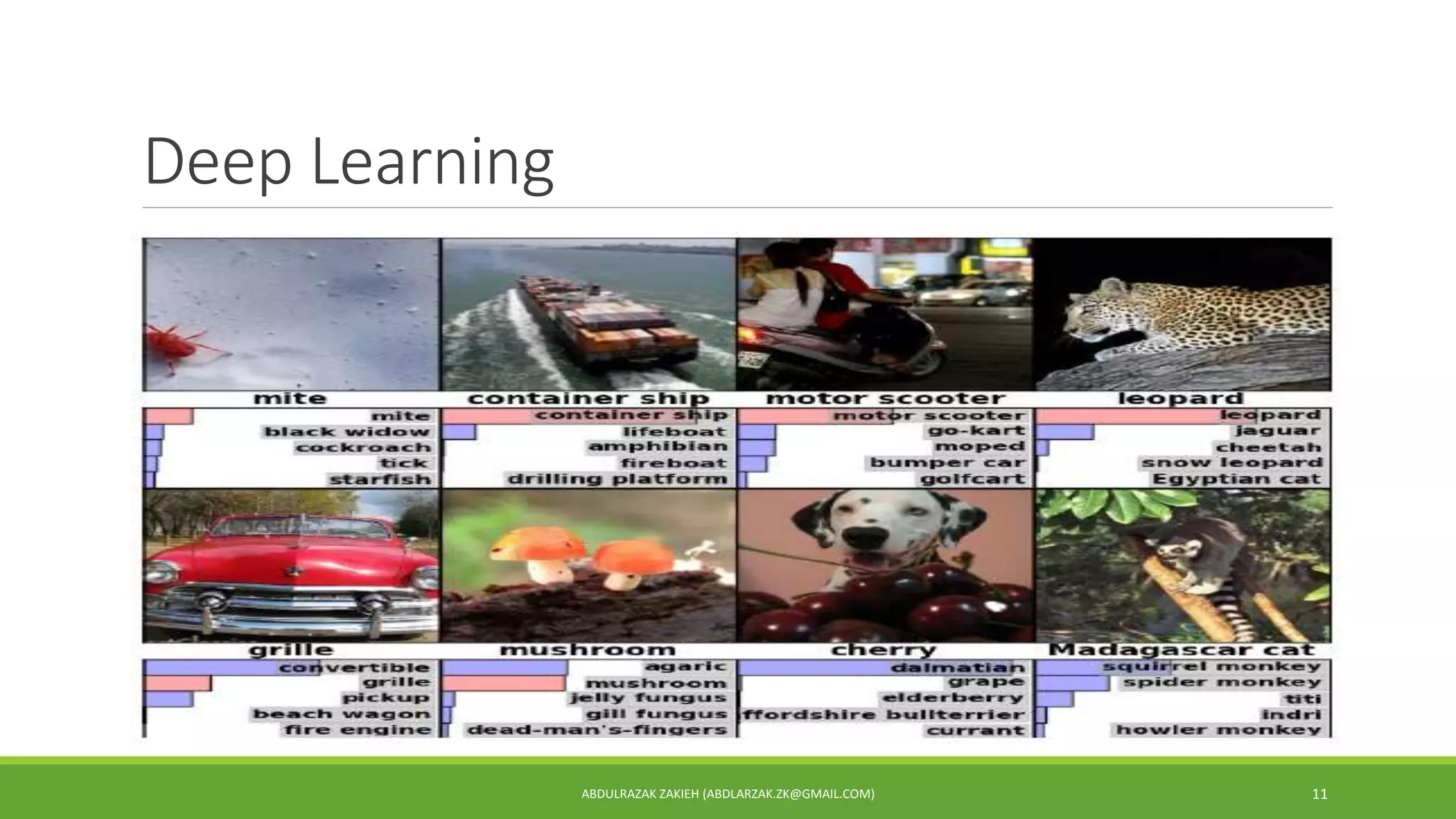

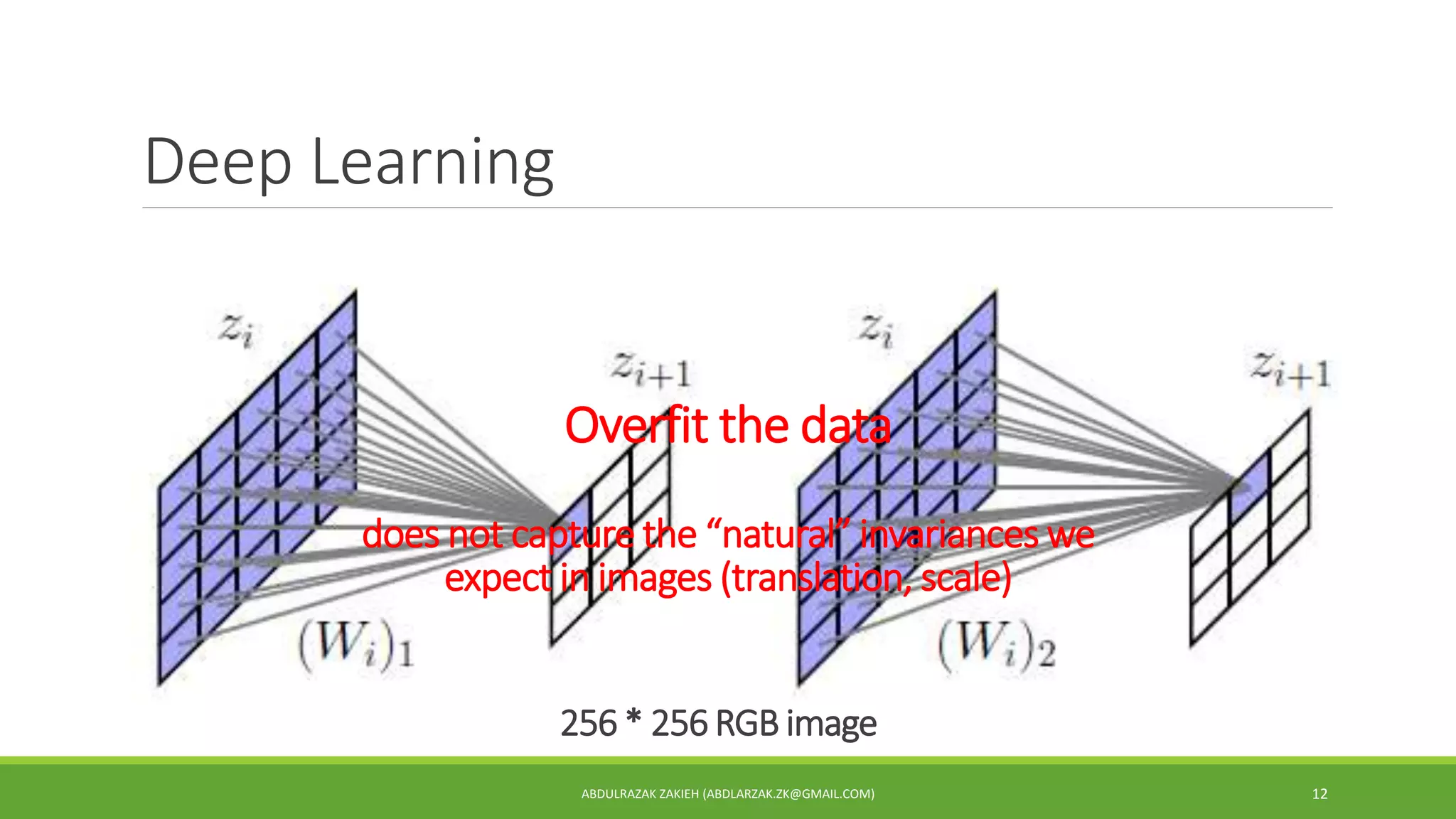

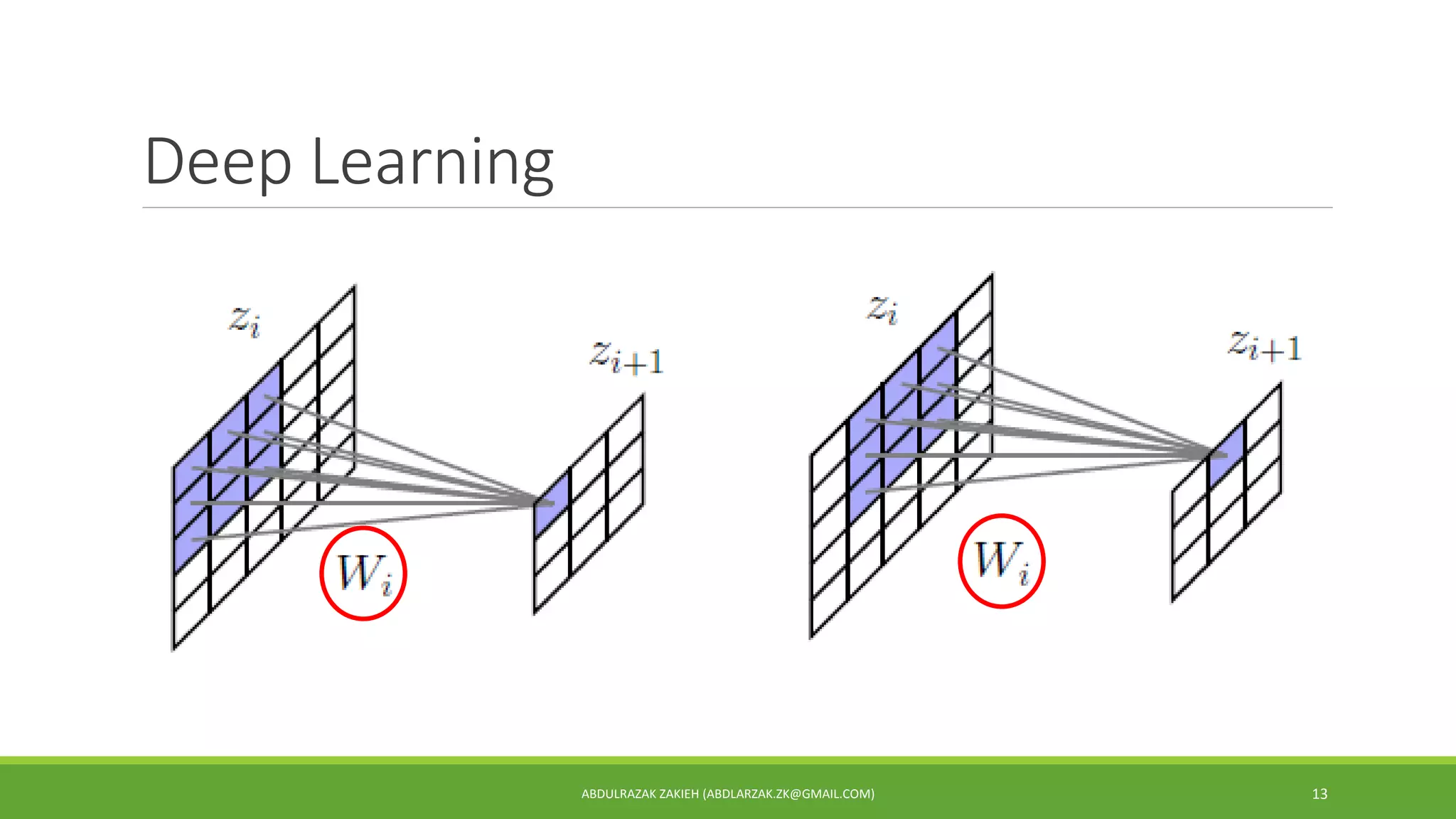

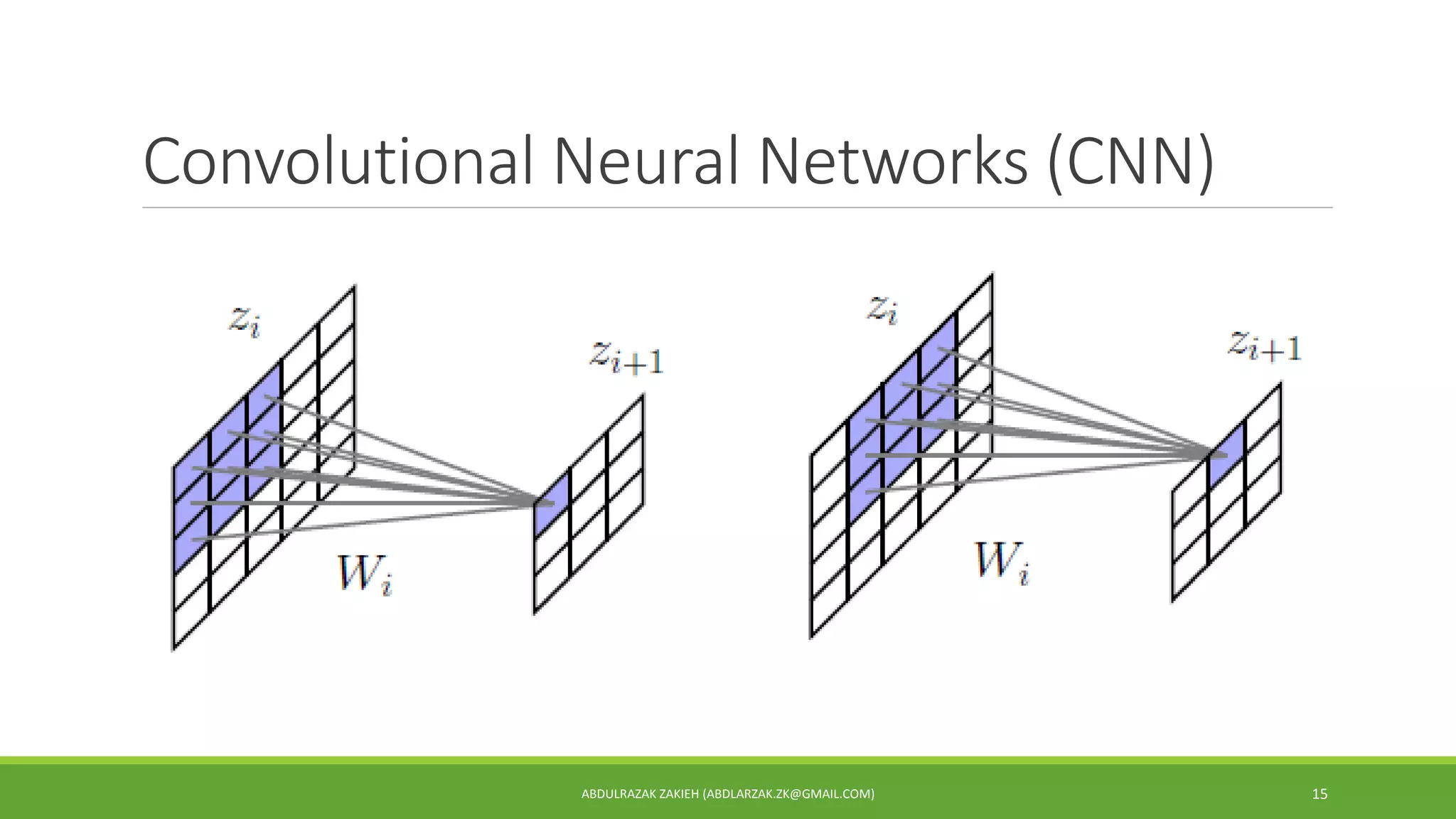

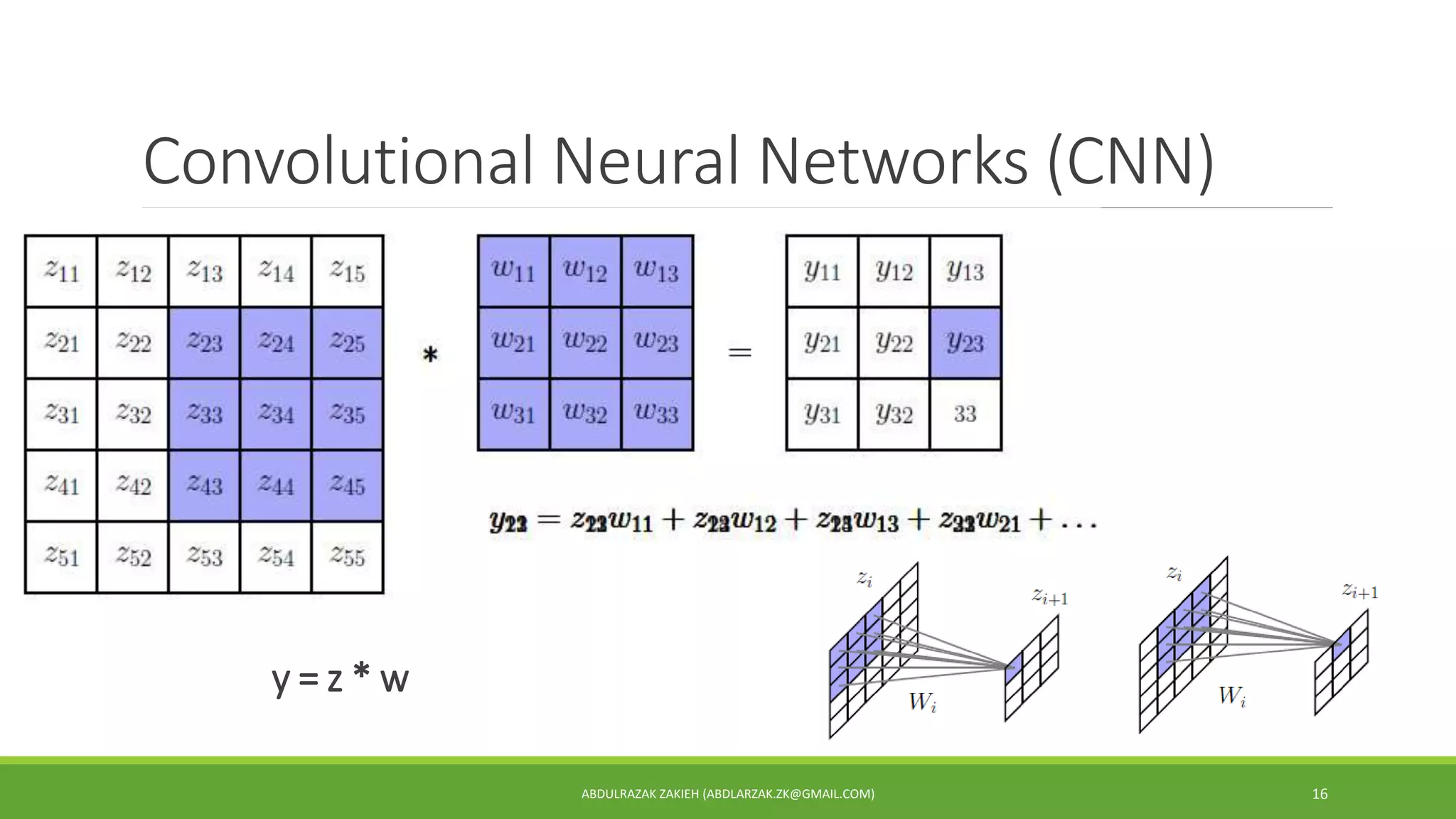

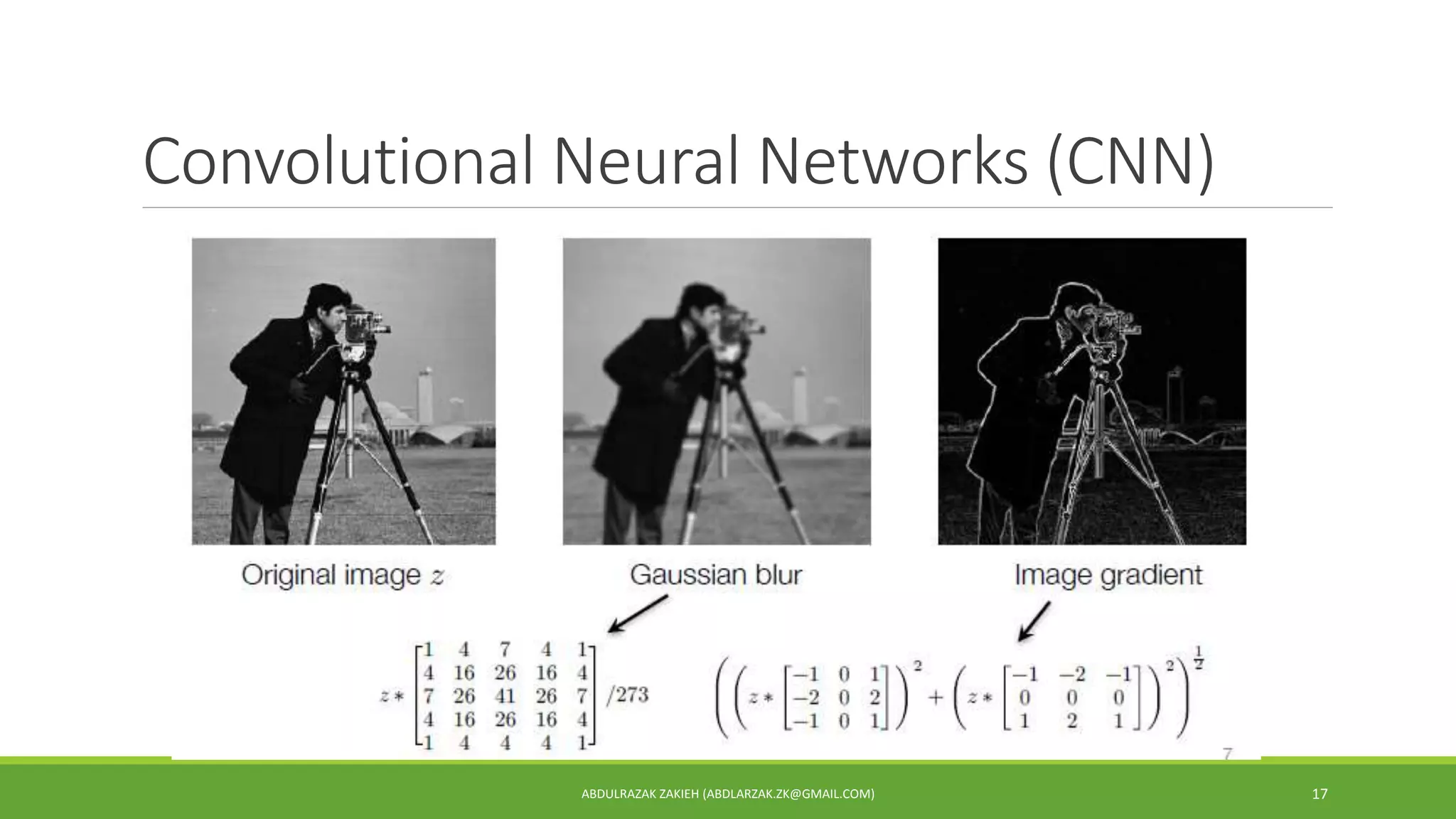

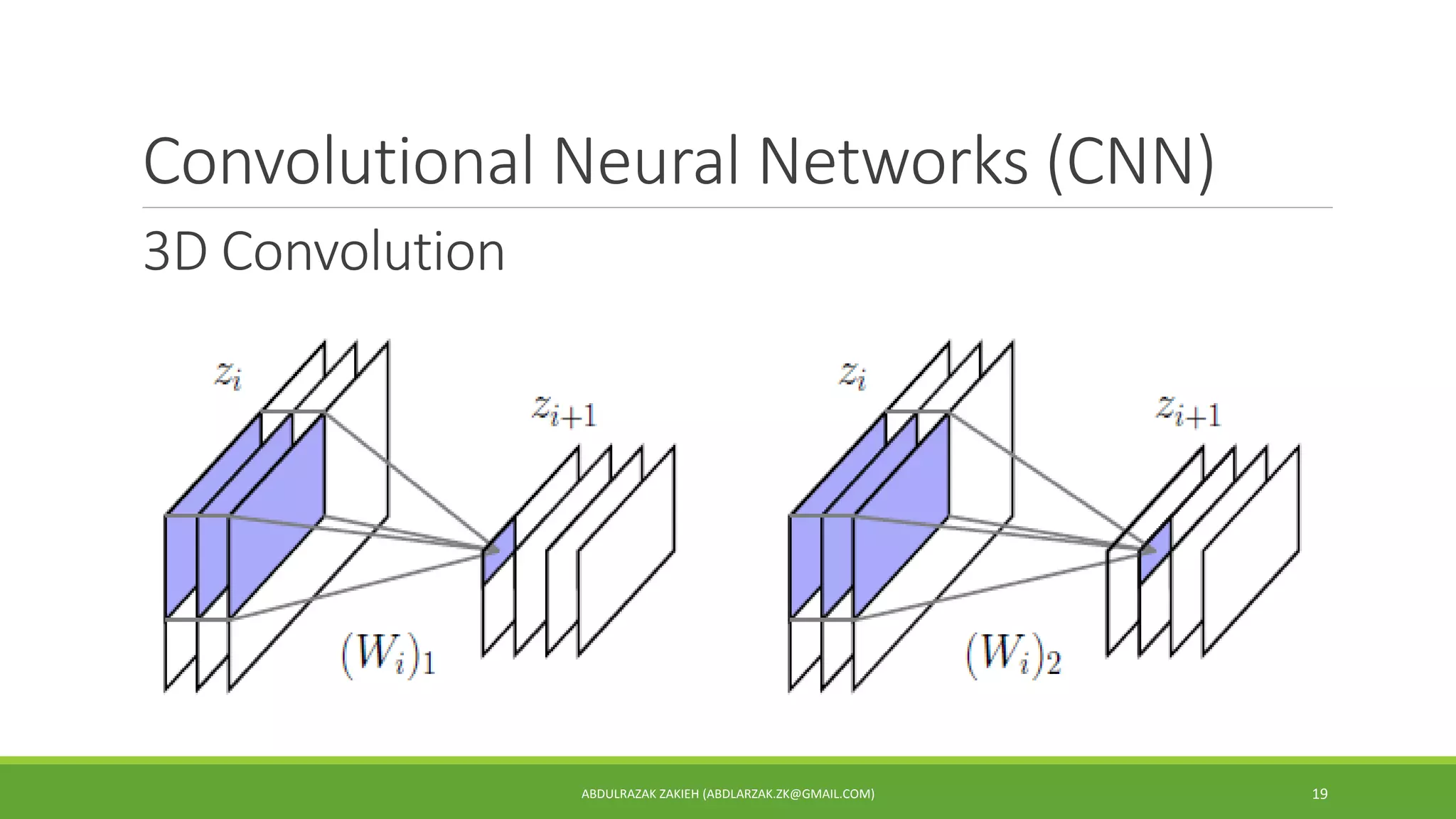

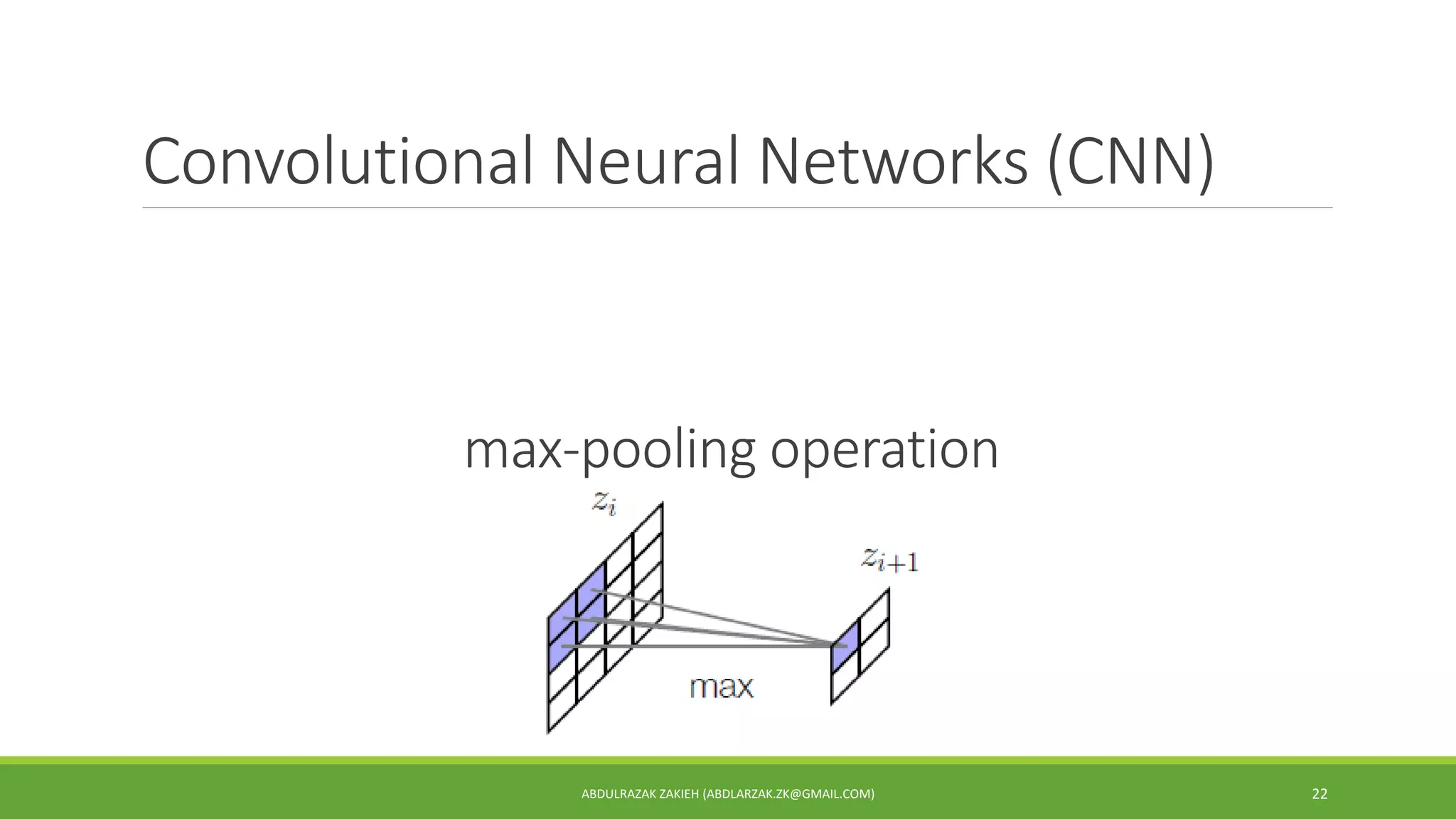

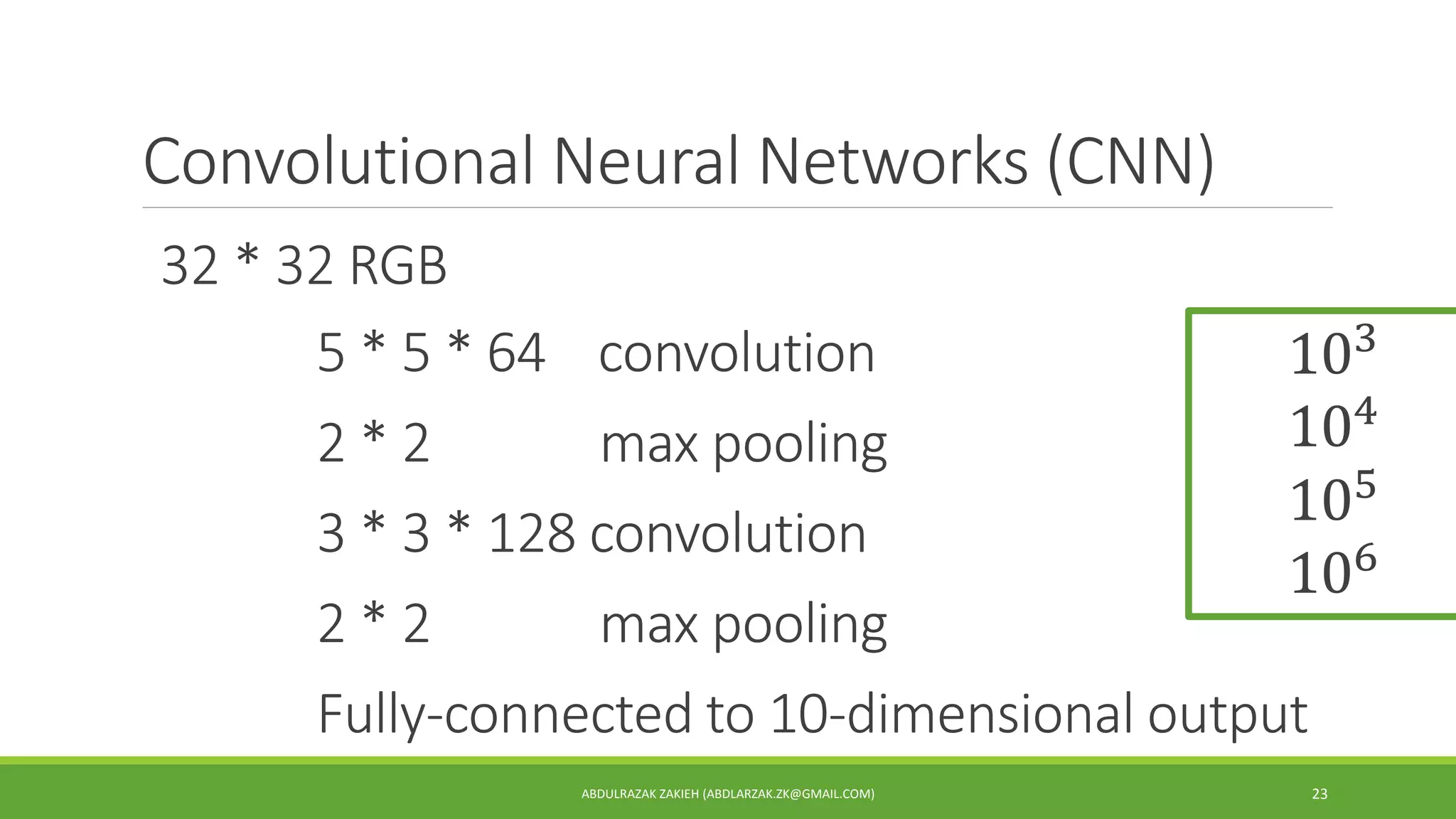

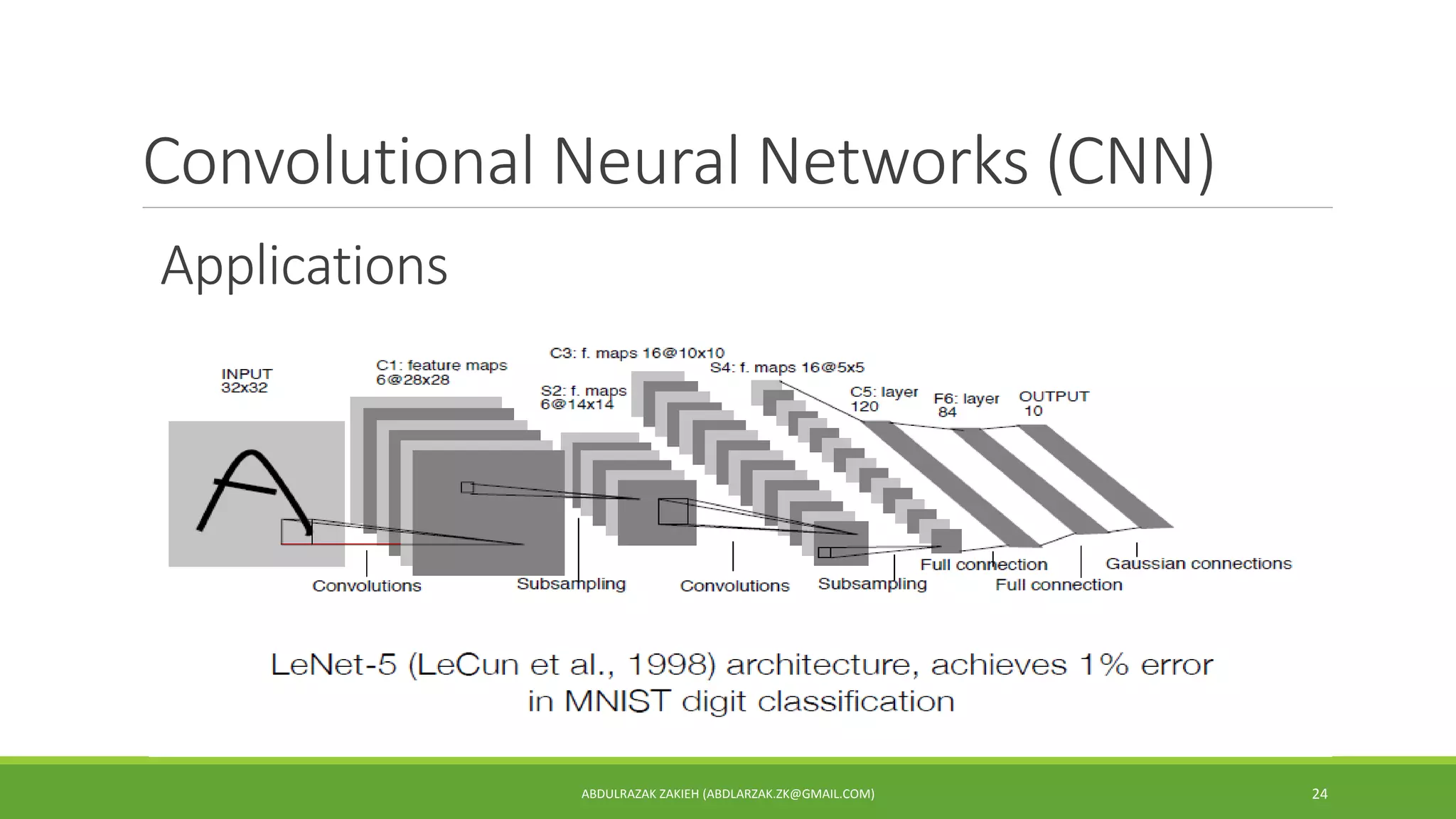

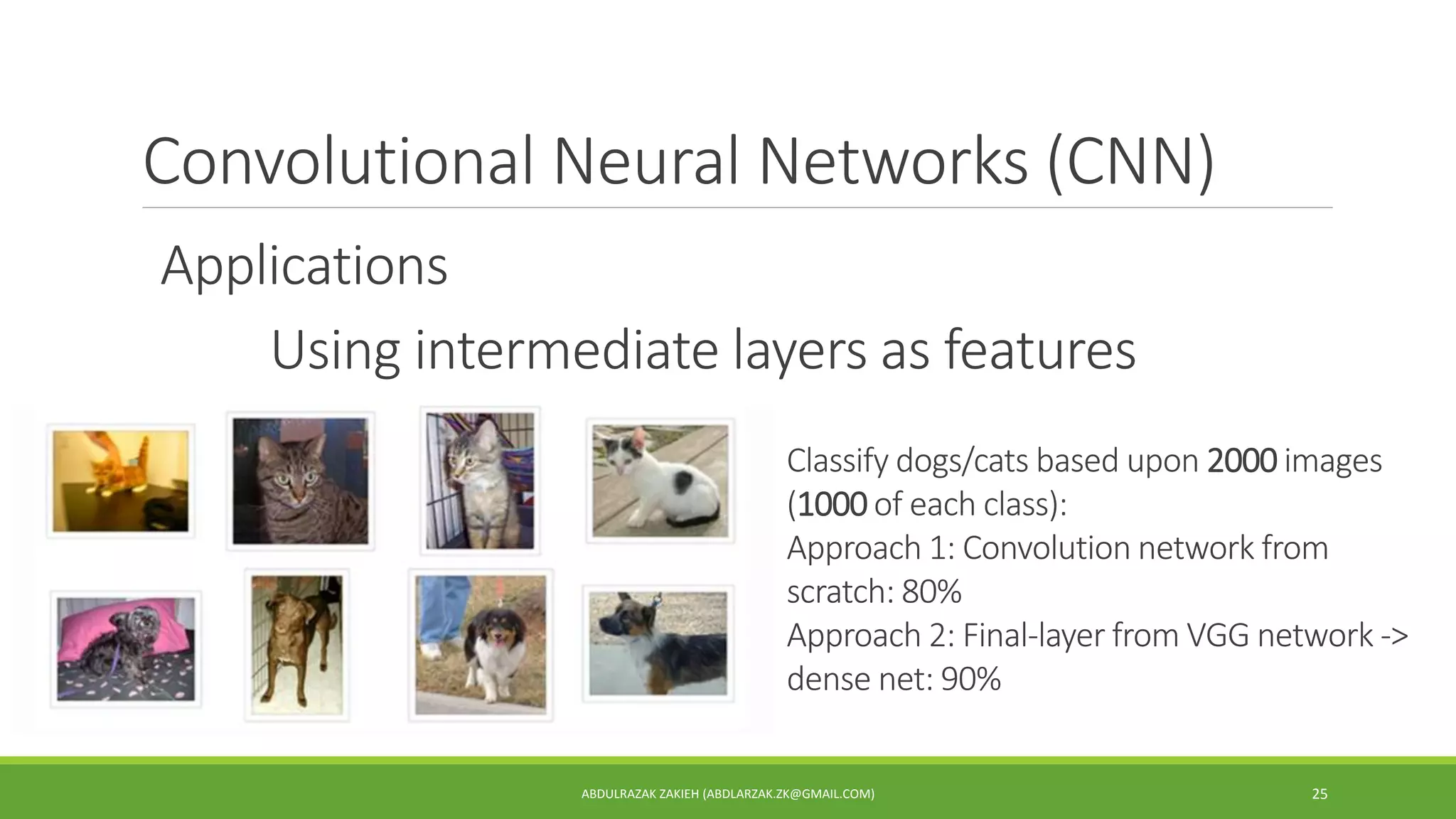

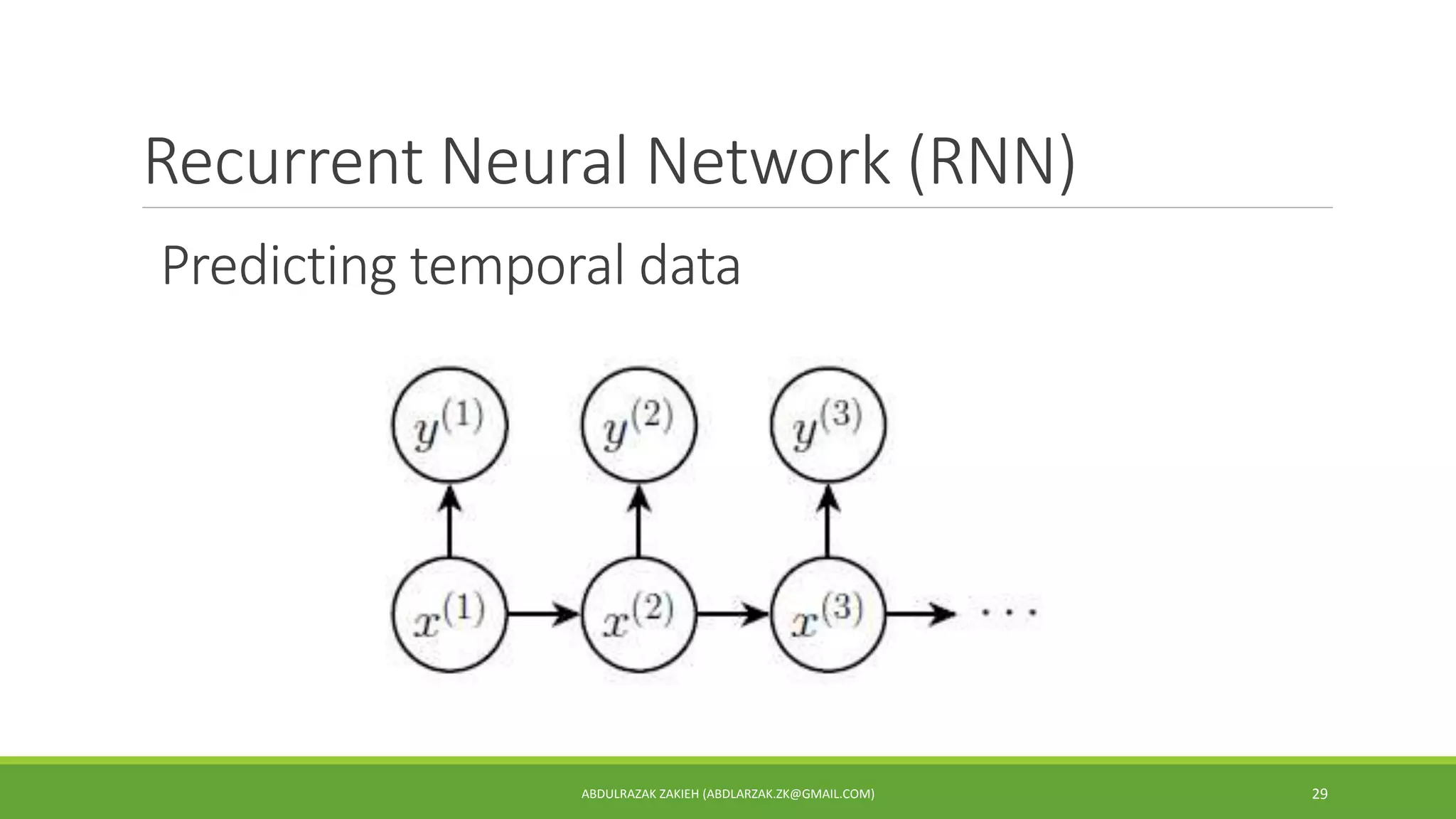

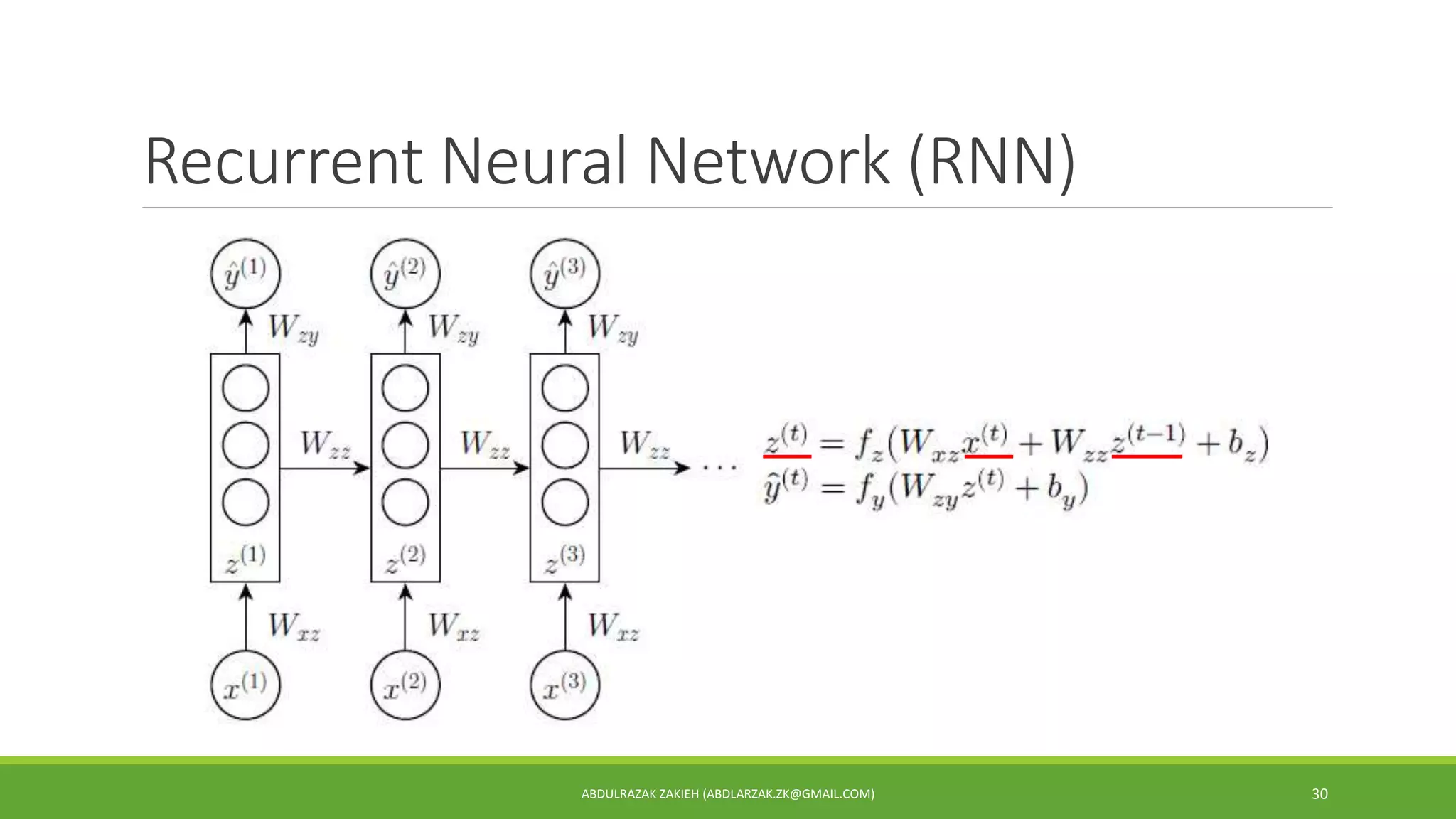

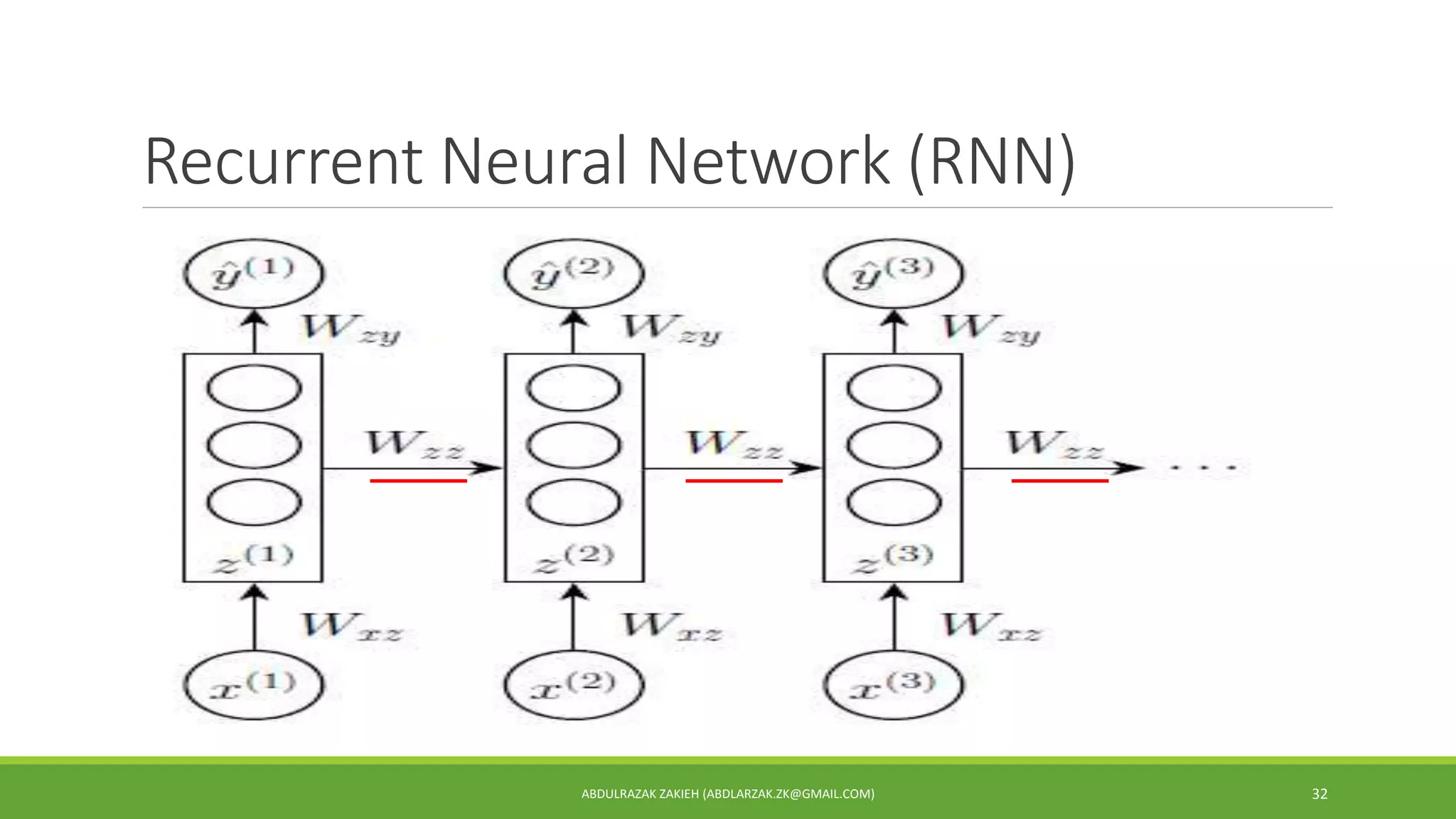

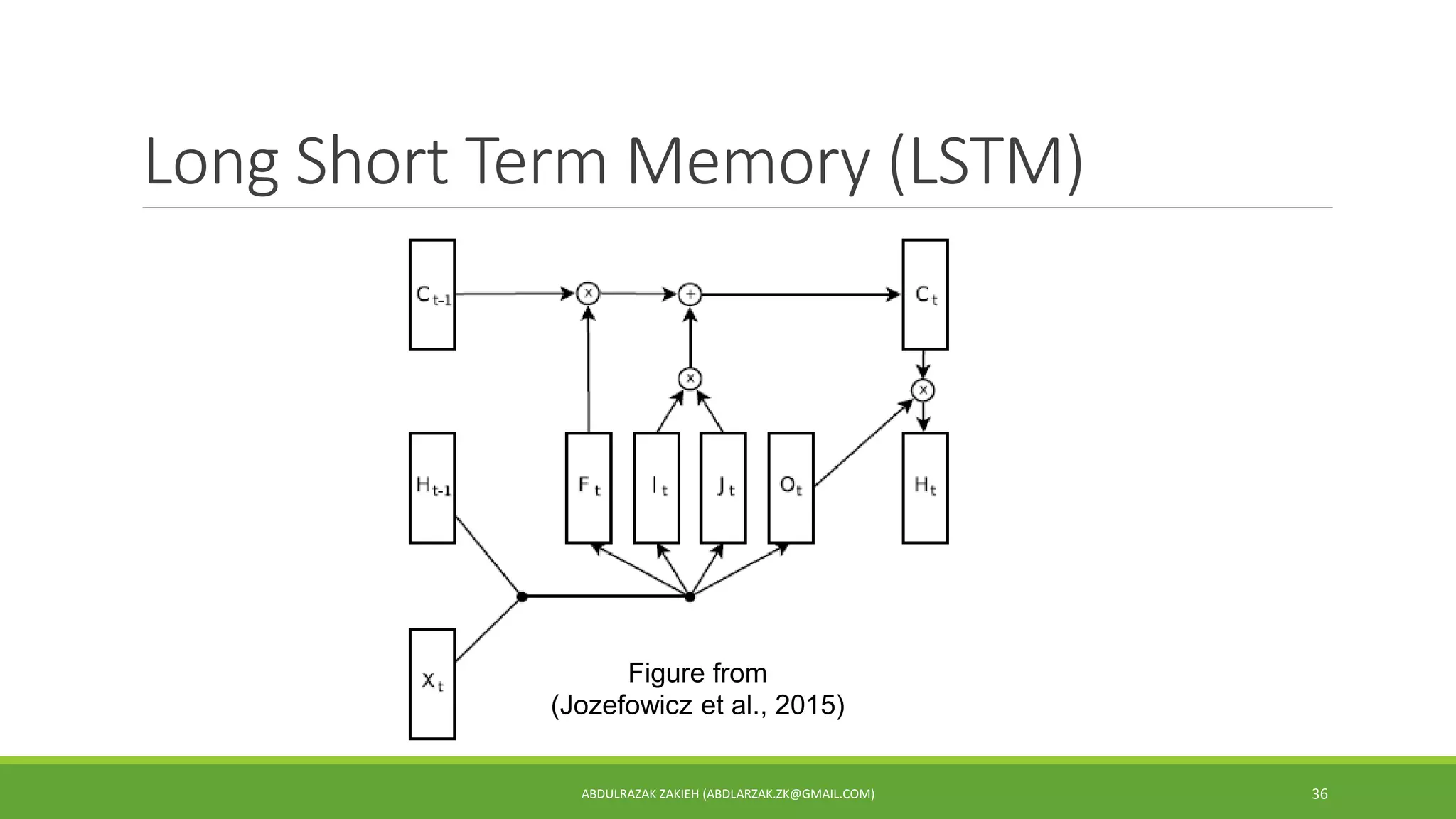

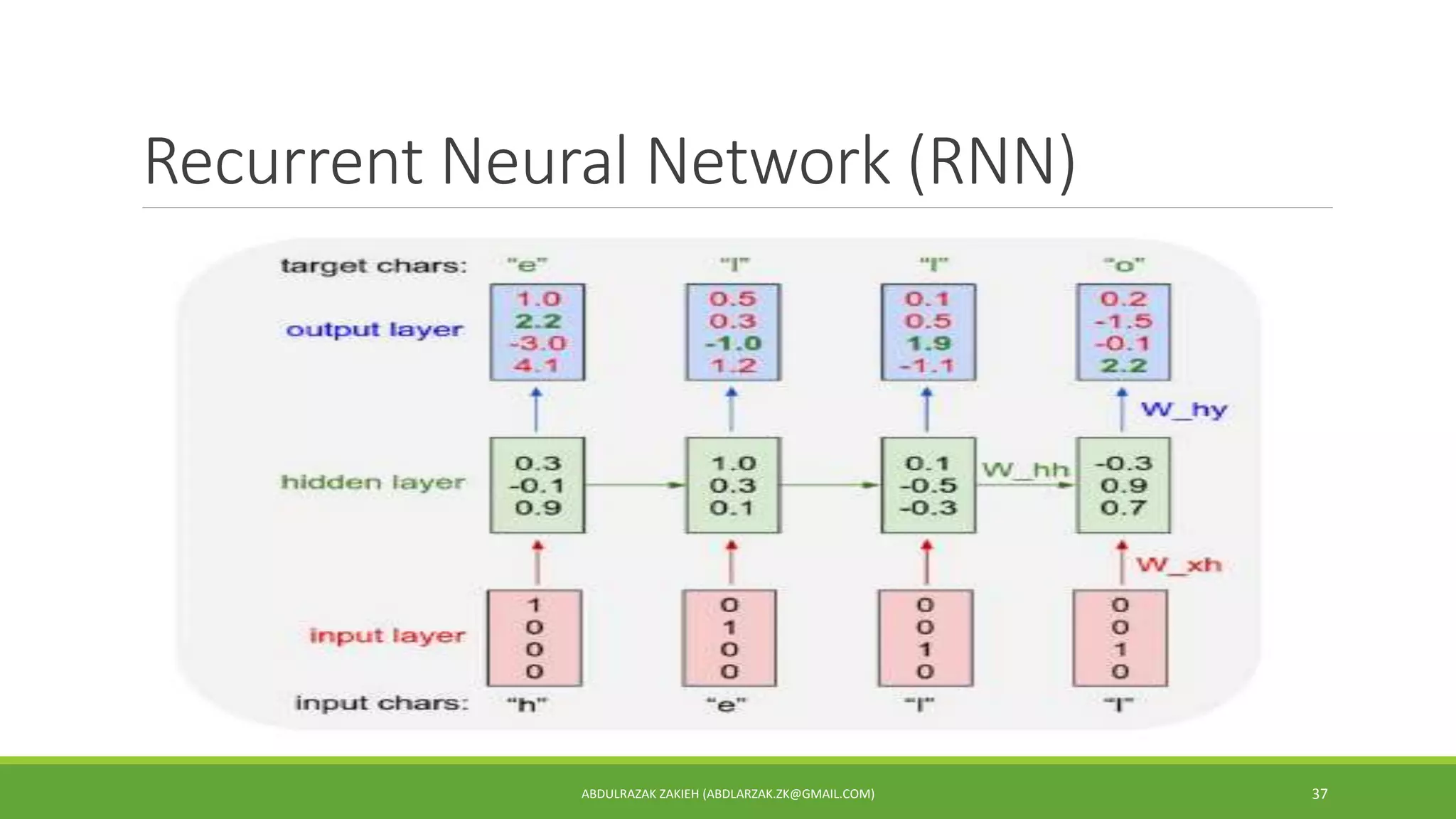

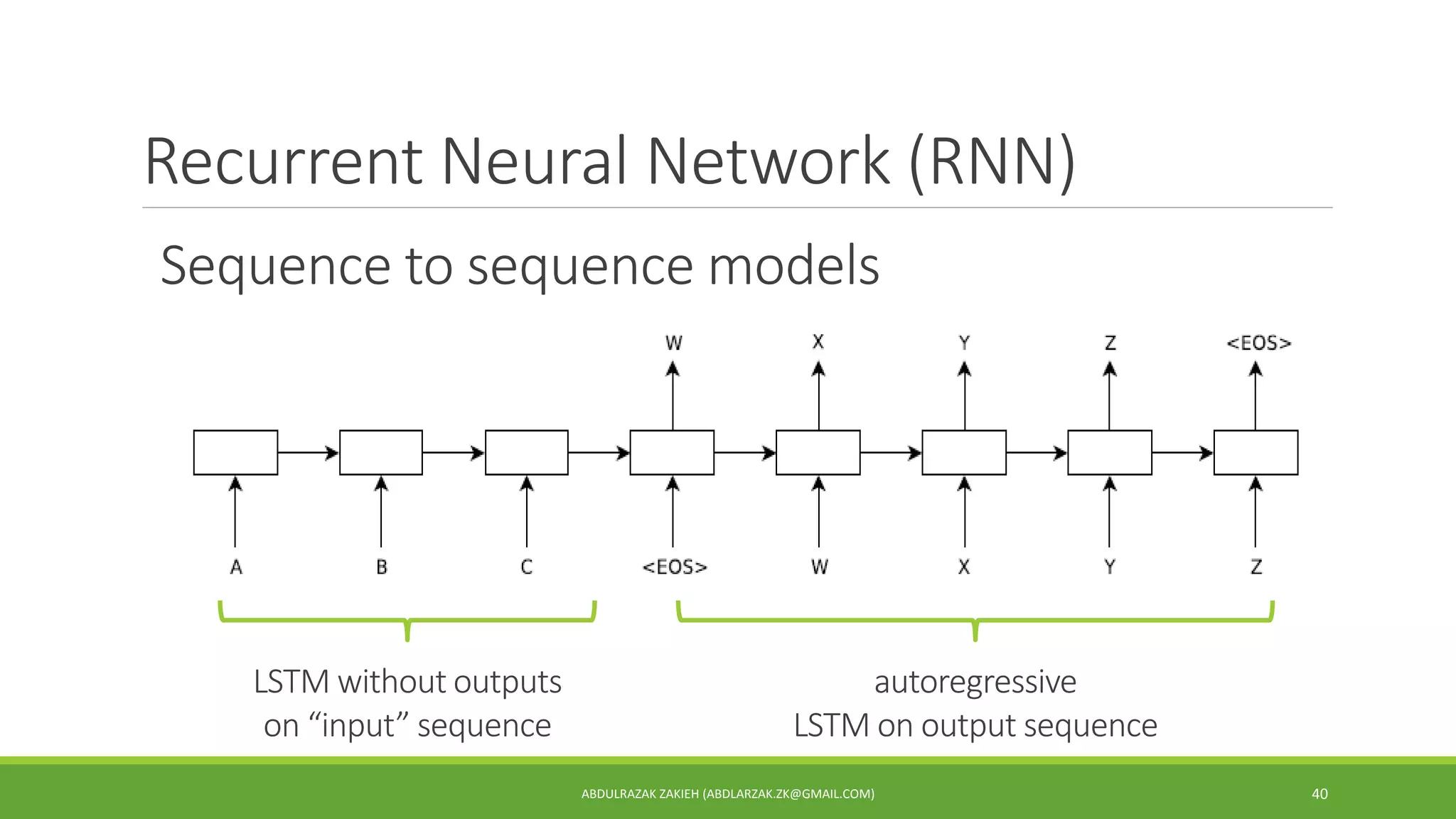

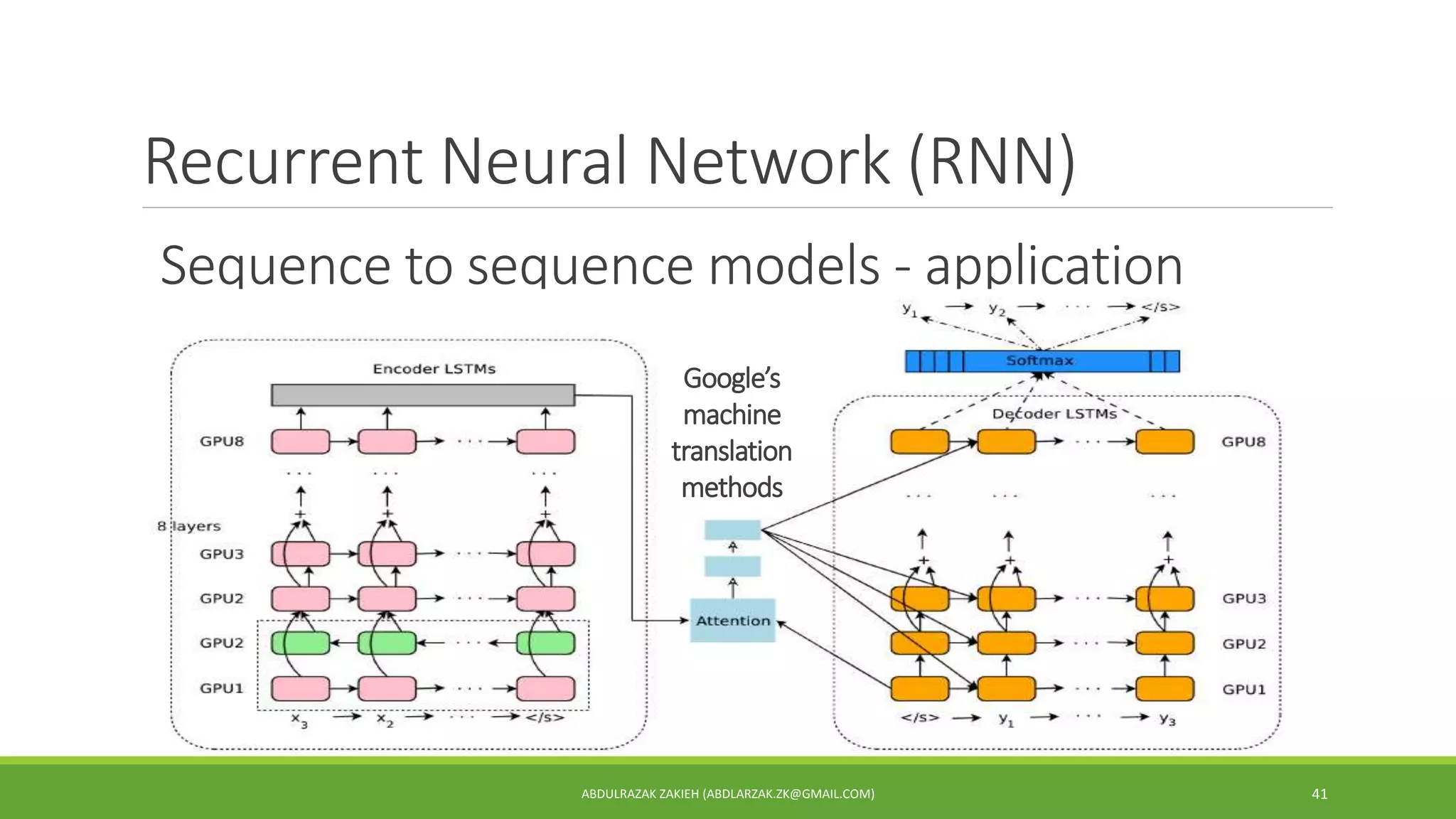

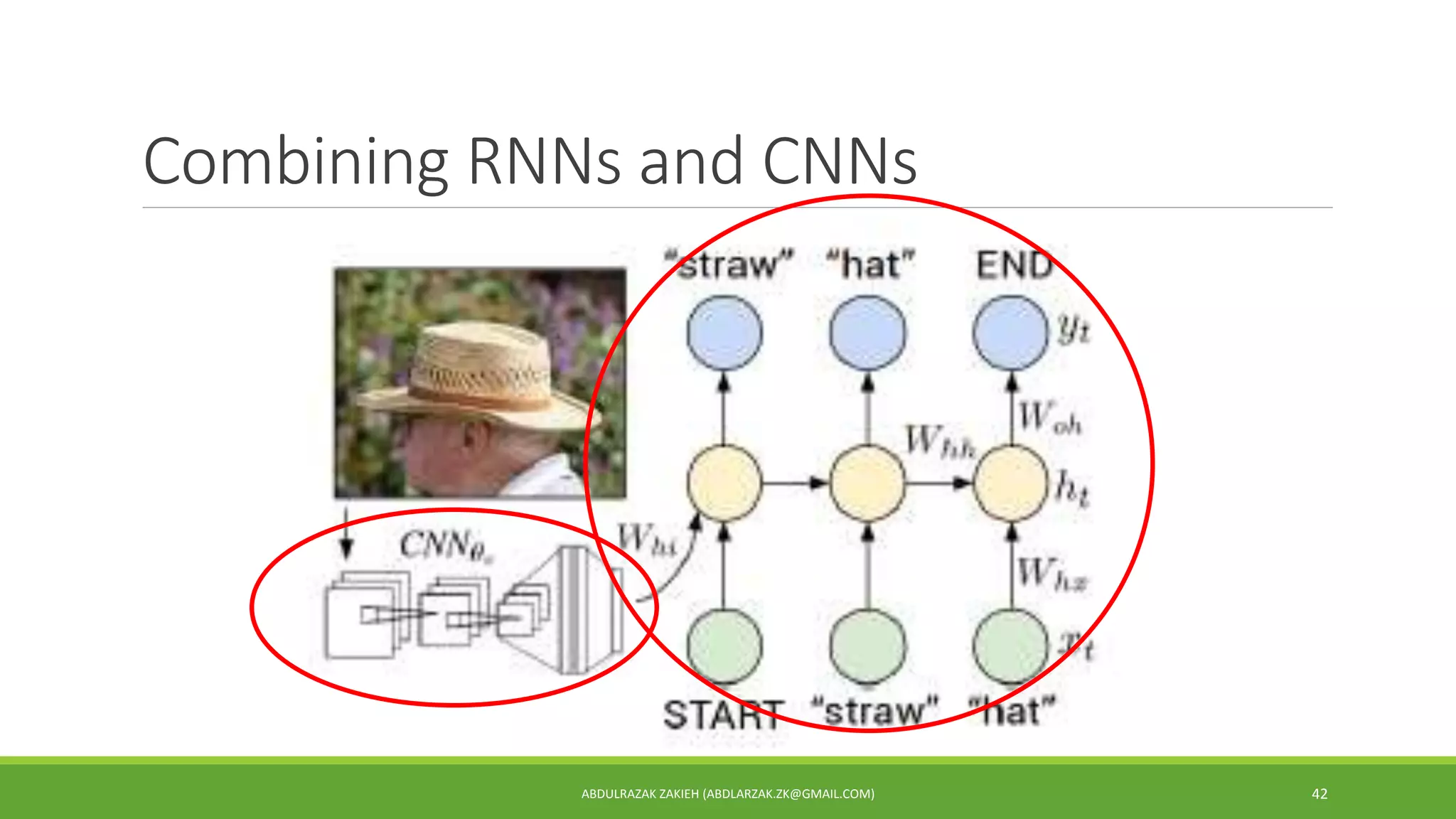

The document provides an overview of deep learning with a focus on neural networks, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and long short-term memory (LSTM) networks. It discusses their architectures and training techniques, along with applications such as image classification and sequence prediction, and highlights methods and best practices. Throughout, it emphasizes the roles CNNs and RNNs play in modern deep learning applications.