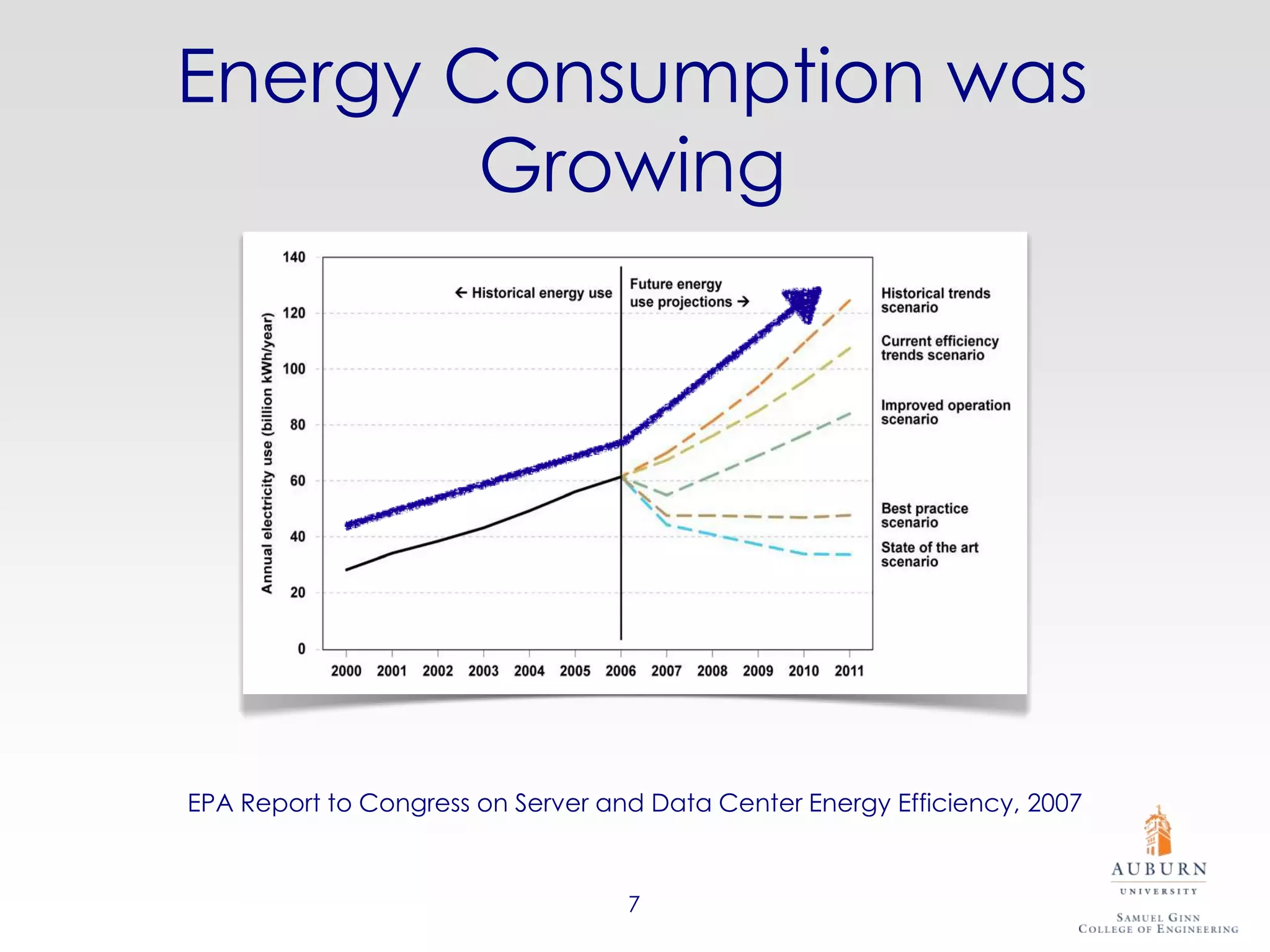

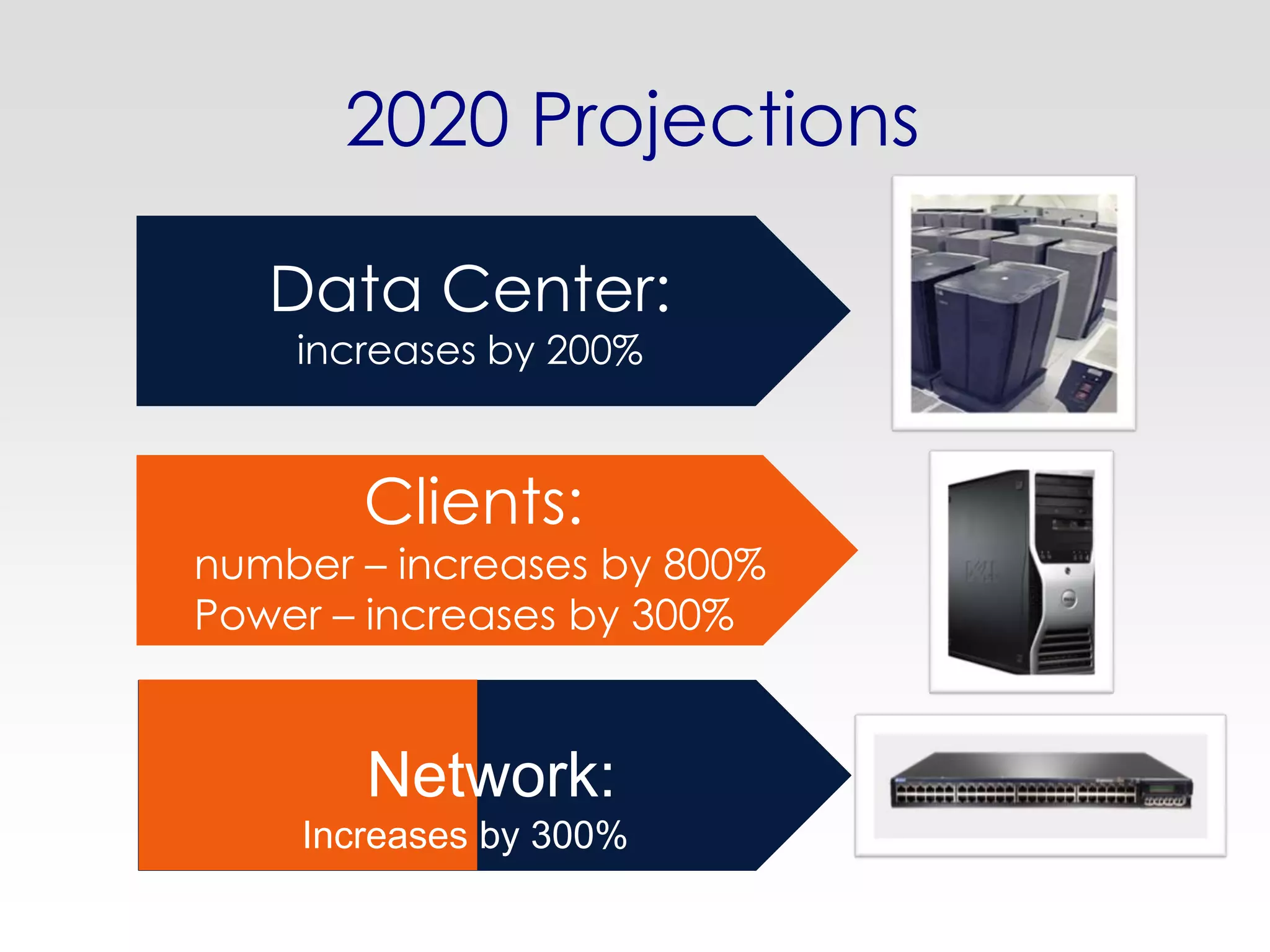

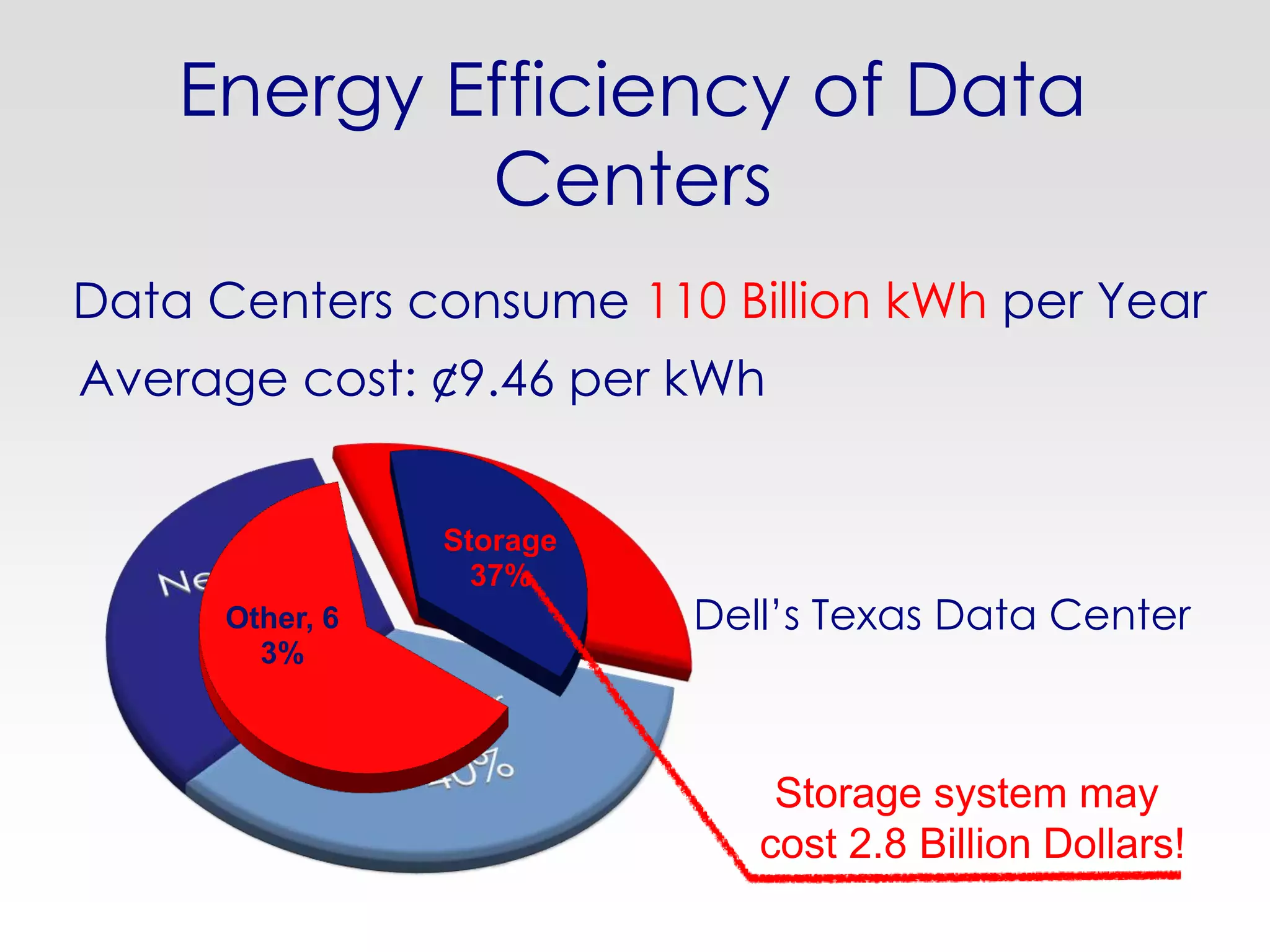

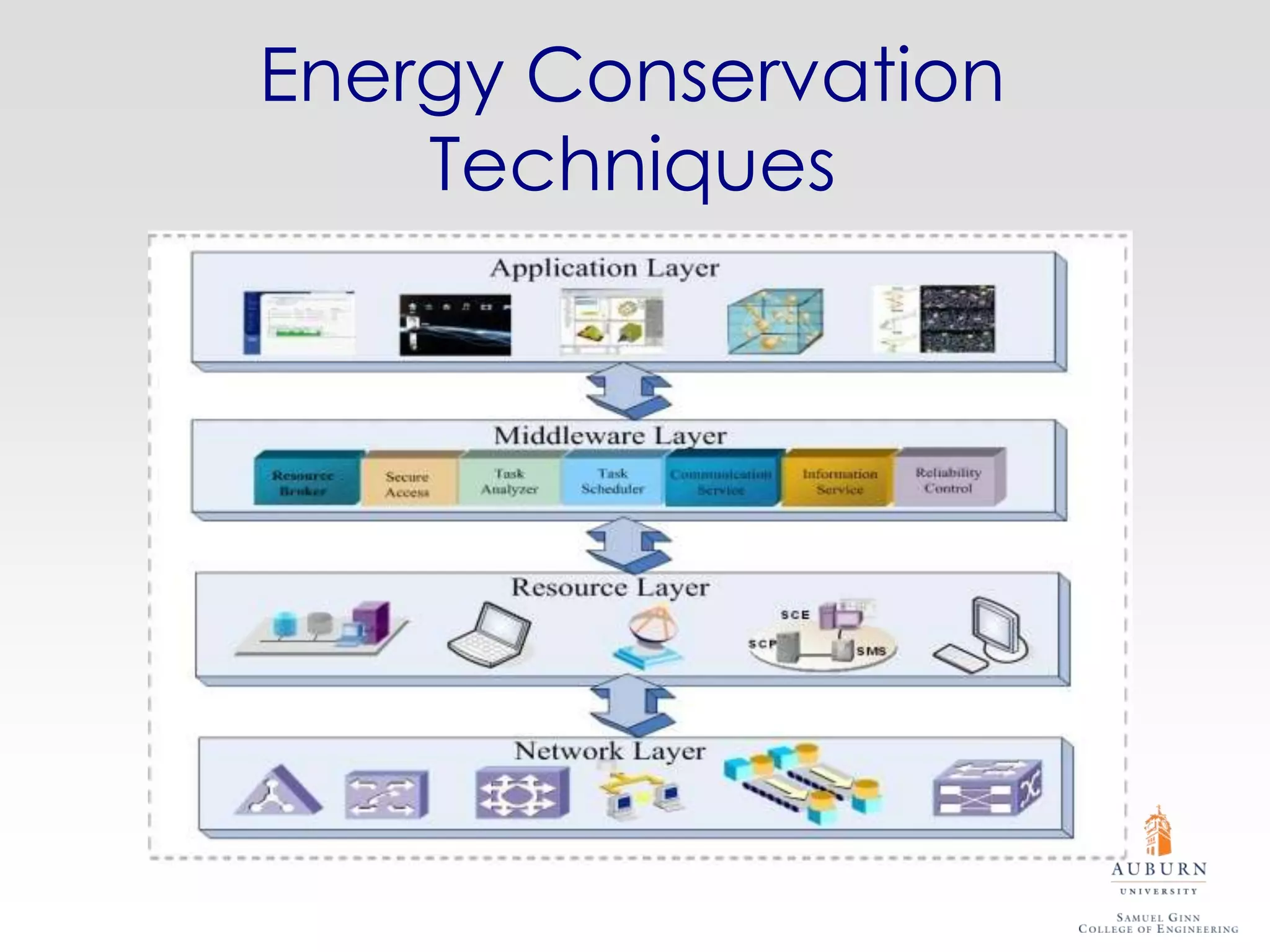

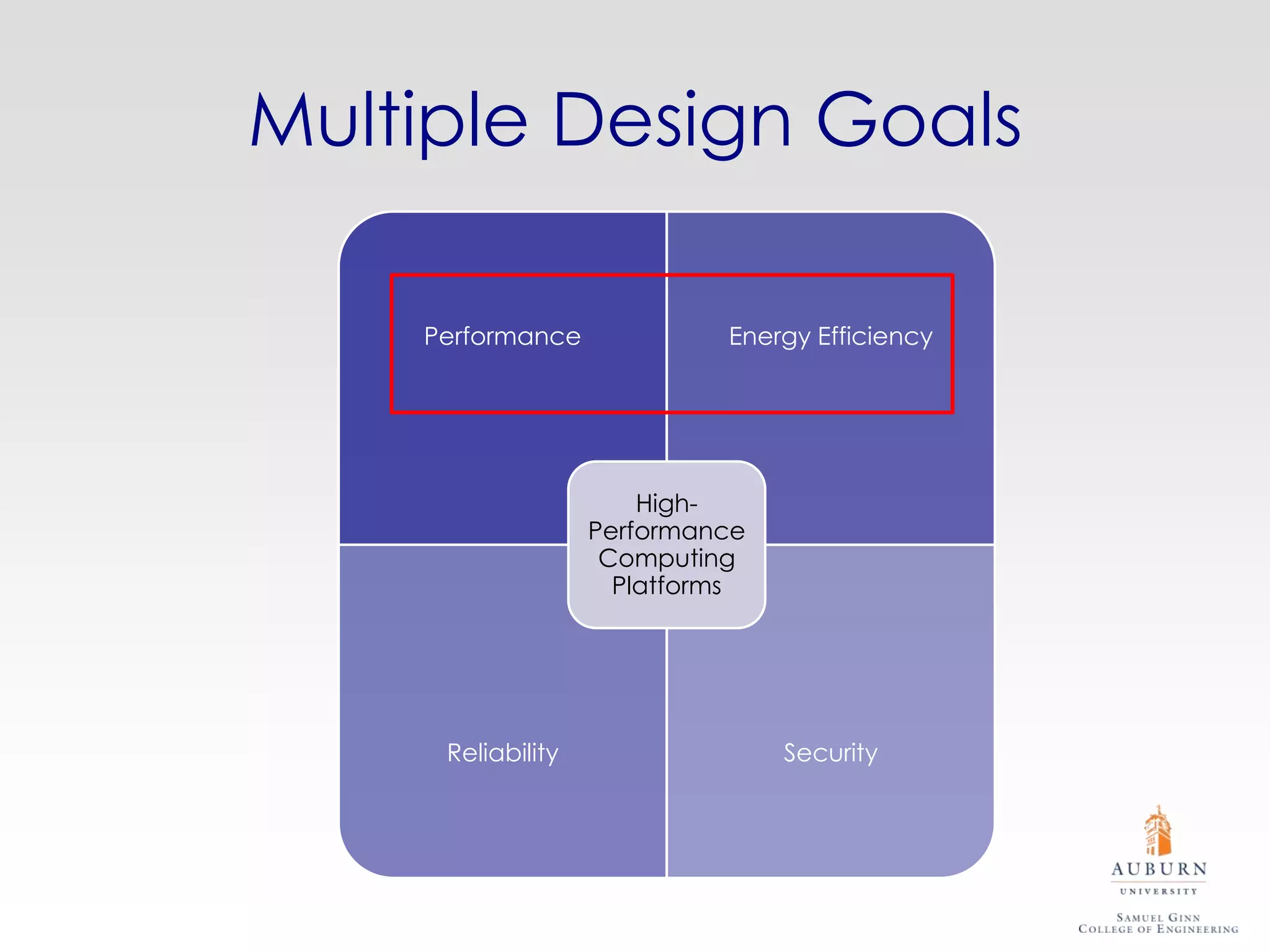

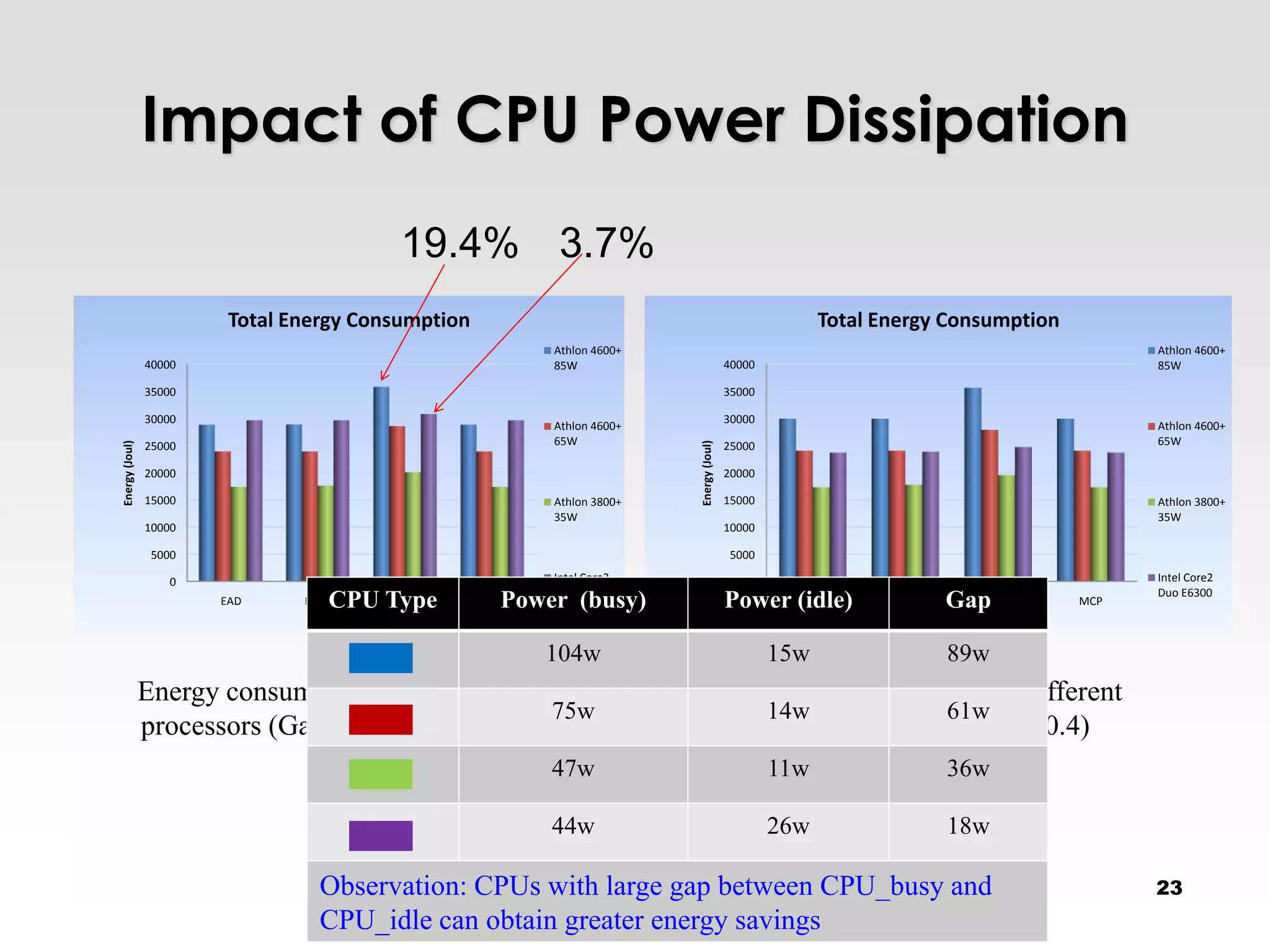

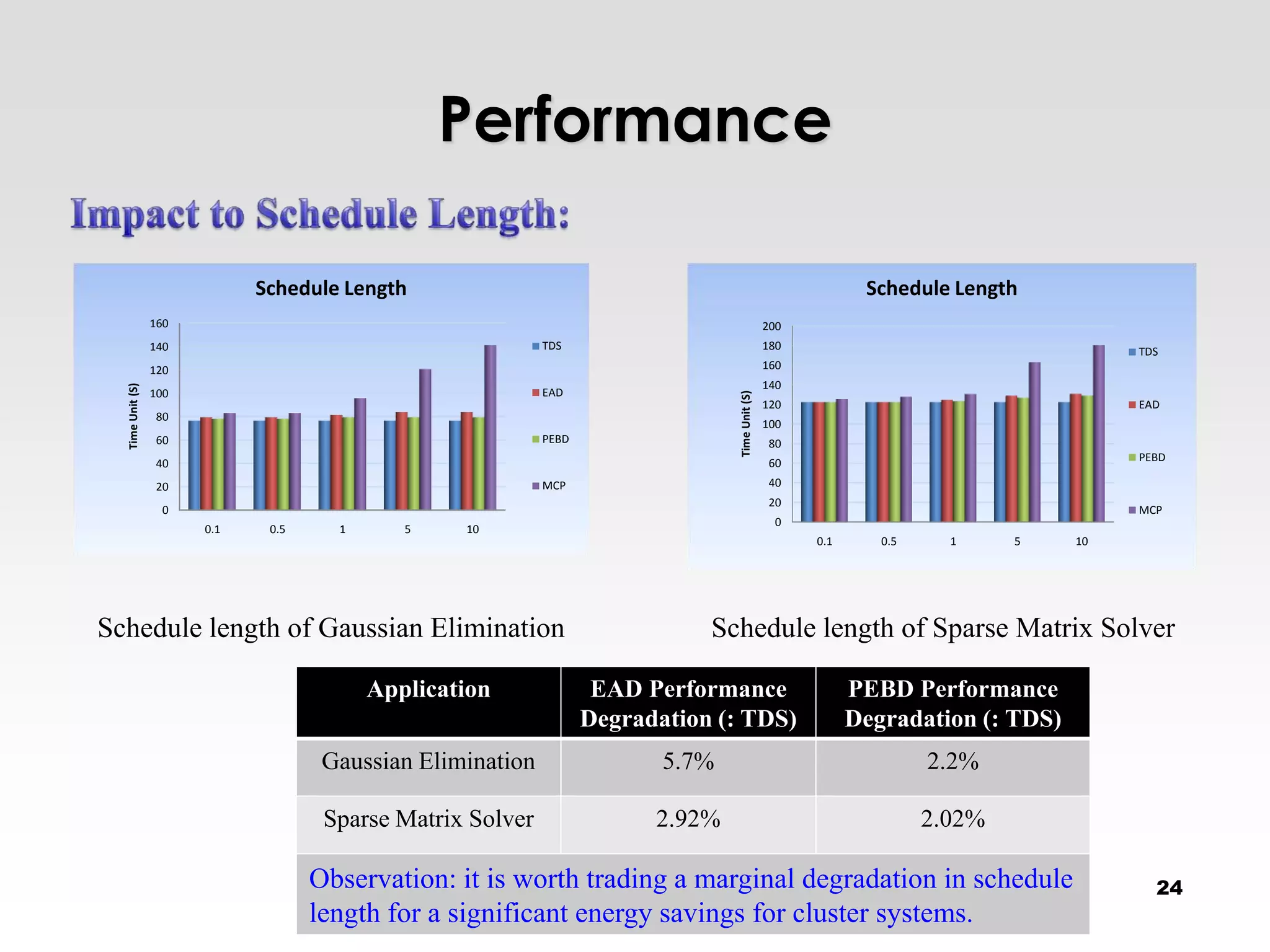

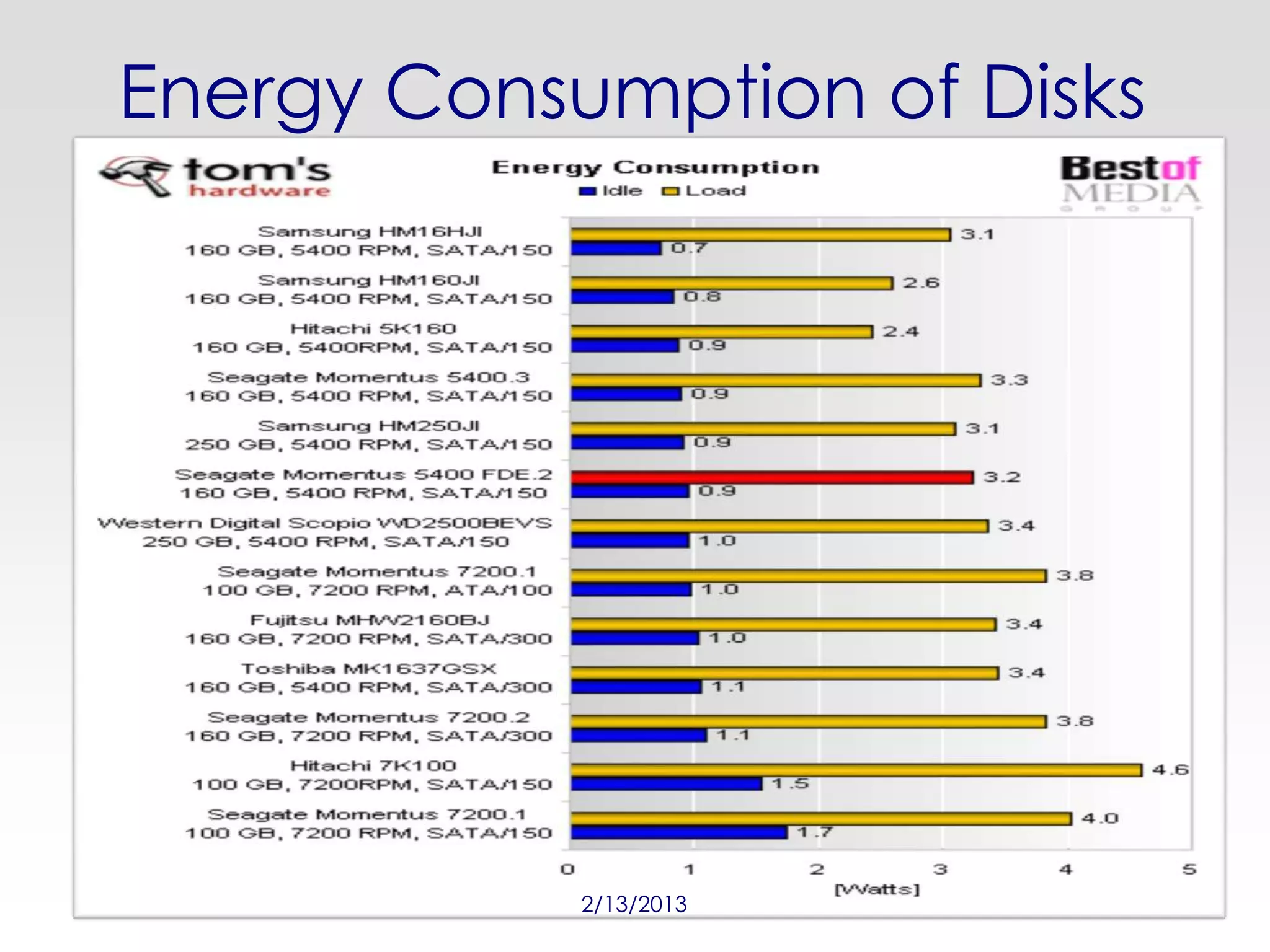

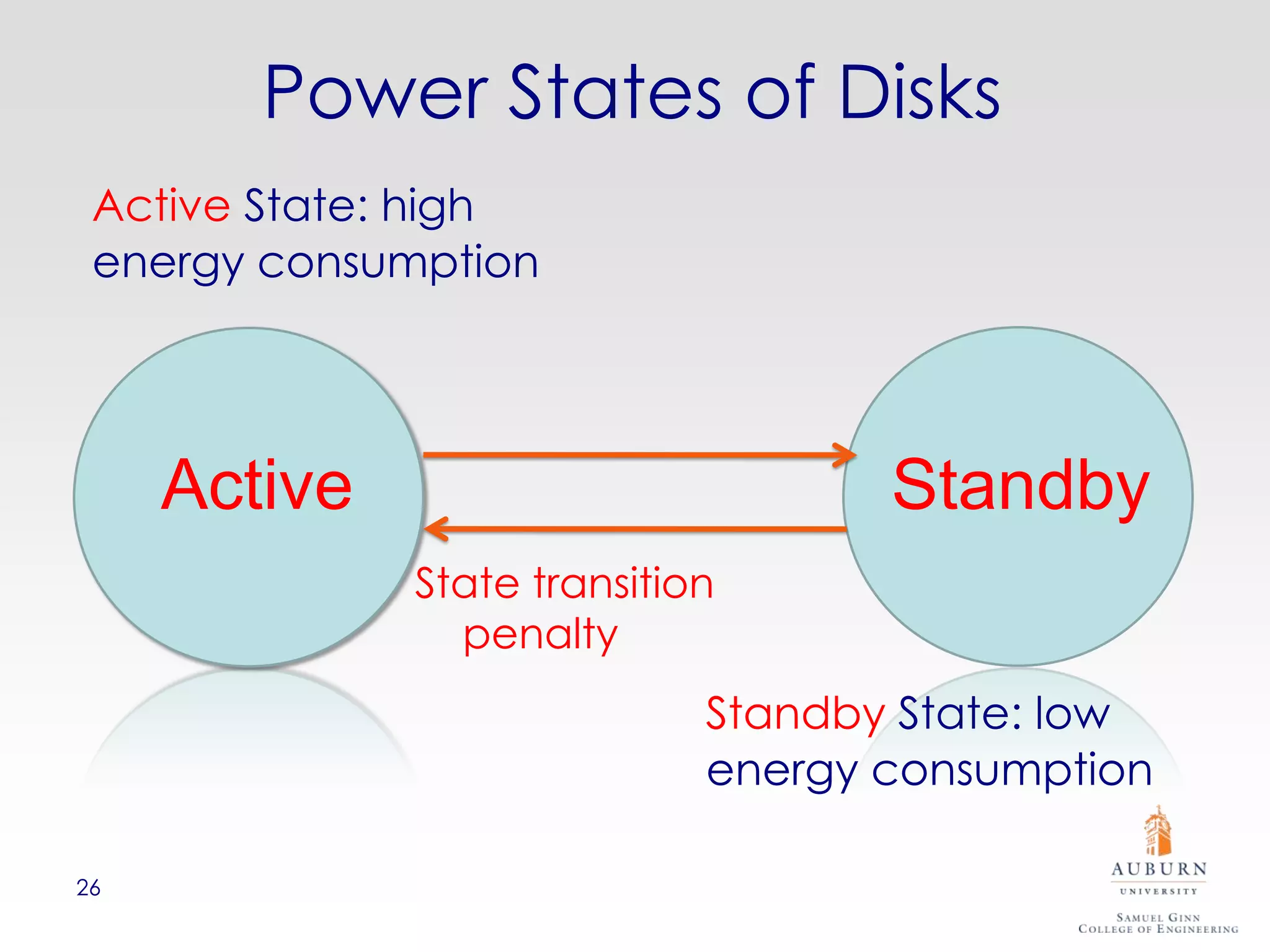

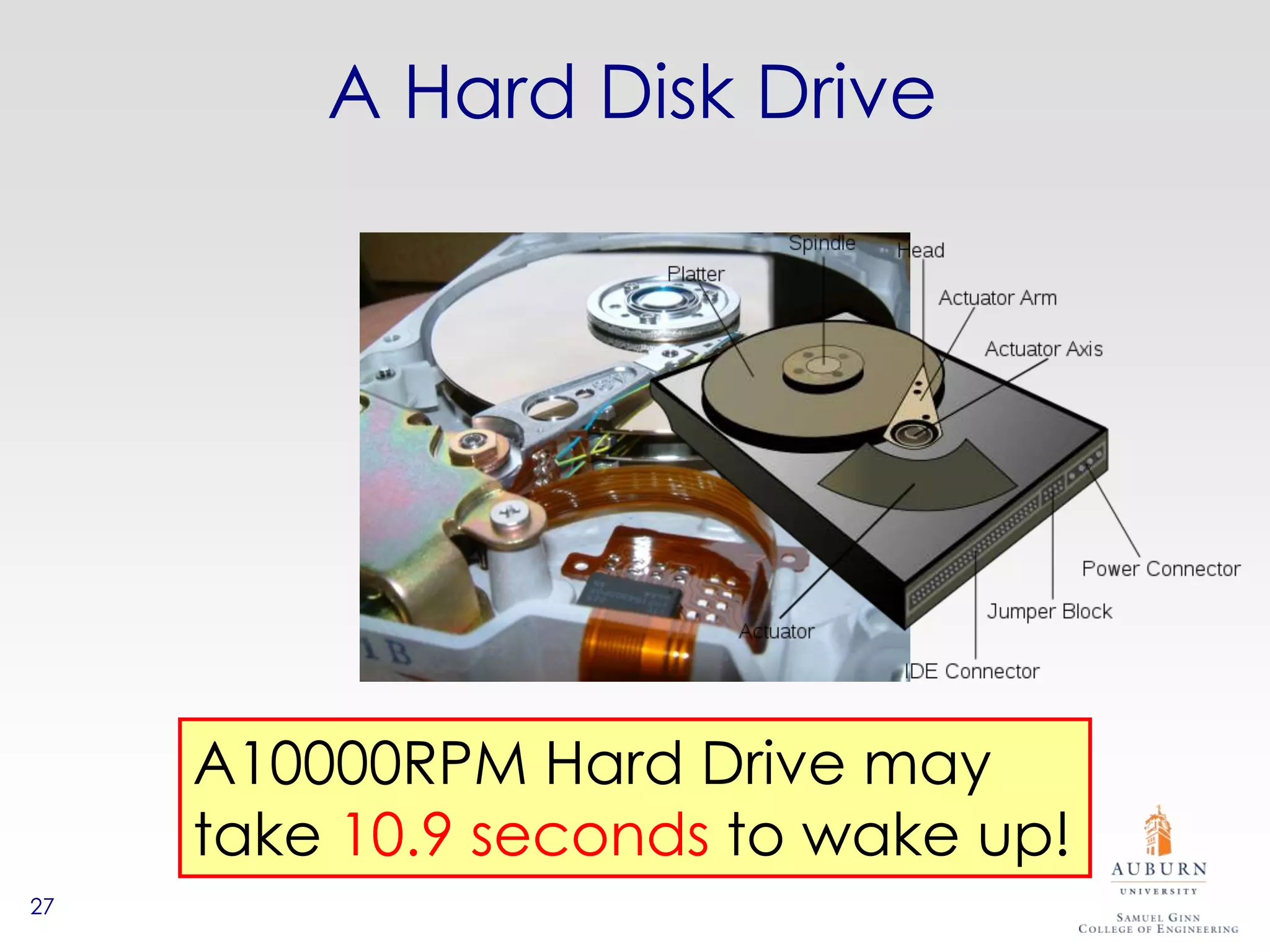

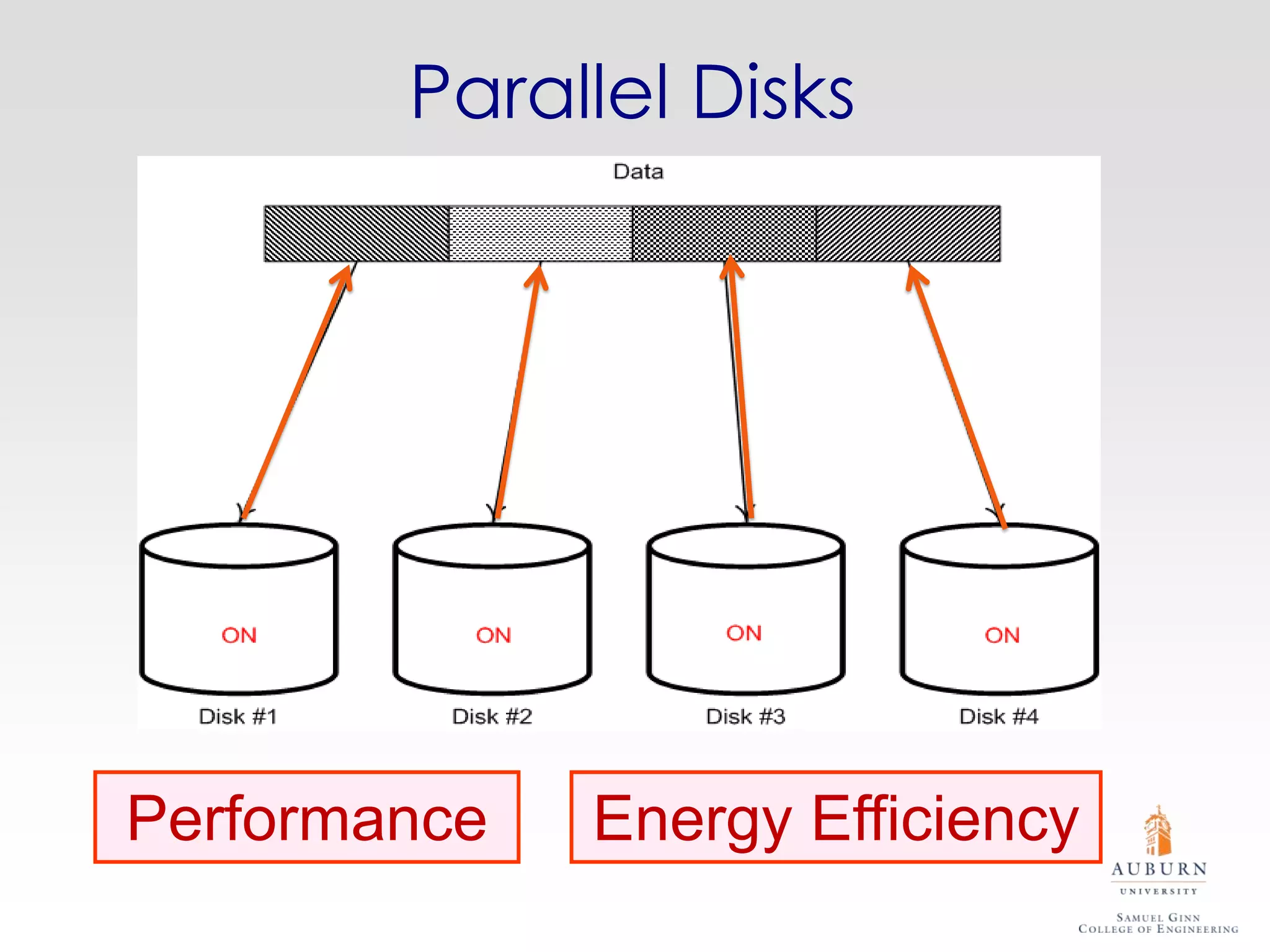

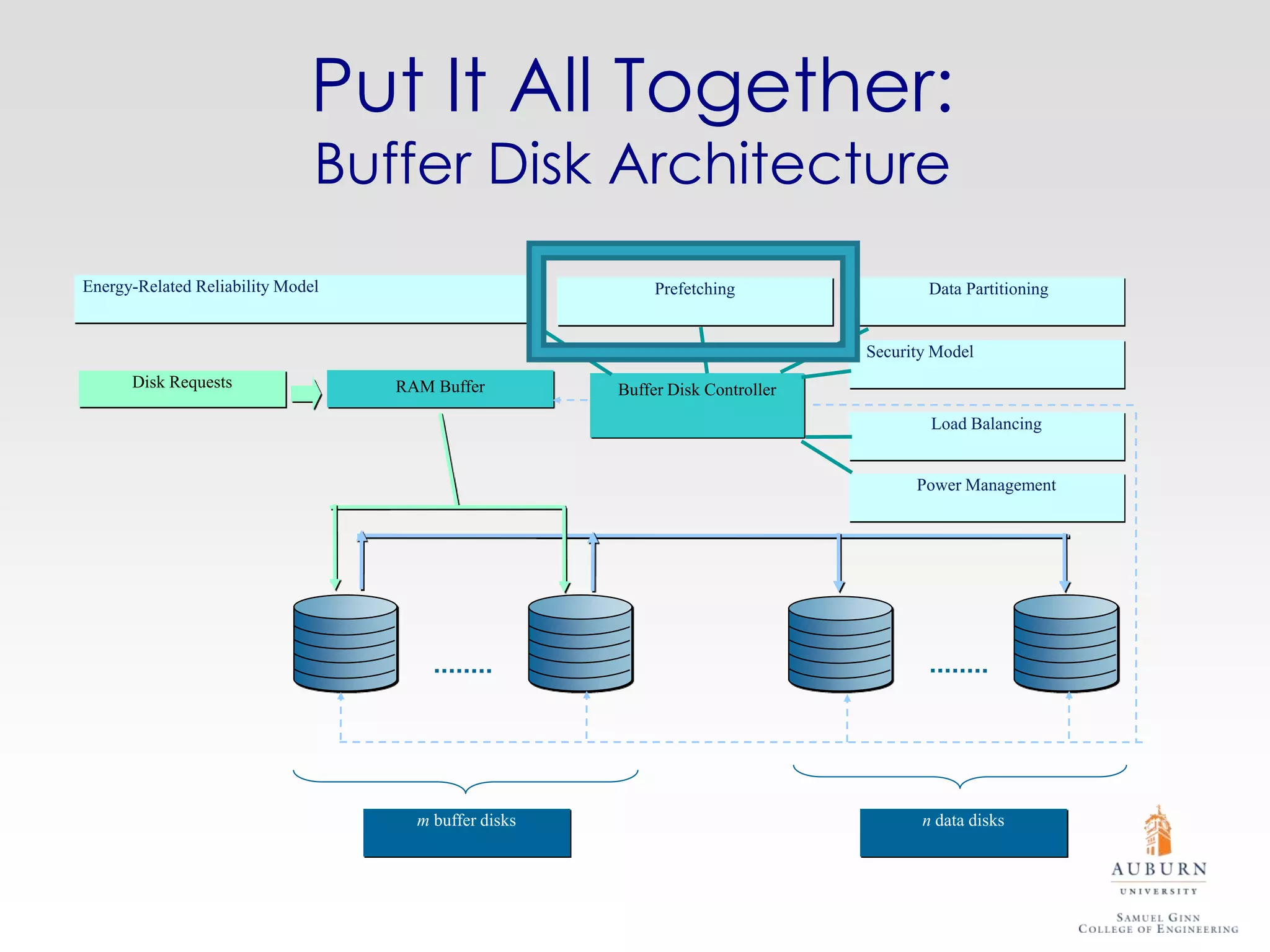

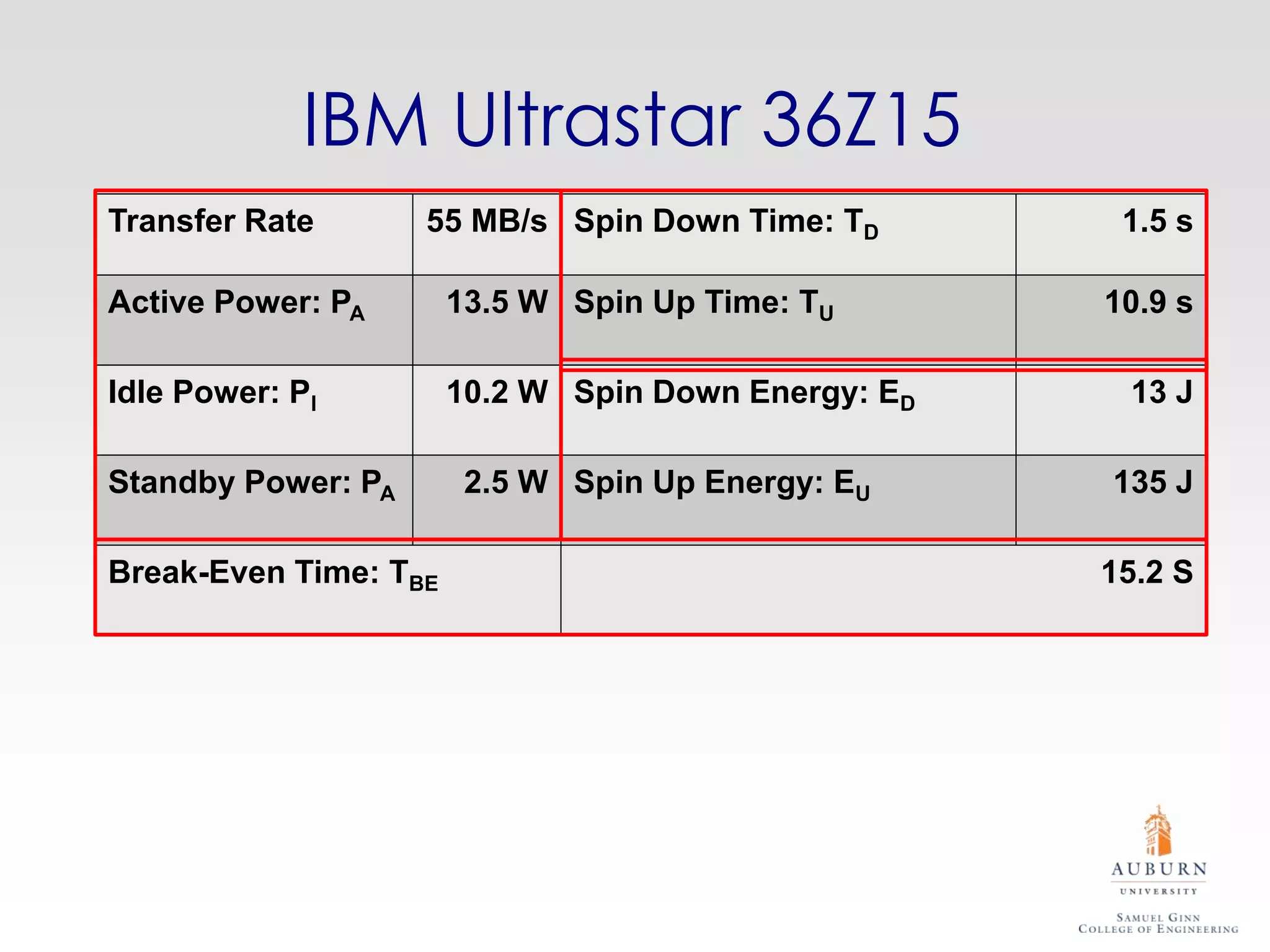

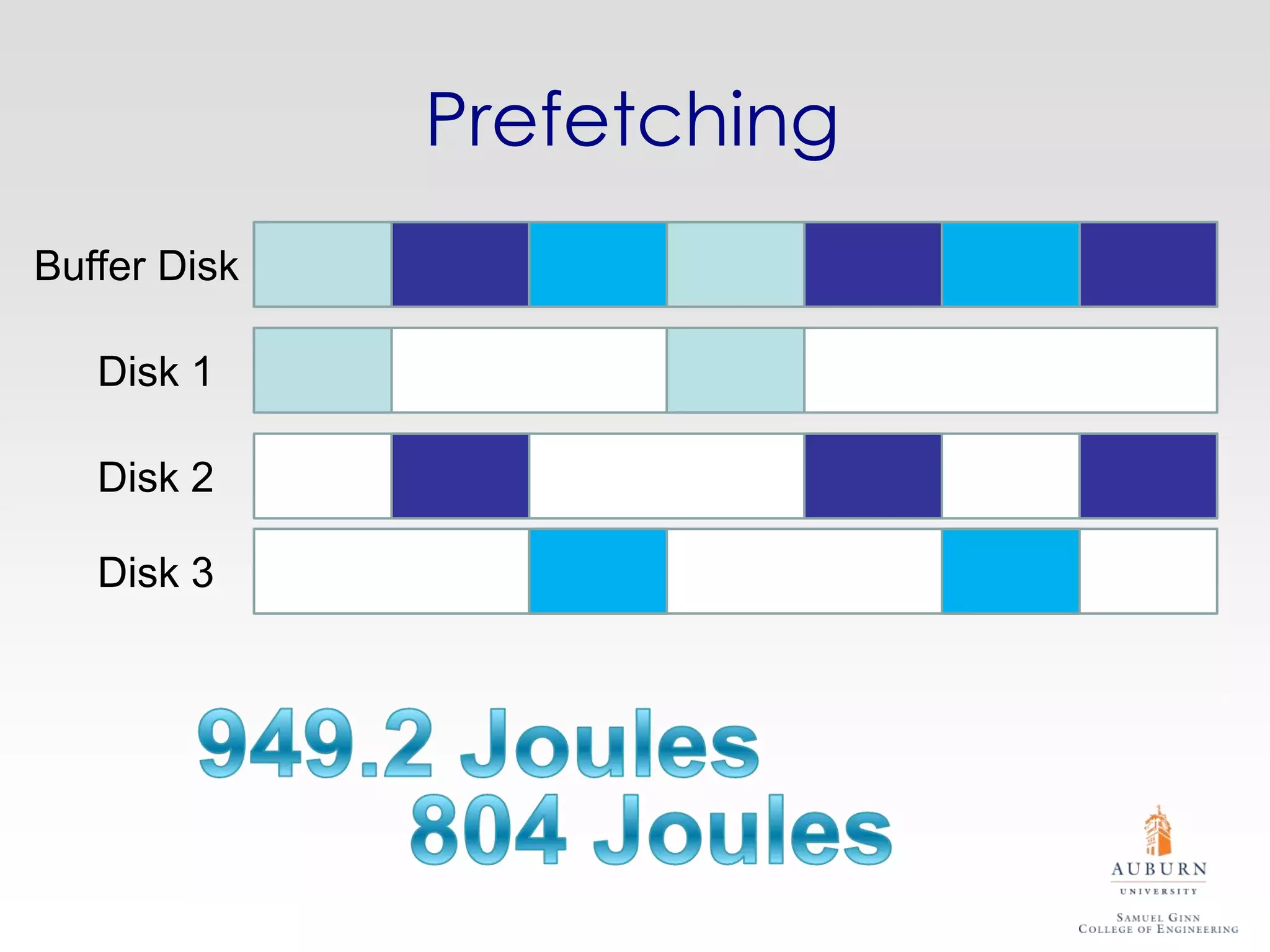

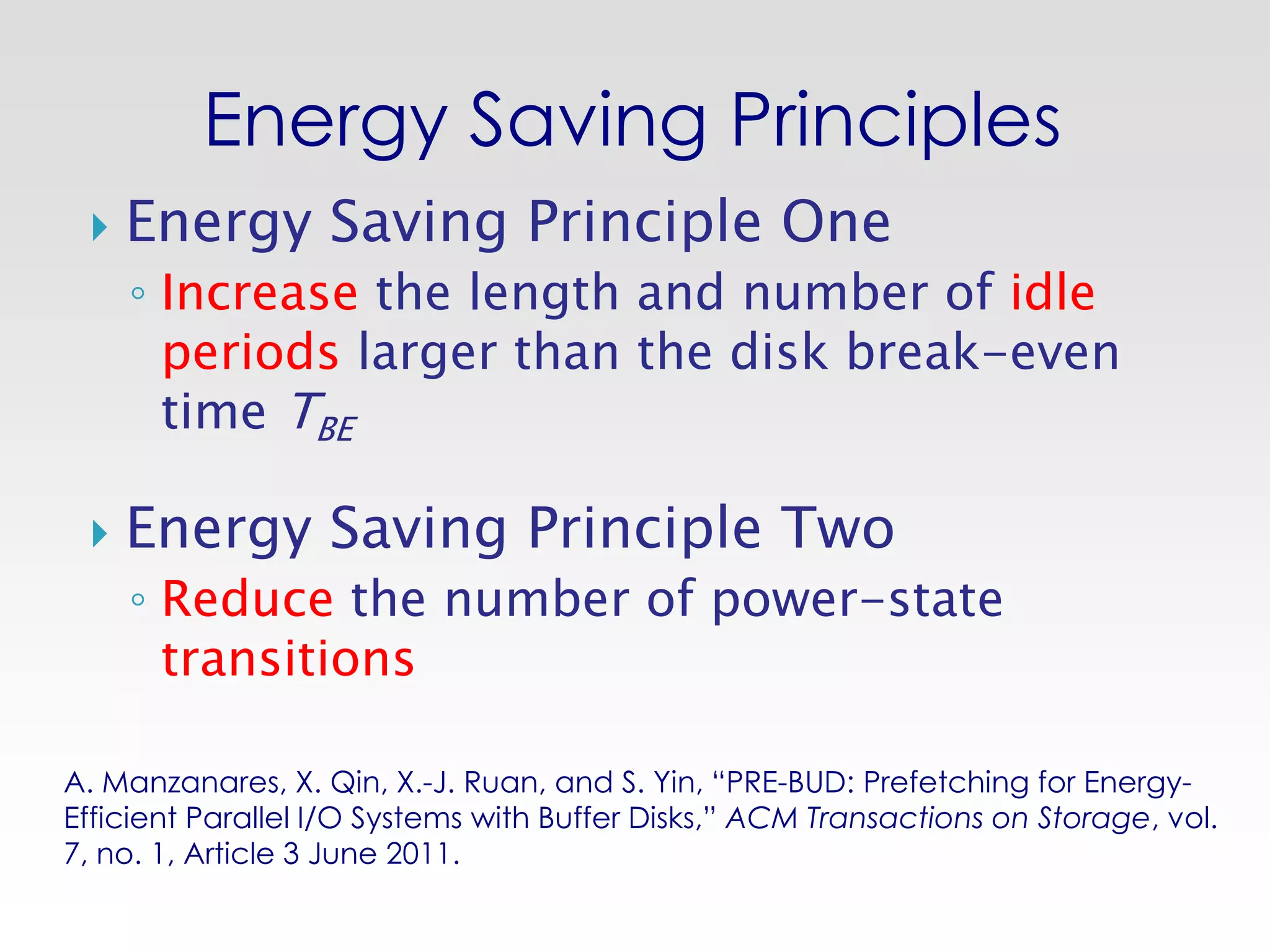

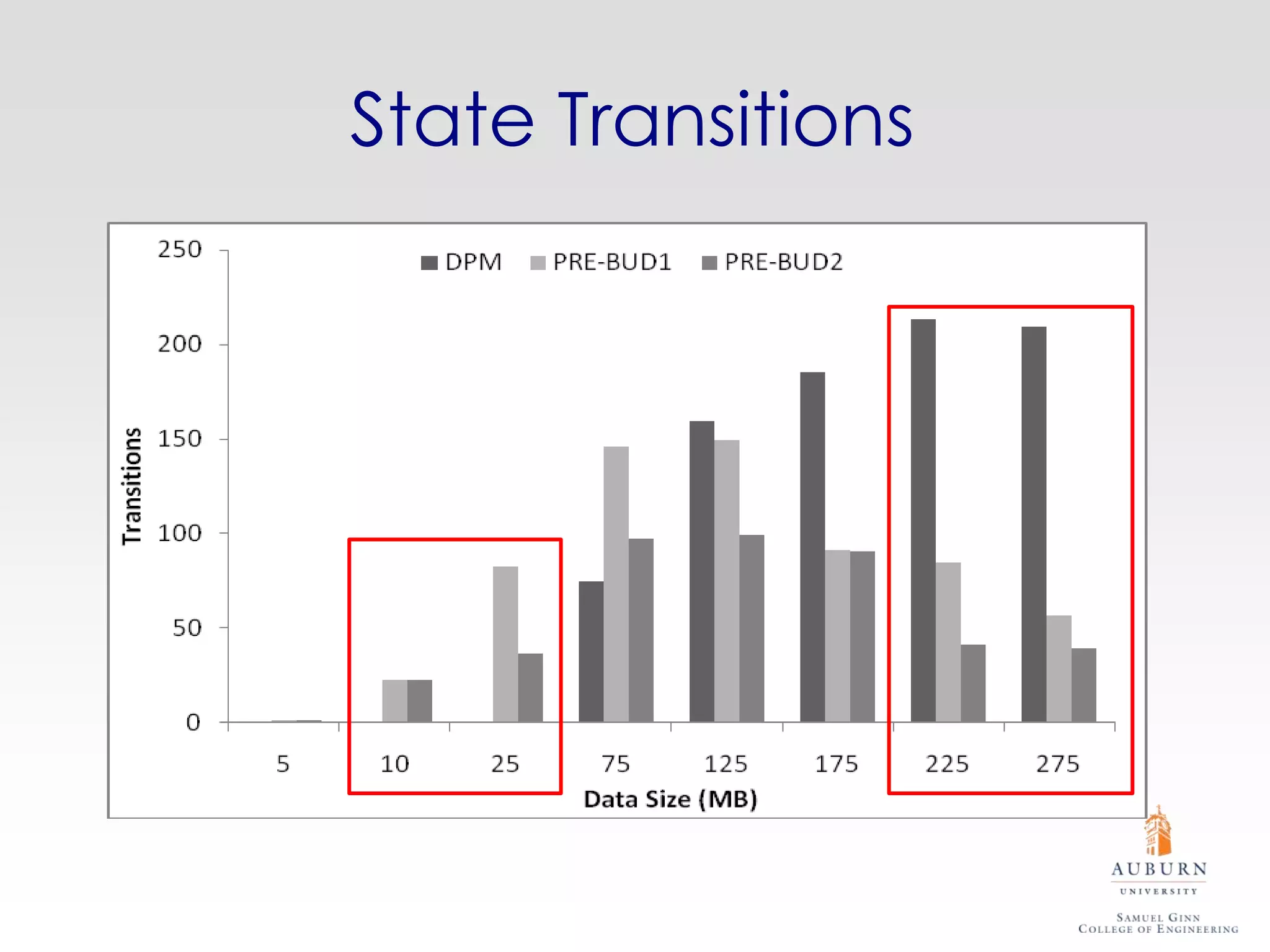

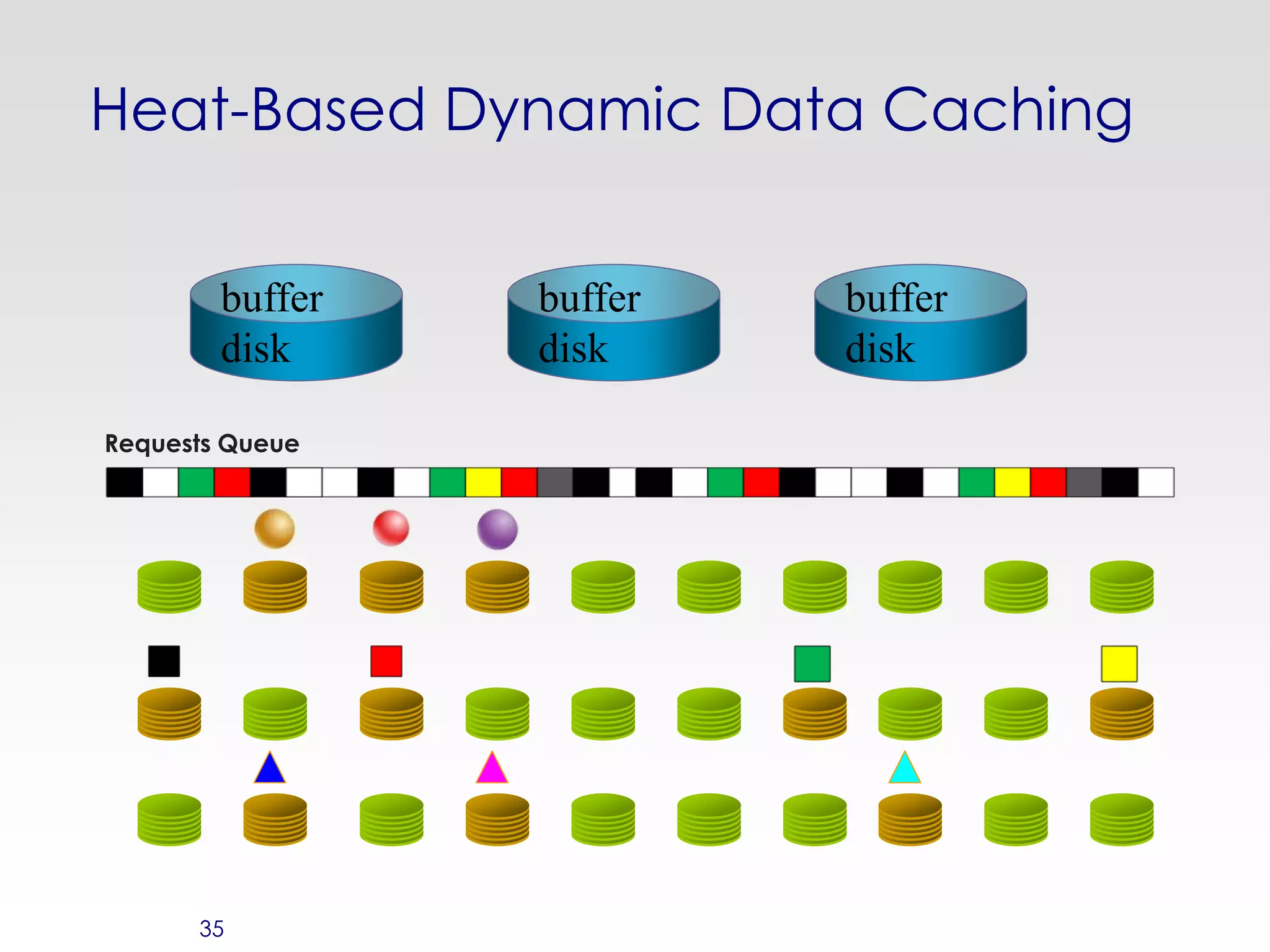

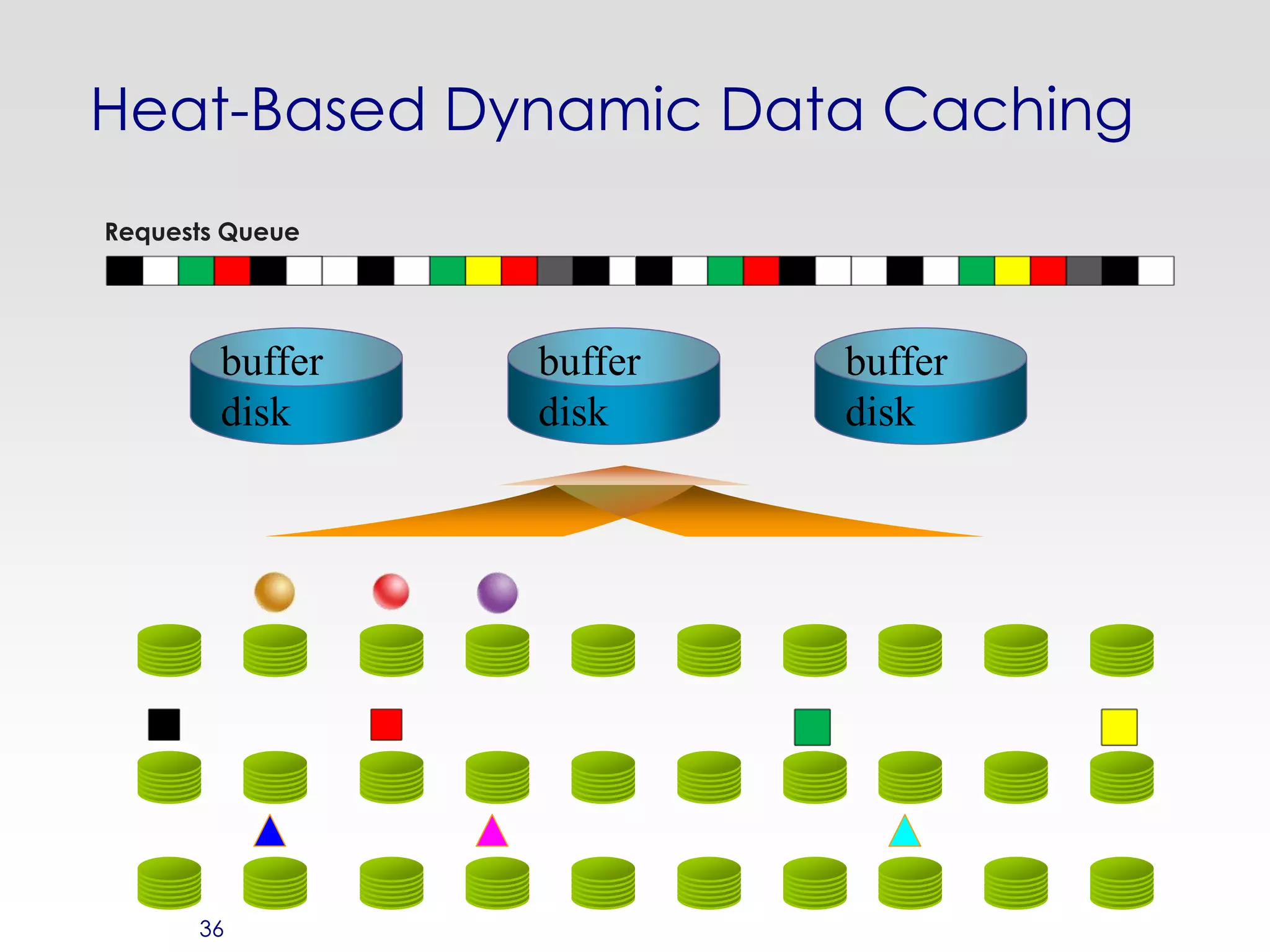

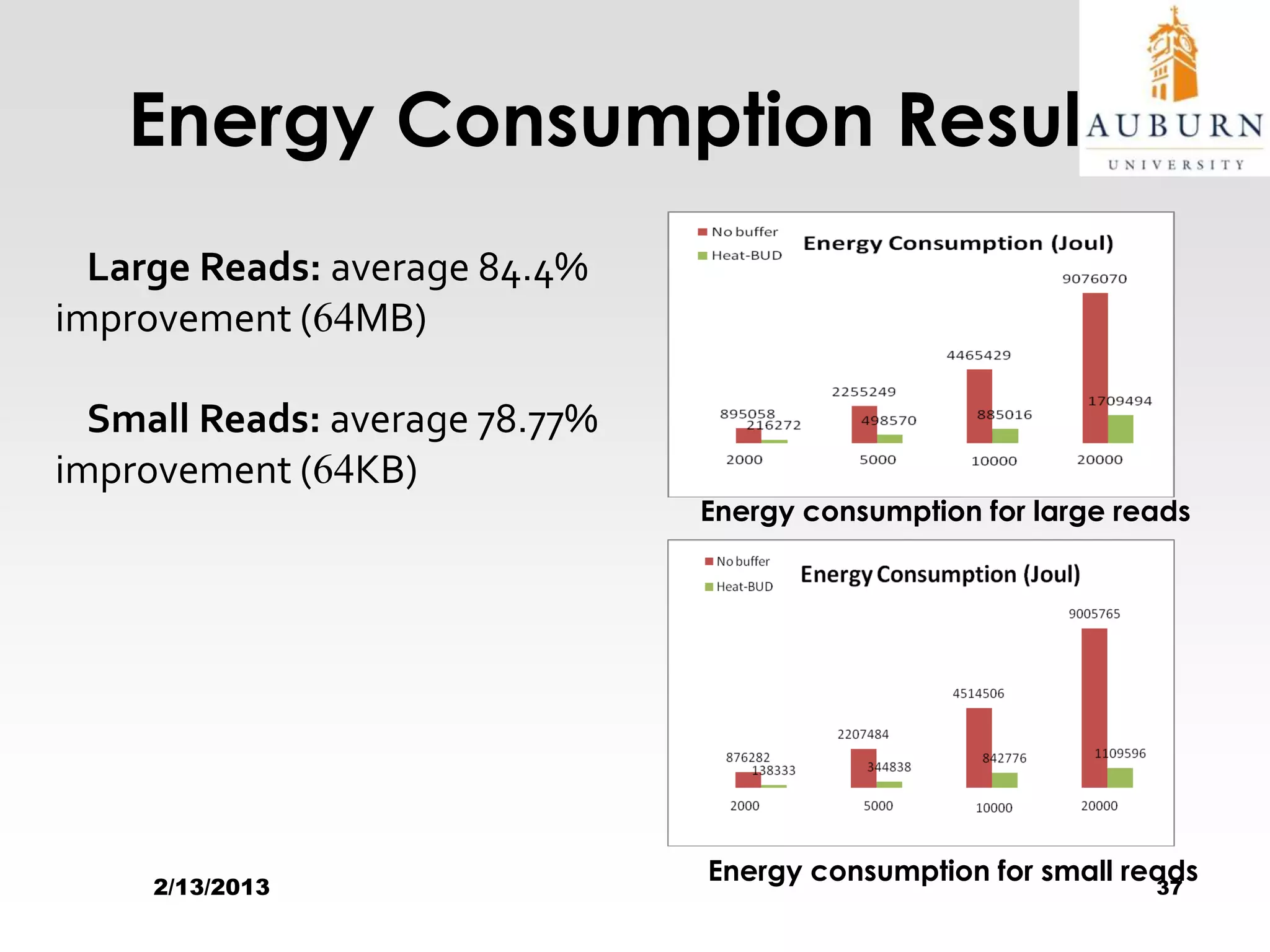

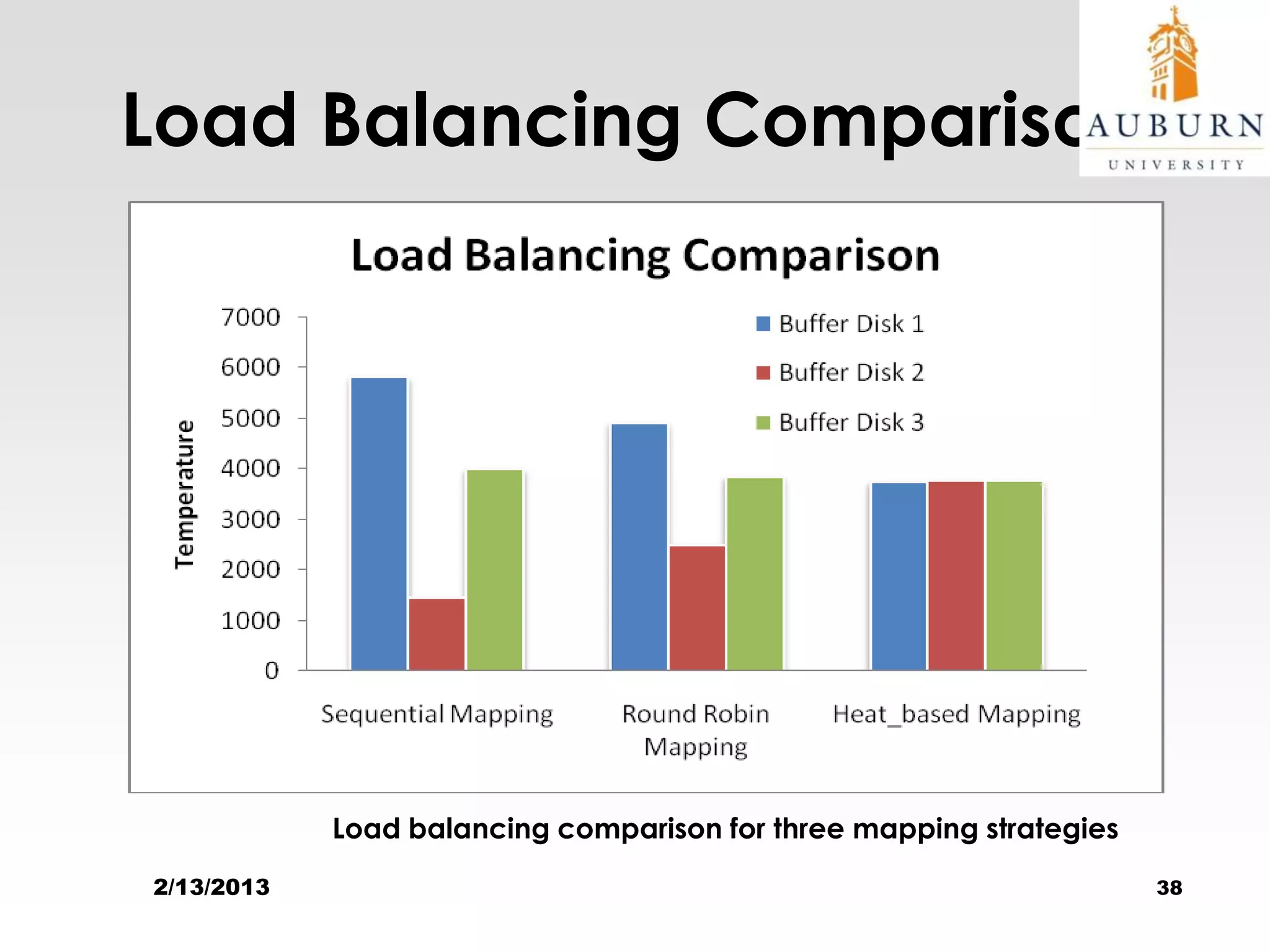

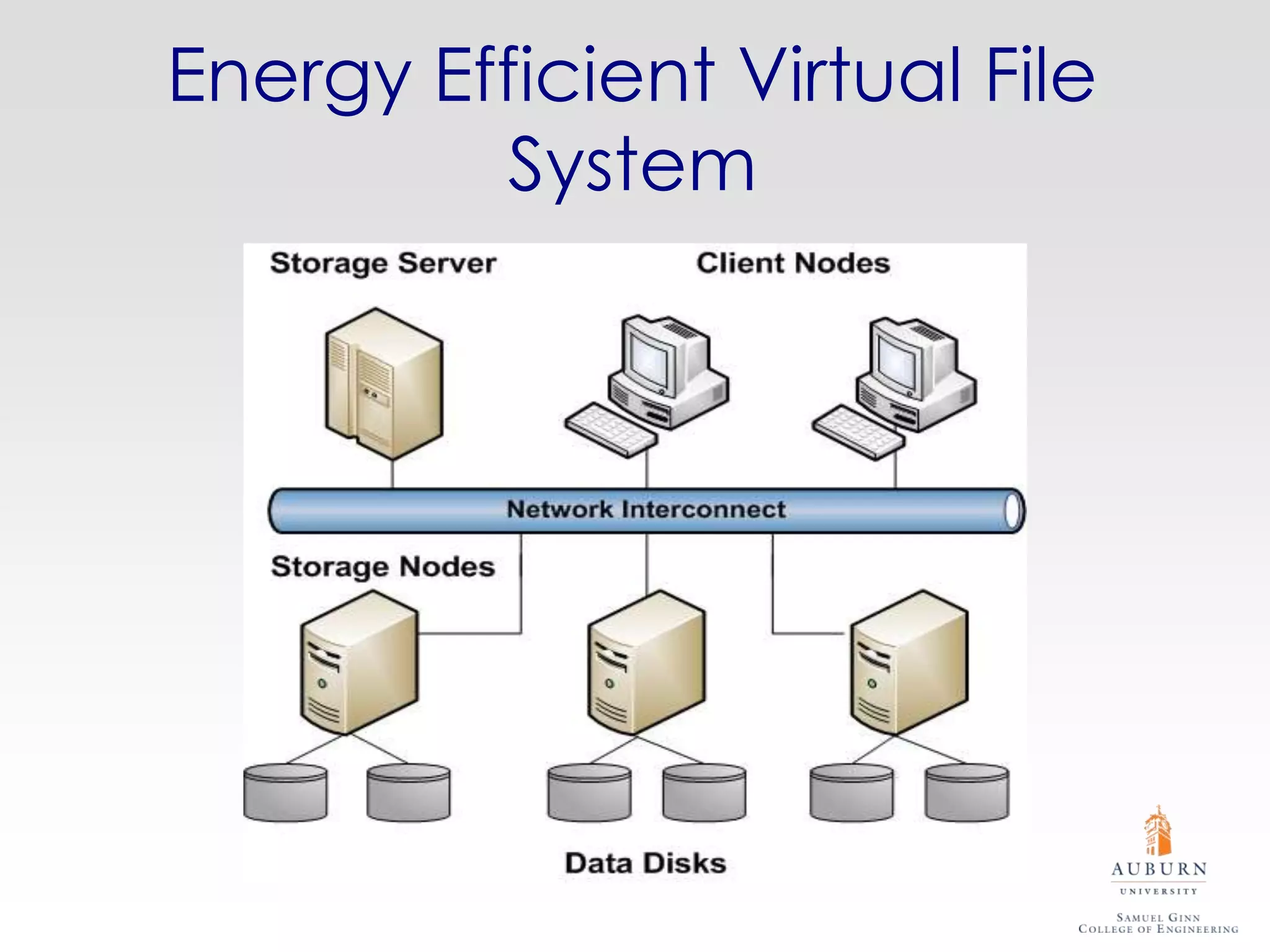

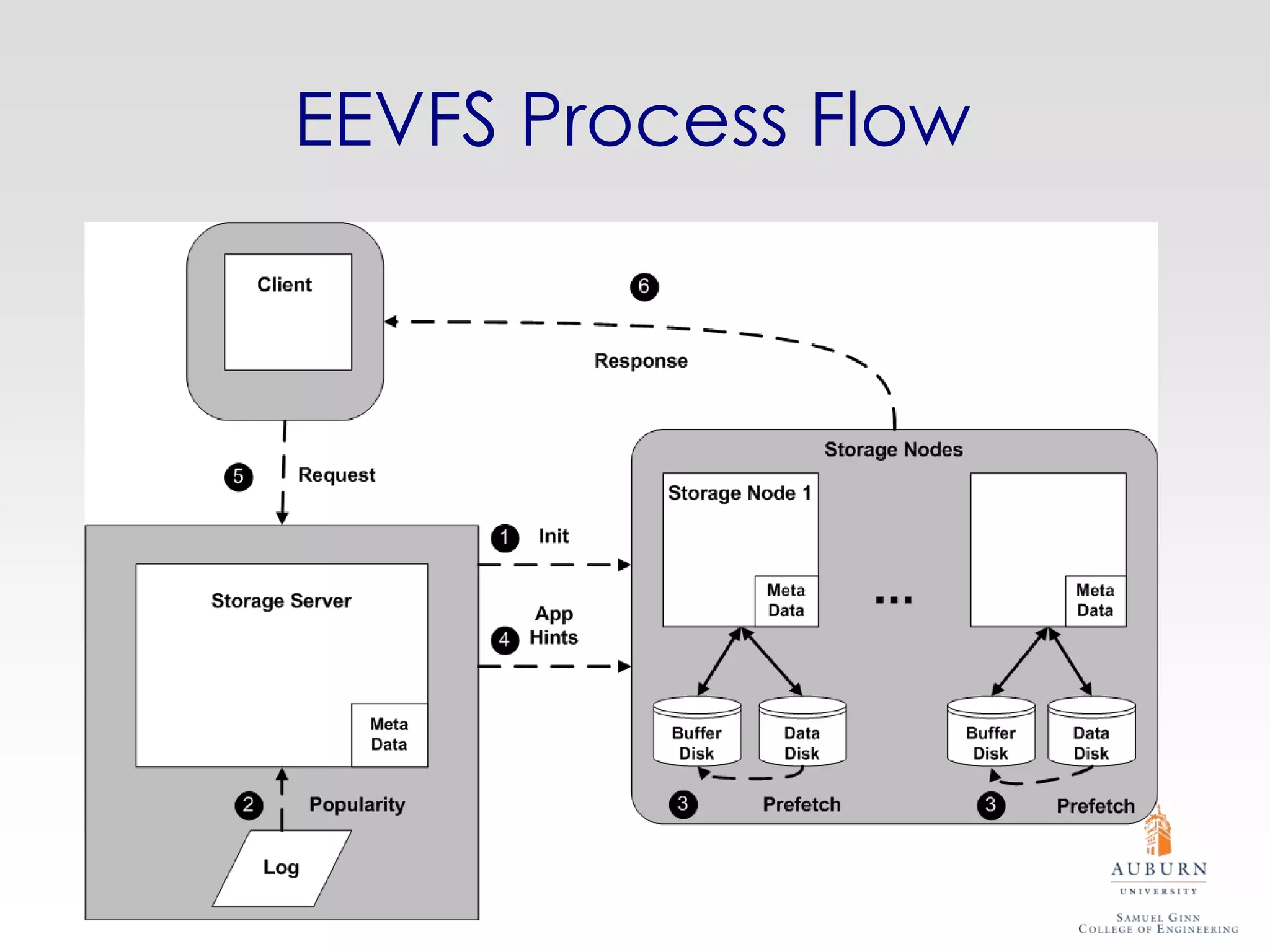

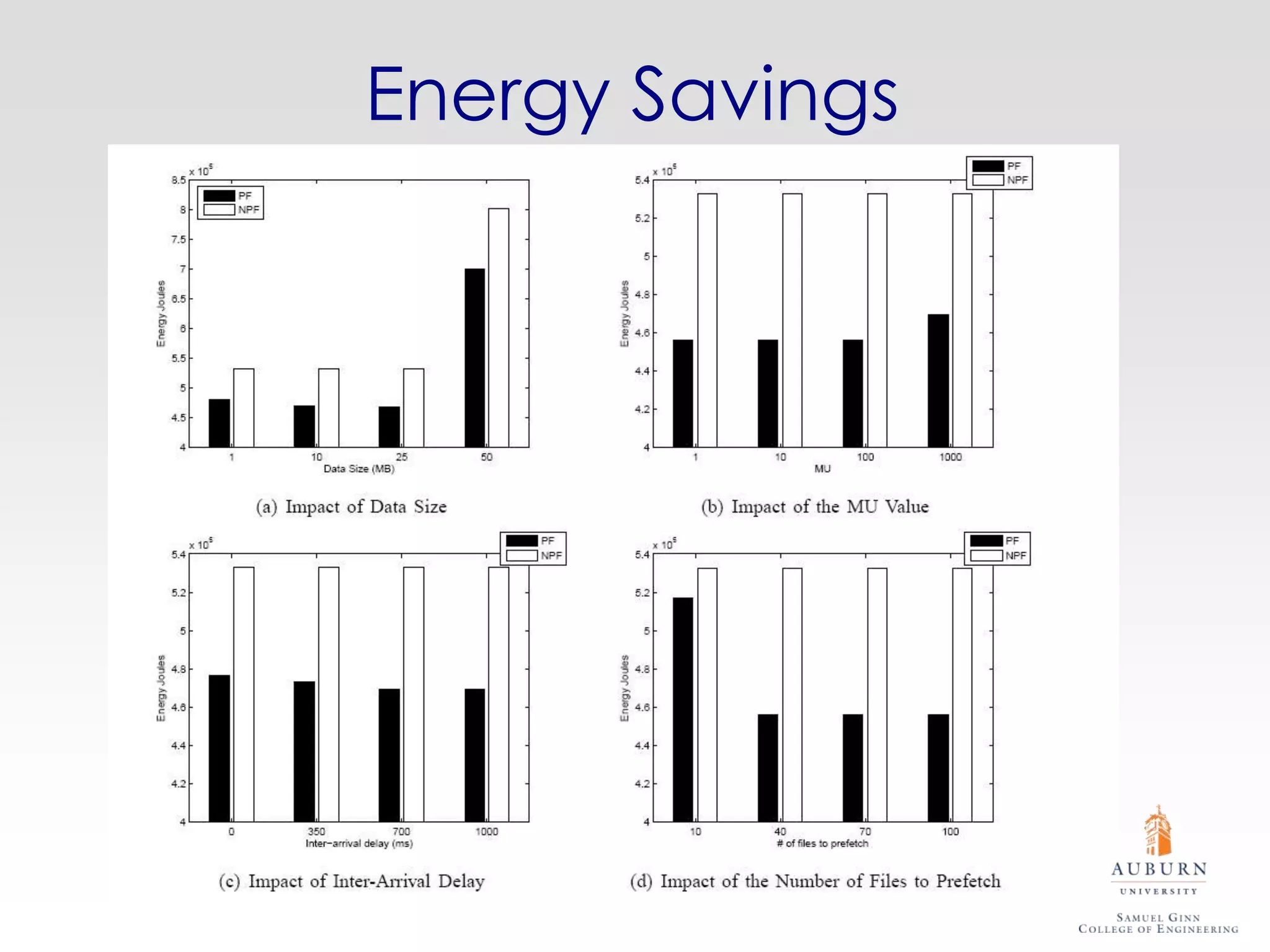

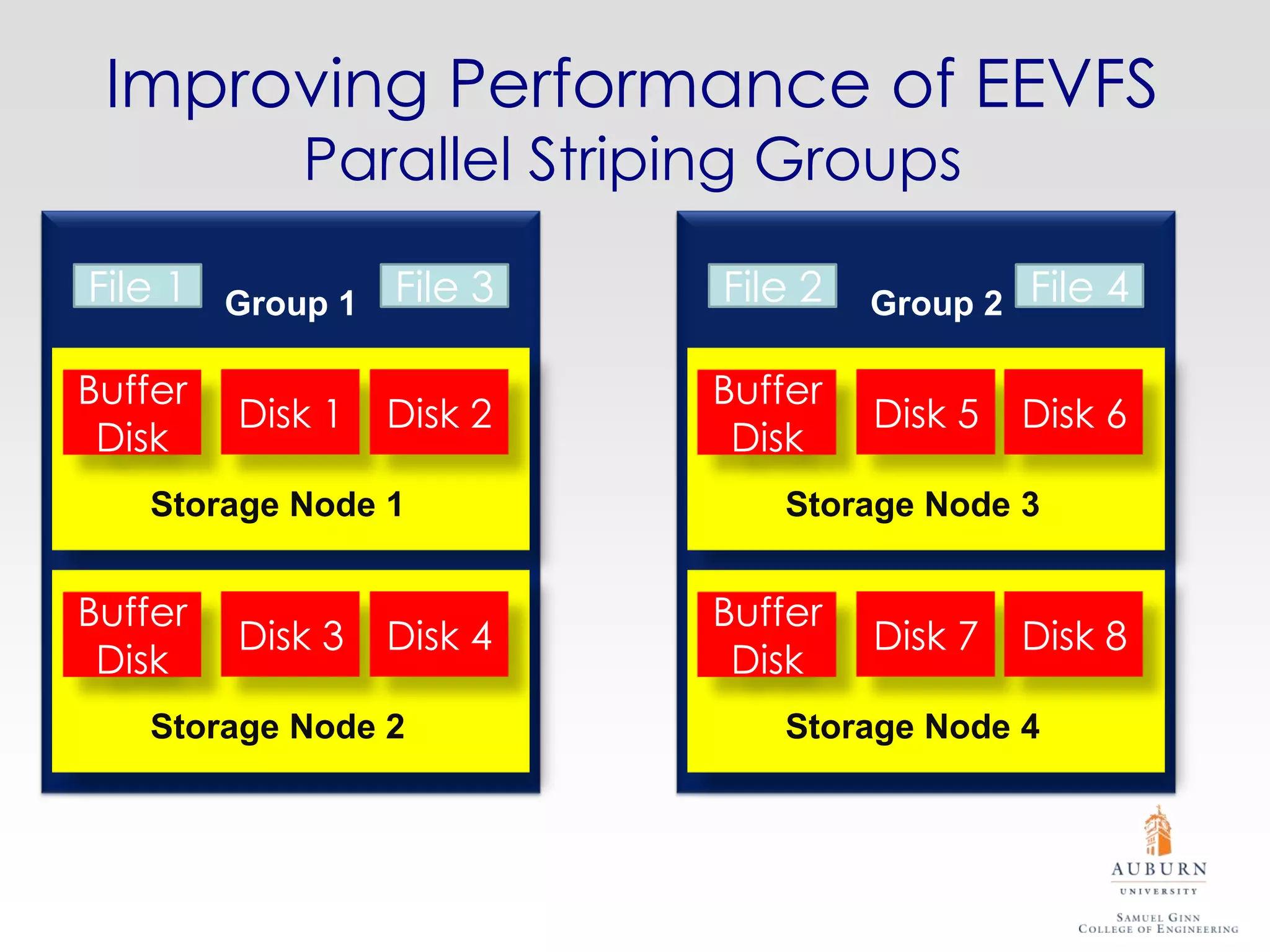

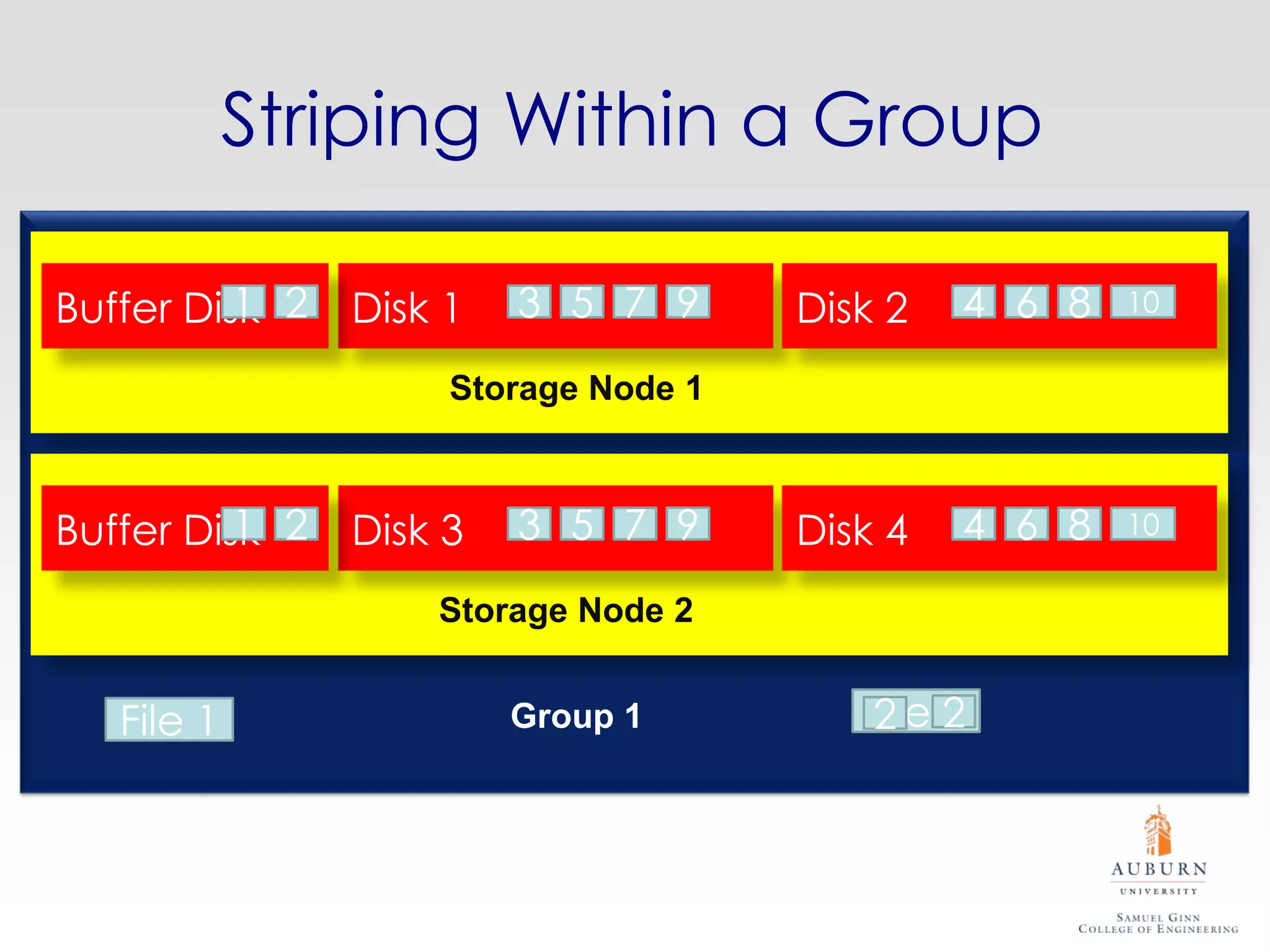

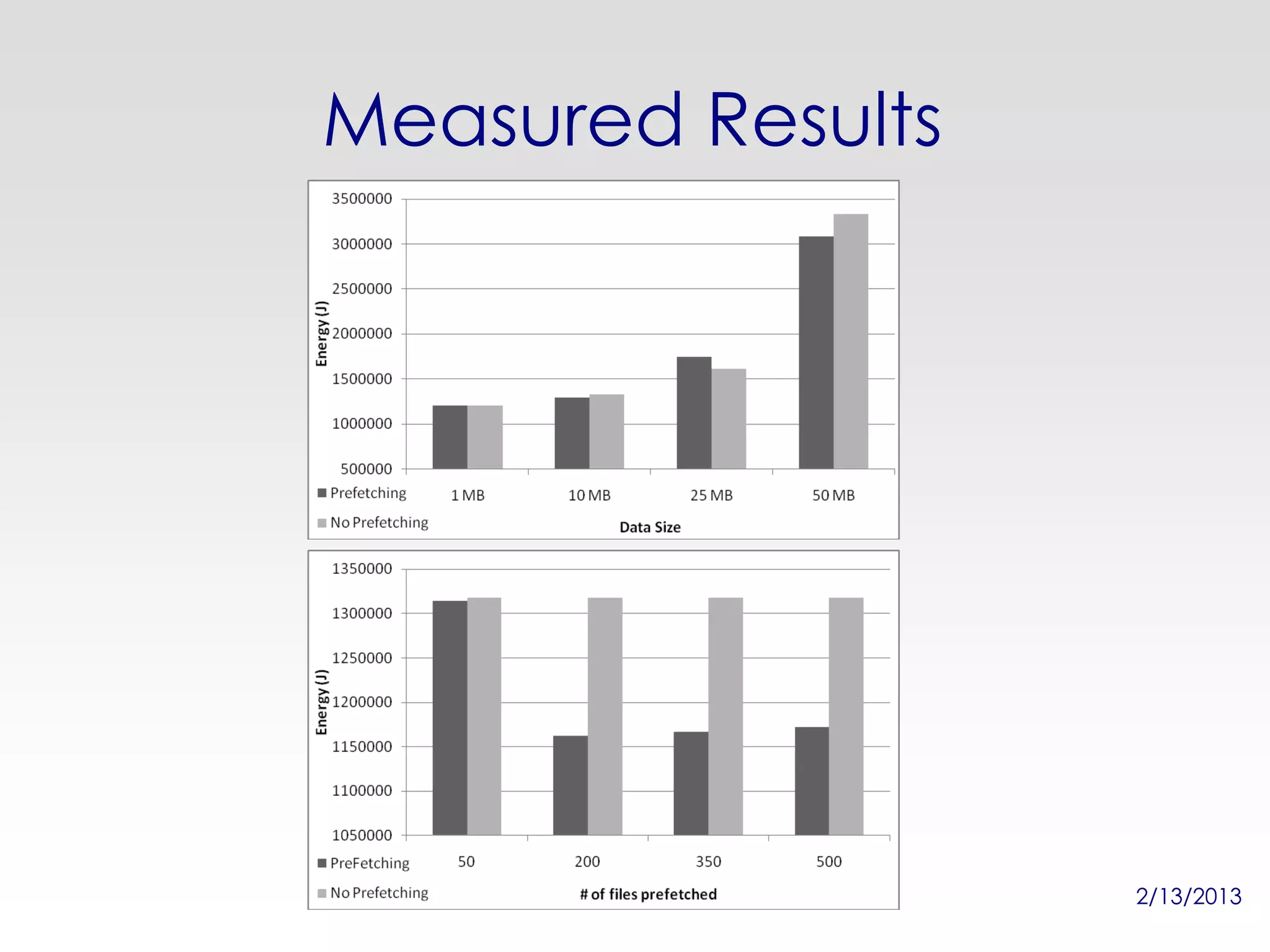

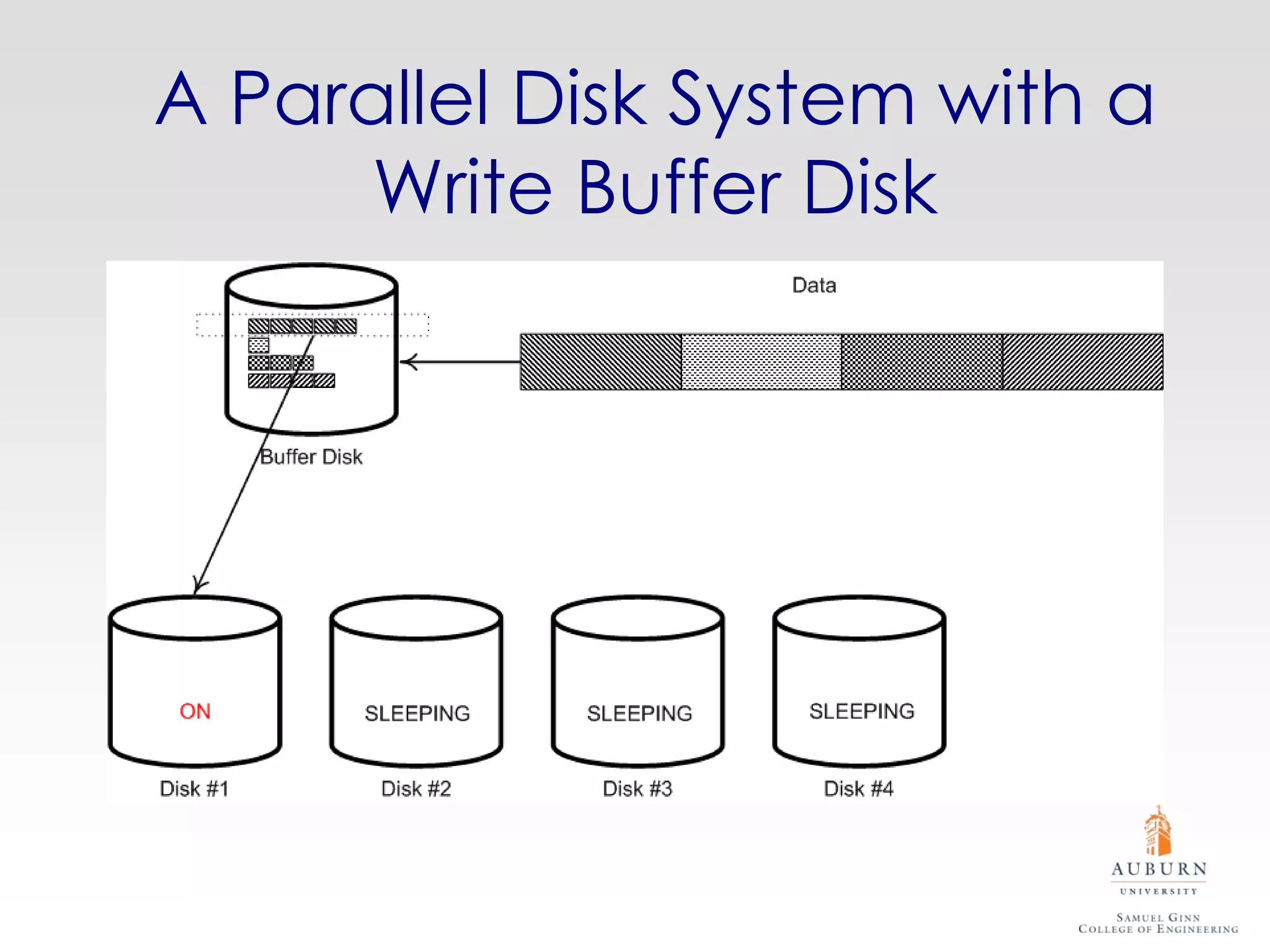

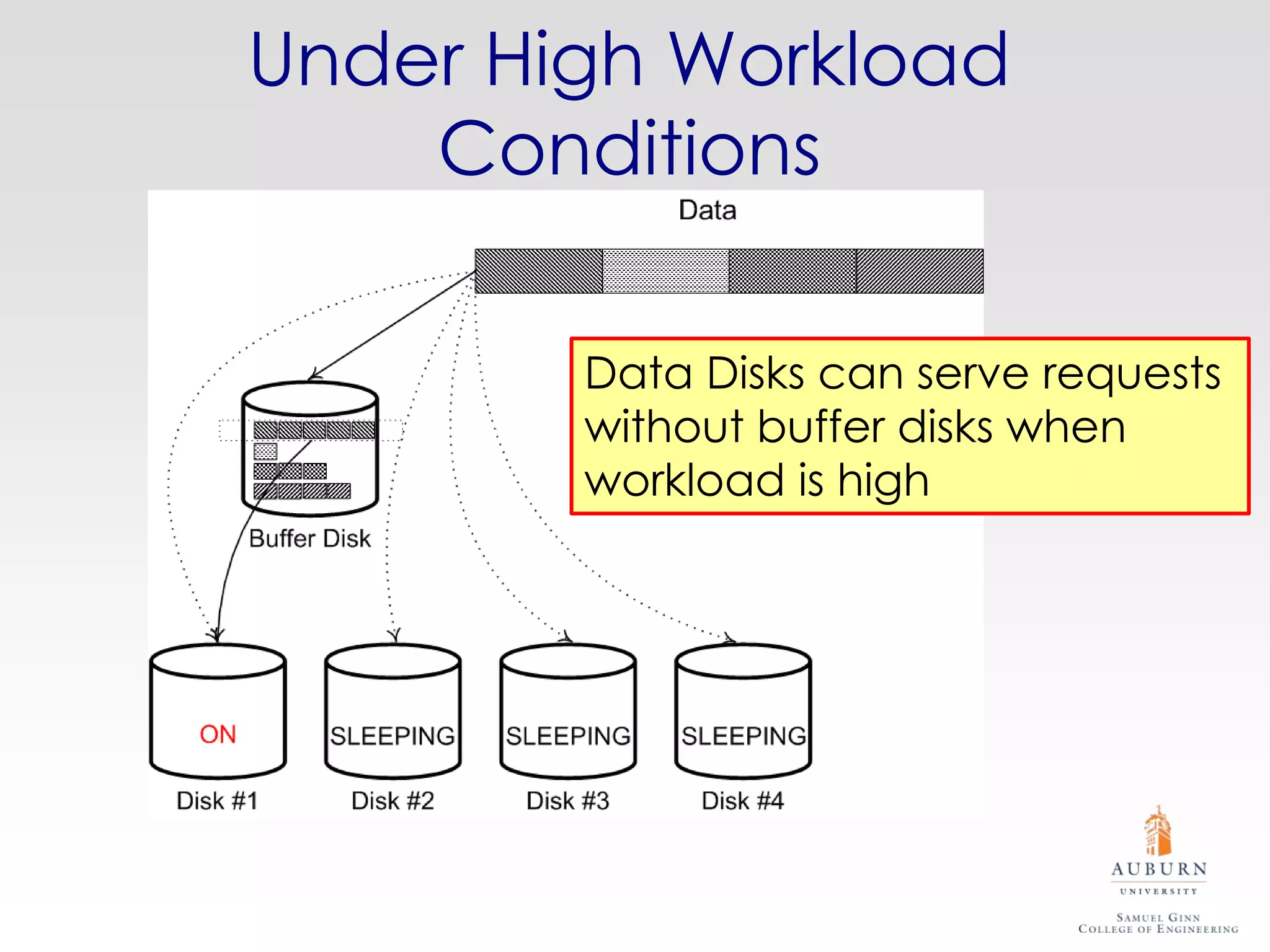

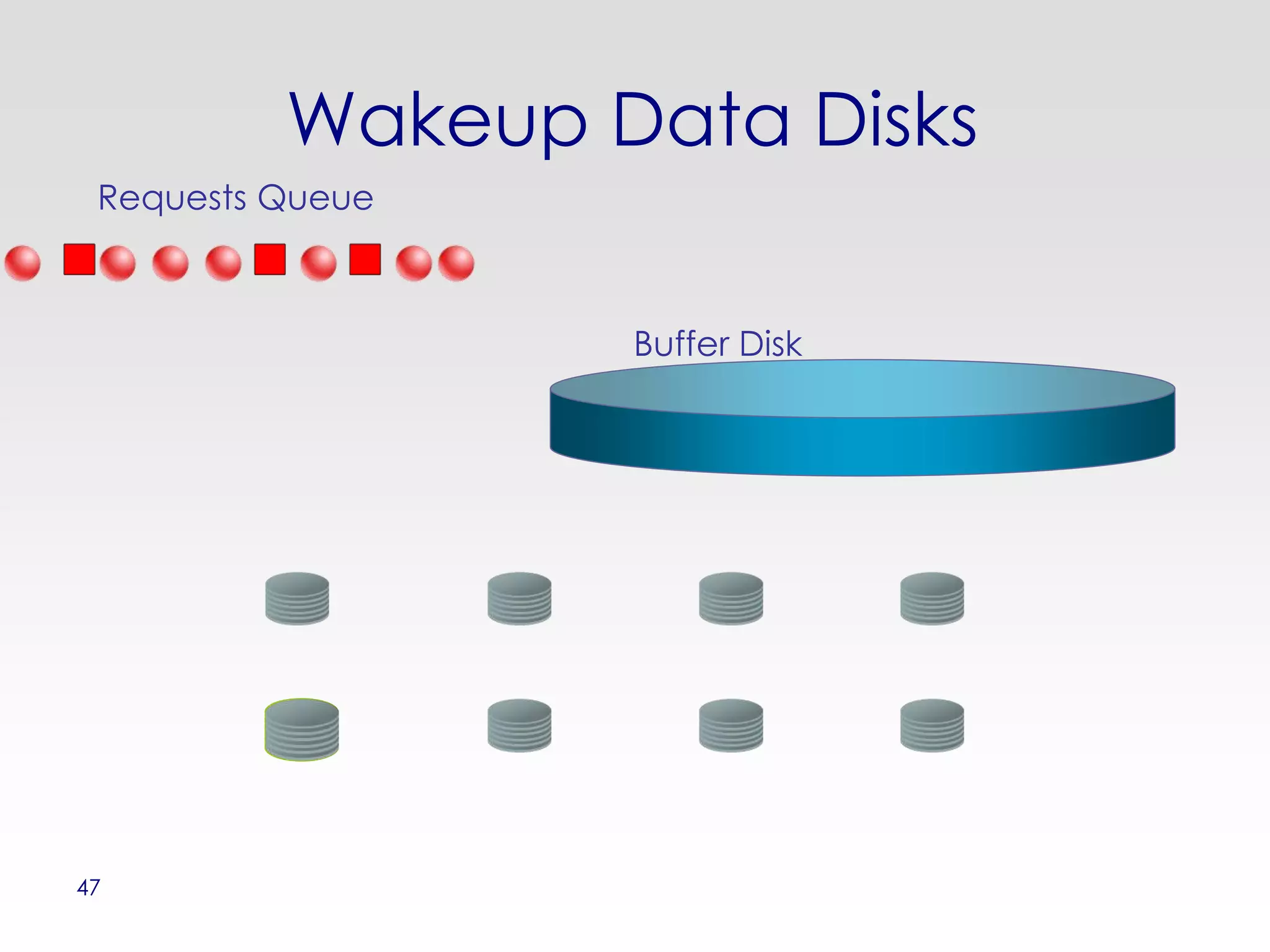

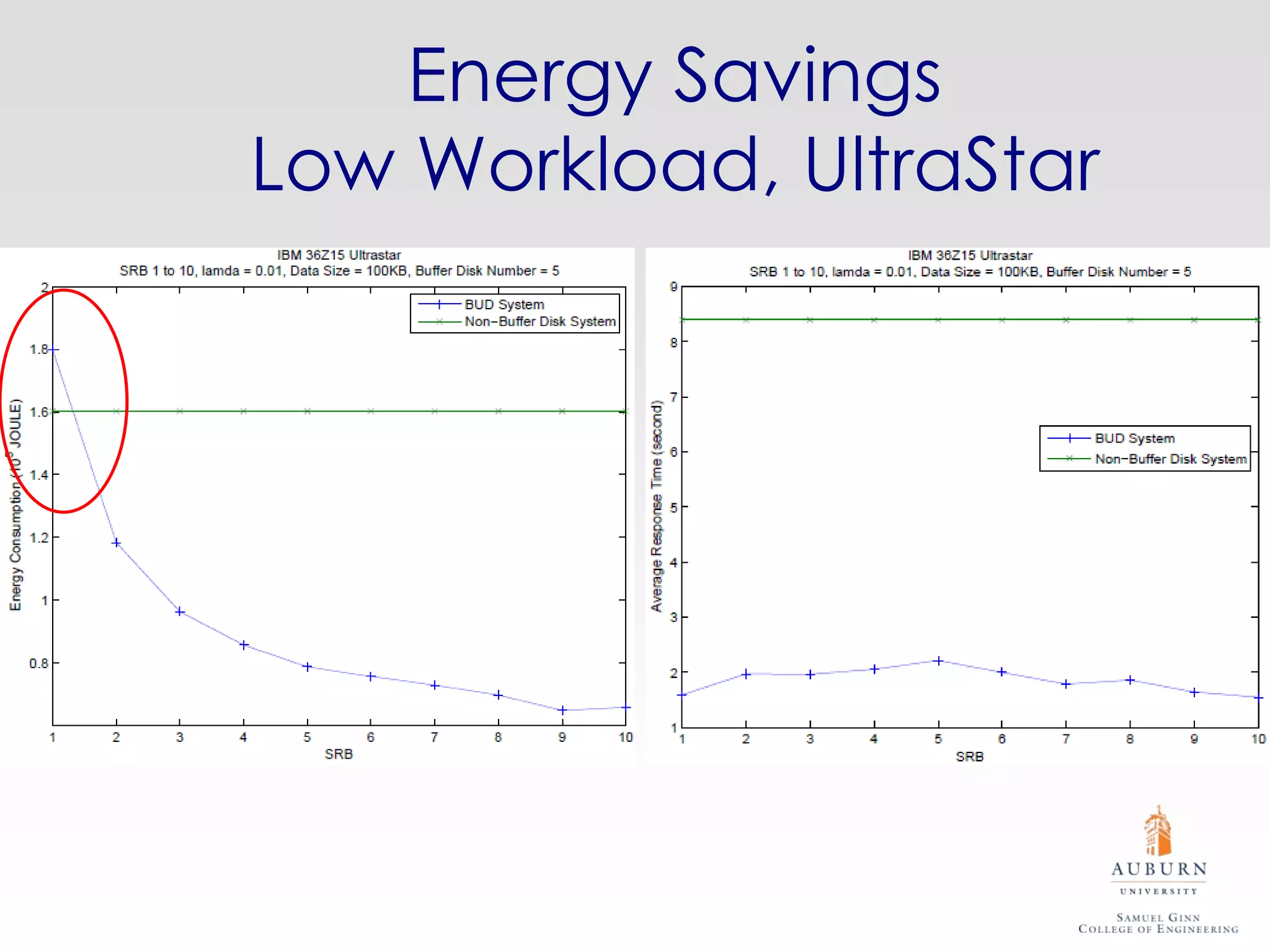

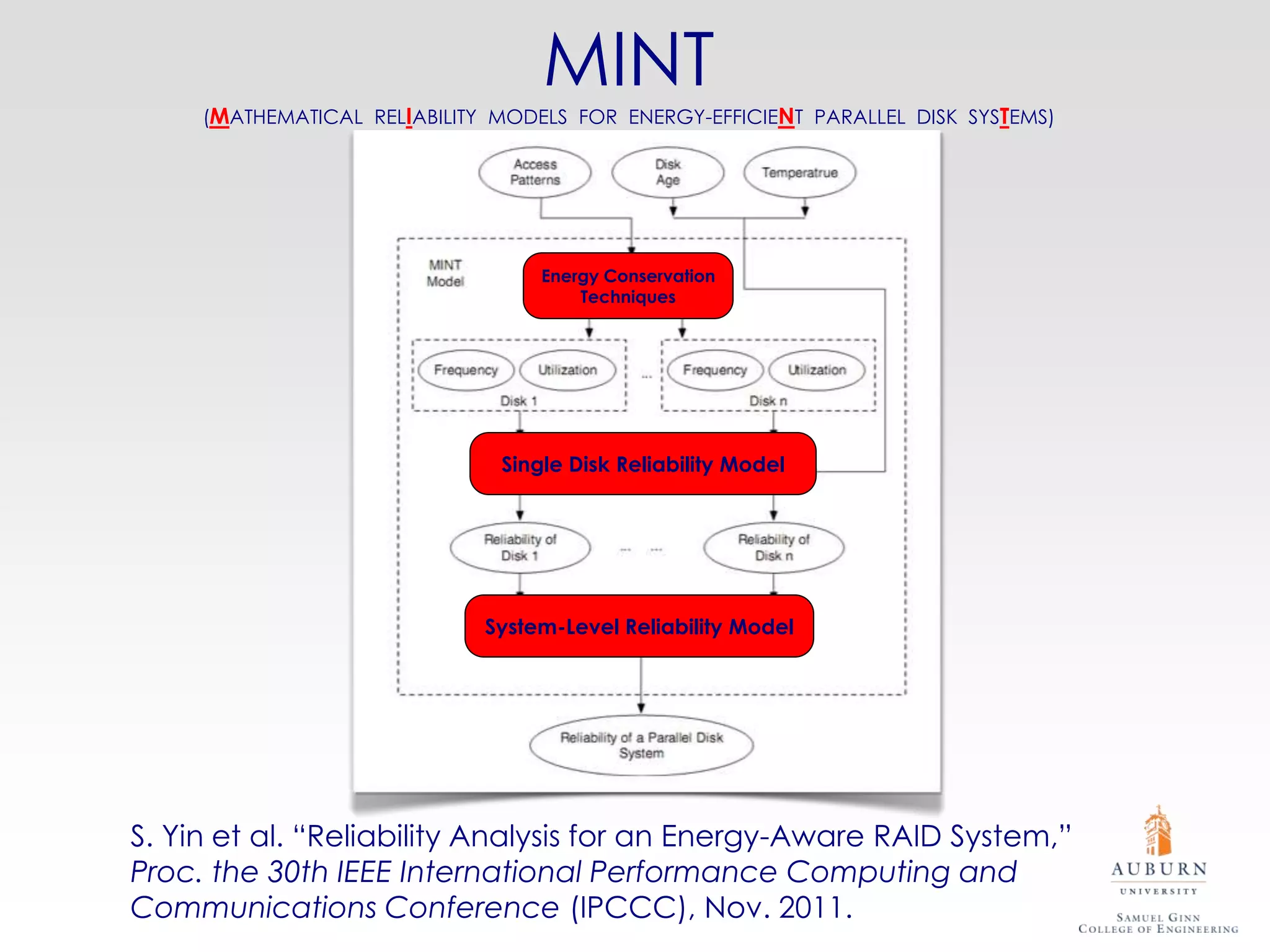

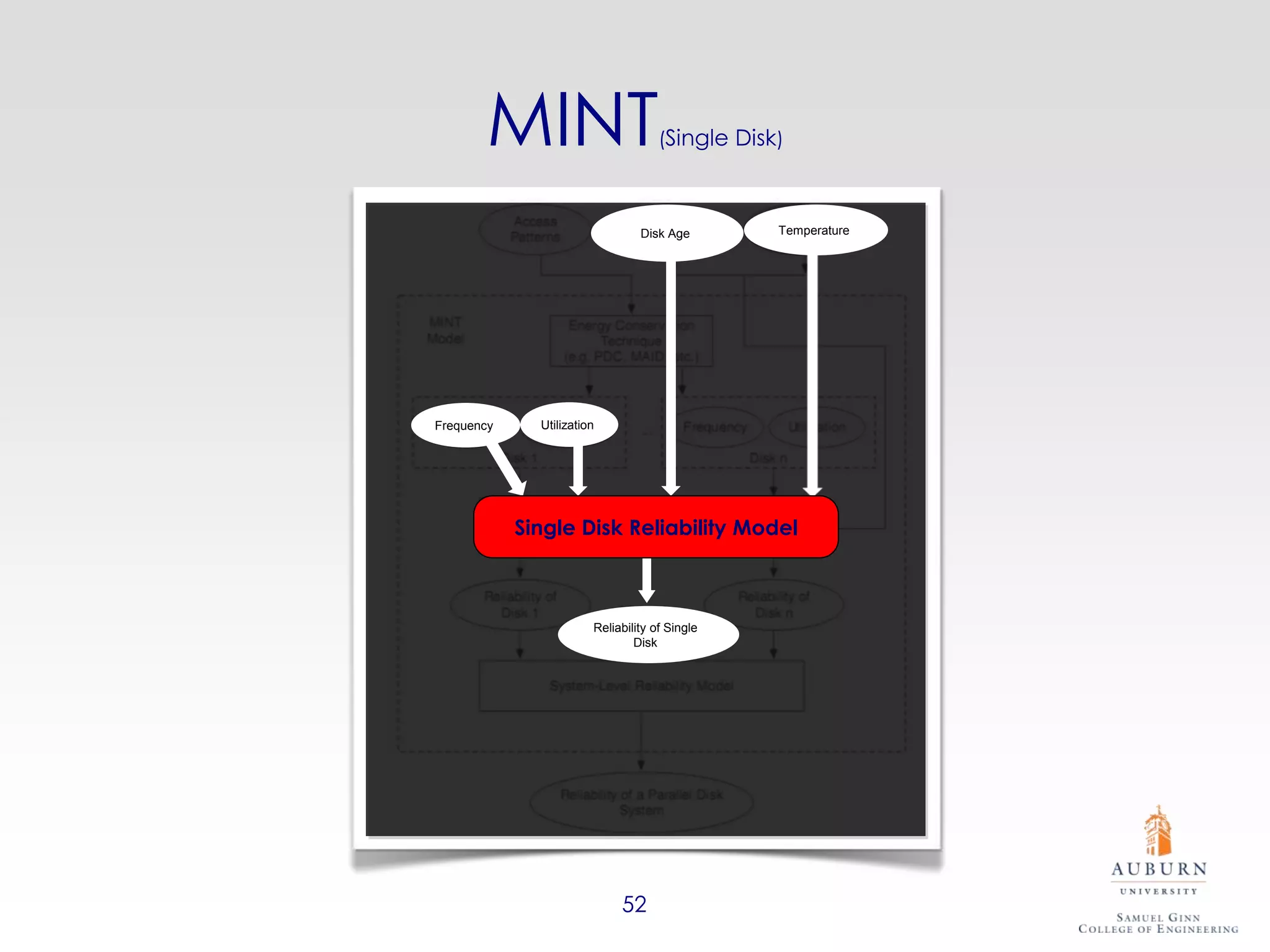

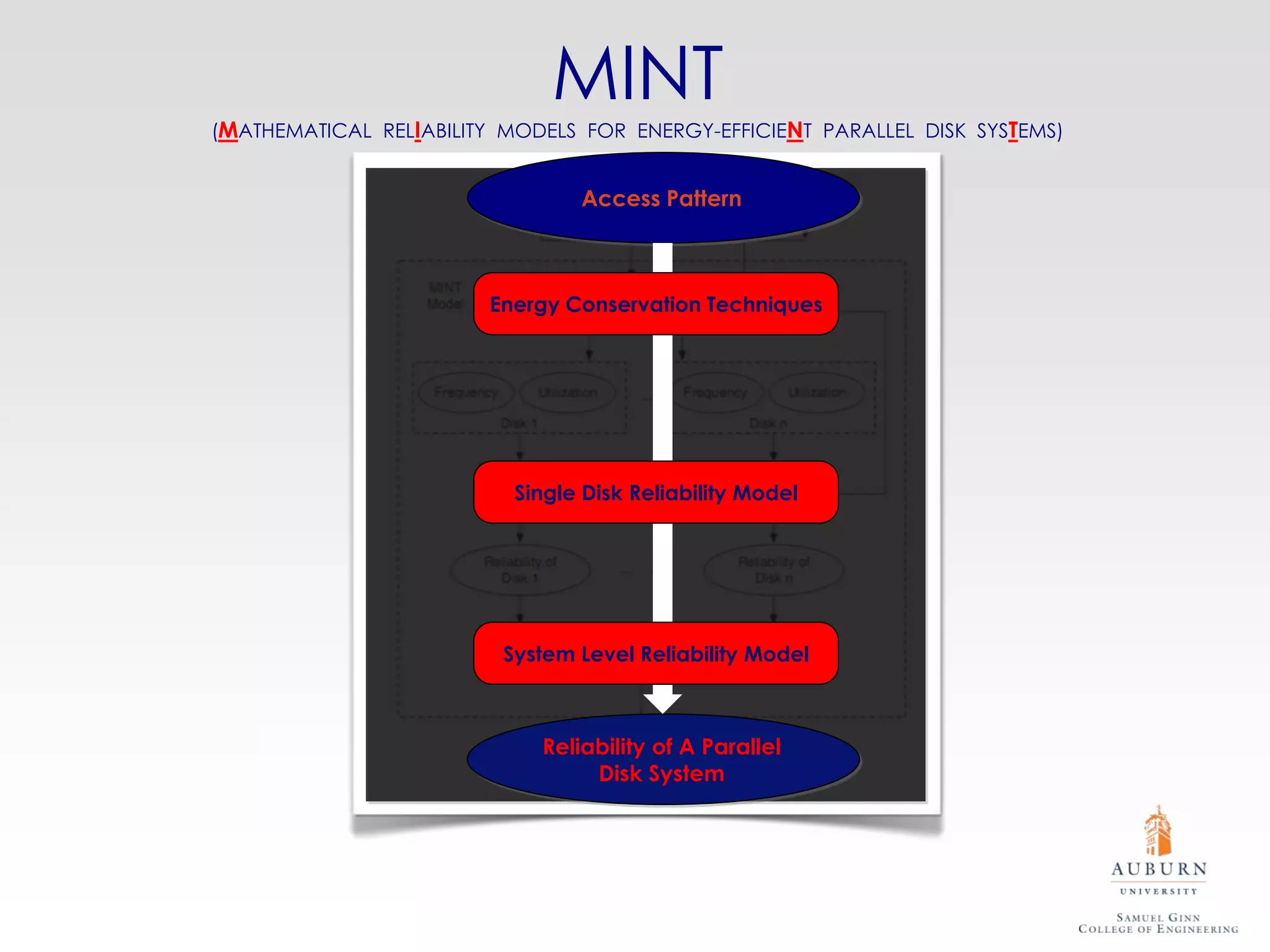

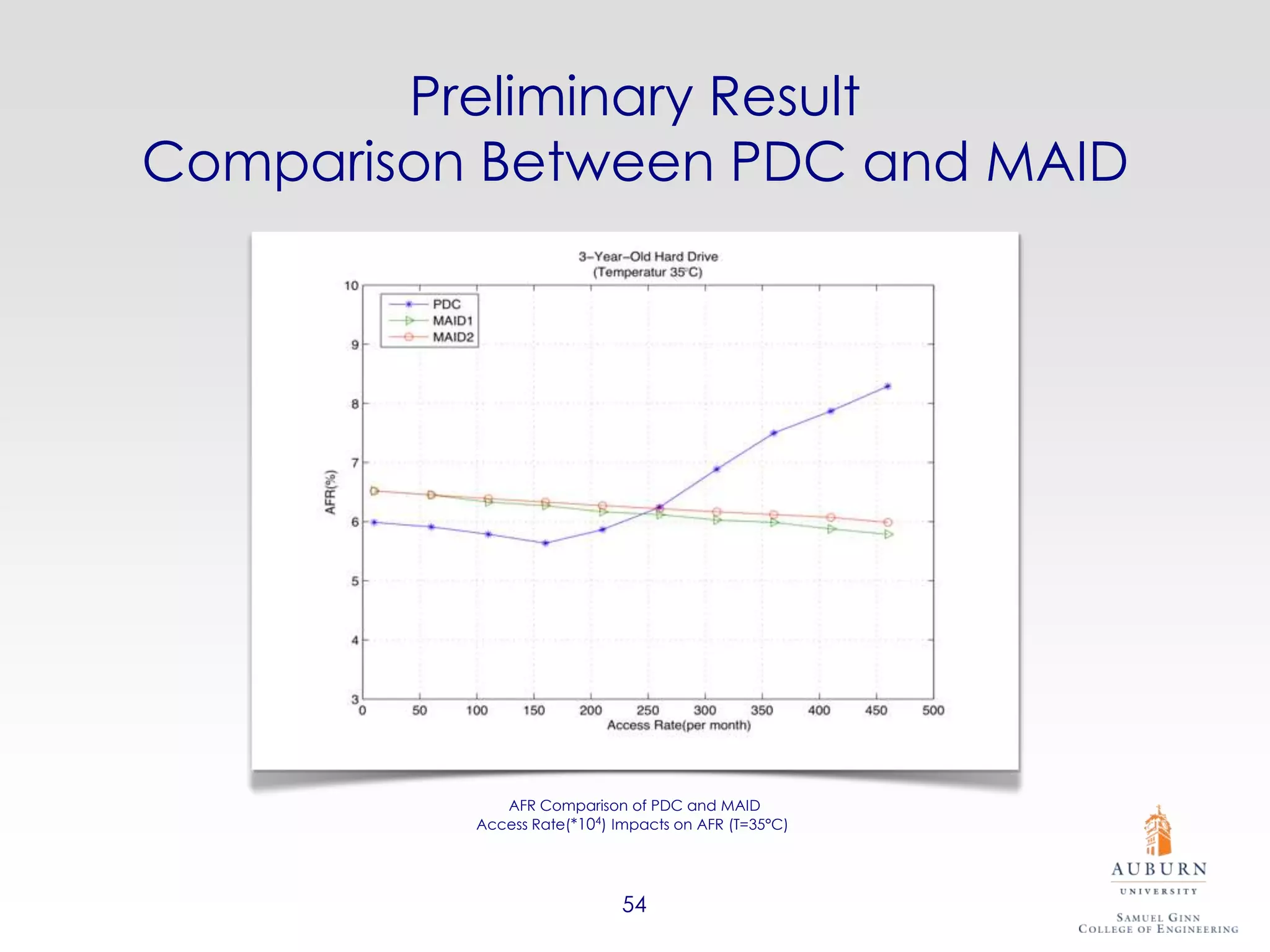

The document discusses energy-efficient data storage systems, focusing on techniques for conserving energy in data centers, including energy-aware scheduling algorithms and buffer-disk architectures. It highlights the significant growth in data center energy consumption projected by 2020 and suggests strategies for improving energy efficiency while maintaining performance and reliability. Key findings include the importance of reducing the number of power-state transitions and lengthening idle periods, since each transition costs energy and time that only a sufficiently long idle period can recoup.
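
To make the finding about power-state transitions and idle periods concrete, here is a minimal sketch of the standard break-even calculation for spinning a disk down. It is not taken from the slides: the `DiskPowerModel` class, its field names, and the wattage and energy figures are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskPowerModel:
    """Hypothetical disk power parameters (illustrative, not from the source)."""
    idle_watts: float          # power drawn while spinning but idle
    standby_watts: float       # power drawn while spun down
    transition_joules: float   # energy for one spin-down plus one spin-up
    transition_seconds: float  # time for one spin-down plus one spin-up


def break_even_seconds(disk: DiskPowerModel) -> float:
    """Shortest idle period for which spinning down costs no more than staying idle."""
    saved_per_second = disk.idle_watts - disk.standby_watts
    if saved_per_second <= 0:
        raise ValueError("standby must draw less power than idle")
    # Staying idle for T seconds costs idle_watts * T.
    # Spinning down costs transition_joules + standby_watts * (T - transition_seconds).
    # Equating the two and solving for T gives the break-even time.
    t = (disk.transition_joules
         - disk.standby_watts * disk.transition_seconds) / saved_per_second
    # The idle period must at least cover the transition itself.
    return max(t, disk.transition_seconds)


def energy_saved(disk: DiskPowerModel, idle_periods: list[float]) -> float:
    """Joules saved by spinning down only in idle periods at or above break-even."""
    threshold = break_even_seconds(disk)
    saved = 0.0
    for period in idle_periods:
        if period >= threshold:
            stay_idle = disk.idle_watts * period
            spin_down = (disk.transition_joules
                         + disk.standby_watts * (period - disk.transition_seconds))
            saved += stay_idle - spin_down
    return saved


# Assumed example values: 8 W idle, 1 W standby, 147 J and 7 s per transition pair.
disk = DiskPowerModel(idle_watts=8.0, standby_watts=1.0,
                      transition_joules=147.0, transition_seconds=7.0)
fragmented = energy_saved(disk, [10.0] * 6)   # 60 s of idle time in six short gaps
consolidated = energy_saved(disk, [60.0])     # the same 60 s as one long gap
```

Under these assumed numbers the break-even time is 20 seconds, so six 10-second gaps save nothing while a single 60-second gap saves 280 J. This is why approaches that gather idle time into longer stretches, such as absorbing requests on a buffer disk, can save energy even when the total idle time is unchanged.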