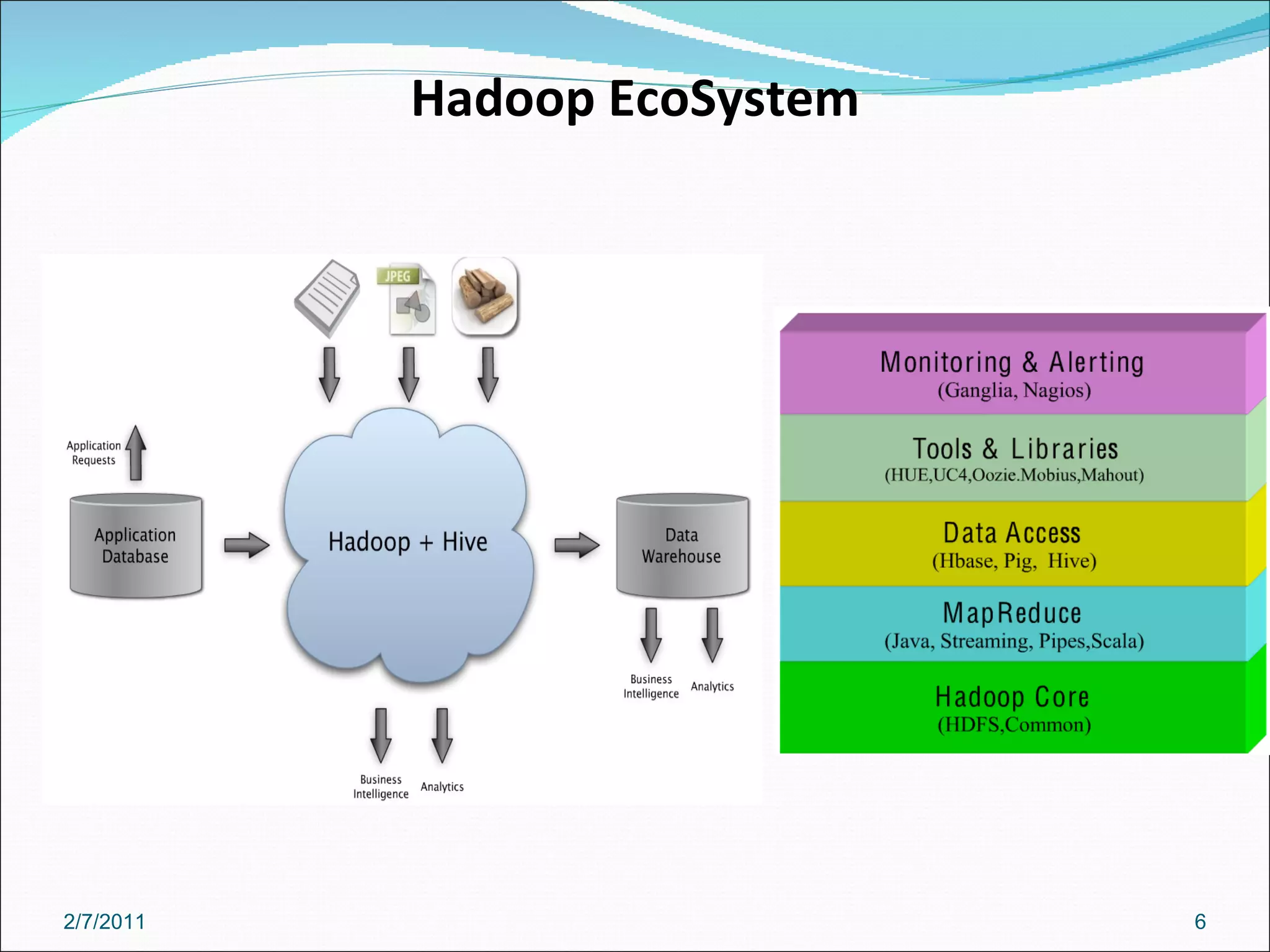

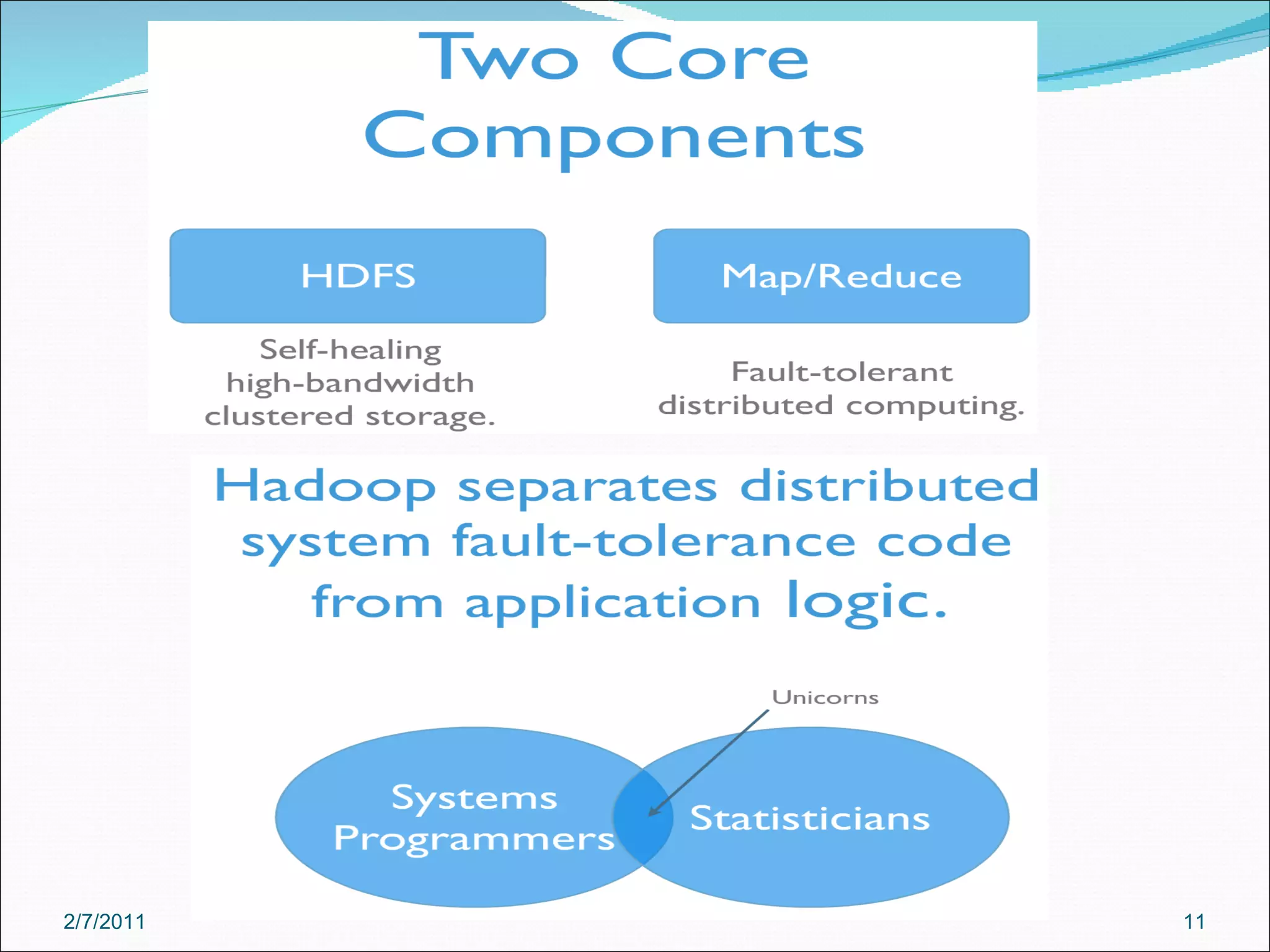

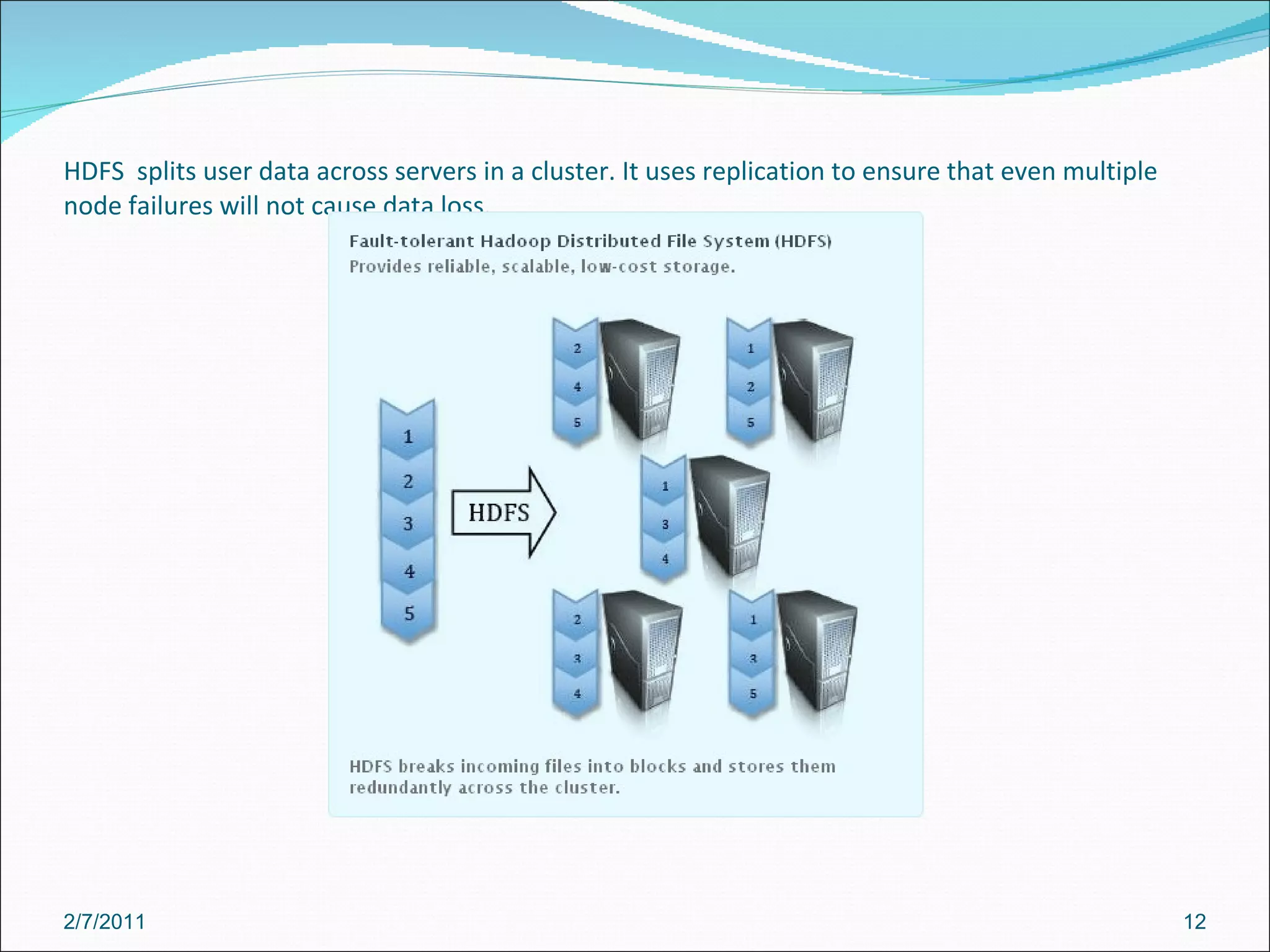

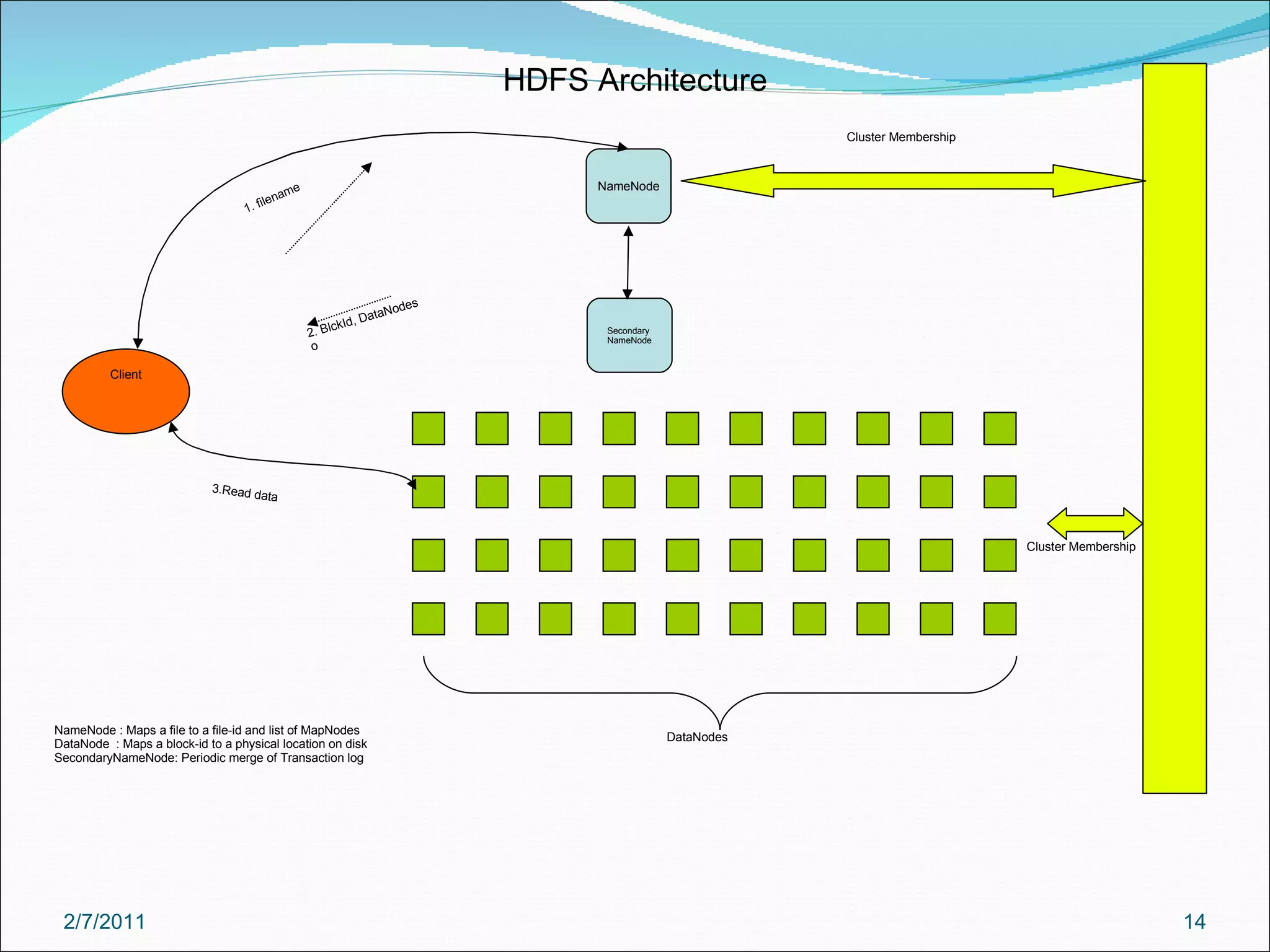

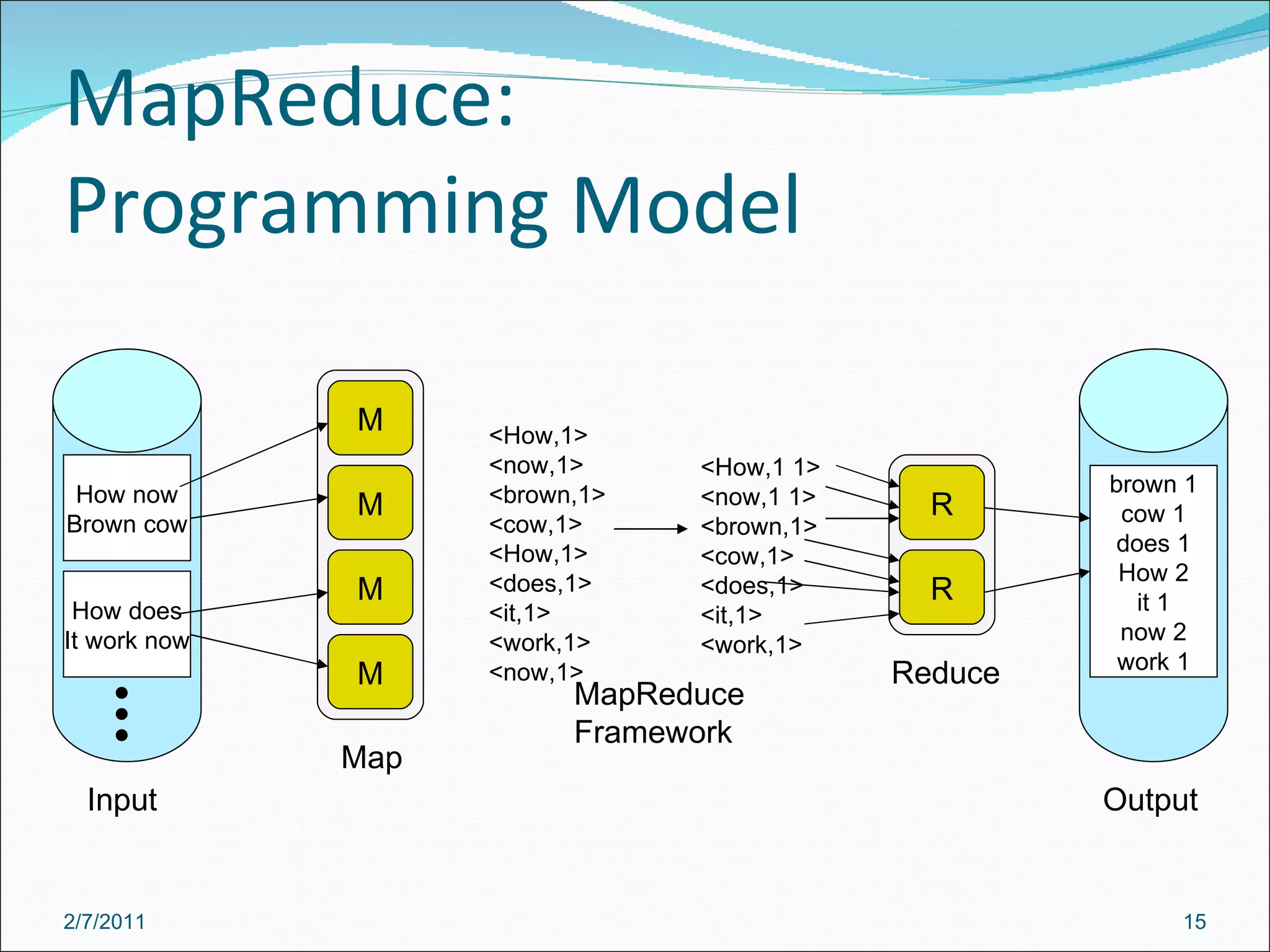

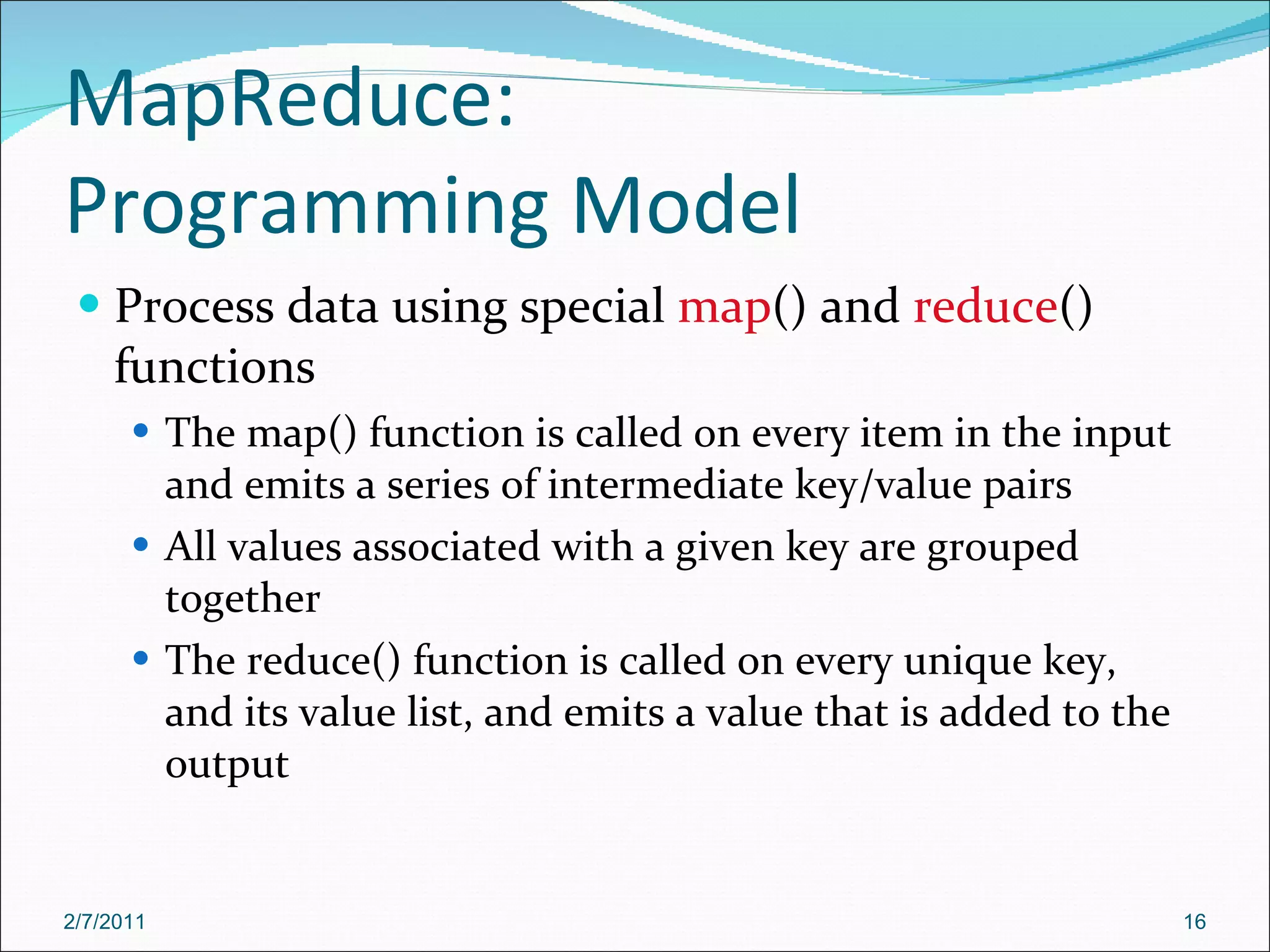

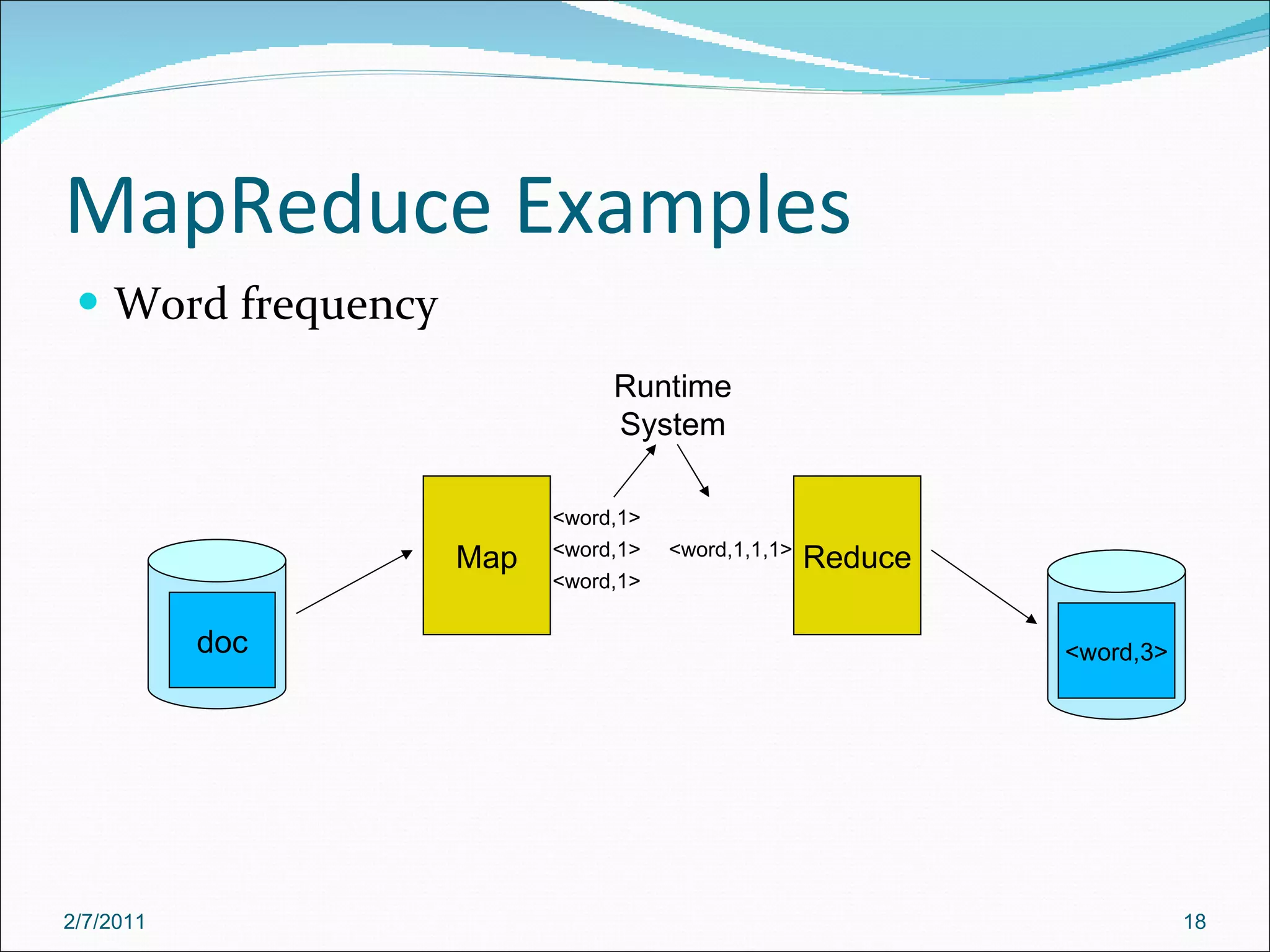

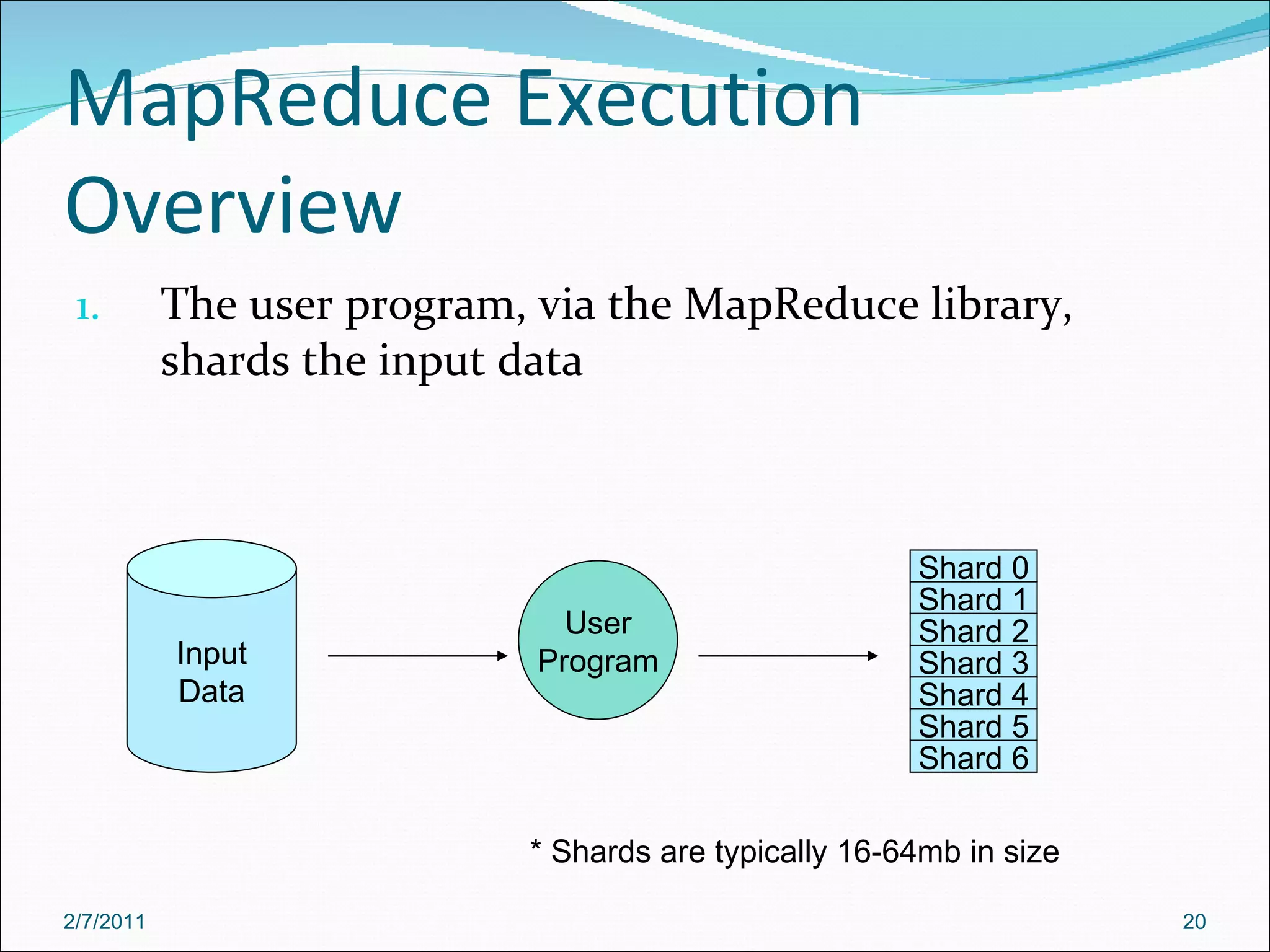

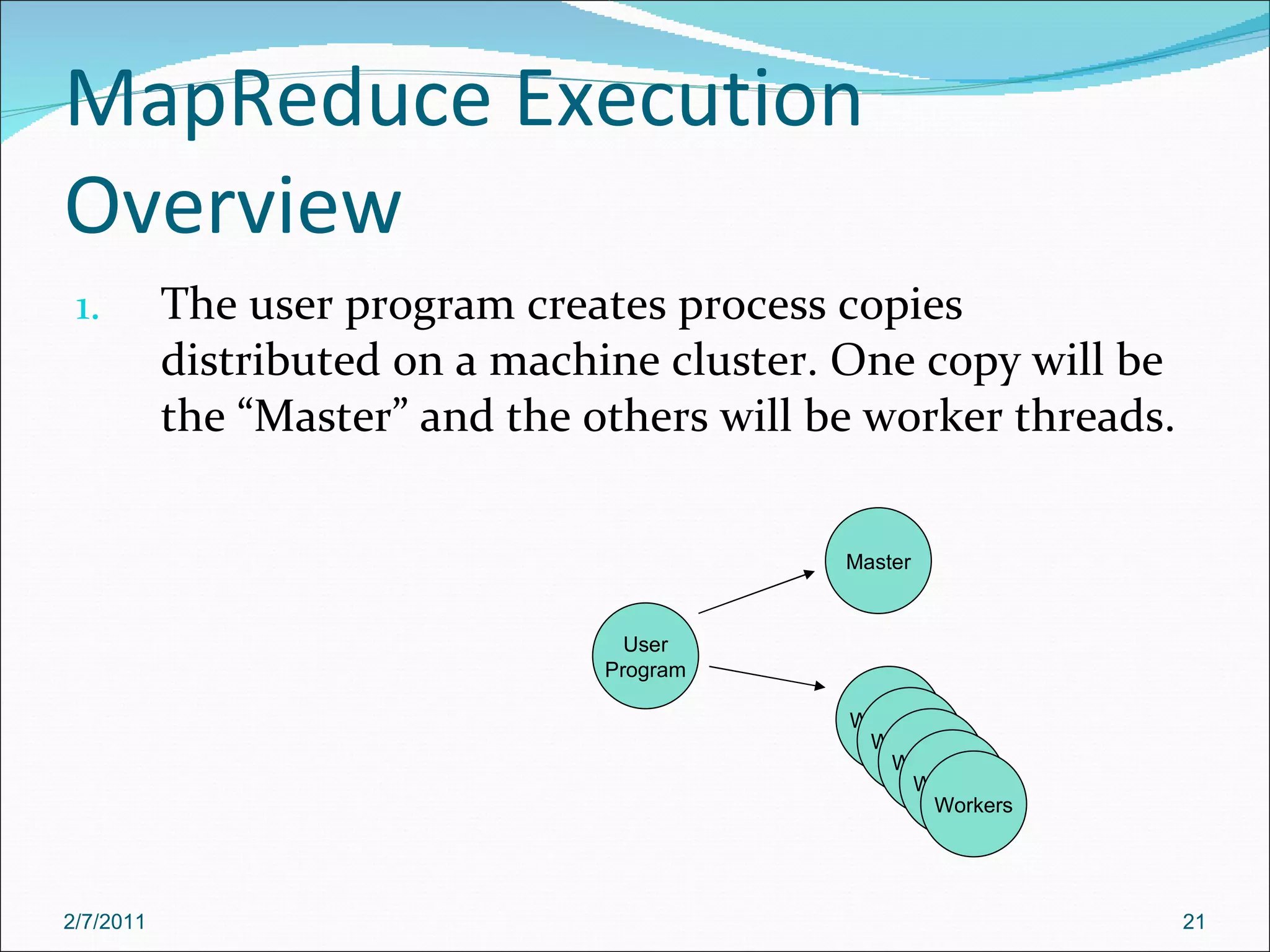

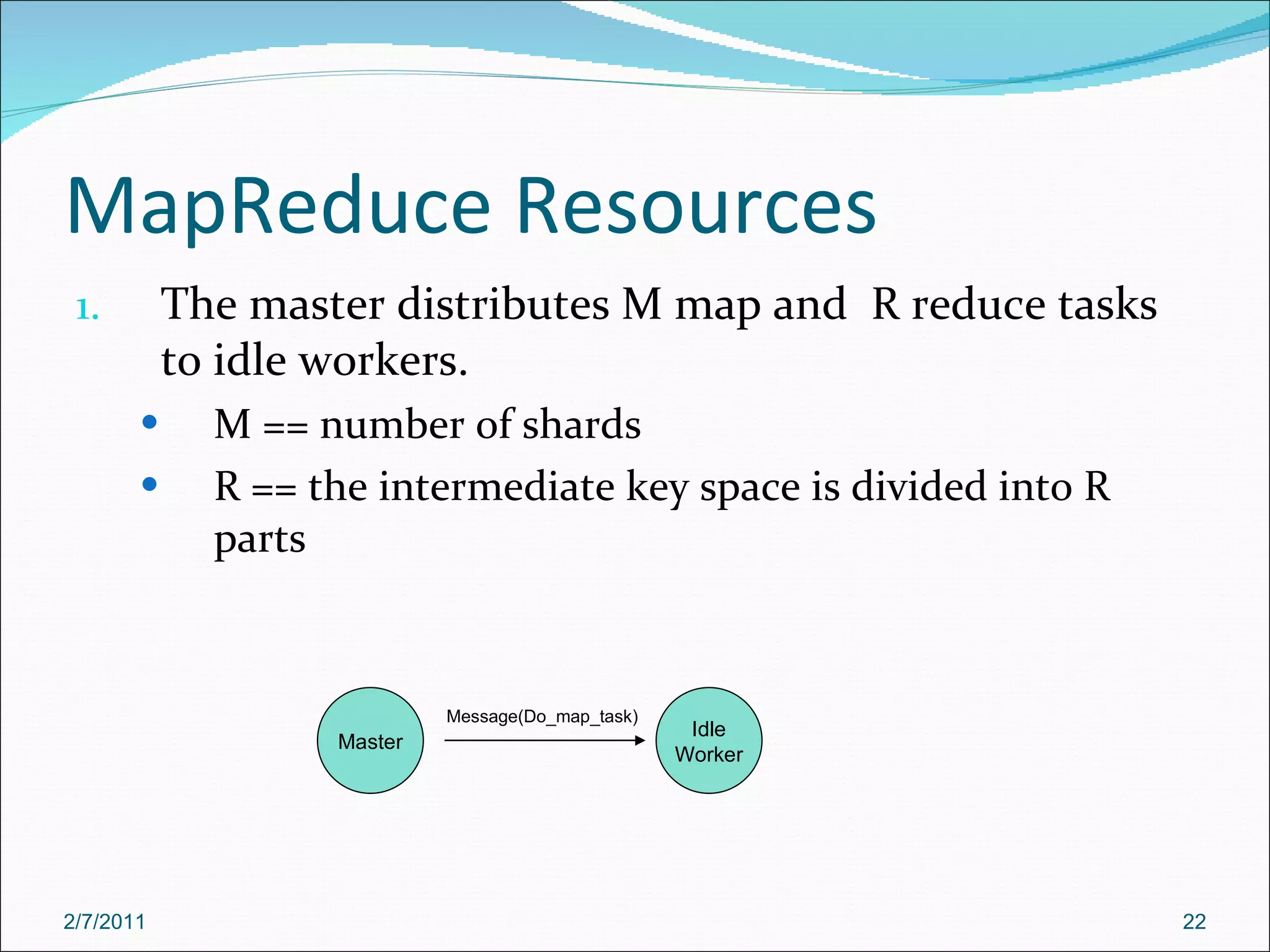

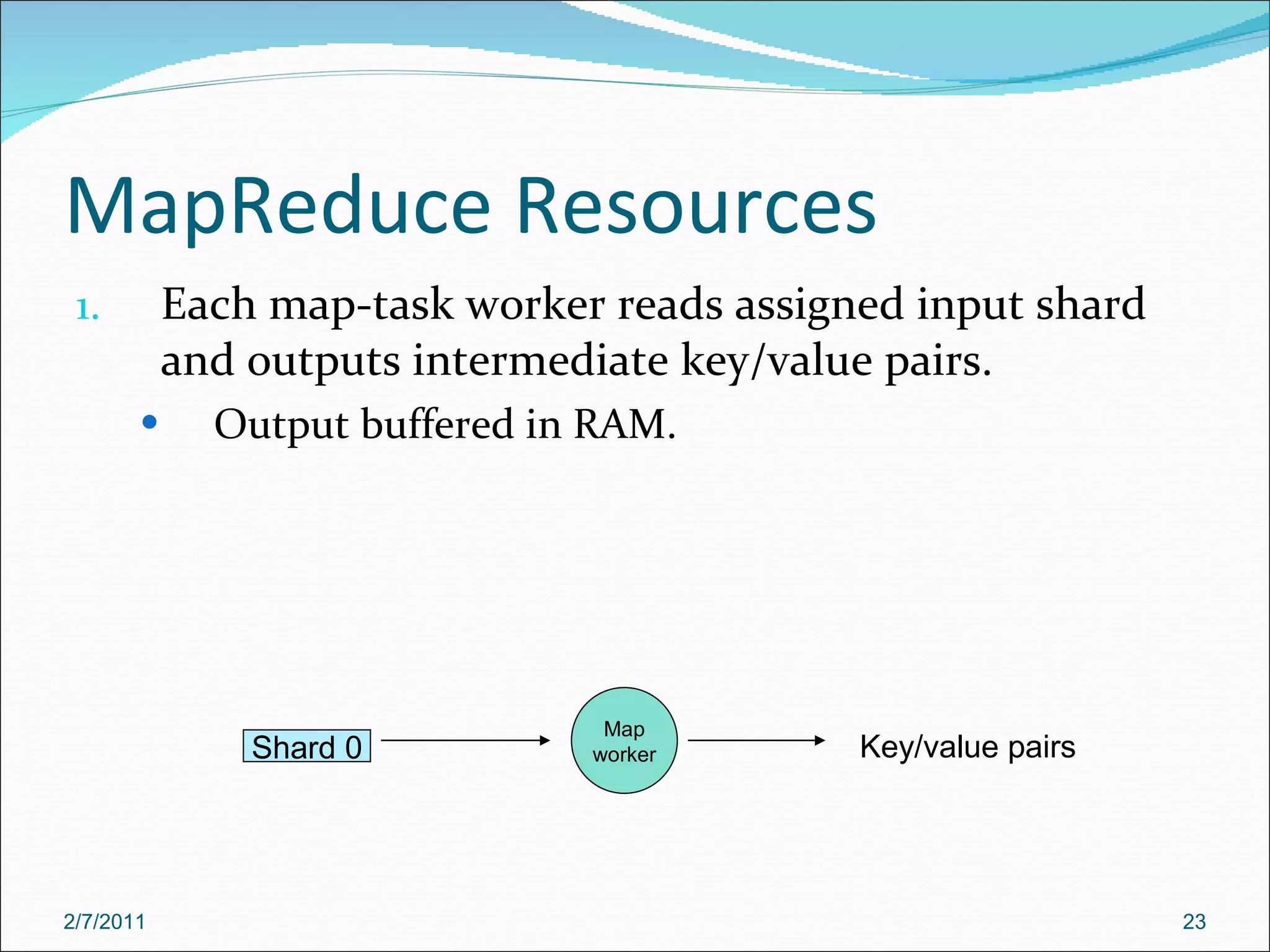

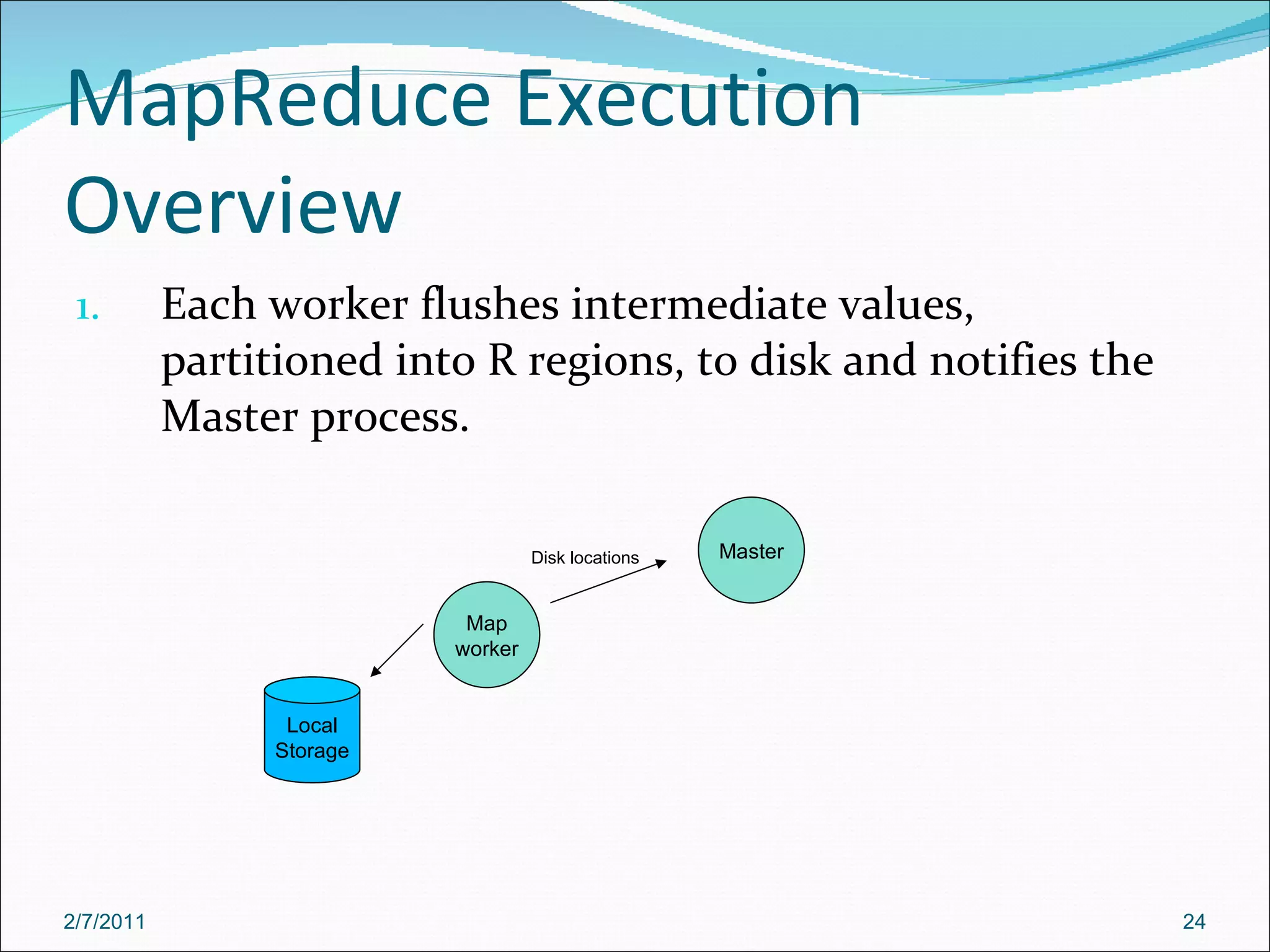

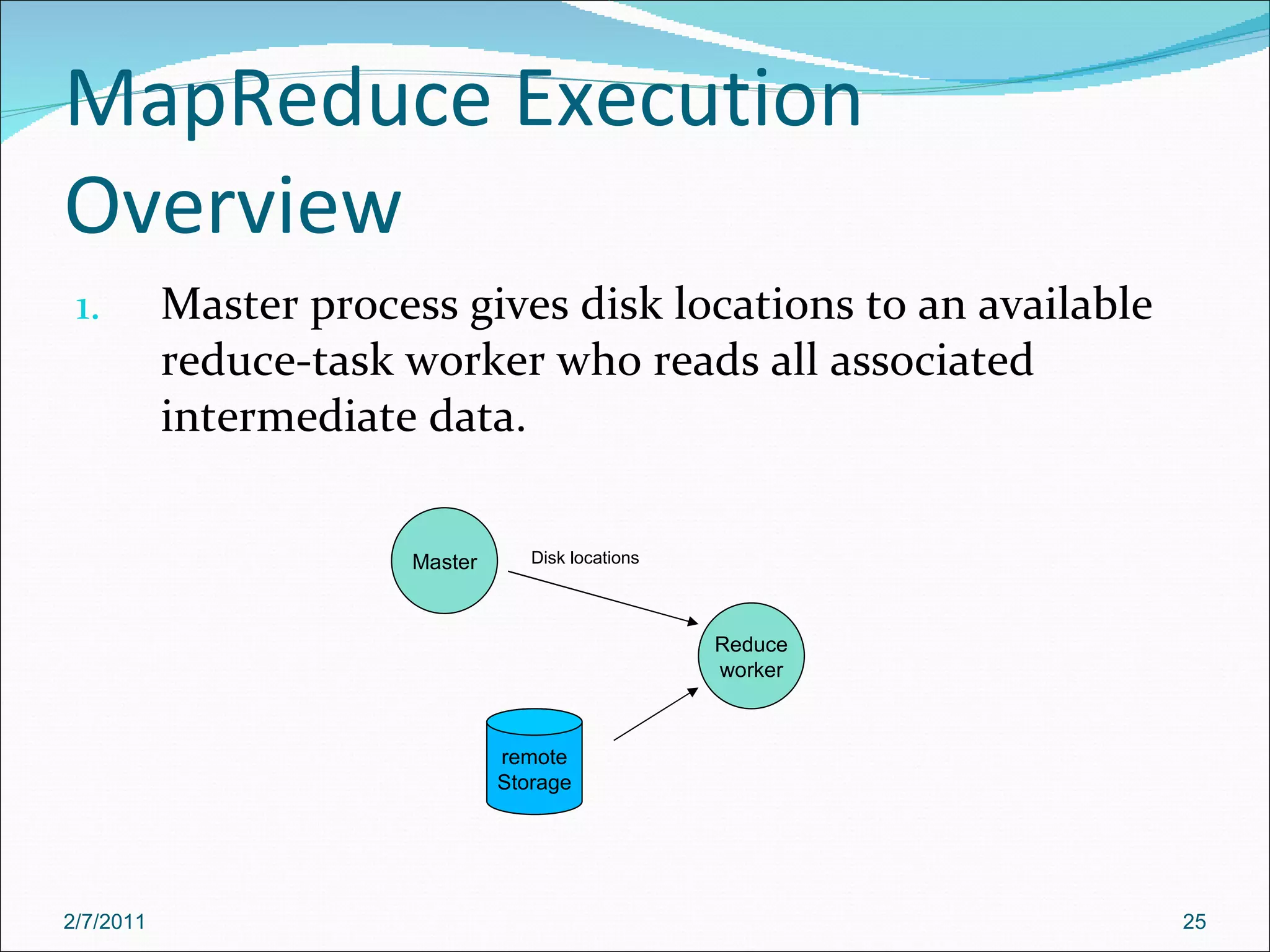

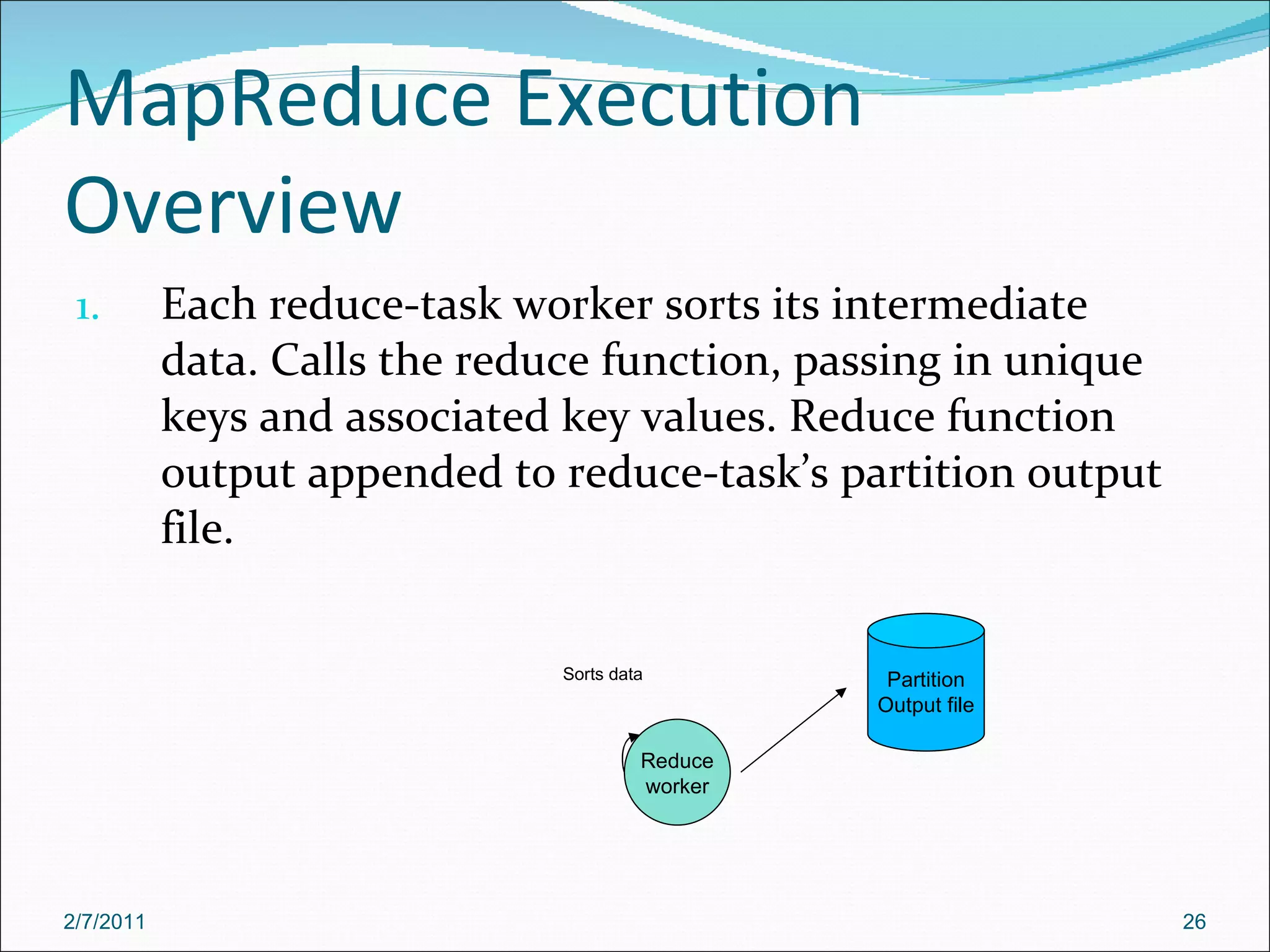

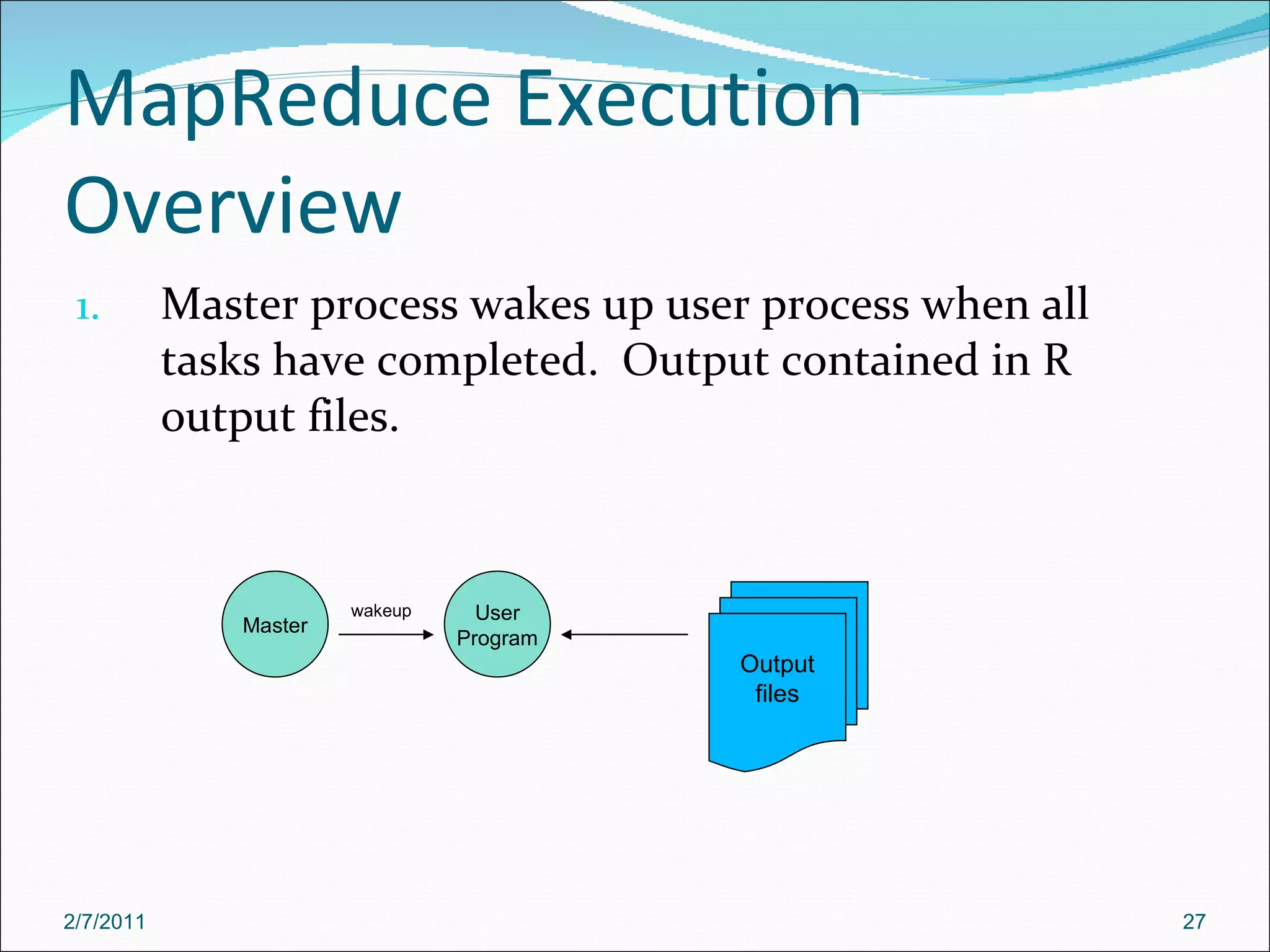

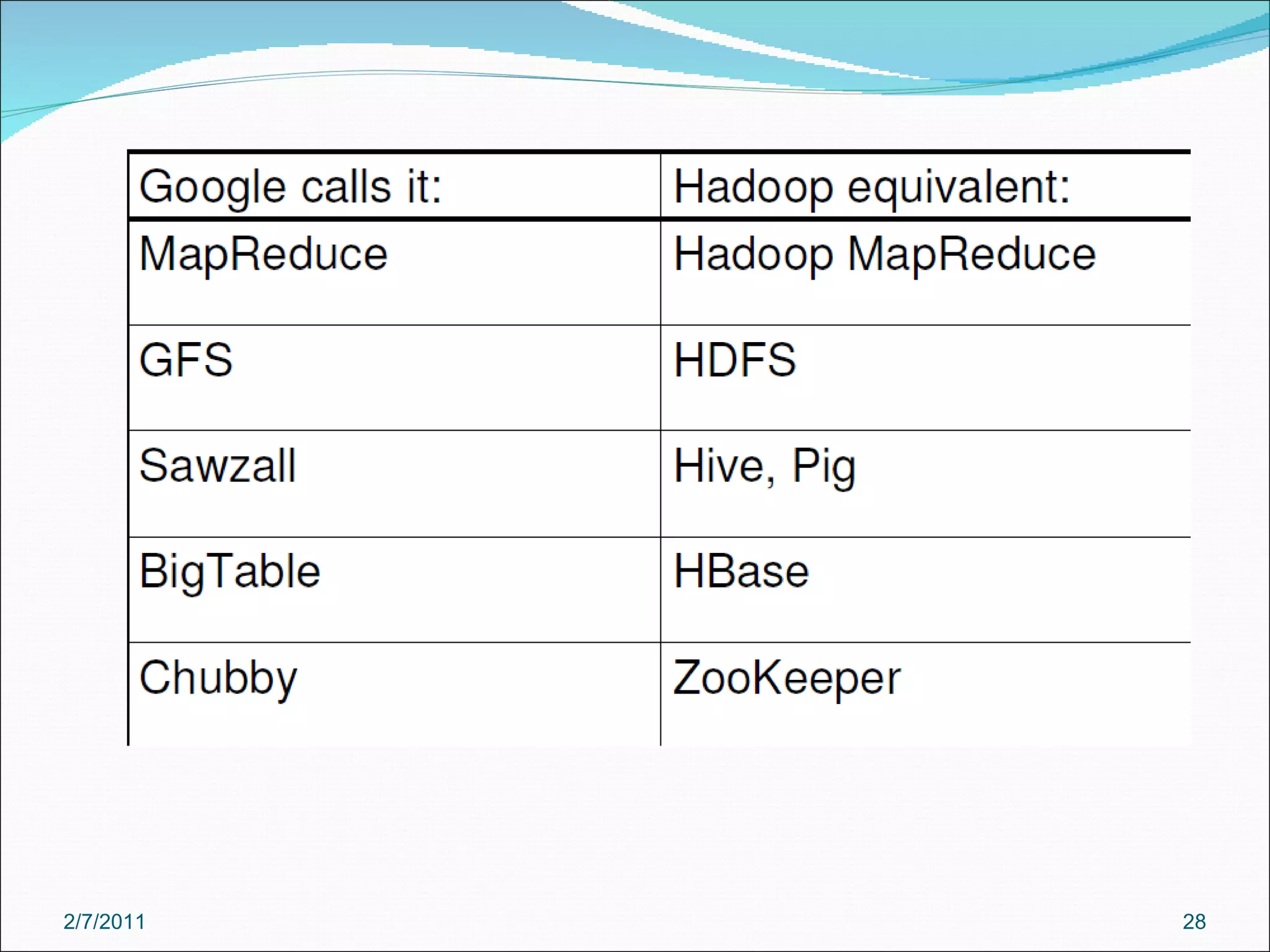

This document provides an overview of distributed computing with Hadoop. It explains how Hadoop combines MapReduce, a programming model for parallel data processing, with HDFS (the Hadoop Distributed File System) to process large amounts of data efficiently across clusters of commodity servers. Key features of Hadoop include scaling to very large datasets, recovering from node failures automatically, and offering a simple programming model through MapReduce.
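To make the "simple programming model" concrete, here is a minimal sketch of the classic MapReduce word count, simulated in a single Python process. It does not use Hadoop's actual API. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and only illustrate the three stages: mappers emit key/value pairs, the framework groups values by key, and reducers aggregate each group. On a real cluster, Hadoop would run many map and reduce tasks in parallel on different nodes and handle the shuffle and any task retries itself.

```python
from collections import defaultdict
from itertools import chain
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Mapper (hypothetical name): emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle/sort step: group every value emitted for the same key, ordered by key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer (hypothetical name): sum the counts collected for a single word."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    # Each line stands in for one input split that a separate map task would process.
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    splits = ["the quick brown fox", "the lazy dog", "The fox"]
    print(word_count(splits))
    # → {'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}
```

Because the mapper and reducer are pure functions of their inputs, the framework is free to run them on any node and to rerun a failed task, which is part of why the model scales and tolerates failures.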