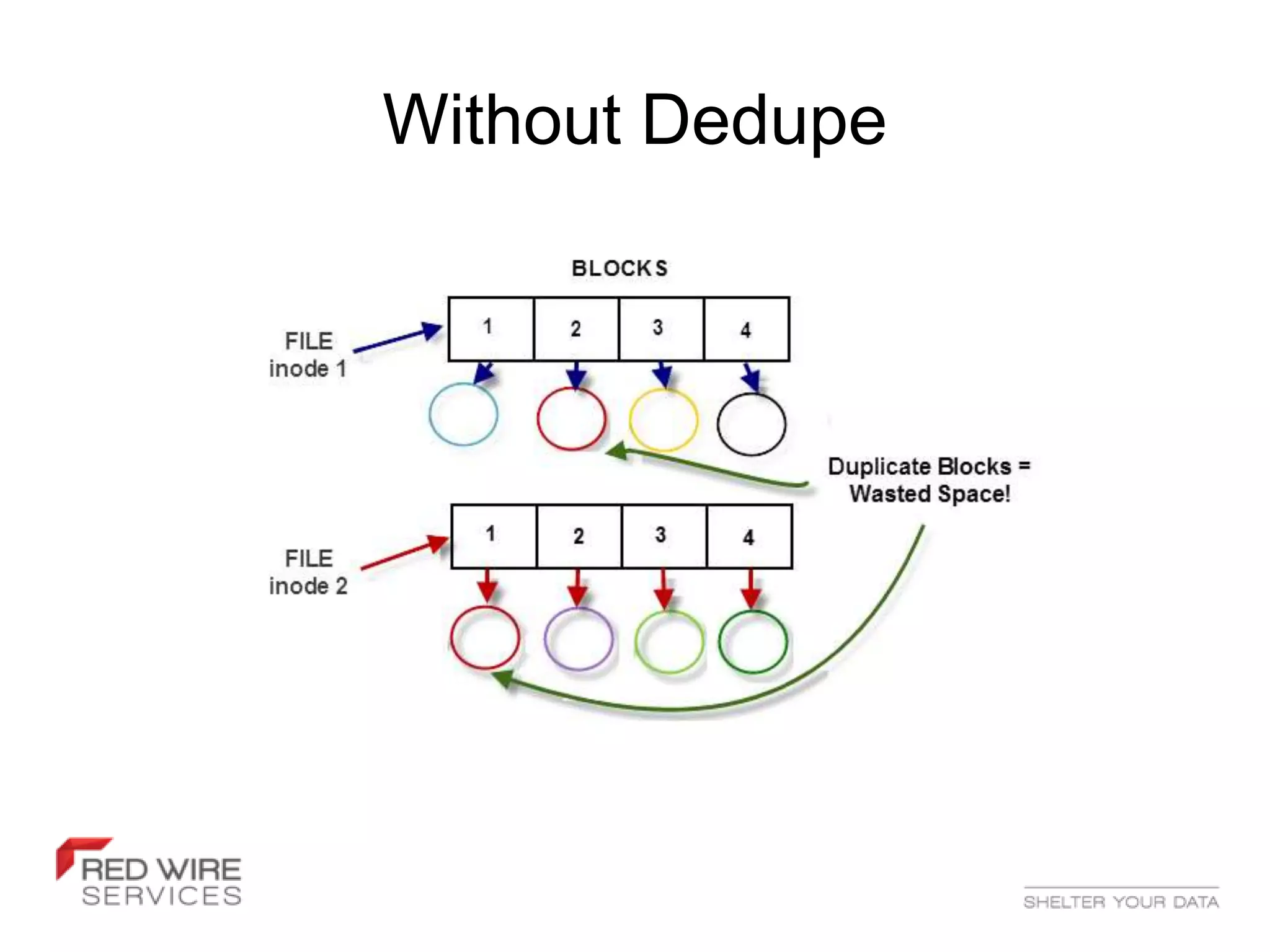

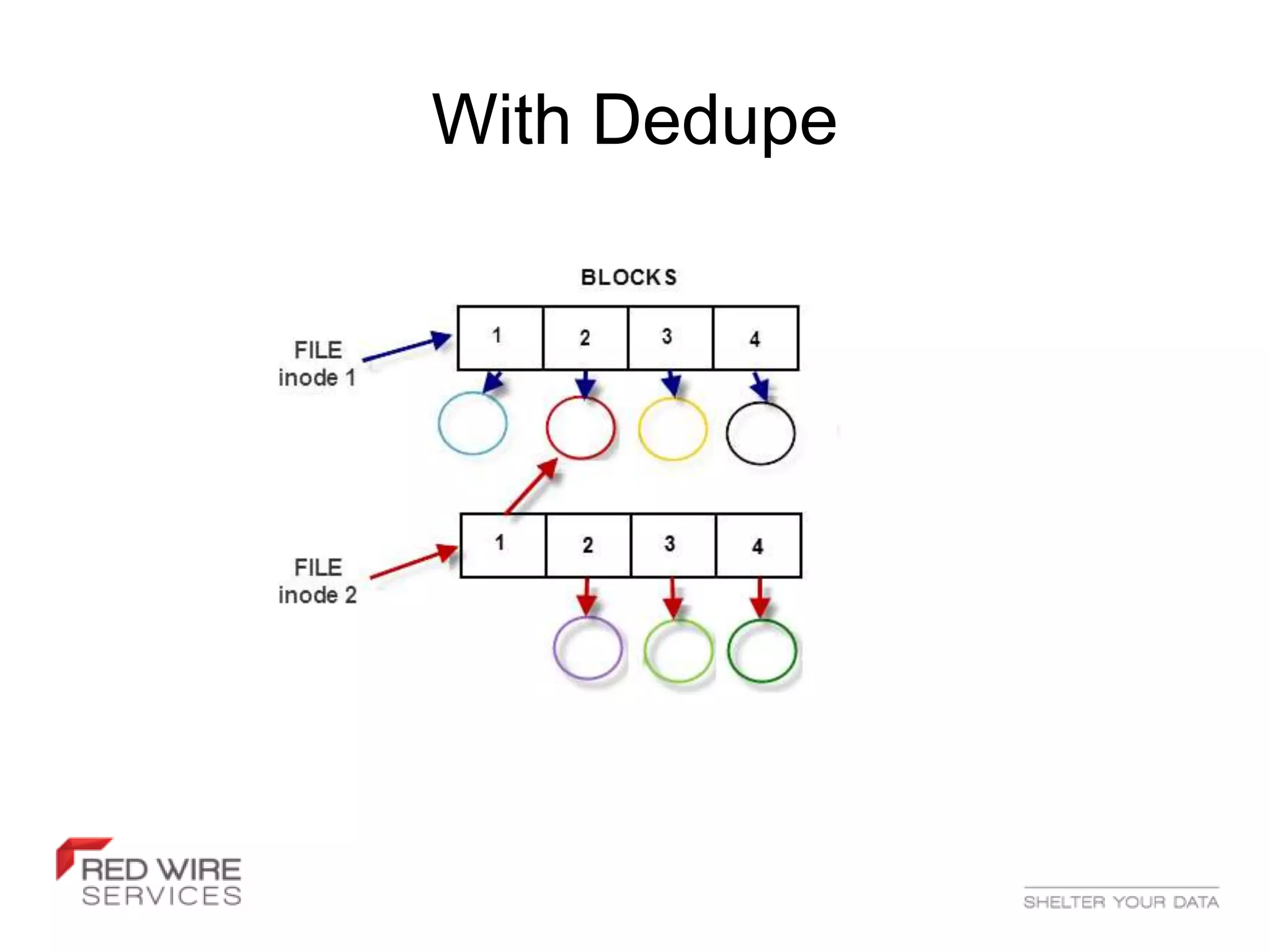

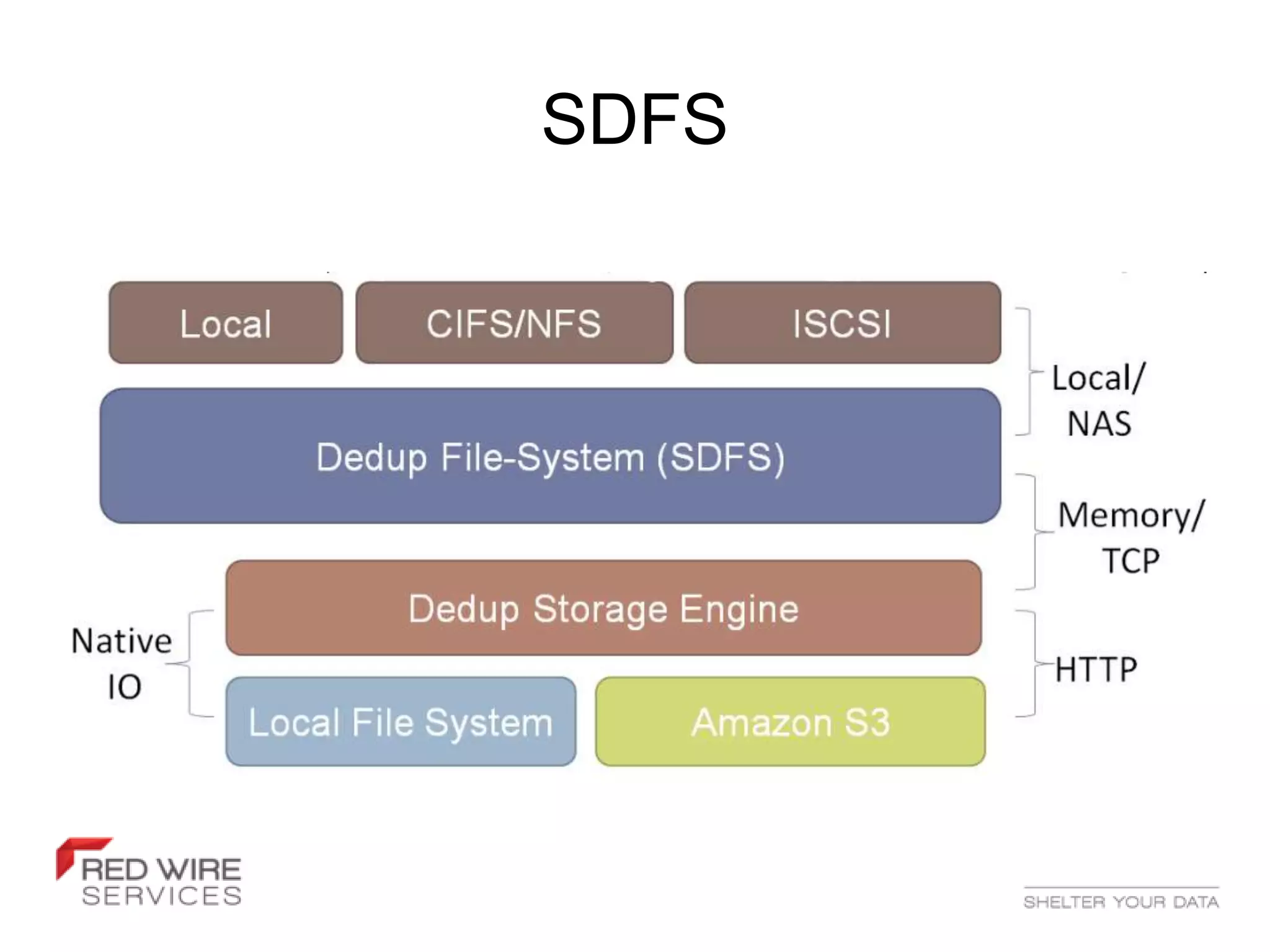

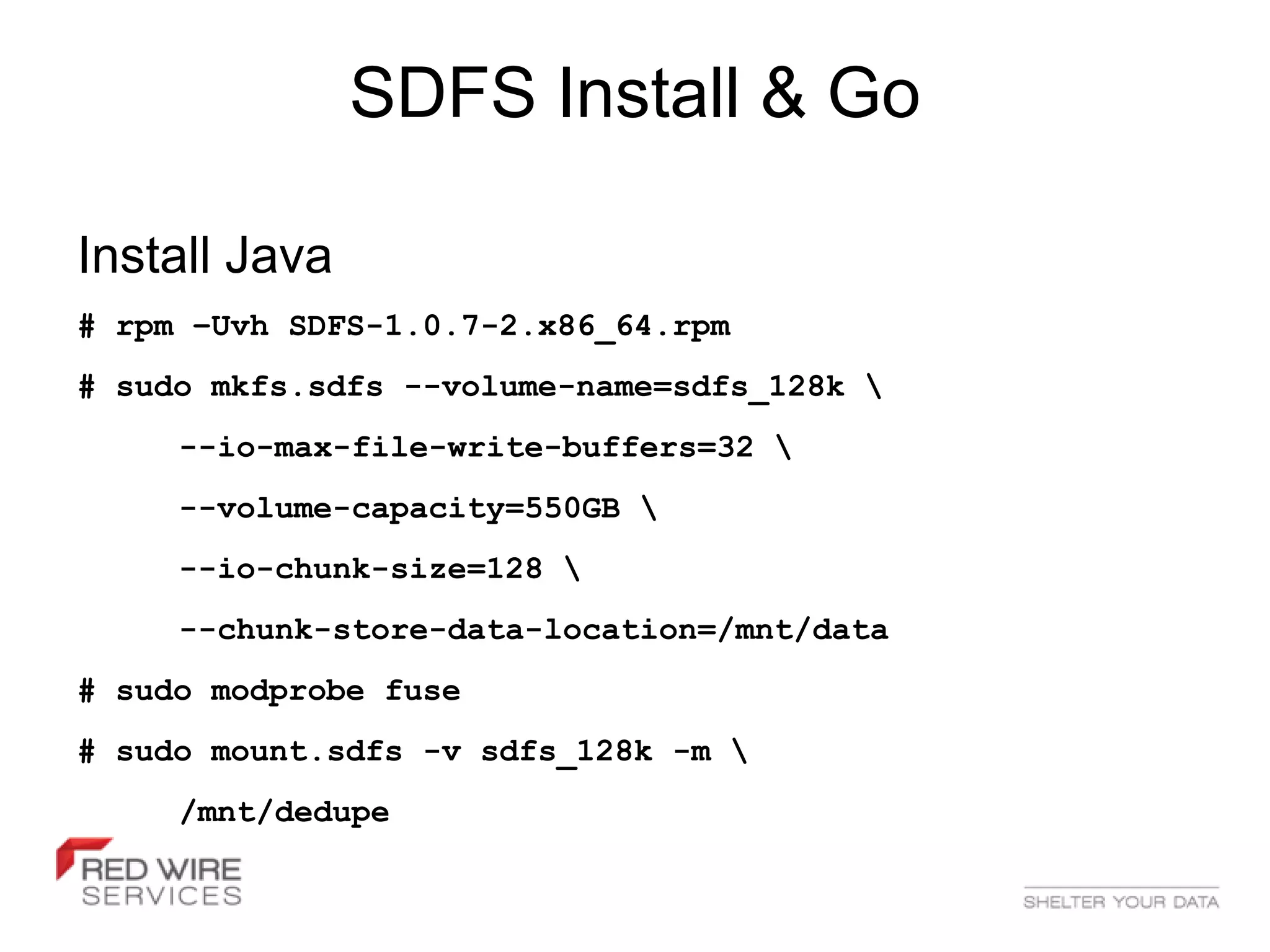

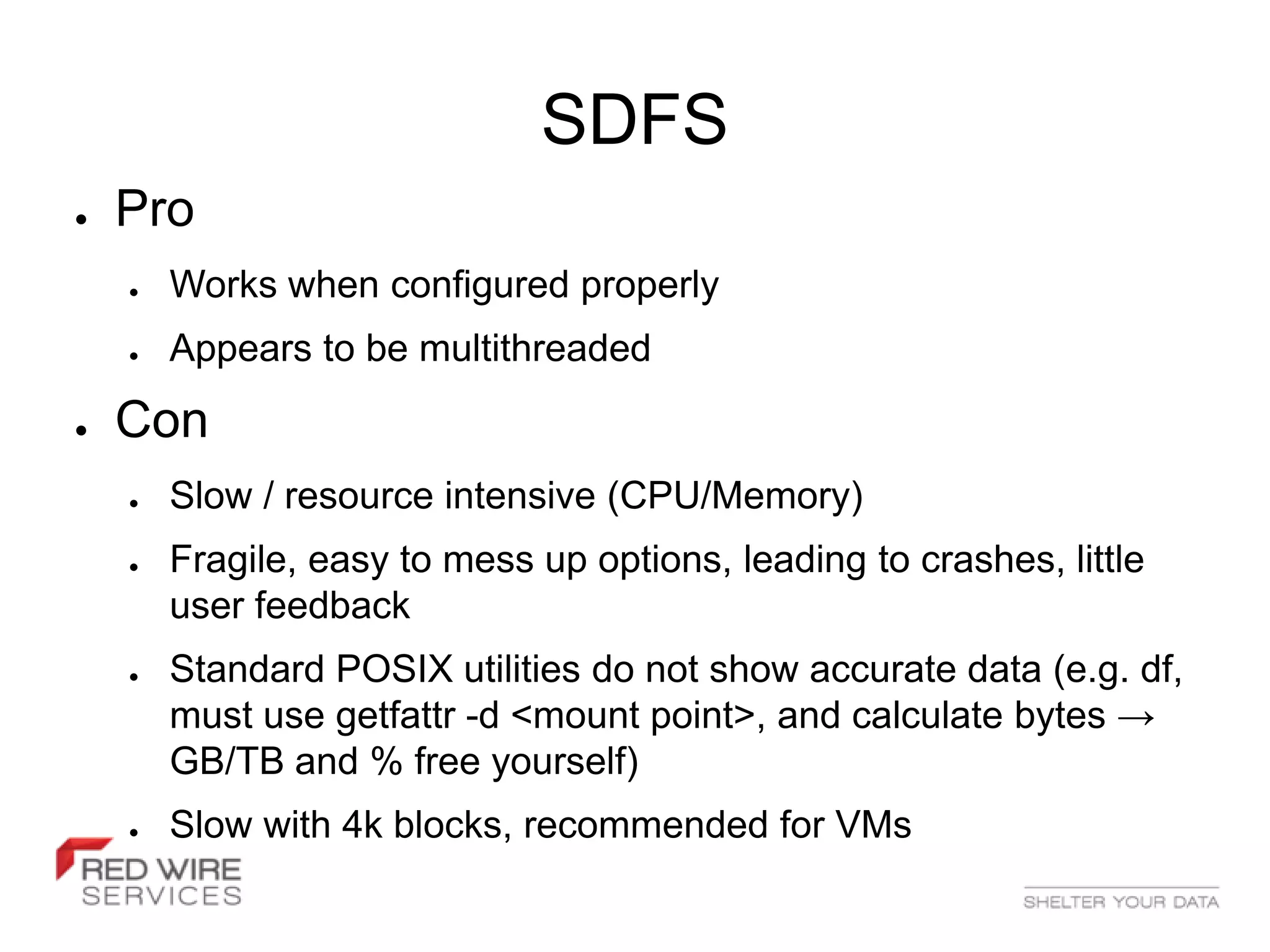

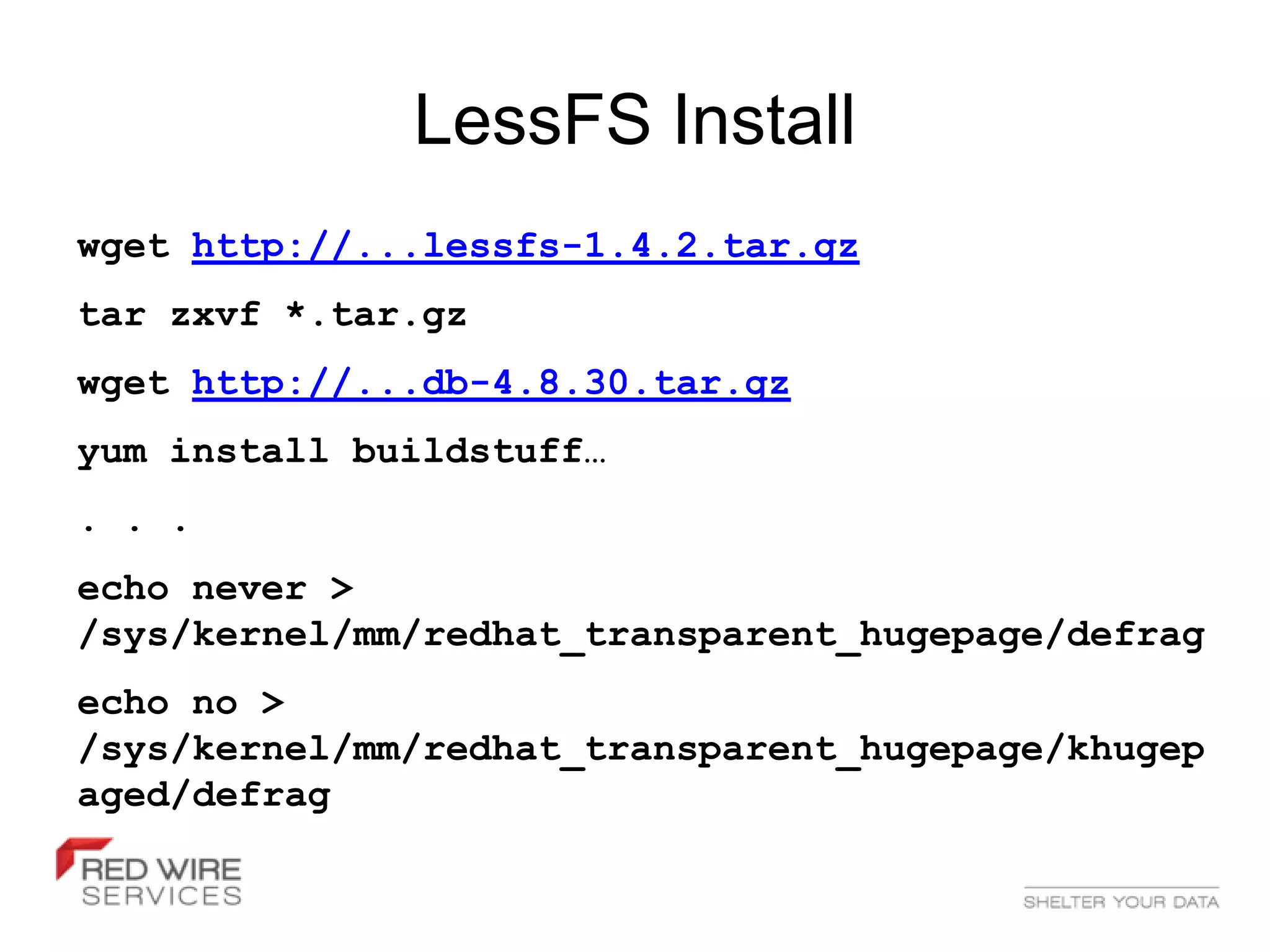

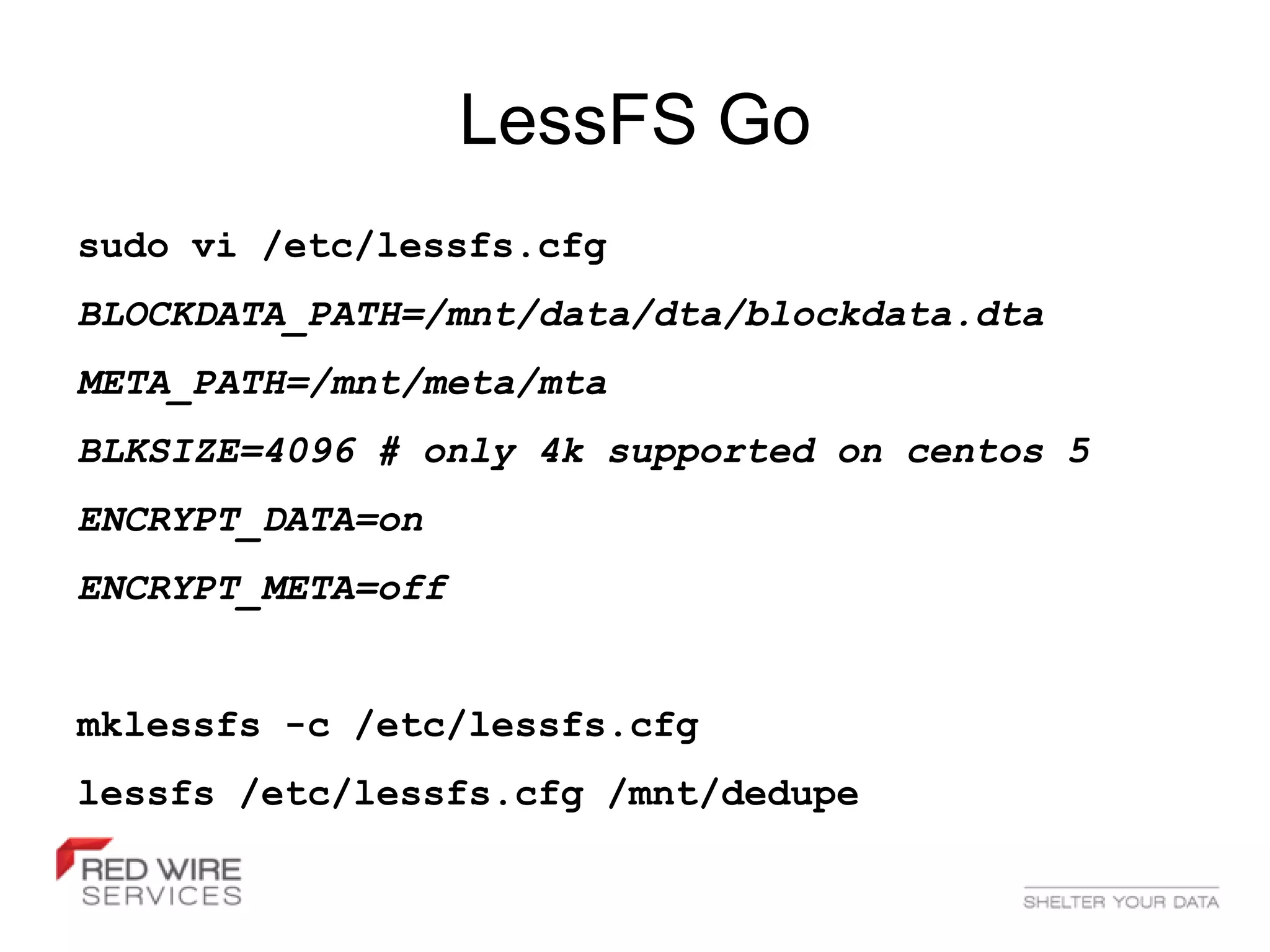

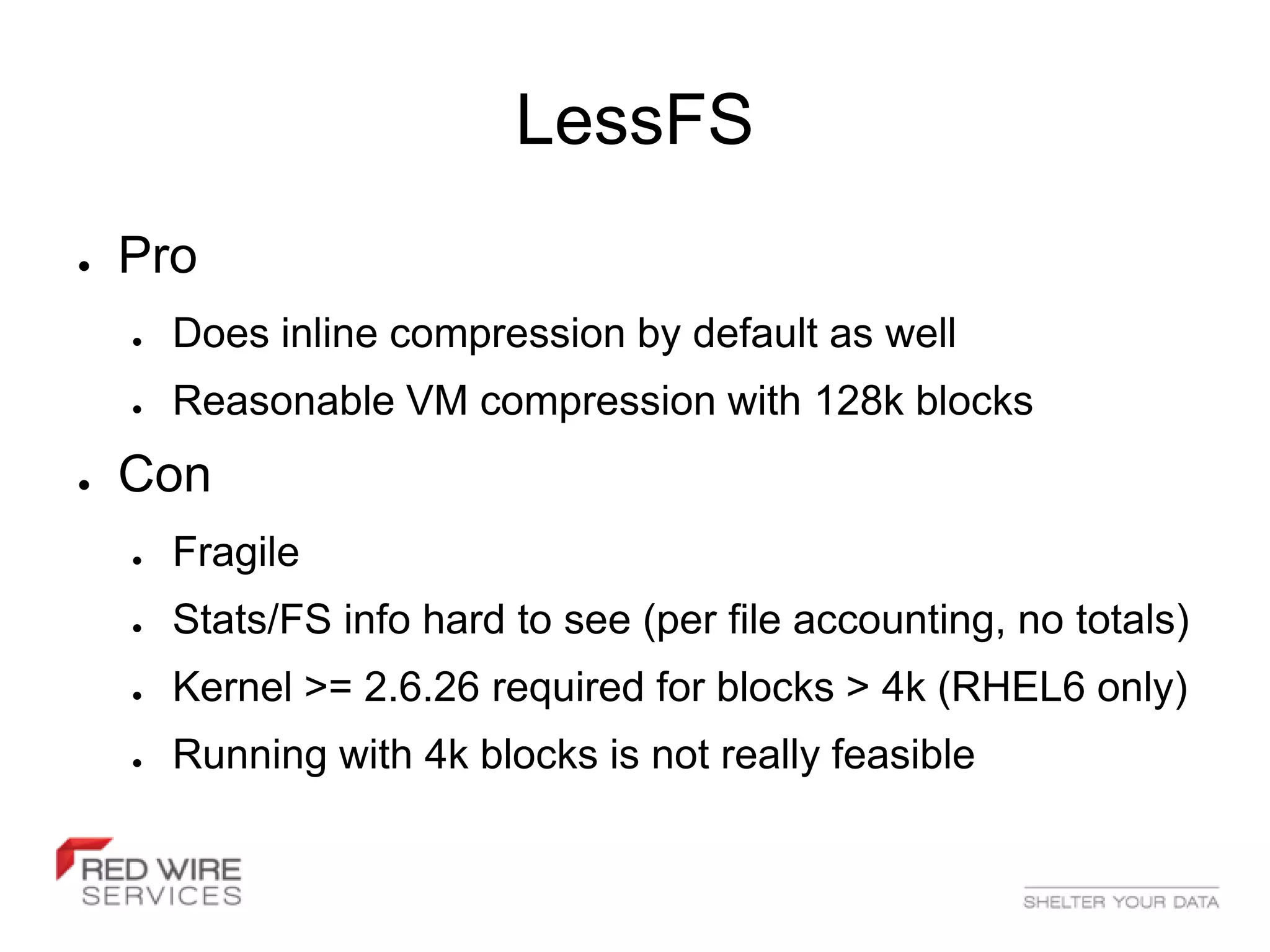

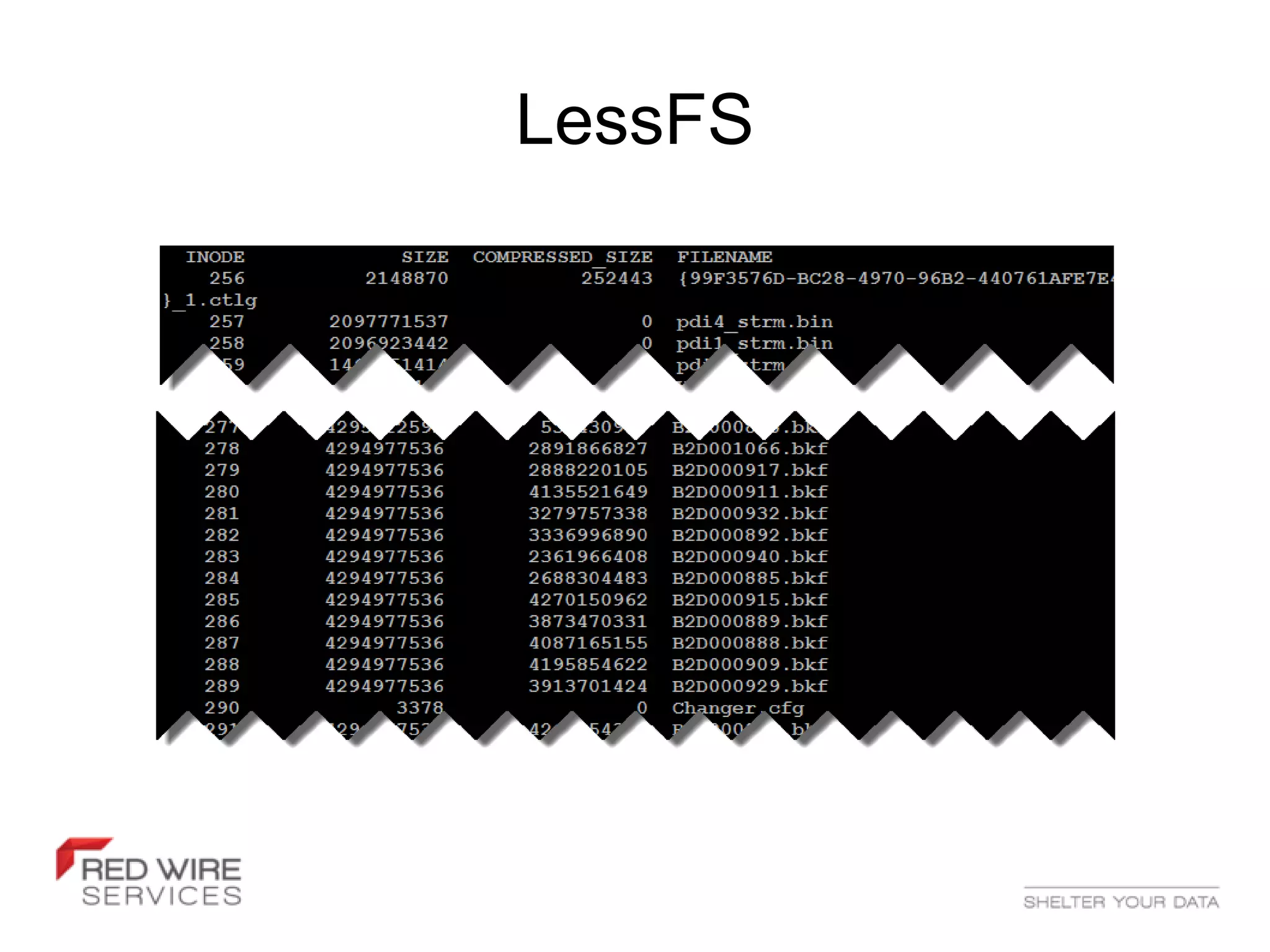

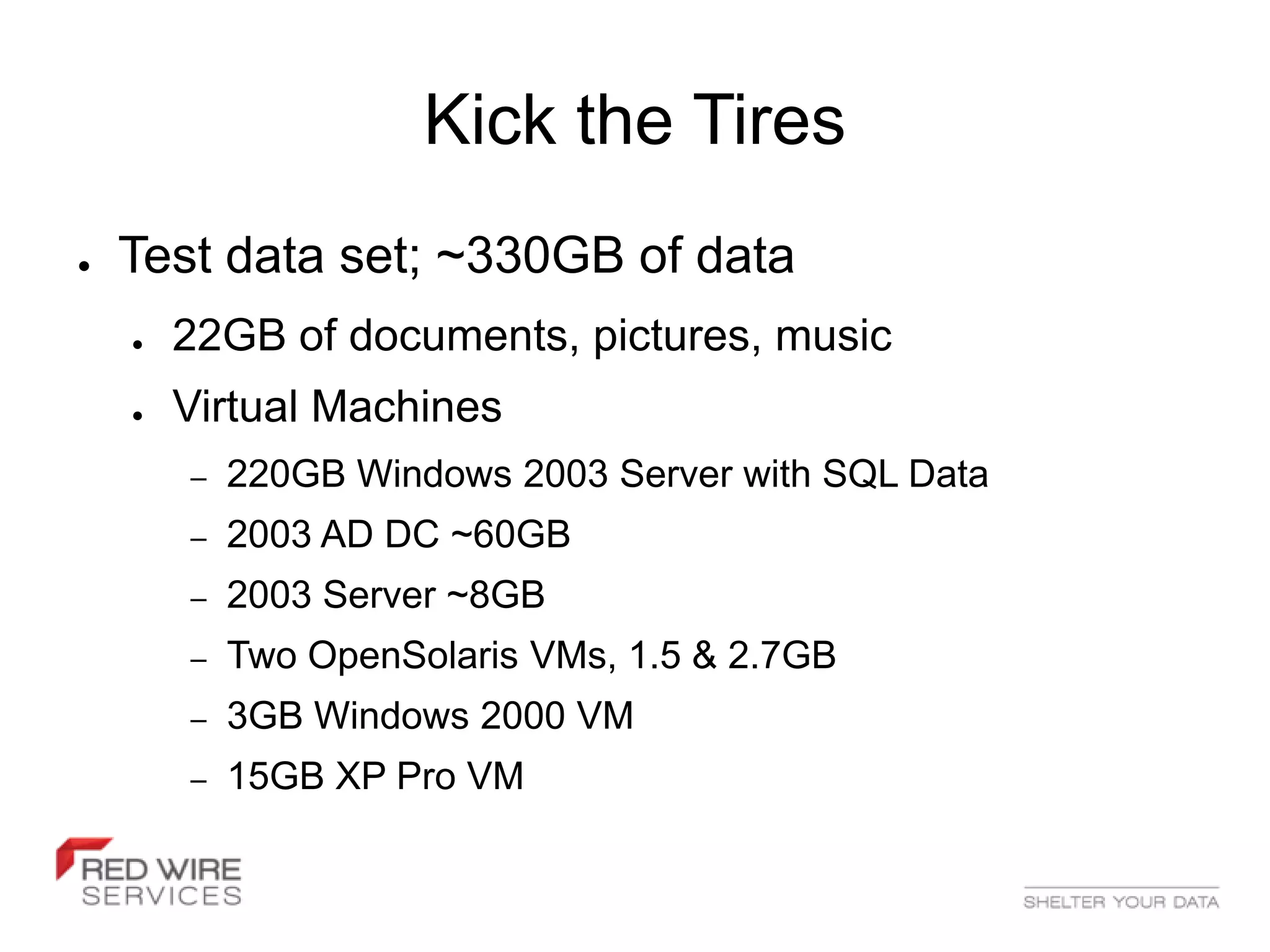

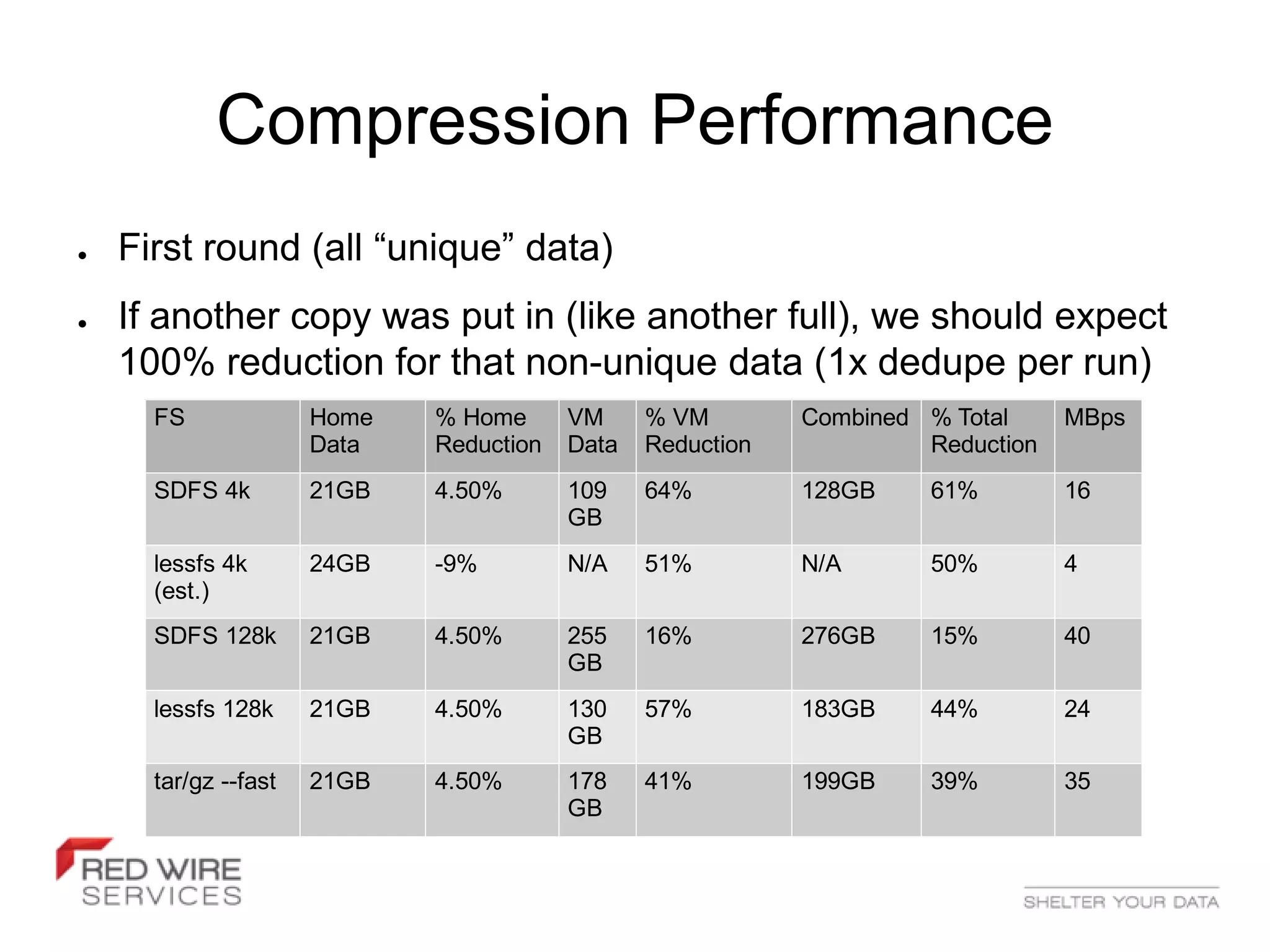

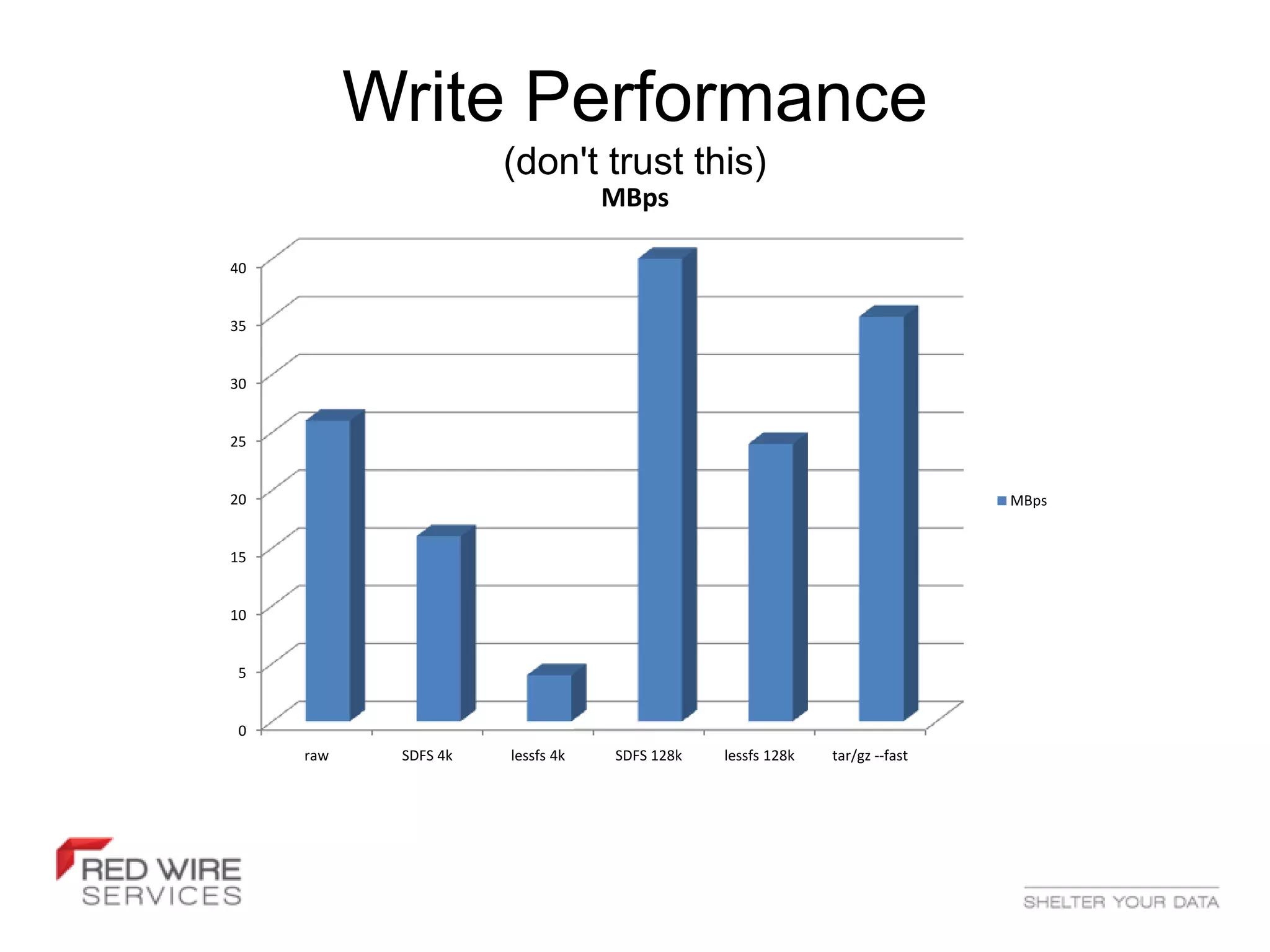

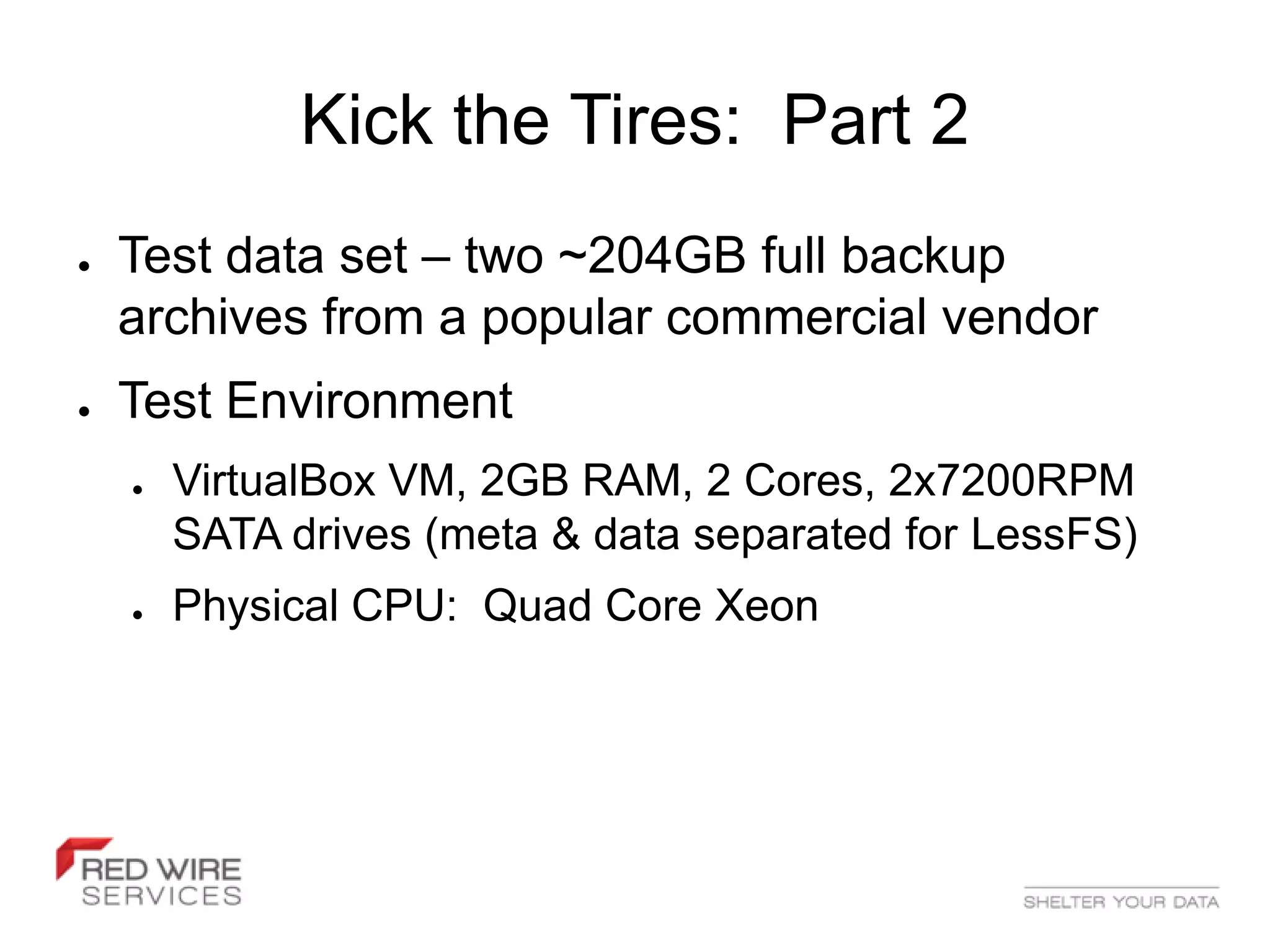

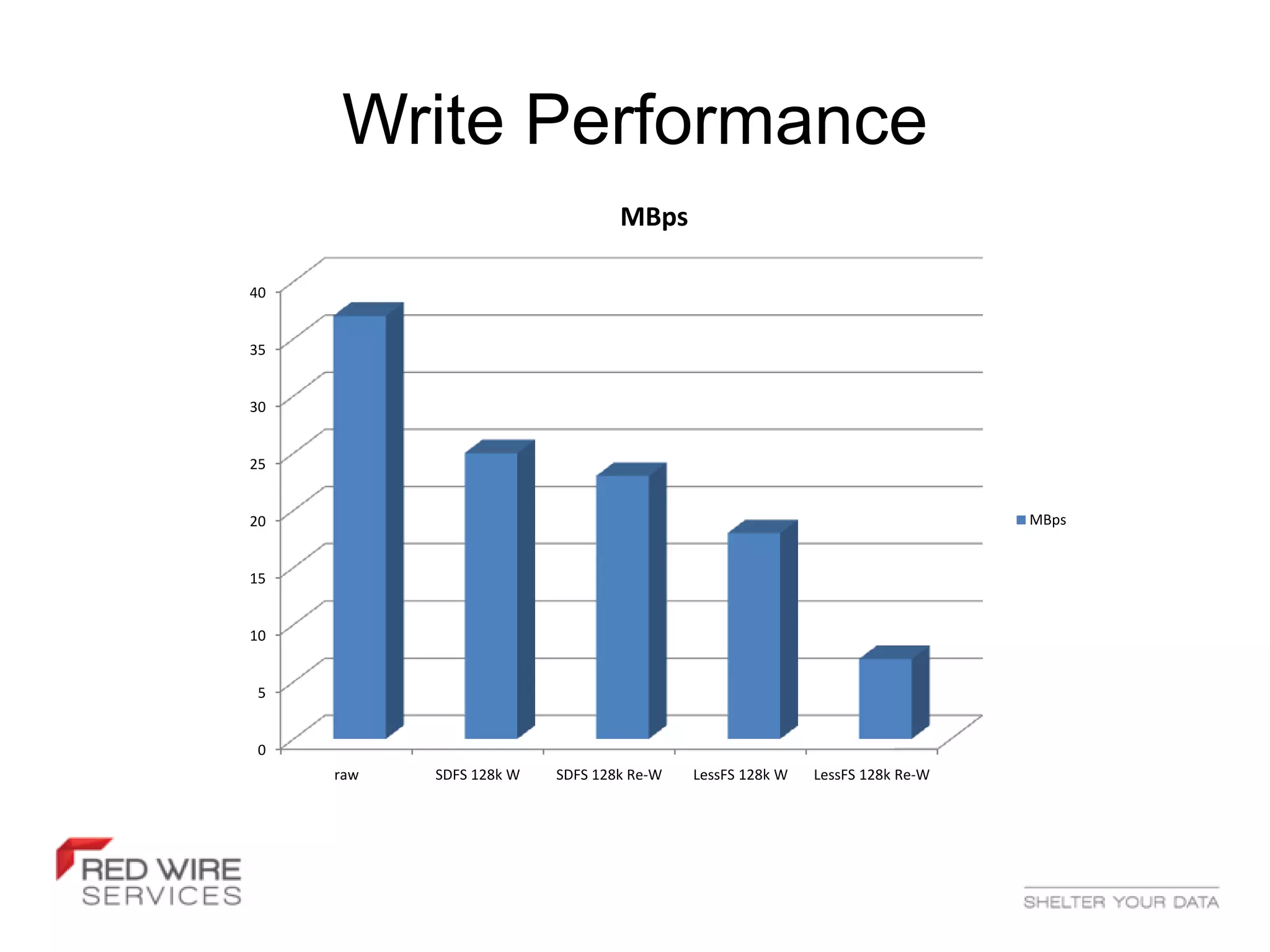

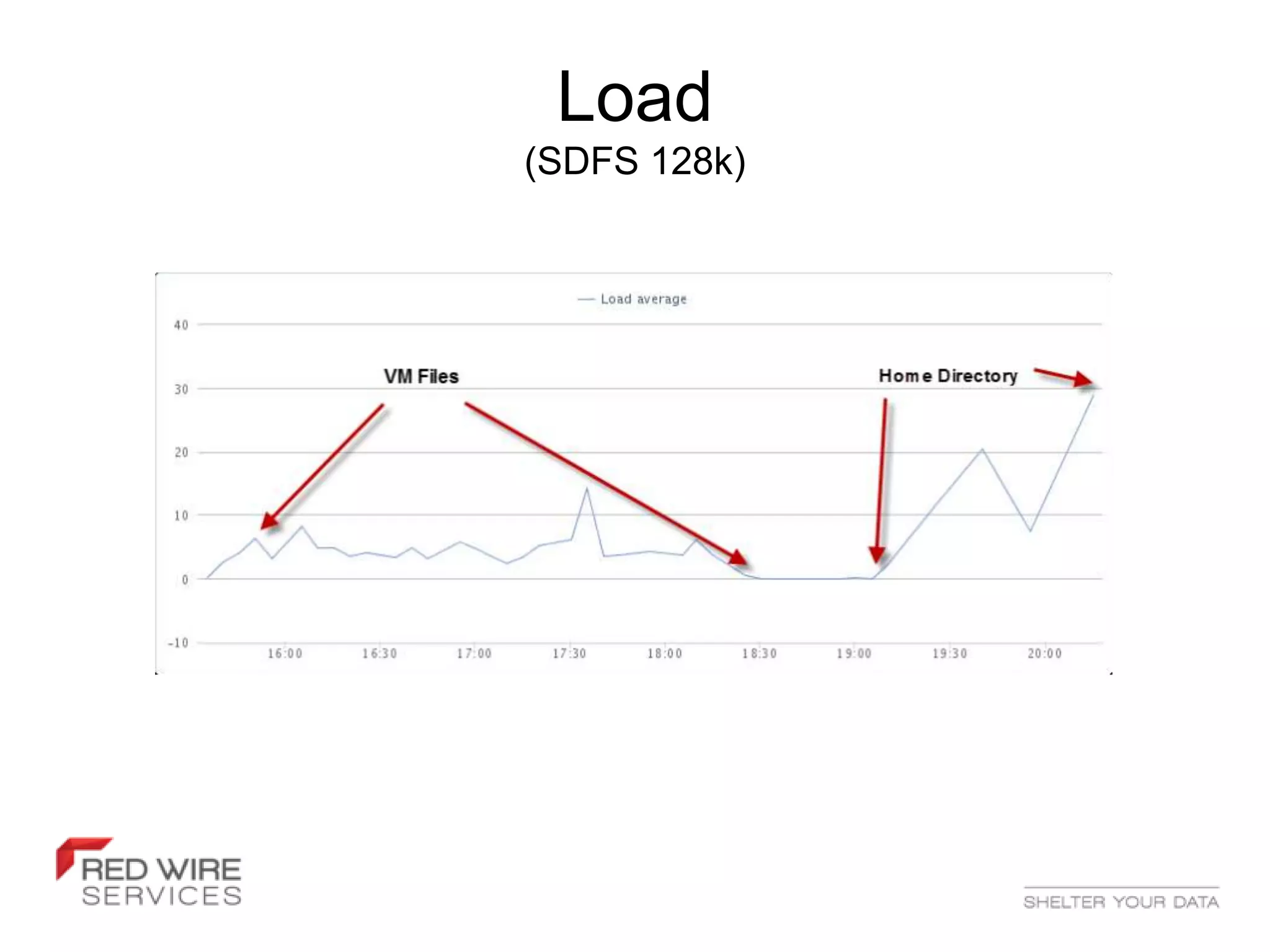

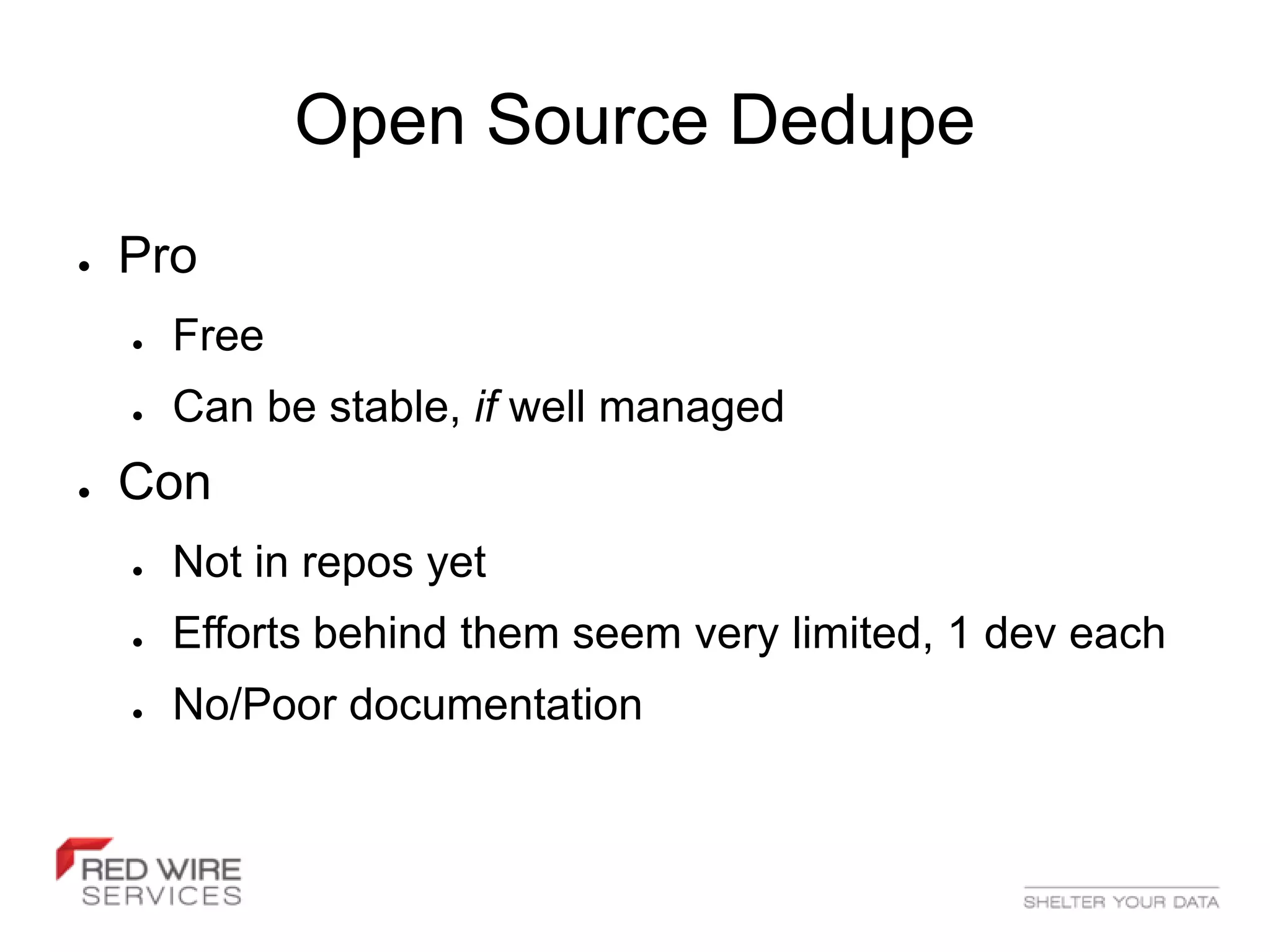

The document discusses data deduplication, a technique that eliminates redundant copies of data to reduce storage use. It covers the types of deduplication, their benefits and drawbacks, and both commercial and open-source implementations. It highlights open-source solutions such as SDFS and Lessfs, covering their installation, performance, and reliability.
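To make the core idea concrete, here is a minimal sketch of block-level deduplication, assuming fixed-size blocks and SHA-256 fingerprints. The function names, the 4096-byte block size, and the in-memory dictionary are illustrative assumptions; this is not how SDFS or Lessfs is implemented, only one simple instance of the technique.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size, chosen only for illustration


def dedup_store(data, store, block_size=BLOCK_SIZE):
    """Split data into fixed-size blocks and keep each unique block once.

    `store` is a hypothetical in-memory block store mapping a SHA-256
    digest to the block's bytes. Returns the "recipe": the ordered list
    of digests needed to rebuild the original data.
    """
    recipe = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).digest()
        # Only the first occurrence of a block is stored; repeats just
        # add another reference to the same digest.
        store.setdefault(digest, block)
        recipe.append(digest)
    return recipe


def restore(recipe, store):
    """Rebuild the original bytes by looking up each digest in order."""
    return b"".join(store[digest] for digest in recipe)


if __name__ == "__main__":
    store = {}
    data = b"A" * BLOCK_SIZE * 3 + b"B" * BLOCK_SIZE
    recipe = dedup_store(data, store)
    print(len(recipe), "blocks referenced,", len(store), "blocks stored")
    assert restore(recipe, store) == data
```

In this sketch, four logical blocks are referenced but only two are physically kept, because three of them are identical. A real system would also need persistent storage, reference counting for deletes, and a policy for hash collisions, which relates to the reliability considerations the document mentions.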