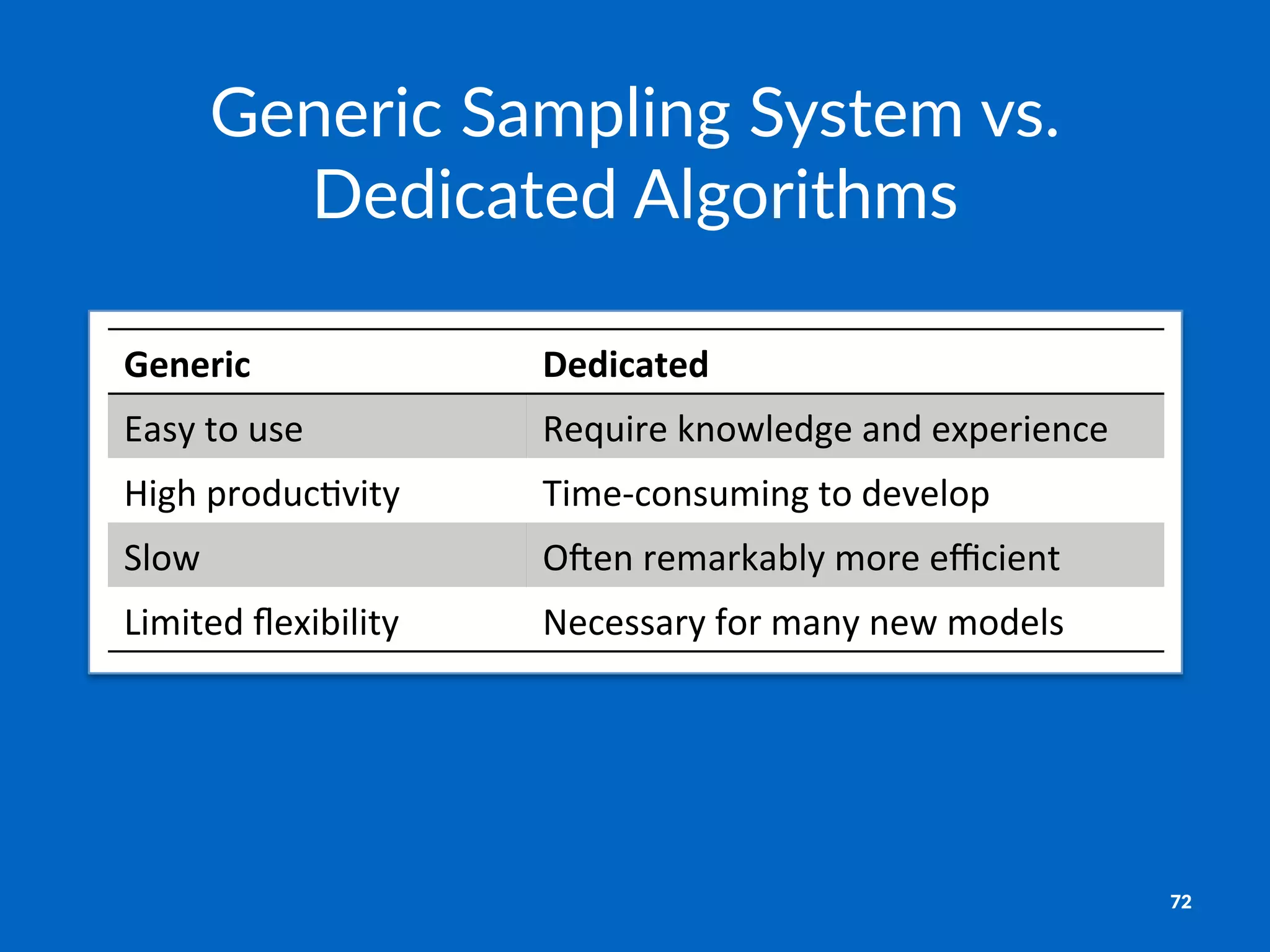

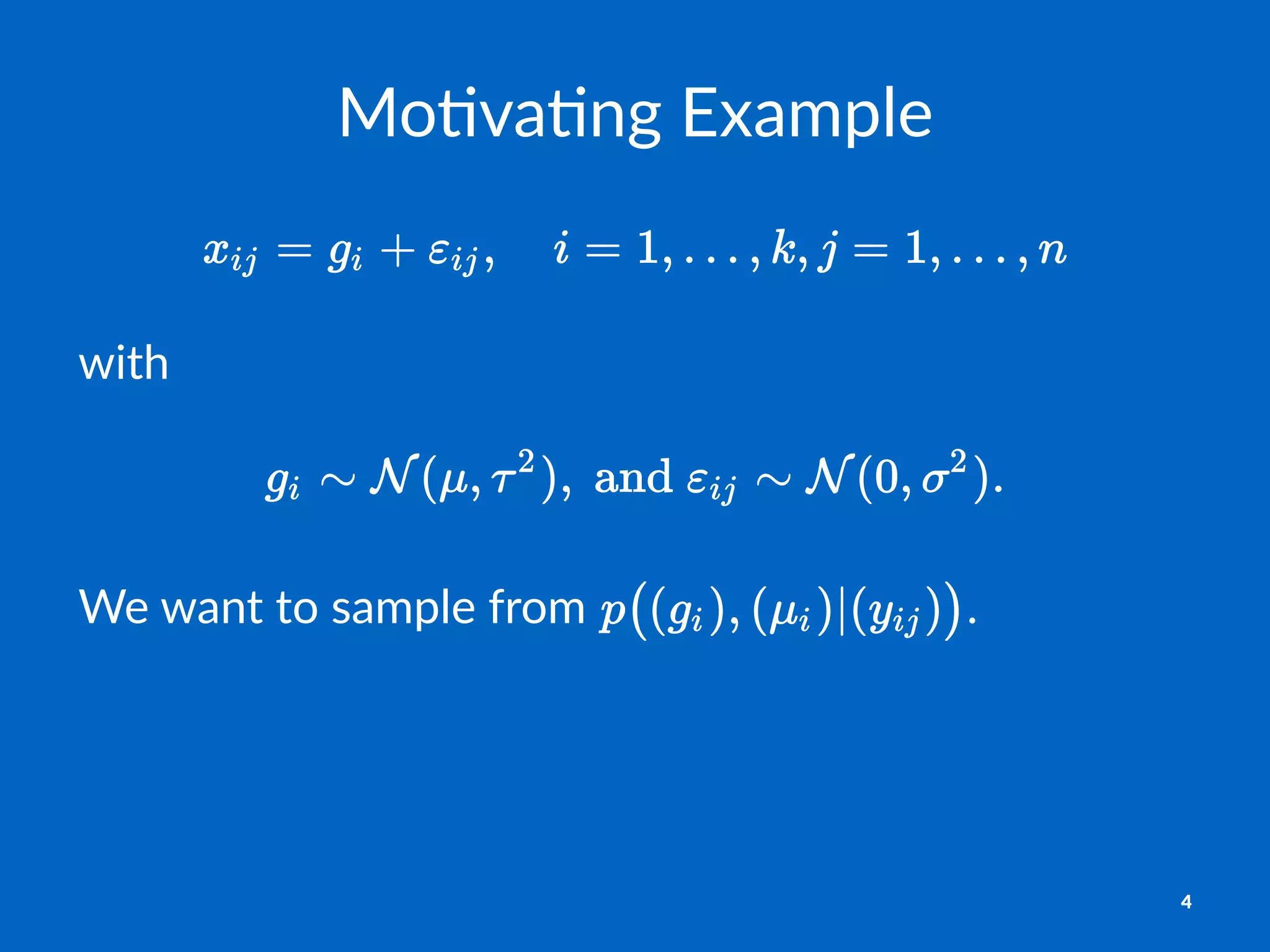

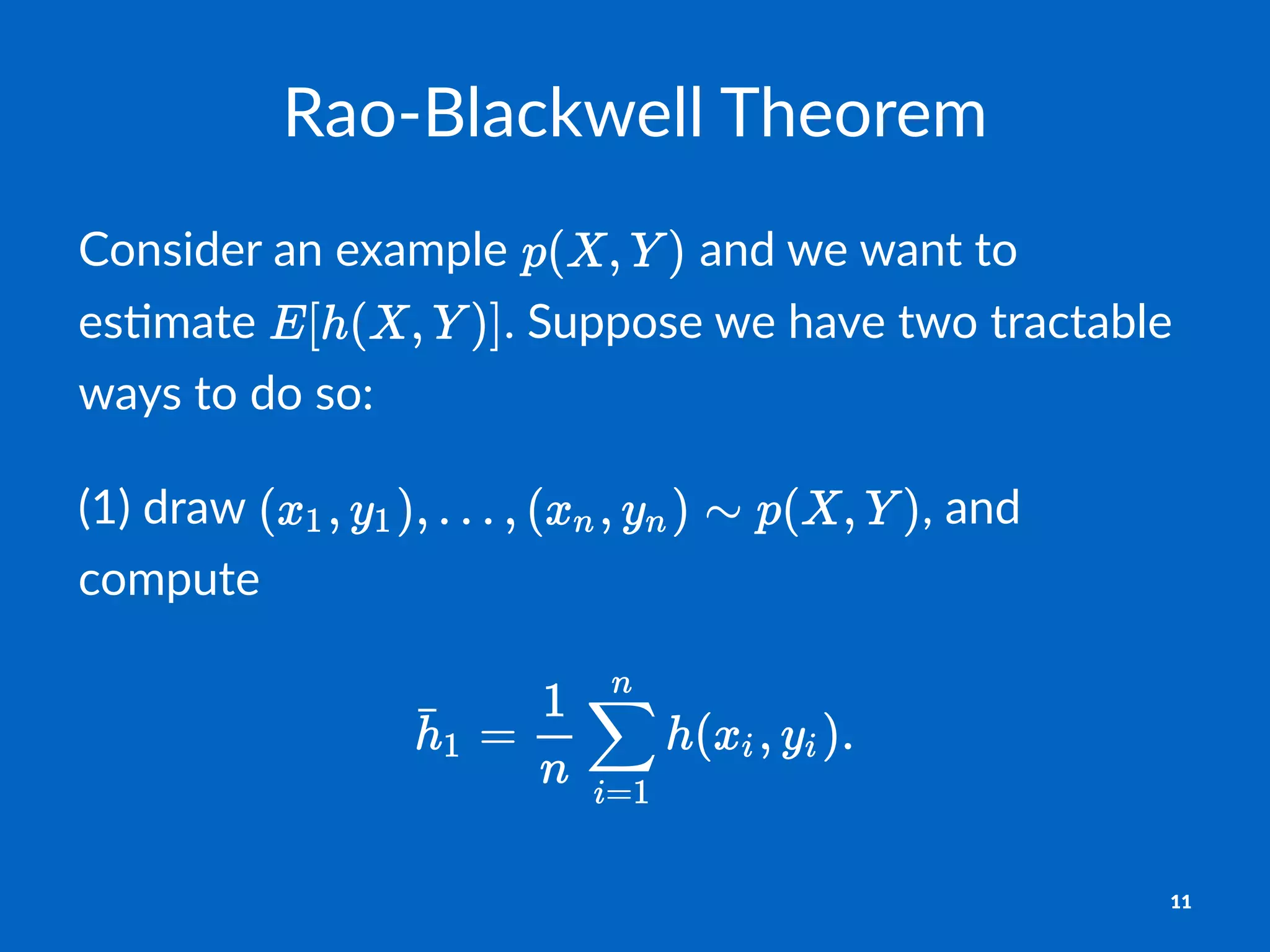

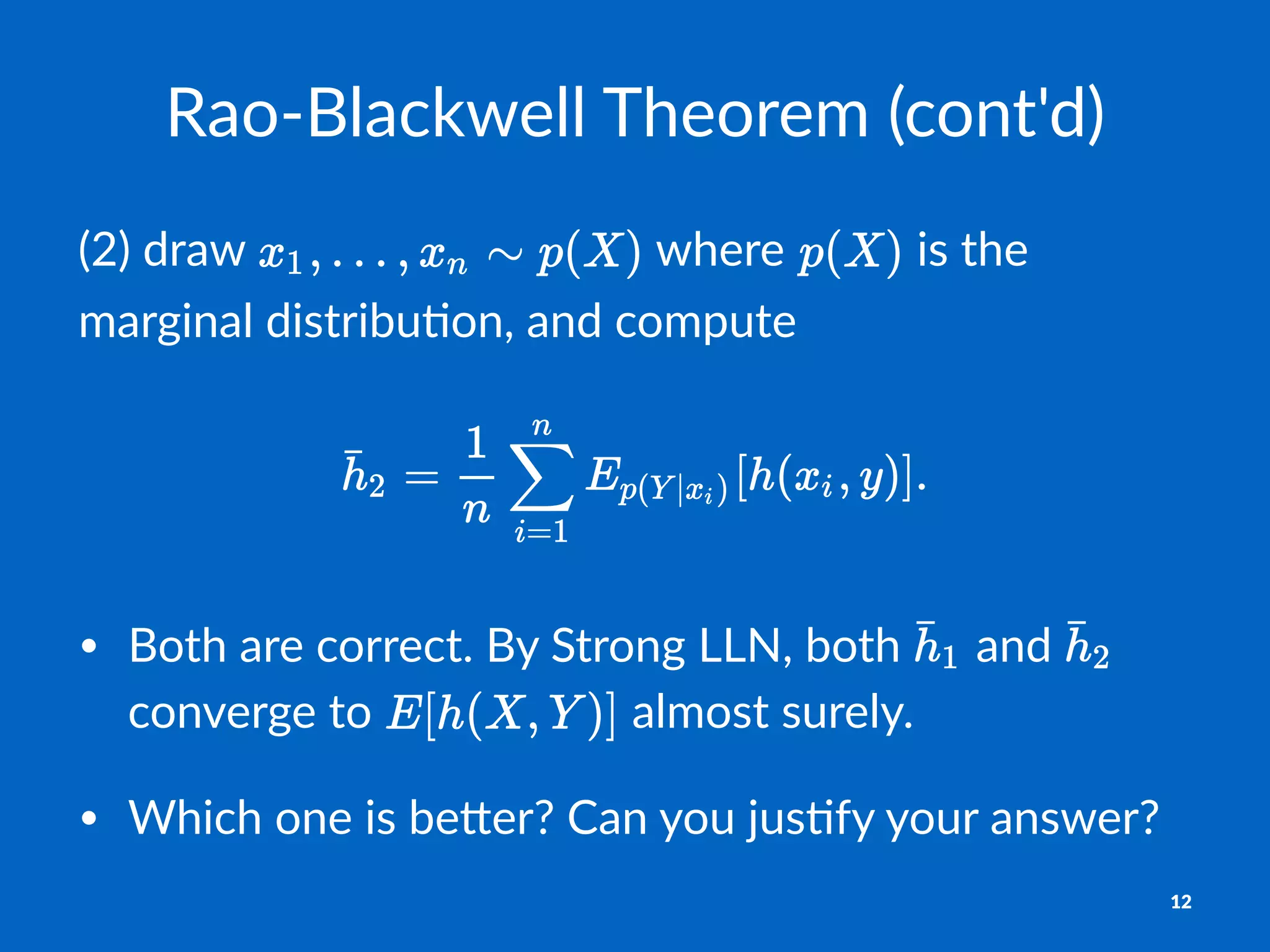

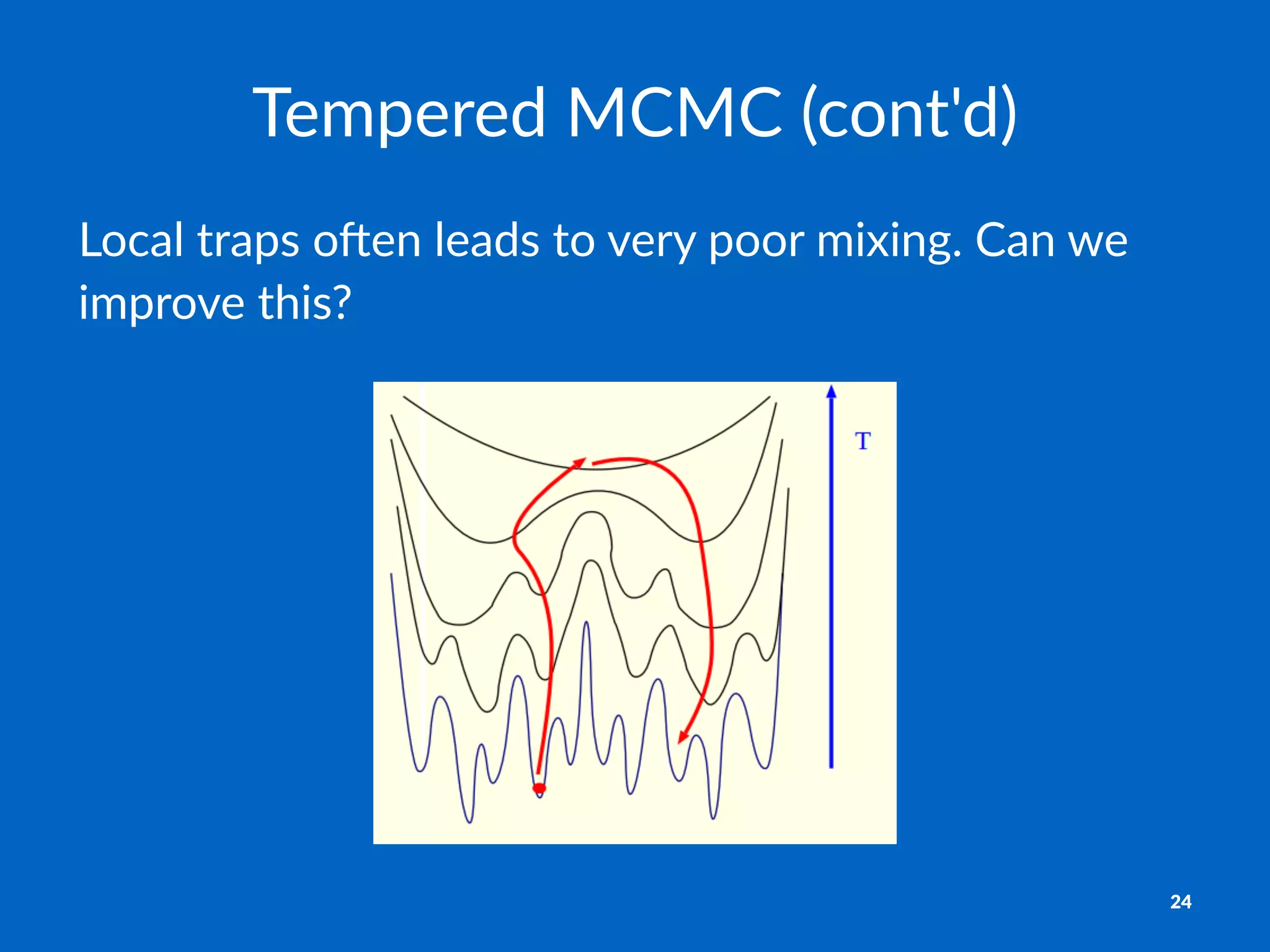

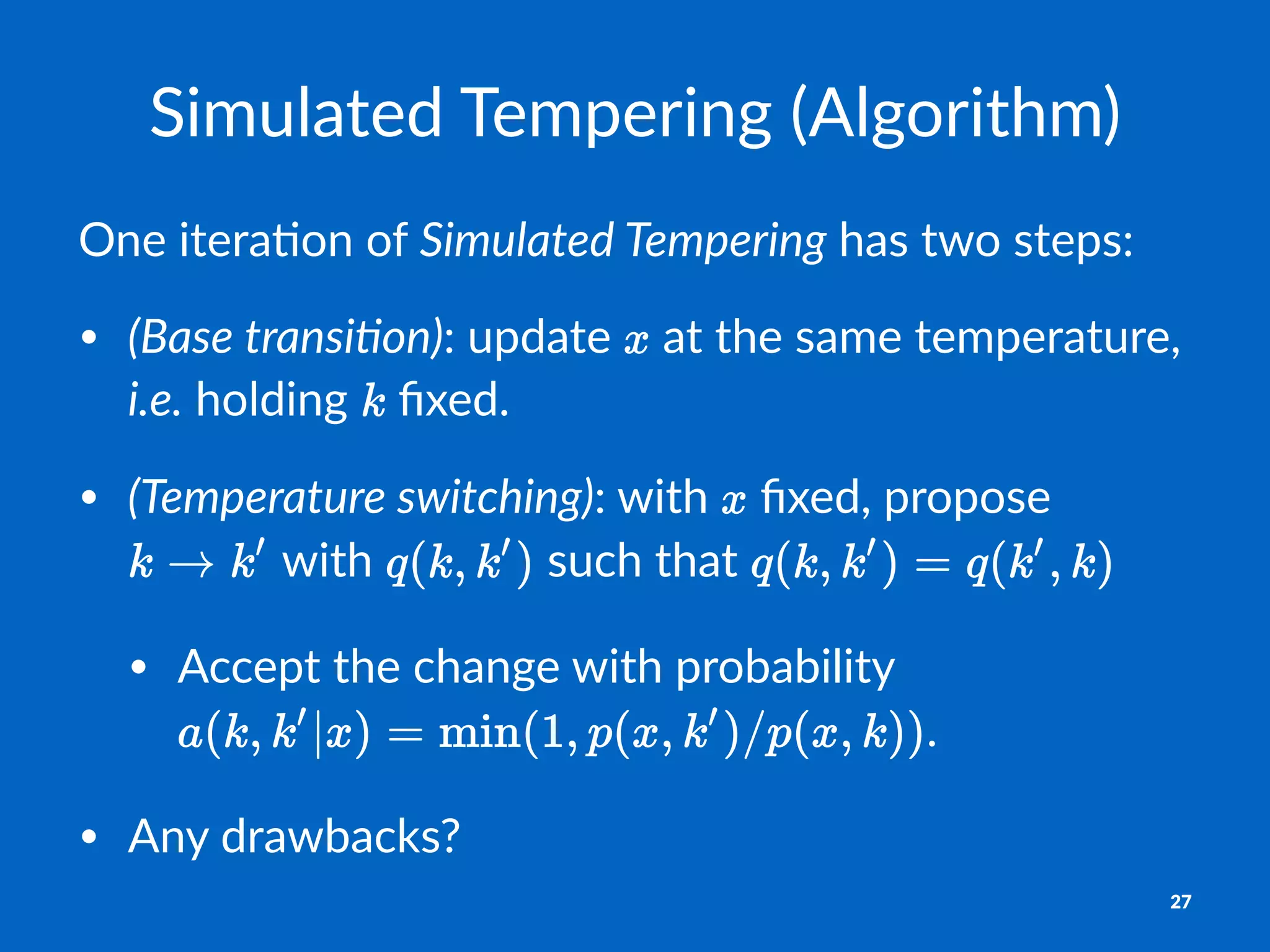

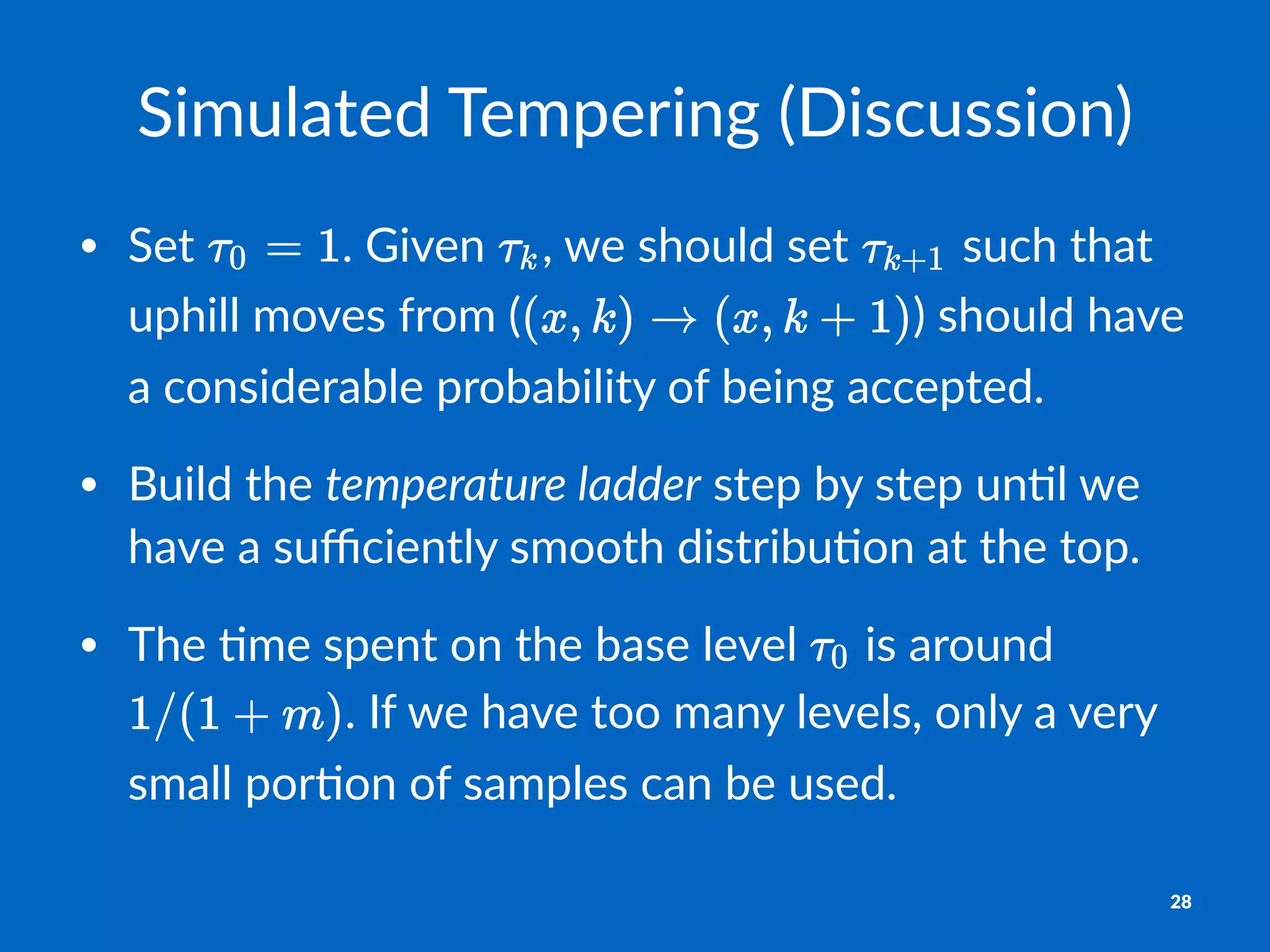

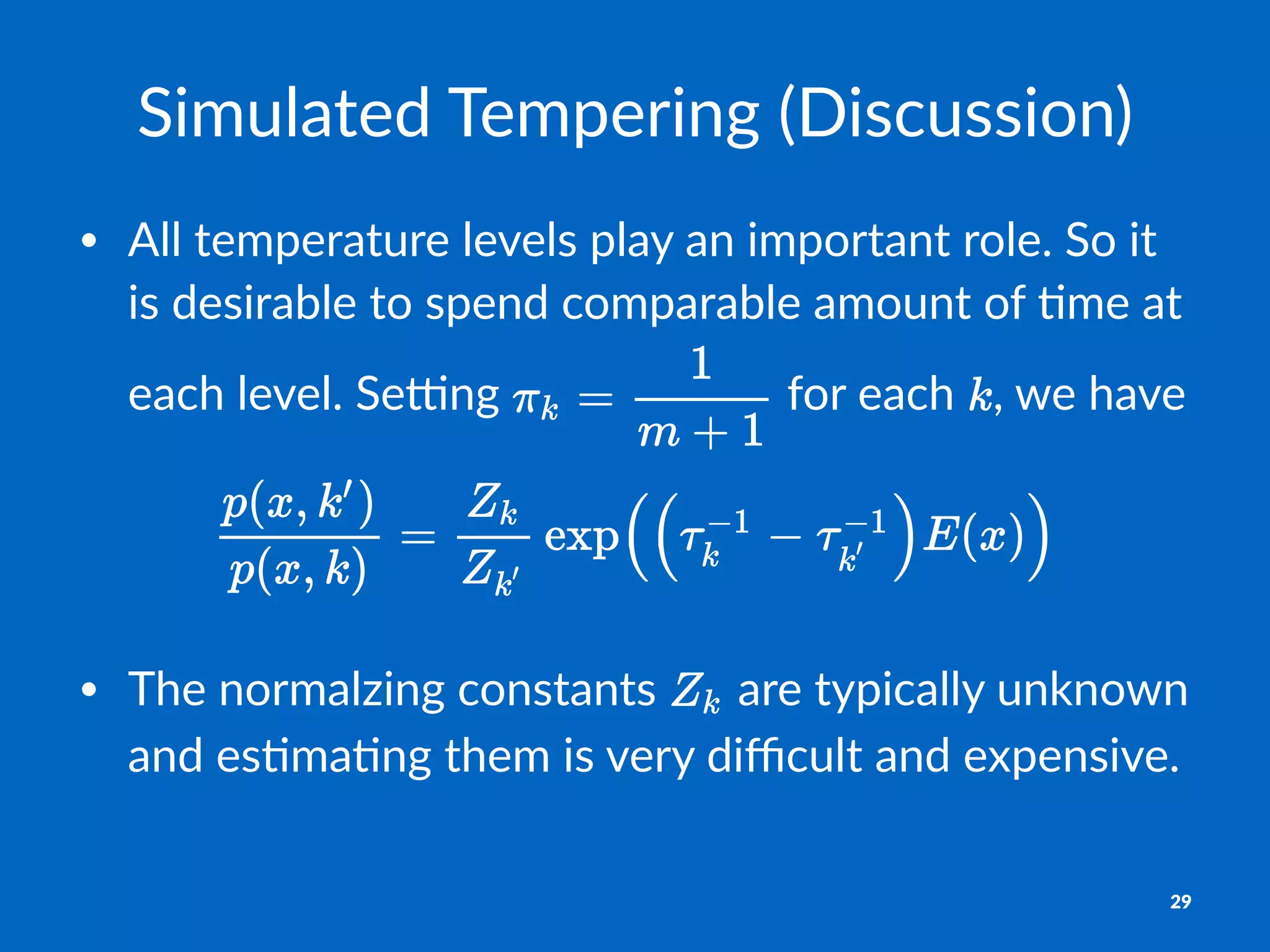

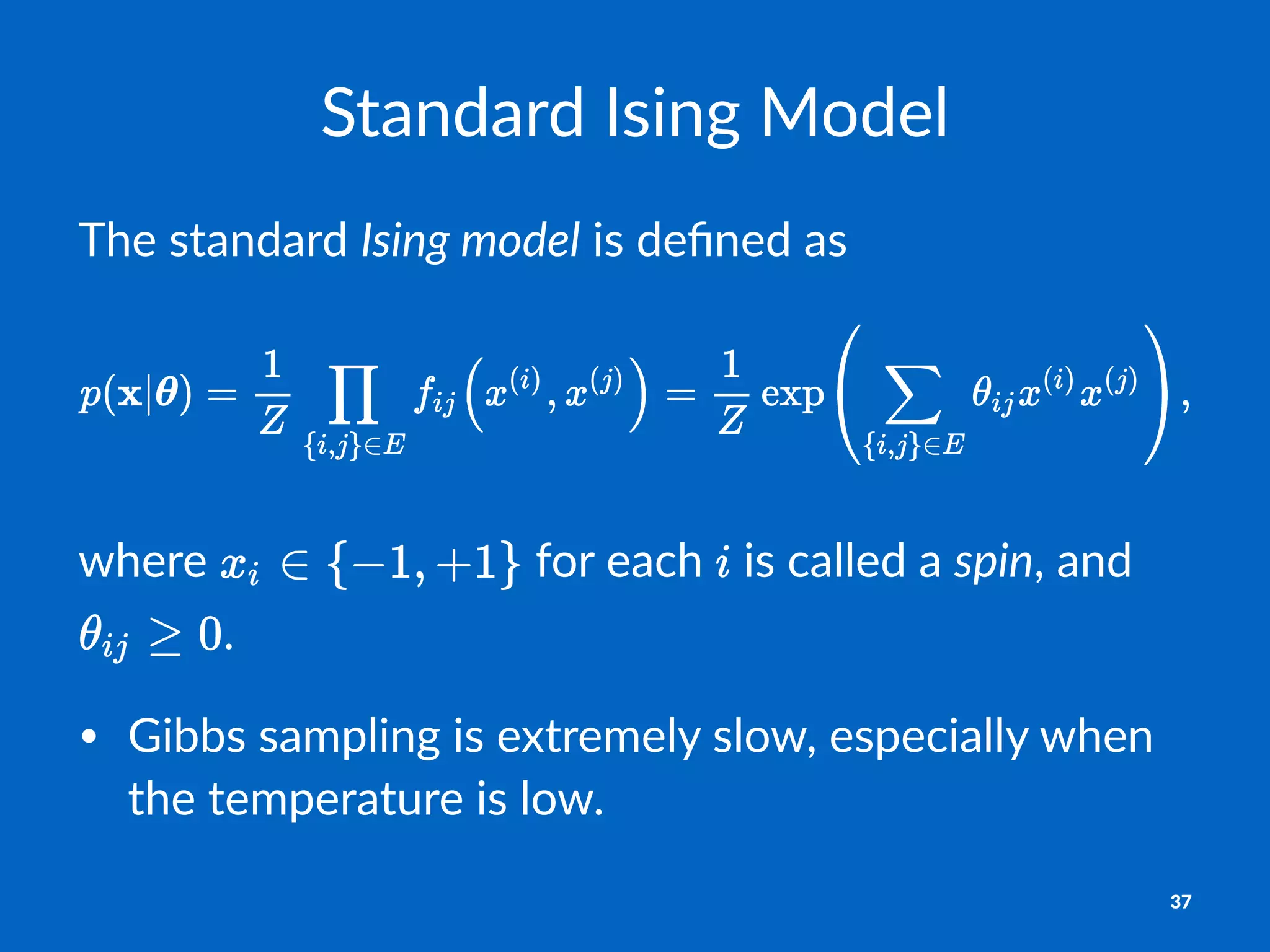

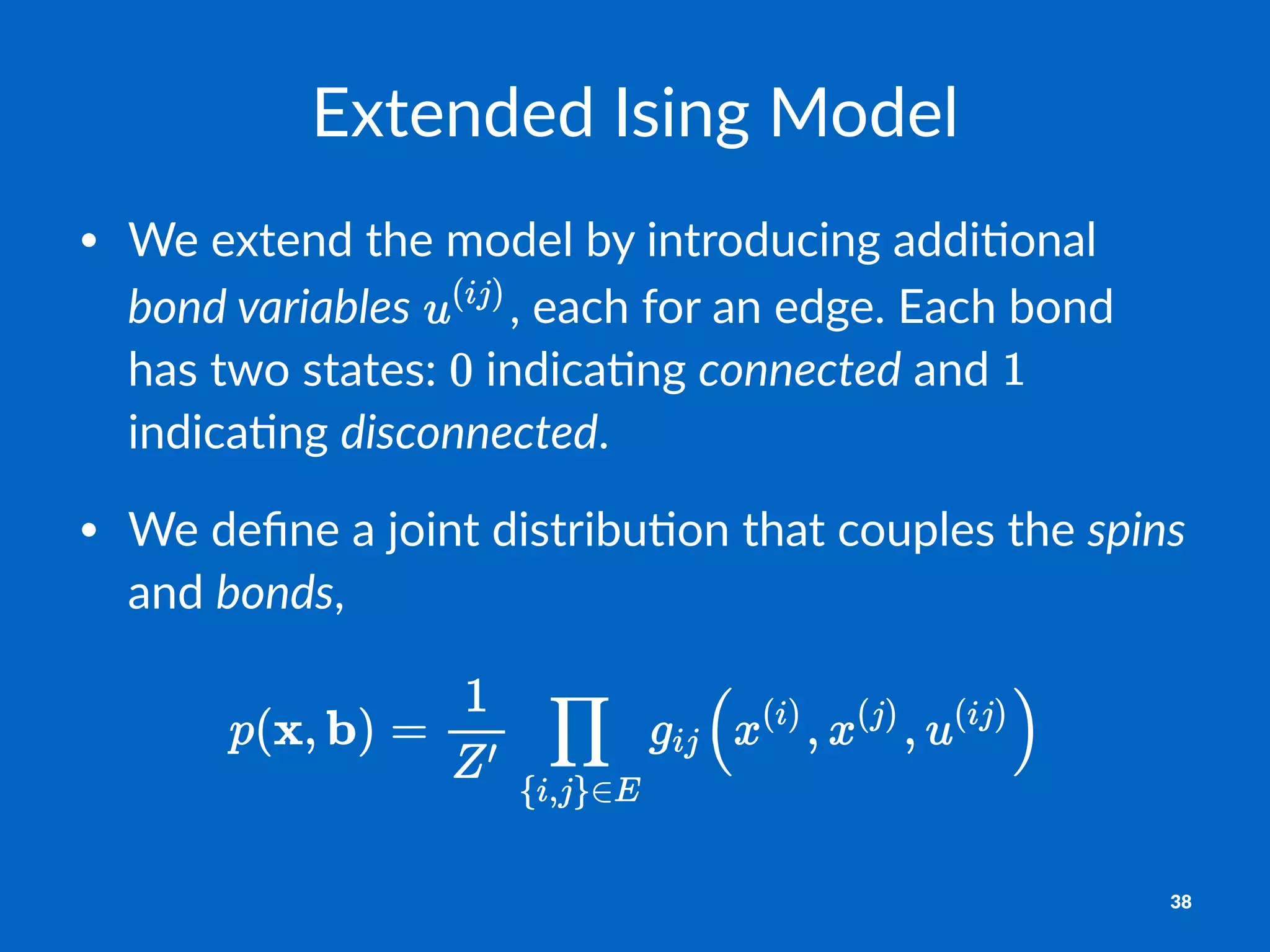

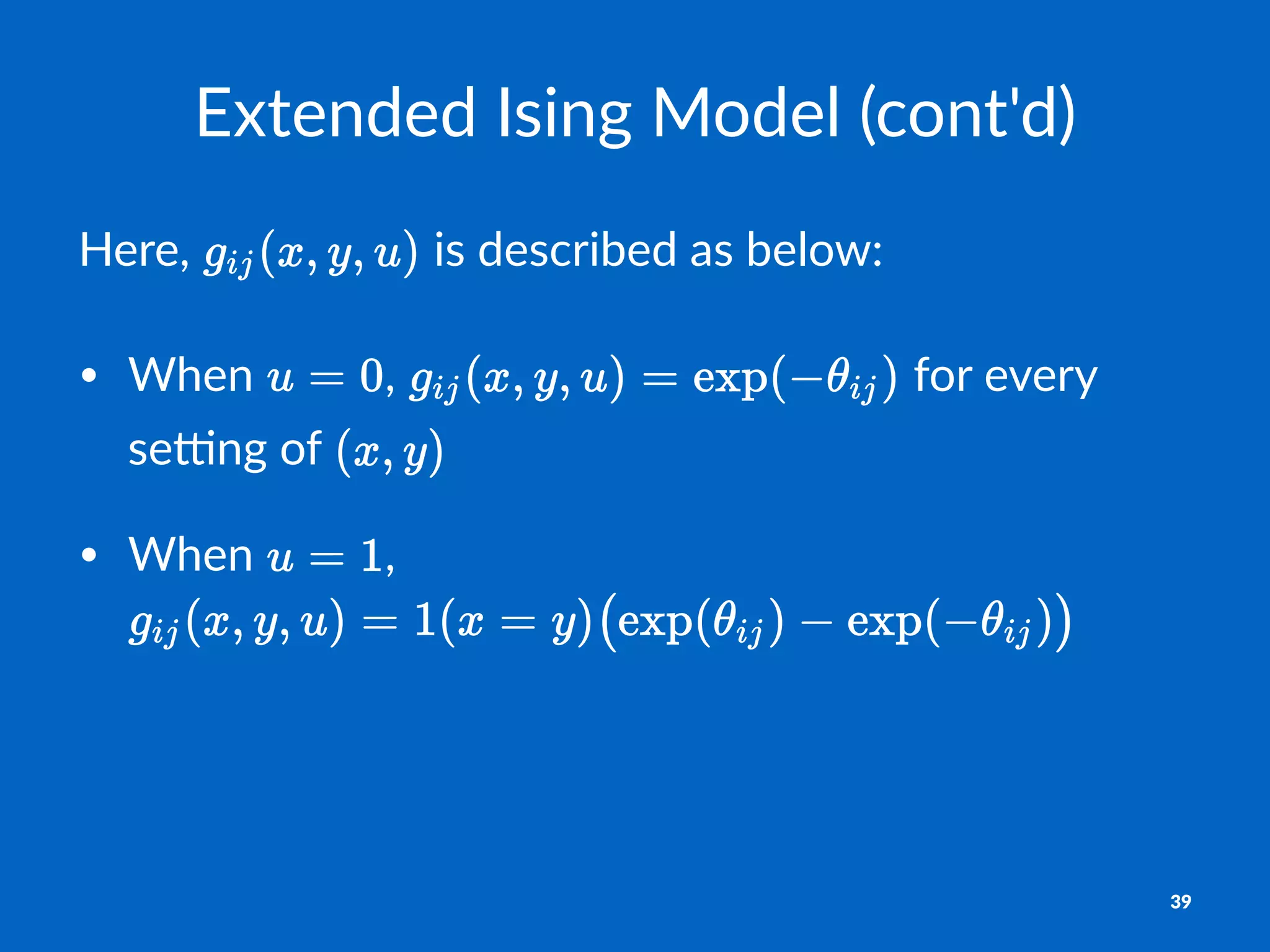

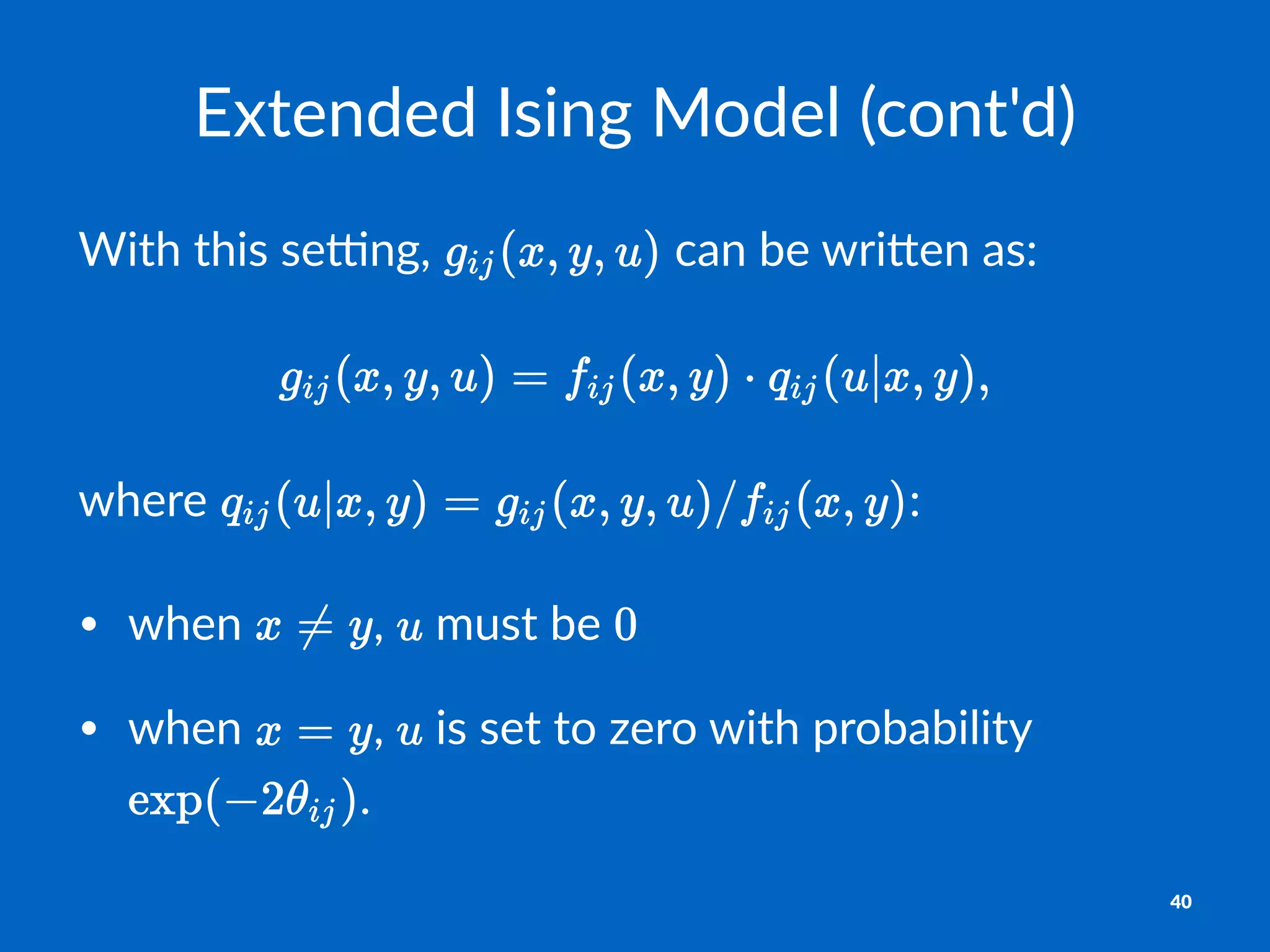

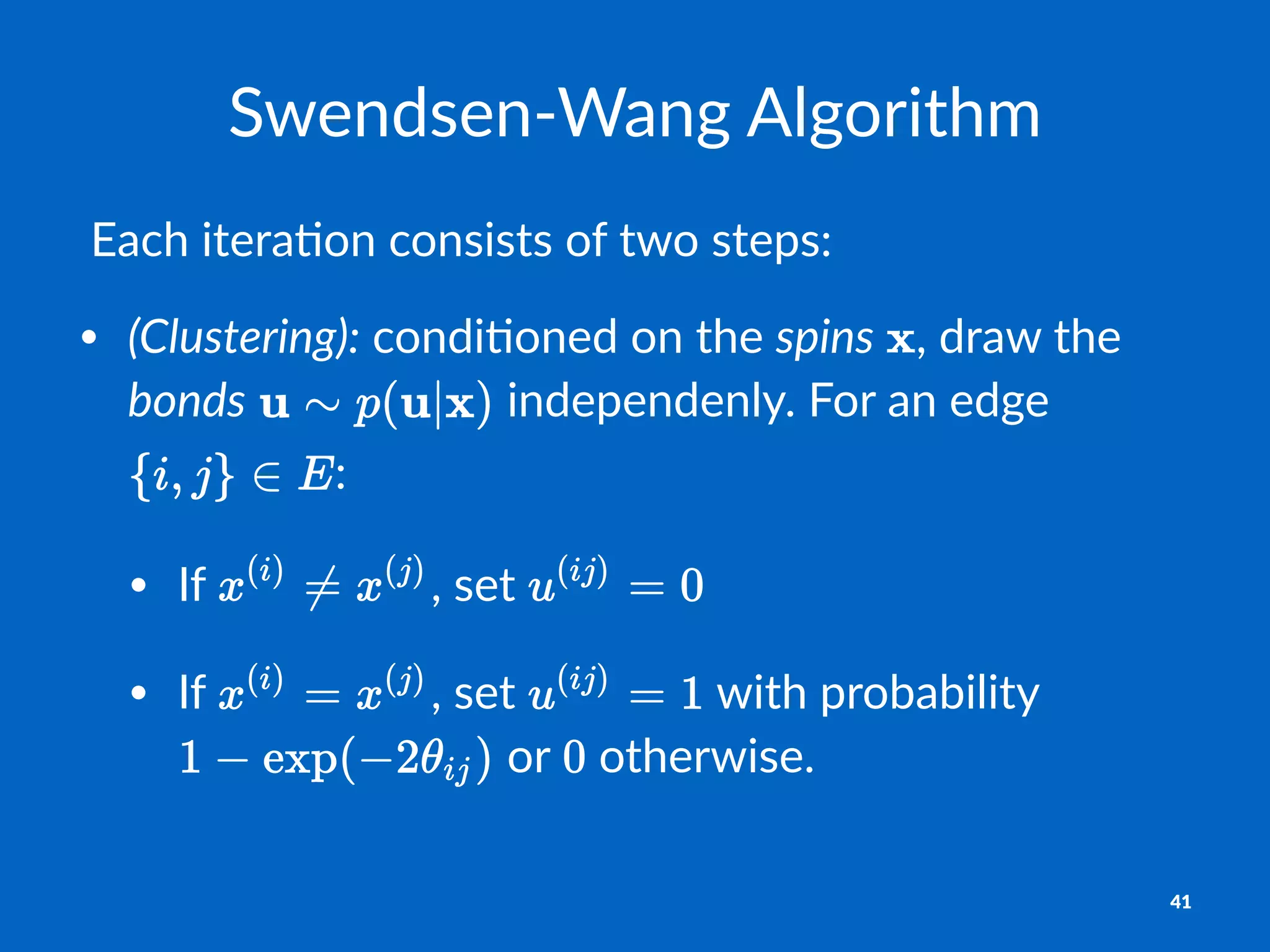

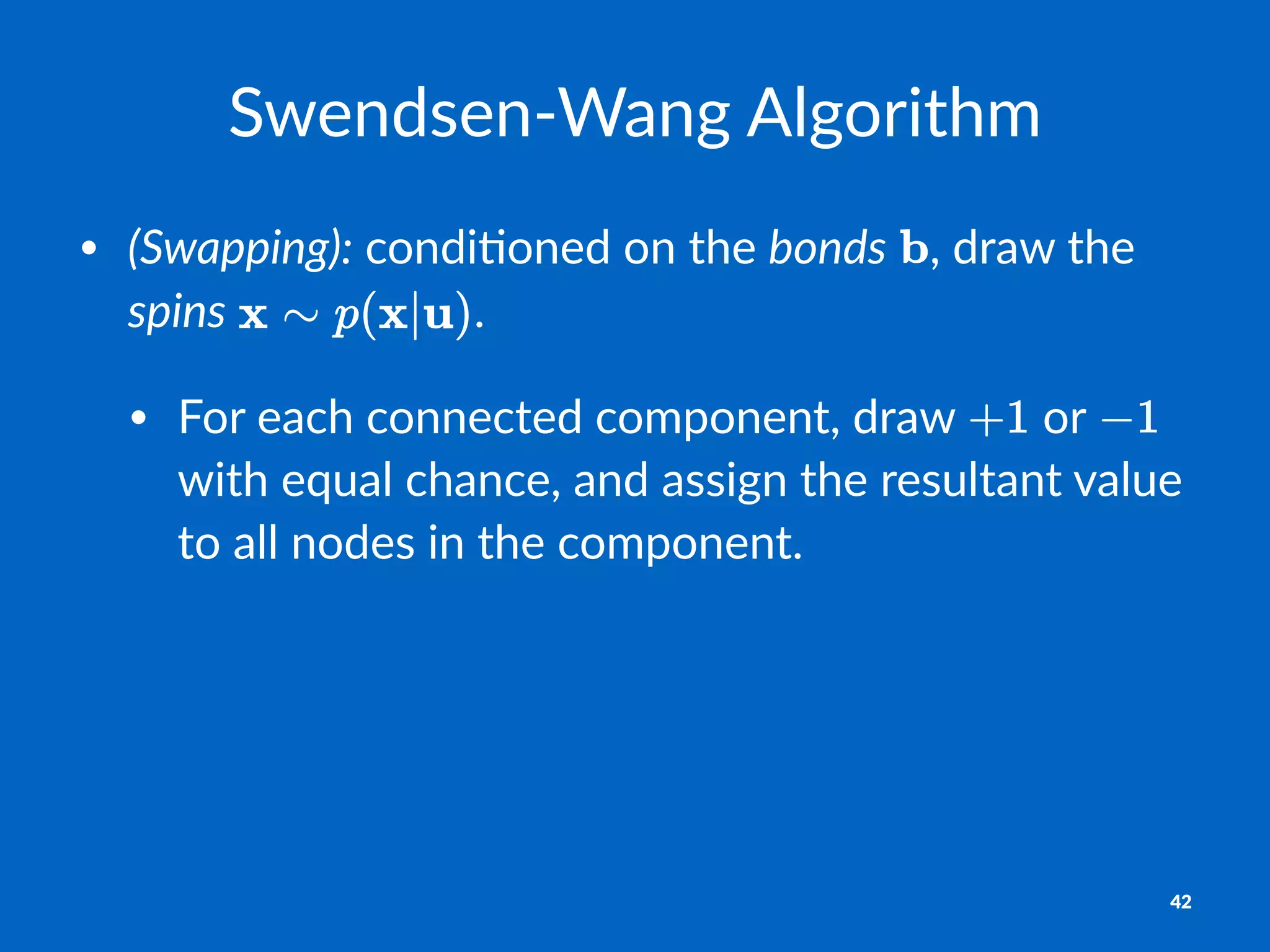

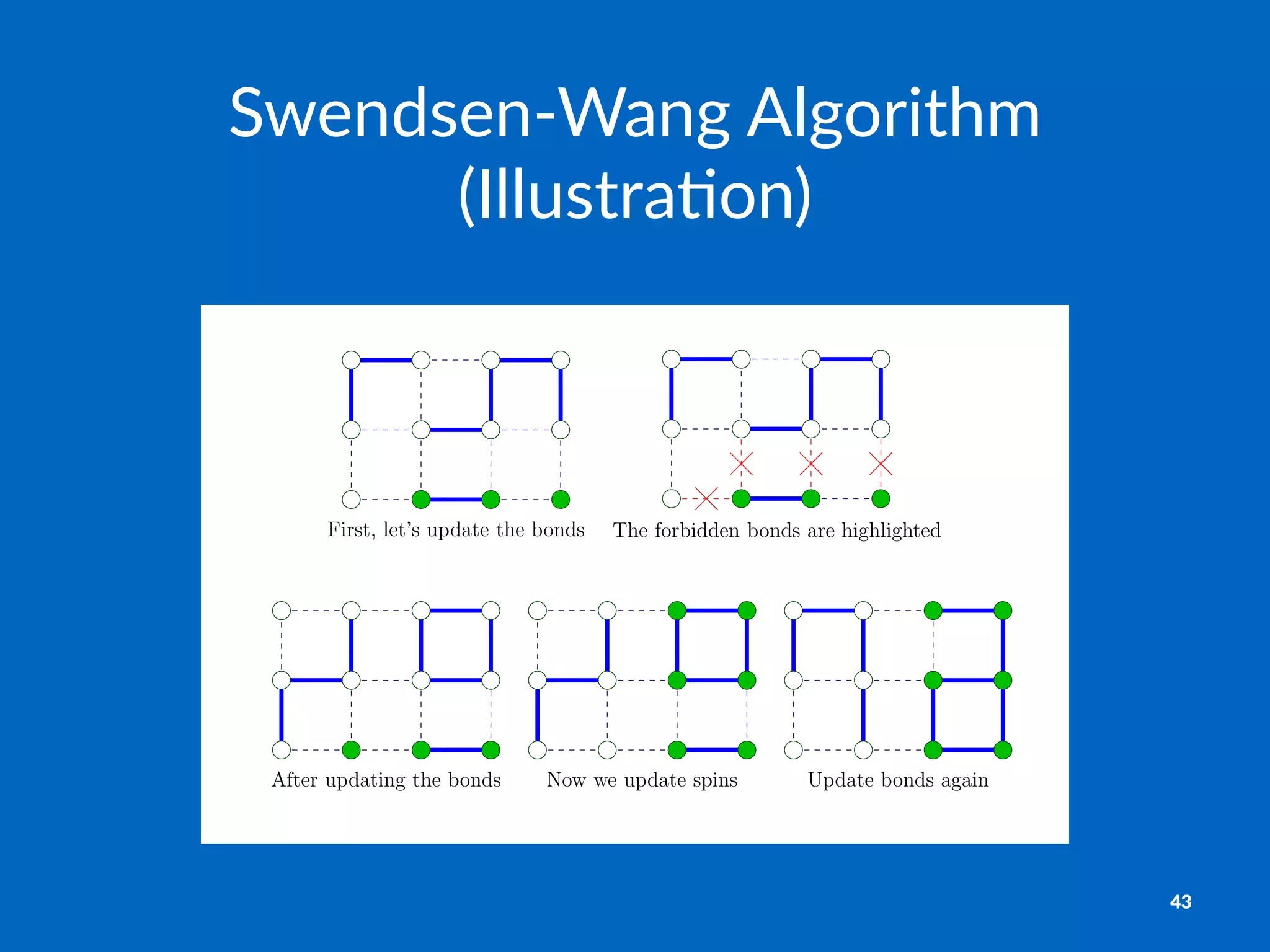

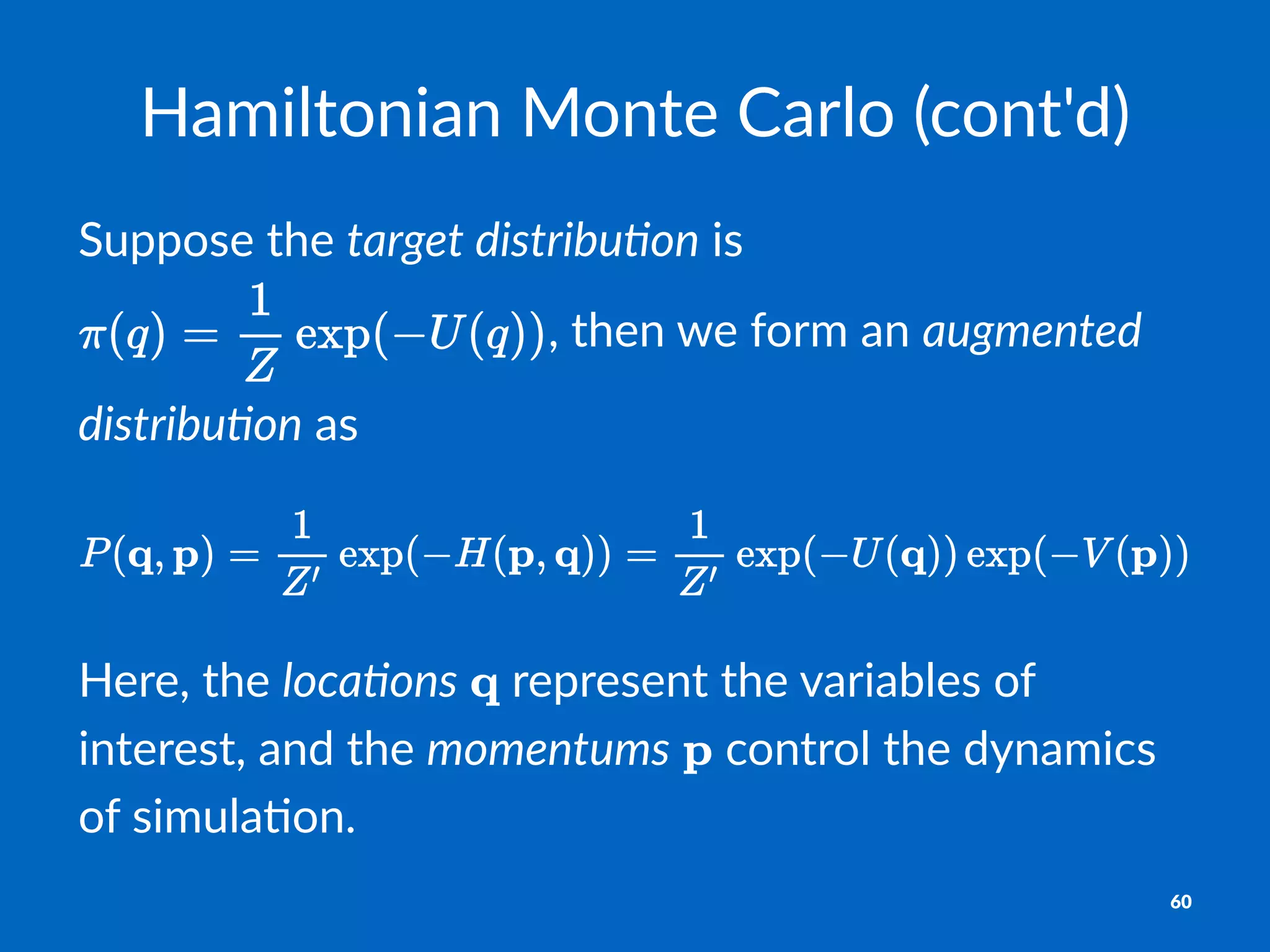

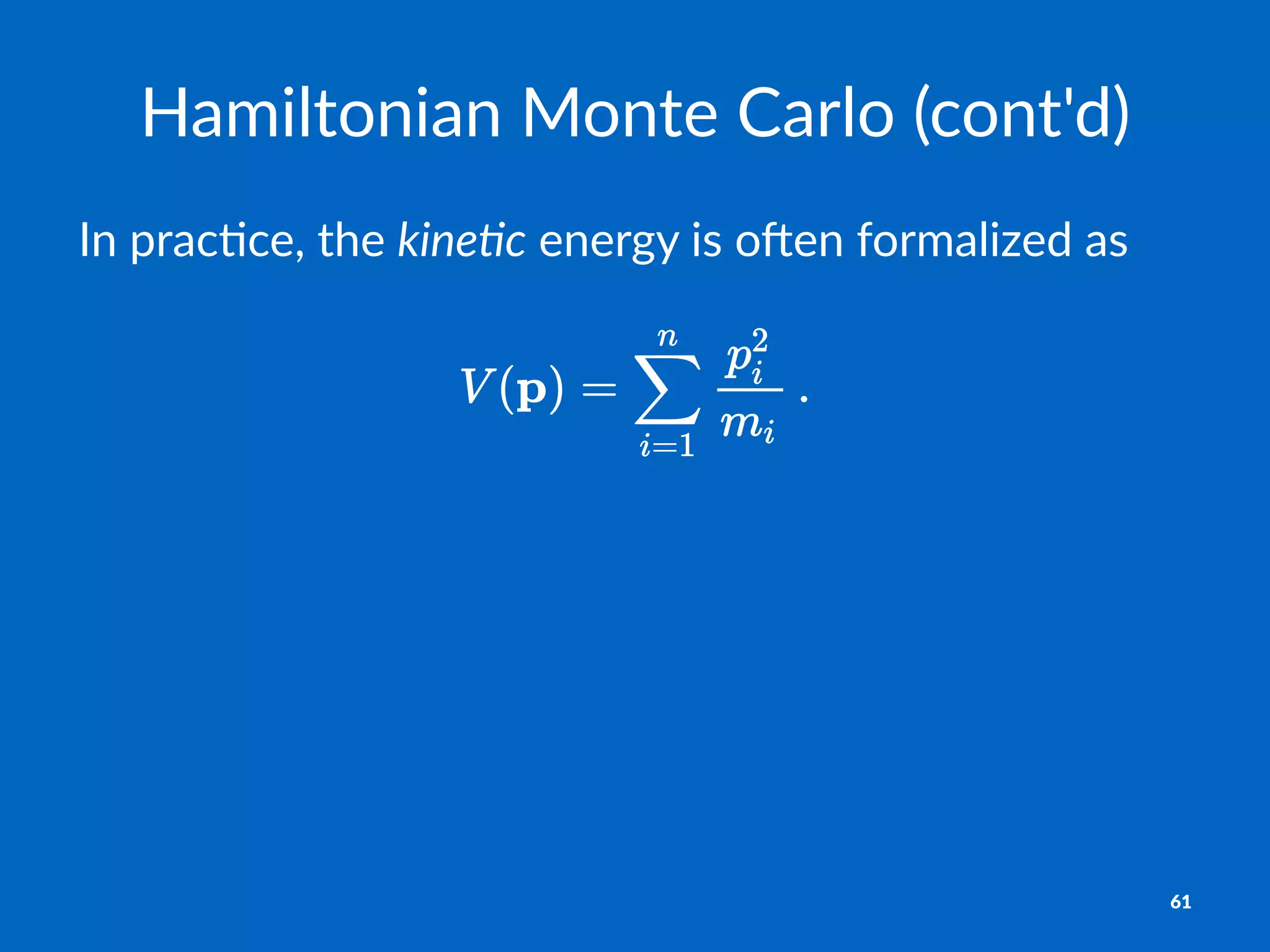

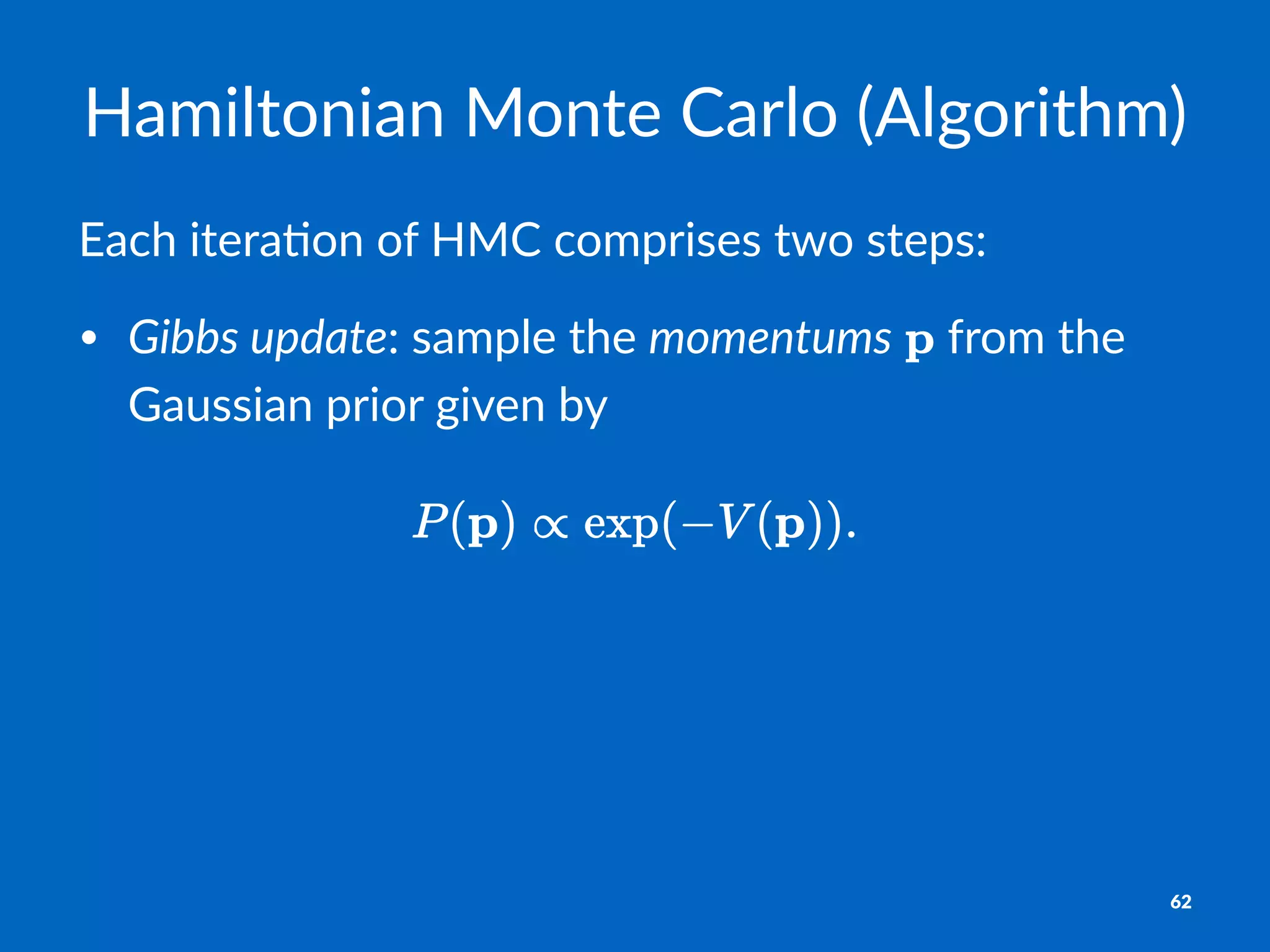

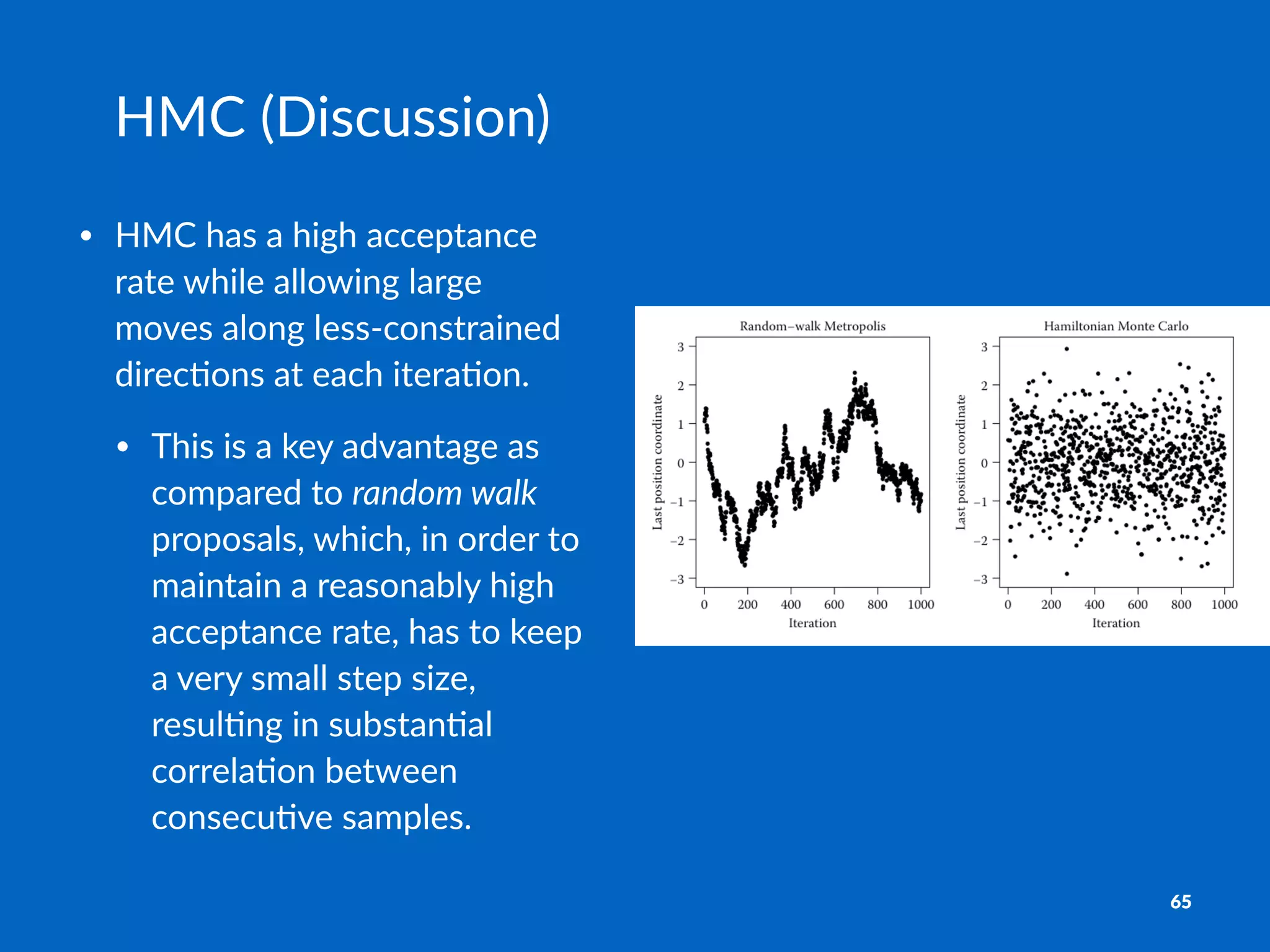

The document covers advanced sampling techniques for Markov chain Monte Carlo (MCMC): collapsed Gibbs sampling, auxiliary-variable methods, slice sampling, simulated tempering, and the Swendsen-Wang algorithm. It also covers the Rao-Blackwell theorem and Hamiltonian Monte Carlo, noting where each applies and what performance benefit it offers. Both theoretical foundations and practical implementations are considered, with the aim of improving sampling efficiency and convergence.

![Stan Example (slide 71)](https://image.slidesharecdn.com/lec3advsampleslides-150127040423-conversion-gate01/75/MLPI-Lecture-3-Advanced-Sampling-Techniques-71-2048.jpg)

Stan Example

    data {
      int<lower=0> N;
      vector[N] x;
      vector[N] y;
    }
    parameters {
      real alpha;
      real beta;
      real<lower=0> sigma;
    }
    model {
      for (n in 1:N)
        y[n] ~ normal(alpha + beta * x[n], sigma);
    }
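To make concrete what the Stan model above specifies, here is a minimal Python sketch of the same log density, sampled with plain random-walk Metropolis. This is not how Stan samples (Stan uses Hamiltonian Monte Carlo); the simpler sampler is swapped in only to keep the example short. The function names `simulate_data`, `log_posterior`, and `metropolis`, the synthetic data, and the step size are all assumptions made for illustration. Because the model block states no priors, flat priors are assumed, and `sigma` is handled on the log scale with the corresponding Jacobian term.

```python
import math
import random


def simulate_data(n=50, seed=1):
    """Hypothetical synthetic data: y = 1 + 2x + Normal(0, 0.5) noise."""
    rng = random.Random(seed)
    x = [rng.uniform(-2.0, 2.0) for _ in range(n)]
    y = [1.0 + 2.0 * xi + rng.gauss(0.0, 0.5) for xi in x]
    return x, y


def log_posterior(alpha, beta, log_sigma, x, y):
    """Unnormalized log density of the regression model, with sigma = exp(log_sigma).

    Flat priors are assumed on alpha, beta, and sigma > 0. The trailing
    + log_sigma is the Jacobian of the change of variables sigma -> log(sigma).
    """
    sigma = math.exp(log_sigma)
    sq_err = sum((yi - (alpha + beta * xi)) ** 2 for xi, yi in zip(x, y))
    n = len(y)
    return -n * log_sigma - 0.5 * sq_err / sigma ** 2 + log_sigma


def metropolis(x, y, n_iter=20000, step=0.1, seed=0):
    """Random-walk Metropolis over (alpha, beta, log_sigma)."""
    rng = random.Random(seed)
    state = [0.0, 0.0, 0.0]
    lp = log_posterior(*state, x, y)
    draws = []
    for _ in range(n_iter):
        # Symmetric Gaussian proposal around the current state.
        proposal = [s + rng.gauss(0.0, step) for s in state]
        lp_new = log_posterior(*proposal, x, y)
        # Accept with probability min(1, exp(lp_new - lp)).
        if rng.random() < math.exp(min(0.0, lp_new - lp)):
            state, lp = proposal, lp_new
        draws.append((state[0], state[1], math.exp(state[2])))
    return draws


if __name__ == "__main__":
    x, y = simulate_data()
    draws = metropolis(x, y)
    kept = draws[len(draws) // 2:]  # discard the first half as burn-in
    for name, idx in (("alpha", 0), ("beta", 1), ("sigma", 2)):
        print(name, sum(d[idx] for d in kept) / len(kept))
```

On the synthetic data, the posterior means should land near the generating values (1, 2, and 0.5), though the exact numbers depend on the seed and step size. A random-walk sampler like this mixes far more slowly than Hamiltonian Monte Carlo on harder posteriors, which is part of the motivation for the techniques listed in the summary.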