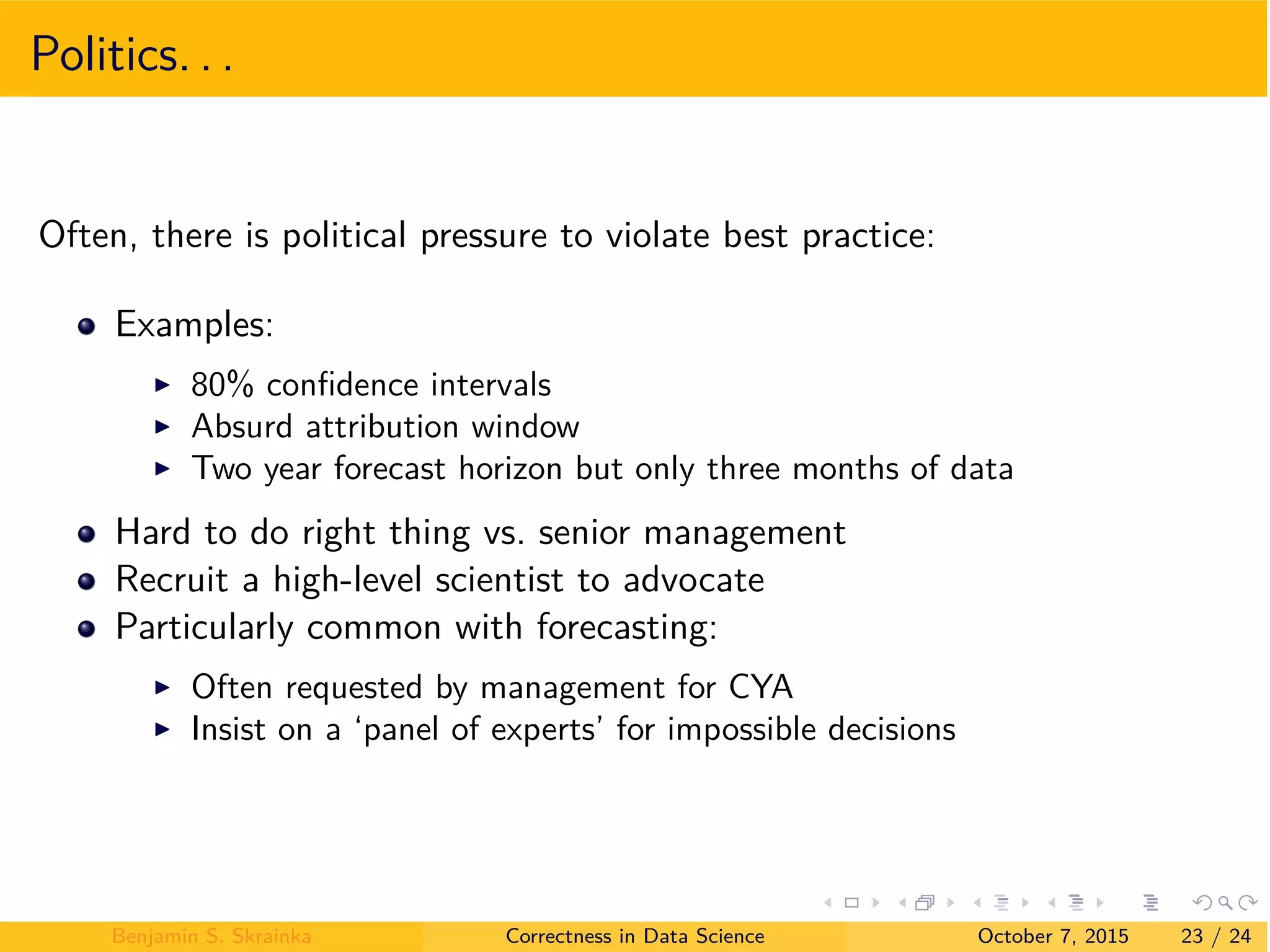

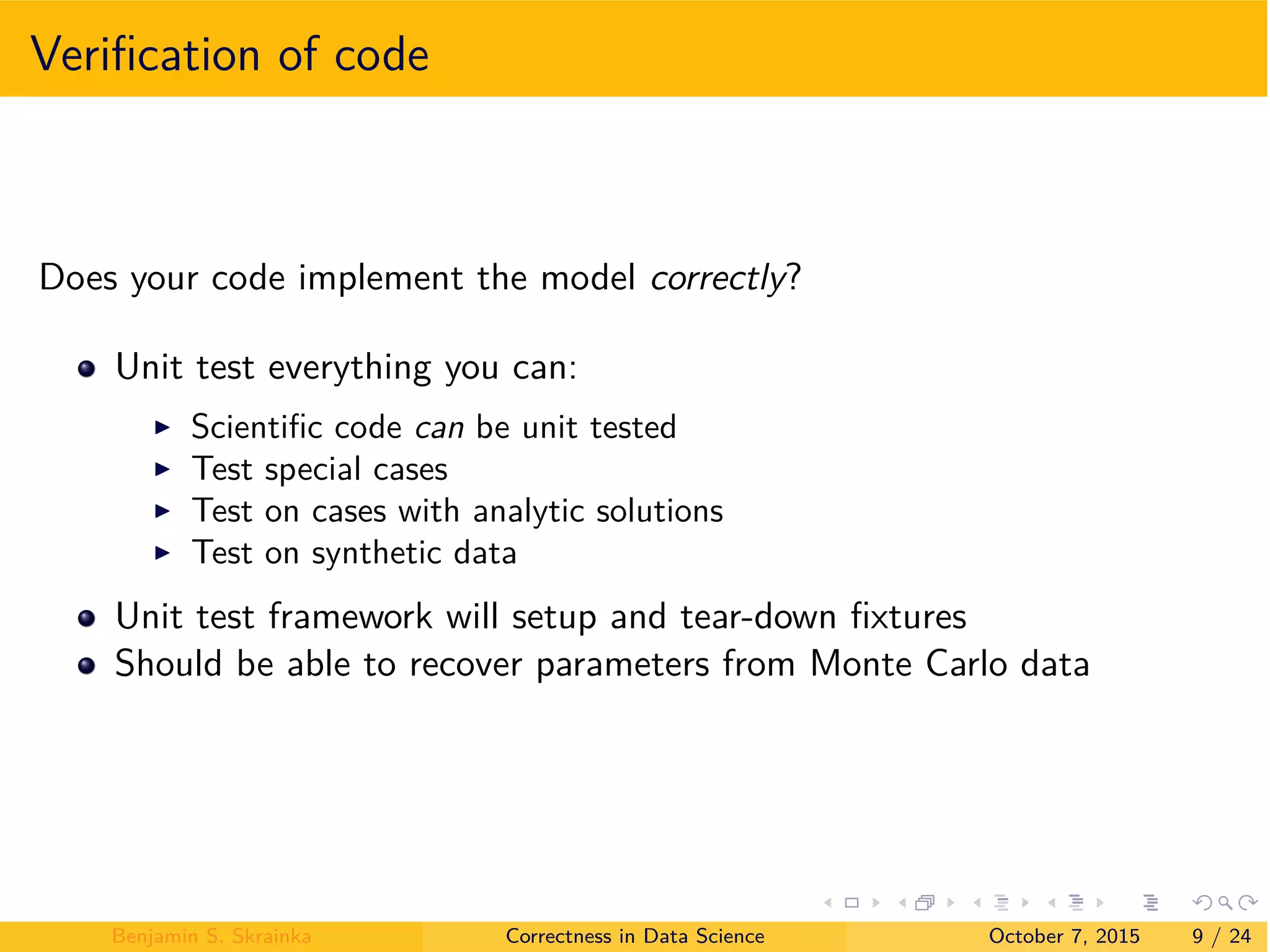

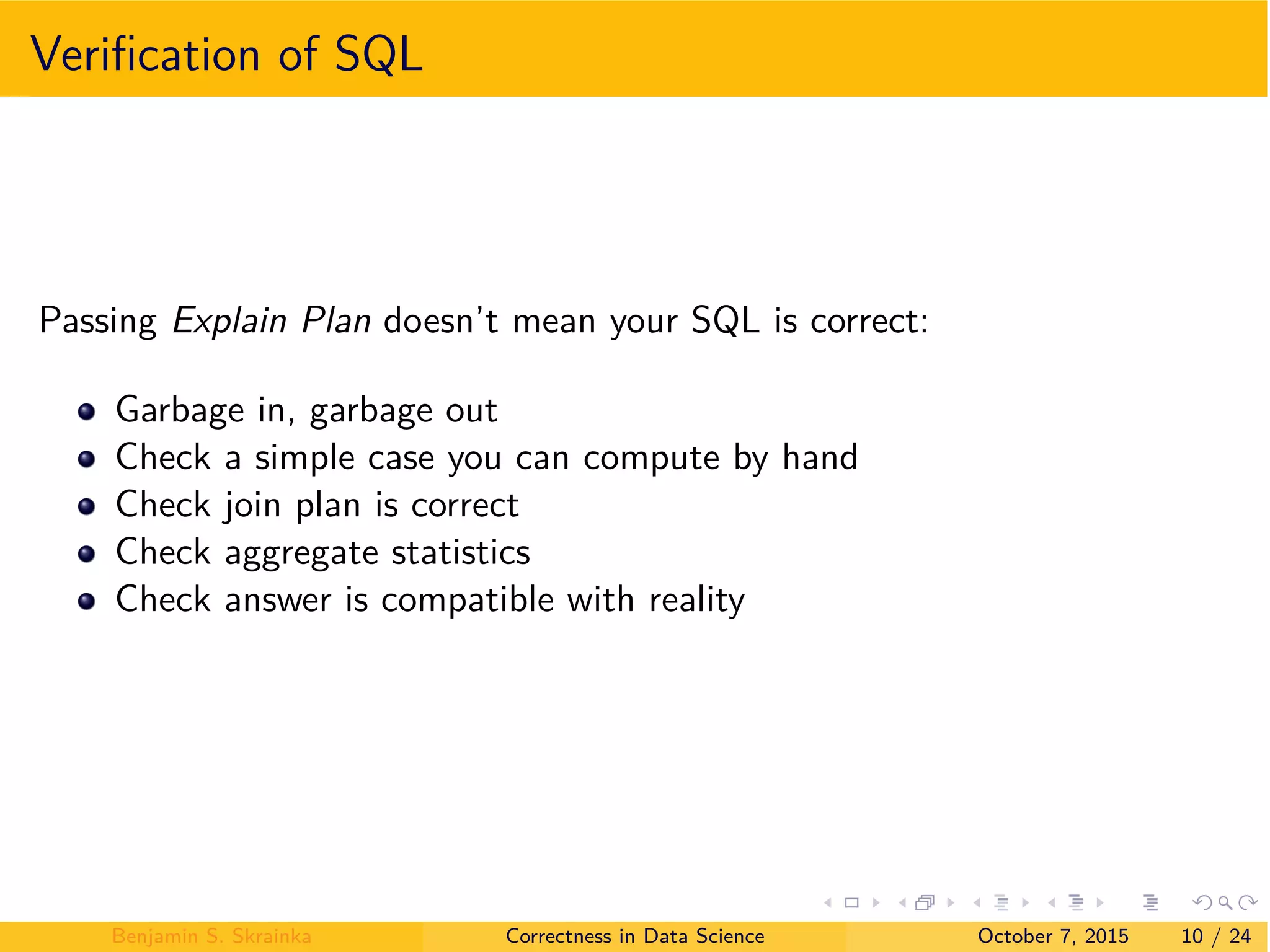

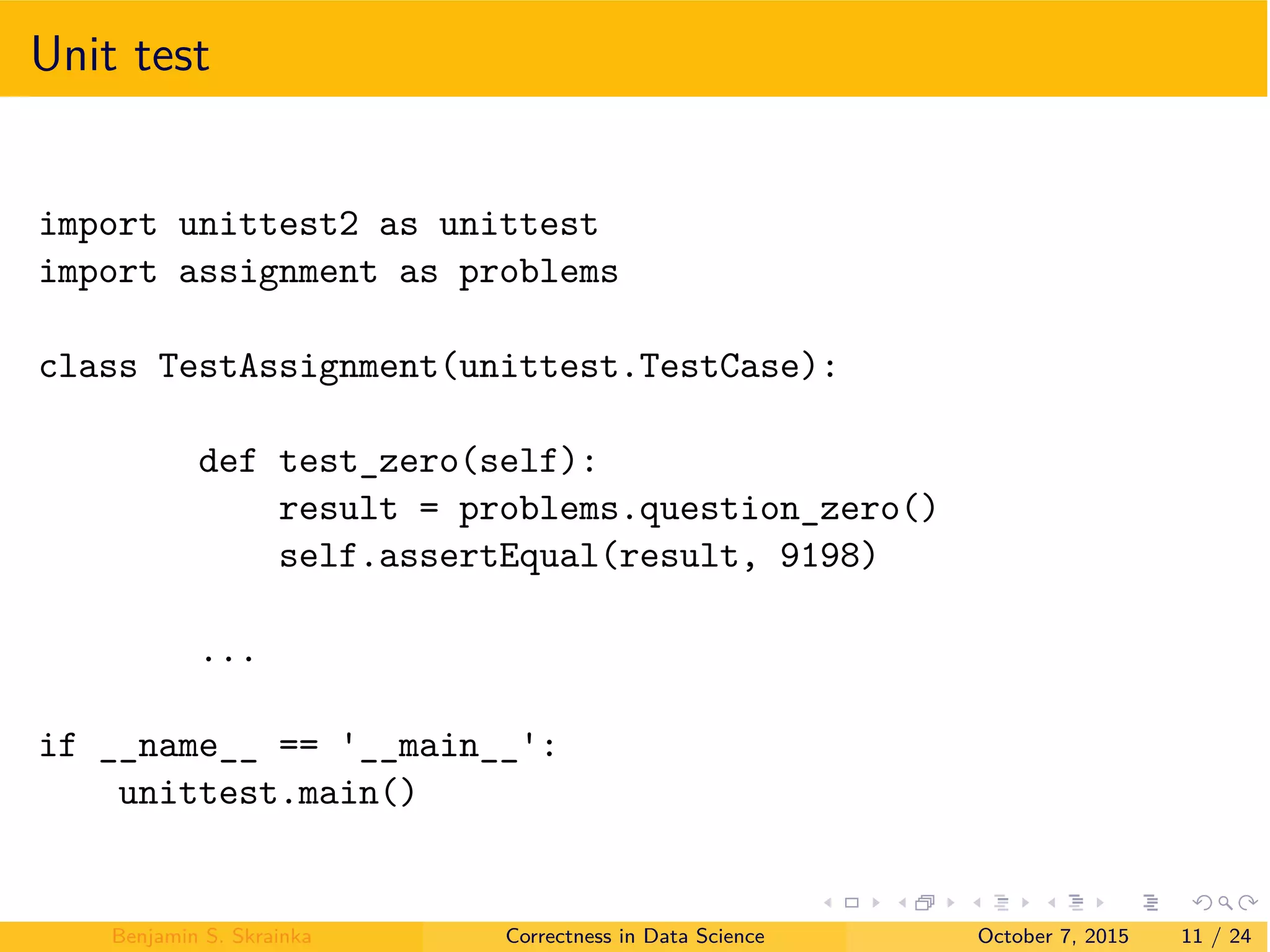

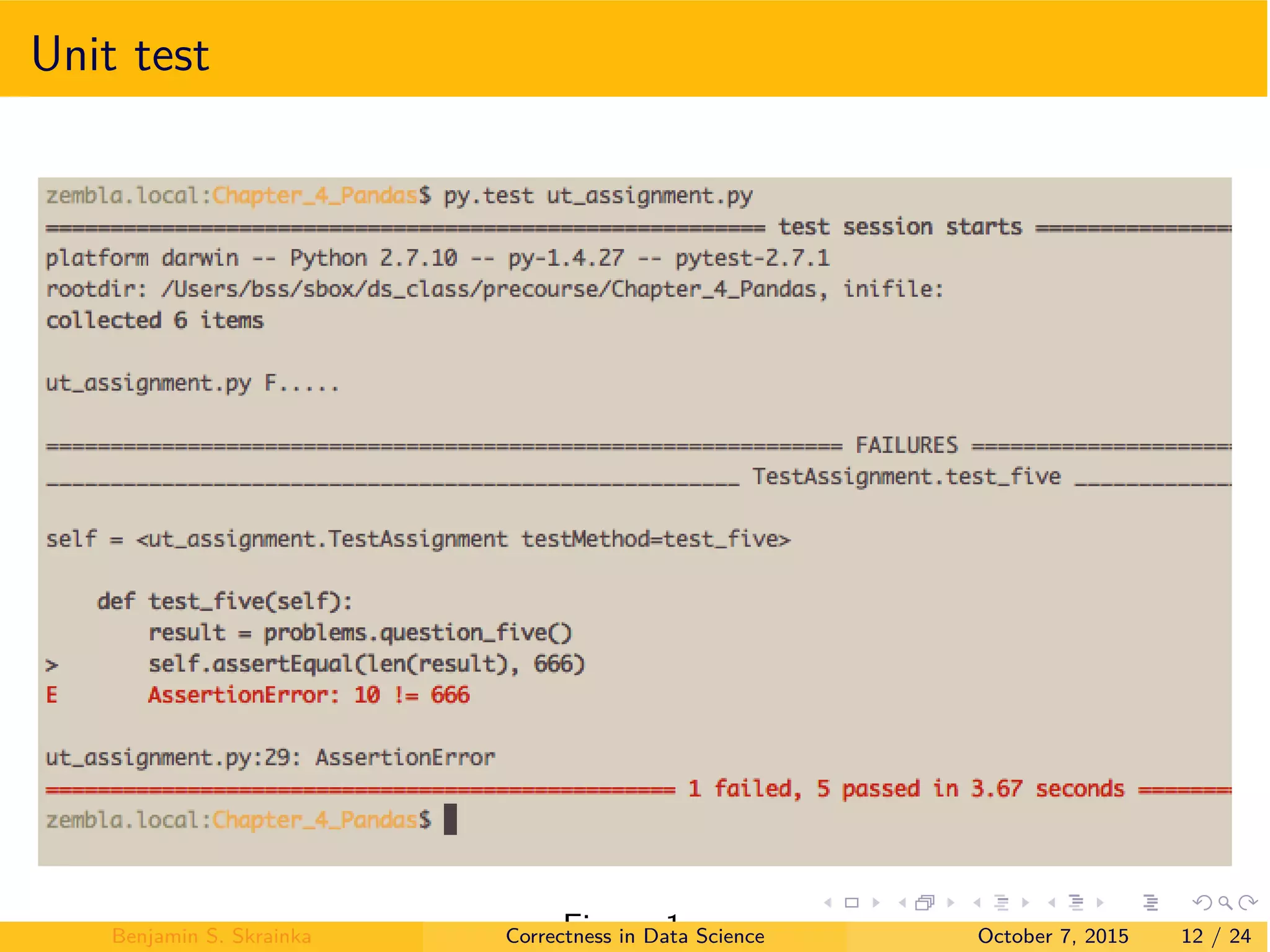

The document discusses correctness in data science, centering on the verification, validation, and uncertainty quantification (VV&UQ) framework as a way to check that models are accurate and reliable. It outlines practices and habits that improve the quality and integrity of data science work, and notes that poor data science can have significant consequences. It concludes that adopting these methods and habits raises the overall quality of data science practice.

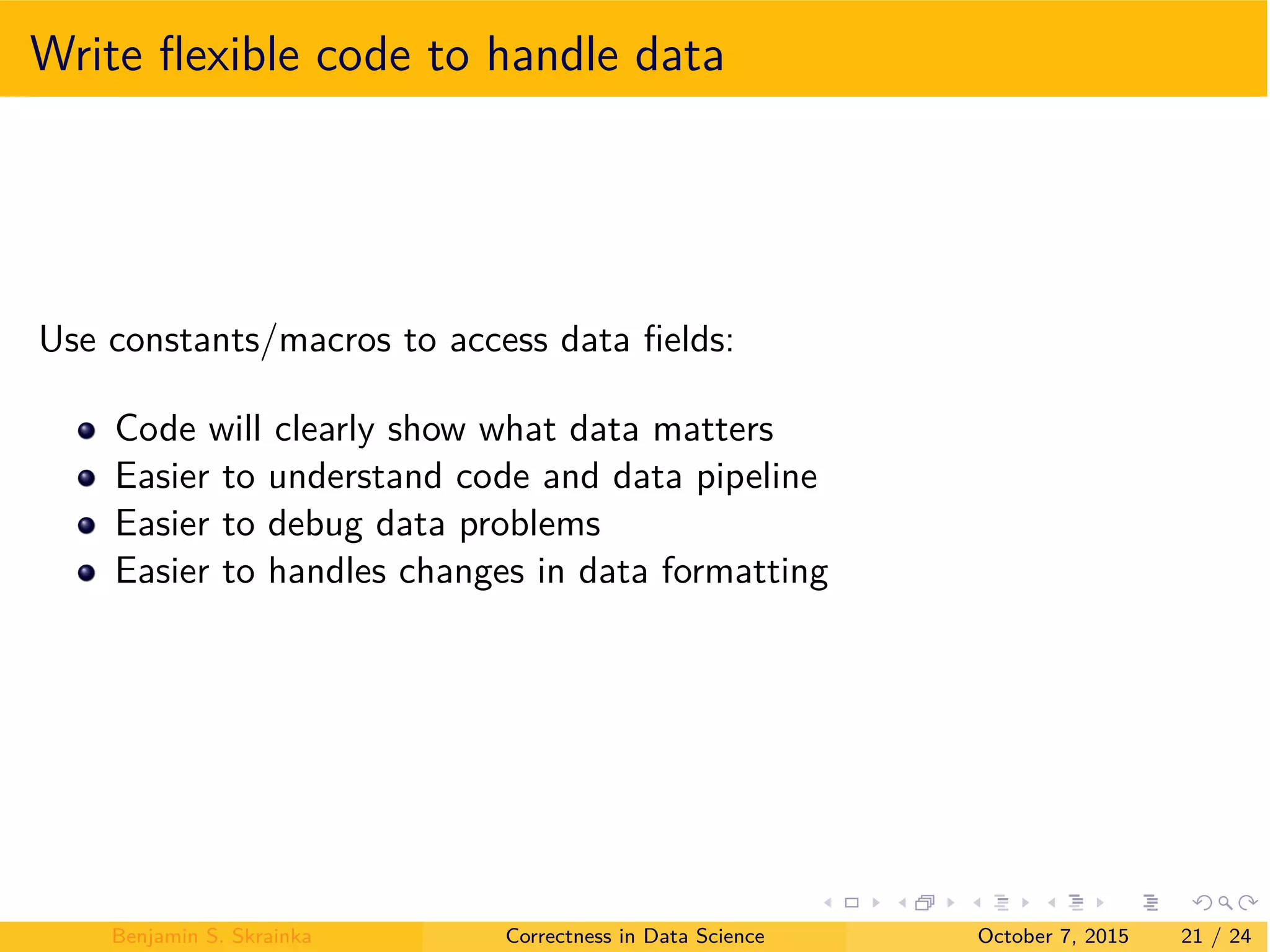

![Slide 22 of 24: Python example](https://image.slidesharecdn.com/4dominodatasciencepop-upseattlebenskrainkacorrectnessindatascience-151013125215-lva1-app6891/75/Correctness-in-Data-Science-Data-Science-Pop-up-Seattle-24-2048.jpg)

Python example

    # Setup indicators
    ix_gdp = 7
    ...

    # Load & clean data
    m_raw = np.recfromcsv('bea_gdp.csv')
    gdp = m_raw[:, ix_gdp]
    ...

Benjamin S. Skrainka, Correctness in Data Science, October 7, 2015 (slide 22 / 24)
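The slide's Python example is elided, so it cannot be run as shown. Below is a minimal, hedged sketch of the same "set up indicators, then load and clean" pattern, not the author's actual code. It makes several assumptions: the column is selected by a hypothetical header name (`gdp`) rather than by position, `np.genfromtxt` is swapped in for the slide's `np.recfromcsv`, the validation rules (no missing values, strictly positive GDP) are illustrative, and the in-memory CSV holds toy numbers standing in for `bea_gdp.csv`, not real BEA data.

```python
import io

import numpy as np

# Hypothetical column name; the slide selects the column by position (ix_gdp = 7).
GDP_COLUMN = "gdp"

# Toy stand-ins for bea_gdp.csv. These numbers are made up for illustration.
GOOD_CSV = """year,quarter,gdp
2014,1,100.0
2014,2,101.5
2014,3,102.1
2014,4,103.2
"""

BAD_CSV = """year,quarter,gdp
2014,1,100.0
2014,2,-1.0
"""


def load_gdp(source, column=GDP_COLUMN):
    """Load one numeric column from a CSV with a header row and validate it."""
    # names=True reads the header row, so columns can be selected by name.
    raw = np.genfromtxt(source, delimiter=",", names=True, dtype=float)
    if column not in raw.dtype.names:
        raise KeyError(f"column {column!r} not found; have {raw.dtype.names}")
    values = np.atleast_1d(raw[column])
    # Illustrative checks: fail loudly instead of passing bad data downstream.
    if np.isnan(values).any():
        raise ValueError("missing or non-numeric values in column")
    if (values <= 0).any():
        raise ValueError("GDP values must be strictly positive")
    return values


if __name__ == "__main__":
    gdp = load_gdp(io.StringIO(GOOD_CSV))
    print(gdp)
```

Selecting by name means a reordered input file raises a `KeyError` rather than silently reading the wrong column, and the explicit checks turn bad rows into errors at load time. Passing a file path instead of `io.StringIO(...)` works the same way.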