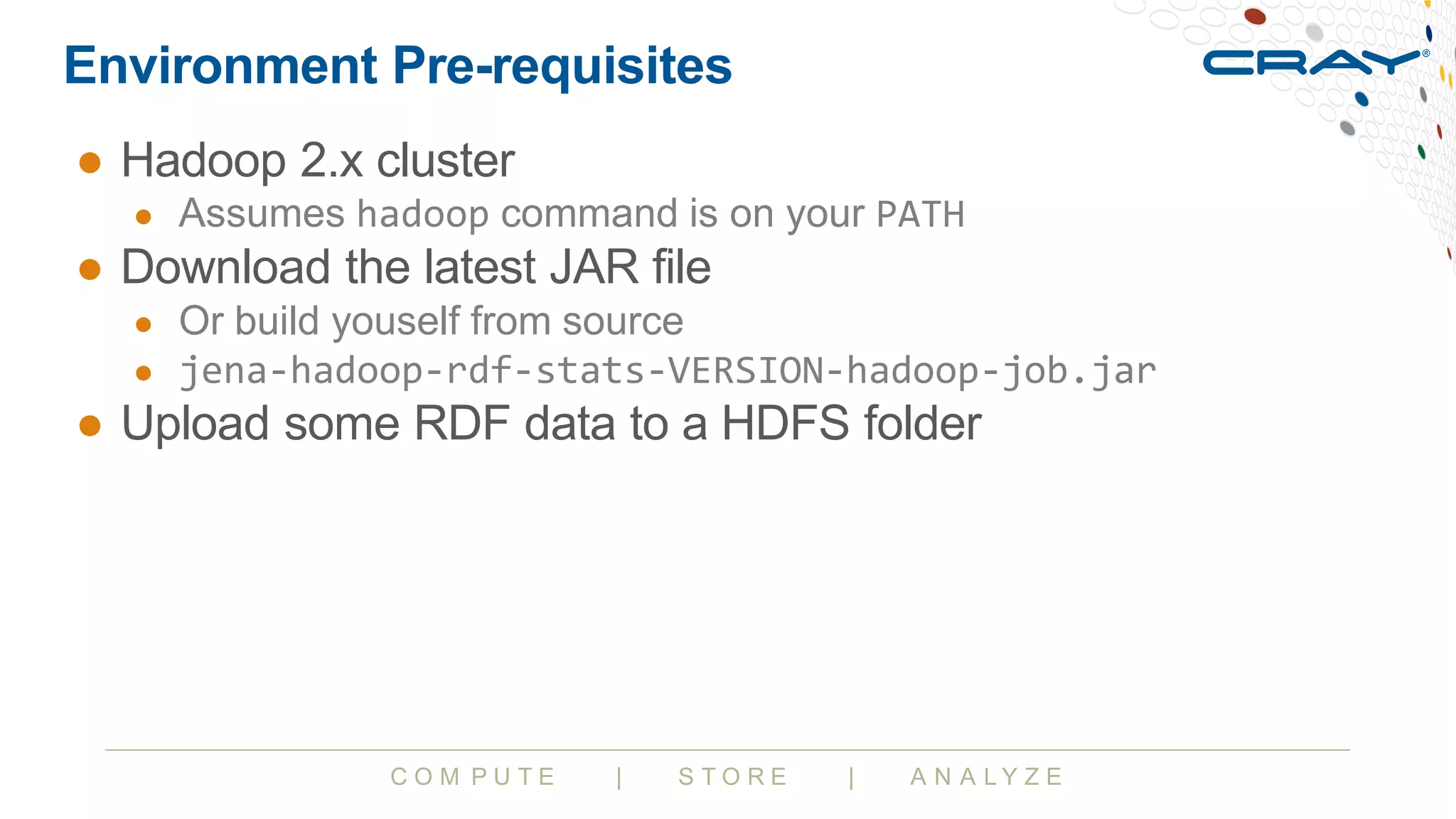

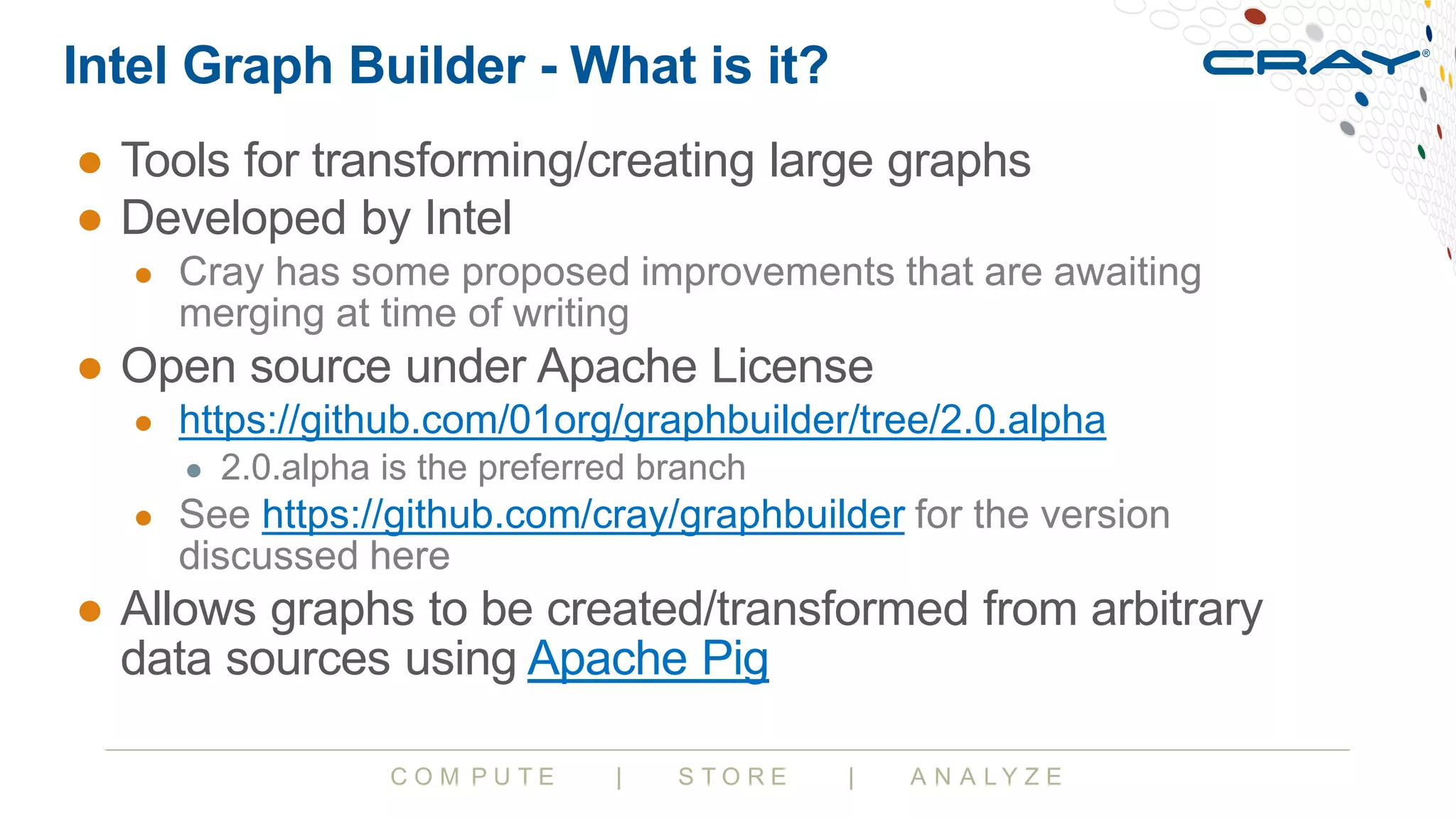

The document discusses projects for working with Resource Description Framework (RDF) data in the Hadoop ecosystem. It describes Apache Jena Elephas, a set of modules that enable RDF on Hadoop by providing Writable types for RDF primitives and input/output support. It also discusses Intel Graph Builder, which allows graphs to be created or transformed from data sources using Apache Pig. The document encourages participants to try out these projects and contribute by suggesting features, reporting issues, or contributing code.

![C O M P U T E | S T O R E | A N A L Y Z E

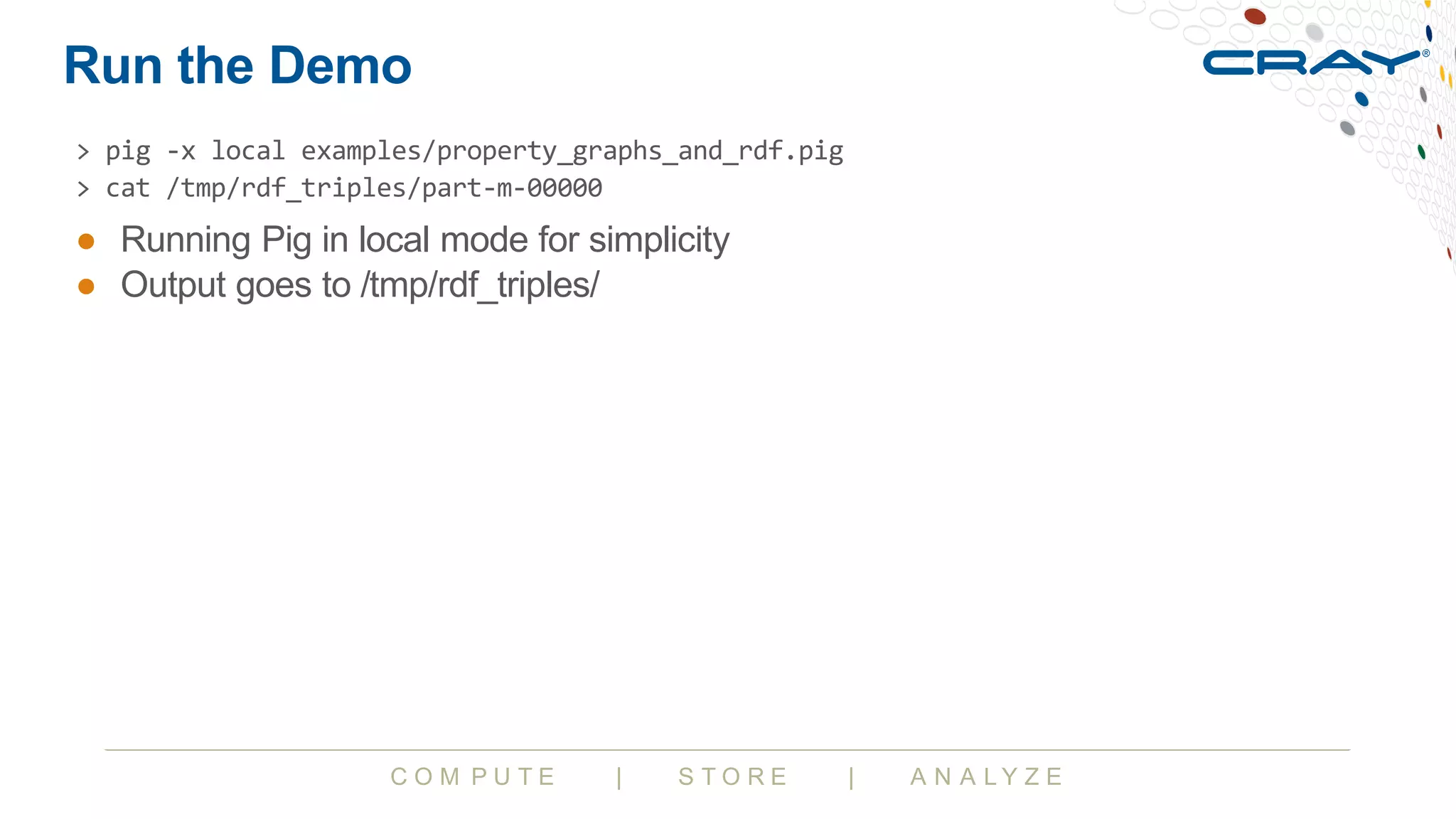

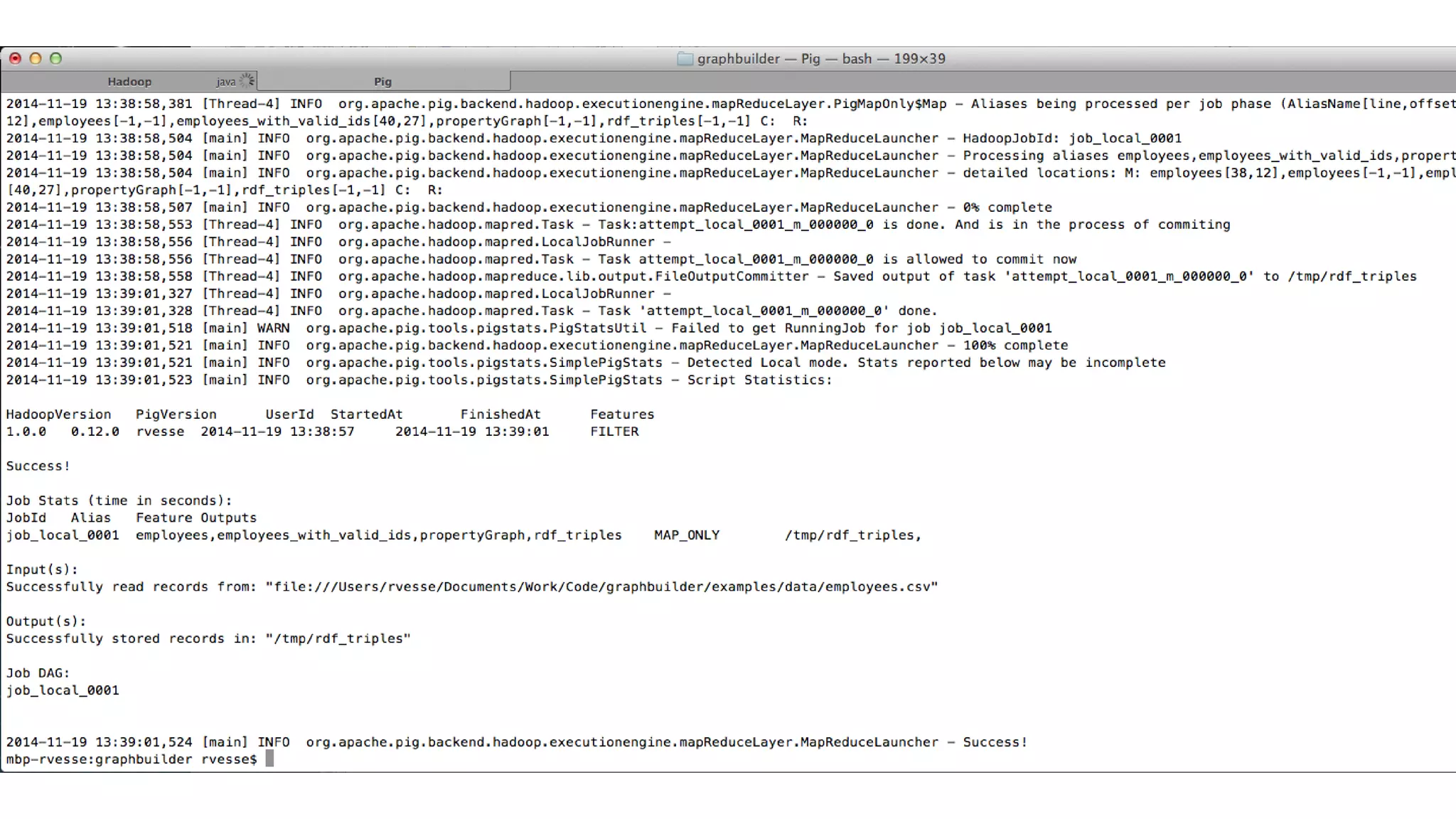

Intel Graph Builder - Pig Script Example

https://github.com/Cray/graphbuilder/blob/2.0.alpha/examples/property_graphs_and_rdf_example.pig

-- Rest of script omitted for brevity

-- Declare our mappings

propertyGraphWithMappings = FOREACH propertyGraph GENERATE (*,

[ 'idBase' # 'http://example.org/instances/',

'base' # 'http://example.org/ontology/',

'namespaces' # [ 'foaf' # 'http://xmlns.com/foaf/0.1/' ],

'propertyMap' # [ 'type' # 'a',

'name' # 'foaf:name',

'age' # 'foaf:age' ],

'uriProperties' # ( 'type' ),

'idProperty' # 'id' ]);

-- Convert to NTriples

rdf_triples = FOREACH propertyGraphWithMappings GENERATE FLATTEN(RDF(*));

-- Write out NTriples

STORE rdf_triples INTO '/tmp/rdf_triples' USING PigStorage();](https://image.slidesharecdn.com/apachejenaelephasandfriends-150216092412-conversion-gate02/75/Apache-Jena-Elephas-and-Friends-22-2048.jpg)