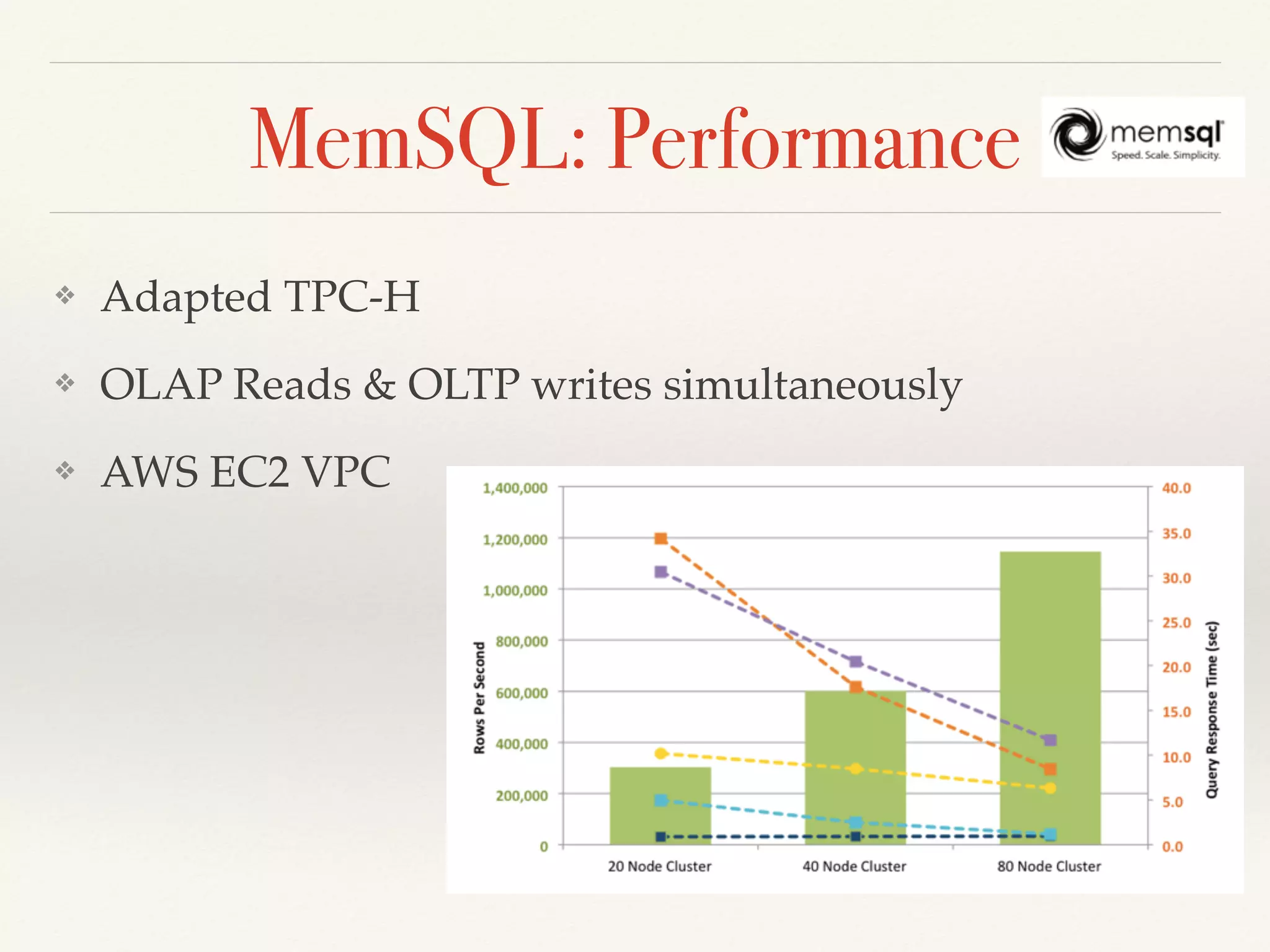

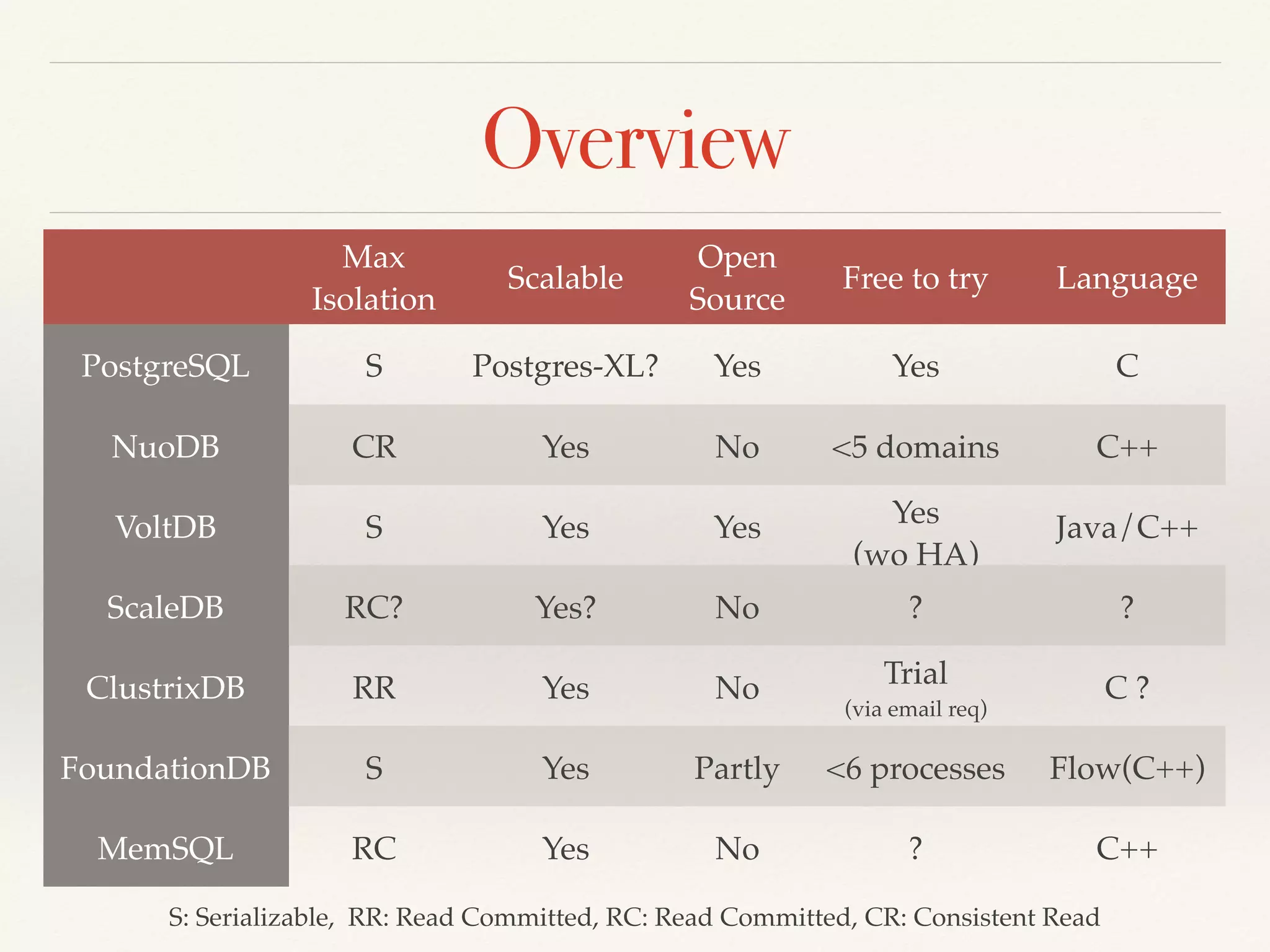

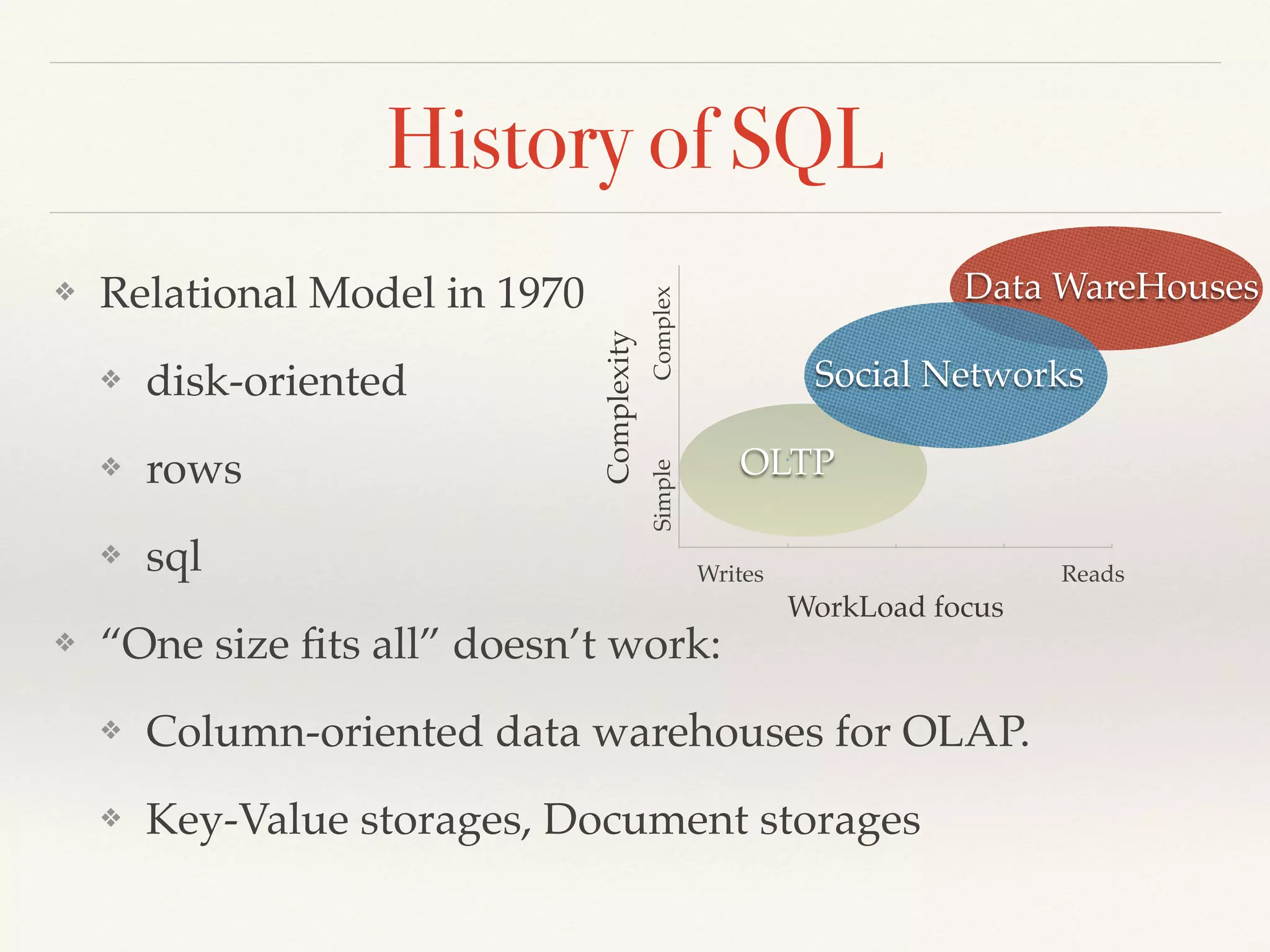

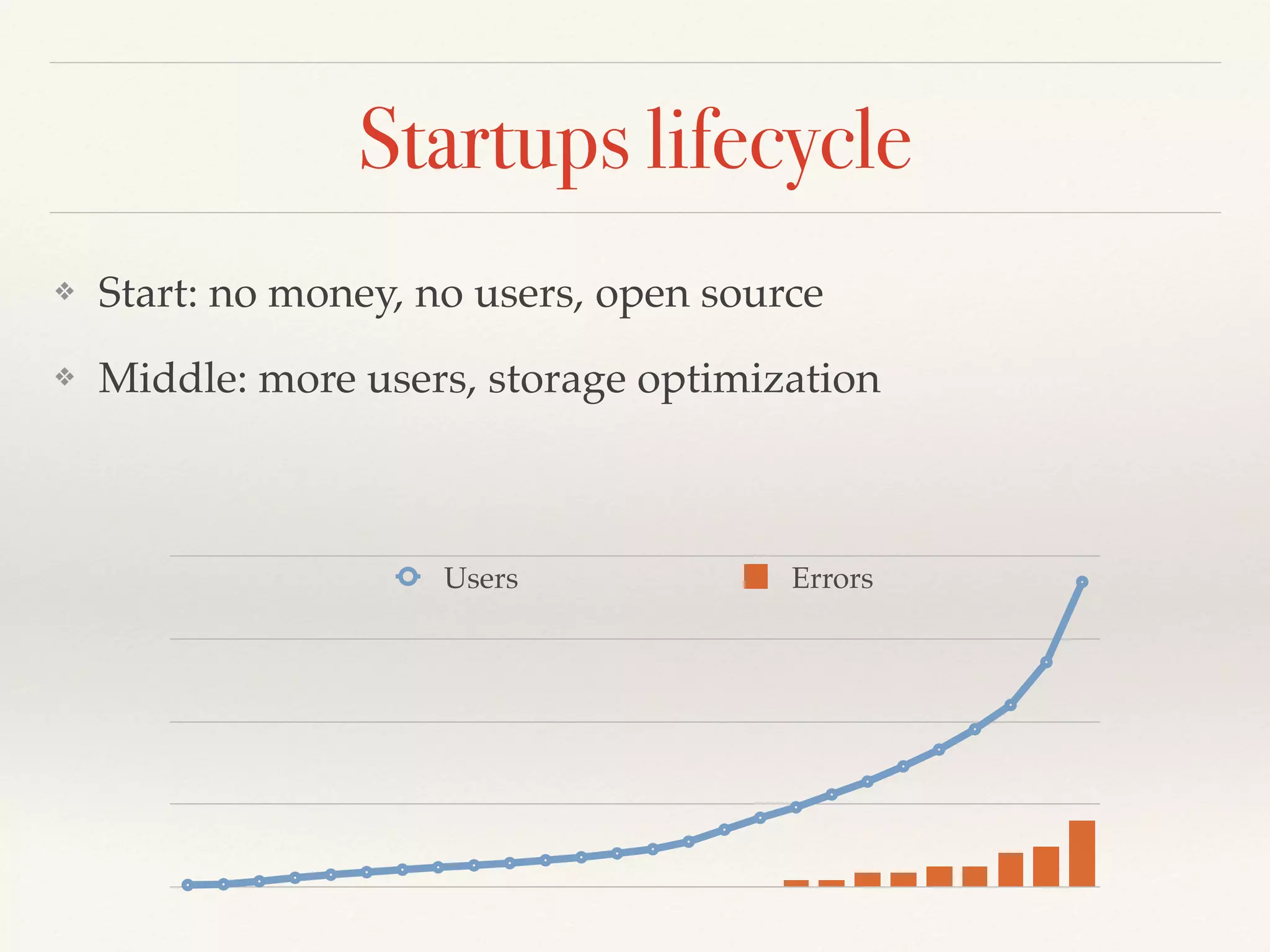

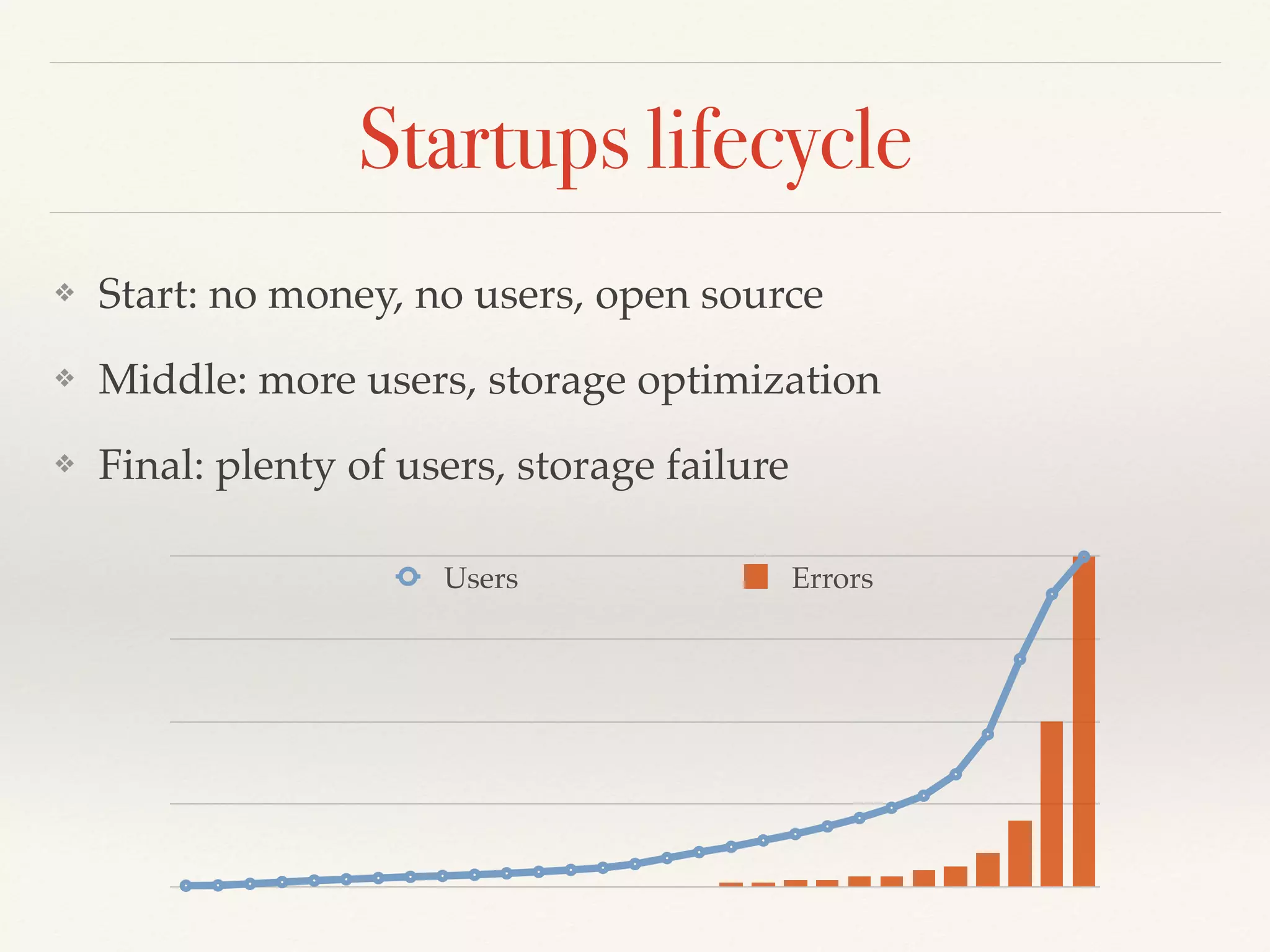

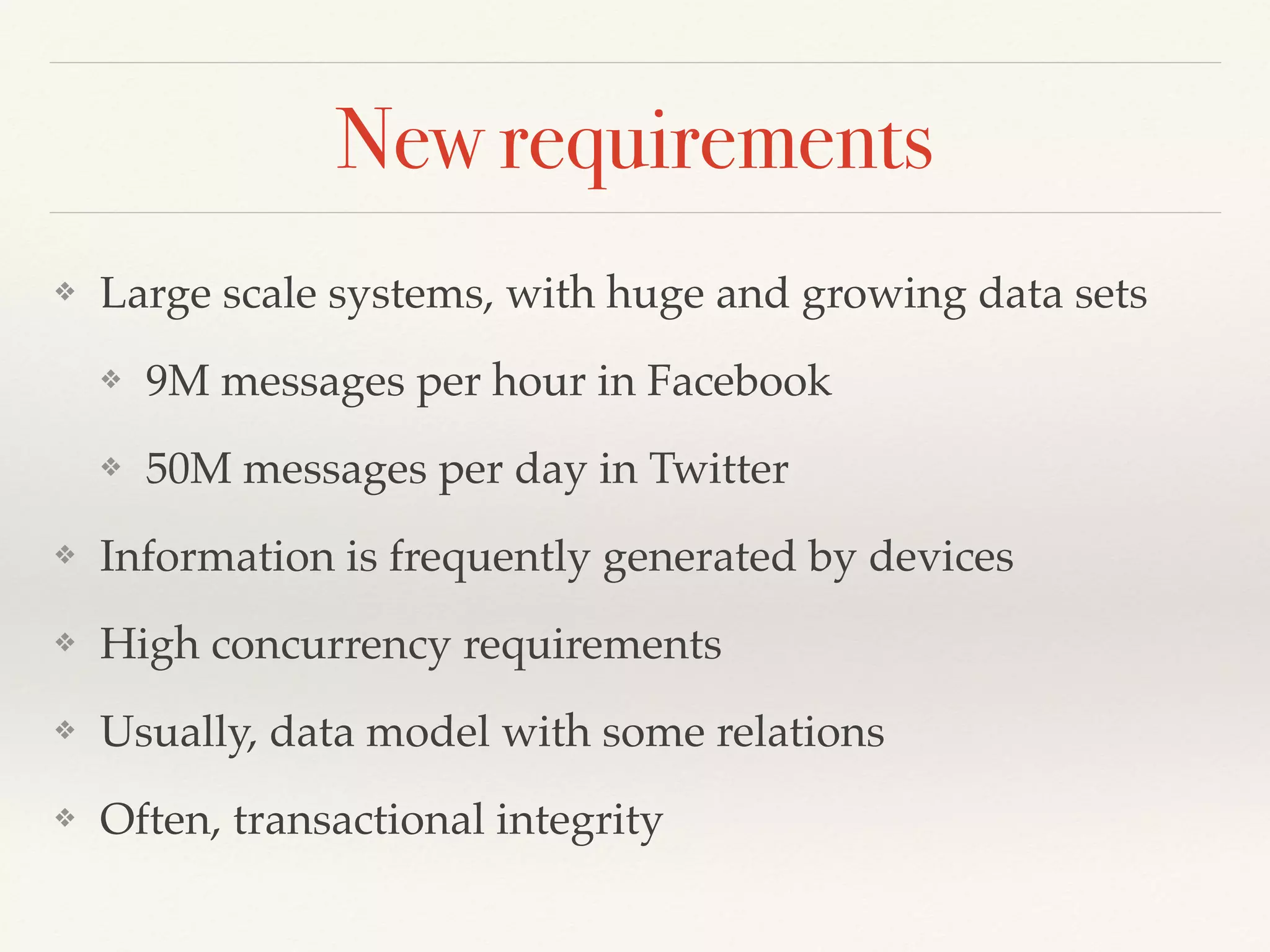

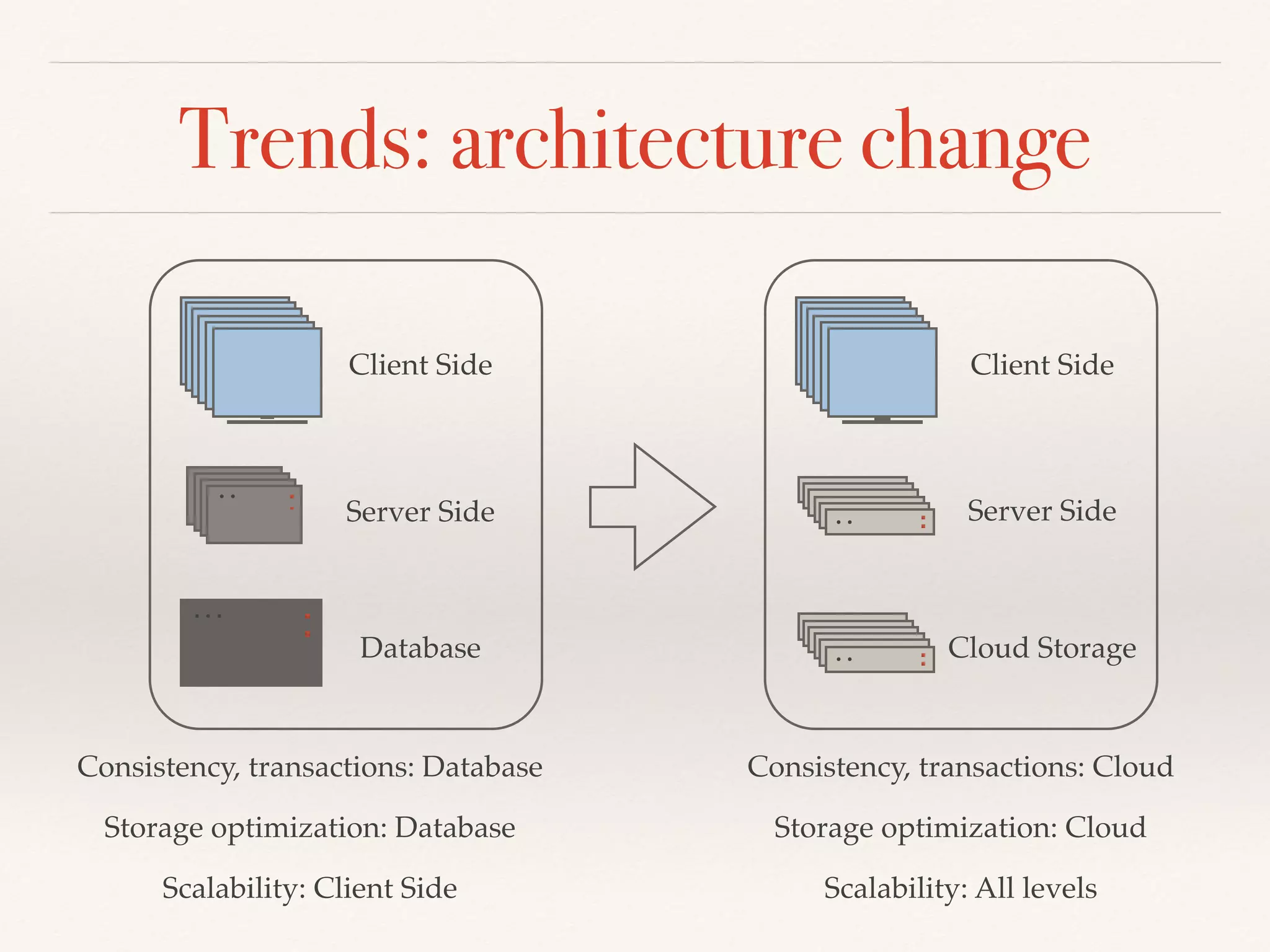

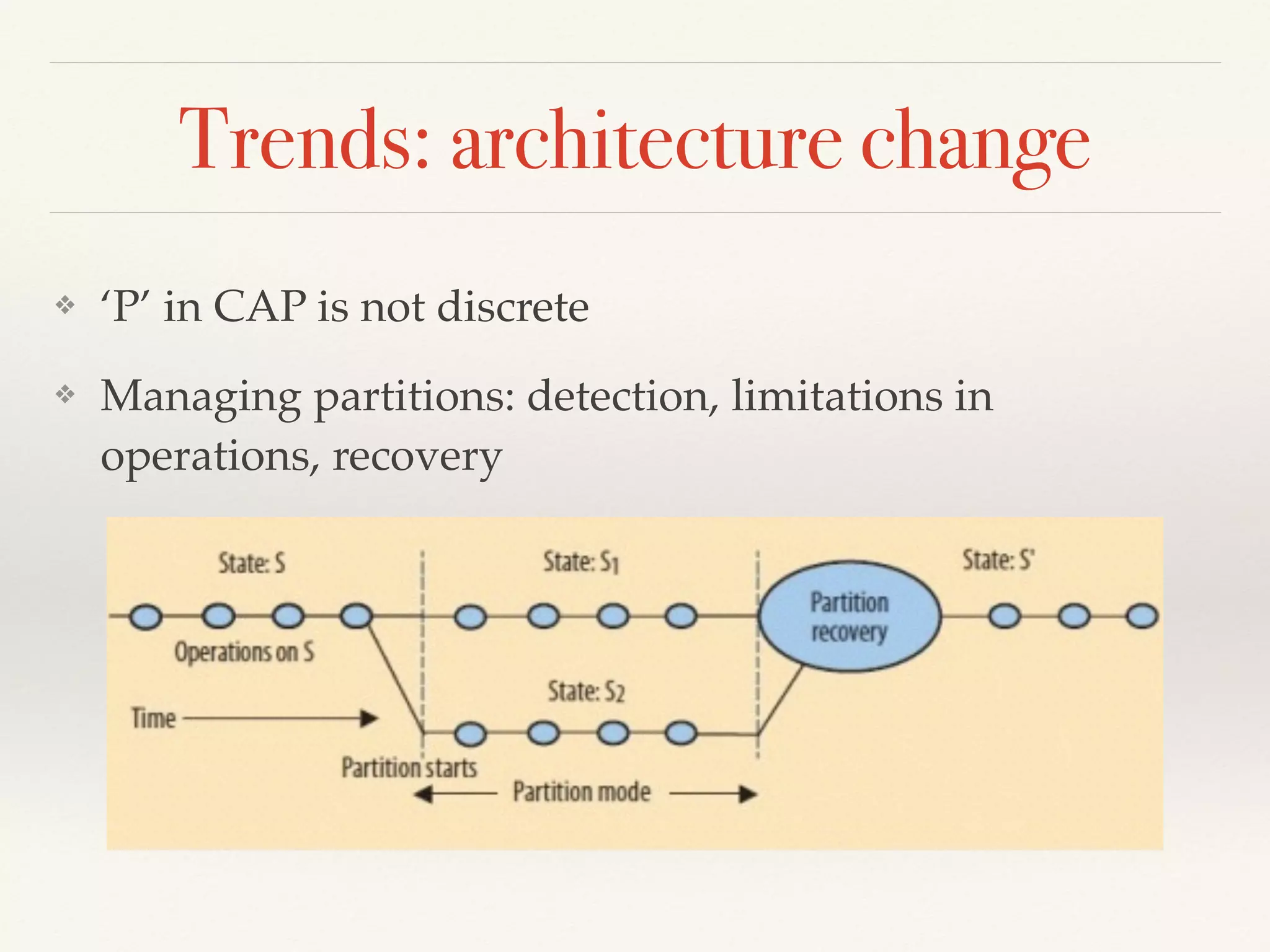

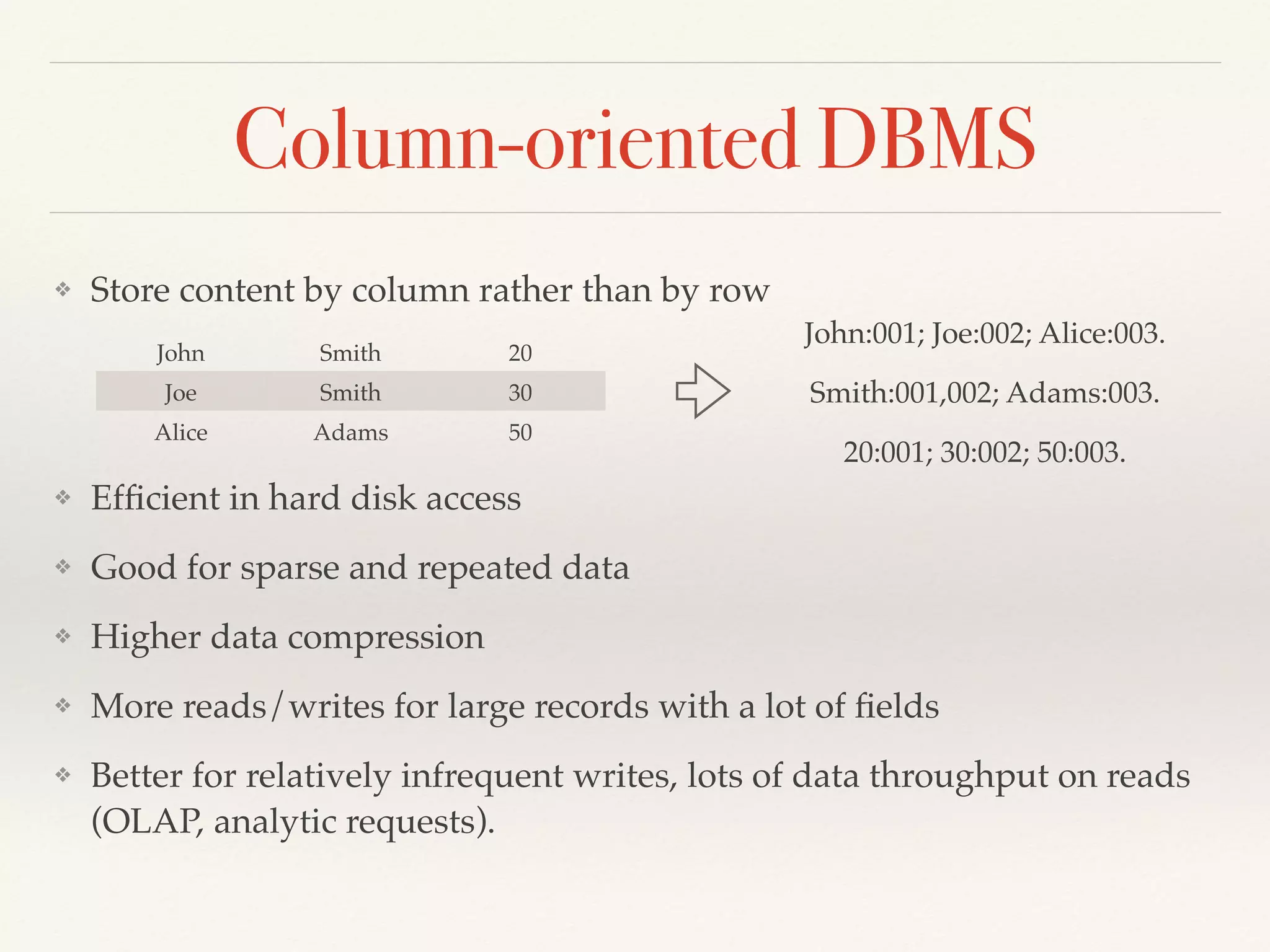

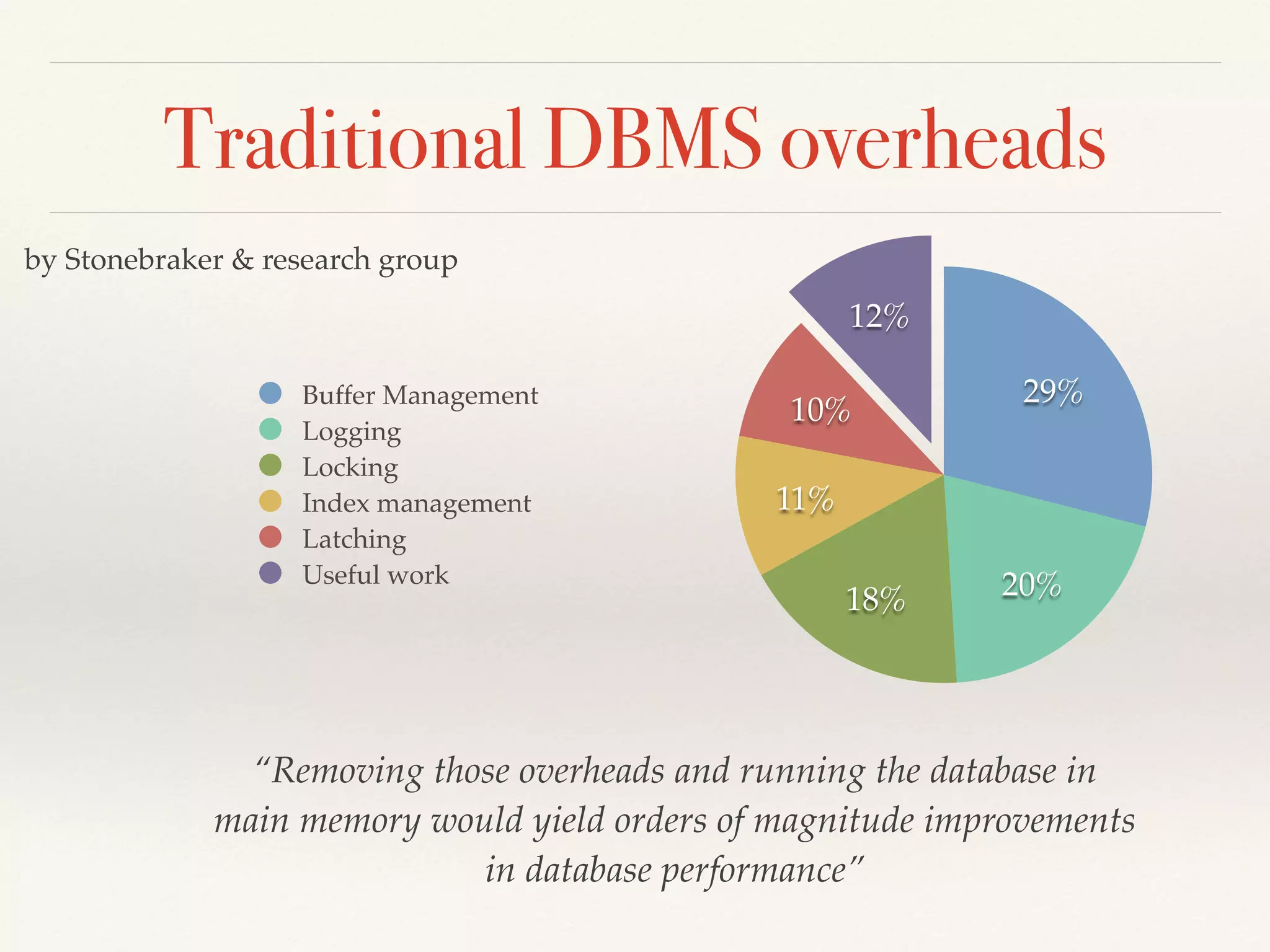

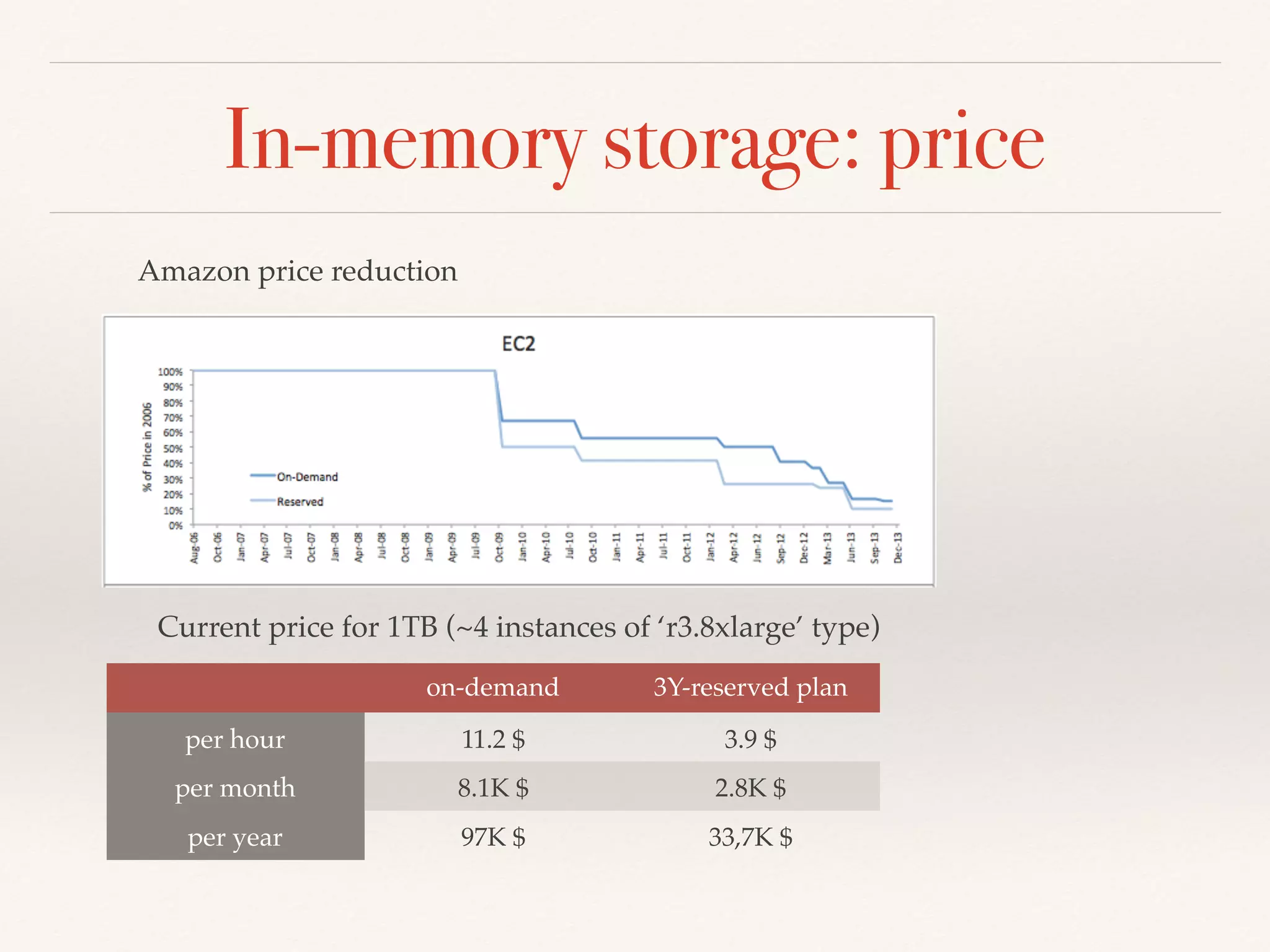

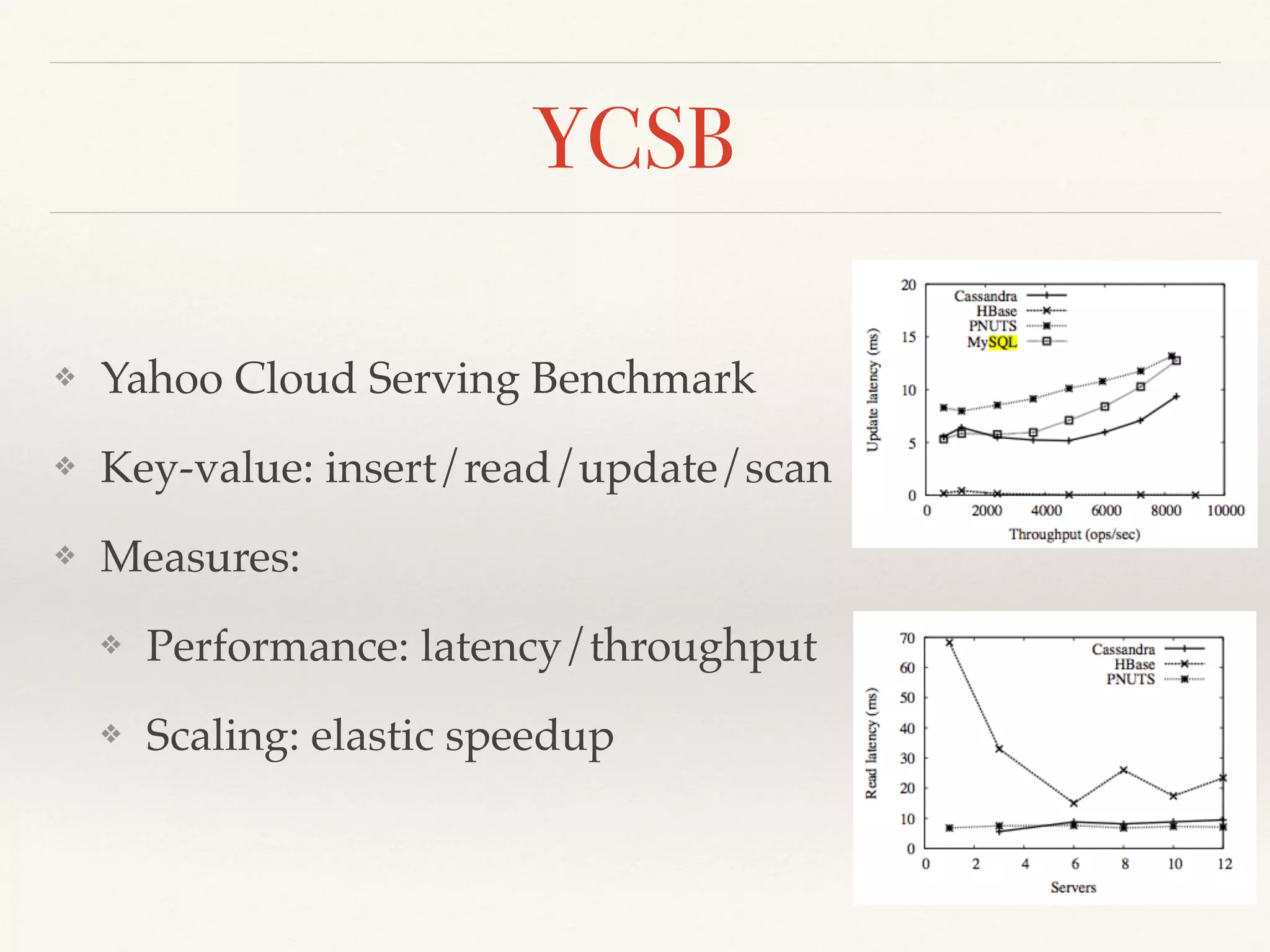

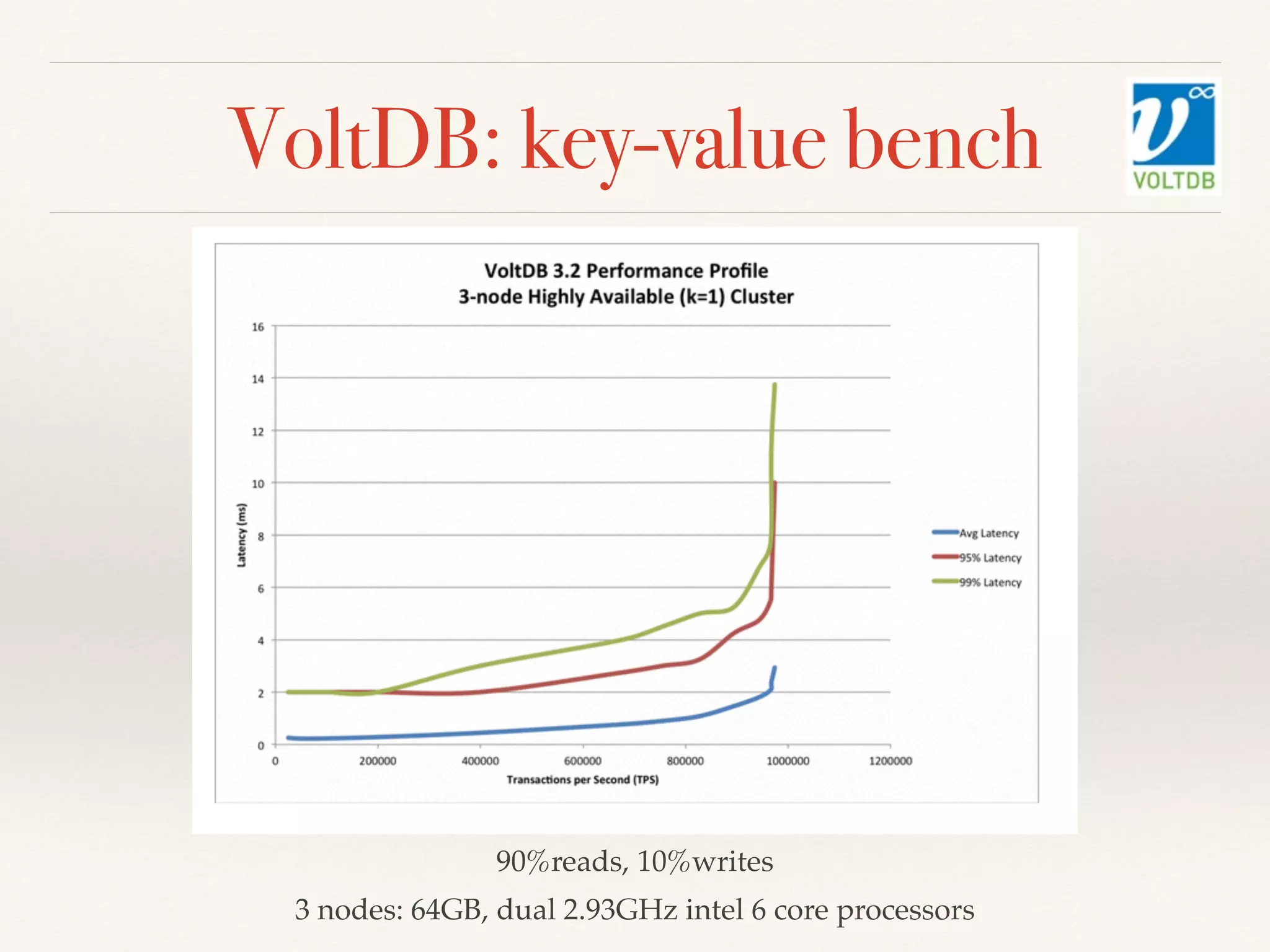

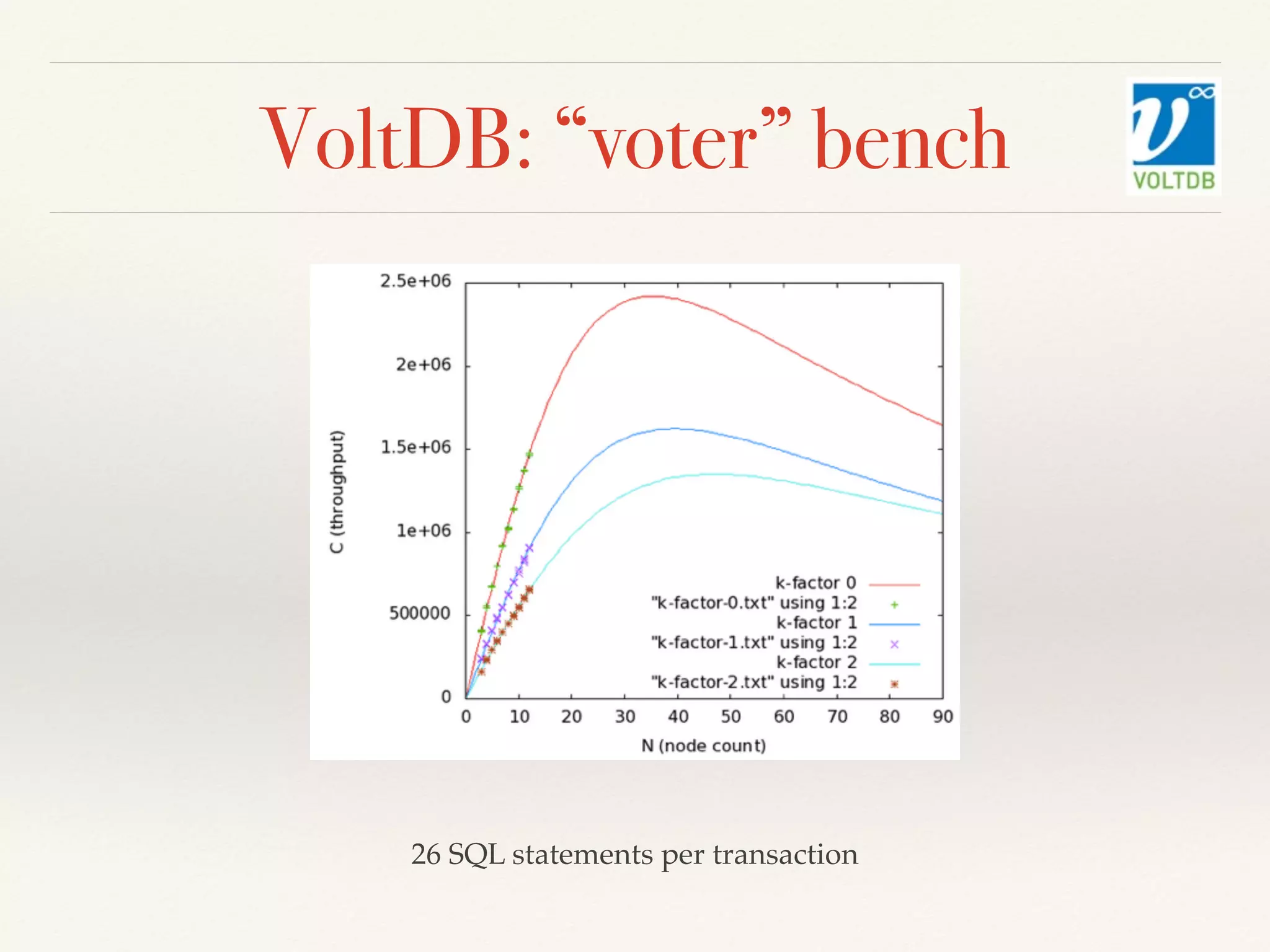

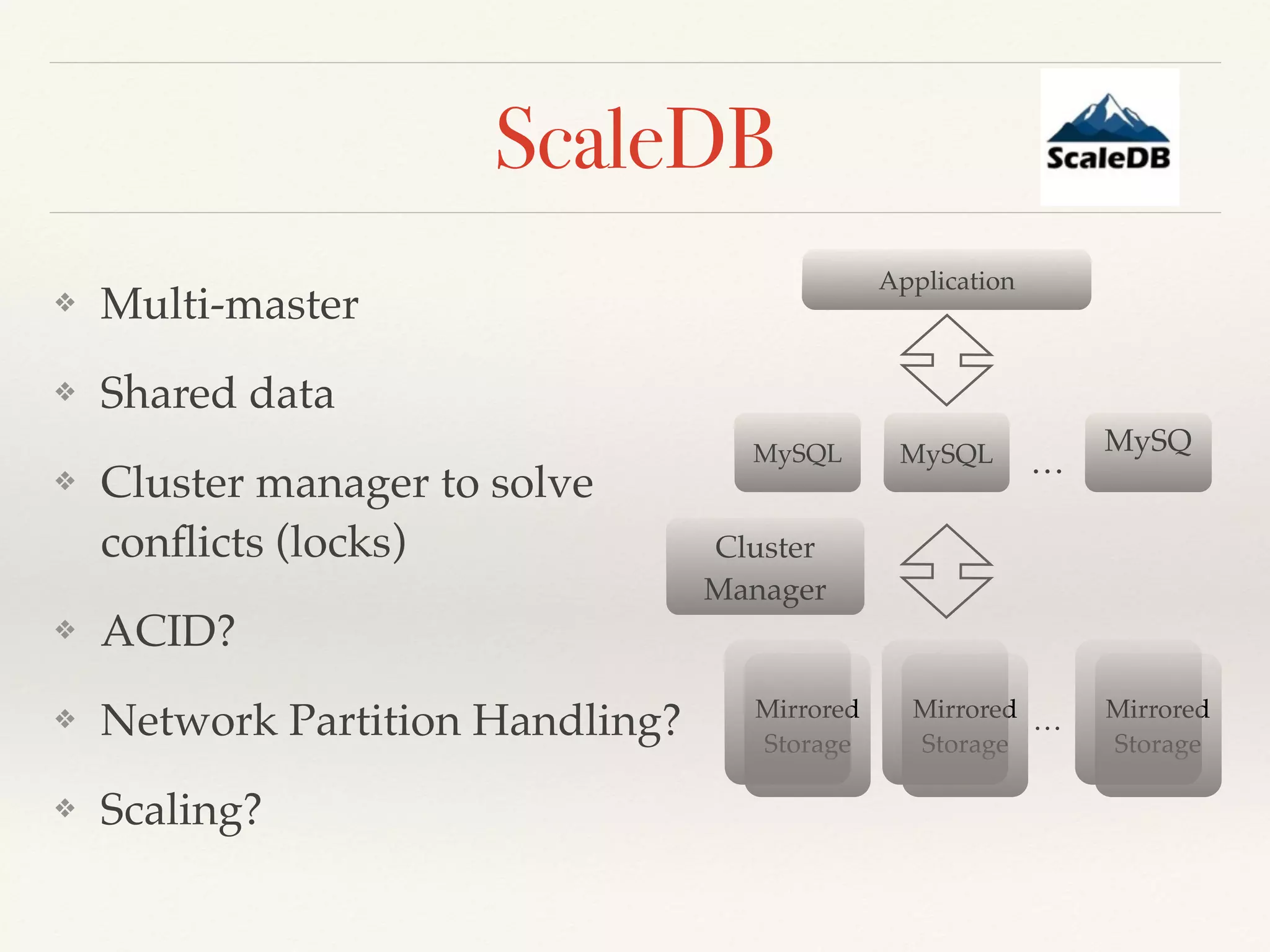

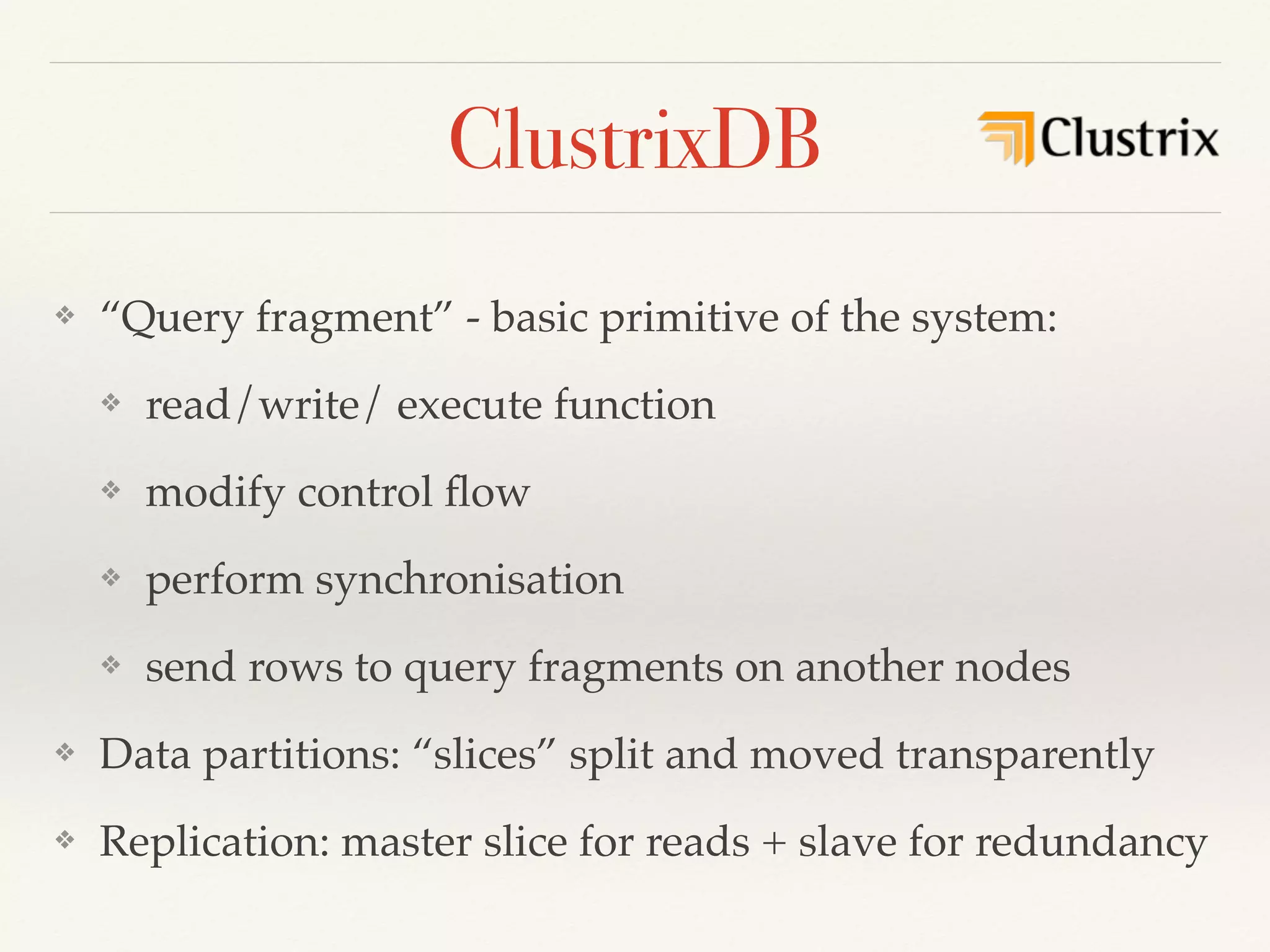

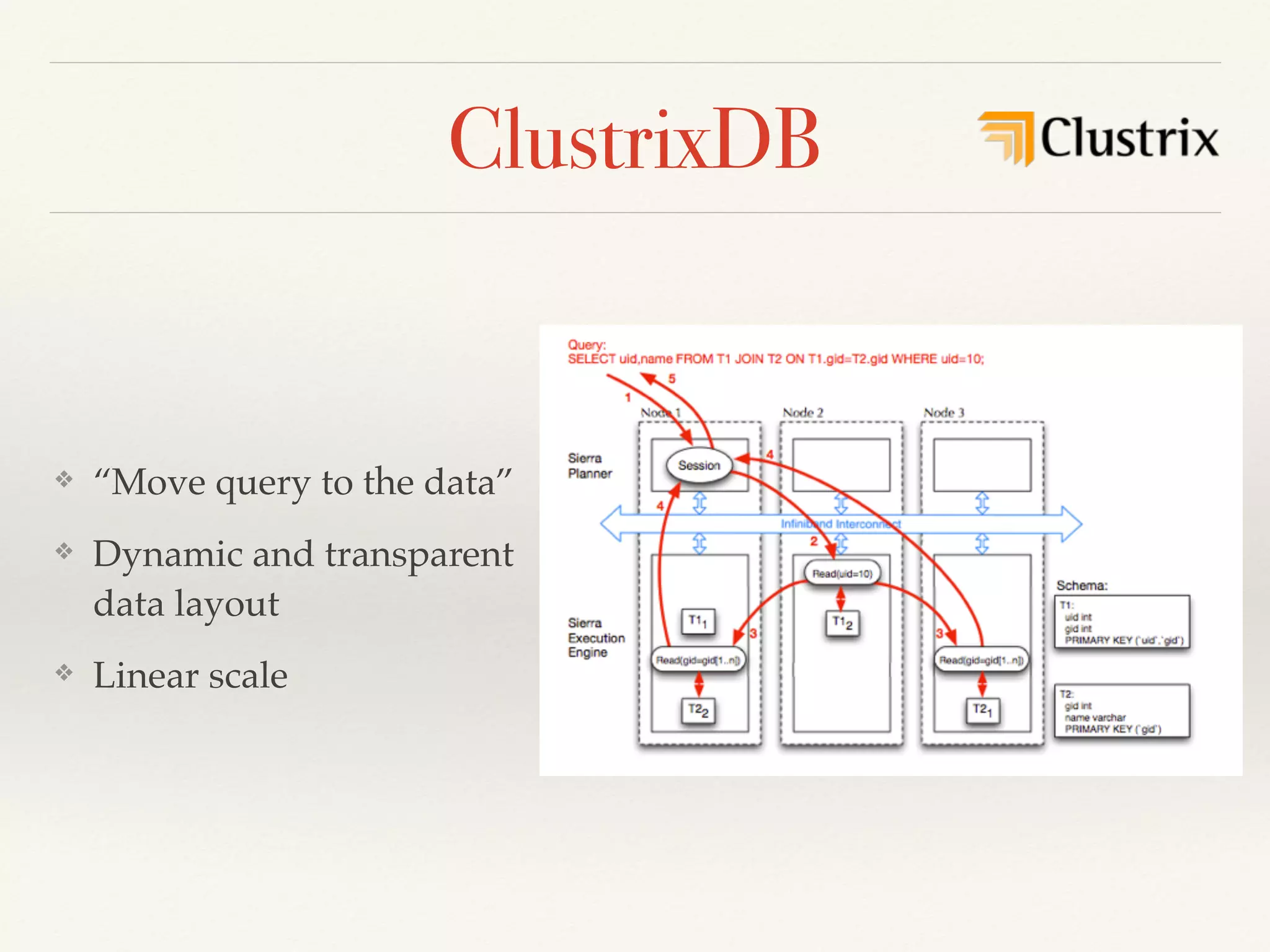

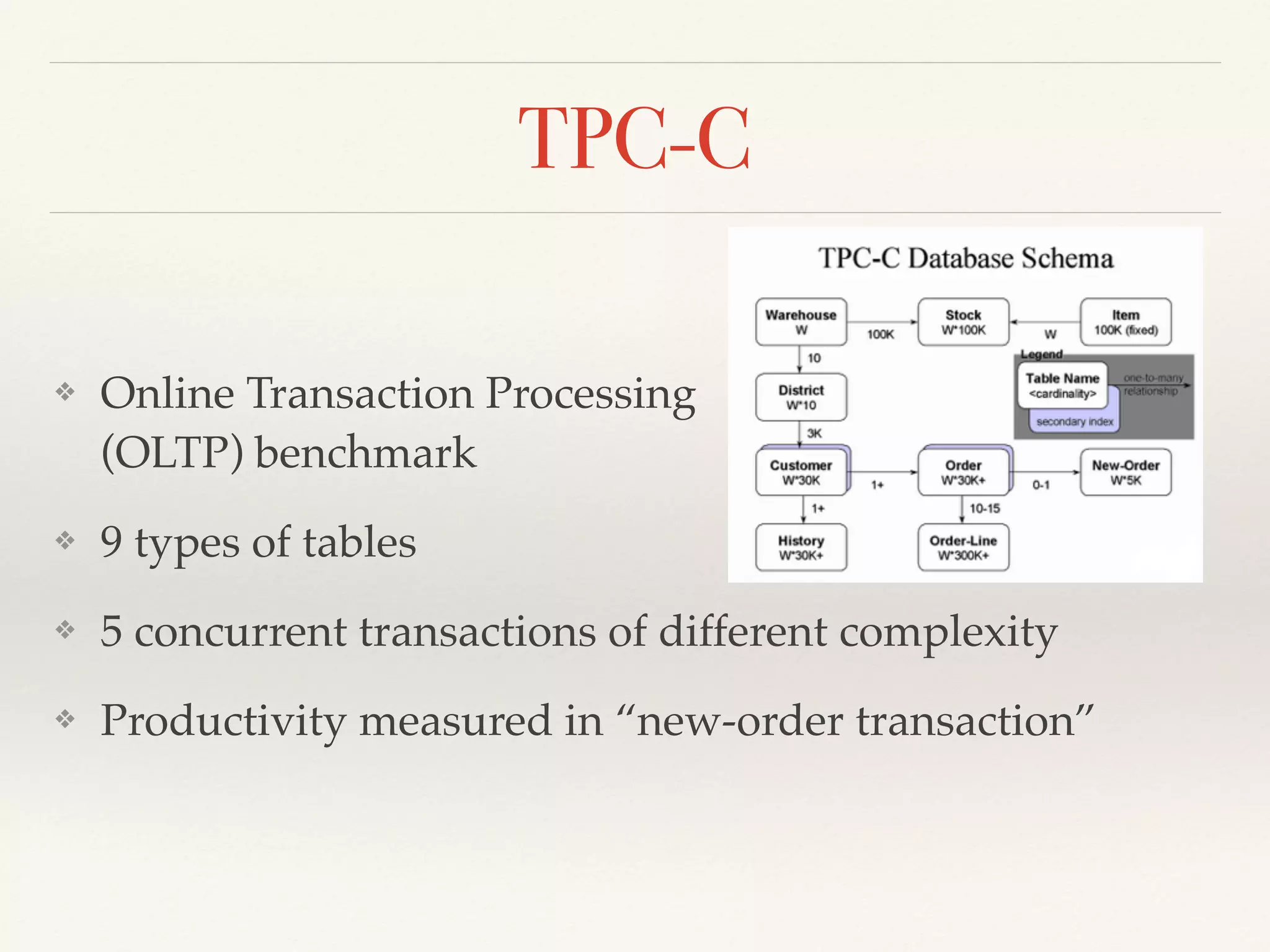

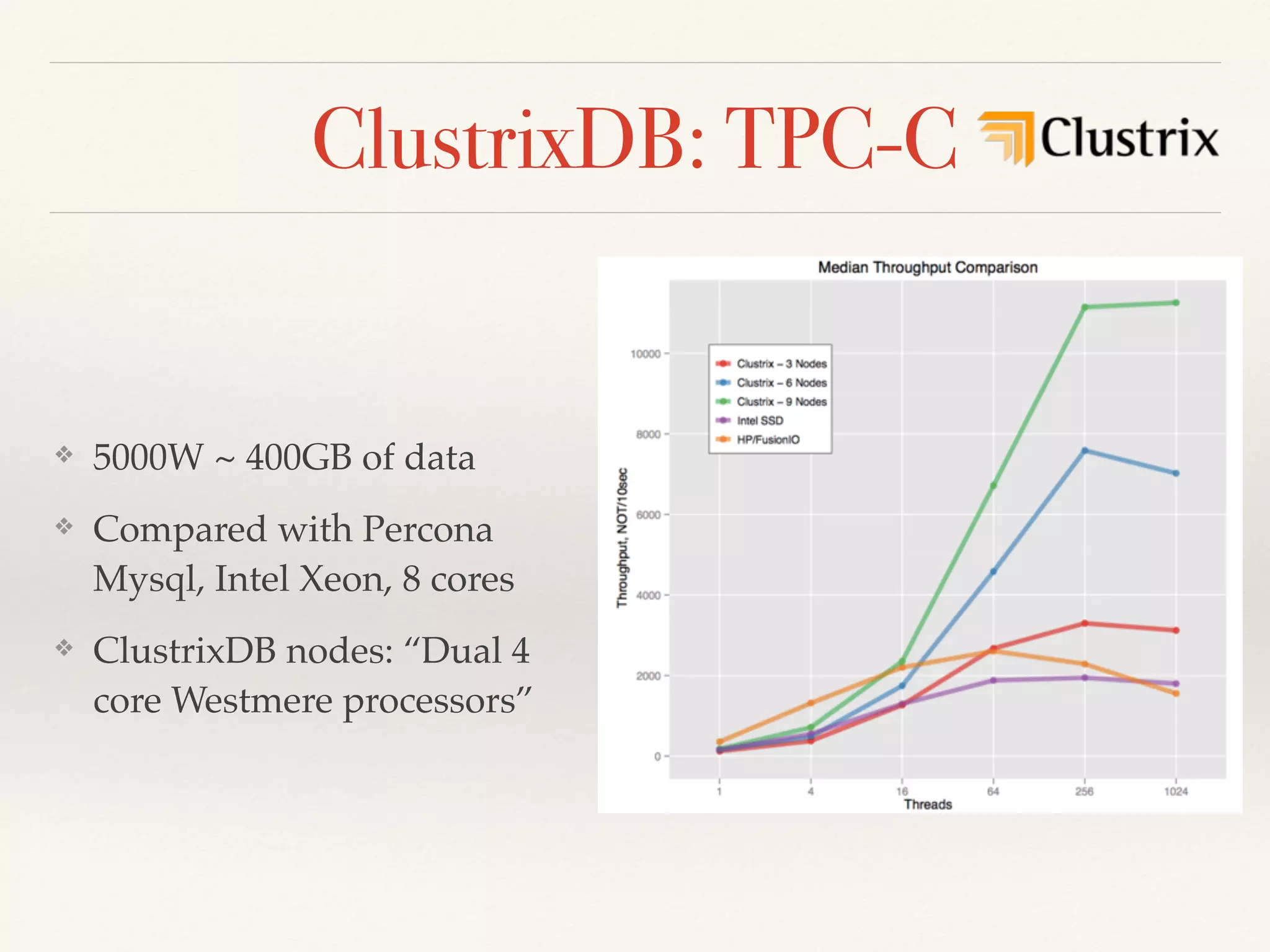

This document gives an overview of NewSQL databases: how they evolved from traditional SQL and NoSQL systems, and how they combine scalability with support for ACID transactions. It surveys several NewSQL implementations and their distinguishing features, and highlights trends in database architecture, performance, and storage optimization. It also covers the challenges of cross-shard transactions and presents benchmarks for performance evaluation.

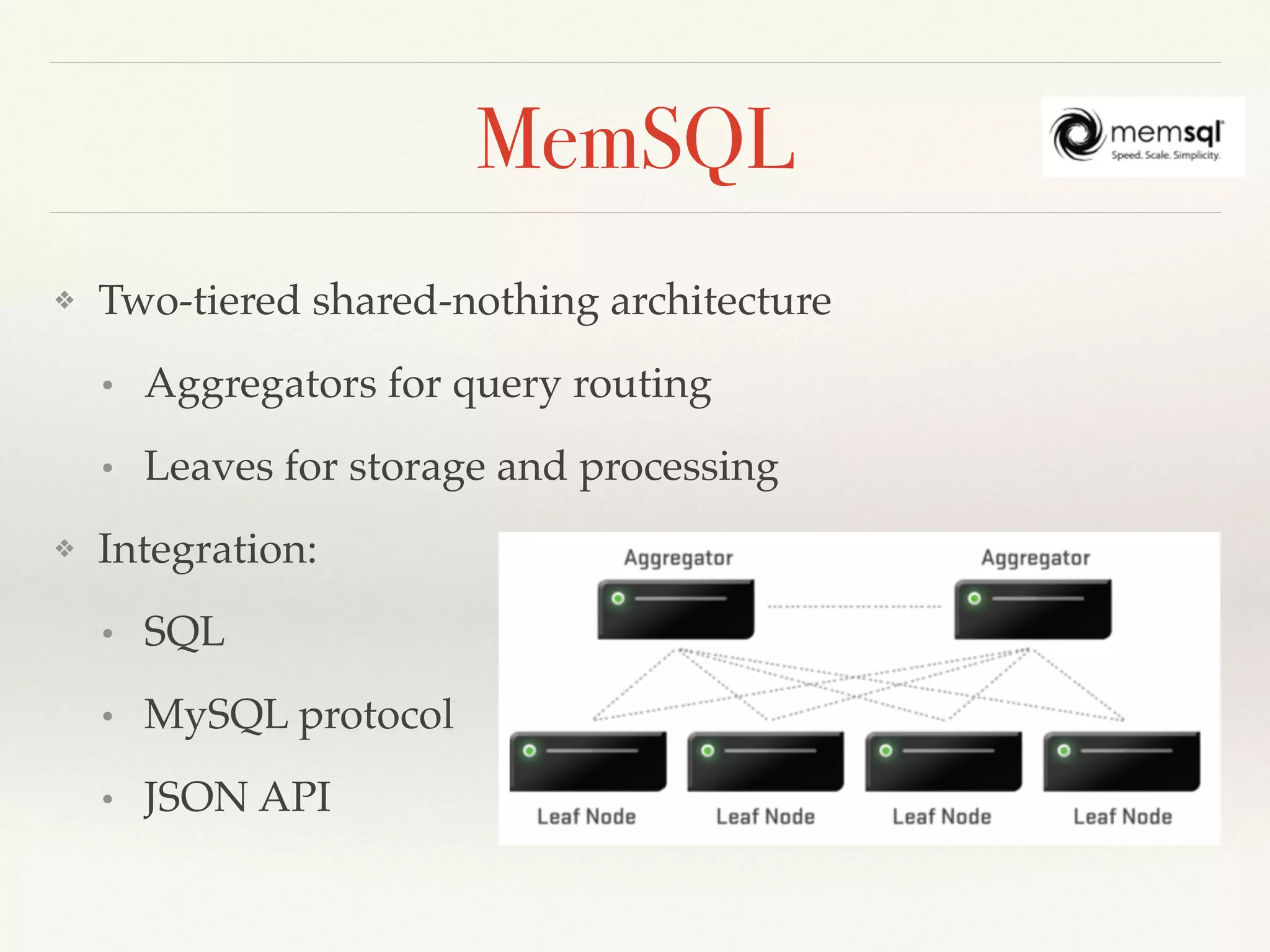

![MemSQL](https://image.slidesharecdn.com/newsql-2015-150213024325-conversion-gate01/75/NewSQL-overview-Feb-2015-44-2048.jpg)

MemSQL

- In-memory storage for OLTP
- Column-oriented storage for OLAP
- Compiled query execution plans (with a plan cache)
- Local ACID transactions (no global transactions in the distributed setting)
- Lock-free, MVCC
- Fault tolerance, automatic replication, redundancy (= 2 by default)
- [Almost] no penalty for replica creation
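
The "Lock-free, MVCC" item can be illustrated with a small sketch of multi-version concurrency control: writers append new versions instead of overwriting, and each reader sees only versions committed at or before its snapshot timestamp. This is a toy, single-threaded model and not MemSQL's implementation; the `MVCCStore` class and its method names are hypothetical, and it shows only the versioning idea, not the lock-free data structures.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class MVCCStore:
    """Toy multi-version store (hypothetical): every write appends a version."""
    _versions: Dict[str, List[Tuple[int, Any]]] = field(default_factory=dict)
    _clock: int = 0  # timestamp of the most recent commit

    def write(self, key: str, value: Any) -> int:
        """Commit a new version of `key` and return its commit timestamp."""
        self._clock += 1
        self._versions.setdefault(key, []).append((self._clock, value))
        return self._clock

    def snapshot(self) -> int:
        """Return the timestamp a reader uses for all of its reads."""
        return self._clock

    def read(self, key: str, snapshot_ts: int) -> Optional[Any]:
        """Return the newest version committed at or before `snapshot_ts`."""
        for commit_ts, value in reversed(self._versions.get(key, [])):
            if commit_ts <= snapshot_ts:
                return value
        return None  # key did not exist at that snapshot


store = MVCCStore()
store.write("balance", 100)
reader_ts = store.snapshot()   # a reader takes its snapshot here
store.write("balance", 80)     # a later write does not disturb that reader
old_view = store.read("balance", reader_ts)
new_view = store.read("balance", store.snapshot())
```

Because old versions are kept, the reader holding `reader_ts` never has to block the writer or wait for it. A real engine would also need garbage collection of obsolete versions and concurrent-safe structures, which this sketch leaves out.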