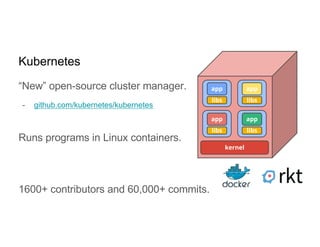

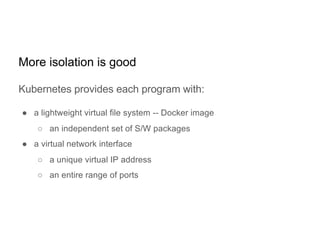

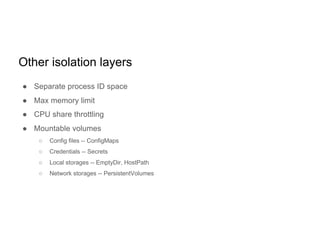

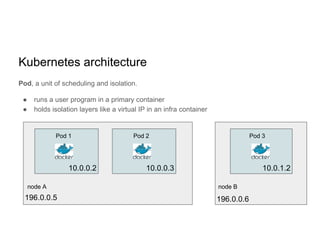

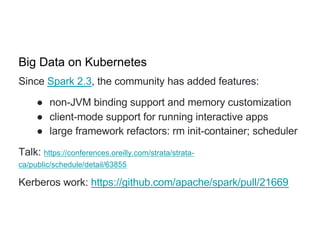

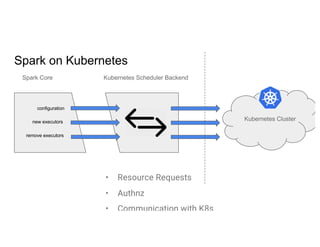

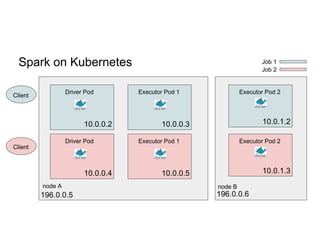

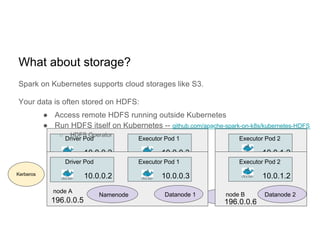

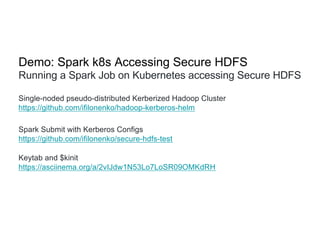

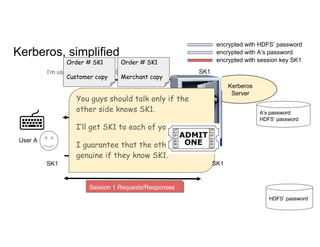

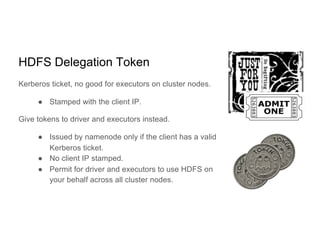

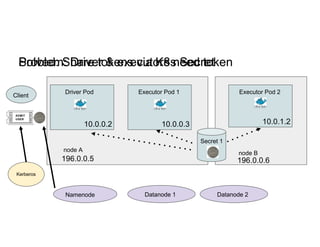

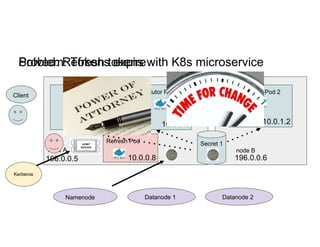

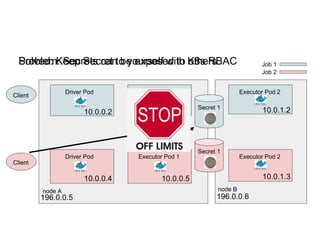

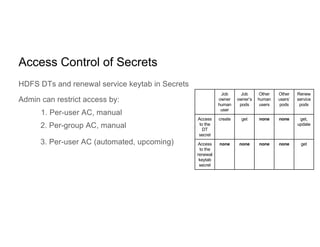

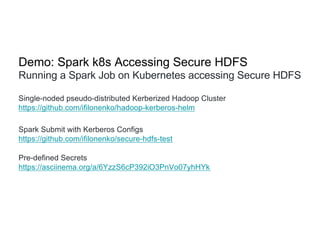

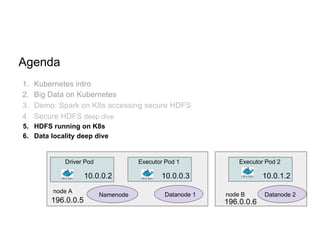

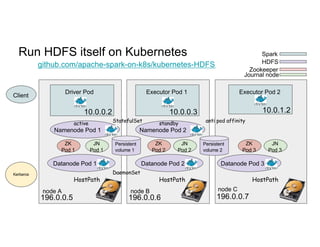

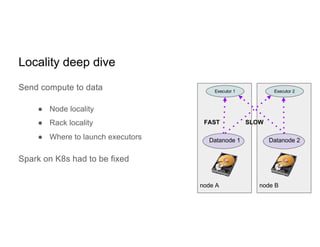

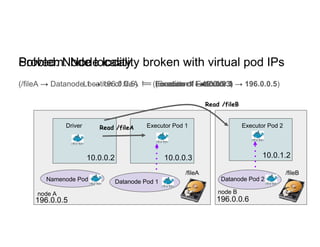

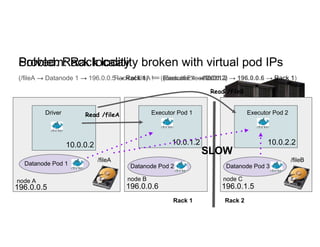

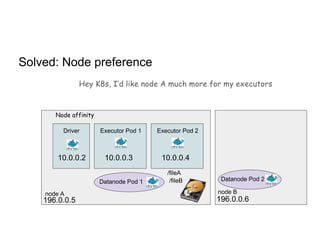

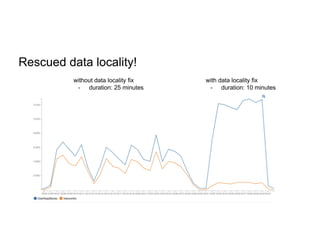

This document covers running Apache Spark jobs on Kubernetes that access data in secure HDFS clusters. It opens with an introduction to Kubernetes and to running big data workloads on it, then demonstrates a Spark job on Kubernetes accessing a Kerberized HDFS cluster. It goes on to detail how HDFS access is secured and how HDFS itself can run on Kubernetes. Finally, it explains how data locality was originally broken when running Spark on Kubernetes and how it was fixed to improve performance.