From ML Basics to ResNet

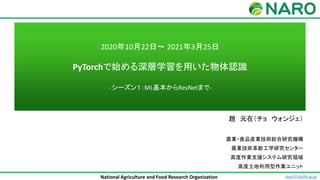

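- 1. National Agriculture and Food Research Organization. October 22, 2020 to March 25, 2021. Object Recognition Using Deep Learning, Getting Started with PyTorch. Season 1: From ML Basics to ResNet. Cho Wonjae (趙 元在), 農業・食品産業技術総合研究機構 (National Agriculture and Food Research Organization), 農業技術革新工学研究センター, 高度作業支援システム研究領域, 高度土地利用型作業ユニット. chou555@affrc.go.jp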

- 2. Goals
  - Basic understanding of machine learning algorithms
    - Linear regression, logistic regression (classification)
    - Neural networks, convolutional neural networks (CNN)
  - Basic understanding of CNN architectures for object detection
    - AlexNet, VGG, ResNet, GoogLeNet / InceptionNet, R-CNN, YOLO, SSD, …
  - Solve your own problems using machine learning tools
    - PyTorch and Python (ver. 3.x)

- 3. Course structure
  - About 30 min per lecture (theory: 15 min, practice: 15 min)
  - Programming tutorial using PyTorch

- 4. Schedule
  - Machine learning basic concepts and Python (ver. 3.x) practice
  - Linear regression
  - Logistic regression (classification)
  - Multivariable (vector) linear / logistic regression
  - Neural networks and CNN
  - Deep learning for object detection
    - AlexNet, VGG, ResNet, GoogLeNet / InceptionNet, R-CNN, YOLO, SSD
    - SOTA (state of the art) review: EfficientDet, YOLO-v4, etc. Paper list from 2014 to 2019: https://github.com/hoya012/deep_learning_object_detection

- 5. Acknowledgement
  - Deep learning zero to all (Korean / English): https://hunkim.github.io/ml/
  - Dive into Deep Learning (English): https://d2l.ai/index.html
  - CS231n: Convolutional Neural Networks for Visual Recognition (English): http://cs231n.stanford.edu/, https://cs231n.github.io/, http://cs231n.stanford.edu/slides/
  - Andrew Ng’s Machine Learning Class (English): https://www.coursera.org/learn/machine-learning, http://holehouse.org/mlclass/
  - PyTorch Tutorials
    - https://pytorch.org/tutorials/ (English)
    - https://github.com/omerbsezer/Fast-Pytorch (English)
    - https://github.com/GunhoChoi/PyTorch-FastCampus (Korean / English)
    - https://wikidocs.net/book/2788 (Korean)

- 6. Machine Learning Basics: Concepts
  - What is ML?
  - What is learning? (supervised, unsupervised, reinforcement learning)
  - What is regression?
  - What is classification?

- 7. Machine Learning Basics: What is ML?
  - Limitations of explicit programming
    - Spam filter: many rules
    - Automatic driving: too many rules
  - Machine learning: “Field of study that gives computers the ability to learn without being explicitly programmed” (Arthur Samuel, 1959)
  - References: https://en.wikipedia.org/wiki/Arthur_Samuel, https://www.logianalytics.com/predictive-analytics/machine-learning-vs-traditional-programming/

- 8. National Agriculture and Food Research Organization Machine Learning Basics: What is learning? https://wendys.tistory.com/169 chou555@affrc.go.jp

- 9. Machine Learning Basics: What is learning?
  - Supervised learning: the most common problem type in ML, e.g. disease and insect pest detection, crop detection.
  - References: https://bigdata-madesimple.com/machine-learning-explained-understanding-supervised-unsupervised-and-reinforcement-learning/, https://blogs.nvidia.com/blog/2018/08/02/supervised-unsupervised-learning/

- 10. Machine Learning Basics: Types of supervised learning (http://sqlmvp.kr/221844842758, https://youtu.be/qPMeuL2LIqY)
  - Regression: predicting the final exam score (0 to 100) based on time spent
  - Binary classification: pass / non-pass based on time spent
  - Multi-class classification: letter grade (A, B, C, D or F) based on time spent

- 11. Python Tutorial with Google Colab (1/3) (https://colab.research.google.com/github/cs231n/cs231n.github.io/blob/master/python-colab.ipynb)
  1. Go to drive.google.com
  2. Log into your Google account with username and password
  3. Click the "Get add-ons" icon

- 12. Python Tutorial with Google Colab (2/3) (https://colab.research.google.com/github/cs231n/cs231n.github.io/blob/master/python-colab.ipynb)
  4. Search for “Colaboratory”
  5. Click the icon
  6. Click Install
  7. Click “Continue”
  8. Click your account ID

- 13. Python Tutorial with Google Colab (3/3) (https://colab.research.google.com/github/cs231n/cs231n.github.io/blob/master/python-colab.ipynb)
  9. Done
  10. Click More → Google Colaboratory
  11. Test by printing "Hello World"

- 14. Linear Regression: Basic concepts. Predicting an exam score using regression (https://wikidocs.net/53560). Our goal: predict Points from Hours using regression.

  | Hours (x) | Points (y) | Dataset |
  |---|---|---|
  | 1 | 2 | Training |
  | 2 | 4 | Training |
  | 3 | 6 | Training |
  | 4 | ? | Test |

  $x_{train} = (1, 2, 3)^T$, $y_{train} = (2, 4, 6)^T$, written in PyTorch as `x_train = torch.FloatTensor([[1], [2], [3]])` and `y_train = torch.FloatTensor([[2], [4], [6]])`.

- 15. Linear Regression: Hypothesis. $H(x) = Wx + b$. Which hypothesis is better? [Figure: candidate lines plotted against the training data $x_{train} = (1, 2, 3)^T$, $y_{train} = (2, 4, 6)^T$]

- 16. Linear Regression: Hypothesis. $H(x) = Wx + b$. Which hypothesis is better? [Figure: further candidate lines plotted against the same training data]

- 17. Linear Regression: Cost Function. How well does the line $H(x) = Wx + b$ fit our training data? For the three training points:

  $$\frac{\left(H(x^{(1)}) - y^{(1)}\right)^2 + \left(H(x^{(2)}) - y^{(2)}\right)^2 + \left(H(x^{(3)}) - y^{(3)}\right)^2}{3}$$

  In general, for $m$ training examples:

  $$\mathrm{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\left(H(x^{(i)}) - y^{(i)}\right)^2$$

  Goal: minimize $\mathrm{cost}(W, b)$ with respect to $W$ and $b$. The terms cost function, loss function, error function and objective function are used interchangeably here.
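
As a small illustration of the cost function above, the following sketch evaluates the mean squared error for a few candidate slopes on the slide's dataset. The helper names `hypothesis` and `mse_cost` are made up for this example, and `b` is fixed at 0 purely to keep the comparison one-dimensional.

```python
# Training data from the slide: hours studied (x) and points scored (y).
x_train = [1.0, 2.0, 3.0]
y_train = [2.0, 4.0, 6.0]


def hypothesis(x, W, b):
    """Linear hypothesis H(x) = W*x + b."""
    return W * x + b


def mse_cost(W, b, xs, ys):
    """Mean squared error between H(x) and y over the dataset."""
    m = len(xs)
    return sum((hypothesis(x, W, b) - y) ** 2 for x, y in zip(xs, ys)) / m


# Compare a few candidate slopes (illustrative values) with b fixed at 0.
costs = {W: mse_cost(W, 0.0, x_train, y_train) for W in (0.0, 1.0, 2.0, 3.0)}
for W, c in costs.items():
    print(f"W = {W:.1f}  cost = {c:.4f}")
```

The cost is smallest at `W = 2.0`, where the line passes through every training point, and grows symmetrically on either side of it.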

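- 18. Linear Regression: Optimizer, Gradient Descent. Consider $H(x) = Wx + b$ with $b = 0$. The cost is minimal where the slope of the tangent to the cost curve is 0. Gradient descent moves $W$ toward that point with the update

  $$W := W - \alpha \frac{\partial}{\partial W}\mathrm{cost}(W)$$

  where $\alpha$ is the learning rate. What is the learning rate? See https://en.wikipedia.org/wiki/Learning_rate and https://www.jeremyjordan.me/nn-learning-rate/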
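
The update rule can be sketched without any library by writing out the derivative of the mean squared error by hand. This is only an illustration: unlike the slide, it also updates `b`, and the function names are invented for the example. The learning rate 0.01 and the zero initial values are taken from the Colab practice slide.

```python
x_train = [1.0, 2.0, 3.0]
y_train = [2.0, 4.0, 6.0]


def gradients(W, b, xs, ys):
    """Analytic partial derivatives of the MSE cost with respect to W and b."""
    m = len(xs)
    dW = sum(2 * (W * x + b - y) * x for x, y in zip(xs, ys)) / m
    db = sum(2 * (W * x + b - y) for x, y in zip(xs, ys)) / m
    return dW, db


def gradient_descent(xs, ys, lr=0.01, epochs=2000):
    """Repeat W := W - lr * dcost/dW (and the same for b), starting from zero."""
    W, b = 0.0, 0.0
    history = []
    for _ in range(epochs):
        dW, db = gradients(W, b, xs, ys)
        W -= lr * dW
        b -= lr * db
        history.append((W, b))
    return W, b, history


W, b, history = gradient_descent(x_train, y_train)
print(f"after 1 step: W = {history[0][0]:.3f}, b = {history[0][1]:.3f}")
print(f"after {len(history)} steps: W = {W:.3f}, b = {b:.3f}")
```

A learning rate that is too large makes the updates overshoot the minimum, while one that is too small makes convergence slow; 0.01 happens to work well for this tiny dataset.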

- 19. Linear Regression: Practice using Colab. The code below fits $H(x) = Wx + b$ to $x_{train} = (1, 2, 3)^T$, $y_{train} = (2, 4, 6)^T$ by minimizing $\mathrm{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\left(H(x^{(i)}) - y^{(i)}\right)^2$.

  ```python
  import torch
  import torch.nn as nn
  import torch.nn.functional as F
  import torch.optim as optim

  torch.manual_seed(1)

  # Training data: hours (x) and points (y)
  x_train = torch.FloatTensor([[1], [2], [3]])
  y_train = torch.FloatTensor([[2], [4], [6]])

  # Parameters start at zero and are tracked by autograd
  W = torch.zeros(1, requires_grad=True)
  b = torch.zeros(1, requires_grad=True)

  optimizer = optim.SGD([W, b], lr=0.01)

  nb_epochs = 2000
  for epoch in range(nb_epochs + 1):
      # Hypothesis H(x) = Wx + b and mean squared error cost
      hypothesis = x_train * W + b
      cost = torch.mean((hypothesis - y_train) ** 2)

      # Reset gradients, back-propagate, update W and b
      optimizer.zero_grad()
      cost.backward()
      optimizer.step()

      if epoch % 100 == 0:
          print('Epoch {:4d}/{} W: {:.3f}, b: {:.3f} Cost: {:.6f}'.format(
              epoch, nb_epochs, W.item(), b.item(), cost.item()
          ))
  ```

  Output:

  ```
  Epoch    0/2000 W: 0.187, b: 0.080 Cost: 18.666666
  Epoch  100/2000 W: 1.746, b: 0.578 Cost: 0.048171
  Epoch  200/2000 W: 1.800, b: 0.454 Cost: 0.029767
  Epoch  300/2000 W: 1.843, b: 0.357 Cost: 0.018394
  Epoch  400/2000 W: 1.876, b: 0.281 Cost: 0.011366
  Epoch  500/2000 W: 1.903, b: 0.221 Cost: 0.007024
  Epoch  600/2000 W: 1.924, b: 0.174 Cost: 0.004340
  Epoch  700/2000 W: 1.940, b: 0.136 Cost: 0.002682
  Epoch  800/2000 W: 1.953, b: 0.107 Cost: 0.001657
  Epoch  900/2000 W: 1.963, b: 0.084 Cost: 0.001024
  Epoch 1000/2000 W: 1.971, b: 0.066 Cost: 0.000633
  Epoch 1100/2000 W: 1.977, b: 0.052 Cost: 0.000391
  Epoch 1200/2000 W: 1.982, b: 0.041 Cost: 0.000242
  Epoch 1300/2000 W: 1.986, b: 0.032 Cost: 0.000149
  Epoch 1400/2000 W: 1.989, b: 0.025 Cost: 0.000092
  Epoch 1500/2000 W: 1.991, b: 0.020 Cost: 0.000057
  Epoch 1600/2000 W: 1.993, b: 0.016 Cost: 0.000035
  Epoch 1700/2000 W: 1.995, b: 0.012 Cost: 0.000022
  Epoch 1800/2000 W: 1.996, b: 0.010 Cost: 0.000013
  Epoch 1900/2000 W: 1.997, b: 0.008 Cost: 0.000008
  Epoch 2000/2000 W: 1.997, b: 0.006 Cost: 0.000005
  ```

- 20. Recap: Linear Regression
  - Hypothesis: $H(x) = Wx + b$
  - Cost (mean squared error, MSE): $\mathrm{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\left(H(x^{(i)}) - y^{(i)}\right)^2$
  - Gradient descent: $W := W - \alpha \frac{\partial}{\partial W}\mathrm{cost}(W)$, which stops changing $W$ where the slope of the tangent is 0
  - Learning means using the dataset to find the weights that minimize the cost function.

- 21. Logistic Regression: Basic concepts. Binary classification and encoding
  - Plant disease detection: disease (1) or non-disease (0)
  - Target crop classification: target crop (1) or others (0)
  - Facebook feed: show (1) or hide (0)
  - Credit card fraudulent transaction detection: fraud (1) or legitimate (0)

- 22. Logistic Regression: Basic concepts (http://hleecaster.com/ml-logistic-regression-concept/). [Figure: linear regression vs. logistic regression for predicting pass (1) or fail (0) from study hours] The sigmoid function is also called the logistic function.

- 23. Logistic Regression: Hypothesis using the sigmoid function. The hypothesis wraps the linear regression hypothesis $Wx + b$ in a sigmoid:

  $$H(x) = \mathrm{sigmoid}(Wx + b) = \frac{1}{1 + e^{-(Wx + b)}} = \sigma(Wx + b)$$
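
The squashing behaviour of the sigmoid is easy to see numerically. The sketch below uses only the standard library; the function names and the sample inputs are chosen just for this illustration.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def logistic_hypothesis(x, W, b):
    """H(x) = sigmoid(W*x + b), the logistic regression hypothesis."""
    return sigmoid(W * x + b)


# Large negative inputs approach 0, large positive inputs approach 1.
for z in (-6.0, -2.0, 0.0, 2.0, 6.0):
    print(f"sigmoid({z:+.1f}) = {sigmoid(z):.4f}")
```

Because the output always lies between 0 and 1, it can be read as the probability of the positive class and thresholded at 0.5 for a pass/fail decision.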

- 24. Logistic Regression: Cost function. The linear regression cost is $\mathrm{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\left(H(x^{(i)}) - y^{(i)}\right)^2$.
  - With the linear hypothesis $H(x) = Wx + b$, this cost is convex (https://laptrinhx.com/working-of-linear-regression-2055506697/).
  - With the logistic hypothesis $H(x) = \frac{1}{1 + e^{-(Wx + b)}}$, the same cost becomes non-convex (http://gemba-iot.com/?p=540), so gradient descent can get stuck in a local minimum.

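- 25. Logistic Regression: Cost function. New cost function for the logistic hypothesis $H(x) = \frac{1}{1 + e^{-(Wx + b)}}$ (https://pythonkim.tistory.com/28):

  $$\mathrm{Cost}(W) = \frac{1}{m}\sum c(H(x), y), \qquad c(H(x), y) = \begin{cases} -\log(H(x)) & : y = 1 \\ -\log(1 - H(x)) & : y = 0 \end{cases}$$

  The two cases combine into a single expression:

  $$c(H(x), y) = -y\log(H(x)) - (1 - y)\log(1 - H(x))$$

  $$\mathrm{Cost}(W) = -\frac{1}{m}\sum\left[y\log(H(x)) + (1 - y)\log(1 - H(x))\right]$$

  Gradient descent is unchanged: $W := W - \alpha \frac{\partial}{\partial W}\mathrm{cost}(W)$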
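
To make the behaviour of this cost concrete, here is a minimal standard-library sketch of the per-example term and its average. The names `bce_single` and `bce_cost` and the sample predictions are illustrative only.

```python
import math


def bce_single(h, y):
    """c(H(x), y) = -y*log(H(x)) - (1 - y)*log(1 - H(x)) for 0 < h < 1."""
    return -(y * math.log(h) + (1 - y) * math.log(1 - h))


def bce_cost(hs, ys):
    """Average of the per-example costs over the dataset."""
    return sum(bce_single(h, y) for h, y in zip(hs, ys)) / len(hs)


# A confident correct prediction is cheap, a confident wrong one is expensive.
print(f"y=1, H(x)=0.9 -> {bce_single(0.9, 1):.4f}")
print(f"y=1, H(x)=0.1 -> {bce_single(0.1, 1):.4f}")
print(f"y=0, H(x)=0.1 -> {bce_single(0.1, 0):.4f}")
print(f"mean over two correct predictions -> {bce_cost([0.9, 0.1], [1, 0]):.4f}")
```

The cost grows without bound as a prediction approaches the wrong extreme, which is what pushes the weights away from confident mistakes.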

- 26. Logistic Regression: Practice using Colab. Hypothesis: $H(x) = \frac{1}{1 + e^{-(Wx + b)}}$. Cost: $\mathrm{Cost}(W) = -\frac{1}{m}\sum\left[y\log(H(x)) + (1 - y)\log(1 - H(x))\right]$
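
The notebook for this practice is not reproduced in the slides, so the following is only a sketch of how such a model might be trained in PyTorch, mirroring the structure of the linear regression practice. The pass/fail data, the learning rate and the epoch count are assumptions made for the example.

```python
import torch
import torch.nn.functional as F
import torch.optim as optim

torch.manual_seed(1)

# Hypothetical toy data: hours studied -> fail (0) or pass (1).
x_train = torch.FloatTensor([[1], [2], [3], [4], [5], [6]])
y_train = torch.FloatTensor([[0], [0], [0], [1], [1], [1]])

W = torch.zeros((1, 1), requires_grad=True)
b = torch.zeros(1, requires_grad=True)
optimizer = optim.SGD([W, b], lr=0.1)  # assumed learning rate

initial_cost = None
nb_epochs = 3000
for epoch in range(nb_epochs + 1):
    # H(x) = sigmoid(xW + b)
    hypothesis = torch.sigmoid(x_train.matmul(W) + b)
    # Cost(W) = -mean(y*log(H) + (1 - y)*log(1 - H))
    cost = F.binary_cross_entropy(hypothesis, y_train)
    if initial_cost is None:
        initial_cost = cost.item()

    optimizer.zero_grad()
    cost.backward()
    optimizer.step()

    if epoch % 500 == 0:
        print(f"Epoch {epoch:4d}/{nb_epochs} Cost: {cost.item():.6f}")

# Threshold the predicted probabilities at 0.5 to get class labels.
with torch.no_grad():
    probabilities = torch.sigmoid(x_train.matmul(W) + b)
    predictions = (probabilities >= 0.5).float()
print(predictions.squeeze().tolist())
```

`F.binary_cross_entropy` computes the same averaged cost as the formula on the slide, so the only change from the linear regression loop is the sigmoid in the hypothesis and the choice of cost.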

- 27. Recap: Linear Regression & Logistic Regression (https://pythonkim.tistory.com/28, https://laptrinhx.com/working-of-linear-regression-2055506697/)

  | | Linear Regression | Logistic Regression (Binary Classification) |
  |---|---|---|
  | Hypothesis | $H(x) = Wx + b$ | $H(x) = \frac{1}{1 + e^{-(Wx + b)}}$ |
  | Cost | $\mathrm{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\left(H(x^{(i)}) - y^{(i)}\right)^2$ | $\mathrm{Cost}(W) = -\frac{1}{m}\sum\left[y\log(H(x)) + (1 - y)\log(1 - H(x))\right]$ |
  | Gradient descent | $W := W - \alpha \frac{\partial}{\partial W}\mathrm{cost}(W)$ | $W := W - \alpha \frac{\partial}{\partial W}\mathrm{cost}(W)$ |

  Behaviour of the logistic cost:
  - $y = 1$: $H(x) = 1$ gives cost 0; $H(x) = 0$ gives cost $\infty$
  - $y = 0$: $H(x) = 0$ gives cost 0; $H(x) = 1$ gives cost $\infty$

- 28. Multivariable Linear Regression: Basic concepts. Predicting an exam score: regression using three inputs $(x_1, x_2, x_3)$ (https://wikidocs.net/54841)

  | Quiz 1 ($x_1$) | Quiz 2 ($x_2$) | Quiz 3 ($x_3$) | Final ($y$) |
  |---|---|---|---|
  | 73 | 80 | 75 | 152 |
  | 93 | 88 | 93 | 185 |
  | 89 | 91 | 80 | 180 |
  | 96 | 98 | 100 | 196 |
  | 73 | 66 | 70 | 142 |

  - Linear regression hypothesis: $H(x) = Wx + b$
  - Multivariable linear regression hypothesis: $H(x_1, x_2, x_3) = w_1x_1 + w_2x_2 + w_3x_3 + b$
  - Cost function: $\mathrm{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\left(H(x_1^{(i)}, x_2^{(i)}, x_3^{(i)}) - y^{(i)}\right)^2$

- 29. Multivariable Linear Regression: Hypothesis using Matrix. With three inputs, $H(x_1, x_2, x_3) = w_1x_1 + w_2x_2 + w_3x_3 + b$; with $n$ inputs, $H(x_1, x_2, x_3, \ldots, x_n) = w_1x_1 + w_2x_2 + w_3x_3 + \cdots + w_nx_n + b$. This sum is the dot product between a matrix and a vector (https://hadrienj.github.io/posts/Deep-Learning-Book-Series-2.2-Multiplying-Matrices-and-Vectors/).

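- 30. Multivariable Linear Regression: Hypothesis using Matrix. The hypothesis $H(x_1, x_2, x_3) = w_1x_1 + w_2x_2 + w_3x_3 + b$ can be written as a product of a row vector and a column vector:

  $$H(x_1, x_2, x_3) = \begin{pmatrix} x_1 & x_2 & x_3 \end{pmatrix} \cdot \begin{pmatrix} w_1 \\ w_2 \\ w_3 \end{pmatrix} + b = x_1w_1 + x_2w_2 + x_3w_3 + b$$

  In matrix notation: $H(X) = XW + b$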

- 31. Multivariable Linear Regression: Hypothesis using Matrix. For the five-row quiz dataset of slide 28 (https://wikidocs.net/54841, https://youtu.be/kPxpJY6fRkY), the hypothesis $H(x_1, x_2, x_3) = w_1x_1 + w_2x_2 + w_3x_3 + b$ is evaluated for all examples at once:

  $$\begin{pmatrix} x_{11} & x_{12} & x_{13} \\ x_{21} & x_{22} & x_{23} \\ x_{31} & x_{32} & x_{33} \\ x_{41} & x_{42} & x_{43} \\ x_{51} & x_{52} & x_{53} \end{pmatrix} \cdot \begin{pmatrix} w_1 \\ w_2 \\ w_3 \end{pmatrix} + b = \begin{pmatrix} x_{11}w_1 + x_{12}w_2 + x_{13}w_3 \\ x_{21}w_1 + x_{22}w_2 + x_{23}w_3 \\ x_{31}w_1 + x_{32}w_2 + x_{33}w_3 \\ x_{41}w_1 + x_{42}w_2 + x_{43}w_3 \\ x_{51}w_1 + x_{52}w_2 + x_{53}w_3 \end{pmatrix} + b$$

  In matrix notation: $H(X) = XW + b$

- 32. Multivariable Linear Regression: Practice using Colab. The practice implements the matrix hypothesis $H(X) = XW + b$, where $X$ is the $5 \times 3$ matrix of quiz scores (one row per student, entries $x_{ij}$) and $W = (w_1, w_2, w_3)^T$, together with the cost $\mathrm{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\left(H(x_1^{(i)}, x_2^{(i)}, x_3^{(i)}) - y^{(i)}\right)^2$.
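
The notebook itself is not included in the slides. As a sketch of what the matrix form looks like in PyTorch, the example below applies `matmul` to the quiz scores from slide 28; the learning rate and the number of epochs are assumptions for the example (the raw scores are large, so a small learning rate is needed to keep the updates stable).

```python
import torch
import torch.optim as optim

# Quiz scores (one row per student) and final exam scores from slide 28.
x_train = torch.FloatTensor([[73, 80, 75],
                             [93, 88, 93],
                             [89, 91, 80],
                             [96, 98, 100],
                             [73, 66, 70]])
y_train = torch.FloatTensor([[152], [185], [180], [196], [142]])

# One weight per input column, stored as a (3, 1) matrix, plus a scalar bias.
W = torch.zeros((3, 1), requires_grad=True)
b = torch.zeros(1, requires_grad=True)
optimizer = optim.SGD([W, b], lr=1e-5)  # assumed learning rate

costs = []
nb_epochs = 20  # assumed
for epoch in range(nb_epochs + 1):
    # H(X) = XW + b evaluates all five students in one matrix product.
    hypothesis = x_train.matmul(W) + b
    cost = torch.mean((hypothesis - y_train) ** 2)
    costs.append(cost.item())

    optimizer.zero_grad()
    cost.backward()
    optimizer.step()

    print(f"Epoch {epoch:2d}/{nb_epochs} Cost: {cost.item():.4f}")
```

Writing the hypothesis as a single matrix product means the same code works unchanged if more students (rows) or more quizzes (columns, with a matching `W`) are added.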

- 33. Recap: Multivariable Linear Regression & Logistic Regression (https://pythonkim.tistory.com/28, https://laptrinhx.com/working-of-linear-regression-2055506697/)

  | | Multivariable Linear Regression | Logistic Regression (Binary Classification) |
  |---|---|---|
  | Hypothesis | $H(X) = XW + b$ | $H(x) = \frac{1}{1 + e^{-(Wx + b)}}$ |
  | Cost | $\mathrm{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\left(H(x_1^{(i)}, x_2^{(i)}, x_3^{(i)}) - y^{(i)}\right)^2$ | $\mathrm{Cost}(W) = -\frac{1}{m}\sum\left[y\log(H(x)) + (1 - y)\log(1 - H(x))\right]$ |
  | Gradient descent | $W := W - \alpha \frac{\partial}{\partial W}\mathrm{cost}(W)$ | $W := W - \alpha \frac{\partial}{\partial W}\mathrm{cost}(W)$ |

  Behaviour of the logistic cost:
  - $y = 1$: $H(x) = 1$ gives cost 0; $H(x) = 0$ gives cost $\infty$
  - $y = 0$: $H(x) = 0$ gives cost 0; $H(x) = 1$ gives cost $\infty$

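- 34. Multinomial Classification: Basic concepts. Topic keywords: softmax, cross entropy, 1-hot encoding. The pipeline shown on the slide:
  1. Input data $x$ goes through the linear model $Wx + b$, giving logits $y$, e.g. $(2.0, 1.0, 0.1)$.
  2. Softmax $S(y)$ turns the logits into probabilities, e.g. $(0.7, 0.2, 0.1)$.
  3. The label $L$ is 1-hot encoded, e.g. $(1.0, 0.0, 0.0)$.
  4. Cross entropy $D(S, L)$ measures the distance between the probabilities and the label.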

- 35. Multinomial Classification: Softmax function. Softmax converts the logit scores $y$ produced by the linear model $Wx + b$ into probabilities:

  $$S(y_i) = \frac{e^{y_i}}{\sum_{j=1}^{k} e^{y_j}}$$

  Example: logit scores $y = (2.0, 1.0, 0.1)$ become probabilities $p = (0.7, 0.2, 0.1)$, which are then compared with the 1-hot encoded label $L = (1.0, 0.0, 0.0)$ through the cross entropy $D(S, L)$.
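
The slide's numbers can be reproduced with a few lines of standard-library Python. This is a minimal sketch; subtracting the maximum logit before exponentiating is a common numerical-stability trick that is added here and does not appear on the slide.

```python
import math


def softmax(logits):
    """S(y_i) = exp(y_i) / sum_j exp(y_j), computed in a numerically stable way."""
    largest = max(logits)
    exps = [math.exp(z - largest) for z in logits]  # shifting does not change the result
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # logit scores from the slide
probs = softmax(logits)
print([round(p, 3) for p in probs])  # -> [0.659, 0.242, 0.099]
print(round(sum(probs), 6))
```

Rounded to one decimal place these are the 0.7, 0.2 and 0.1 shown on the slide, and they always sum to 1.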

- 36. Multinomial Classification: Cost function. For softmax output $S$ and 1-hot encoded label $L$, the cross entropy is

  $$D(S, L) = -\sum_{i} L_i \log(S_i)$$

  Averaged over $n$ training examples and $k$ classes, with $p_j^{(i)}$ the predicted probability of class $j$ for example $i$:

  $$\mathrm{cost}(W) = -\frac{1}{n}\sum_{i=1}^{n}\sum_{j=1}^{k} L_j^{(i)} \log\left(p_j^{(i)}\right)$$
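
A short sketch of the cross entropy for a single example and its average over a batch, using only the standard library. The function names and the second example (a wrong prediction) are illustrative.

```python
import math


def cross_entropy(S, L):
    """D(S, L) = -sum_i L_i * log(S_i); zero-label terms are skipped."""
    return -sum(l * math.log(s) for s, l in zip(S, L) if l > 0)


def average_cost(all_S, all_L):
    """cost = (1/n) * sum over examples of D(S, L)."""
    return sum(cross_entropy(S, L) for S, L in zip(all_S, all_L)) / len(all_S)


S = [0.7, 0.2, 0.1]                  # softmax output from the slide
right = cross_entropy(S, [1, 0, 0])  # label agrees with the most likely class
wrong = cross_entropy(S, [0, 0, 1])  # label is the least likely class
print(f"correct label: {right:.4f}, wrong label: {wrong:.4f}")
print(f"average: {average_cost([S, S], [[1, 0, 0], [0, 0, 1]]):.4f}")
```

With a 1-hot label only the term for the true class survives, so the cost is simply the negative log of the probability assigned to the correct class.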

- 37. Multinomial Classification: Practice using Colab. Goal: classify iris species (setosa, versicolor, virginica). Data: https://archive.ics.uci.edu/ml/datasets/iris, https://gist.github.com/curran/a08a1080b88344b0c8a7#file-iris-csv

- 38. National Agriculture and Food Research Organization Multinomial Classification: Practice using Colab Exploratory data analysis (EDA)

- 39. National Agriculture and Food Research Organization Multinomial Classification: Practice using Colab Exploratory data analysis (EDA)

- 40. National Agriculture and Food Research Organization Multinomial Classification: Practice using Colab

- 41. National Agriculture and Food Research Organization Multinomial Classification: Practice using Colab

- 42. Recap: Multinomial Classification
  - Pipeline: input data $x$ → linear model $Wx + b$ → logit scores $y = (2.0, 1.0, 0.1)$ → softmax $S(y)$ → probabilities $p = (0.7, 0.2, 0.1)$ → cross entropy $D(S, L)$ against the 1-hot encoded label $L = (1.0, 0.0, 0.0)$
  - Softmax: $S(y_i) = \frac{e^{y_i}}{\sum_{j=1}^{k} e^{y_j}}$
  - Cross entropy: $D(S, L) = -\sum_{i} L_i \log(S_i)$
  - Cost: $\mathrm{cost}(W) = -\frac{1}{n}\sum_{i=1}^{n}\sum_{j=1}^{k} L_j^{(i)} \log\left(p_j^{(i)}\right)$

- 43. National Agriculture and Food Research Organization Basics of Deep Neural Network: Perceptron http://cs231n.stanford.edu/slides/2016/winter1516_lecture5.pdf

- 44. National Agriculture and Food Research Organization Basics of Deep Neural Network: Single-Layer Perceptron Single-Layer Perceptron Input Layer Output Layer

- 45. Basics of Deep Neural Network: Single-Layer Perceptron

  | $x_1$ | $x_2$ | AND gate $y$ | NAND gate $y$ |
  |---|---|---|---|
  | 0 | 0 | 0 | 1 |
  | 0 | 1 | 0 | 1 |
  | 1 | 0 | 0 | 1 |
  | 1 | 1 | 1 | 0 |

- 46. Basics of Deep Neural Network: Single-Layer Perceptron

  | $x_1$ | $x_2$ | OR gate $y$ | XOR gate $y$ |
  |---|---|---|---|
  | 0 | 0 | 0 | 0 |
  | 0 | 1 | 1 | 1 |
  | 1 | 0 | 1 | 1 |
  | 1 | 1 | 1 | 0 |

  A single-layer perceptron cannot implement the XOR gate, because the XOR outputs are not linearly separable. A multi-layer perceptron is needed.
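
The AND, NAND and OR tables can each be realized by one perceptron with a step activation. The sketch below shows one possible choice; the weights and biases are hand-picked for illustration (many other values work) and are not taken from the slides.

```python
def perceptron(x1, x2, w1, w2, b):
    """Single-layer perceptron with a step activation: fires when w.x + b > 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


# Hand-picked (assumed) weights and biases for each gate.
def AND(x1, x2):
    return perceptron(x1, x2, 0.5, 0.5, -0.7)


def NAND(x1, x2):
    return perceptron(x1, x2, -0.5, -0.5, 0.7)


def OR(x1, x2):
    return perceptron(x1, x2, 0.5, 0.5, -0.2)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
for name, gate in (("AND", AND), ("NAND", NAND), ("OR", OR)):
    print(name, [gate(x1, x2) for x1, x2 in inputs])
```

Each gate corresponds to a single straight line separating the 0 outputs from the 1 outputs in the $(x_1, x_2)$ plane; no single line does this for XOR.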

- 47. National Agriculture and Food Research Organization Basics of Deep Neural Network: Single-Layer Perceptron Implementation of XOR gate using PyTorch

- 48. Basics of Deep Neural Network: Multi-Layer Perceptron (MLP). An MLP inserts a hidden layer between the input layer and the output layer, which makes the XOR gate representable.

  | $x_1$ | $x_2$ | XOR gate $y$ |
  |---|---|---|
  | 0 | 0 | 0 |
  | 0 | 1 | 1 |
  | 1 | 0 | 1 |
  | 1 | 1 | 0 |

  Reference: What is the difference between a neural network and a deep neural network, and why do the deep ones work better? - Cross Validated (stackexchange.com)
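
One way to see why a hidden layer is enough: XOR can be composed from gates that single perceptrons can represent. In the sketch below the hidden layer computes NAND and OR and the output layer combines them with AND. The weights are hand-picked assumptions for illustration, not learned and not taken from the slides.

```python
def perceptron(x1, x2, w1, w2, b):
    """Step-activation perceptron: outputs 1 when w1*x1 + w2*x2 + b > 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


def XOR(x1, x2):
    # Hidden layer: two perceptrons looking at the same inputs.
    h1 = perceptron(x1, x2, -0.5, -0.5, 0.7)  # NAND
    h2 = perceptron(x1, x2, 0.5, 0.5, -0.2)   # OR
    # Output layer: one perceptron combining the hidden outputs (AND).
    return perceptron(h1, h2, 0.5, 0.5, -0.7)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_outputs = [XOR(x1, x2) for x1, x2 in inputs]
print(xor_outputs)  # -> [0, 1, 1, 0]
```

Training an MLP with back propagation finds weights that play the same role automatically, instead of having them chosen by hand.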

- 49. Basic of Python and PyTorch: Practice using Colab. Vector, Matrix and Tensor (reference: 10 most common Maths Operation with Pytorch Tensor | by Nooras Fatima Ansari | Medium)
  - 2D tensor: shape $|t| = (\text{batch size}, \text{dim})$
  - 3D tensor: shape $|t| = (\text{batch size}, \text{width}, \text{height})$

- 50. National Agriculture and Food Research Organization Basic of Python and PyTorch: Practice using Colab Vector(1D Tensor)

- 51. Basic of Python and PyTorch: Practice using Colab. Matrix (2D tensor): shape $|t| = (\text{batch size}, \text{dim})$

- 52. National Agriculture and Food Research Organization Basic of Python and PyTorch: Practice using Colab torch.matmul — PyTorch 1.7.0 documentation

- 53. National Agriculture and Food Research Organization Basic of Python and PyTorch: Practice using Colab torch.mean — PyTorch 1.7.0 documentation

- 54. National Agriculture and Food Research Organization Basic of Python and PyTorch: Practice using Colab torch.max — PyTorch 1.7.0 documentation
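
The three functions referenced on slides 52 to 54 can be tried on small tensors. The values below are made up for the example; the point is how `dim` selects the axis that is reduced and how `matmul` differs from element-wise `*`.

```python
import torch

m1 = torch.FloatTensor([[1, 2], [3, 4]])  # shape (2, 2)
m2 = torch.FloatTensor([[1], [2]])        # shape (2, 1)

# Matrix product: (2, 2) x (2, 1) -> (2, 1)
product = torch.matmul(m1, m2)
# Element-wise product: m2 is broadcast across the columns of m1 -> (2, 2)
elementwise = m1 * m2

t = torch.FloatTensor([[1, 2], [3, 4]])
overall_mean = t.mean()      # mean of all elements
column_mean = t.mean(dim=0)  # reduce over rows, one value per column
row_mean = t.mean(dim=1)     # reduce over columns, one value per row

# With a dim argument, max returns both the maxima and their indices (argmax).
max_values, max_indices = t.max(dim=0)

print(product.tolist(), elementwise.tolist())
print(overall_mean.item(), column_mean.tolist(), row_mean.tolist())
print(max_values.tolist(), max_indices.tolist())
```

Mixing up `matmul` and `*` is a common source of silent shape bugs, because broadcasting lets the element-wise version run without an error.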

- 55. National Agriculture and Food Research Organization Recap: Single-Layer Perceptron Single-Layer Perceptron Input Layer Output Layer

- 56. Recap: Multi-Layer Perceptron (MLP). With a hidden layer between the input layer and the output layer, the XOR gate ($(0,0) \to 0$, $(0,1) \to 1$, $(1,0) \to 1$, $(1,1) \to 0$) becomes representable. Reference: What is the difference between a neural network and a deep neural network, and why do the deep ones work better? - Cross Validated (stackexchange.com)

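- 57. Neural Network Overview: Forward Propagation. The network has an input layer ($x_1, x_2$), a hidden layer (pre-activations $z_1, z_2$, activations $h_1, h_2$) and an output layer (pre-activations $z_3, z_4$, outputs $o_1, o_2$), connected by weights $W_1, \ldots, W_8$.
  1. Initialize variables:

     | Inputs | Targets | Weights (input → hidden) | Weights (hidden → output) |
     |---|---|---|---|
     | $x_1 = 0.1$ | $y_1 = 0.4$ | $W_1 = 0.3$, $W_2 = 0.25$ | $W_5 = 0.45$, $W_6 = 0.4$ |
     | $x_2 = 0.2$ | $y_2 = 0.6$ | $W_3 = 0.4$, $W_4 = 0.35$ | $W_7 = 0.7$, $W_8 = 0.6$ |

  2. Activation function: sigmoid. Loss function: MSE (mean square error).

  Forward pass through the hidden layer:

  $$z_1 = W_1x_1 + W_2x_2 = 0.3 \times 0.1 + 0.25 \times 0.2 = 0.08$$
  $$z_2 = W_3x_1 + W_4x_2 = 0.4 \times 0.1 + 0.35 \times 0.2 = 0.11$$
  $$h_1 = \mathrm{sigmoid}(z_1) = 0.5199893401555818$$
  $$h_2 = \mathrm{sigmoid}(z_2) = 0.5274723043445937$$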

- 58. Neural Network Overview: Forward Propagation (network and initial values as on slide 57). Forward pass through the output layer and the resulting error:

  $$z_3 = W_5h_1 + W_6h_2 = 0.45 \times h_1 + 0.4 \times h_2 = 0.44498412$$
  $$z_4 = W_7h_1 + W_8h_2 = 0.7 \times h_1 + 0.6 \times h_2 = 0.68047592$$
  $$o_1 = \mathrm{sigmoid}(z_3) = 0.60944600, \qquad o_2 = \mathrm{sigmoid}(z_4) = 0.66384491$$
  $$e_1 = \frac{1}{2}(y_1 - o_1)^2 = 0.02193381, \qquad e_2 = \frac{1}{2}(y_2 - o_2)^2 = 0.00203809$$
  $$e_{total} = e_1 + e_2 = 0.02397190$$
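
The forward pass above can be checked numerically with a few lines of standard-library Python. The variable names follow the slides; only the layout of the script is an assumption.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Inputs, targets and initial weights from the slides.
x1, x2 = 0.1, 0.2
y1, y2 = 0.4, 0.6
W1, W2, W3, W4 = 0.3, 0.25, 0.4, 0.35
W5, W6, W7, W8 = 0.45, 0.4, 0.7, 0.6

# Hidden layer.
z1 = W1 * x1 + W2 * x2
z2 = W3 * x1 + W4 * x2
h1, h2 = sigmoid(z1), sigmoid(z2)

# Output layer.
z3 = W5 * h1 + W6 * h2
z4 = W7 * h1 + W8 * h2
o1, o2 = sigmoid(z3), sigmoid(z4)

# Squared error per output (with the 1/2 factor used on the slides) and total.
e1 = 0.5 * (y1 - o1) ** 2
e2 = 0.5 * (y2 - o2) ** 2
e_total = e1 + e2

print(f"h1 = {h1:.8f}, h2 = {h2:.8f}")
print(f"o1 = {o1:.8f}, o2 = {o2:.8f}")
print(f"e_total = {e_total:.8f}")
```

The printed values agree with the slide to the eight decimal places shown there.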

- 59. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_5$ using the chain rule (reference: 連鎖律 (chain rule) - Wikipedia):

  $$\frac{\partial e_{total}}{\partial W_5} = \frac{\partial e_{total}}{\partial o_1} \times \frac{\partial o_1}{\partial z_3} \times \frac{\partial z_3}{\partial W_5}$$

  With $e_{total} = e_1 + e_2 = \frac{1}{2}(y_1 - o_1)^2 + \frac{1}{2}(y_2 - o_2)^2$, the first factor is

  $$\frac{\partial e_{total}}{\partial o_1} = 2 \times \frac{1}{2}(y_1 - o_1)^{2-1} \times (-1) + 0 = -(y_1 - o_1) = -(0.4 - 0.60944600) = 0.20944600$$

- 60. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_5$ using the chain rule, remaining two factors. The derivative of the sigmoid is $\sigma'(z) = \sigma(z)(1 - \sigma(z))$ (reference: Logistic function - Wikipedia), so

  $$\frac{\partial o_1}{\partial z_3} = o_1 \times (1 - o_1) = 0.60944600 \times (1 - 0.60944600) = 0.23802157$$

  and, since $z_3 = W_5h_1 + W_6h_2$,

  $$\frac{\partial z_3}{\partial W_5} = \frac{\partial}{\partial W_5}\left(W_5h_1 + W_6h_2\right) = h_1 + 0 = 0.51998934$$

- 61. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_5$ using the chain rule and gradient descent, with learning rate $\alpha = 0.5$:

  $$\frac{\partial e_{total}}{\partial W_5} = \frac{\partial e_{total}}{\partial o_1} \times \frac{\partial o_1}{\partial z_3} \times \frac{\partial z_3}{\partial W_5} = 0.20944600 \times 0.23802157 \times 0.51998934 = 0.02592286$$

  $$W_5^{+} = W_5 - \alpha\frac{\partial e_{total}}{\partial W_5} = 0.45 - (0.5 \times 0.02592286) = 0.43703857$$

- 62. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_6$, $W_7$, $W_8$ the same way, using the chain rule and gradient descent (learning rate = 0.5):

  $$\frac{\partial e_{total}}{\partial W_6} = \frac{\partial e_{total}}{\partial o_1} \times \frac{\partial o_1}{\partial z_3} \times \frac{\partial z_3}{\partial W_6} \;\rightarrow\; W_6^{+} = 0.38685205$$
  $$\frac{\partial e_{total}}{\partial W_7} = \frac{\partial e_{total}}{\partial o_2} \times \frac{\partial o_2}{\partial z_4} \times \frac{\partial z_4}{\partial W_7} \;\rightarrow\; W_7^{+} = 0.69629578$$
  $$\frac{\partial e_{total}}{\partial W_8} = \frac{\partial e_{total}}{\partial o_2} \times \frac{\partial o_2}{\partial z_4} \times \frac{\partial z_4}{\partial W_8} \;\rightarrow\; W_8^{+} = 0.59624247$$

  Updated hidden → output weights (rounded): $W_5 = 0.437$, $W_6 = 0.387$, $W_7 = 0.696$, $W_8 = 0.596$.

- 63. Neural Network Overview: Back Propagation. Summary of the hidden → output updates, each obtained from $\frac{\partial e_{total}}{\partial W_k} = \frac{\partial e_{total}}{\partial o} \times \frac{\partial o}{\partial z} \times \frac{\partial z}{\partial W_k}$:

  | Weight | Old | New | New (rounded) |
  |---|---|---|---|
  | $W_5$ | 0.45 | 0.43703857 | 0.437 |
  | $W_6$ | 0.4 | 0.38685205 | 0.387 |
  | $W_7$ | 0.7 | 0.69629578 | 0.696 |
  | $W_8$ | 0.6 | 0.59624247 | 0.596 |

- 64. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_1$ using the chain rule:

  $$\frac{\partial e_{total}}{\partial W_1} = \frac{\partial e_{total}}{\partial h_1} \times \frac{\partial h_1}{\partial z_1} \times \frac{\partial z_1}{\partial W_1}, \qquad \frac{\partial e_{total}}{\partial h_1} = \frac{\partial e_1}{\partial h_1} + \frac{\partial e_2}{\partial h_1}$$

  Because $h_1$ feeds both outputs, both error terms contribute. The first term:

  $$\frac{\partial e_1}{\partial h_1} = \frac{\partial e_1}{\partial z_3} \times \frac{\partial z_3}{\partial h_1} = \frac{\partial e_1}{\partial o_1} \times \frac{\partial o_1}{\partial z_3} \times \frac{\partial z_3}{\partial h_1} = -(y_1 - o_1) \times o_1 \times (1 - o_1) \times W_5 = 0.02243370$$

- 65. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_1$ using the chain rule, second term of $\frac{\partial e_{total}}{\partial h_1} = \frac{\partial e_1}{\partial h_1} + \frac{\partial e_2}{\partial h_1}$:

  $$\frac{\partial e_2}{\partial h_1} = \frac{\partial e_2}{\partial z_4} \times \frac{\partial z_4}{\partial h_1} = \frac{\partial e_2}{\partial o_2} \times \frac{\partial o_2}{\partial z_4} \times \frac{\partial z_4}{\partial h_1} = -(y_2 - o_2) \times o_2 \times (1 - o_2) \times W_7 = 0.00997311$$

- 66. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_1$ using the chain rule, combining both terms:

  $$\frac{\partial e_{total}}{\partial h_1} = \frac{\partial e_1}{\partial h_1} + \frac{\partial e_2}{\partial h_1} = 0.02243370 + 0.00997311 = 0.03240681$$

- 67. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_1$ using the chain rule, remaining two factors of $\frac{\partial e_{total}}{\partial W_1} = \frac{\partial e_{total}}{\partial h_1} \times \frac{\partial h_1}{\partial z_1} \times \frac{\partial z_1}{\partial W_1}$:

  $$\frac{\partial h_1}{\partial z_1} = h_1 \times (1 - h_1) = 0.51998934 \times (1 - 0.51998934) = 0.24960043$$
  $$\frac{\partial z_1}{\partial W_1} = x_1 = 0.1$$

- 68. Neural Network Overview: Back Propagation (network and initial values as on slide 57). Update $W_1$ using the chain rule and gradient descent, with learning rate $\alpha = 0.5$:

  $$\frac{\partial e_{total}}{\partial W_1} = \frac{\partial e_{total}}{\partial h_1} \times \frac{\partial h_1}{\partial z_1} \times \frac{\partial z_1}{\partial W_1} = 0.03240681 \times 0.24960043 \times 0.1 = 0.00080888$$

  $$W_1^{+} = W_1 - \alpha\frac{\partial e_{total}}{\partial W_1} = 0.3 - (0.5 \times 0.00080888) = 0.29959556$$

  Updated input → hidden weights (rounded): $W_1 = 0.300$, $W_2 = 0.249$, $W_3 = 0.400$, $W_4 = 0.349$.

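- 69. Neural Network Overview: Back Propagation. Summary of the input → hidden updates:

  $$\frac{\partial e_{total}}{\partial W_1} = \frac{\partial e_{total}}{\partial h_1} \times \frac{\partial h_1}{\partial z_1} \times \frac{\partial z_1}{\partial W_1} \;\rightarrow\; W_1^{+} = 0.29959556$$
  $$\frac{\partial e_{total}}{\partial W_2} = \frac{\partial e_{total}}{\partial h_1} \times \frac{\partial h_1}{\partial z_1} \times \frac{\partial z_1}{\partial W_2} \;\rightarrow\; W_2^{+} = 0.24919112$$
  $$\frac{\partial e_{total}}{\partial W_3} = \frac{\partial e_{total}}{\partial h_2} \times \frac{\partial h_2}{\partial z_2} \times \frac{\partial z_2}{\partial W_3} \;\rightarrow\; W_3^{+} = 0.39964496$$
  $$\frac{\partial e_{total}}{\partial W_4} = \frac{\partial e_{total}}{\partial h_2} \times \frac{\partial h_2}{\partial z_2} \times \frac{\partial z_2}{\partial W_4} \;\rightarrow\; W_4^{+} = 0.34928991$$

  | Weight | Old | New (rounded) |
  |---|---|---|
  | $W_1$ | 0.3 | 0.300 |
  | $W_2$ | 0.25 | 0.249 |
  | $W_3$ | 0.4 | 0.400 |
  | $W_4$ | 0.35 | 0.349 |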
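
The whole back propagation walk-through can be verified in one short script. This is a sketch using only the standard library; the `delta_*` names are introduced here for the shared chain-rule factors and do not appear on the slides.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Inputs, targets, initial weights and learning rate from the slides.
x1, x2 = 0.1, 0.2
y1, y2 = 0.4, 0.6
W = {1: 0.3, 2: 0.25, 3: 0.4, 4: 0.35, 5: 0.45, 6: 0.4, 7: 0.7, 8: 0.6}
lr = 0.5

# Forward pass.
h1 = sigmoid(W[1] * x1 + W[2] * x2)
h2 = sigmoid(W[3] * x1 + W[4] * x2)
o1 = sigmoid(W[5] * h1 + W[6] * h2)
o2 = sigmoid(W[7] * h1 + W[8] * h2)

# Output layer: d e_total / d z3 and d e_total / d z4.
delta_o1 = -(y1 - o1) * o1 * (1 - o1)
delta_o2 = -(y2 - o2) * o2 * (1 - o2)

# Hidden layer: each hidden unit collects error from both outputs.
delta_h1 = (delta_o1 * W[5] + delta_o2 * W[7]) * h1 * (1 - h1)
delta_h2 = (delta_o1 * W[6] + delta_o2 * W[8]) * h2 * (1 - h2)

# Gradient of e_total with respect to every weight.
grads = {
    1: delta_h1 * x1, 2: delta_h1 * x2,
    3: delta_h2 * x1, 4: delta_h2 * x2,
    5: delta_o1 * h1, 6: delta_o1 * h2,
    7: delta_o2 * h1, 8: delta_o2 * h2,
}

# All gradients are computed with the old weights, then applied together.
W_new = {k: W[k] - lr * grads[k] for k in W}
for k in sorted(W_new):
    print(f"W{k}: {W[k]} -> {W_new[k]:.8f}")
```

Note that the hidden-layer gradients use the original $W_5$ to $W_8$, not the already updated ones, which matches the values on the slides.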

- 70. Neural Network Overview: Forward Propagation using updated weights. The next forward pass through the same network (sigmoid activation, MSE loss, inputs $x_1 = 0.1$, $x_2 = 0.2$, targets $y_1 = 0.4$, $y_2 = 0.6$) uses the new weights:

  | Weight | Old | New (rounded) |
  |---|---|---|
  | $W_1$ | 0.3 | 0.300 |
  | $W_2$ | 0.25 | 0.249 |
  | $W_3$ | 0.4 | 0.400 |
  | $W_4$ | 0.35 | 0.349 |
  | $W_5$ | 0.45 | 0.437 |
  | $W_6$ | 0.4 | 0.387 |
  | $W_7$ | 0.7 | 0.696 |
  | $W_8$ | 0.6 | 0.596 |

- 71. Implement XOR using PyTorch: Practice using Colab. Network: input layer, hidden layer 1, hidden layer 2, hidden layer 3, output layer. Loss: BCELoss (reference: BCELoss — PyTorch 1.7.0 documentation)
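
The notebook for this practice is not part of the slides. The following is a sketch of a network with the layer structure named above (three hidden layers, sigmoid activations, `BCELoss`); the hidden width of 10, the seed, the learning rate and the epoch count are assumptions, and more epochs may be needed before the outputs settle on the XOR pattern.

```python
import torch
import torch.nn as nn

torch.manual_seed(777)  # assumed seed, only for repeatability

X = torch.FloatTensor([[0, 0], [0, 1], [1, 0], [1, 1]])
Y = torch.FloatTensor([[0], [1], [1], [0]])

# Input layer -> 3 hidden layers -> output layer, all with sigmoid activations.
model = nn.Sequential(
    nn.Linear(2, 10), nn.Sigmoid(),
    nn.Linear(10, 10), nn.Sigmoid(),
    nn.Linear(10, 10), nn.Sigmoid(),
    nn.Linear(10, 1), nn.Sigmoid(),
)
criterion = nn.BCELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=1.0)  # assumed learning rate

losses = []
nb_epochs = 5000  # assumed
for epoch in range(nb_epochs + 1):
    hypothesis = model(X)           # forward propagation
    loss = criterion(hypothesis, Y)
    losses.append(loss.item())

    optimizer.zero_grad()
    loss.backward()                 # back propagation
    optimizer.step()                # gradient descent update

    if epoch % 1000 == 0:
        print(f"Epoch {epoch:5d} Loss: {loss.item():.6f}")

with torch.no_grad():
    predicted = (model(X) > 0.5).float()
print(predicted.squeeze().tolist())
```

Each loop iteration is one round of the forward pass, loss computation, back propagation and weight update worked through by hand on the preceding slides.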

- 72. National Agriculture and Food Research Organization Implement XOR using PyTorch: Practice using Colab

- 73. Recap: Forward & Back Propagation, for a network of “fully-connected” layers (reference: winter1516_lecture4.pdf (stanford.edu)). Training loop:
  1. Sample a batch of data
  2. Forward propagate it through the graph and get the loss
  3. Back propagate to calculate the gradients
  4. Update the parameters using the gradients

- 74. Neural Network Overview: A big problem (source: ML with Tensorflow (hunkim.github.io))
  - Back propagation just did not work well for normal neural networks with many layers.
  - Other machine learning algorithms were on the rise: SVM, RandomForest, etc.
  - In 1995, “Comparison of Learning Algorithms for Handwritten Digit Recognition” by LeCun et al. found that these newer approaches worked better.

- 75. Neural Network Overview: Breakthrough (source: ML with Tensorflow (hunkim.github.io))
  - In 2006, Hinton, Simon Osindero and Yee-Whye Teh published “A fast learning algorithm for deep belief nets”.
  - In 2007, Yoshua Bengio et al. followed with “Greedy Layer-Wise Training of Deep Networks”.
  - Solutions:
    - Neural networks with many layers really can be trained well if the weights are initialized in a clever way rather than randomly.
    - Deep machine learning methods are more efficient for difficult problems than shallow methods.
    - Rebranding to Deep Nets, Deep Learning.

- 76. Neural Network Overview: Breakthrough. Geoffrey Hinton’s summary of findings up to today (source: A Brief History of Neural Nets and Deep Learning – Skynet Today):
  - Our labeled datasets were thousands of times too small
  - Our computers were millions of times too slow
  - We initialized the weights in a stupid way
  - We used the wrong type of non-linearity

- 77. Neural Network Overview: Better non-linearity. Problem of the sigmoid function (reference: winter1516_lecture5.pdf (stanford.edu)): it has an active region around zero and saturated regions on both sides. In the saturated regions the gradient is close to zero, which causes slow convergence.

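- 78. Neural Network Overview: Better non-linearity. Back propagation applies the chain rule, multiplying local gradients layer by layer (for a multiplication node $z = xy$, see winter1516_lecture4.pdf (stanford.edu)). With saturating activations the product shrinks toward zero as depth grows: the vanishing gradient problem (second neural network winter, 1986 to 2006).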
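
A rough numerical illustration of why the chained gradient vanishes: the sigmoid derivative is at most 0.25, so multiplying it across layers shrinks the signal geometrically, while the ReLU derivative is 1 for positive inputs. This sketch deliberately ignores the weights, which also enter the real chain rule product, and all names in it are made up for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    """Derivative of the sigmoid: s(z) * (1 - s(z)), at most 0.25 (at z = 0)."""
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0


def chained_gradient(local_grad, z, depth):
    """Product of `depth` identical local derivatives (weights ignored)."""
    return local_grad(z) ** depth


for depth in (1, 5, 10, 20):
    s = chained_gradient(sigmoid_grad, 0.0, depth)  # best case for the sigmoid
    r = chained_gradient(relu_grad, 1.0, depth)
    print(f"depth {depth:2d}: sigmoid {s:.3e}   ReLU {r:.1f}")
```

Even in the sigmoid's best case the factor after ten layers is below one millionth, and in the saturated regions it is far smaller still.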

- 79. National Agriculture and Food Research Organization Neural Network Overview: Better non-linearity ReLU: Rectified Linear Unit winter1516_lecture5.pdf (stanford.edu)

- 80. National Agriculture and Food Research Organization Neural Network Overview: Better non-linearity ReLU: Rectified Linear Unit winter1516_lecture5.pdf (stanford.edu)

- 81. National Agriculture and Food Research Organization Neural Network Overview: Better non-linearity Why Sigmoid?. Ever since you have started to learn… | by Swain Subrat Kumar | núcleoML | Medium General: ELU → Leaky ReLU → ReLU → tanh → sigmoid CS231n: ReLU → Leaky ReLU or ELU

- 82. National Agriculture and Food Research Organization Neural Network Overview: Practice using Colab Activation Functions — ML Glossary documentation (ml-cheatsheet.readthedocs.io)

- 83. National Agriculture and Food Research Organization Neural Network Overview: Practice using Colab Activation Functions — ML Glossary documentation (ml-cheatsheet.readthedocs.io)

- 84. National Agriculture and Food Research Organization Neural Network Overview: Practice using Colab scikit-learn: machine learning in Python — scikit-learn 0.23.2 documentation (scikit-learn.org)

- 85. National Agriculture and Food Research Organization Neural Network Overview: Practice using Colab

- 86. National Agriculture and Food Research Organization Recap: Neural Network’s Breakthrough Geoffrey Hinton’s summary of findings up to today • Our labeled datasets were thousands of times too small • Our computers were millions of times too slow • We initialized the weights in a stupid way • We used the wrong type of non-linearity A Brief History of Neural Nets and Deep Learning – Skynet Today

- 87. National Agriculture and Food Research Organization Recap: Better non-linearity Back propagation (chain rule) (×) 𝑧 = 𝑥𝑦 winter1516_lecture4.pdf (stanford.edu) Vanishing Gradient (NN winter2: 1986 - 2006)

- 88. National Agriculture and Food Research Organization Recap: Better non-linearity Why Sigmoid?. Ever since you have started to learn… | by Swain Subrat Kumar | núcleoML | Medium General: ELU → Leaky ReLU → ReLU → tanh → sigmoid CS231n: ReLU → Leaky ReLU or ELU

- 89. National Agriculture and Food Research Organization Recap: Neural Network’s Breakthrough Geoffrey Hinton’s summary of findings up to today • Our labeled datasets were thousands of times too small ✓ • Our computers were millions of times too slow ✓ • We initialized the weights in a stupid way → How to solve the problem?? • We used the wrong type of non-linearity✓ A Brief History of Neural Nets and Deep Learning – Skynet Today

- 90. Weight initialization: Xavier Initialization (Input Layer → Hidden Layer-1 → Hidden Layer-2 → Output Layer)
  - Normal initialization: $W \sim N(0, \sigma_W^2)$ with $\sigma_W = \sqrt{\dfrac{2}{n_{input} + n_{output}}}$, where $n_{input}$ is the number of inputs to the layer and $n_{output}$ is the number of outputs.
  - Uniform initialization: $W \sim U\left(-\sqrt{\dfrac{6}{n_{input} + n_{output}}},\ \sqrt{\dfrac{6}{n_{input} + n_{output}}}\right)$
  - Note: when the numbers of inputs and outputs are nearly equal, initialize with $\sigma_W = \sqrt{1 / n_{input}}$ (normal) or with bounds $\pm\sqrt{3 / n_{input}}$ (uniform).
  - Source: Understanding the difficulty of training deep feedforward neural networks, http://proceedings.mlr.press/v9/glorot10a/glorot10a.pdf

- 91. Weight initialization: Xavier Initialization, worked example (Input Layer → Hidden Layer-1 → Hidden Layer-2 → Output Layer)
  - Normal initialization: $W \sim N(0, \sigma_W^2)$, $\sigma_W = \sqrt{\dfrac{2}{n_{input} + n_{output}}}$. Uniform initialization: $W \sim U(-a, a)$, $a = \sqrt{\dfrac{6}{n_{input} + n_{output}}}$.
  - Hidden Layer-1 ($n_{input} = 4$, $n_{output} = 2$): $\sigma_W = \sqrt{2 / (4 + 2)} \approx 0.58$, so $W \sim N(0, 0.58^2)$; $a = \sqrt{6 / (4 + 2)} = 1$, so $W \sim U(-1, 1)$.
  - Hidden Layer-2 ($n_{input} = 3$, $n_{output} = 4$): $W \sim N(0, 0.53^2)$; $W \sim U(-0.93, 0.93)$.
  - Source: Understanding the difficulty of training deep feedforward neural networks, http://proceedings.mlr.press/v9/glorot10a/glorot10a.pdf
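
The numbers in this worked example can be checked with a short script. This is a sketch using only the standard library; the function names are illustrative, and the layer sizes are the ones given on the slide.

```python
import math

def xavier_normal_std(n_input, n_output):
    # Standard deviation for W ~ N(0, std^2).
    return math.sqrt(2.0 / (n_input + n_output))

def xavier_uniform_bound(n_input, n_output):
    # Bound a for W ~ U(-a, a).
    return math.sqrt(6.0 / (n_input + n_output))

for name, n_in, n_out in [("Hidden Layer-1", 4, 2), ("Hidden Layer-2", 3, 4)]:
    std = xavier_normal_std(n_in, n_out)
    bound = xavier_uniform_bound(n_in, n_out)
    print(name, round(std, 2), round(bound, 2))
```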

- 92. Weight initialization: Xavier Initialization. Xavier Initialization* = Glorot Initialization. Use it with activation functions such as sigmoid and tanh. Source: Understanding the difficulty of training deep feedforward neural networks, http://proceedings.mlr.press/v9/glorot10a/glorot10a.pdf

- 93. National Agriculture and Food Research Organization Weight initialization: Xavier Initialization torch.nn.init — PyTorch 1.7.0 documentation

- 94. National Agriculture and Food Research Organization Weight initialization: Xavier Initialization torch.nn.init — PyTorch 1.7.0 documentation
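
A possible way to apply Xavier initialization through `torch.nn.init` is sketched below. The layer size (4 inputs, 2 outputs) is borrowed from the worked example and the seed is arbitrary; this is not necessarily the code shown on the slide.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

# A fully connected layer with 4 inputs and 2 outputs; weight shape is (2, 4).
layer = nn.Linear(in_features=4, out_features=2)

# Uniform variant: samples from U(-a, a) with a = sqrt(6 / (fan_in + fan_out)).
nn.init.xavier_uniform_(layer.weight)
nn.init.zeros_(layer.bias)

bound = math.sqrt(6.0 / (4 + 2))
print(layer.weight)
print("max |w| =", layer.weight.abs().max().item(), "bound =", bound)

# Normal variant: samples from N(0, std^2) with std = sqrt(2 / (fan_in + fan_out)).
normal_weight = torch.empty(2, 4)
nn.init.xavier_normal_(normal_weight)
```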

- 95. Weight initialization: He Initialization (Input Layer → Hidden Layer-1 → Hidden Layer-2 → Output Layer)
  - Normal initialization: $W \sim N(0, \sigma_W^2)$ with $\sigma_W = \sqrt{\dfrac{2}{n_{input}}}$, where $n_{input}$ is the number of inputs to the layer.
  - Uniform initialization: $W \sim U\left(-\sqrt{\dfrac{6}{n_{input}}},\ \sqrt{\dfrac{6}{n_{input}}}\right)$
  - Source: Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification, [1502.01852] (arxiv.org)

- 96. Weight initialization: He Initialization, worked example (Input Layer → Hidden Layer-1 → Hidden Layer-2 → Output Layer)
  - Normal initialization: $W \sim N(0, \sigma_W^2)$, $\sigma_W = \sqrt{\dfrac{2}{n_{input}}}$. Uniform initialization: $W \sim U(-a, a)$, $a = \sqrt{\dfrac{6}{n_{input}}}$.
  - Hidden Layer-1 ($n_{input} = 4$): $\sigma_W = \sqrt{2 / 4} \approx 0.71$, so $W \sim N(0, 0.71^2)$; $a = \sqrt{6 / 4} \approx 1.22$, so $W \sim U(-1.22, 1.22)$.
  - Hidden Layer-2 ($n_{input} = 3$): $W \sim N(0, 0.82^2)$; $W \sim U(-1.41, 1.41)$.
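
The He values for both hidden layers follow from the same two formulas. A small standard-library sketch (function names are illustrative):

```python
import math

def he_normal_std(n_input):
    # Standard deviation for W ~ N(0, std^2); depends on the fan-in only.
    return math.sqrt(2.0 / n_input)

def he_uniform_bound(n_input):
    # Bound a for W ~ U(-a, a).
    return math.sqrt(6.0 / n_input)

for name, n_in in [("Hidden Layer-1", 4), ("Hidden Layer-2", 3)]:
    print(name, round(he_normal_std(n_in), 2), round(he_uniform_bound(n_in), 2))
```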

- 97. Weight initialization: He Initialization. Use it with ReLU-family activation functions. Source: Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification, [1502.01852] (arxiv.org)

- 98. National Agriculture and Food Research Organization Weight initialization: He Initialization torch.nn.init — PyTorch 1.7.0 documentation

- 99. National Agriculture and Food Research Organization Weight initialization: He Initialization torch.nn.init — PyTorch 1.7.0 documentation
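
In `torch.nn.init`, He initialization is exposed under the name Kaiming. The sketch below assumes a fan-in of 4 and uses a deliberately tall weight matrix (4096 rows, an arbitrary choice) so that the empirical standard deviation can be compared with $\sqrt{2 / n_{input}}$.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

# Weight layout is (out_features, in_features), so fan_in = 4 here.
normal_weight = torch.empty(4096, 4)
nn.init.kaiming_normal_(normal_weight, mode="fan_in", nonlinearity="relu")
expected_std = math.sqrt(2.0 / 4)
empirical_std = normal_weight.std().item()
print("expected std:", expected_std, "empirical std:", empirical_std)

# Uniform variant: U(-a, a) with a = sqrt(6 / fan_in).
uniform_weight = torch.empty(4096, 4)
nn.init.kaiming_uniform_(uniform_weight, mode="fan_in", nonlinearity="relu")
bound = math.sqrt(6.0 / 4)
print("bound:", bound, "max |w|:", uniform_weight.abs().max().item())
```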

- 100. Weight initialization: What’s different? (Xavier vs. He)
  - He: $W \sim N(0, \sigma_W^2)$, $\sigma_W = \sqrt{\dfrac{2}{n_{input}}}$
  - Xavier: $W \sim N(0, \sigma_W^2)$, $\sigma_W = \sqrt{\dfrac{2}{n_{input} + n_{output}}}$

- 101. National Agriculture and Food Research Organization Weight initialization: What’s different? (Xavier VS He)

- 102. National Agriculture and Food Research Organization Weight initialization: What’s different? (Xavier VS He) machine learning - Xavier and he_normal initialization difference - Stack Overflow

- 103. National Agriculture and Food Research Organization Weight initialization: Proper initialization.. winter1516_lecture5.pdf (stanford.edu)

- 104. Recap: Weight initialization (Input Layer → Hidden Layer-1 → Hidden Layer-2 → Output Layer)
  - Xavier initialization, normal: $W \sim N(0, \sigma_W^2)$, $\sigma_W = \sqrt{\dfrac{2}{n_{input} + n_{output}}}$; uniform: $W \sim U\left(-\sqrt{\dfrac{6}{n_{input} + n_{output}}},\ \sqrt{\dfrac{6}{n_{input} + n_{output}}}\right)$
  - He initialization, normal: $W \sim N(0, \sigma_W^2)$, $\sigma_W = \sqrt{\dfrac{2}{n_{input}}}$; uniform: $W \sim U\left(-\sqrt{\dfrac{6}{n_{input}}},\ \sqrt{\dfrac{6}{n_{input}}}\right)$

- 105. National Agriculture and Food Research Organization Convolutional Neural Network (CNN) Background Local Receptive Field http://cs231n.stanford.edu/slides/2016/winter1516_lecture7.pdf

- 106. National Agriculture and Food Research Organization Convolutional Neural Network (CNN) http://cs231n.stanford.edu/slides/2016/winter1516_lecture7.pdf

- 107. Convolutional Neural Network (CNN). Top: a regular 3-layer neural network. Bottom: a ConvNet arranges its neurons in three dimensions (width, height, depth), as visualized in one of the layers. Every layer of a ConvNet transforms the 3D input volume to a 3D output volume of neuron activations. In this example, the red input layer holds the image, so its width and height would be the dimensions of the image, and the depth would be 3 (Red, Green, Blue channels). https://cs231n.github.io/convolutional-networks/

- 108. Convolutional Neural Network (CNN): Fully connected layer vs. convolutional layer
  - Fully connected layer: the raw image [[1, 2, 3], [4, 5, 6], [7, 8, 9]] is flattened into a vector.
  - Convolutional layer: a filter (= kernel = window) [[1, 2], [3, 4]] slides over the raw image (sliding window) and produces the feature map (= activation map) [[37, 47], [67, 77]]. For the top-left position: (1*1) + (2*2) + (4*3) + (5*4) = 37.
  - Sources: https://yjjo.tistory.com/8; Intuitively Understanding Convolutions for Deep Learning | by Irhum Shafkat | Towards Data Science
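
The sliding-window computation on this slide can be reproduced in plain Python. The sketch below uses stride 1 and no padding, with the slide's 3 × 3 image and 2 × 2 filter; the function name is illustrative.

```python
def slide_filter(image, kernel):
    # Slide the kernel over the image and sum the element-wise products.
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map

image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 2], [3, 4]]
feature_map = slide_filter(image, kernel)
print(feature_map)  # [[37, 47], [67, 77]]
```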

- 109. Convolutional Neural Network (CNN): Convolution vs. Cross-Correlation. Definition of convolution: $(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau$. Sources: https://yjjo.tistory.com/8, https://en.wikipedia.org/wiki/Cross-correlation, Convolution vs. Cross-Correlation – Glass Box (glassboxmedicine.com)
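
The difference between the two operations is only whether the kernel is flipped, which a discrete 1D sketch makes visible. The signal and kernel values below are made up for illustration.

```python
def cross_correlate(signal, kernel):
    # Slide the kernel as-is and sum the products at each position.
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def convolve(signal, kernel):
    # Convolution is cross-correlation with the kernel flipped.
    return cross_correlate(signal, kernel[::-1])

signal = [1, 2, 3, 4]
kernel = [1, 0, -1]
print(cross_correlate(signal, kernel))  # [-2, -2]
print(convolve(signal, kernel))         # [2, 2]
```

For a symmetric kernel the two results coincide. Because the filter values in a CNN are learned, the flip makes no practical difference, and the "convolution" layers in PyTorch compute the cross-correlation form.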

- 110. Convolutional Neural Network (CNN): 2D Convolution: The Operation (Standard). Raw image = input features = 5 × 5 = 25; output features = 3 × 3 = 9. $\text{Output size} = \dfrac{N - F}{\text{stride}} + 1$, where N = input size and F = filter size. With N = 5, F = 3, stride = 1 ➞ output size = (5 - 3) / 1 + 1 = 3. Source: Intuitively Understanding Convolutions for Deep Learning | by Irhum Shafkat | Towards Data Science

- 111. Convolutional Neural Network (CNN): 2D Convolution: The Operation (padding = 1, stride = 2). $\text{Output size} = \dfrac{N - F}{\text{stride}} + 1$, where N = input size (including padding) and F = filter size. With N = 7 (raw: 5 × 5, padding: 1), F = 3, stride = 2 ➞ output size = (7 - 3) / 2 + 1 = 3. https://github.com/vdumoulin/conv_arithmetic/blob/master/gif/padding_strides.gif https://yjjo.tistory.com/8
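
The output-size formula can be wrapped in a small helper. In this sketch the padding is passed separately and added to both sides of the input, which is equivalent to the slide's use of the padded size N = 7; floor division is an assumption for cases where the stride does not divide evenly.

```python
def conv_output_size(n, f, stride=1, padding=0):
    # n: raw input size, f: filter size; padding is added on both sides.
    padded = n + 2 * padding
    return (padded - f) // stride + 1

print(conv_output_size(5, 3))                       # 3 (standard case)
print(conv_output_size(5, 3, stride=2, padding=1))  # 3 (padding = 1, stride = 2)
```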

- 112. National Agriculture and Food Research Organization Convolutional Neural Network (CNN) Multi-channel Convolution: The Operation Intuitively Understanding Convolutions for Deep Learning | by Irhum Shafkat | Towards Data Science http://taewan.kim/post/cnn/

- 113. National Agriculture and Food Research Organization Convolutional Neural Network (CNN) https://cs231n.github.io/convolutional-networks/

- 114. Convolutional Neural Network (CNN): CNN input/output parameter calculation. Number of learnable parameters per layer = input channels × filter W × filter H × output channels. Example 1: Convolutional Layer1 = 1 × 4 × 4 × 20 = 320. Example 2: Fully connected layer = 160 × 100 = 16,000 (printed as 160,000 on the slide). http://taewan.kim/post/cnn/
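
The per-layer parameter formula is easy to turn into helper functions. This is a sketch with illustrative names; the optional bias term is an addition that the slide's formula leaves out.

```python
def conv_weights(in_channels, filter_w, filter_h, out_channels, bias=False):
    # Weights of a convolutional layer; optionally one bias per output channel.
    count = in_channels * filter_w * filter_h * out_channels
    return count + (out_channels if bias else 0)

def fc_weights(n_in, n_out, bias=False):
    # Weights of a fully connected layer; optionally one bias per output node.
    return n_in * n_out + (n_out if bias else 0)

print(conv_weights(1, 4, 4, 20))  # 320
print(fc_weights(160, 100))       # 16000
```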

- 115. Convolutional Neural Network (CNN): FC neural network input/output parameter calculation (table columns: Layer, Input Node, Output Node, Num. of parameters, with a SUM row). Number of learnable parameters per layer = input channels × filter W × filter H × output channels. CNN vs. FC neural network (number of parameters): CNN (208,320) vs. FC (1,055,400), so the CNN is built from fewer parameters. http://taewan.kim/post/cnn/

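- 116. Convolutional Neural Network (CNN): Practice using Colab. LeNet (LeNet-5 using MNIST dataset)

  | Layer | Input channels | Filter | Output channels | Stride | Pooling | Activation function | Input shape | Output shape | Num. of parameters |
  |---|---|---|---|---|---|---|---|---|---|
  | Conv1 | 1 | (5, 5) | 6 | 1 | X | ReLU | (28, 28, 1) | (28, 28, 6) | 150 + 6 |
  | Avg Pooling1 | 6 | X | 6 | 2 | (2, 2) | X | (28, 28, 6) | (14, 14, 6) | 0 |
  | Conv2 | 6 | (5, 5) | 16 | 1 | X | ReLU | (14, 14, 6) | (10, 10, 16) | 2400 + 16 |
  | Avg Pooling2 | 16 | X | 16 | 2 | (2, 2) | X | (10, 10, 16) | (5, 5, 16) | 0 |
  | FC1 (after flatten) | X | X | X | X | X | ReLU | (5 × 5 × 16, 1) | (120, 1) | 30720 + 120 |
  | FC2 | X | X | X | X | X | ReLU | (120, 1) | (84, 1) | 10080 + 84 |
  | FC3 | X | X | X | X | X | ReLU | (84, 1) | (10, 1) | 840 + 10 |
  | SUM | | | | | | | | | 44,426 |

  Input size in the paper: 32 × 32. Note that 30720 = 4 × 4 × 16 × 120, so the parameter counts and the 44,426 total correspond to a 28 × 28 input convolved without padding (28 → 24 → 12 → 8 → 4), whereas the 5 × 5 × 16 flatten listed in the shape columns matches the paper's 32 × 32 input; with a 5 × 5 × 16 flatten, FC1 would hold 48000 + 120 parameters.

  LeCun, Yann, et al. "Gradient-based learning applied to document recognition." Proceedings of the IEEE 86.11 (1998): 2278-2324.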

- 117. Convolutional Neural Network (CNN): Practice using Colab. MNIST dataset: https://pytorch.org/docs/stable/torchvision/datasets.html#mnist • The MNIST database (Modified National Institute of Standards and Technology database) is a large database of handwritten digits that is commonly used for training various image processing systems. • The black and white images from NIST were normalized to fit into a 28x28 pixel bounding box and anti-aliased, which introduced grayscale levels. • The MNIST database contains 60,000 training images and 10,000 testing images. https://en.wikipedia.org/wiki/MNIST_database

- 118. National Agriculture and Food Research Organization Convolutional Neural Network (CNN): Practice using Colab mnist mean: 0.13066047430038452 mnist_std: 0.30810779333114624 Data processing (MNIST)

- 119. National Agriculture and Food Research Organization Data processing (MNIST) Convolutional Neural Network (CNN): Practice using Colab

- 120. National Agriculture and Food Research Organization Convolutional Neural Network (CNN): Practice using Colab Defining the model(LeNet) SUM: 44,426
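
One LeNet-style definition that reproduces the 44,426 total is sketched below. It assumes 5 × 5 convolutions without padding on 28 × 28 MNIST images, which gives a 4 × 4 × 16 = 256 flatten; the slide's own code is not visible in this transcript, so treat the layer arguments as assumptions.

```python
import torch
import torch.nn as nn

# LeNet-style network for 1 x 28 x 28 inputs (assumed: no padding).
lenet = nn.Sequential(
    nn.Conv2d(1, 6, kernel_size=5),    # 28 -> 24, 150 + 6 parameters
    nn.ReLU(),
    nn.AvgPool2d(kernel_size=2, stride=2),  # 24 -> 12
    nn.Conv2d(6, 16, kernel_size=5),   # 12 -> 8, 2400 + 16 parameters
    nn.ReLU(),
    nn.AvgPool2d(kernel_size=2, stride=2),  # 8 -> 4
    nn.Flatten(),                      # 16 * 4 * 4 = 256 features
    nn.Linear(256, 120),               # 30720 + 120 parameters
    nn.ReLU(),
    nn.Linear(120, 84),                # 10080 + 84 parameters
    nn.ReLU(),
    nn.Linear(84, 10),                 # 840 + 10 parameters
)

n_params = sum(p.numel() for p in lenet.parameters())
logits = lenet(torch.zeros(1, 1, 28, 28))
print(n_params, tuple(logits.shape))
```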

- 121. National Agriculture and Food Research Organization Convolutional Neural Network (CNN): Practice using Colab Training the model(LeNet)

- 122. National Agriculture and Food Research Organization Convolutional Neural Network (CNN): Practice using Colab Test the model(LeNet)

- 123. National Agriculture and Food Research Organization Convolutional Neural Network (CNN): Practice using Colab Test the model(LeNet)

- 124. National Agriculture and Food Research Organization Convolutional Neural Network (CNN) Top 10 CNN Architectures Every Machine Learning Engineer Should Know, Top 10 CNN Architectures Every Machine Learning Engineer Should Know | by Trung Anh Dang | Jan, 2021 | Towards Data Science http://cs231n.stanford.edu/slides/2020/lecture_9.pdf

- 125. Recap: Convolutional Neural Network
  - Number of learnable parameters per layer = input channels × filter W × filter H × output channels
  - $\text{Output size} = \dfrac{N - F}{\text{stride}} + 1$, where N = input size (including padding) and F = filter size. With N = 7 (raw: 5 × 5, padding: 1), F = 3, stride = 2 ➞ output size = (7 - 3) / 2 + 1 = 3. https://github.com/vdumoulin/conv_arithmetic/blob/master/gif/padding_strides.gif
  - LeNet-5 (params: 44,426): LeCun, Yann, et al. "Gradient-based learning applied to document recognition." Proceedings of the IEEE 86.11 (1998): 2278-2324.

- 126. National Agriculture and Food Research Organization CNN: AlexNet ImageNet Classification with Deep Convolutional Neural Networks http://cs231n.stanford.edu/slides/2020/lecture_9.pdf https://papers.nips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf Main Contributions • ReLU • LRN • multi-gpu • overlapping pooling • reduce overfitting ➢ data augmentation ➢ dropout

- 127. CNN: AlexNet https://starpentagon.net/analytics/imagenet_ilsvrc2012_dataset/

- 128. National Agriculture and Food Research Organization CNN: AlexNet https://papers.nips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

- 129. CNN: AlexNet (5 convolutional layers, 3 FC layers, 1000-way softmax)

  | Operation | Filter | Depth | Stride | Padding | Output size | Number of parameters | Forward computation |
  |---|---|---|---|---|---|---|---|
  | Input | | | | | 3 × 227 × 227 | | |
  | Conv1 + ReLU | 11 × 11 | 96 | 4 | | 96 × 55 × 55 | (11 × 11 × 3 + 1) × 96 = 34,944 | (11 × 11 × 3 + 1) × 96 × 55 × 55 = 105,705,600 |
  | Max Pooling | 3 × 3 | | 2 | | 96 × 27 × 27 | | |
  | Norm | | | | | | | |
  | Conv2 + ReLU | 5 × 5 | 256 | 1 | 2 | 256 × 27 × 27 | (5 × 5 × 96 + 1) × 256 = 614,656 | (5 × 5 × 96 + 1) × 256 × 27 × 27 = 448,084,224 |
  | Max Pooling | 3 × 3 | | 2 | | 256 × 13 × 13 | | |
  | Norm | | | | | | | |
  | Conv3 + ReLU | 3 × 3 | 384 | 1 | 1 | 384 × 13 × 13 | (3 × 3 × 256 + 1) × 384 = 885,120 | (3 × 3 × 256 + 1) × 384 × 13 × 13 = 149,585,280 |
  | Conv4 + ReLU | 3 × 3 | 384 | 1 | 1 | 384 × 13 × 13 | (3 × 3 × 384 + 1) × 384 = 1,327,488 | (3 × 3 × 384 + 1) × 384 × 13 × 13 = 224,345,472 |
  | Conv5 + ReLU | 3 × 3 | 256 | 1 | 1 | 256 × 13 × 13 | (3 × 3 × 384 + 1) × 256 = 884,992 | (3 × 3 × 384 + 1) × 256 × 13 × 13 = 149,563,648 |
  | Max Pooling | 3 × 3 | | 2 | | 256 × 6 × 6 | | |
  | Dropout (rate 0.5) | | | | | | | |
  | FC6 + ReLU | | | | | 4096 | 256 × 6 × 6 × 4096 = 37,748,736 | 256 × 6 × 6 × 4096 = 37,748,736 |
  | Dropout (rate 0.5) | | | | | | | |
  | FC7 + ReLU | | | | | 4096 | 4096 × 4096 = 16,777,216 | 4096 × 4096 = 16,777,216 |
  | FC8 + ReLU | | | | | 1000 classes | 4096 × 1000 = 4,096,000 | 4096 × 1000 = 4,096,000 |
  | Overall | | | | | | 62,369,152 = 62.3 million | 1,135,906,176 = 1.1 billion |
  | Conv vs. FC | | | | | | Conv: 3.7 million (6%), FC: 58.6 million (94%) | Conv: 1.08 billion (95%), FC: 58.6 million (5%) |

  Number of learnable parameters per layer = input channels × filter W × filter H × output channels. $\text{Output size} = \dfrac{N - F}{\text{stride}} + 1$.

  Sources: https://medium.com/@smallfishbigsea/a-walk-through-of-alexnet-6cbd137a5637 https://wolfy.tistory.com/241
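
The totals in this table can be recomputed from the layer specifications. The sketch below follows the table's own convention: convolutional layers include one bias per filter, FC layers are counted without biases, and the forward computation of a convolutional layer is its parameter count times the number of output positions.

```python
# (name, filter size, input channels, output channels, output side length)
conv_layers = [
    ("Conv1", 11, 3, 96, 55),
    ("Conv2", 5, 96, 256, 27),
    ("Conv3", 3, 256, 384, 13),
    ("Conv4", 3, 384, 384, 13),
    ("Conv5", 3, 384, 256, 13),
]
# (name, input nodes, output nodes)
fc_layers = [("FC6", 256 * 6 * 6, 4096), ("FC7", 4096, 4096), ("FC8", 4096, 1000)]

conv_params = sum((f * f * cin + 1) * cout for _, f, cin, cout, _ in conv_layers)
conv_forward = sum(
    (f * f * cin + 1) * cout * side * side for _, f, cin, cout, side in conv_layers
)
fc_params = sum(n_in * n_out for _, n_in, n_out in fc_layers)

total_params = conv_params + fc_params
total_forward = conv_forward + fc_params
print(total_params, total_forward)
print(round(100 * conv_params / total_params), "% of parameters are in conv layers")
print(round(100 * conv_forward / total_forward), "% of computation is in conv layers")
```

The convolutional layers hold few of the parameters but account for most of the computation, which is the point of the Conv vs. FC row.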

- 130. National Agriculture and Food Research Organization CNN: AlexNet Main Contributions • ReLU • LRN • multi-gpu • overlapping pooling • reduce overfitting ➢ data augmentation ➢ dropout

- 131. CNN: AlexNet Main Contributions • ReLU • LRN • multi-gpu • overlapping pooling • reduce overfitting ➢ data augmentation ➢ dropout. Hermann grid (lateral inhibition). Nowadays the mainstream normalization method used in CNNs is Batch Normalization, not LRN.

- 132. National Agriculture and Food Research Organization CNN: AlexNet Main Contributions • ReLU • LRN • multi-gpu • overlapping pooling • reduce overfitting ➢ data augmentation ➢ dropout https://blueskyvision.tistory.com/421

- 133. National Agriculture and Food Research Organization CNN: AlexNet https://blueskyvision.tistory.com/421 Main Contributions • ReLU • LRN • multi-gpu • overlapping pooling • reduce overfitting ➢ data augmentation ➢ dropout

- 134. National Agriculture and Food Research Organization https://cs231n.github.io/neural-networks-2/ CNN: AlexNet www.cs.toronto.edu/~rsalakhu/papers/srivastava14a.pdf Main Contributions • ReLU • LRN • multi-gpu • overlapping pooling • reduce overfitting ➢ data augmentation ➢ dropout https://pytorch.org/docs/stable/generated/torch.nn.Dropout.html
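
The dropout behaviour described in the `torch.nn.Dropout` documentation (zero each element with probability p during training and scale the survivors by 1 / (1 - p)) can be sketched in plain Python. The function below is an illustration with made-up names, not the library implementation.

```python
import random

def dropout(values, p=0.5, training=True, rng=None):
    # In evaluation mode, or with p = 0, the input passes through unchanged.
    if not training or p == 0.0:
        return list(values)
    rng = rng or random.Random()
    keep = 1.0 - p
    # Keep each value with probability (1 - p) and rescale it by 1 / (1 - p),
    # so the expected value of every element stays the same.
    return [v / keep if rng.random() < keep else 0.0 for v in values]

activations = [1.0] * 10
dropped = dropout(activations, p=0.5, rng=random.Random(0))
print(dropped)
print(dropout(activations, training=False))
```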

- 135. National Agriculture and Food Research Organization AlexNet: Practice using Colab Web site: https://en.d2l.ai/index.html Pdf download: https://d2l.ai/d2l-en.pdf

- 136. National Agriculture and Food Research Organization AlexNet: Practice using Colab Data Processing: Load fashion MNIST dataset https://www.kaggle.com/zalando-research/fashionmnist

- 137. National Agriculture and Food Research Organization AlexNet: Practice using Colab https://colab.research.google.com/github/d2l-ai/d2l-pytorch-colab/blob/master/chapter_convolutional-modern/alexnet.ipynb https://en.d2l.ai/chapter_convolutional-modern/alexnet.html 256 Params: 44,426 Params: 62.3 million

- 138. National Agriculture and Food Research Organization AlexNet: Practice using Colab Training https://github.com/d2l-ai/d2l-en/blob/master/chapter_convolutional-neural-networks/lenet.md Batch size vs epochs https://jerryan.medium.com/batch-size-a15958708a6

- 139. National Agriculture and Food Research Organization History of Convolutional Neural Network (CNN) Top 10 CNN Architectures Every Machine Learning Engineer Should Know, Top 10 CNN Architectures Every Machine Learning Engineer Should Know | by Trung Anh Dang | Jan, 2021 | Towards Data Science http://cs231n.stanford.edu/slides/2020/lecture_9.pdf

- 140. National Agriculture and Food Research Organization Top 10 CNN Architectures Every Machine Learning Engineer Should Know, Top 10 CNN Architectures Every Machine Learning Engineer Should Know | by Trung Anh Dang | Jan, 2021 | Towards Data Science http://cs231n.stanford.edu/slides/2020/lecture_9.pdf VGG(Visual Geometry Group)Net

- 141. VGG (Visual Geometry Group) Net https://arxiv.org/pdf/1409.1556.pdf http://arxiv.org/abs/1409.1556.pdf Contributions: 1. A method for training architectures built from 16-19 layers. 2. 3 × 3 filters applied in all convolutional layers.

- 142. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net https://neurohive.io/en/popular-networks/vgg16/

- 143. VGG (Visual Geometry Group) Net https://medium.com/@msmapark2/vgg16-%EB%85%BC%EB%AC%B8-%EB%A6%AC%EB%B7%B0-very-deep-convolutional-networks-for-large-scale-image-recognition-6f748235242a With BatchNorm

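- 144. VGG (Visual Geometry Group) Net: 7 × 7 vs. 3 × 3 filter. A single 7 × 7 filter reduces a 10 × 10 input to 4 × 4; three stacked 3 × 3 filters reach the same size in three steps: 10 × 10 → 8 × 8 → 6 × 6 → 4 × 4. $\text{Output size} = \dfrac{N - F}{\text{stride}} + 1$. Number of learnable parameters per layer = input channels × filter W × filter H × output channels. Source: VGG16 논문 리뷰 — Very Deep Convolutional Networks for Large-Scale Image Recognition | by 강준영 | Medium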
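
Both formulas on this slide can be combined to compare one 7 × 7 layer with three stacked 3 × 3 layers. In the sketch below the channel count of 64 is an arbitrary illustrative value, every layer is assumed to keep the channel count unchanged, and biases are ignored.

```python
def output_side(n, f, stride=1):
    # Output size = (N - F) / stride + 1
    return (n - f) // stride + 1

def stacked_sizes(n, filters):
    # Feature-map side length after each filter in the stack.
    sizes = [n]
    for f in filters:
        sizes.append(output_side(sizes[-1], f))
    return sizes

def stacked_weights(filters, channels):
    # input channels x filter W x filter H x output channels, summed over layers.
    return sum(channels * f * f * channels for f in filters)

print(stacked_sizes(10, [7]))         # [10, 4]
print(stacked_sizes(10, [3, 3, 3]))   # [10, 8, 6, 4]
print(stacked_weights([7], 64))       # 49 * 64 * 64 = 200704
print(stacked_weights([3, 3, 3], 64)) # 27 * 64 * 64 = 110592
```

The two stacks cover the same 7 × 7 region of the input, but the 3 × 3 stack uses 27 instead of 49 weights per channel pair and applies a non-linearity after each of its three layers.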

- 145. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab Data processing (102 Category Flower Dataset) https://www.robots.ox.ac.uk/~vgg/data/flowers/102/index.html https://www.robots.ox.ac.uk/~vgg/data/flowers/102/categories.html

- 146. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab Data processing (102 Category Flower Dataset) https://www.robots.ox.ac.uk/~vgg/data/flowers/102/index.html

- 147. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab Data processing (102 Category Flower Dataset) https://www.robots.ox.ac.uk/~vgg/data/flowers/102/index.html

- 148. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab Data processing (102 Category Flower Dataset) https://www.robots.ox.ac.uk/~vgg/data/flowers/102/index.html

- 149. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab

- 150. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab

- 151. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab

- 152. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab

- 153. National Agriculture and Food Research Organization VGG(Visual Geometry Group)Net: Practice using Colab

- 154. National Agriculture and Food Research Organization Top 10 CNN Architectures Every Machine Learning Engineer Should Know, Top 10 CNN Architectures Every Machine Learning Engineer Should Know | by Trung Anh Dang | Jan, 2021 | Towards Data Science http://cs231n.stanford.edu/slides/2020/lecture_9.pdf GoogLeNet / InceptionNet

- 155. National Agriculture and Food Research Organization GoogLeNet / InceptionNet https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Szegedy_Going_Deeper_With_2015_CVPR_paper.pdf

- 156. GoogLeNet / InceptionNet: Network in Network. A 1 × 1 convolution is introduced to represent non-linear relationships: Cascaded Cross Channel Parametric Pooling (CCCP). Benefits of the 1 × 1 convolution: 1. Can represent non-linear relationships 2. Dimension reduction. https://arxiv.org/pdf/1312.4400.pdf http://arxiv.org/abs/1312.4400.pdf https://kangbk0120.github.io/articles/2018-01/inception-googlenet-review

- 157. GoogLeNet / InceptionNet: Architectural Details. Inception module used in GoogLeNet. Benefits of the 1 × 1 convolution: 1. Can represent non-linear relationships (together with ReLU) 2. Dimension reduction
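
The dimension-reduction benefit of the 1 × 1 convolution shows up in the weight count. The sketch below compares a direct 5 × 5 convolution with a 1 × 1 reduction followed by the same 5 × 5 convolution; the channel numbers (192 → 16 → 32) are illustrative assumptions and biases are ignored.

```python
def conv_weights(in_channels, kernel_size, out_channels):
    # input channels x filter W x filter H x output channels
    return in_channels * kernel_size * kernel_size * out_channels

in_channels, reduced_channels, out_channels = 192, 16, 32  # illustrative values

# 5 x 5 convolution applied directly to all input channels.
direct = conv_weights(in_channels, 5, out_channels)

# 1 x 1 convolution first shrinks the channel dimension, then the 5 x 5 runs on fewer channels.
with_reduction = (
    conv_weights(in_channels, 1, reduced_channels)
    + conv_weights(reduced_channels, 5, out_channels)
)

print(direct, with_reduction, round(direct / with_reduction, 1))
```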

- 158. National Agriculture and Food Research Organization GoogLeNet / InceptionNet Architectural Details https://deep-learning-study.tistory.com/389

- 159. GoogLeNet / InceptionNet: Architectural Details. Figure legend: Convolution, Pooling, Softmax, Concatenation / Normalize

- 160. GoogLeNet / InceptionNet: Practice using Colab. Data processing (102 Category Flower Dataset) https://www.robots.ox.ac.uk/~vgg/data/flowers/102/index.html https://www.robots.ox.ac.uk/~vgg/data/flowers/102/categories.html This uses the same dataset as the VGG16 example.

- 161. National Agriculture and Food Research Organization GoogLeNet / InceptionNet: Practice using Colab GoogLeNet Code Review (Inception module) https://github.com/pytorch/vision/blob/master/torchvision/models/googlenet.py

- 162. National Agriculture and Food Research Organization GoogLeNet / InceptionNet: Practice using Colab GoogLeNet Code Review (Auxiliary classifier) https://github.com/pytorch/vision/blob/master/torchvision/models/googlenet.py

- 163. National Agriculture and Food Research Organization GoogLeNet / InceptionNet: Practice using Colab GoogLeNet Code Review (Architecture)

- 164. GoogLeNet / InceptionNet: Practice using Colab. ImageNet has 1000 classes → the Flower Dataset requires 102 classes, so the output layer has to be changed accordingly.
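
Switching from 1000 to 102 classes usually means replacing the final fully connected layer. The sketch below shows the pattern on a tiny stand-in network, `TinyBackbone`, which is hypothetical and exists only to keep the example self-contained; the torchvision GoogLeNet and ResNet models likewise expose their last layer as an attribute named `fc`, but the slide's actual code is not visible in this transcript.

```python
import torch
import torch.nn as nn

class TinyBackbone(nn.Module):
    """Hypothetical stand-in for a classifier whose last layer is called `fc`."""

    def __init__(self, num_classes=1000):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.fc = nn.Linear(8, num_classes)

    def forward(self, x):
        return self.fc(self.features(x))

model = TinyBackbone(num_classes=1000)  # ImageNet-style head
# Replace the head with a new layer that has one output per flower category.
model.fc = nn.Linear(model.fc.in_features, 102)

out = model(torch.zeros(2, 3, 32, 32))
print(tuple(out.shape))
```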

- 165. National Agriculture and Food Research Organization GoogLeNet / InceptionNet: Practice using Colab

- 166. National Agriculture and Food Research Organization Top 10 CNN Architectures Every Machine Learning Engineer Should Know, Top 10 CNN Architectures Every Machine Learning Engineer Should Know | by Trung Anh Dang | Jan, 2021 | Towards Data Science http://cs231n.stanford.edu/slides/2020/lecture_9.pdf ResNet He Initialization

- 167. National Agriculture and Food Research Organization ResNet https://openaccess.thecvf.com/content_cvpr_2016/papers/He_Deep_Residual_Learning_CVPR_2016_paper.pdf

- 168. National Agriculture and Food Research Organization ResNet https://axa.biopapyrus.jp/deep-learning/cnn/image-classification/resnet.html Shortcut connection = Skip connection
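
A shortcut (skip) connection adds the block's input to the output of its convolutional path, so the block computes F(x) + x. The following is a simplified sketch of a basic residual block, assuming the input and output have the same number of channels and the same spatial size; it is not a copy of the torchvision implementation.

```python
import torch
import torch.nn as nn

class BasicResidualBlock(nn.Module):
    def __init__(self, channels):
        super().__init__()
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(channels)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(channels)
        self.relu = nn.ReLU()

    def forward(self, x):
        identity = x                                # shortcut path
        out = self.relu(self.bn1(self.conv1(x)))    # residual path F(x)
        out = self.bn2(self.conv2(out))
        return self.relu(out + identity)            # F(x) + x, then ReLU

torch.manual_seed(0)
block = BasicResidualBlock(channels=8)
x = torch.randn(2, 8, 5, 5)
y = block(x)
print(tuple(y.shape))
```

If the residual path outputs zero, the block reduces to ReLU(x), which is why very deep stacks of such blocks remain easy to optimize.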

- 169. National Agriculture and Food Research Organization ResNet https://github.com/pytorch/vision/blob/master/torchvision/models/resnet.py

- 170. National Agriculture and Food Research Organization ResNet https://github.com/pytorch/vision/blob/master/torchvision/models/resnet.py

- 171. National Agriculture and Food Research Organization ResNet

- 172. National Agriculture and Food Research Organization ResNet

- 173. ResNet: Practice using Colab. Data processing (102 Category Flower Dataset) https://www.robots.ox.ac.uk/~vgg/data/flowers/102/index.html https://www.robots.ox.ac.uk/~vgg/data/flowers/102/categories.html This uses the same dataset as the VGG16 and GoogLeNet examples.

- 174. National Agriculture and Food Research Organization ResNet: Practice using Colab https://www.programmersought.com/article/68543552068/ https://github.com/pytorch/vision/blob/master/torchvision/models/resnet.py

- 175. National Agriculture and Food Research Organization ResNet: Practice using Colab

- 176. National Agriculture and Food Research Organization ResNet: Practice using Colab https://github.com/pytorch/vision/blob/master/torchvision/models/resnet.py

- 177. National Agriculture and Food Research Organization ResNet: Practice using Colab https://github.com/pytorch/vision/blob/master/torchvision/models/resnet.py

- 178. National Agriculture and Food Research Organization ResNet: Practice using Colab

- 179. National Agriculture and Food Research Organization ResNet: Practice using Colab