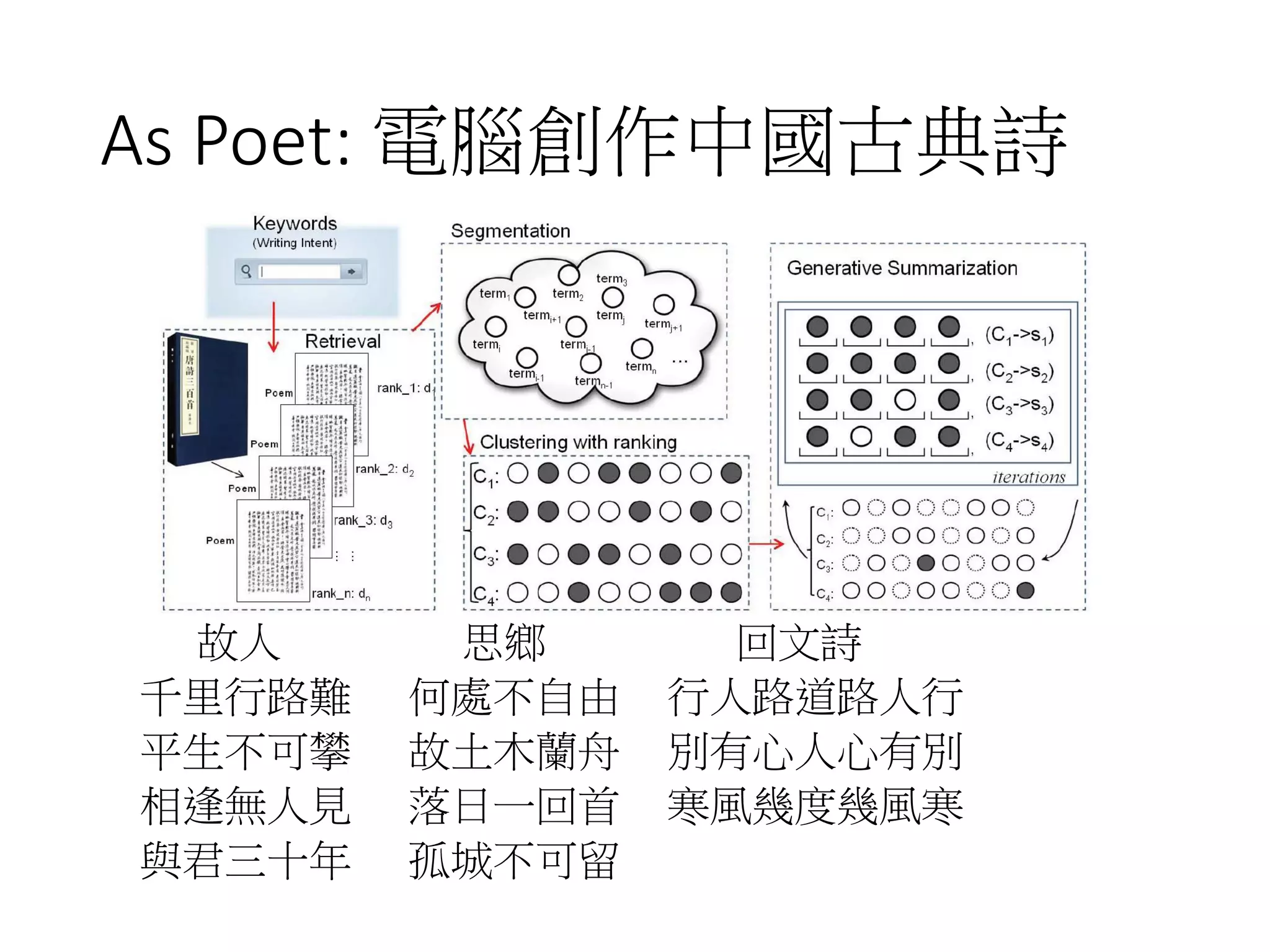

This document presents a comprehensive overview of artificial intelligence (AI), its historical developments, current applications, and future directions, specifically focusing on machine learning and recommendation systems. It discusses the evolution of AI from its theoretical foundations to practical uses across various domains including IoT, natural language processing, and e-commerce. The speaker, Professor Shou-de Lin, highlights significant milestones in AI, competitions that drive research, and potential future breakthroughs in machine discovery and human-computer interaction.

![Semi-supervised Learning](https://image.slidesharecdn.com/md-161124235136/75/DSC-x-TAAI-2016-71-2048.jpg)

Semi-supervised Learning

• Only a small portion of data are annotated (usually due to high annotation cost)

• Leverage the unlabeled data for better performance

[Zhu2008]
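The slide does not name a specific algorithm, so the following is only a minimal sketch of one common way to leverage unlabeled data: self-training with a nearest-centroid classifier on one-dimensional points. The function names (`class_centroids`, `predict`, `self_train`), the `margin` confidence rule, and the toy data are all assumptions made for illustration and do not come from the tutorial.

```python
def class_centroids(points, labels):
    """Mean of the 1-D points belonging to each class label."""
    sums, counts = {}, {}
    for p, lab in zip(points, labels):
        sums[lab] = sums.get(lab, 0.0) + p
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: sums[lab] / counts[lab] for lab in sums}


def predict(cents, p):
    """Label of the centroid closest to point p."""
    return min(cents, key=lambda lab: abs(p - cents[lab]))


def self_train(labeled, unlabeled, margin=1.0, max_rounds=10):
    """Self-training (an assumed technique, not named on the slide).

    labeled:   list of (x, label) pairs, the small annotated portion
    unlabeled: list of x values without labels
    A point is pseudo-labeled only when its nearest centroid is closer
    than the runner-up by at least `margin`.
    """
    points = [x for x, _ in labeled]
    labels = [lab for _, lab in labeled]
    pool = list(unlabeled)
    for _ in range(max_rounds):
        cents = class_centroids(points, labels)
        remaining = []
        for p in pool:
            dists = sorted(abs(p - c) for c in cents.values())
            if len(dists) < 2 or dists[1] - dists[0] >= margin:
                points.append(p)
                labels.append(predict(cents, p))
            else:
                remaining.append(p)
        if len(remaining) == len(pool):  # nothing new was pseudo-labeled
            break
        pool = remaining
    return class_centroids(points, labels)


# Toy data (hypothetical): two annotated points, six unlabeled ones.
labeled = [(0.0, "a"), (10.0, "b")]
unlabeled = [2.0, 3.0, 4.0, 9.0, 10.0, 11.0]

baseline = class_centroids([x for x, _ in labeled], [lab for _, lab in labeled])
improved = self_train(labeled, unlabeled)

print(predict(baseline, 5.5))  # decision using labeled data only
print(predict(improved, 5.5))  # decision after using the unlabeled data
```

In this toy setting the unlabeled points pull the class "a" centroid toward where the data actually lies, so the decision for the query point 5.5 changes. Whether that is an improvement on real data depends on how well the pseudo-labels match the true classes.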

![Transfer Learning](https://image.slidesharecdn.com/md-161124235136/75/DSC-x-TAAI-2016-82-2048.jpg)

Transfer Learning

• Example: the knowledge for recognizing an airplane may be helpful for recognizing a bird.

• Improving a learning task via incorporating knowledge from learning tasks in other domains with different feature space and data distribution.

• Reduces expensive data-labeling efforts

• Approach categories:

(1) Instance-transfer

(2) Feature-representation-transfer

(3) Parameter-transfer

(4) Relational-knowledge-transfer

[Pan & Yang 2010]

2016/11/24
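As a rough illustration of category (3), parameter-transfer, the sketch below fits a one-dimensional line on a well-labeled source task and reuses its slope on a target task that has a single labeled example. This is a deliberately simplified toy: both tasks share the same feature space, and the helper names (`fit_line`, `transfer_intercept`) and the data are hypothetical, not taken from the tutorial or from [Pan & Yang 2010].

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = w * x + b in one dimension."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, mean_y - w * mean_x


def transfer_intercept(w_source, xs, ys):
    """Keep the source slope fixed and re-estimate only the intercept
    from the (few) labeled target examples."""
    return sum(y - w_source * x for x, y in zip(xs, ys)) / len(xs)


# Source task (hypothetical): plenty of labeled data, y = 2x + 1.
source_x = [0.0, 1.0, 2.0, 3.0, 4.0]
source_y = [1.0, 3.0, 5.0, 7.0, 9.0]
w_source, b_source = fit_line(source_x, source_y)

# Target task (hypothetical): a single labeled example, too few to fit a line alone.
target_x = [1.0]
target_y = [5.0]
b_target = transfer_intercept(w_source, target_x, target_y)


def predict_target(x):
    """Target prediction using the transferred slope and the new intercept."""
    return w_source * x + b_target


print(w_source, b_source)   # parameters learned on the source task
print(b_target)             # intercept re-estimated on the target task
print(predict_target(4.0))  # prediction for an unseen target input
```

One labeled point cannot determine both a slope and an intercept, so borrowing the slope from the source task is what makes the target model usable here. That is the sense in which transfer reduces labeling effort in this toy example.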

![Ranking of Recommendations](https://image.slidesharecdn.com/md-161124235136/75/DSC-x-TAAI-2016-176-2048.jpg)

Ranking of Recommendations

• Discounted cumulative gain (DCG)

$$\mathrm{DCG} = \frac{1}{|U|} \sum_{u_i \in U} \sum_{j=1}^{L} \frac{R_{i,j}}{\max\{1, \log_2 j\}}$$

• There are $L$ items in the ranked list

• $U$: User set

• Spearman’s rank correlation: For $n$ items

$$\rho = 1 - \frac{6 \sum_{i=1}^{n} (x_i - y_i)^2}{n^3 - n}$$

• $1 \le x_i \le n$: Predicted rank of item $i$

• $1 \le y_i \le n$: True rank of item $i$

• Kendall’s tau: For all $\binom{n}{2}$ pairs of items $(i, j)$

$$\tau = \frac{c - d}{\binom{n}{2}}, \text{ in range } [-1, 1]$$

• There are $c$ concordant pairs

• $x_i > x_j,\ y_i > y_j$ or $x_i < x_j,\ y_i < y_j$

• There are $d$ discordant pairs

• $x_i > x_j,\ y_i < y_j$ or $x_i < x_j,\ y_i > y_j$

TAAI 16 Tutorial, Shou-de Lin
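The three ranking measures on this slide can be sketched in a few lines of Python. This is an illustrative reading of the formulas rather than the tutorial's own code: it assumes $R_{i,j}$ is the relevance score of the item at position $j$ of user $i$'s ranked list (the slide does not define it), and the function names and sample numbers are hypothetical.

```python
import math
from itertools import combinations


def dcg(relevance_lists):
    """Mean DCG over users.

    relevance_lists[i][j - 1] is taken to be R_{i,j}: the relevance of the
    item at rank j in user i's list (an assumption about the notation).
    """
    total = 0.0
    for rels in relevance_lists:
        for j, r in enumerate(rels, start=1):
            total += r / max(1.0, math.log2(j))
    return total / len(relevance_lists)


def spearman_rho(x, y):
    """Spearman's rank correlation for predicted ranks x and true ranks y."""
    n = len(x)
    squared_diffs = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return 1 - 6 * squared_diffs / (n ** 3 - n)


def kendall_tau(x, y):
    """Kendall's tau: (concordant - discordant) / number of item pairs.

    Tied pairs count as neither concordant nor discordant.
    """
    n = len(x)
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):
        sign = (x[i] - x[j]) * (y[i] - y[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)


# Hypothetical data: relevance by rank position for two users (L = 4).
print(dcg([[3, 2, 0, 1], [1, 0, 0, 0]]))  # 3.25

# Hypothetical predicted vs. true ranks of four items (items 2 and 3 swapped).
predicted = [1, 2, 3, 4]
true = [1, 3, 2, 4]
print(spearman_rho(predicted, true))
print(kendall_tau(predicted, true))
```

With one adjacent swap among four items, five of the six pairs are concordant and one is discordant, so both correlations stay well above zero but below 1.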