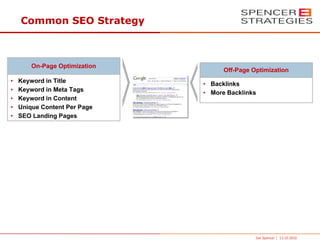

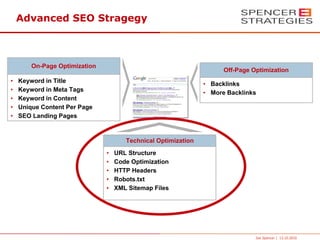

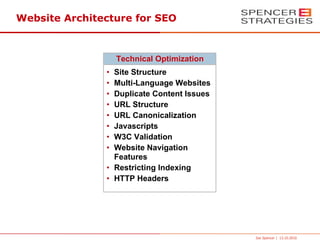

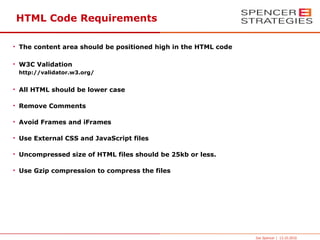

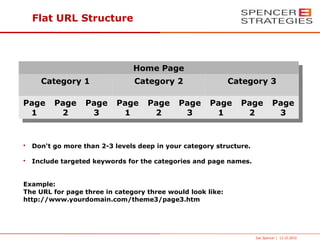

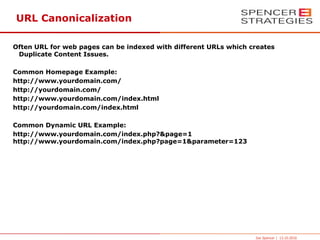

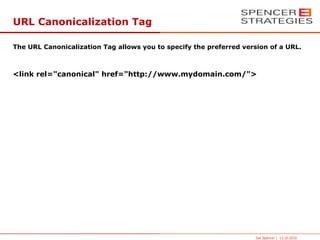

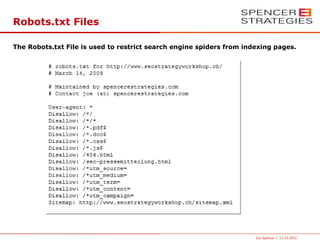

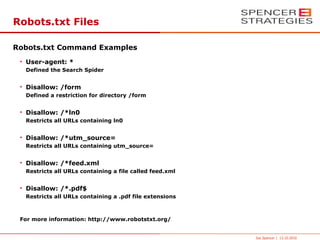

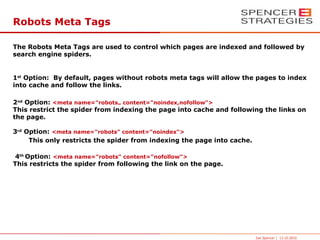

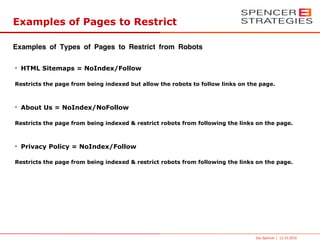

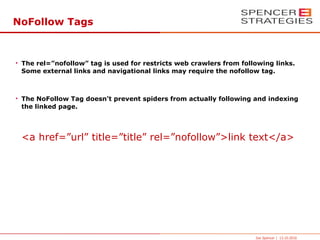

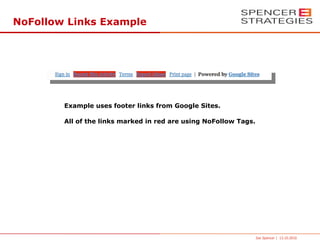

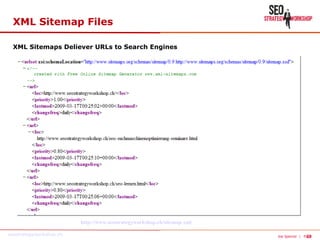

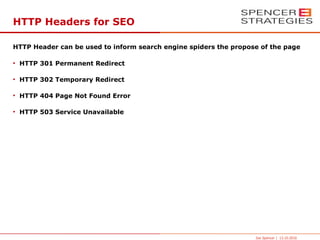

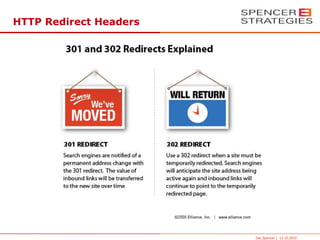

This presentation outlines an SEO strategy covering on-page and off-page optimization techniques, including keyword usage and backlinks. It also covers technical optimization: URL structure, canonicalization, and controlling web crawlers with robots.txt and meta tags. Finally, it stresses clean HTML, CSS, and JavaScript coding practices as a way to improve website performance and search engine visibility.
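The crawler-control point can be illustrated with a small sketch. It uses Python's standard-library `urllib.robotparser` to check which URLs a given crawler may fetch under a set of robots.txt rules. The rules, the `example.com` URLs, the `BadBot` user agent, and the helper `build_parser` are hypothetical illustrations and are not taken from the slides.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, for illustration only (not from the presentation).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Allow: /

User-agent: BadBot
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Hypothetical helper: parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    checks = [
        ("Googlebot", "https://example.com/products/shoes"),  # falls through to "Allow: /"
        ("Googlebot", "https://example.com/admin/login"),     # blocked by "Disallow: /admin/"
        ("BadBot", "https://example.com/products/shoes"),     # BadBot is blocked everywhere
    ]
    for agent, url in checks:
        print(agent, url, rp.can_fetch(agent, url))
```

One design note: `urllib.robotparser` applies the first matching rule in file order, whereas crawlers that follow RFC 9309 (such as Googlebot) apply the longest matching path. Listing the specific `Disallow` lines before the broad `Allow: /` keeps both interpretations consistent for these rules.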