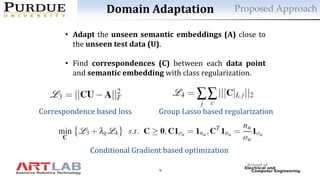

This document proposes a three-step approach to zero-shot image recognition that combines relational matching, domain adaptation, and calibration. Relational matching finds structural correspondences between semantic embeddings and visual features. Domain adaptation adjusts the semantic embeddings of unseen classes to the domain of the test data. Calibration reduces the bias towards seen classes. Experiments on four datasets show improved zero-shot and generalized zero-shot classification performance compared to previous methods, with domain adaptation providing the largest gain. An analysis of hubness and of convergence properties is also presented.
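Hubness is the tendency of a few points in a high-dimensional space to be the nearest neighbour of many queries. The slides do not state which hubness measure is used; a common choice, assumed here, is the skewness of the k-occurrence distribution N_k, where N_k(x) counts how often point x appears among the k nearest neighbours of the queries. In a ZSL setting the queries would typically be test samples and the points the class embeddings. A minimal NumPy sketch (the function name `hubness_skewness` is hypothetical):

```python
import numpy as np

def hubness_skewness(queries: np.ndarray, points: np.ndarray, k: int = 1) -> float:
    """Skewness of the k-occurrence distribution N_k (assumed hubness measure).

    A large positive value means a few "hub" points are the nearest
    neighbour of many queries.
    """
    # Euclidean distances: rows = queries, columns = points.
    dists = np.linalg.norm(queries[:, None, :] - points[None, :, :], axis=-1)
    # Indices of the k closest points for every query.
    knn = np.argsort(dists, axis=1)[:, :k]
    # N_k: how many times each point occurs in some query's k-NN list.
    n_k = np.bincount(knn.ravel(), minlength=points.shape[0]).astype(float)
    std = n_k.std()
    if std == 0:
        return 0.0  # every point is hit equally often: no hubness
    return float(np.mean((n_k - n_k.mean()) ** 3) / std ** 3)

points = np.array([[0.0, 0.0], [10.0, 10.0], [20.0, 20.0], [30.0, 30.0]])
crowded = np.array([[0.1, 0.0], [0.0, 0.2], [0.3, 0.1], [0.2, 0.2]])
print(hubness_skewness(crowded, points))       # all queries hit point 0
print(hubness_skewness(points + 0.1, points))  # each query hits its own point
```

Lower skewness after a method is applied would indicate that predictions are spread more evenly over the classes instead of collapsing onto a few hubs.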

![Slide 4: Introduction, Related Work of ZSL](https://image.slidesharecdn.com/v3-190728151507/85/Zero-shot-Image-Recognition-Using-Relational-Matching-Adaptation-and-Calibration-4-320.jpg)

Introduction: Related Work of Zero-Shot Learning (ZSL)

• Embedding methods (relate features and semantics): Linear embedding [Bernardino et al. ICML’15]; Deep embedding [Zhang et al. CVPR’17]

• Transductive approaches (use unlabeled test data): Multiview [Fu et al. TPAMI’15]; Dictionary learning [Kodirov et al. ICCV’15]

• Generative approaches (generate data): Constrained VAE [Verma et al. CVPR’18]; Feature GAN [Xian et al. CVPR’18]

• Hybrid approaches (build novel classes from old classes): Semantic similarity [Zhang et al. CVPR’15]; Convex combination [Norouzi et al. ICLR’13]

![6

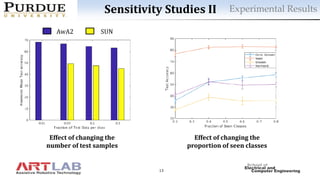

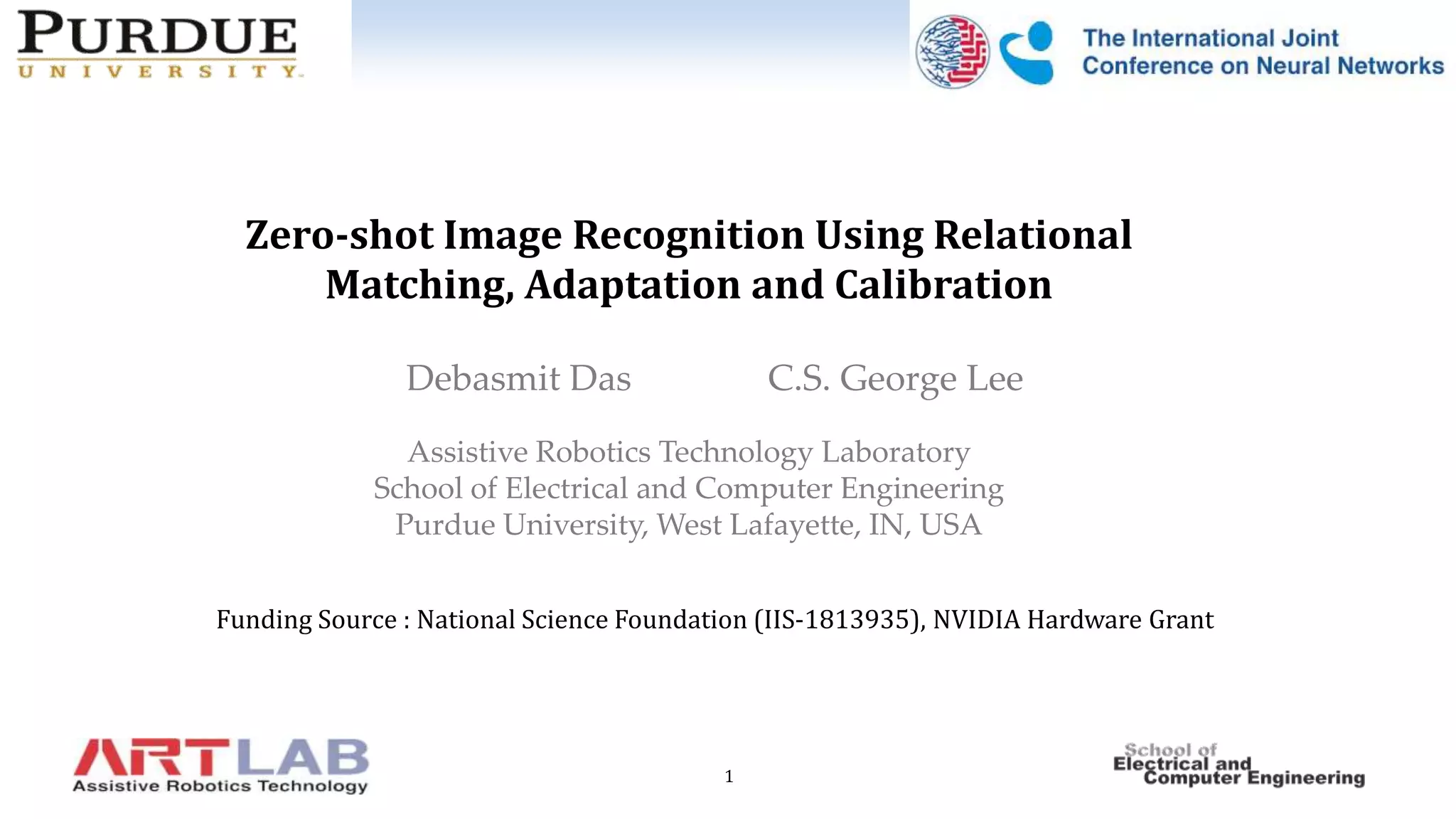

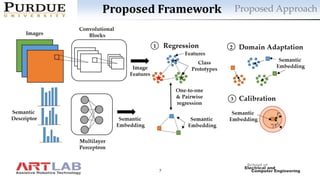

Proposed Solution

One-to-one and pairwise

regression

Domain Adaptation Calibration

• Need to adapt semantic

embeddings to unseen

test data.

• Use previous DA

approach [Das & Lee

EAAI’18].

• Find correspondences

between semantic

embedding and unseen

test samples.

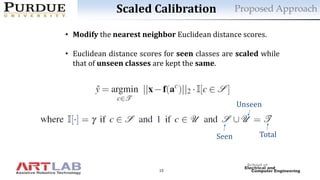

• Scaled calibration to

reduce scores of seen

classes.

• Implicit reduction of

variance of seen

classes.

• Structural matching

between semantics

and feature.

• Implicit

reduction of

dimensionality.

Proposed Approach

ADDRESS HUBNESS ADDRESS DOMAIN

SHIFT

ADDRESS BIASEDNESS](https://image.slidesharecdn.com/v3-190728151507/85/Zero-shot-Image-Recognition-Using-Relational-Matching-Adaptation-and-Calibration-6-320.jpg)
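The slides describe relational matching only as one-to-one and pairwise regression between semantics and features. The sketch below is one possible reading under our own assumptions, not the authors' formulation: a linear map W projects class semantic embeddings A into the visual feature space, a one-to-one term pulls each projection towards its class feature prototype in X, and a pairwise term matches the class-to-class Gram matrix of the projections to that of the prototypes. The weights `beta` and `lam`, the plain gradient descent, and all function names are hypothetical.

```python
import numpy as np

def relational_loss(W, A, X, beta=0.1, lam=0.01):
    """One-to-one + pairwise (relational) regression loss with ridge penalty."""
    P = A @ W  # class semantic embeddings projected into feature space
    one_to_one = np.sum((P - X) ** 2)
    pairwise = np.sum((P @ P.T - X @ X.T) ** 2)  # match class-to-class relations
    return one_to_one + beta * pairwise + lam * np.sum(W ** 2)

def relational_grad(W, A, X, beta=0.1, lam=0.01):
    """Analytic gradient of relational_loss with respect to W."""
    P = A @ W
    G = P @ P.T - X @ X.T  # symmetric residual of the pairwise relations
    dP = 2.0 * (P - X) + 4.0 * beta * (G @ P)
    return A.T @ dP + 2.0 * lam * W

def fit_relational_map(A, X, beta=0.1, lam=0.01, lr=0.01, steps=500):
    """Plain gradient descent starting from W = 0."""
    W = np.zeros((A.shape[1], X.shape[1]))
    for _ in range(steps):
        W -= lr * relational_grad(W, A, X, beta, lam)
    return W

# Toy data: 6 seen classes, 5-d semantic embeddings, 4-d feature prototypes.
rng = np.random.default_rng(0)
A = rng.normal(size=(6, 5))
A /= np.linalg.norm(A, axis=1, keepdims=True)
X = rng.normal(size=(6, 4))
X /= np.linalg.norm(X, axis=1, keepdims=True)
W = fit_relational_map(A, X)
print(relational_loss(np.zeros((5, 4)), A, X), relational_loss(W, A, X))
```

Because the pairwise term compares C×C relation matrices rather than individual vectors, it constrains the geometry of the projected class set as a whole, which is one way to interpret the slide's "structural matching".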

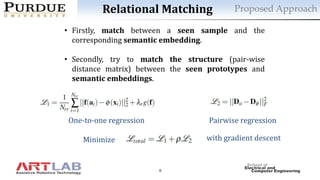
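The domain adaptation step finds correspondences between the unseen-class semantic embeddings and the unlabeled test samples. The slides delegate this to [Das & Lee EAAI’18], whose details are not given here, so the sketch below swaps in a deliberately simple stand-in: each test sample is matched to its nearest projected unseen-class prototype, and each prototype is then moved towards the mean of its matched samples while keeping a fraction `alpha` of the original semantic prototype. The function name and `alpha` are hypothetical.

```python
import numpy as np

def adapt_prototypes(prototypes, test_feats, alpha=0.5, iters=10):
    """Pull unseen-class prototypes towards the unlabeled test samples matched to them.

    Simplified stand-in for domain adaptation (nearest-prototype matching plus a
    convex update). It is NOT the method of [Das & Lee EAAI'18].
    """
    protos = prototypes.astype(float).copy()
    for _ in range(iters):
        # Match every test sample to its nearest current prototype.
        dists = np.linalg.norm(test_feats[:, None, :] - protos[None, :, :], axis=-1)
        assign = dists.argmin(axis=1)
        updated = protos.copy()
        for c in range(protos.shape[0]):
            members = test_feats[assign == c]
            if len(members) > 0:
                # Blend the original semantic prototype with the matched cluster mean;
                # alpha is the share of the semantic prior that is kept.
                updated[c] = alpha * prototypes[c] + (1.0 - alpha) * members.mean(axis=0)
        protos = updated
    return protos

protos = np.array([[0.0, 0.0], [10.0, 0.0]])  # projected unseen-class embeddings
test = np.array([[2.0, 1.0], [2.2, 0.8], [1.8, 1.2],
                 [12.0, 1.0], [12.2, 0.8], [11.8, 1.2]])  # shifted test domain
adapted = adapt_prototypes(protos, test)
print(adapted)
```

Anchoring each update to the original prototype keeps the semantic information in play, so a prototype cannot drift arbitrarily far if its matched cluster is noisy.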

![11

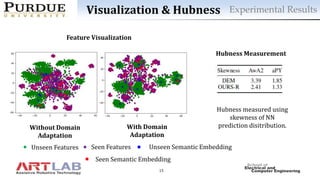

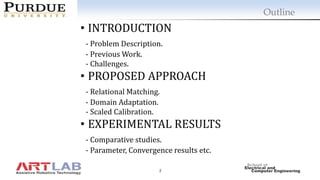

Experimental Results

• Animals with Attributes (AwA2)

[Lampert et al. TPAMI’14]

(Att – 85, Ysrc - 40 , Ytar - 10 )

• Pascal & Yahoo (aPY)

[Farhadi et al. CVPR’09]

(Att – 64, Ysrc - 20 , Ytar - 12 )

• Caltech-UCSD Birds (CUB)

[Welinder et al. ‘10]

(Att – 312, Ysrc - 150 , Ytar - 50 )

• Scene Understanding (SUN)

[Patterson et al. CVPR’12]

(Att – 102, Ysrc - 645, Ytar - 72 )

DatasetsComparison with previous work on four datasets.

Comparative Study

tr – Unseen class accuracy in traditional setting

u – Unseen class accuracy in generalized setting

s – Seen class accuracy in generalized setting

H – Harmonic mean of u and s

R – Relational Matching

RA – Relational Matching + Domain Adaptation

RC – Relational Matching + Scaled Calibration

RAC – Relational Matching + Domain

Adaptation + Scaled Calibration](https://image.slidesharecdn.com/v3-190728151507/85/Zero-shot-Image-Recognition-Using-Relational-Matching-Adaptation-and-Calibration-11-320.jpg)
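The legend defines H as the harmonic mean of u and s, H = 2us/(u + s), and the RC variant adds scaled calibration. The slides do not give the calibration formula, so the sketch below assumes it multiplies non-negative seen-class scores (e.g. softmax probabilities) by a factor γ ≤ 1 before the argmax. Accuracies are averaged per class, as is common in generalized ZSL evaluation. Function names and the toy scores are hypothetical.

```python
import numpy as np

def scaled_calibration(scores, seen_mask, gamma):
    """Multiply seen-class scores by gamma (assumes non-negative scores)."""
    out = scores.copy()
    out[:, seen_mask] *= gamma
    return out

def per_class_accuracy(pred, labels):
    """Mean over classes of the top-1 accuracy within each class."""
    classes = np.unique(labels)
    return float(np.mean([np.mean(pred[labels == c] == c) for c in classes]))

def harmonic_mean(u, s):
    return 0.0 if u + s == 0 else 2 * u * s / (u + s)

def gzsl_metrics(scores, labels, seen_mask, gamma=1.0):
    """Return (u, s, H) after scaled calibration of the seen-class scores."""
    pred = scaled_calibration(scores, seen_mask, gamma).argmax(axis=1)
    is_seen = seen_mask[labels]
    s = per_class_accuracy(pred[is_seen], labels[is_seen])
    u = per_class_accuracy(pred[~is_seen], labels[~is_seen])
    return u, s, harmonic_mean(u, s)

# Toy setup: classes 0 and 1 are seen, class 2 is unseen.
seen_mask = np.array([True, True, False])
labels = np.array([0, 1, 2, 2])
scores = np.array([[0.6, 0.1, 0.3],
                   [0.1, 0.6, 0.3],
                   [0.5, 0.1, 0.4],   # unseen sample pulled towards a seen class
                   [0.1, 0.5, 0.4]])
print(gzsl_metrics(scores, labels, seen_mask, gamma=1.0))
print(gzsl_metrics(scores, labels, seen_mask, gamma=0.7))
```

Without calibration, both unseen samples are assigned to seen classes, so u and therefore H are zero; shrinking the seen-class scores lets the unseen class win while the seen samples stay correct, which illustrates why calibration can raise H in the generalized setting.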