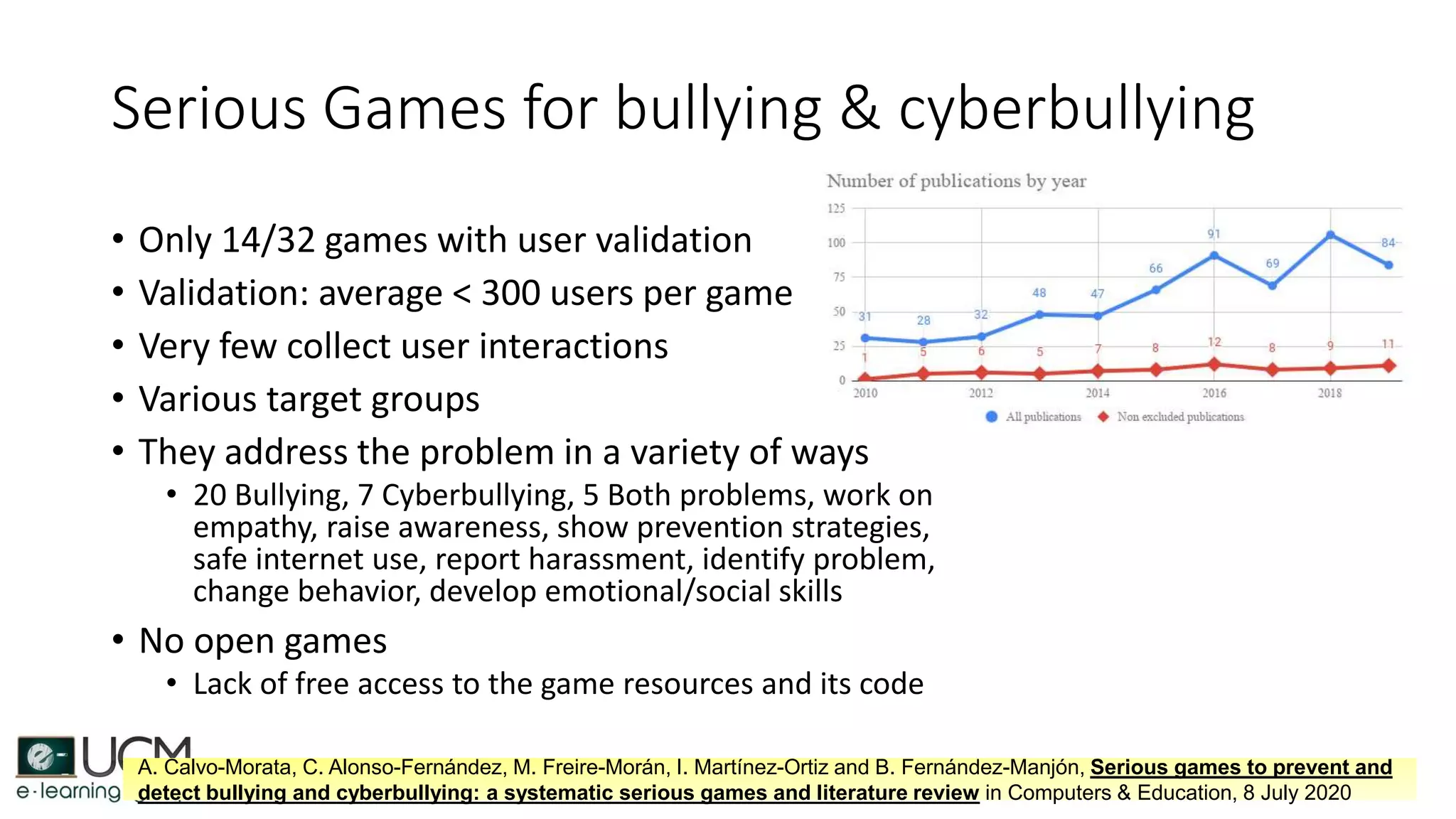

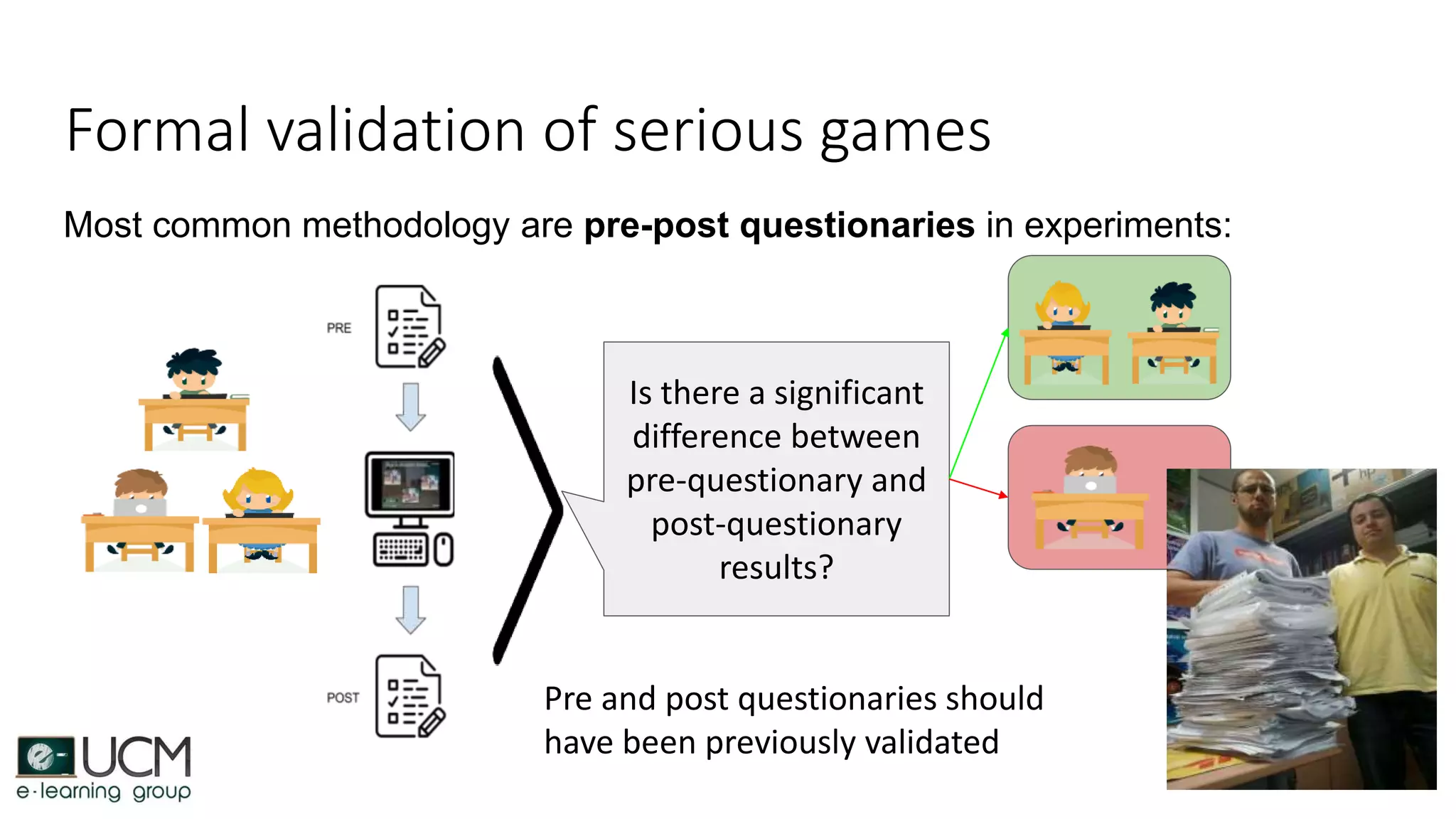

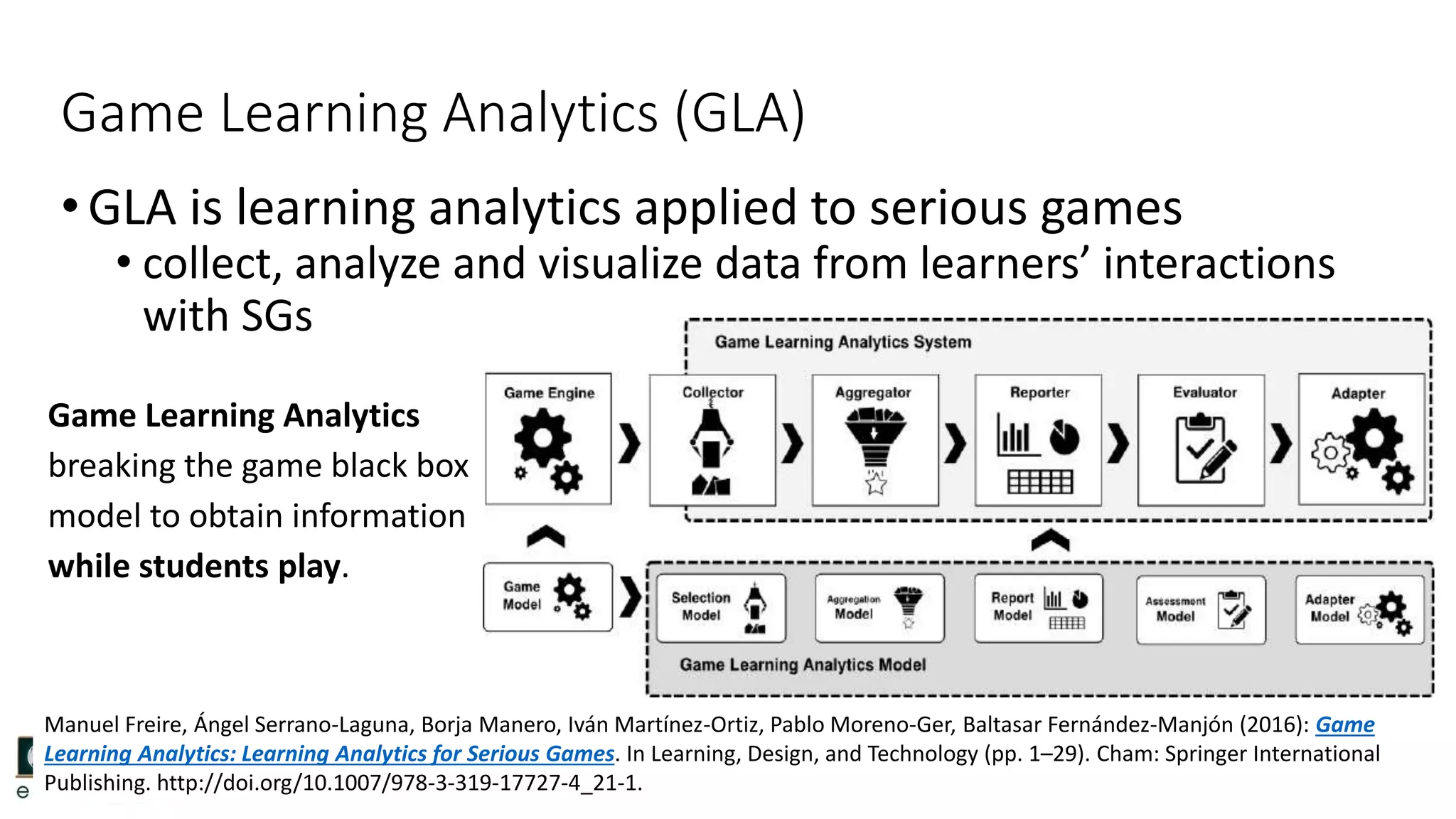

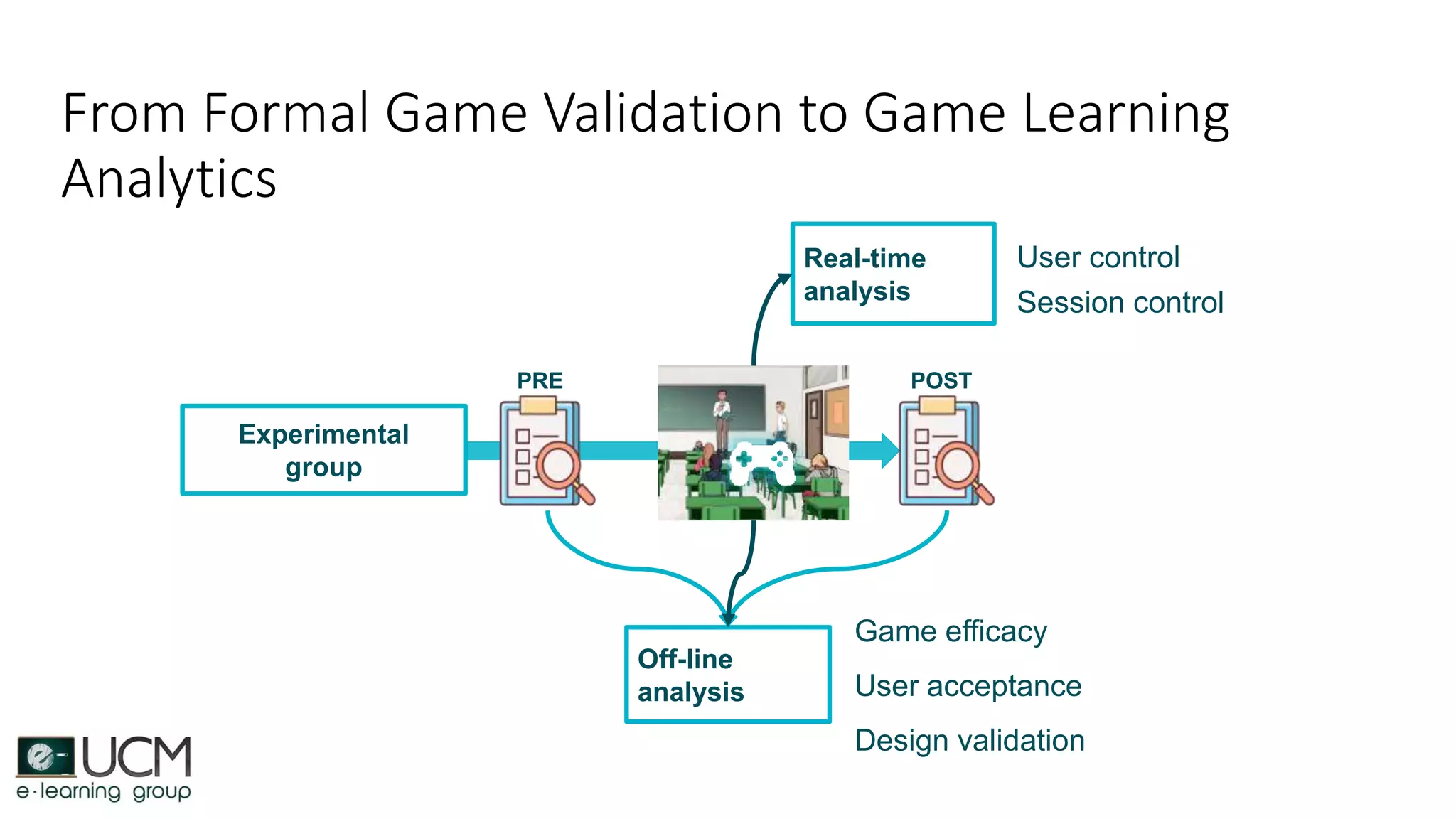

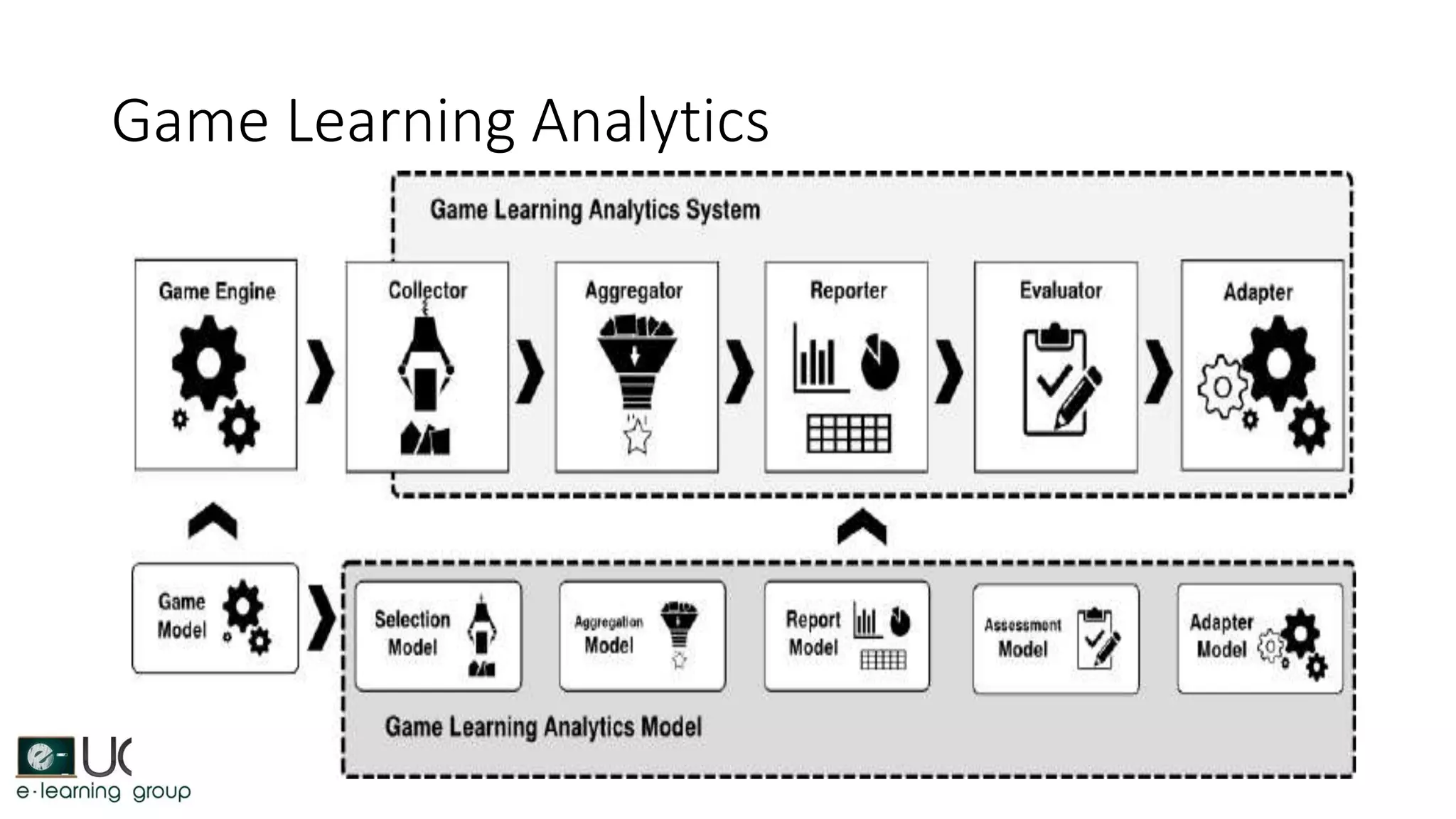

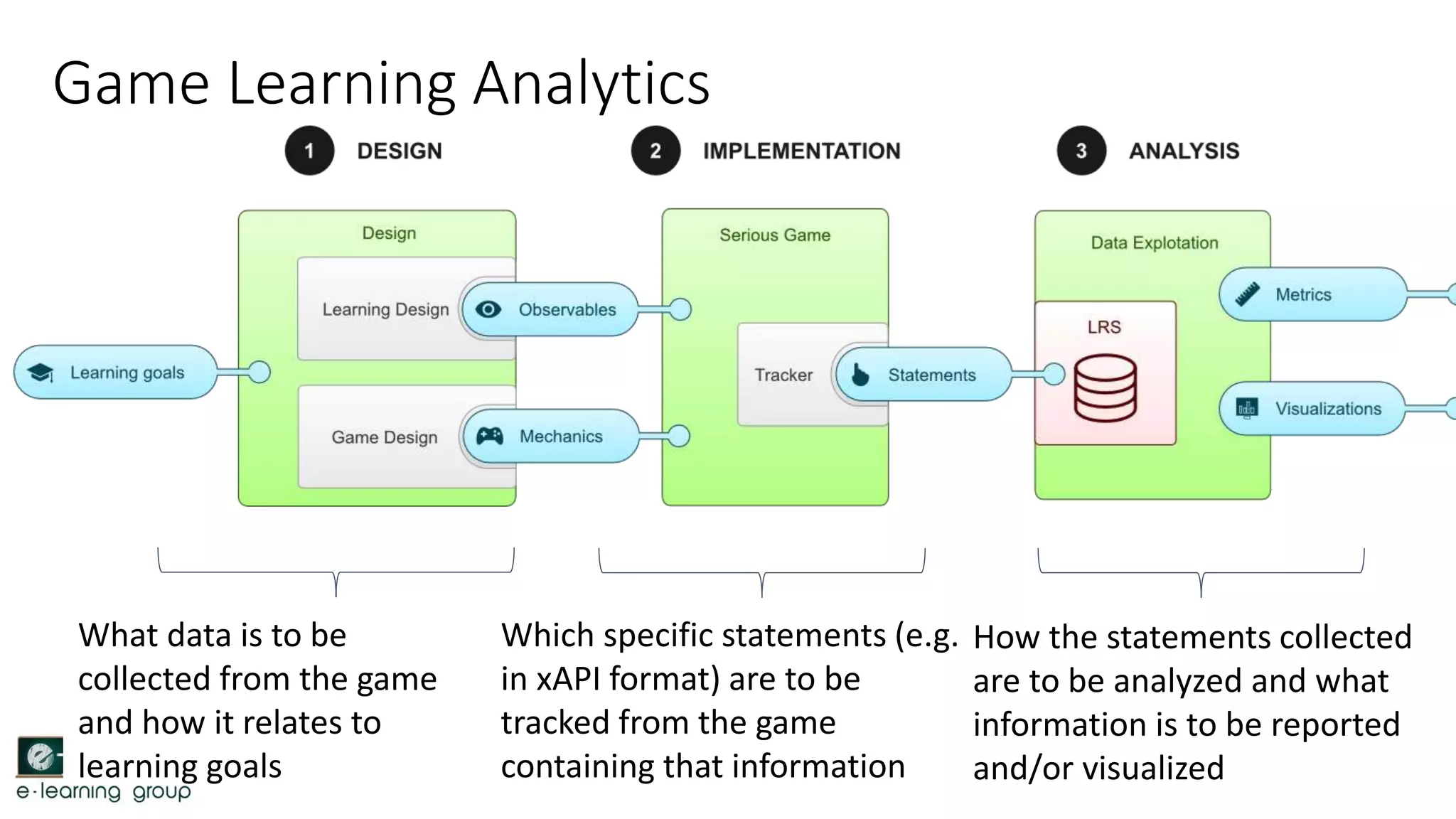

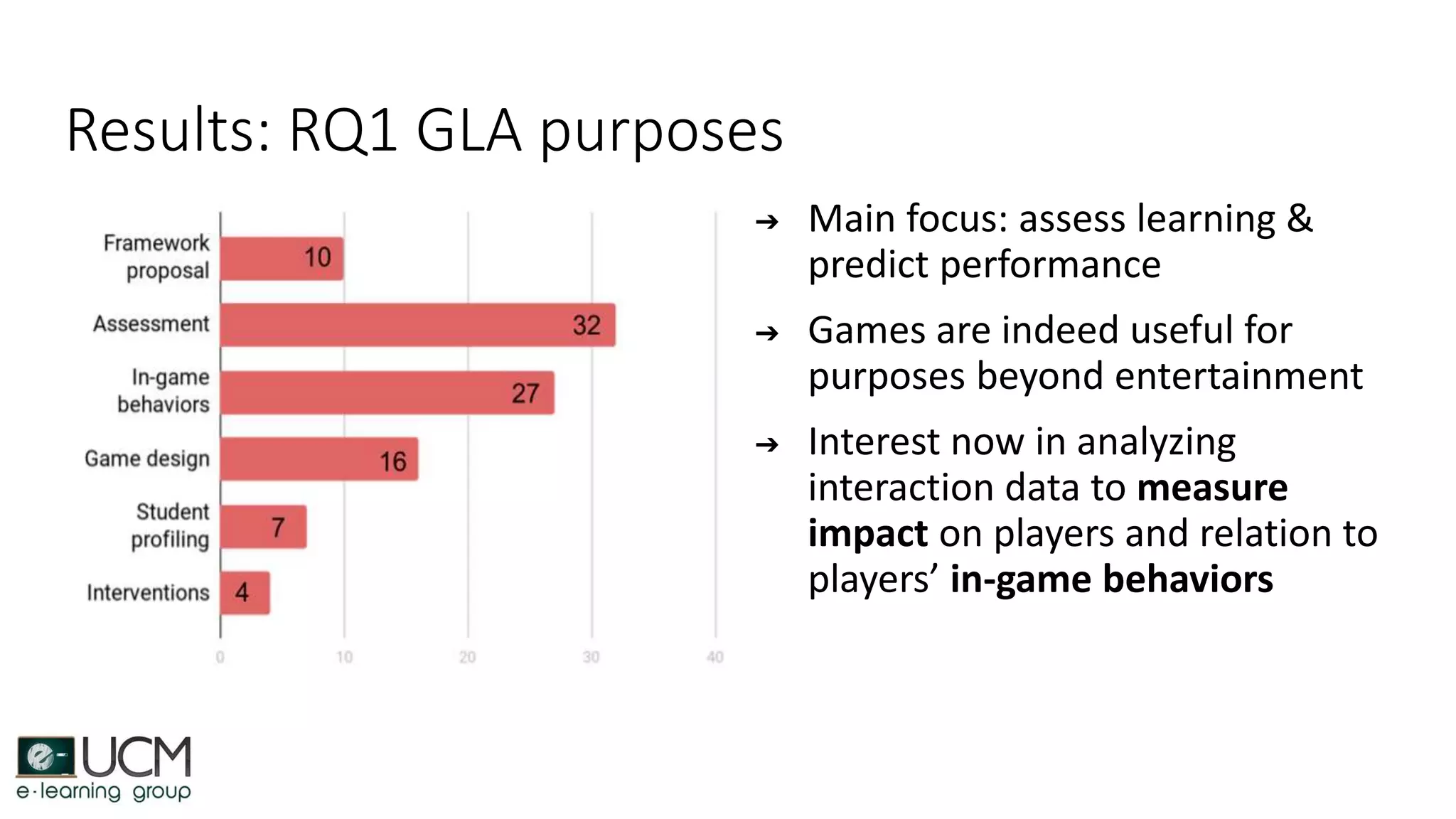

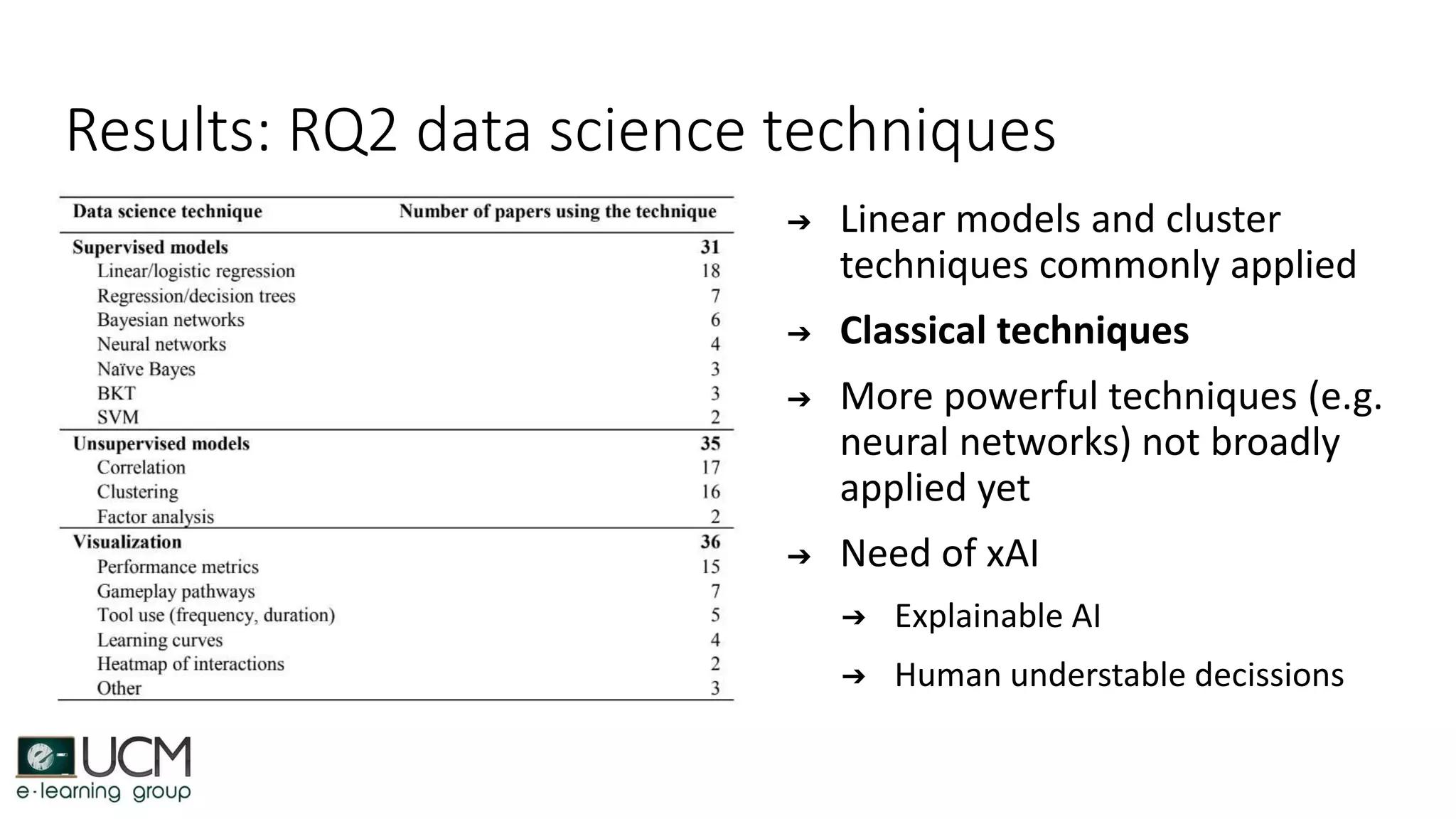

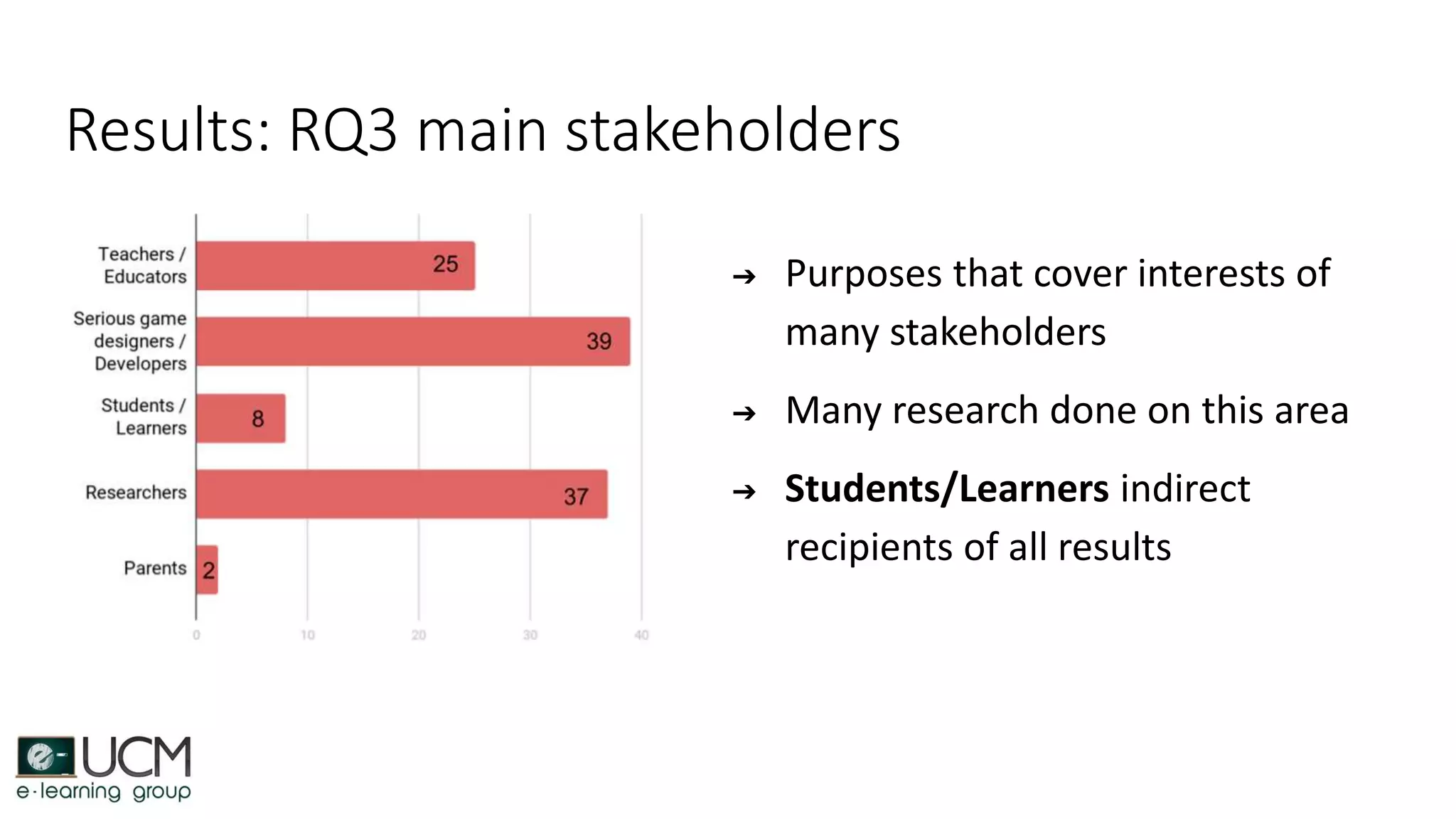

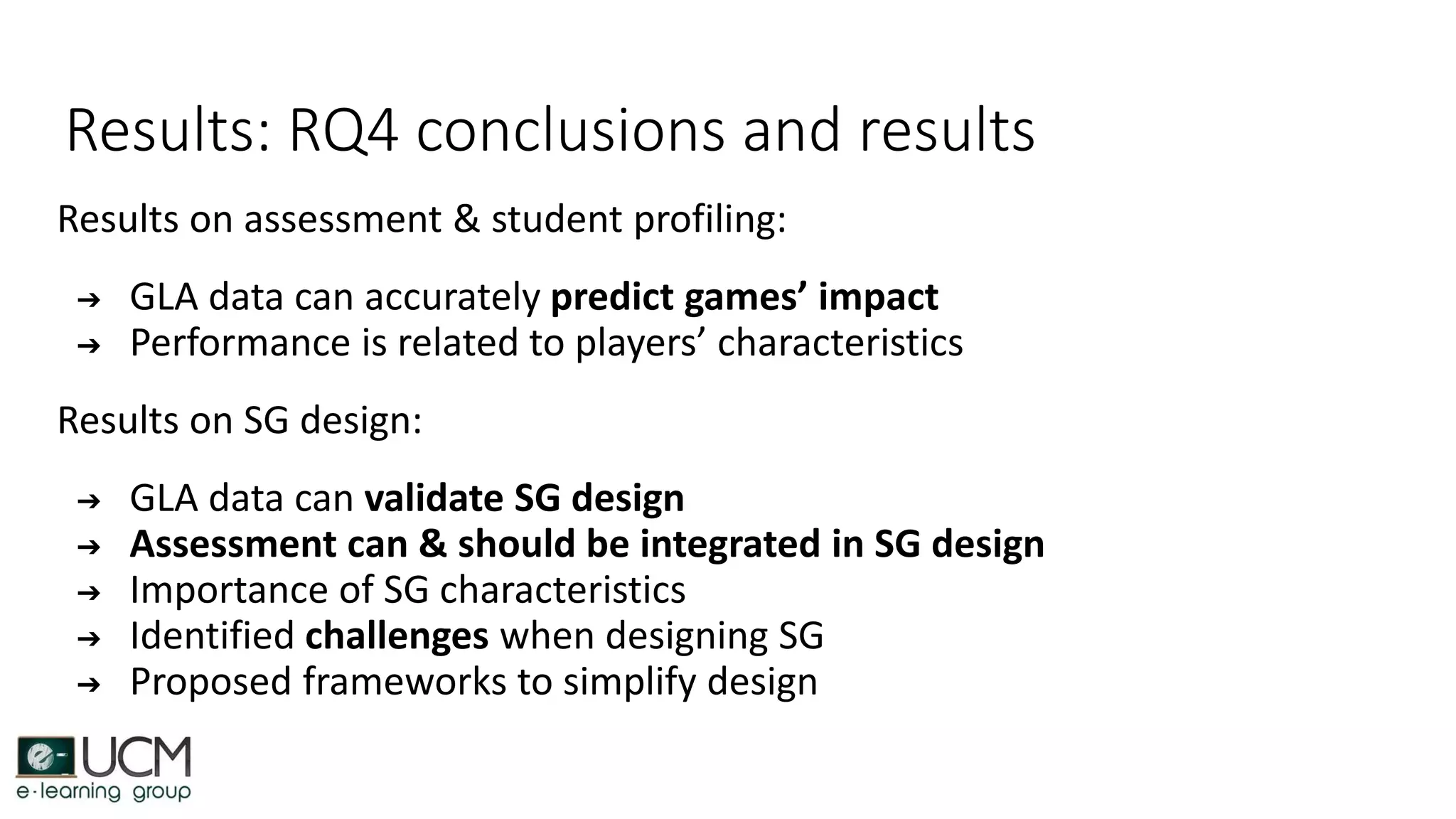

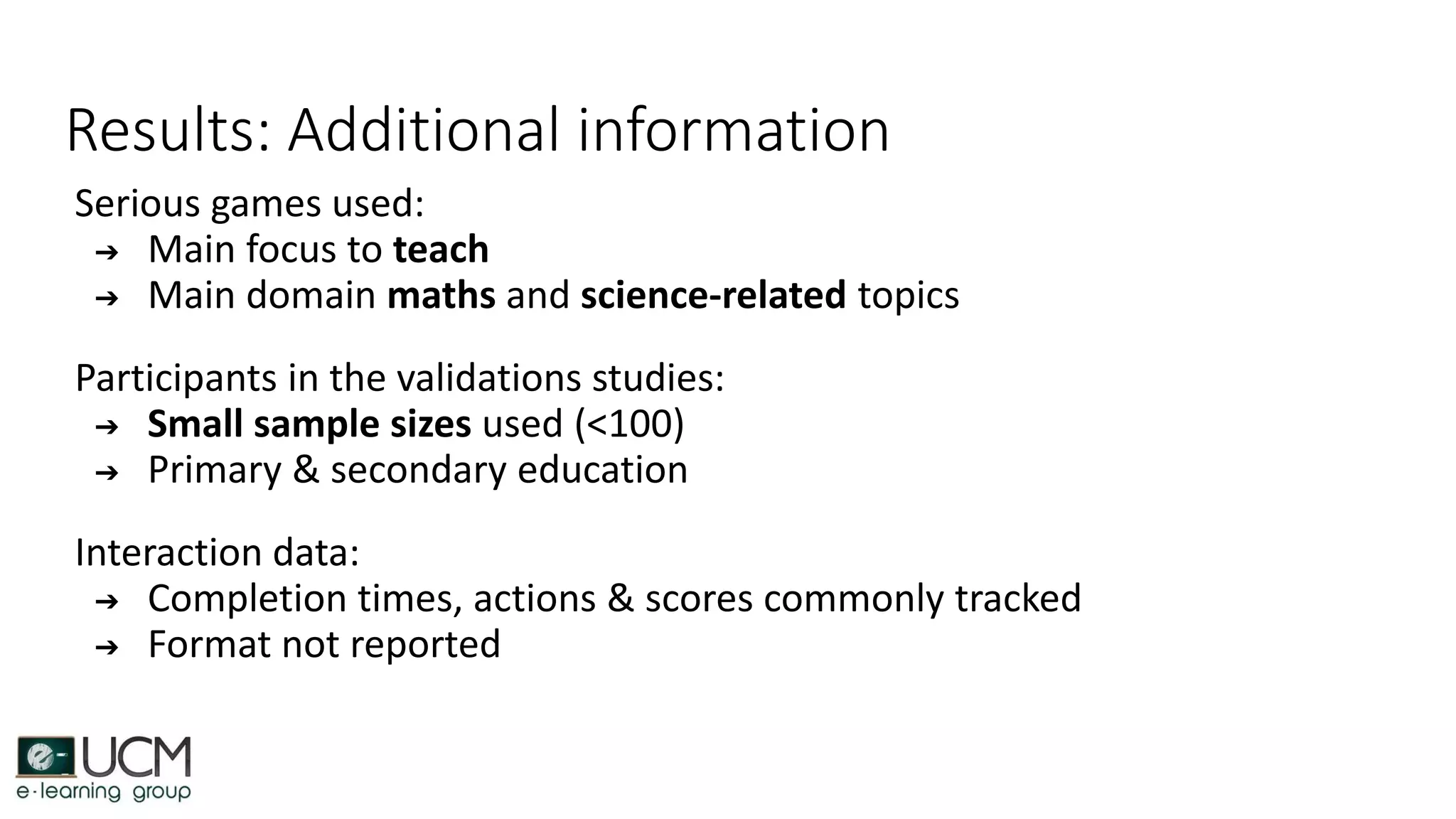

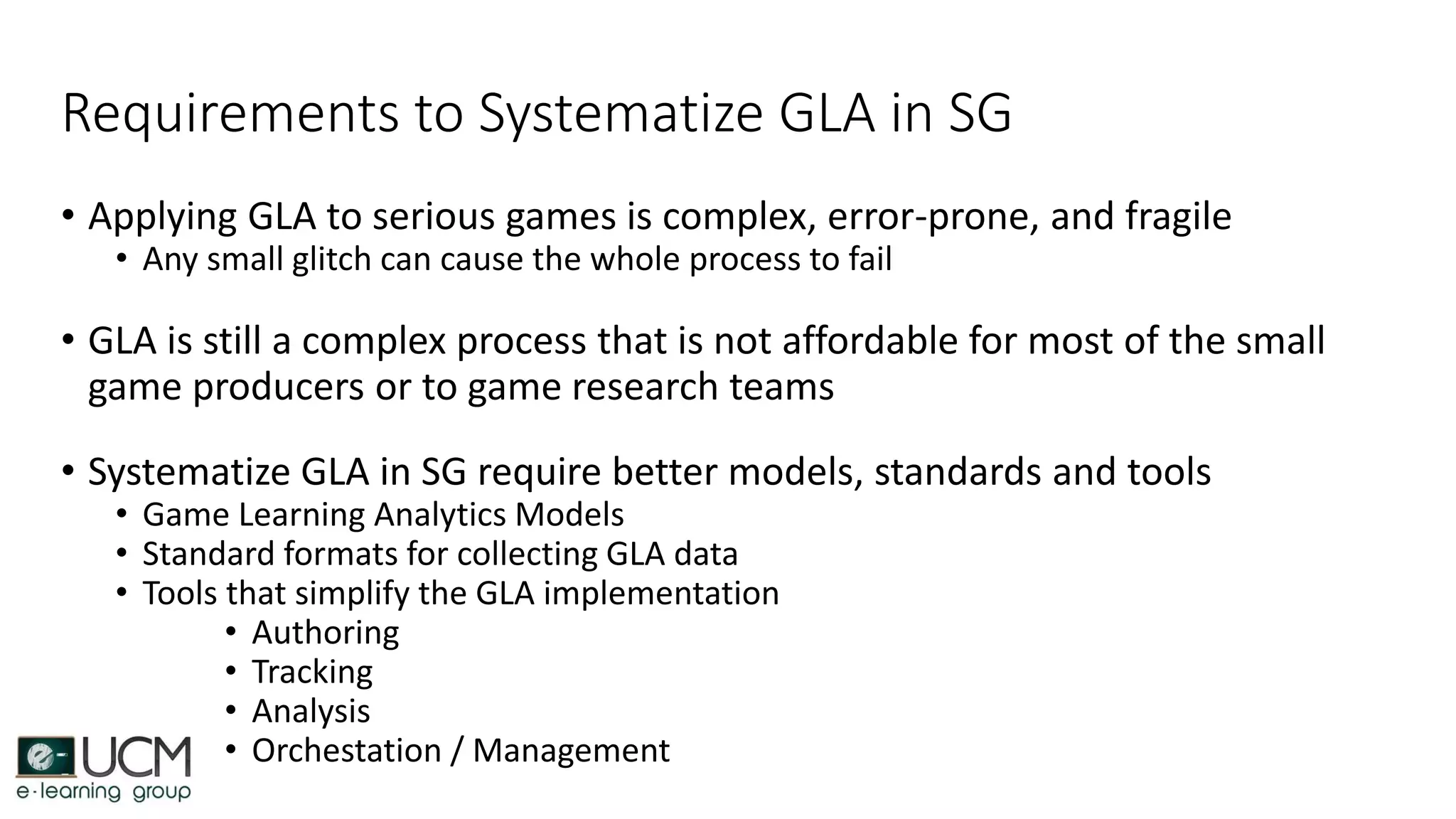

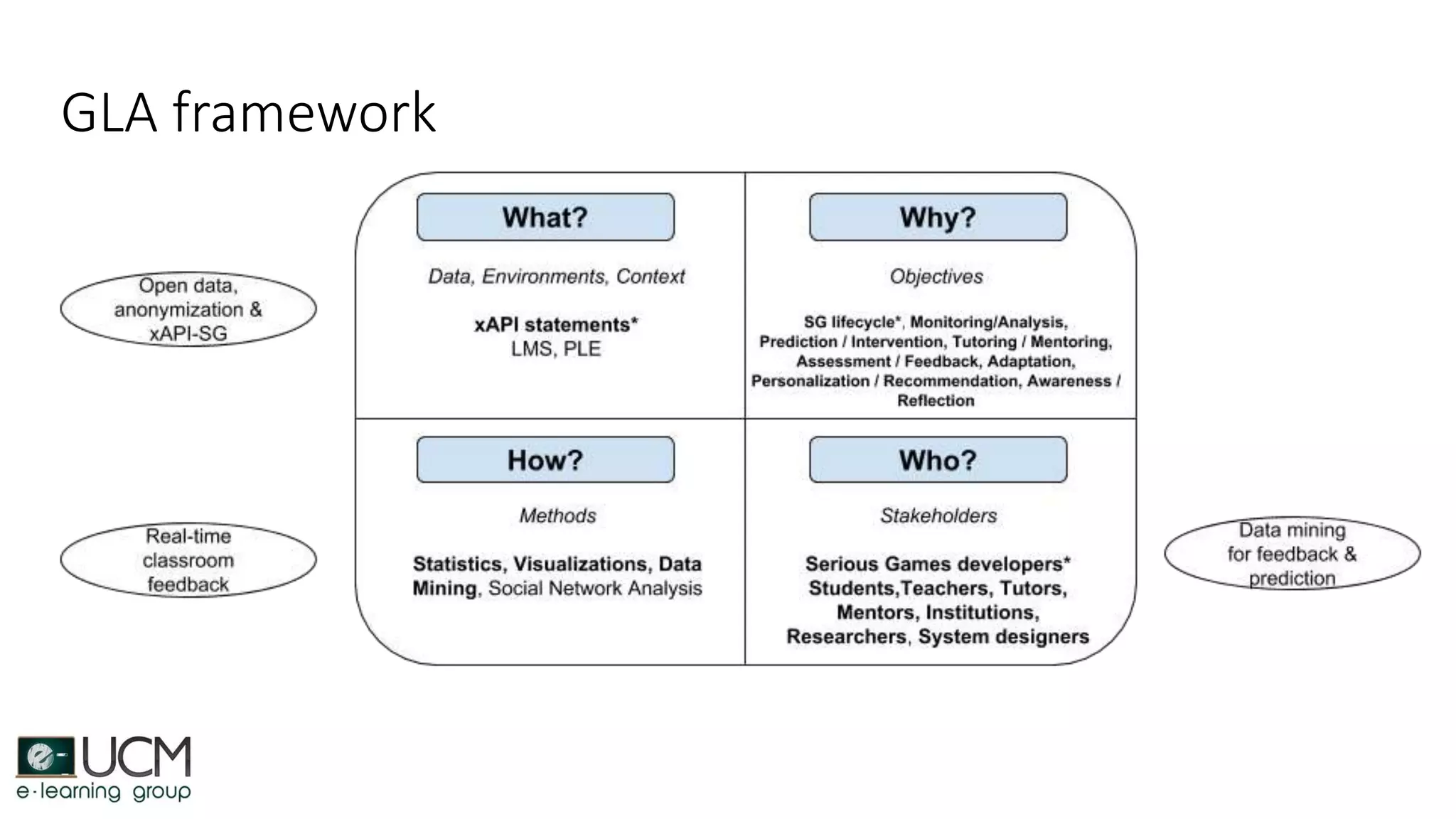

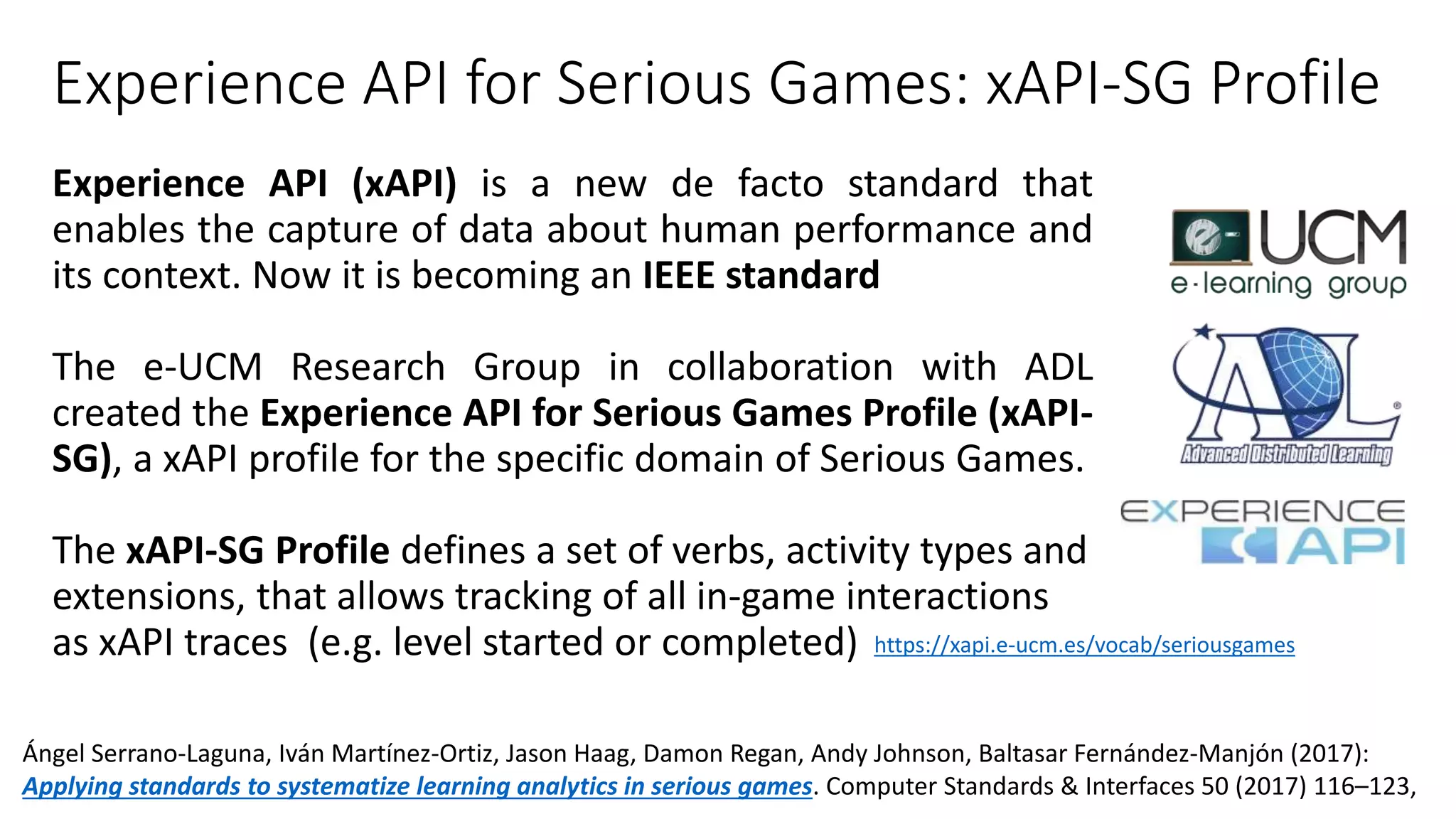

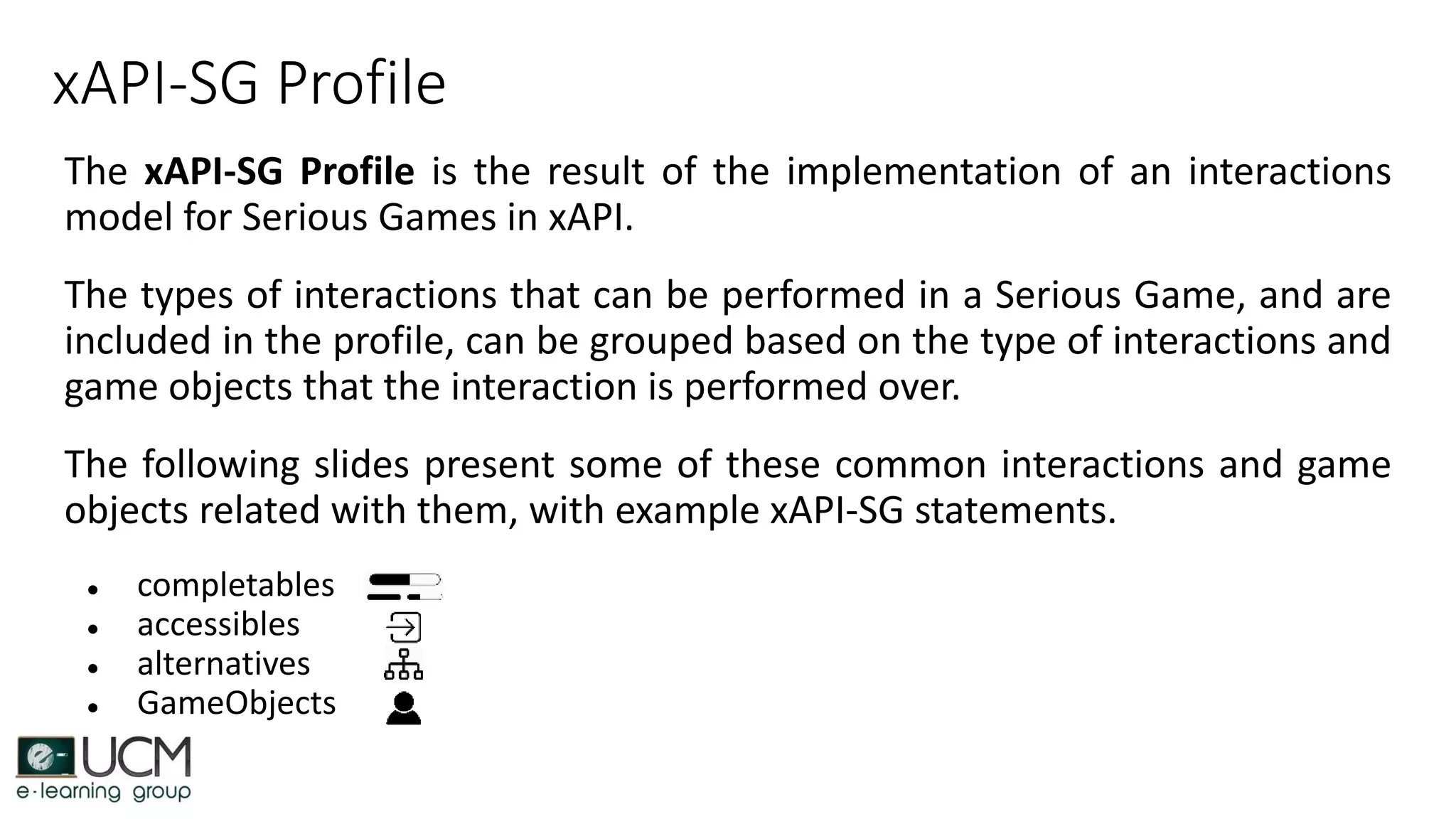

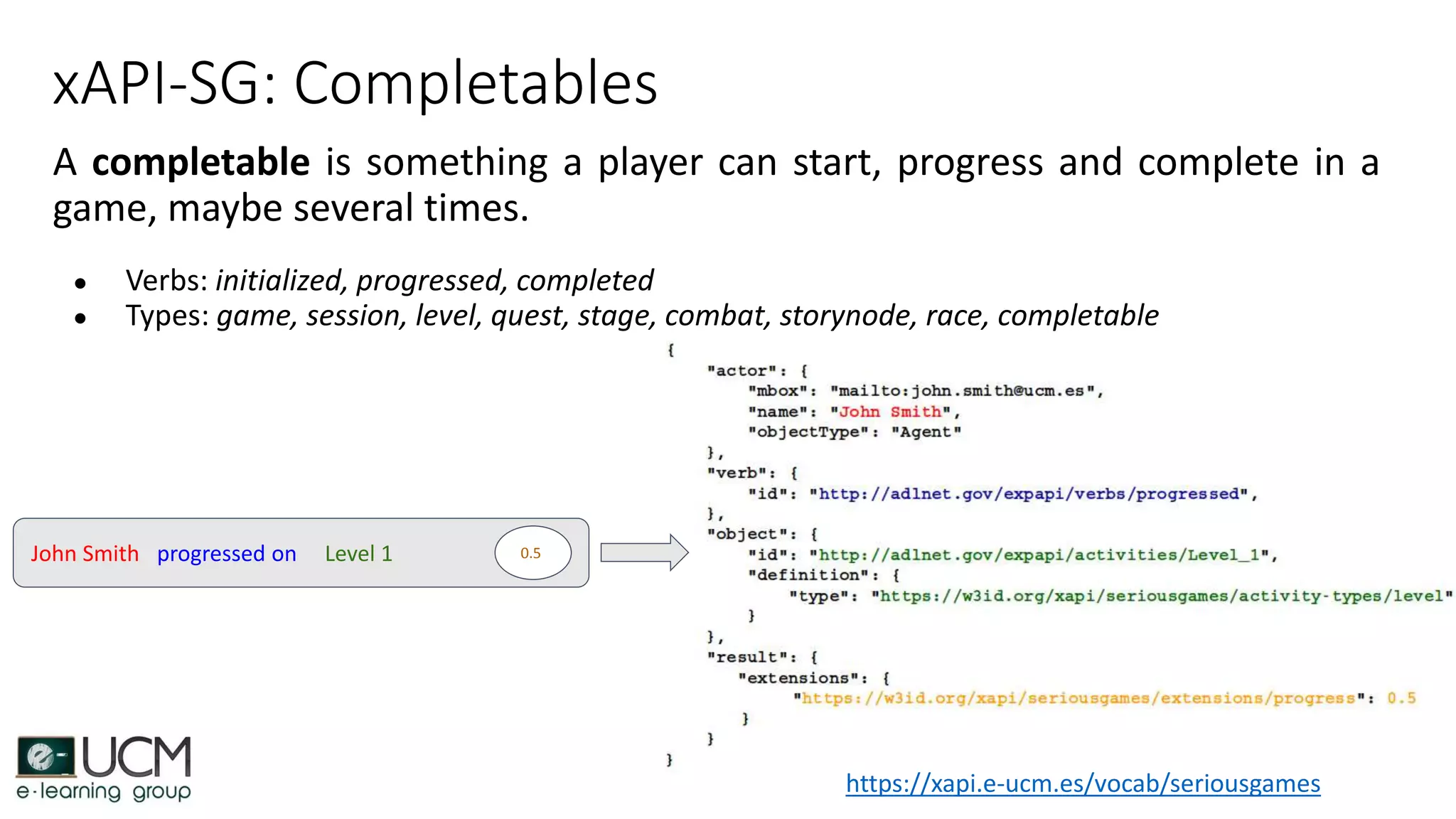

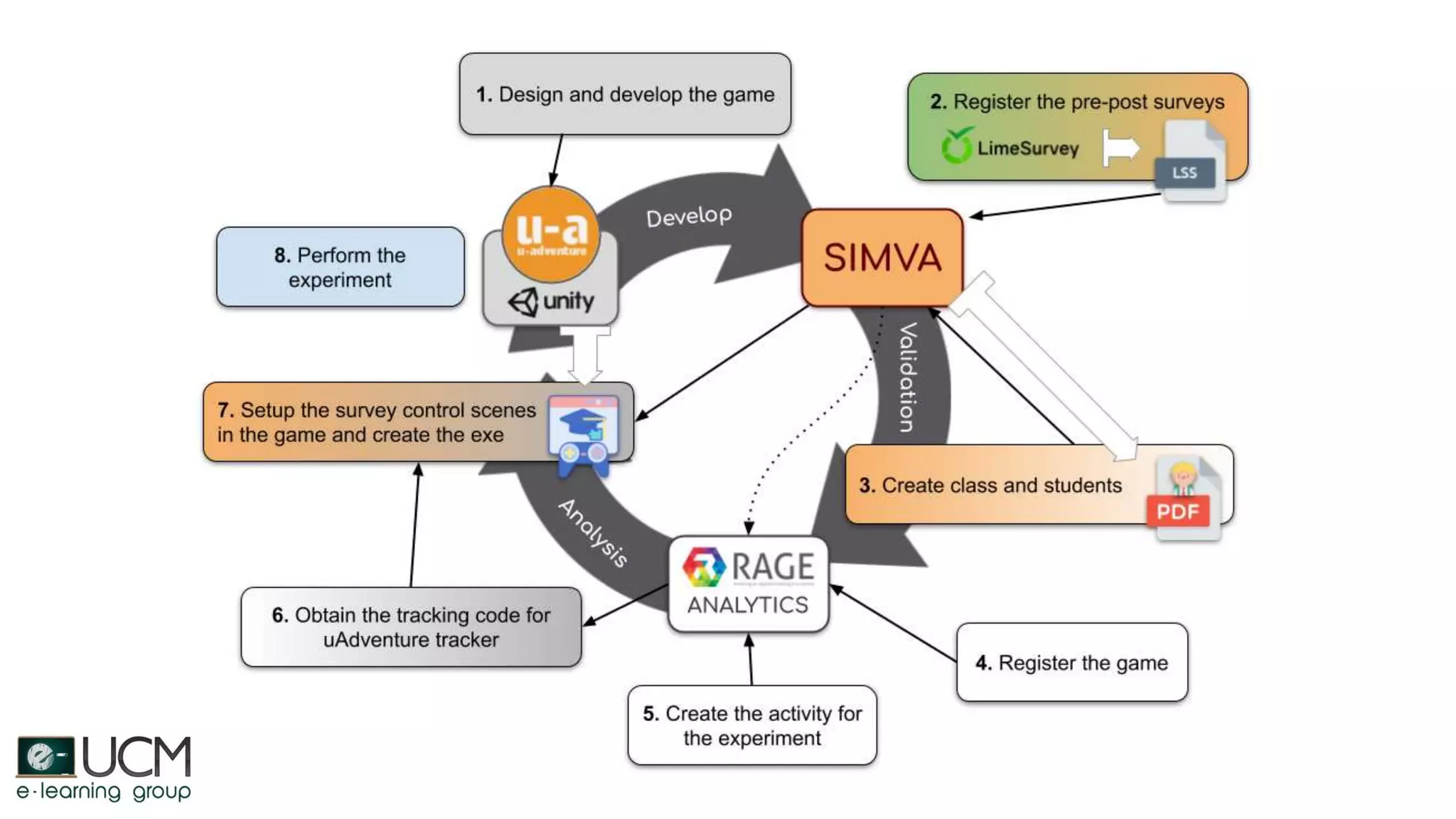

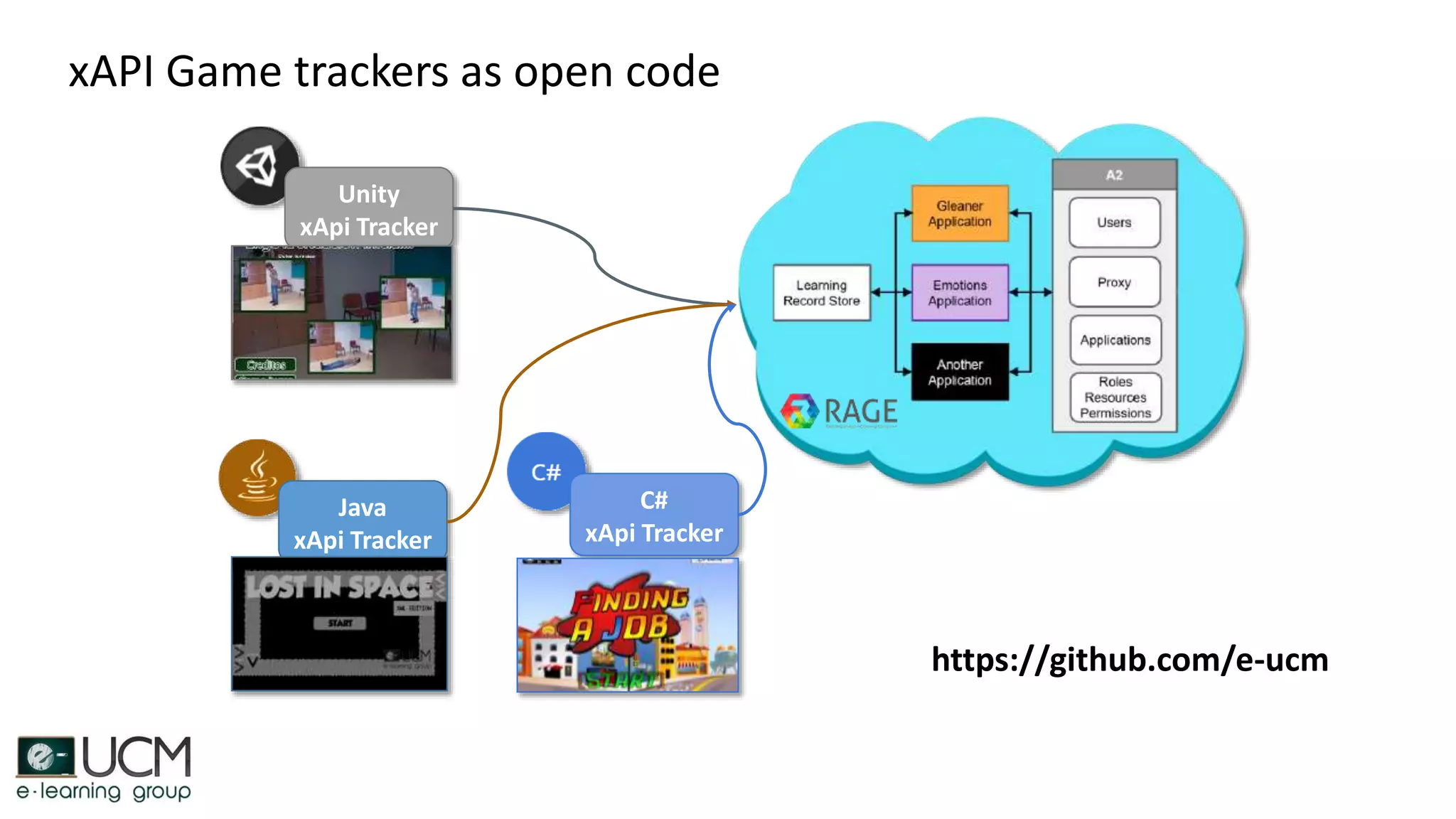

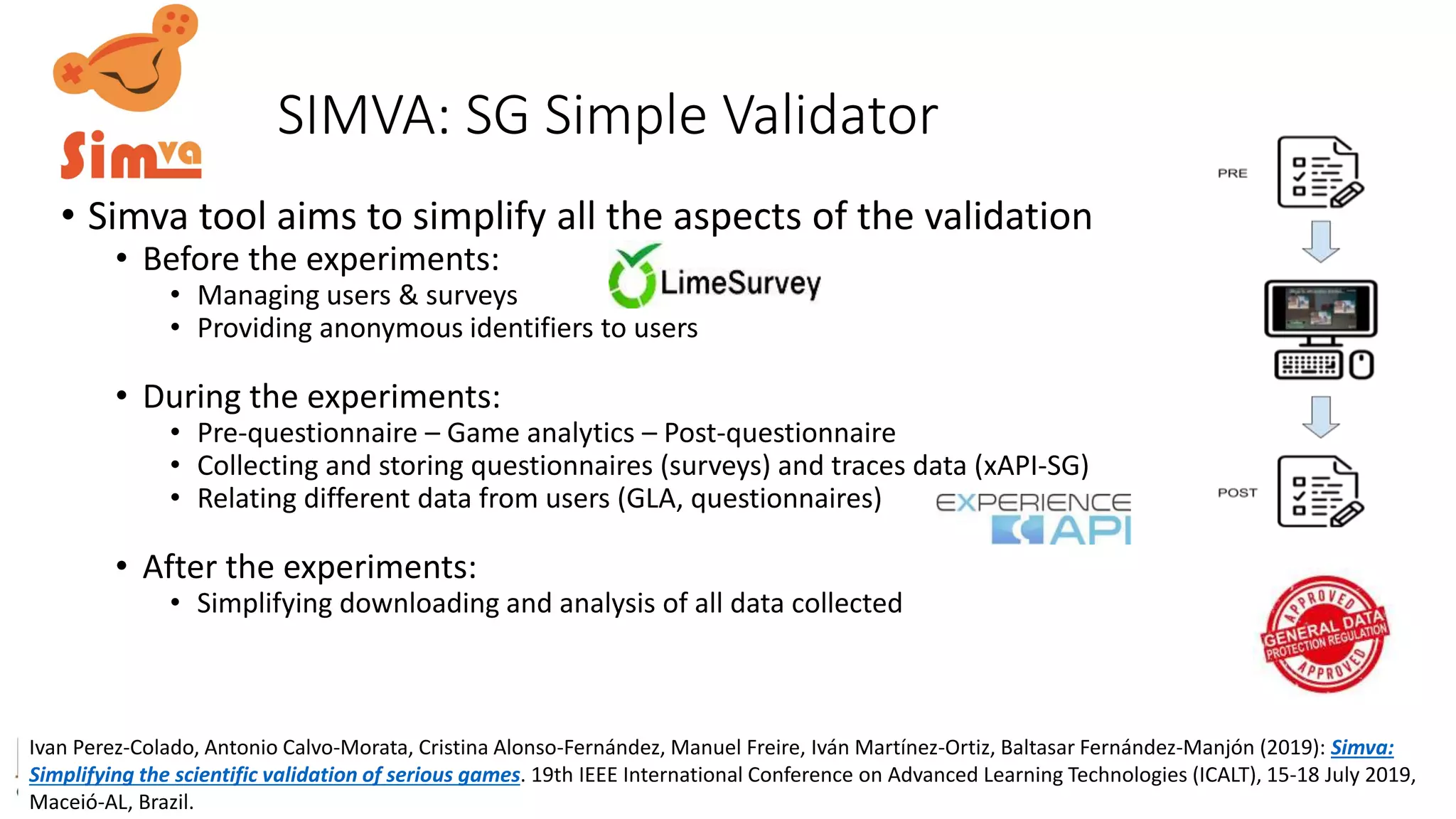

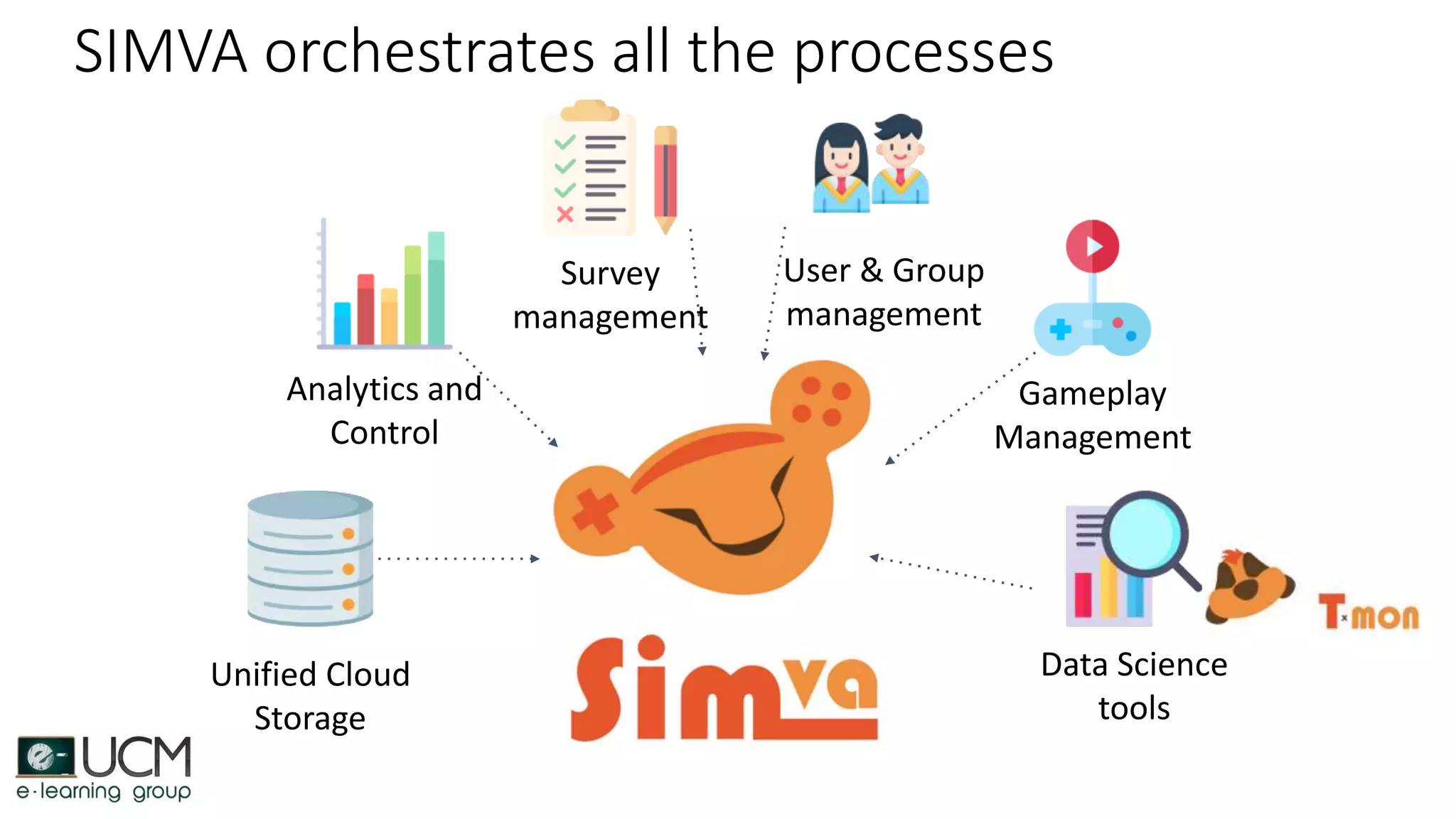

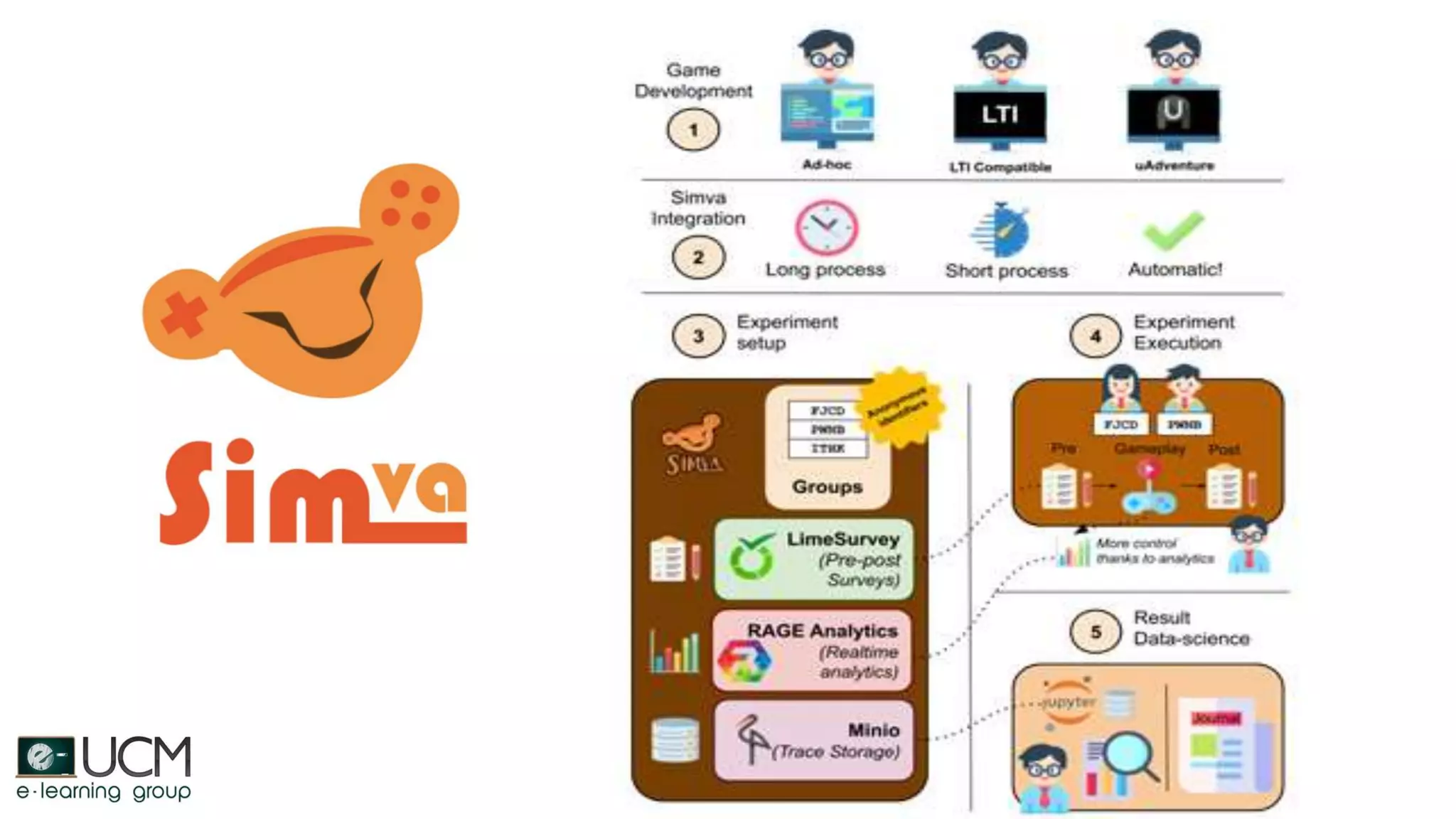

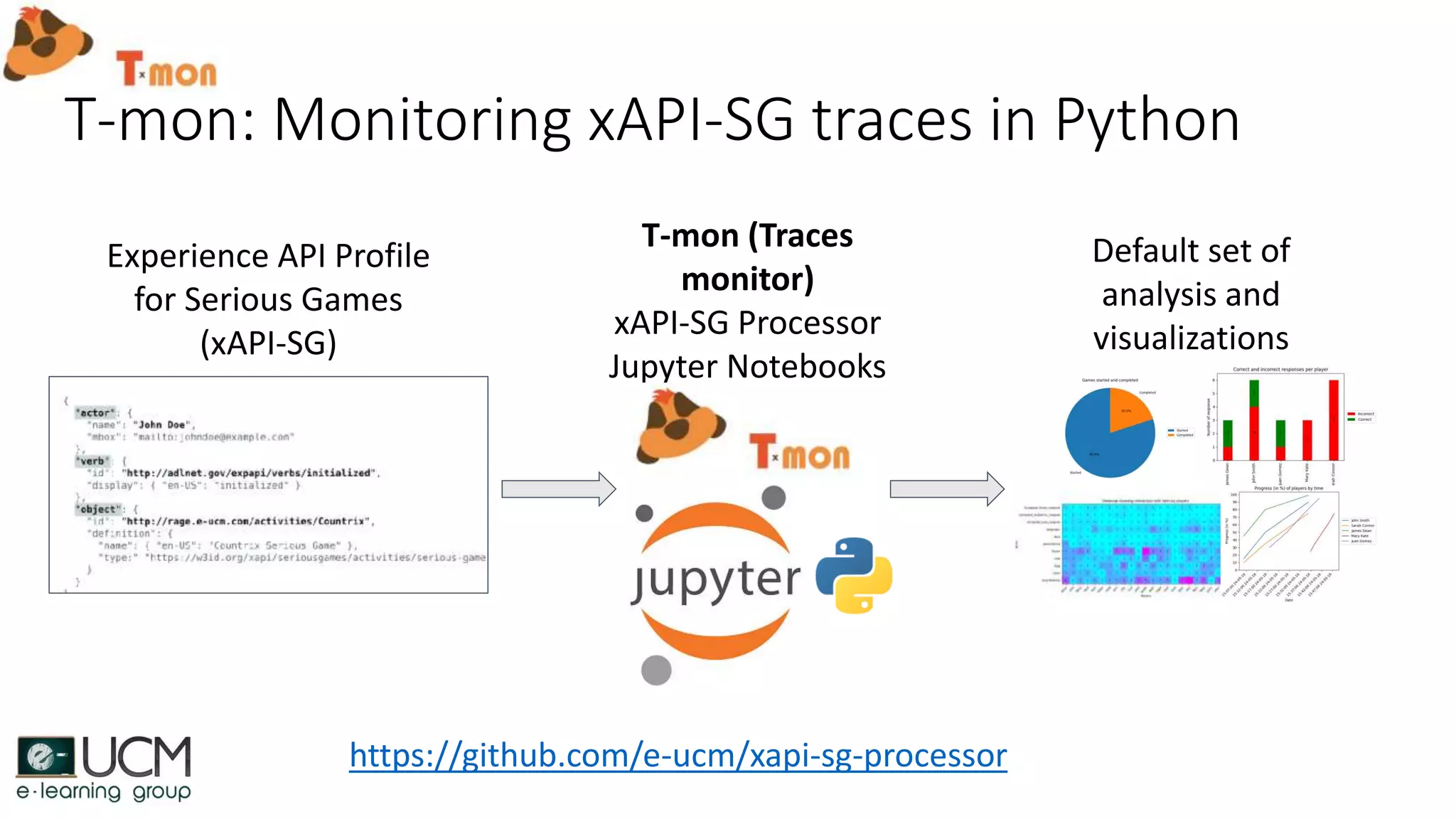

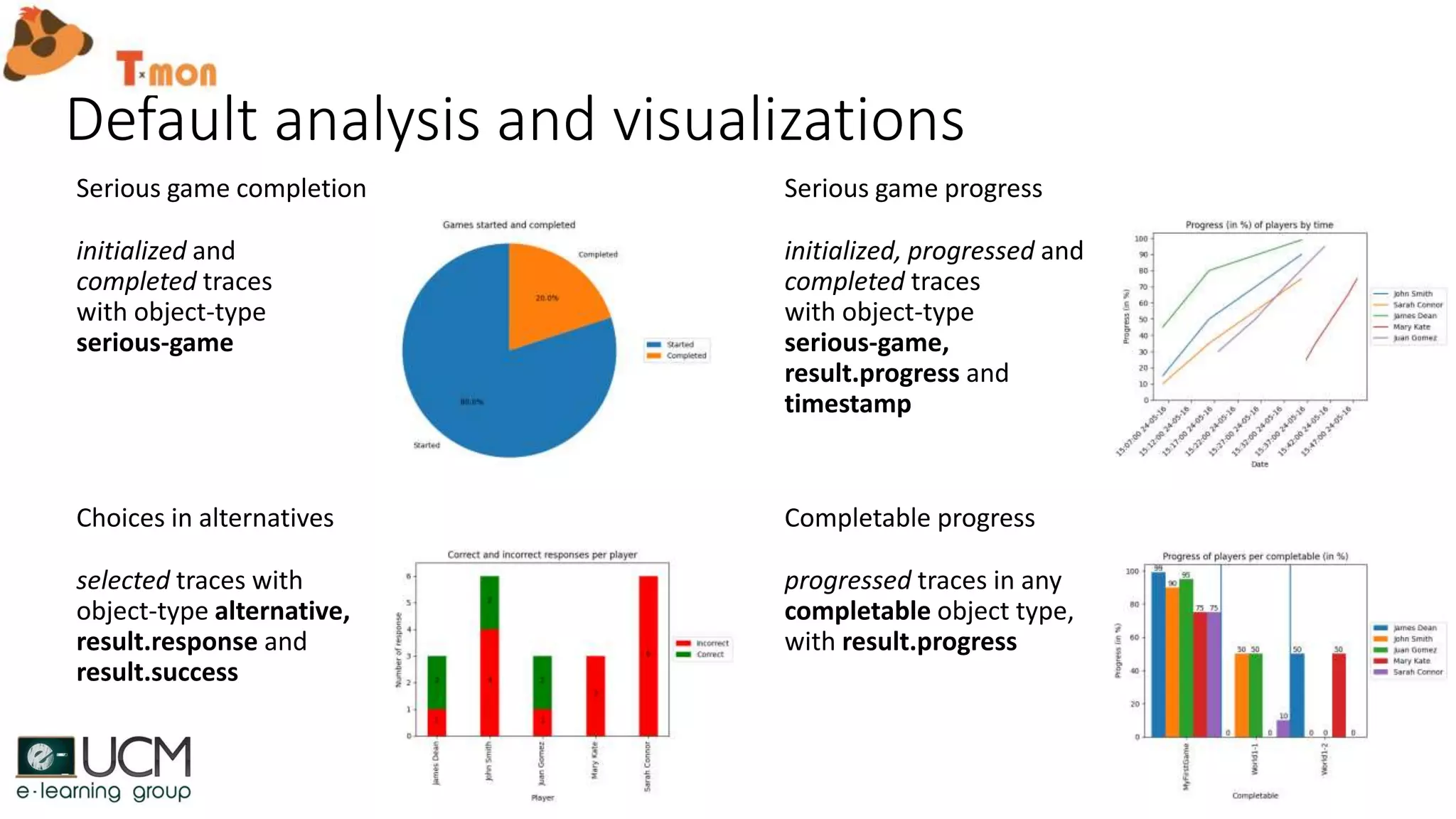

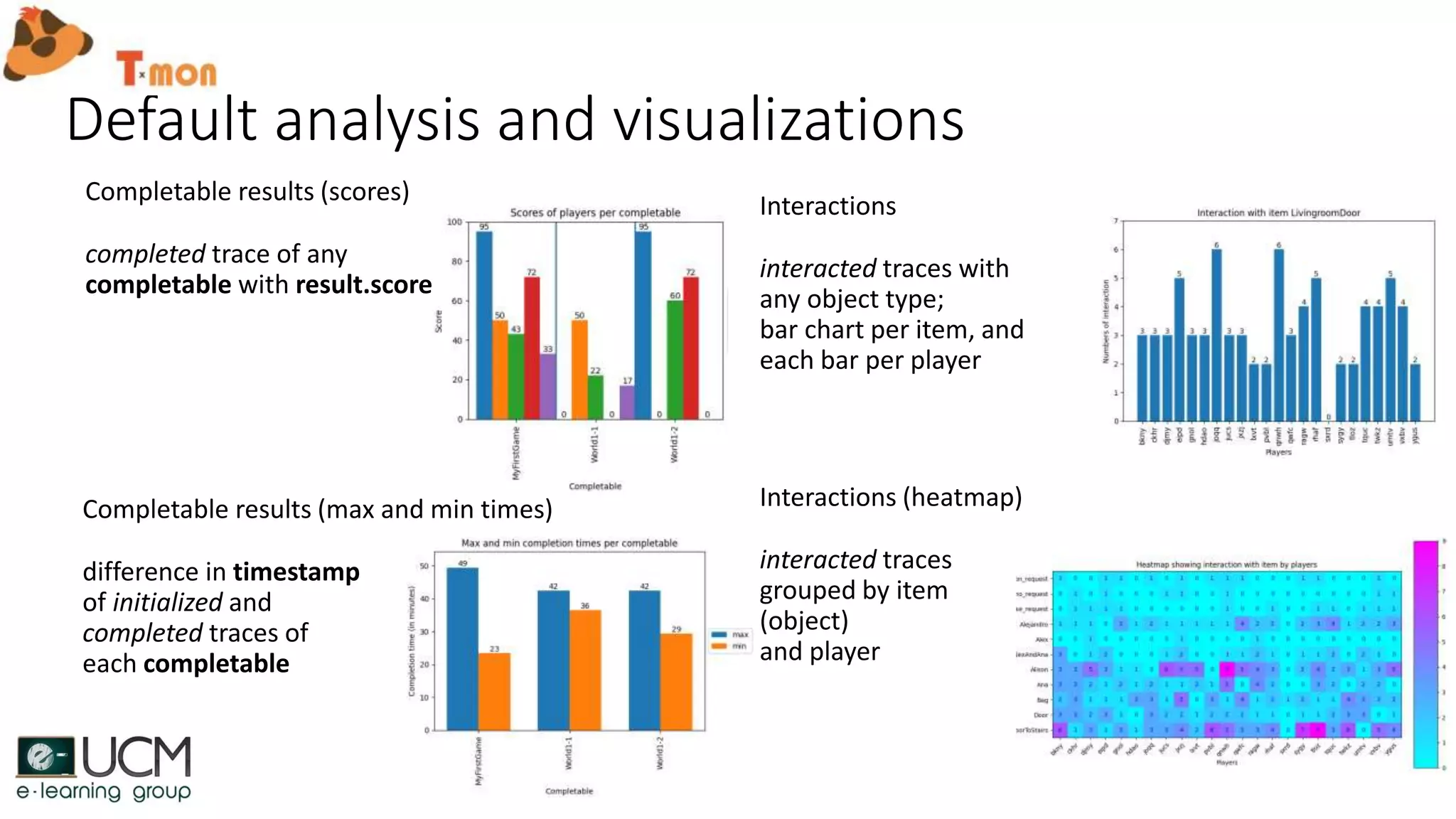

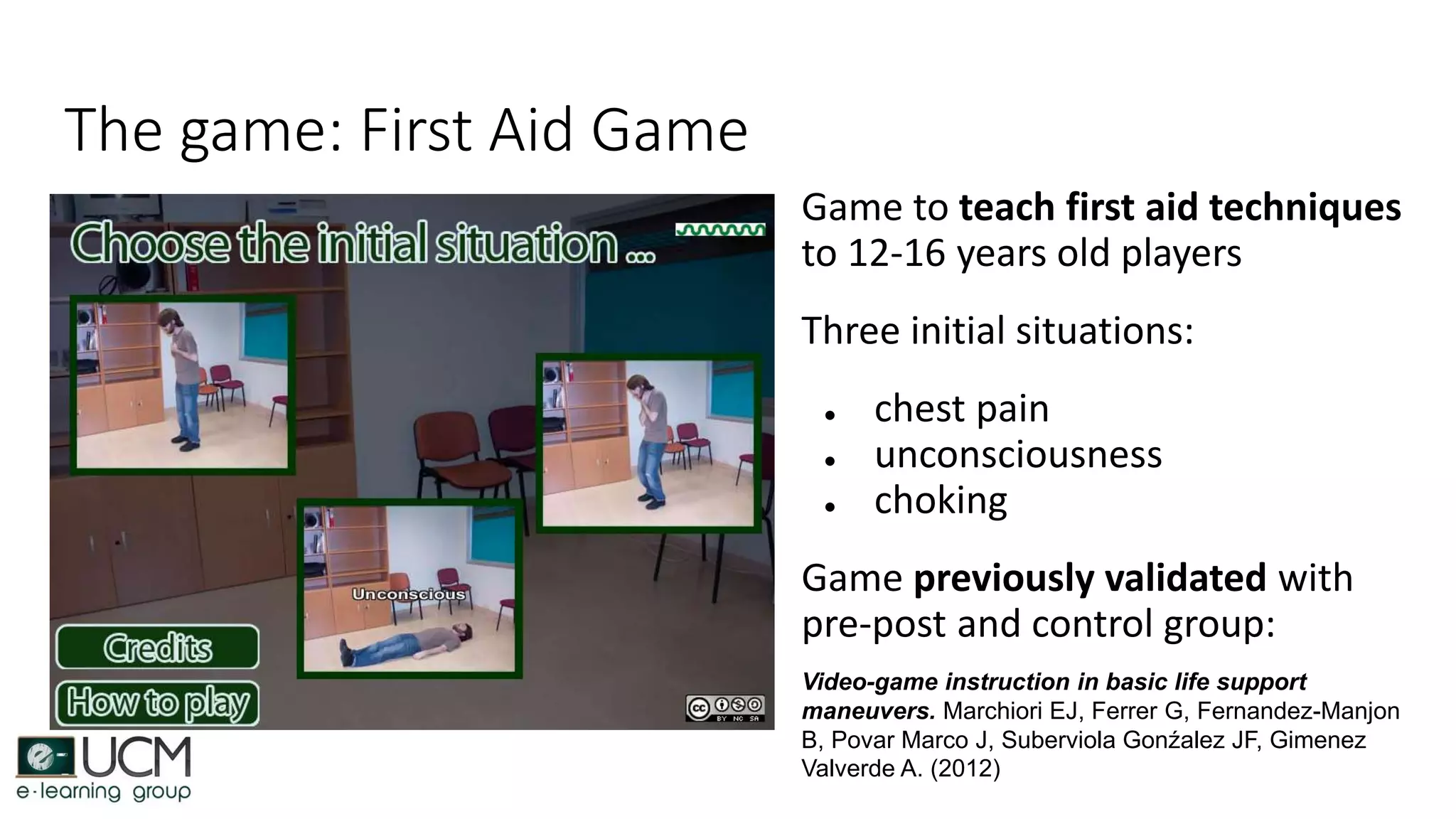

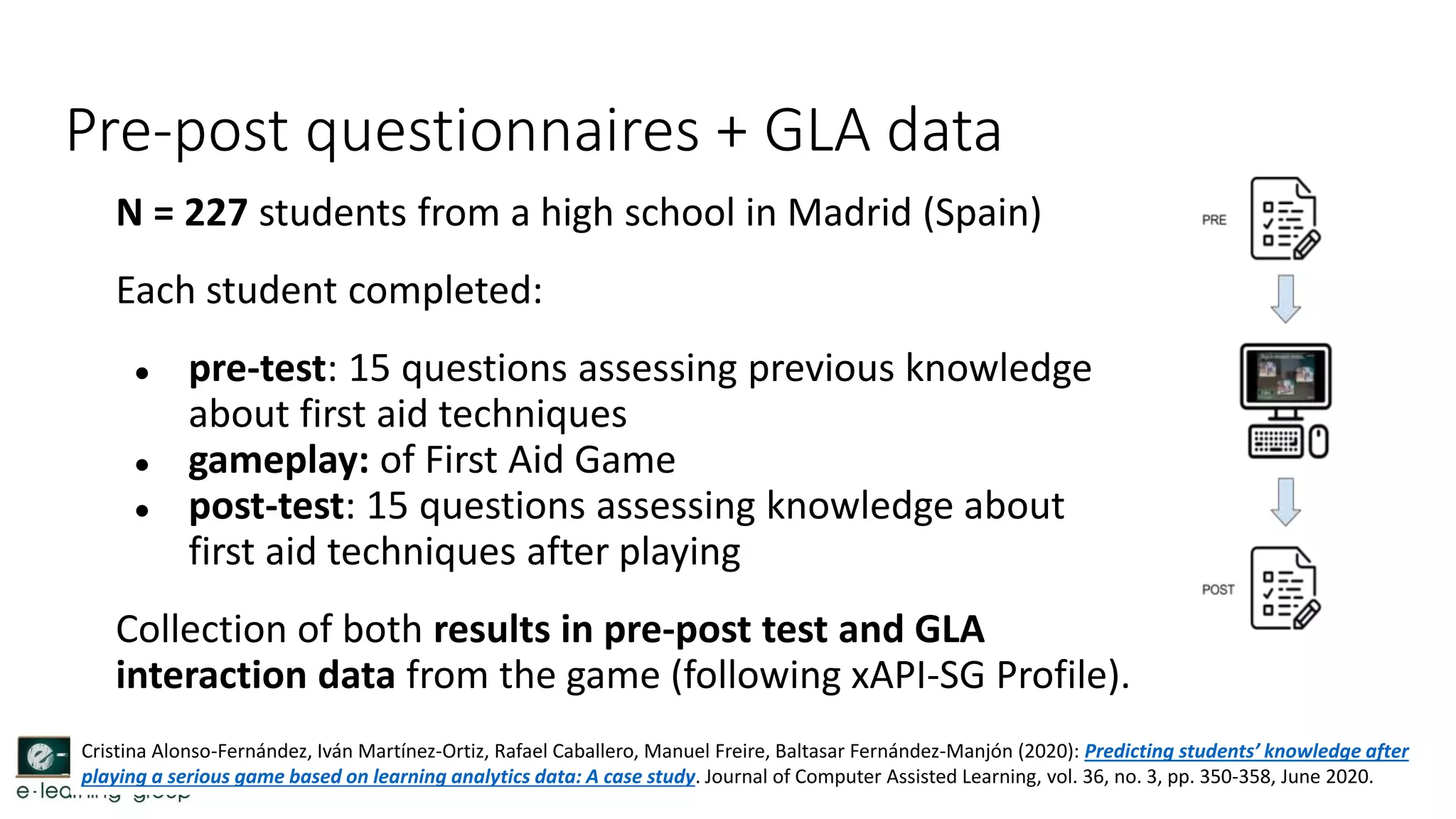

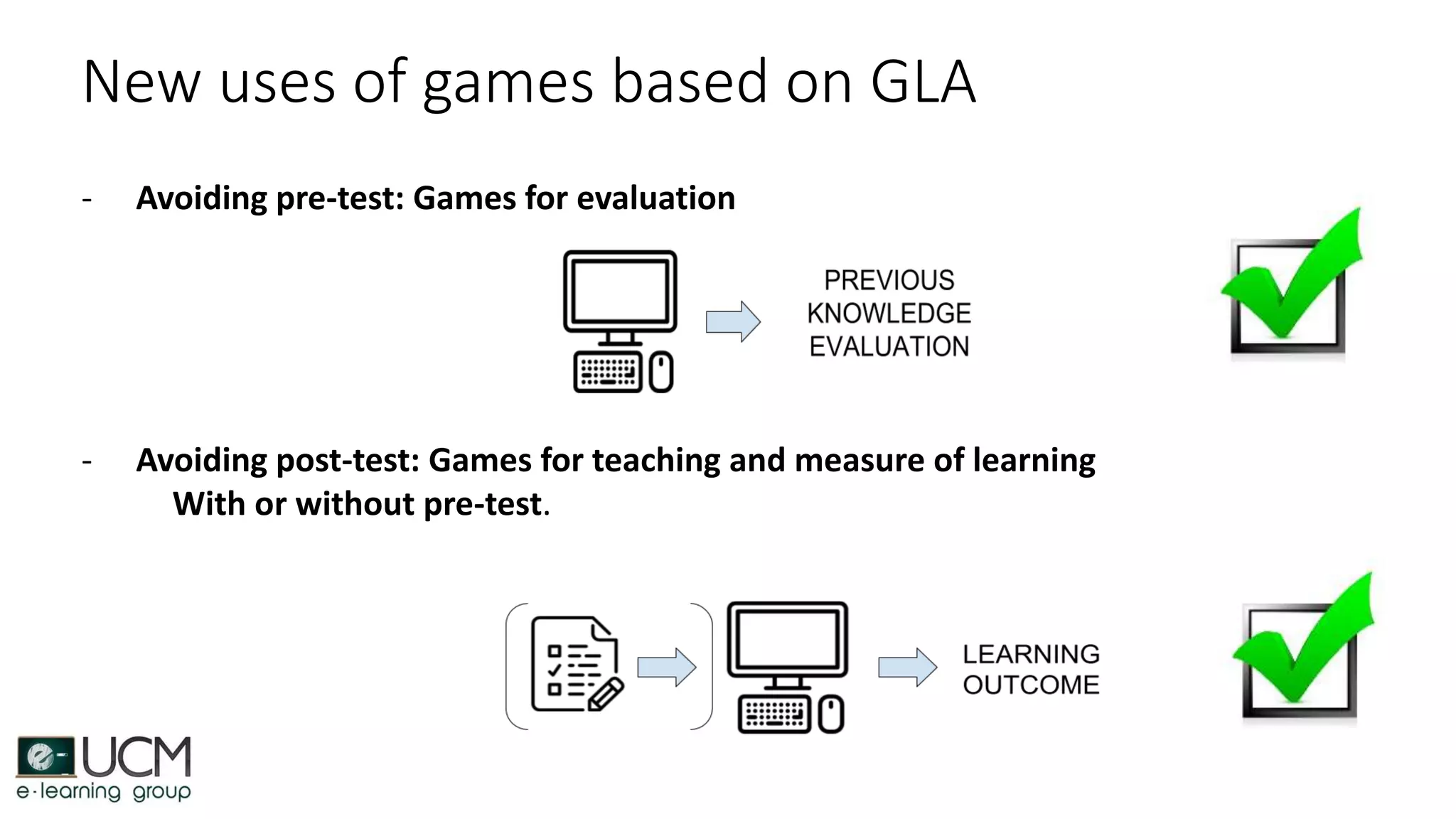

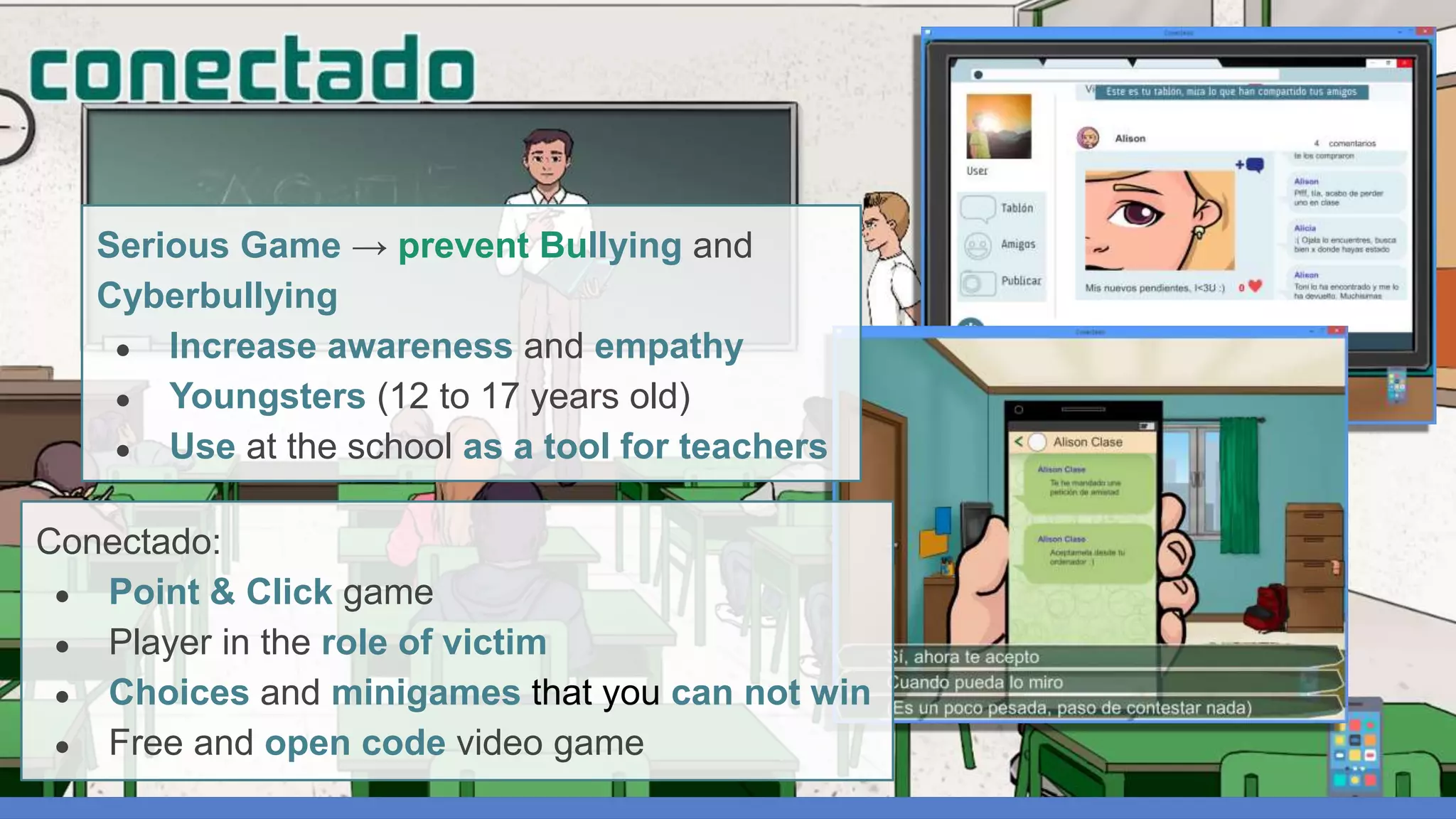

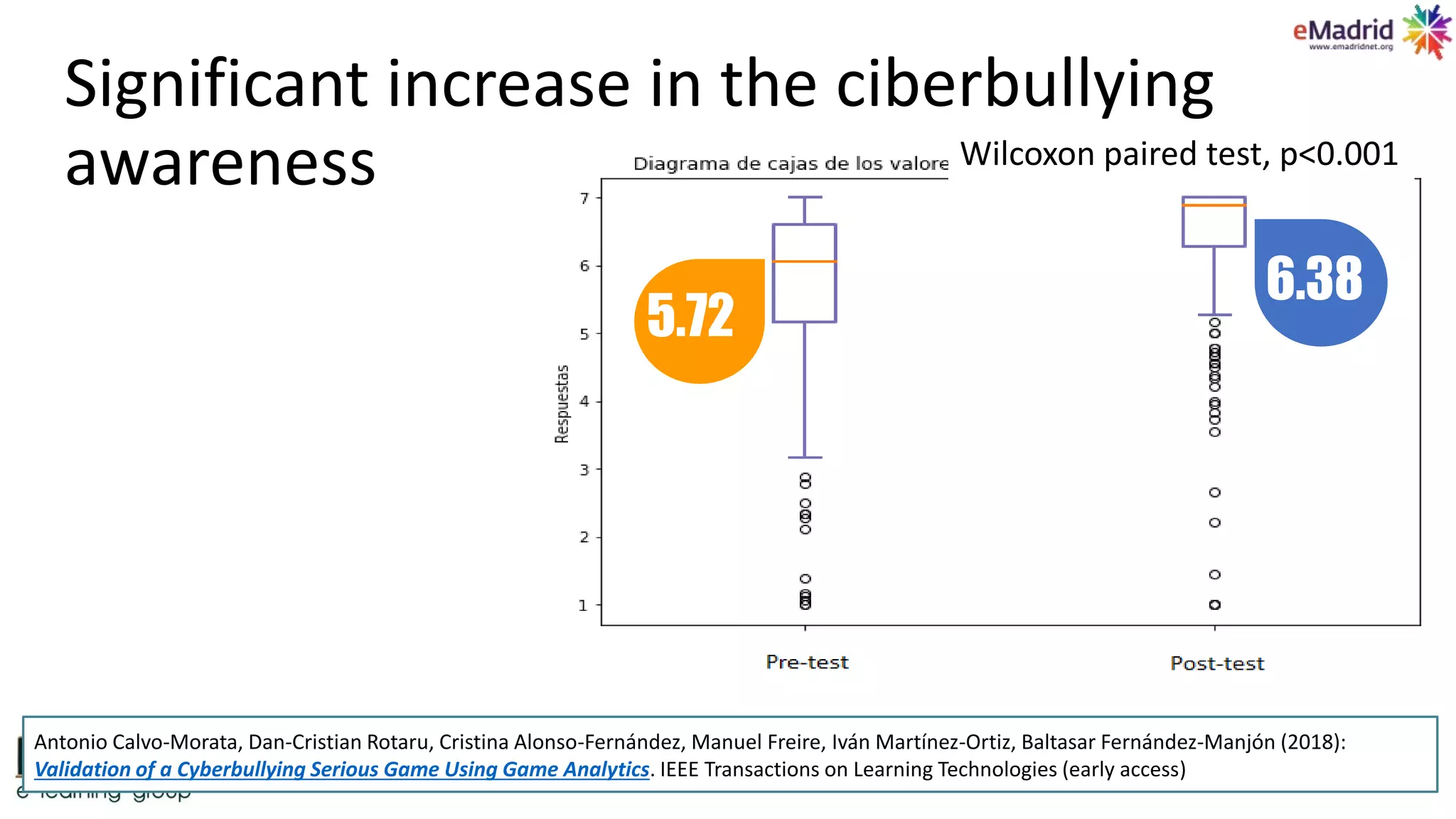

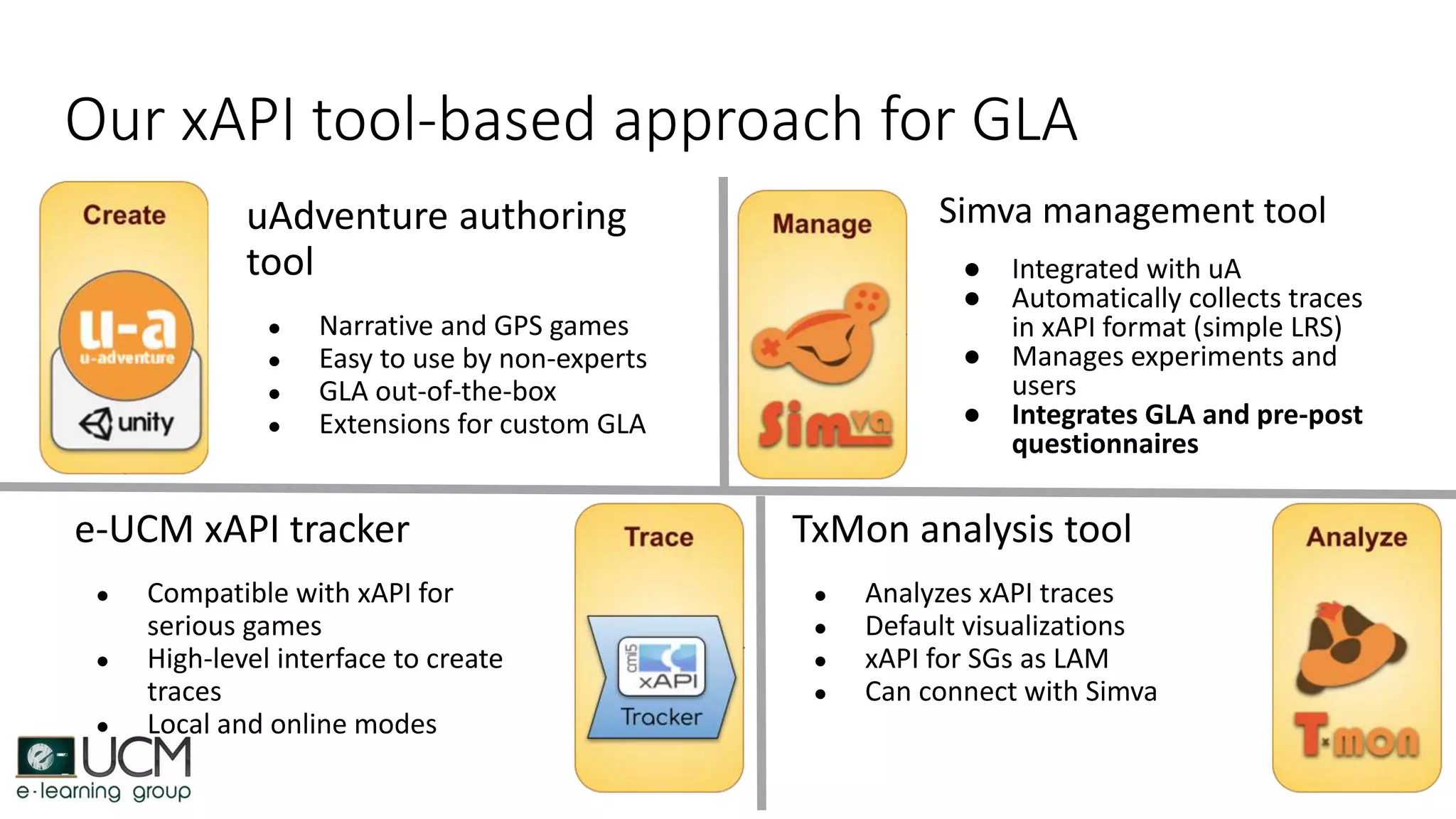

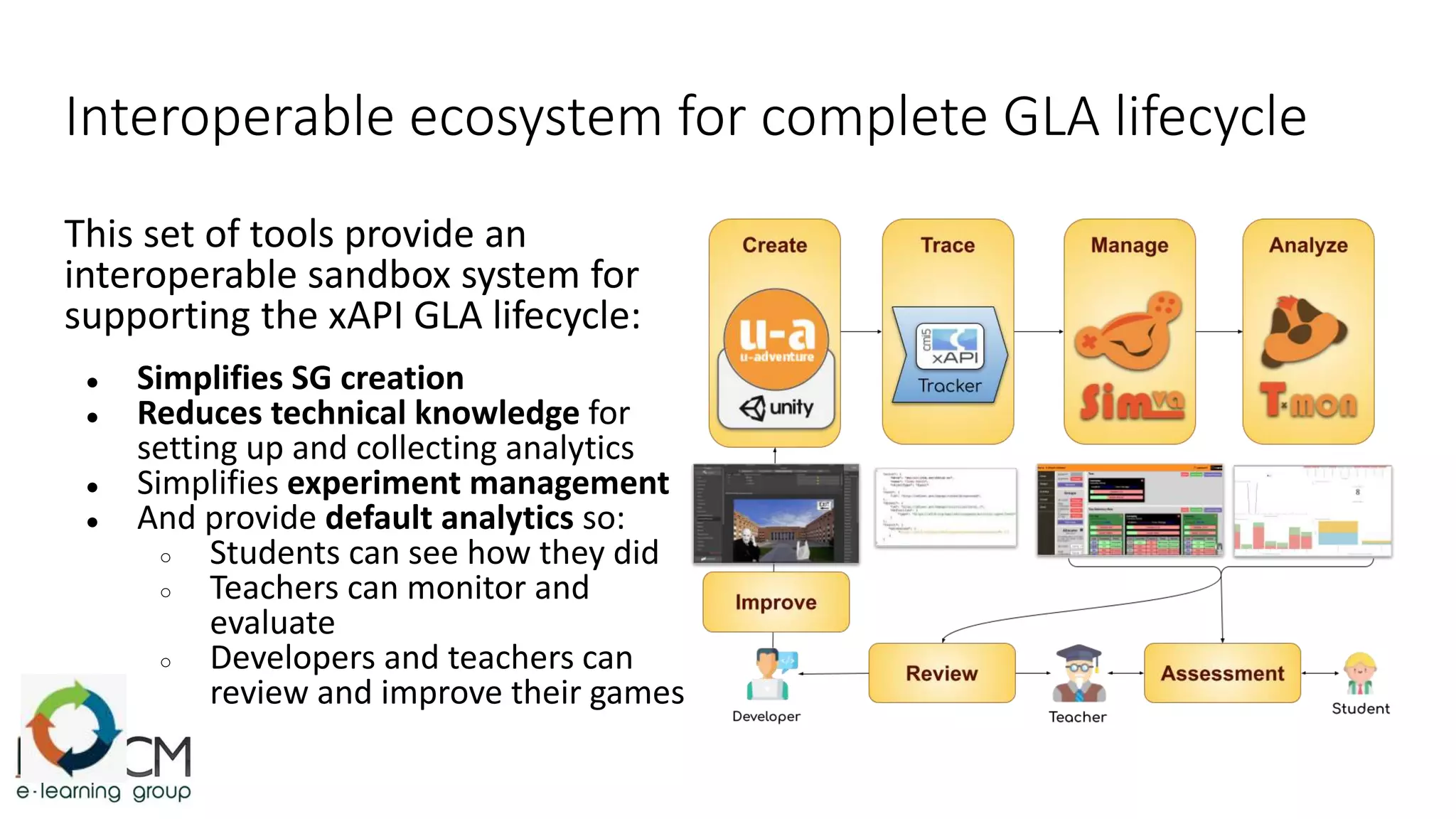

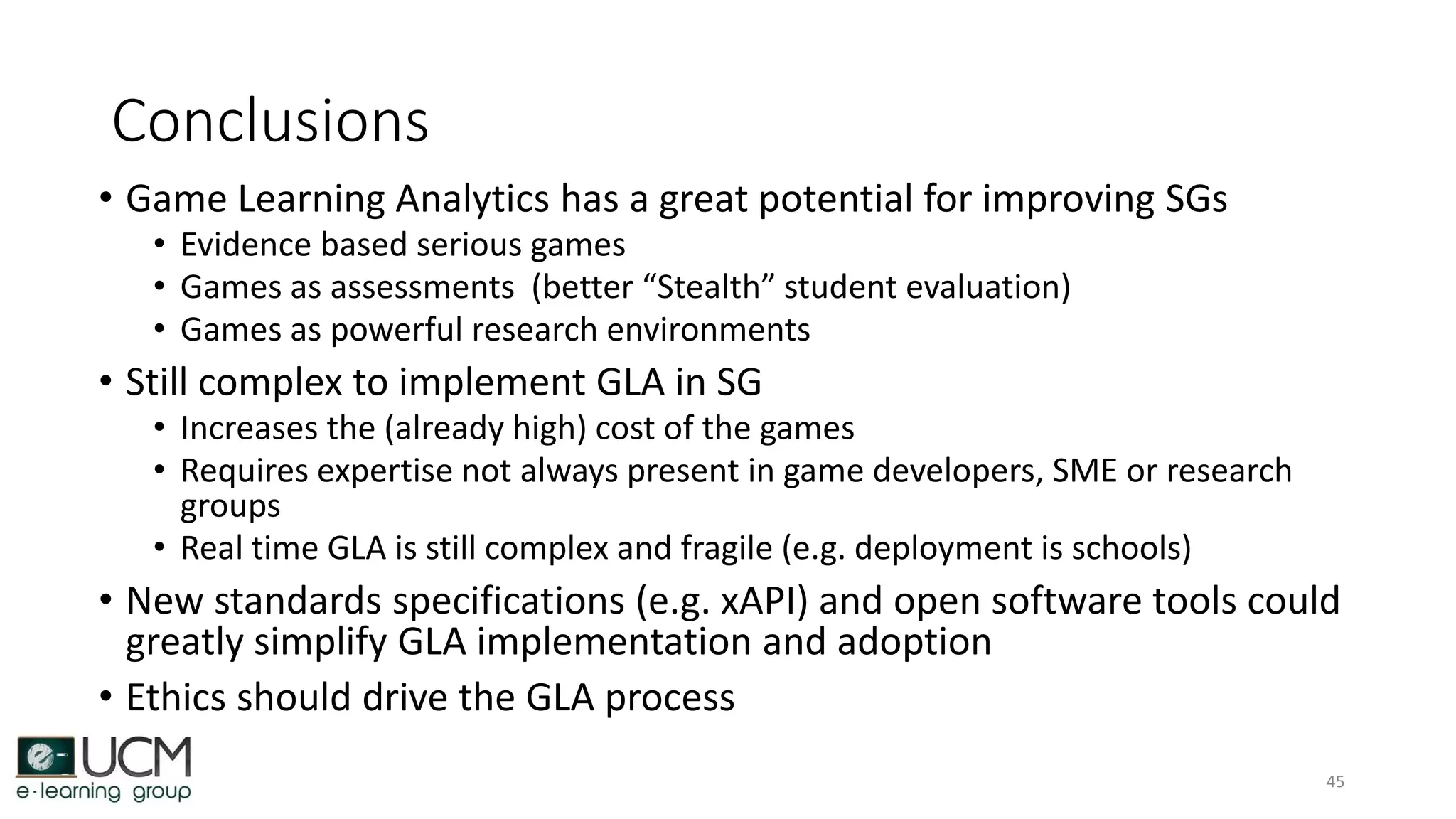

The document discusses integrating game learning analytics (GLA) into serious games to increase their efficacy and improve educational outcomes. It highlights current challenges, such as limited validation with users and the complexity of applying GLA systematically, particularly in mainstream education. The Experience API for Serious Games profile (xAPI-SG) aims to standardize how game interaction data is collected and analyzed, giving clearer insight into student engagement and learning outcomes.
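xAPI-SG builds on the xAPI statement model, where each tracked event records an actor, a verb, and an object, optionally with a result. The sketch below shows what standardized collection can look like in practice. It builds minimal xAPI-style statements as plain dictionaries and derives one simple engagement metric from them. It is illustrative only. The verb IRIs are from the ADL vocabulary, not the xAPI-SG-specific vocabulary. The game IRI and the helper names `make_statement` and `completion_rate` are hypothetical. The metric is an example analysis and is not defined by the profile.

```python
import json
from datetime import datetime, timezone

# Verb IRIs from the ADL xAPI vocabulary (not the xAPI-SG-specific verbs).
VERB_INITIALIZED = "http://adlnet.gov/expapi/verbs/initialized"
VERB_COMPLETED = "http://adlnet.gov/expapi/verbs/completed"


def make_statement(player_email, verb_iri, verb_name, activity_id, activity_name, result=None):
    """Build a minimal xAPI-style statement (actor, verb, object, optional result) as a dict.

    Hypothetical helper for illustration; a real tracker would also add
    statement IDs, context, and profile-specific activity types.
    """
    statement = {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{player_email}"},
        "verb": {"id": verb_iri, "display": {"en-US": verb_name}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    if result is not None:
        statement["result"] = result
    return statement


def completion_rate(statements, activity_id):
    """Hypothetical metric: share of players who initialized the activity and also completed it."""
    started, finished = set(), set()
    for s in statements:
        if s["object"]["id"] != activity_id:
            continue
        player = s["actor"]["mbox"]
        if s["verb"]["id"] == VERB_INITIALIZED:
            started.add(player)
        elif s["verb"]["id"] == VERB_COMPLETED:
            finished.add(player)
    if not started:
        return 0.0
    return len(started & finished) / len(started)


# Usage with a hypothetical game activity IRI and two players.
GAME = "https://example.org/games/demo-game"
log = [
    make_statement("ana@example.org", VERB_INITIALIZED, "initialized", GAME, "Demo game"),
    make_statement("ana@example.org", VERB_COMPLETED, "completed", GAME, "Demo game",
                   result={"success": True, "score": {"scaled": 0.8}}),
    make_statement("ben@example.org", VERB_INITIALIZED, "initialized", GAME, "Demo game"),
]
print(json.dumps(log[1], indent=2))
print(completion_rate(log, GAME))  # 0.5: ana finished, ben did not
```

Because every event shares the same structure, the analysis code does not need to know which game produced the statements. This kind of interoperability is what a shared profile such as xAPI-SG is meant to provide.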