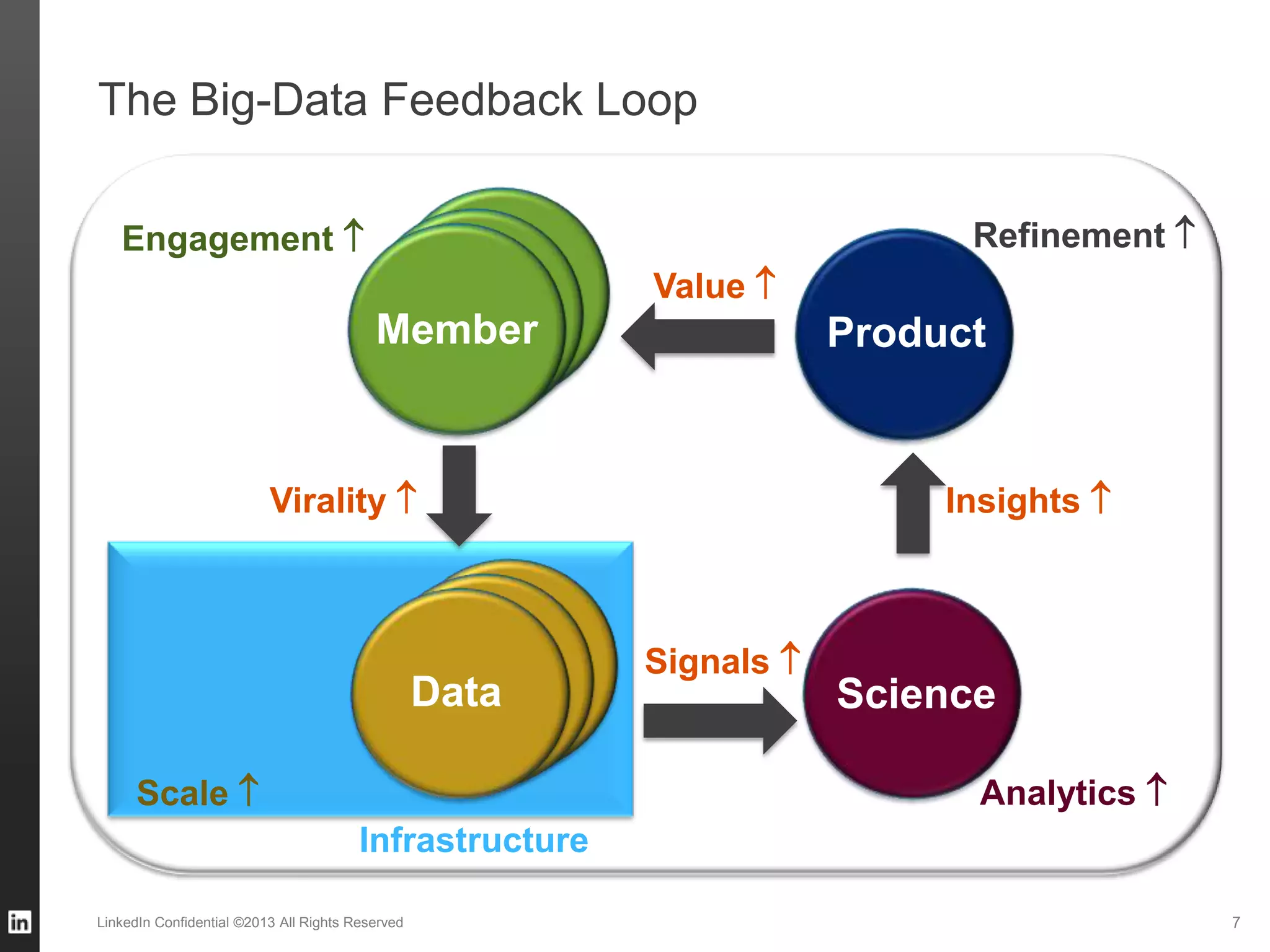

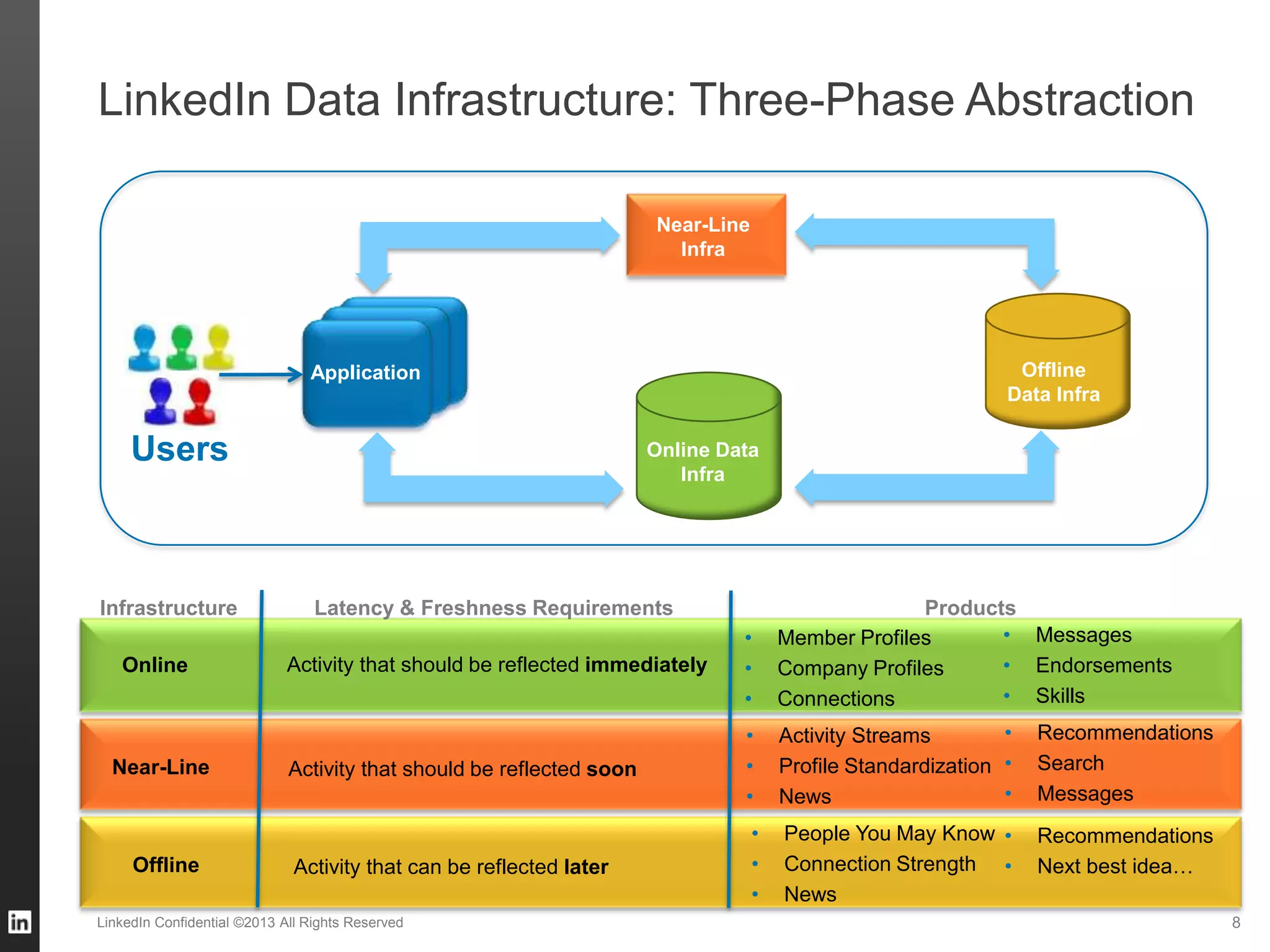

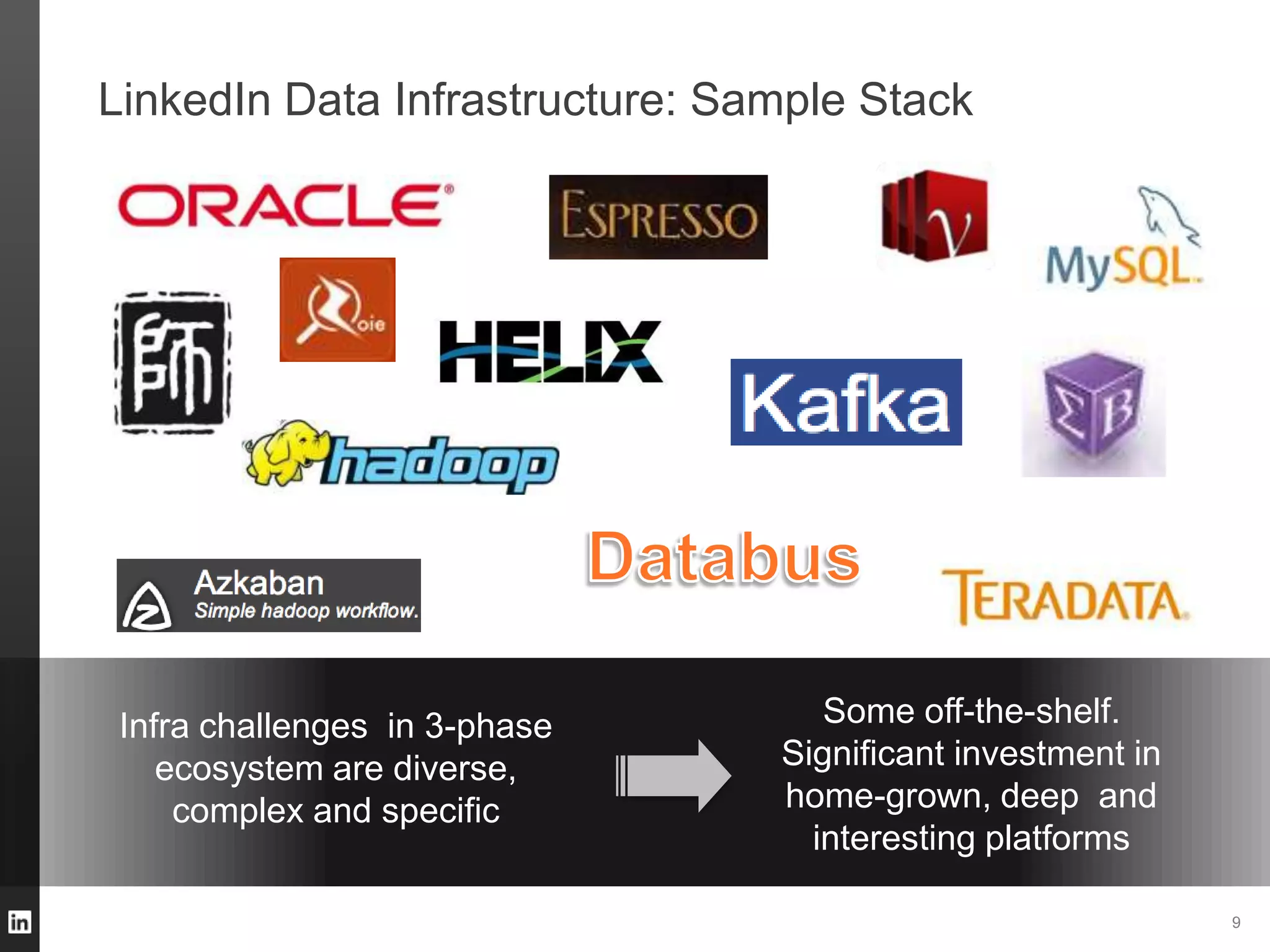

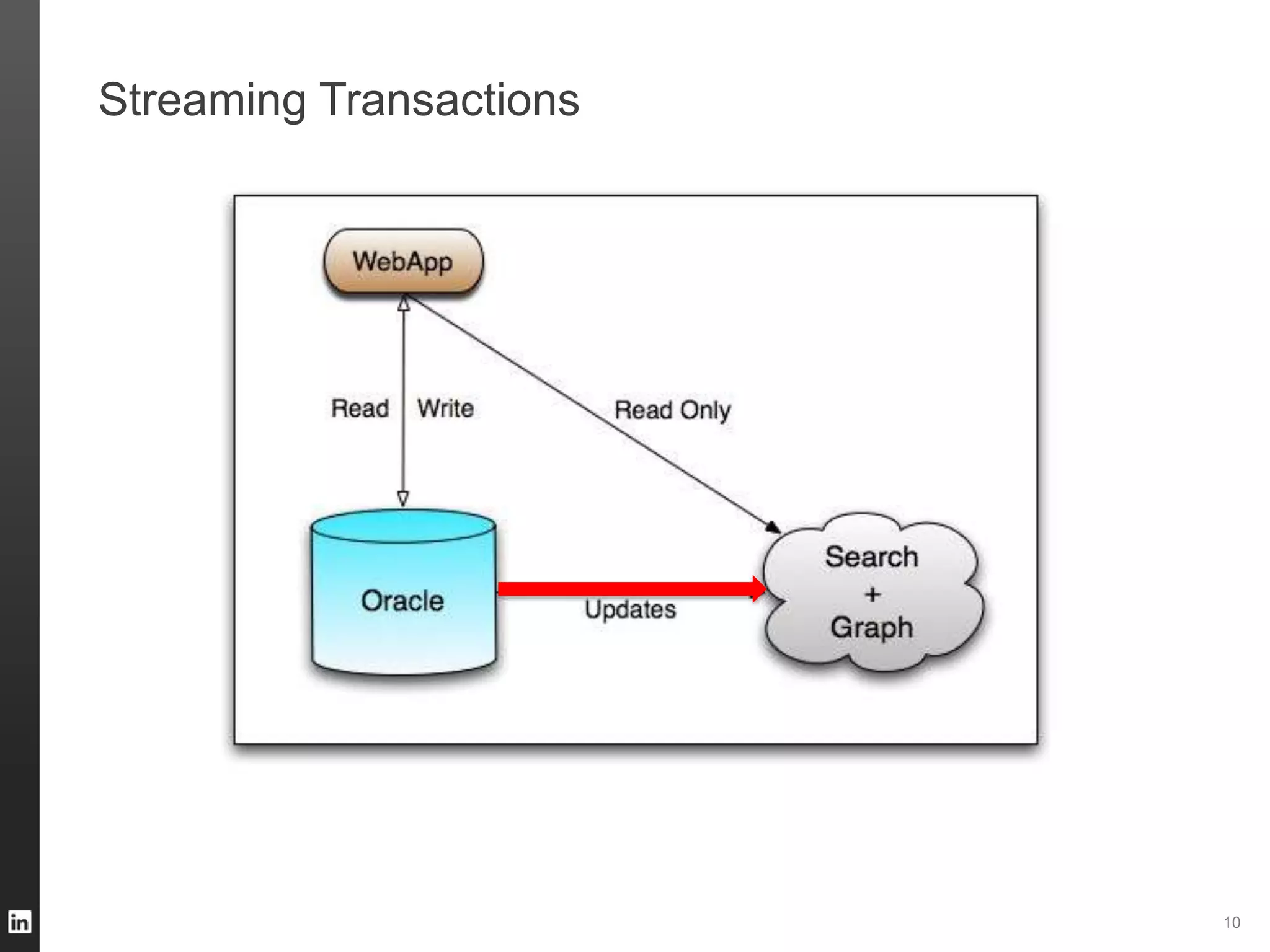

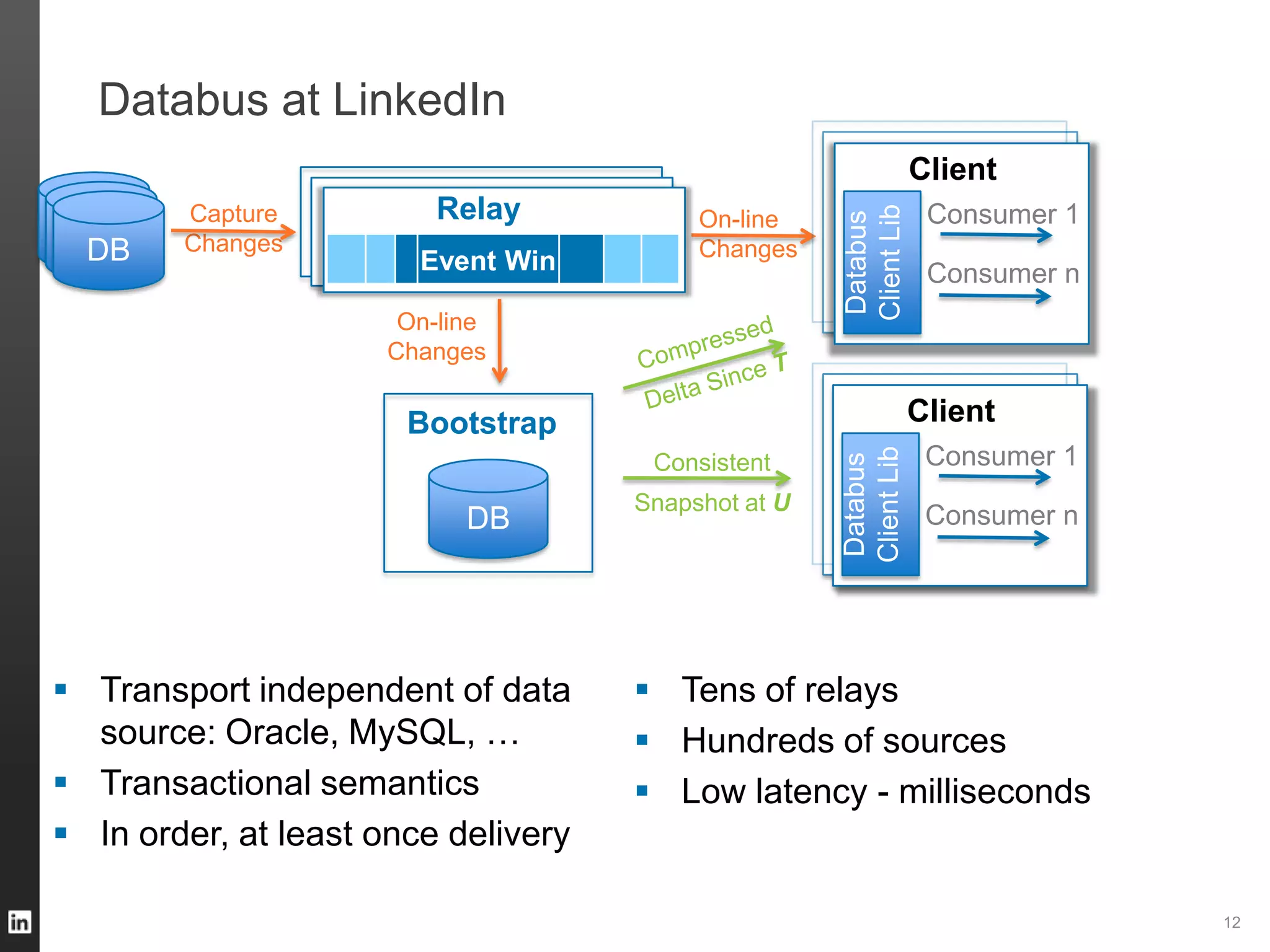

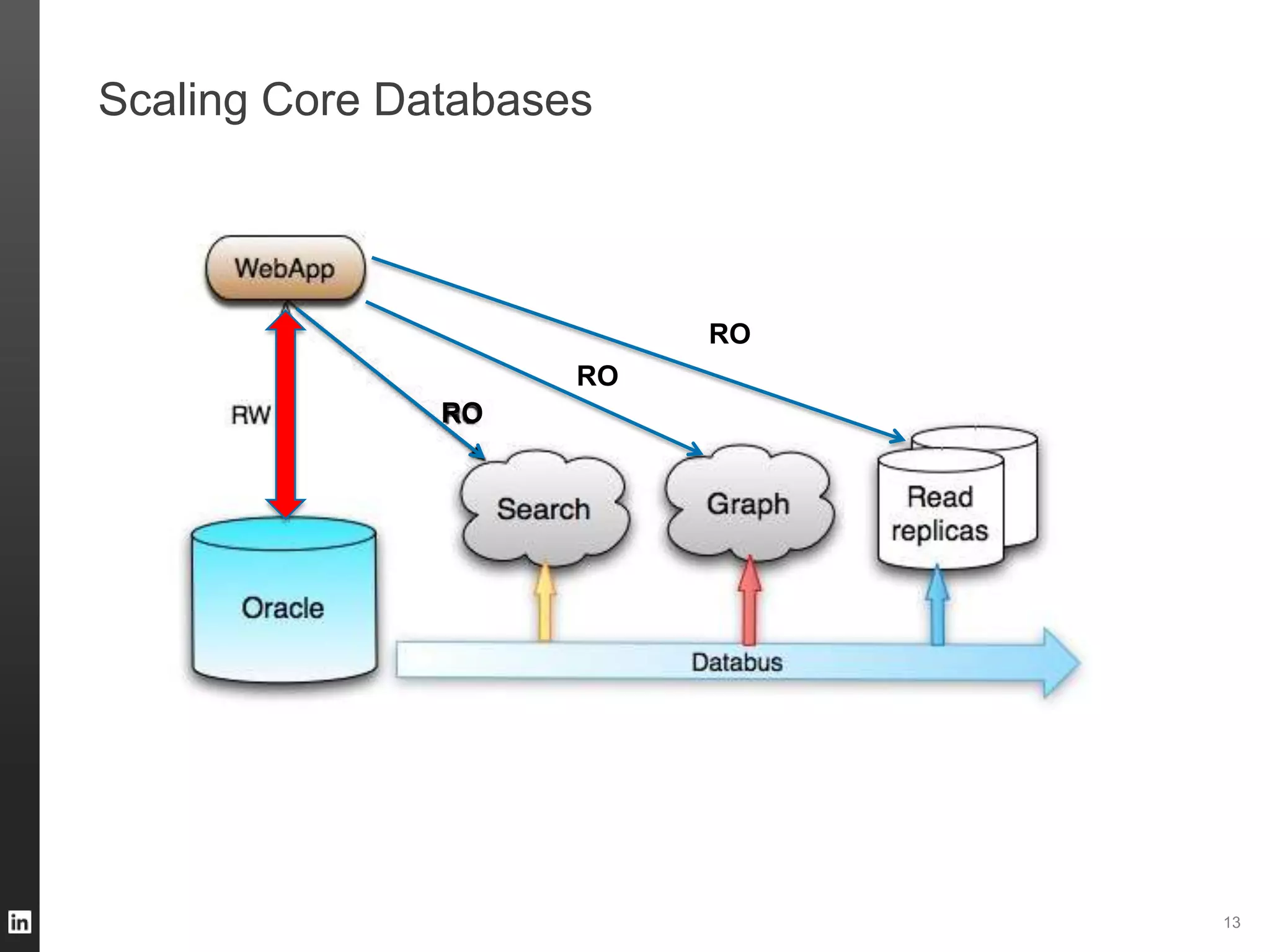

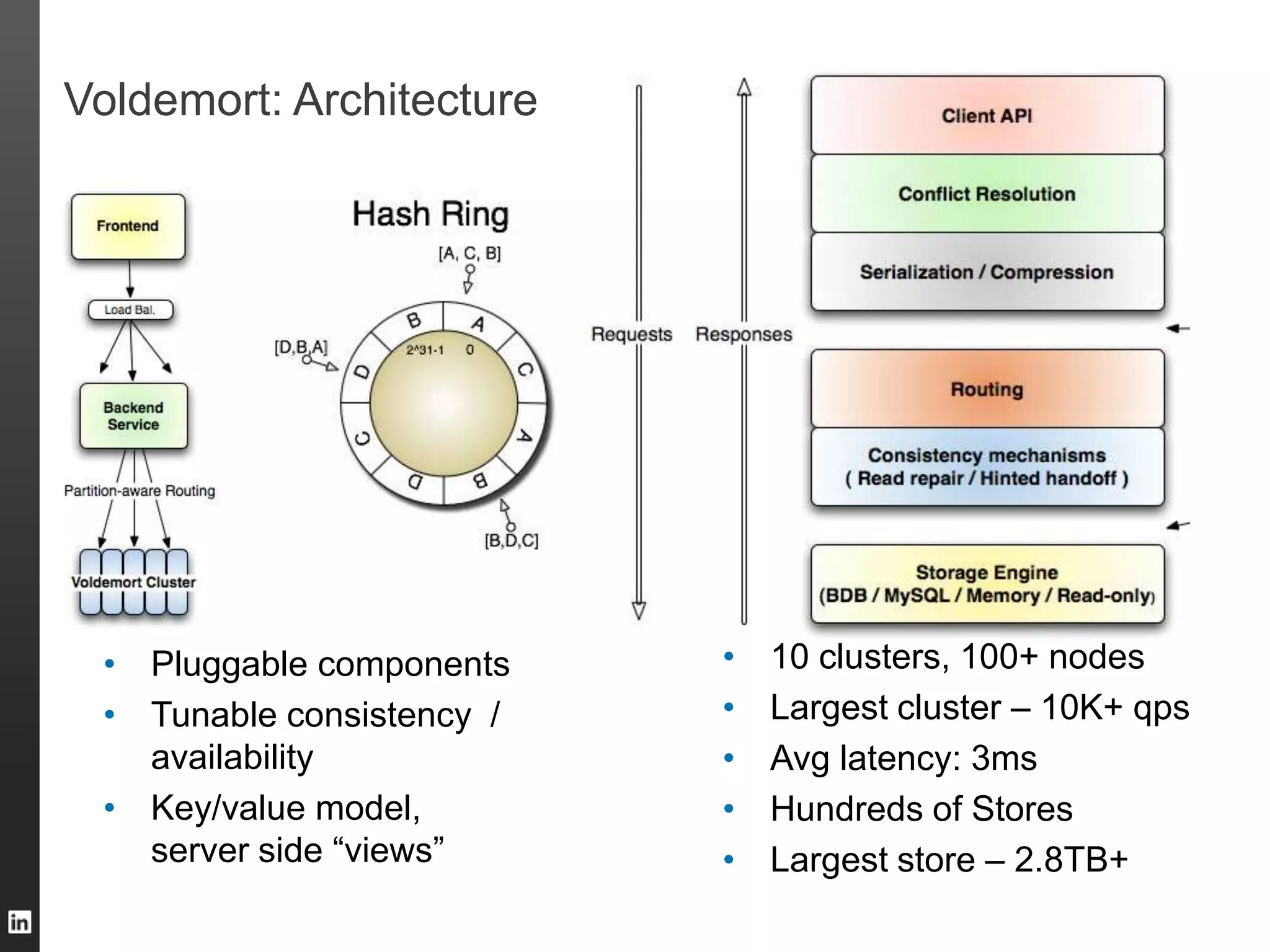

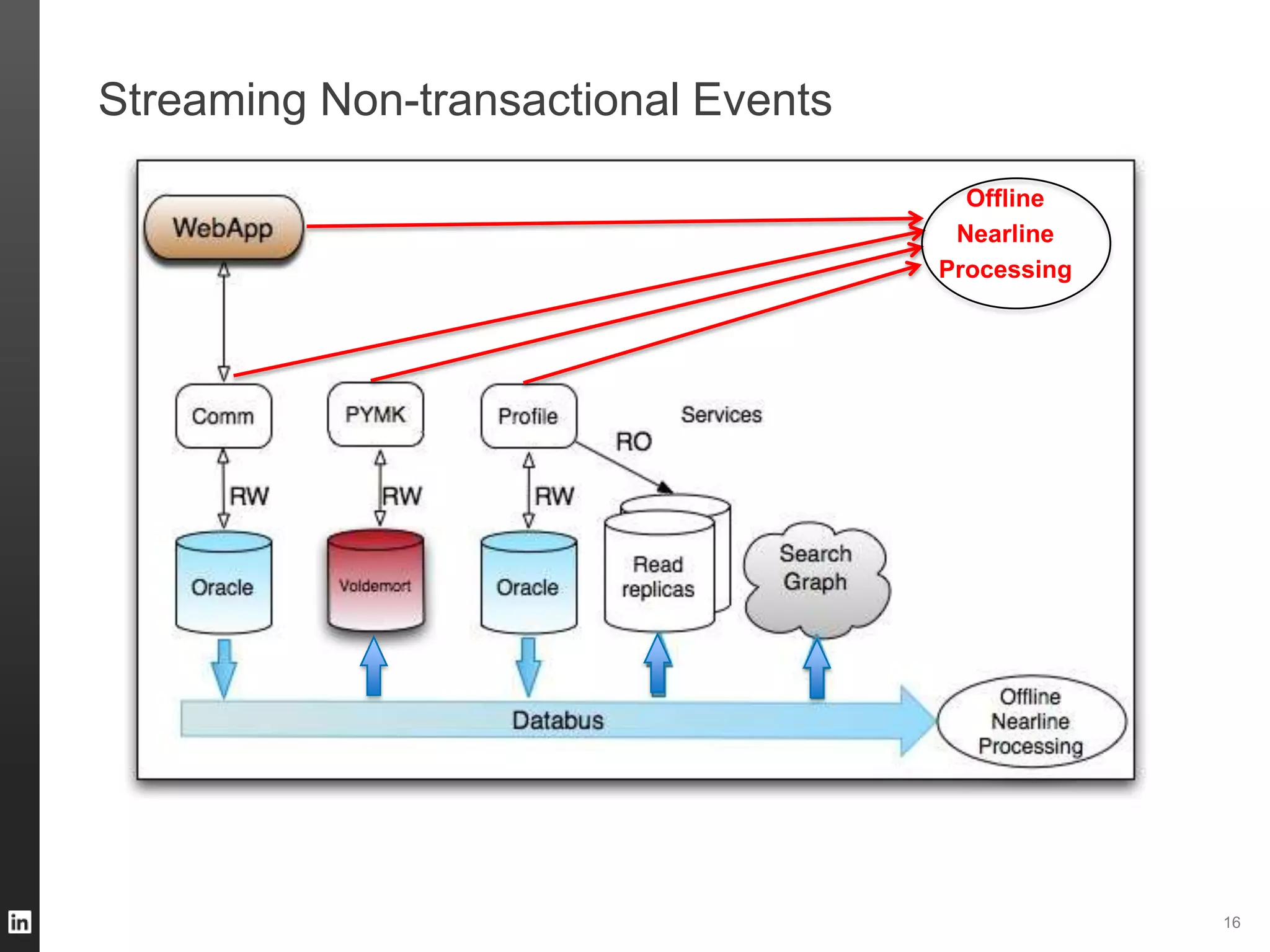

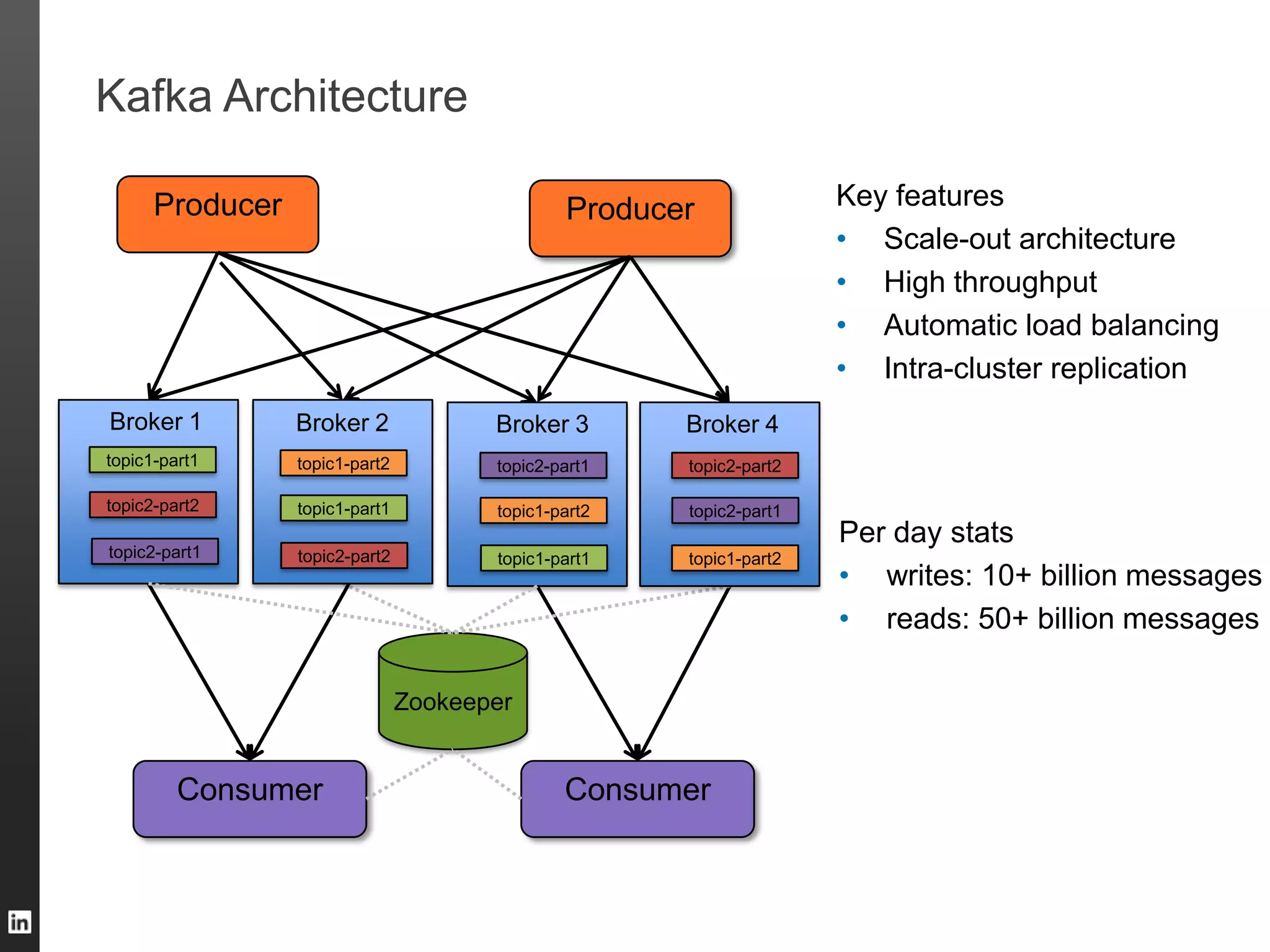

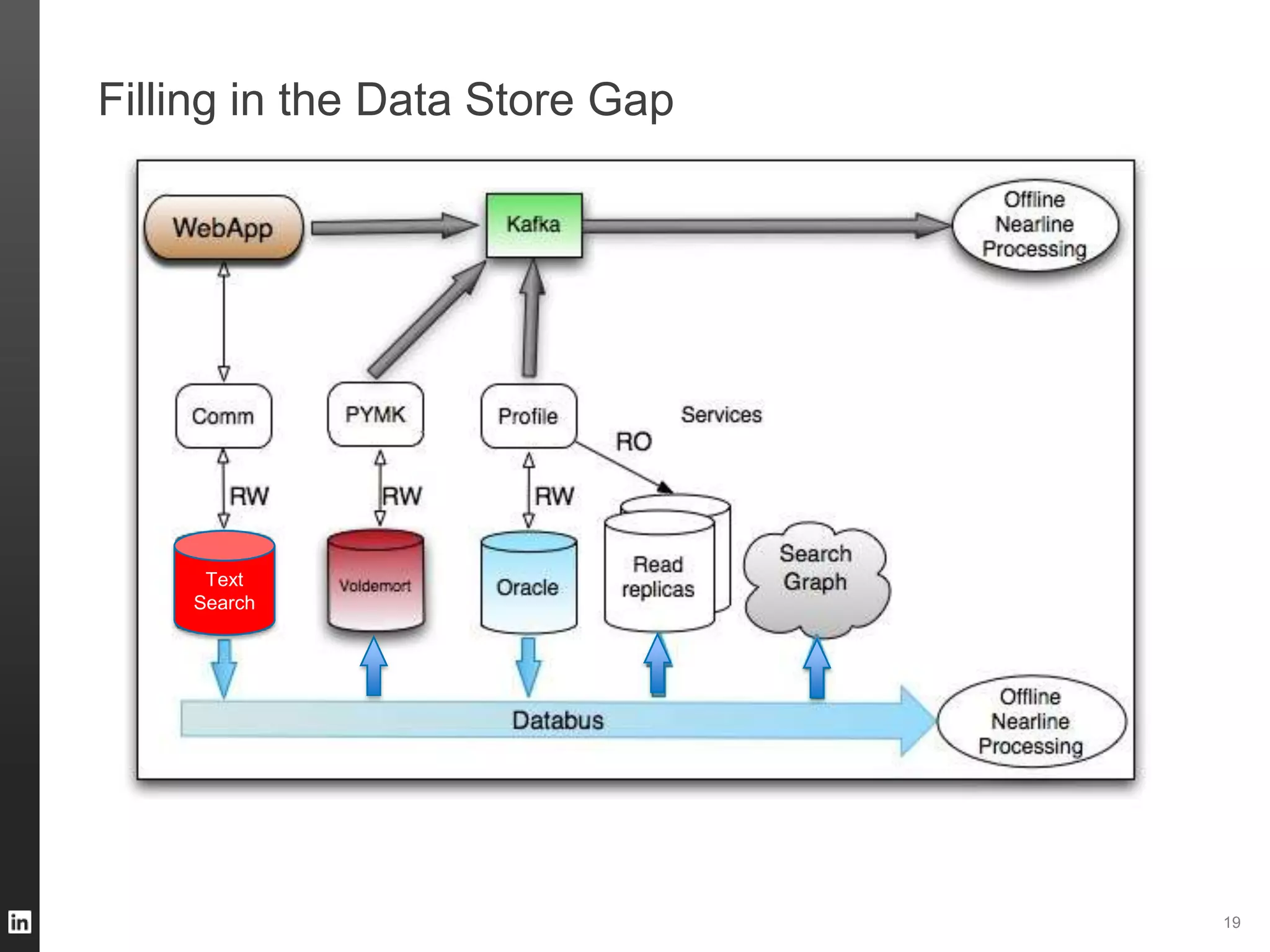

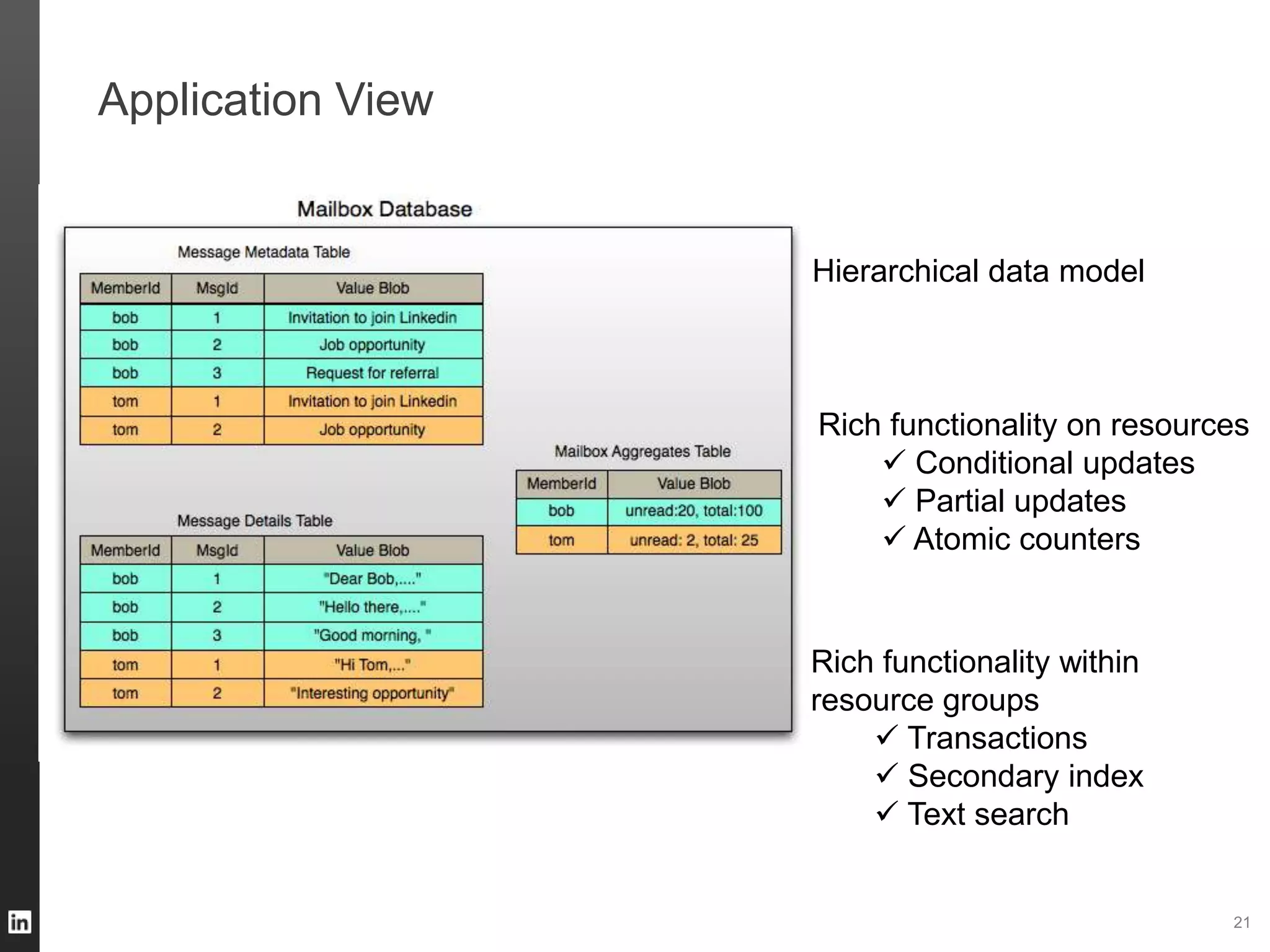

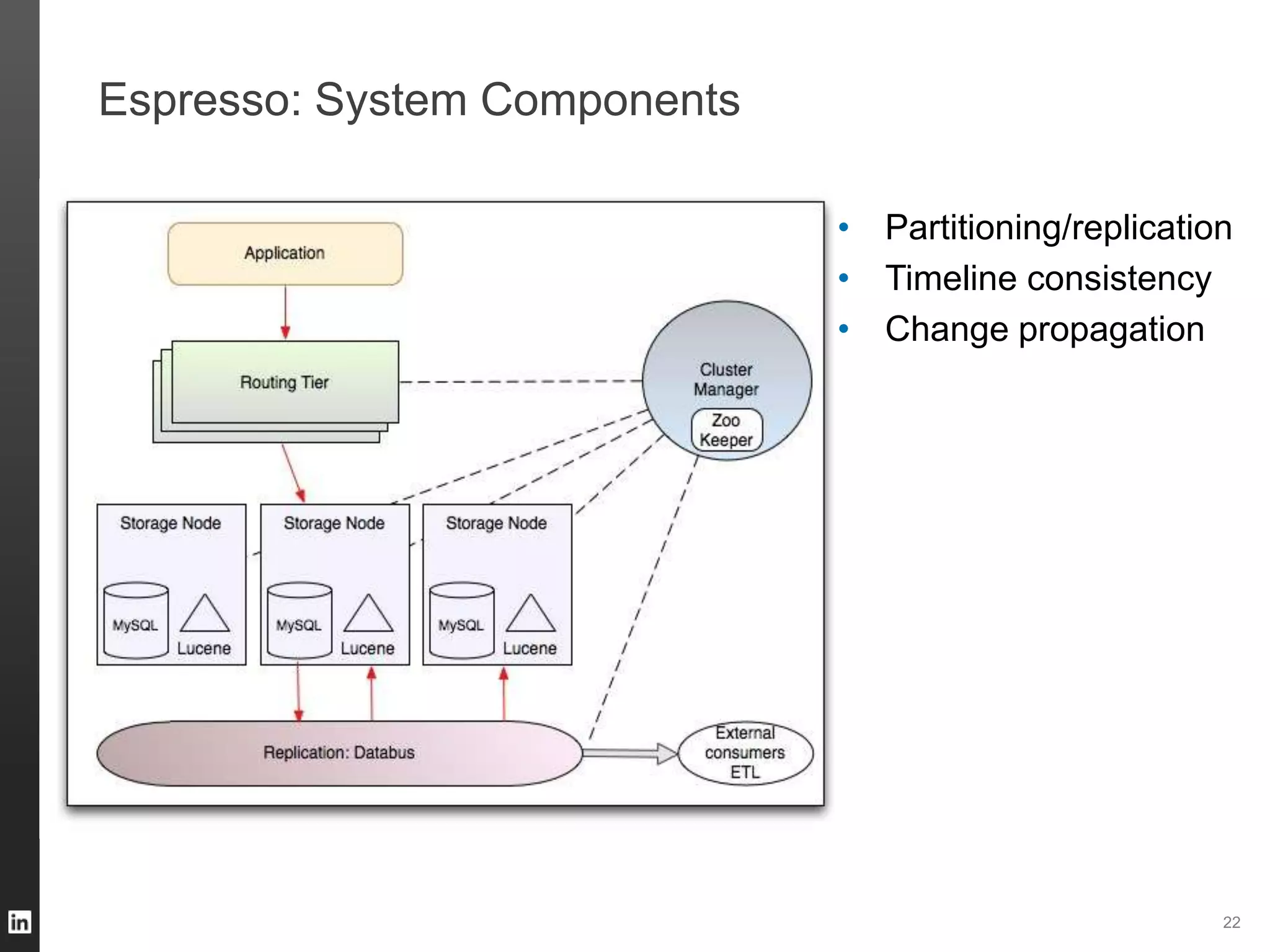

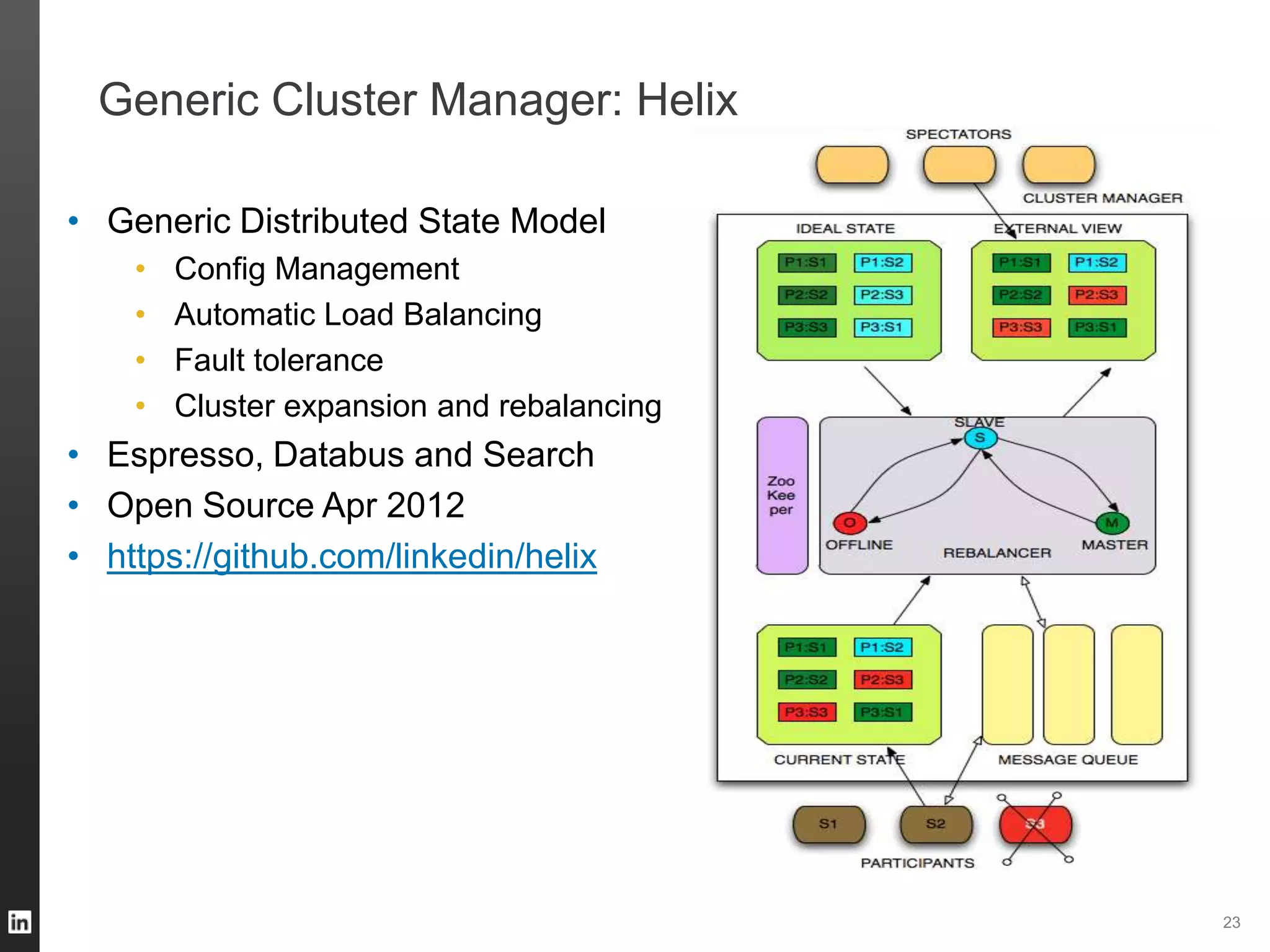

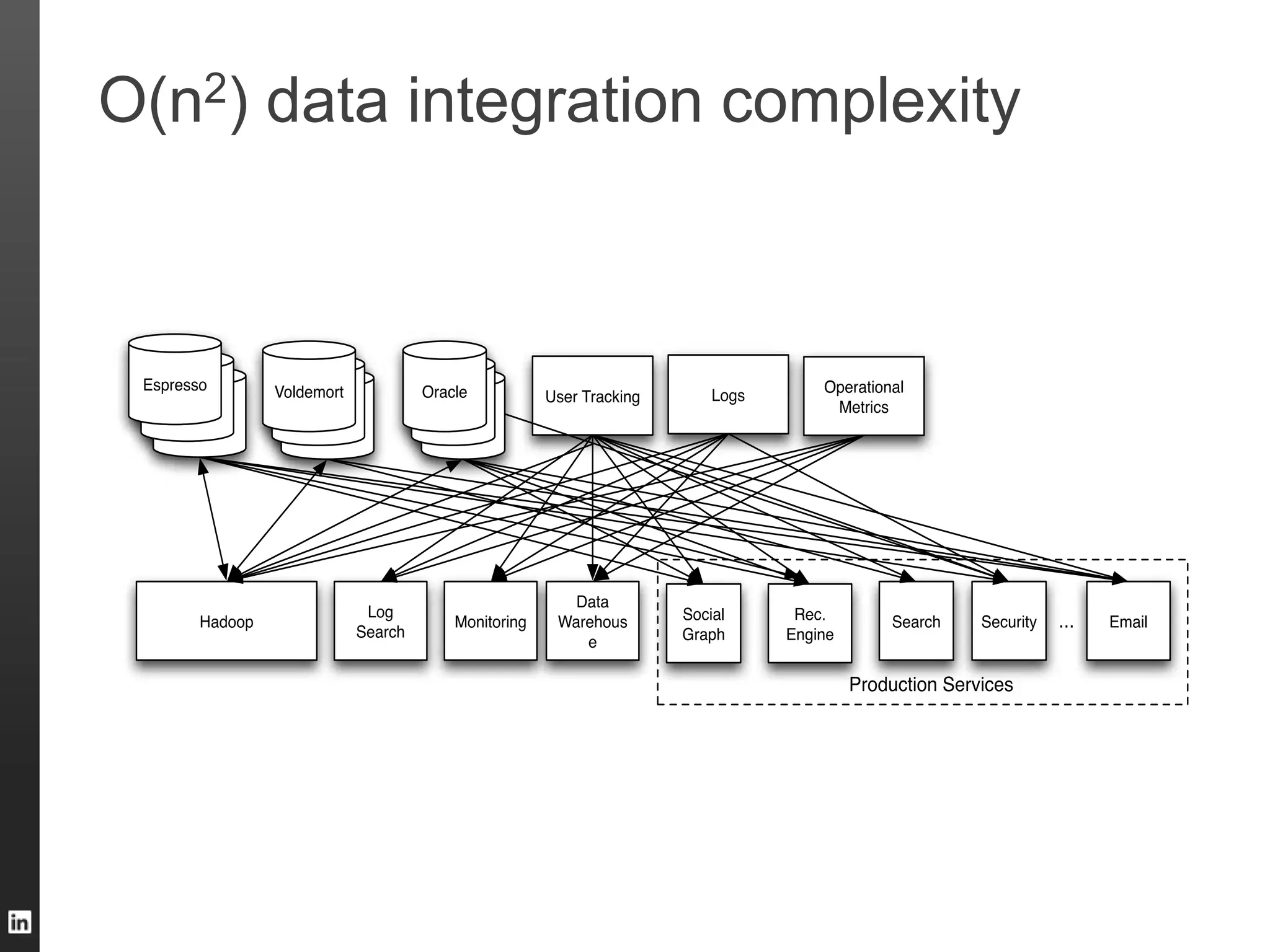

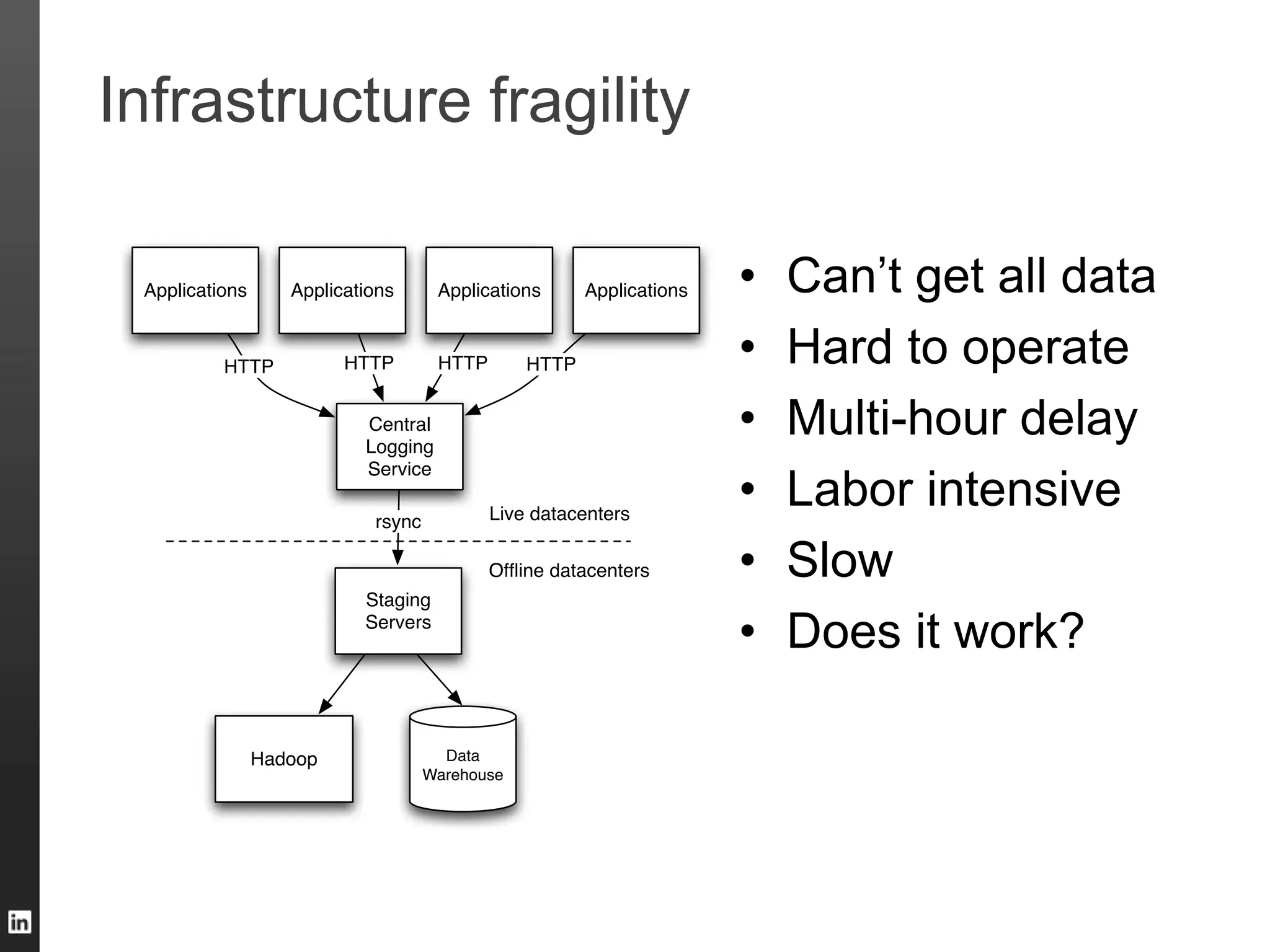

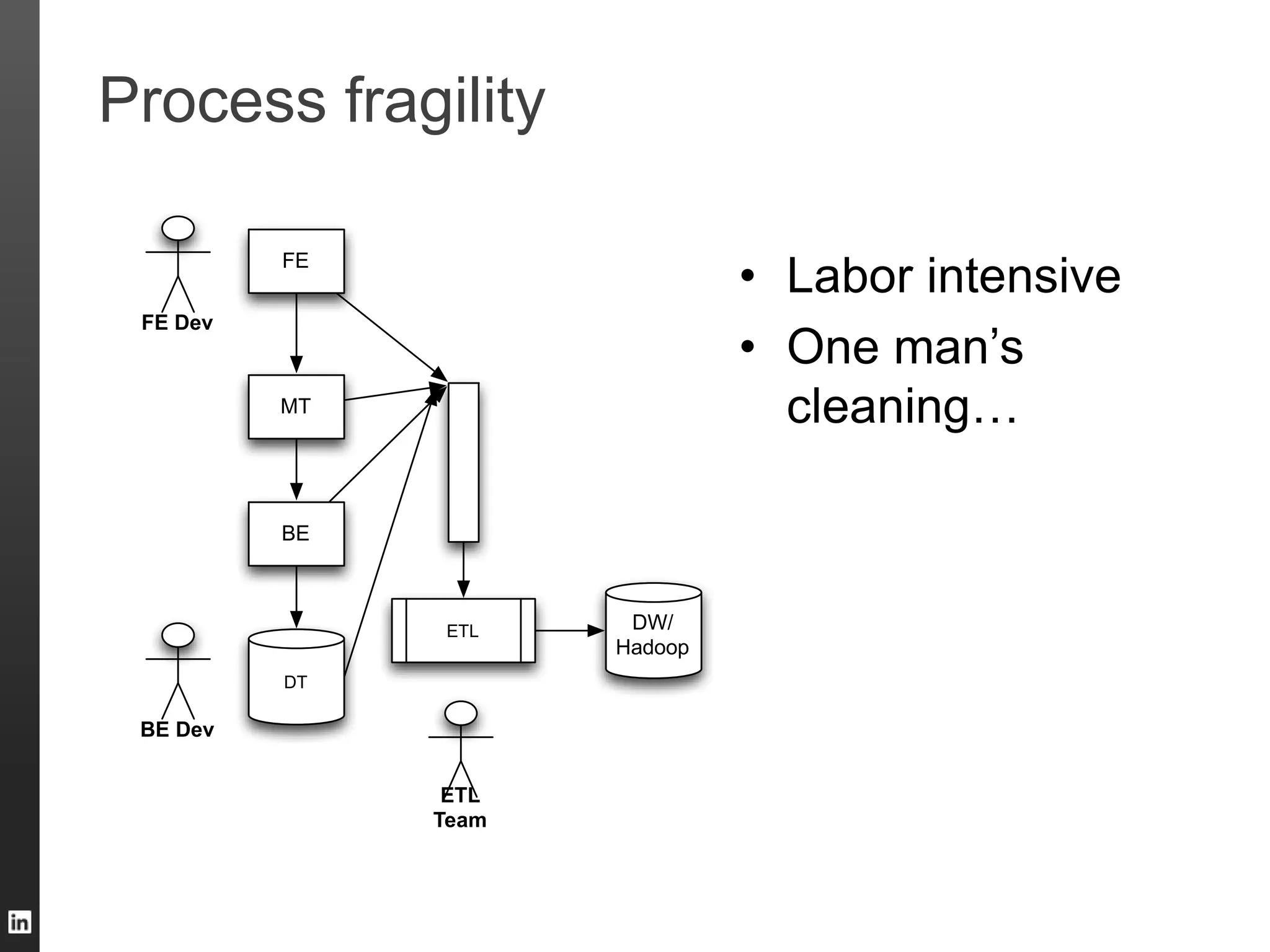

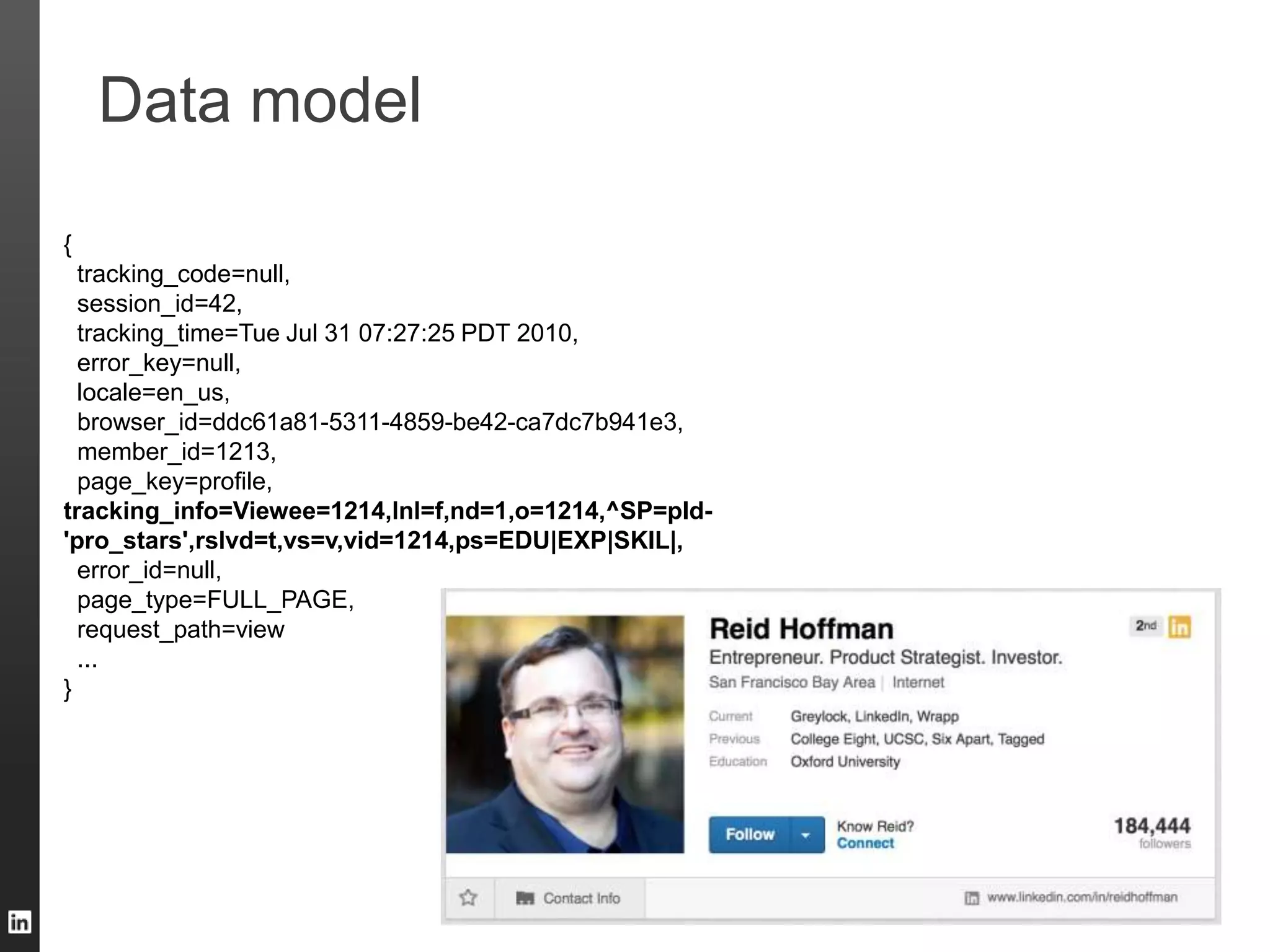

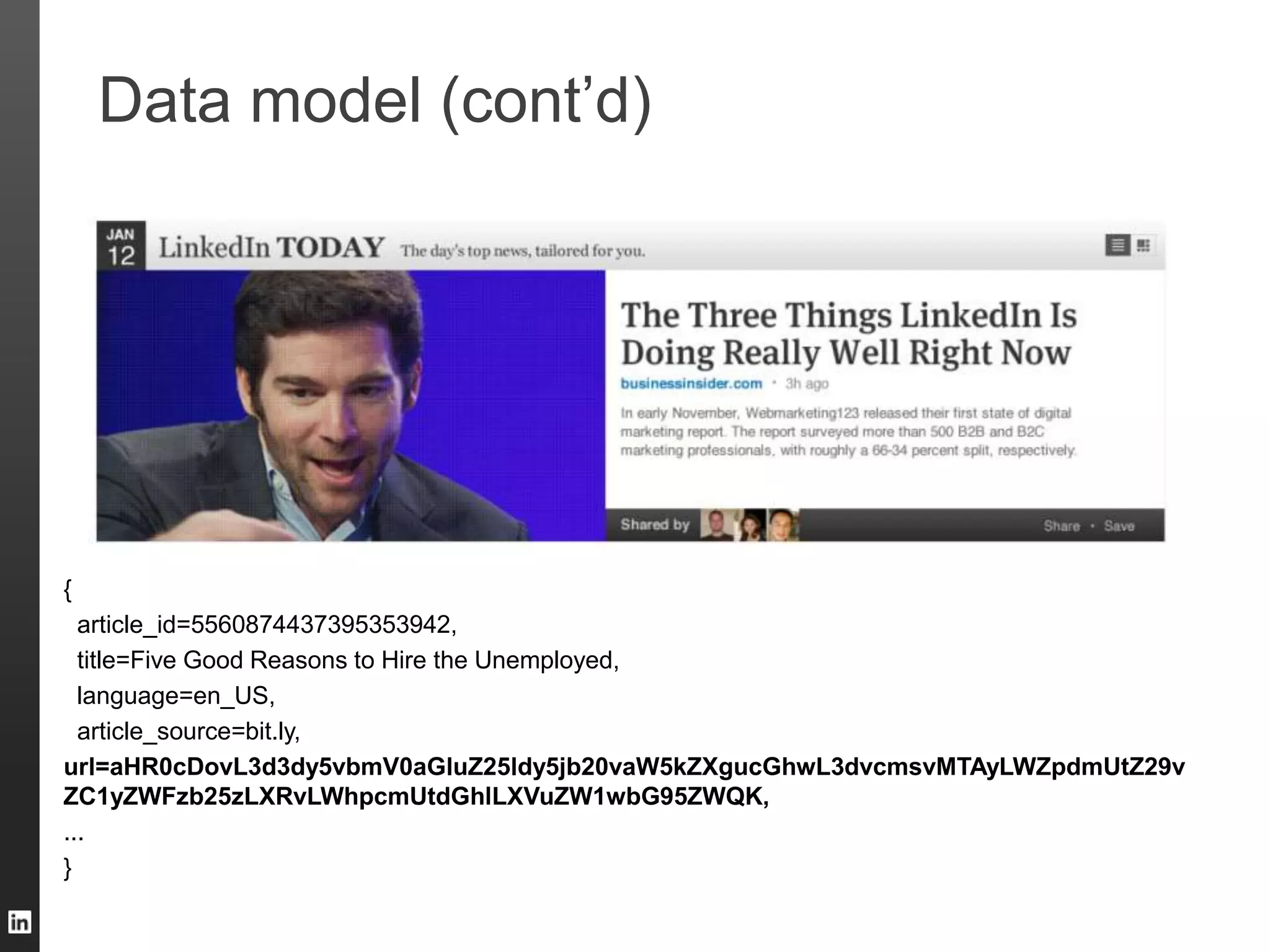

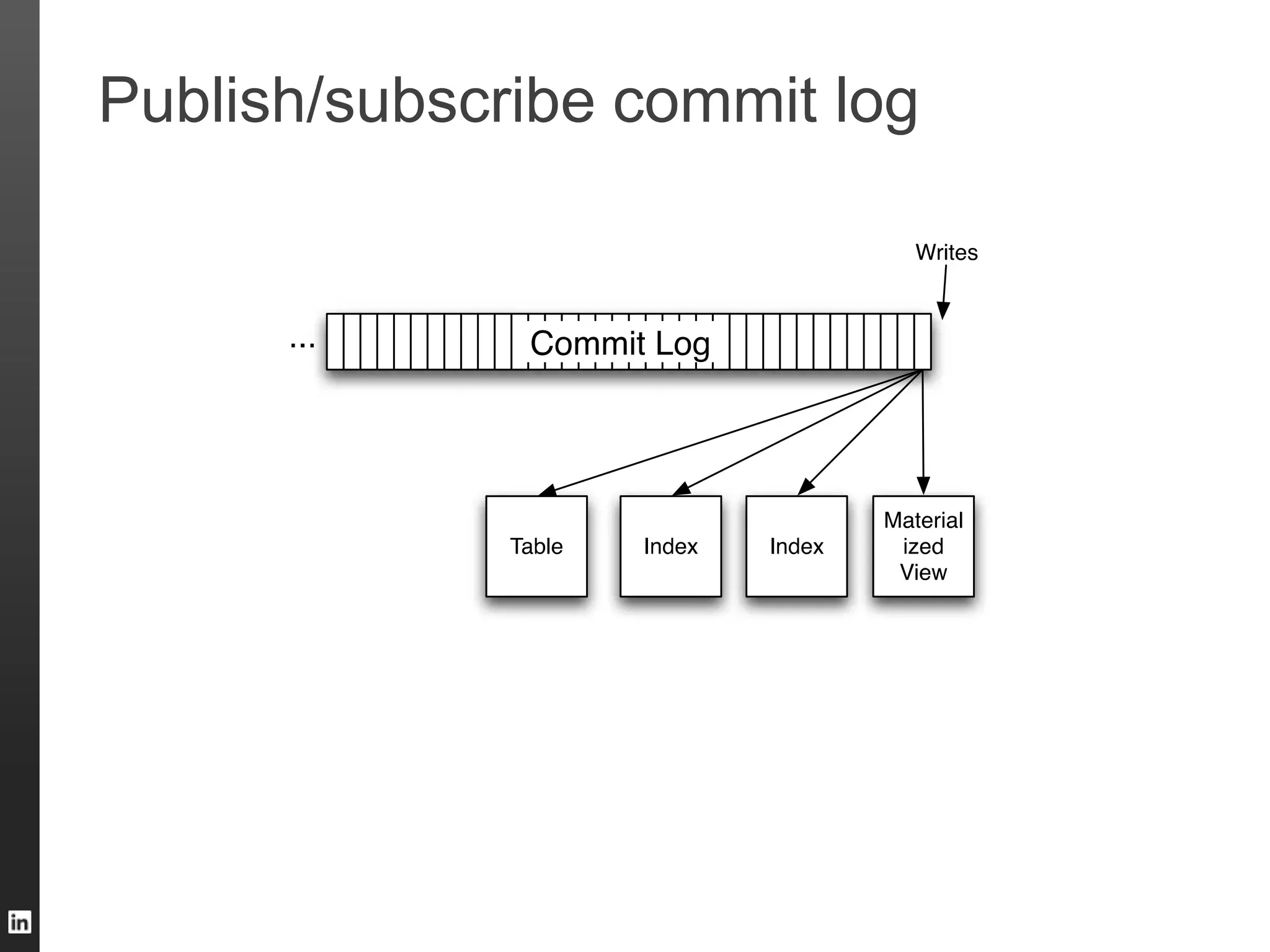

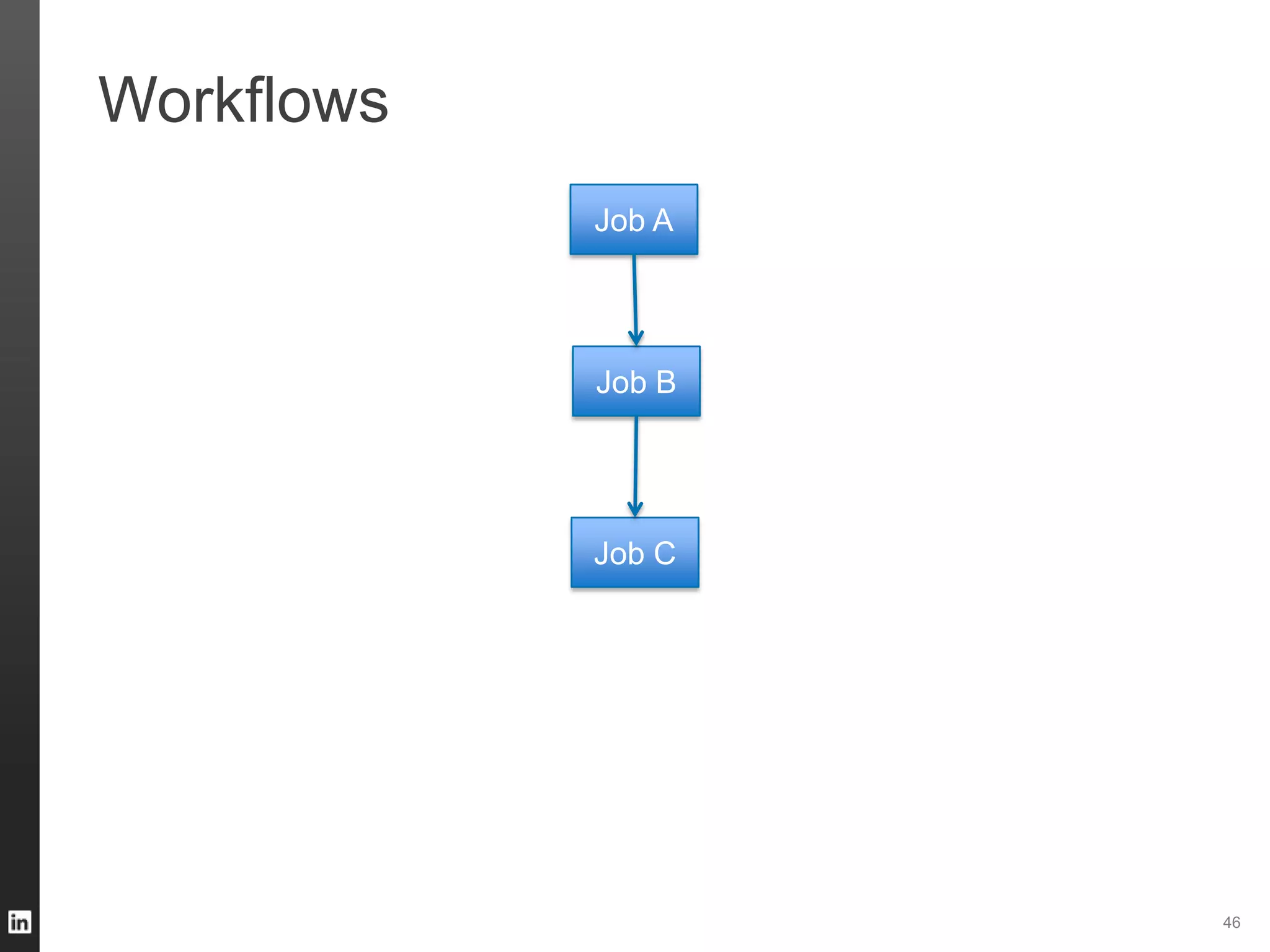

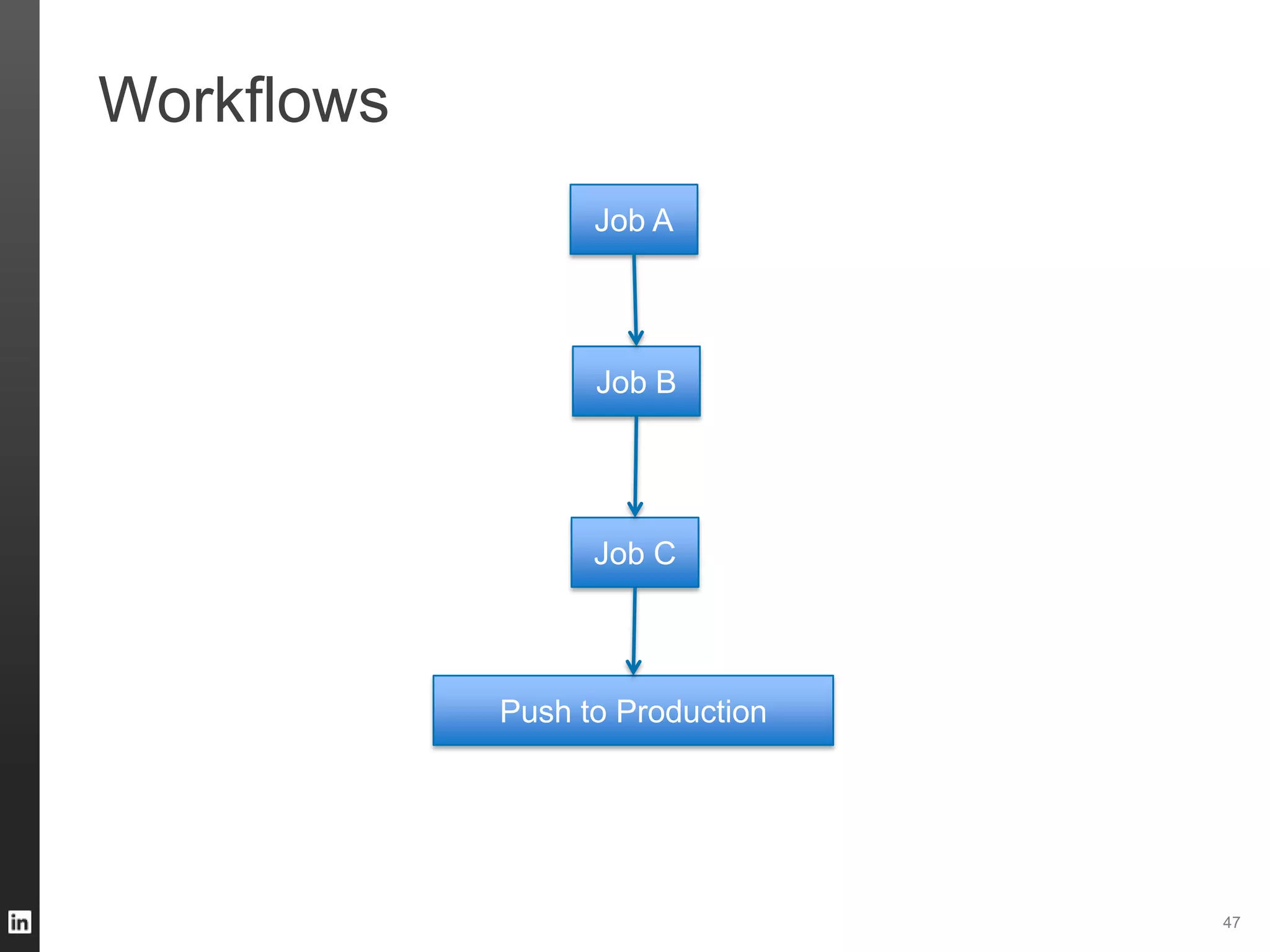

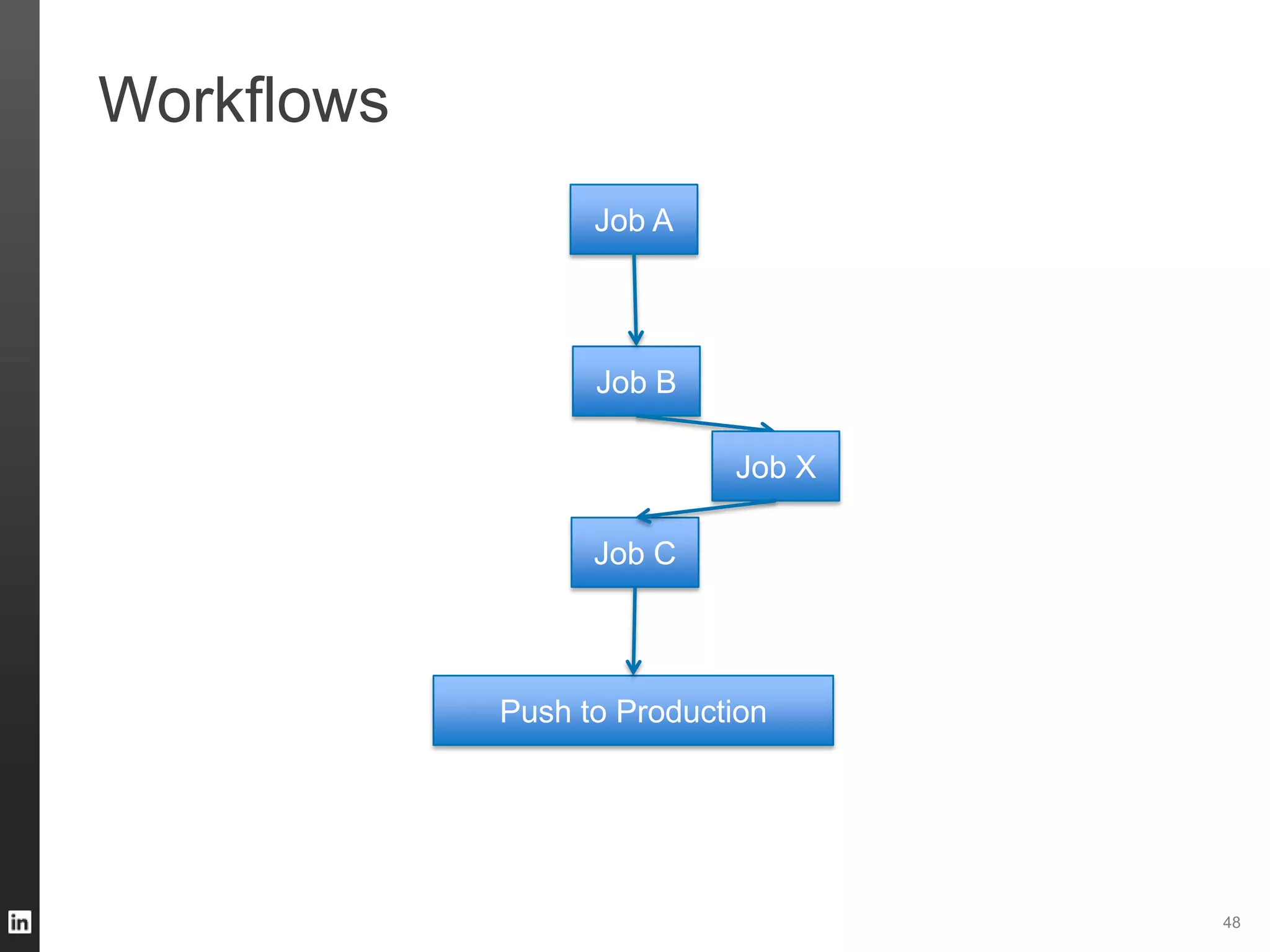

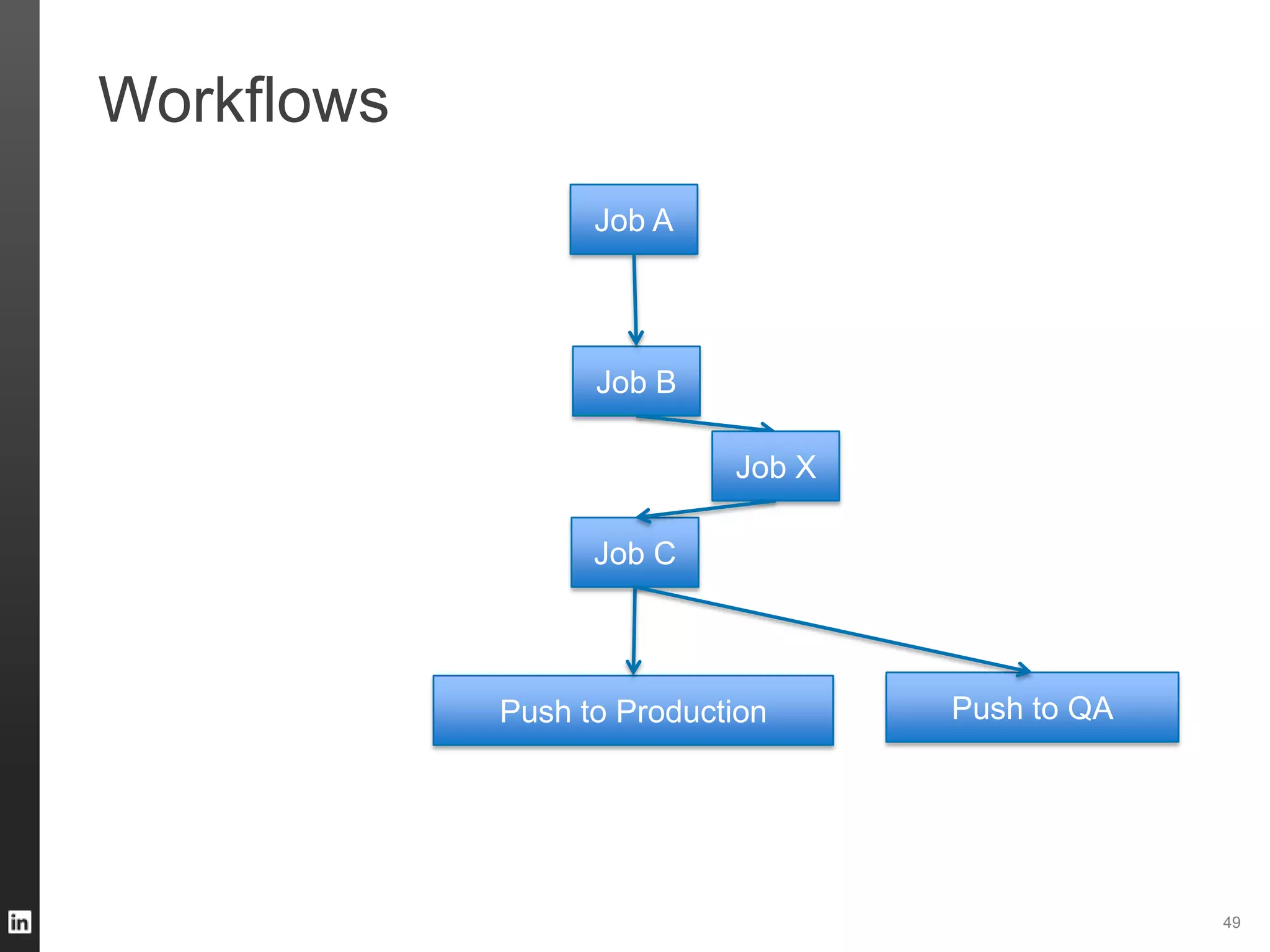

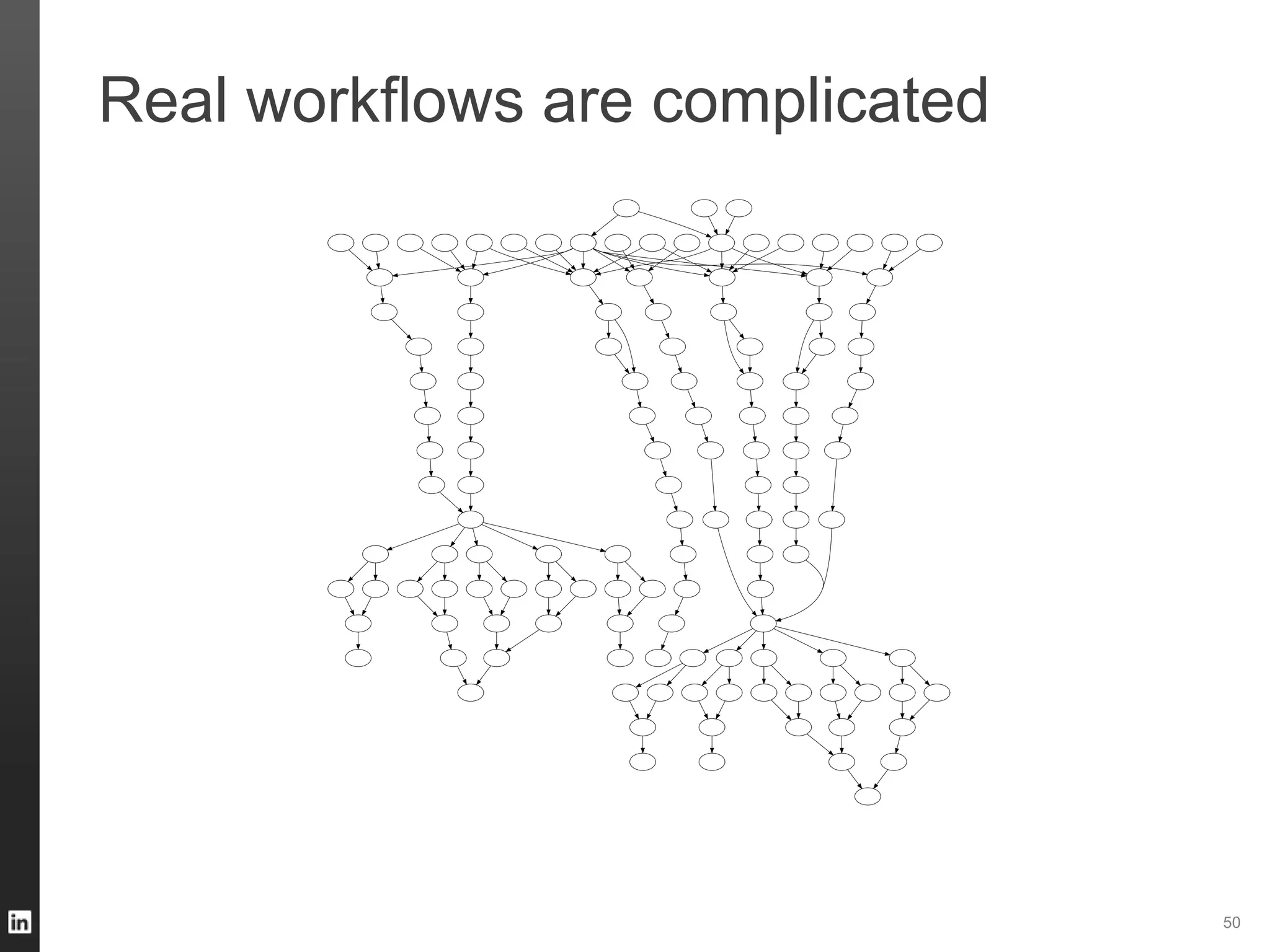

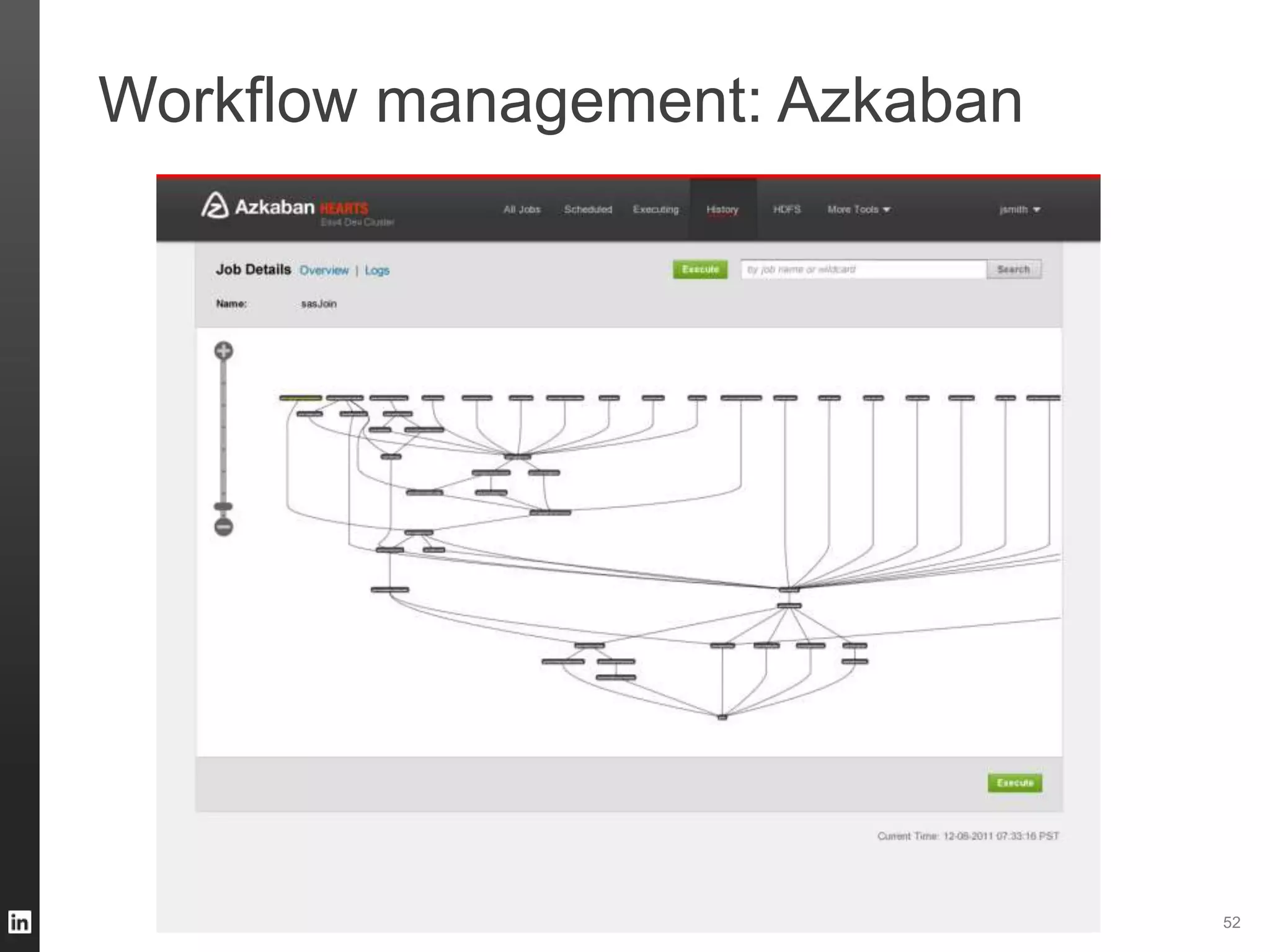

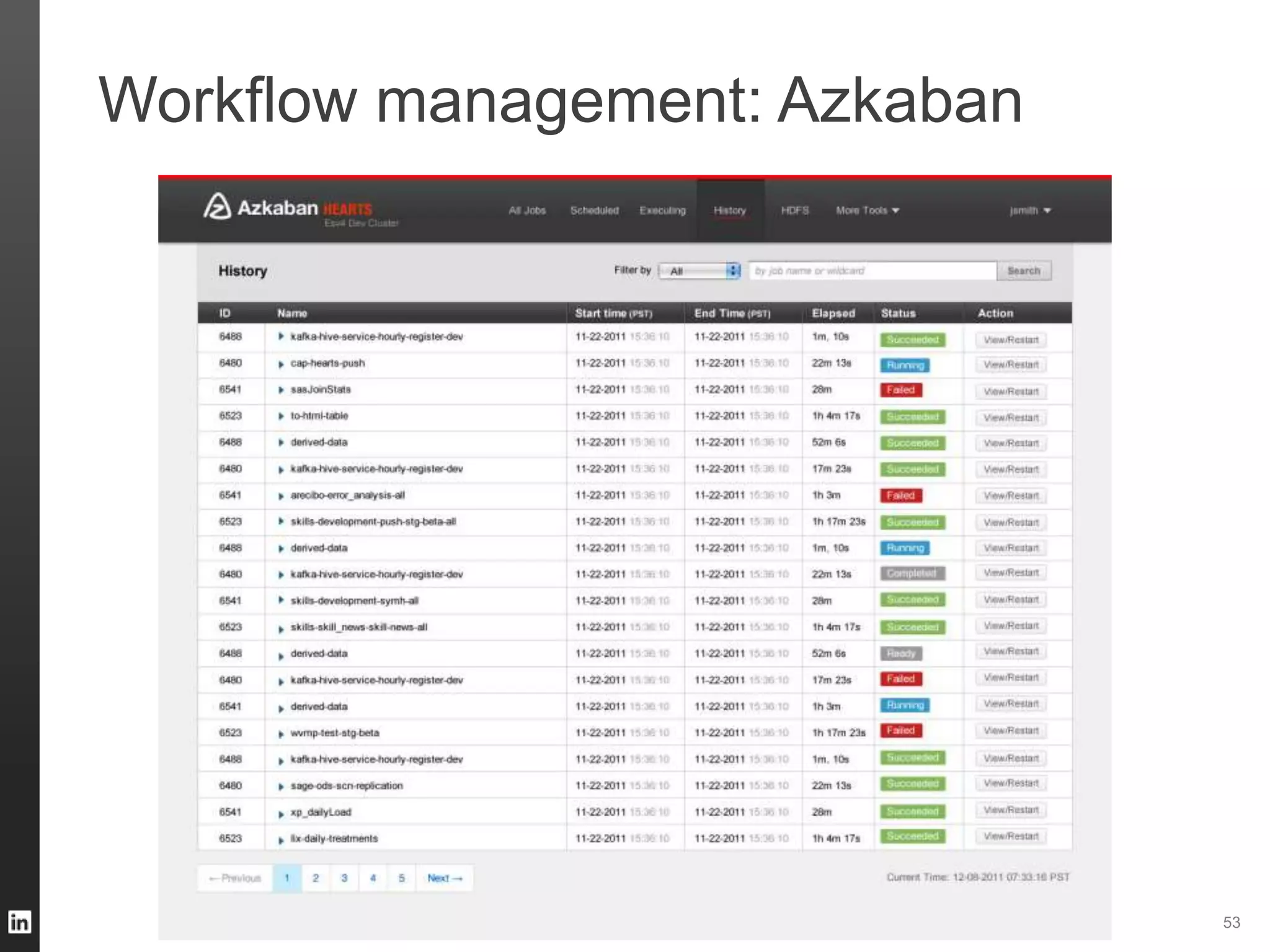

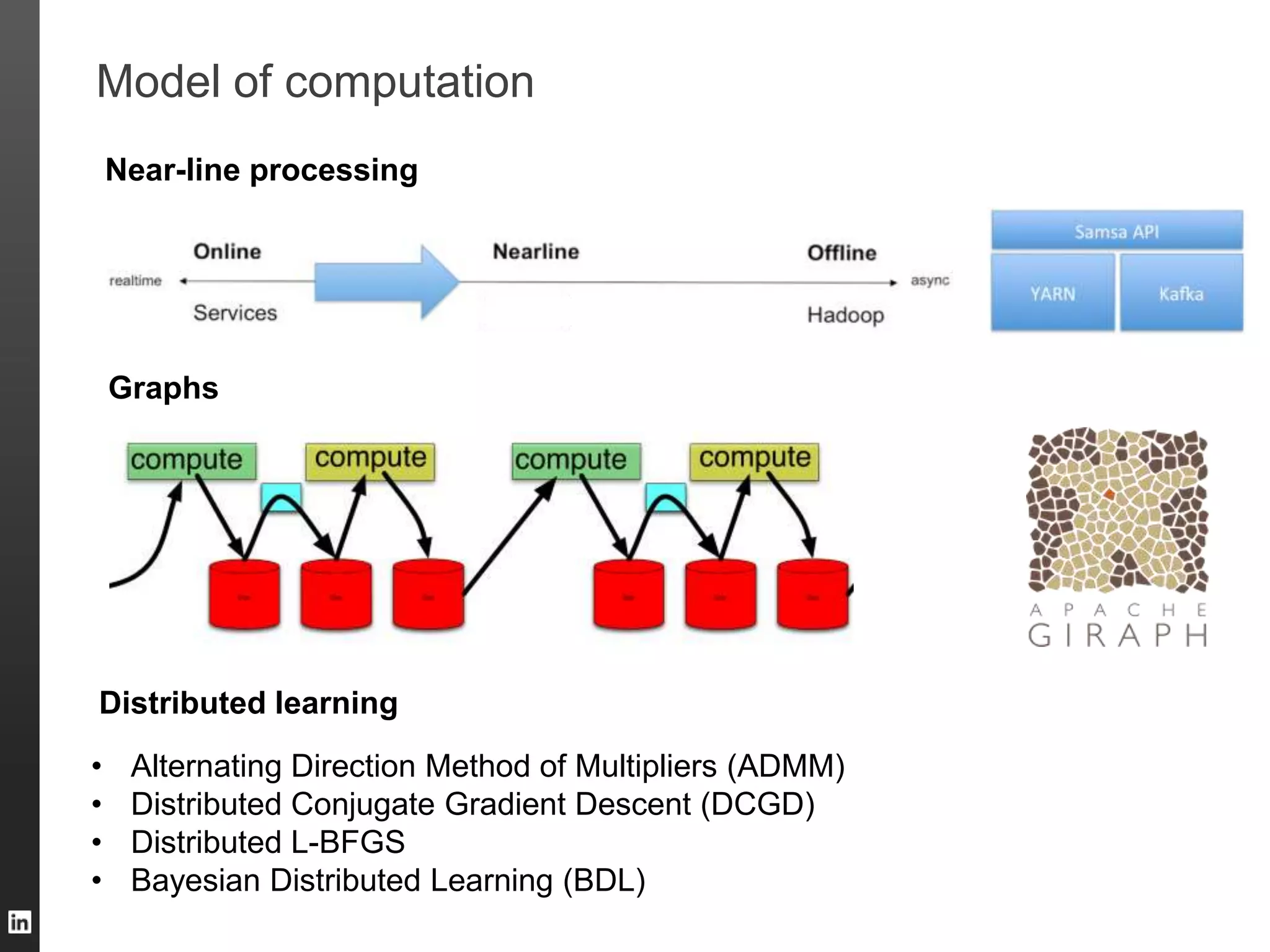

The document outlines LinkedIn's data infrastructure. It covers the online, nearline, and offline data processing systems, the challenges of managing large datasets, and the solutions adopted for them. It highlights components such as Databus, which captures database changes and delivers them consistently to downstream consumers, and Kafka for messaging. It also discusses workflow management and the data integration problems faced by data scientists. The key takeaway is that effective infrastructure in a rapidly growing environment requires balancing specialized and generic solutions.