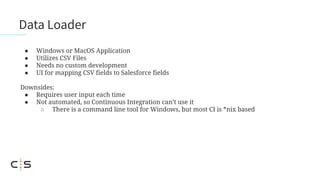

CodeScience is a Salesforce product development organization that has been building apps since 2008 and supports more than 220 apps on the AppExchange. This document gives an overview of test data and emphasizes the importance of using vetted records for effective QA and development. It compares several methods for loading test data, including Data Loader, the Bulk API, Salesforce DX, and custom solutions, and weighs the advantages and disadvantages of each.