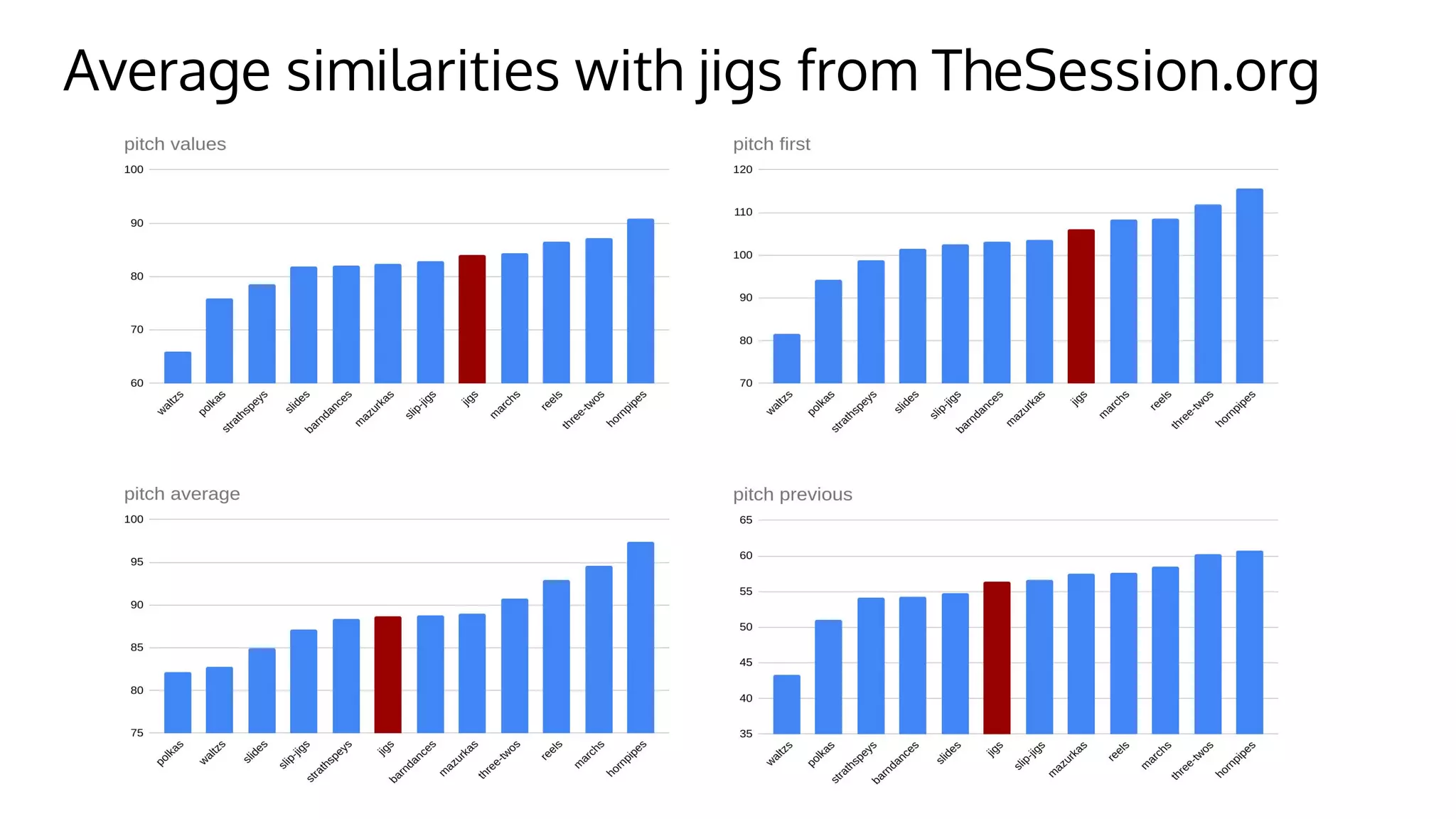

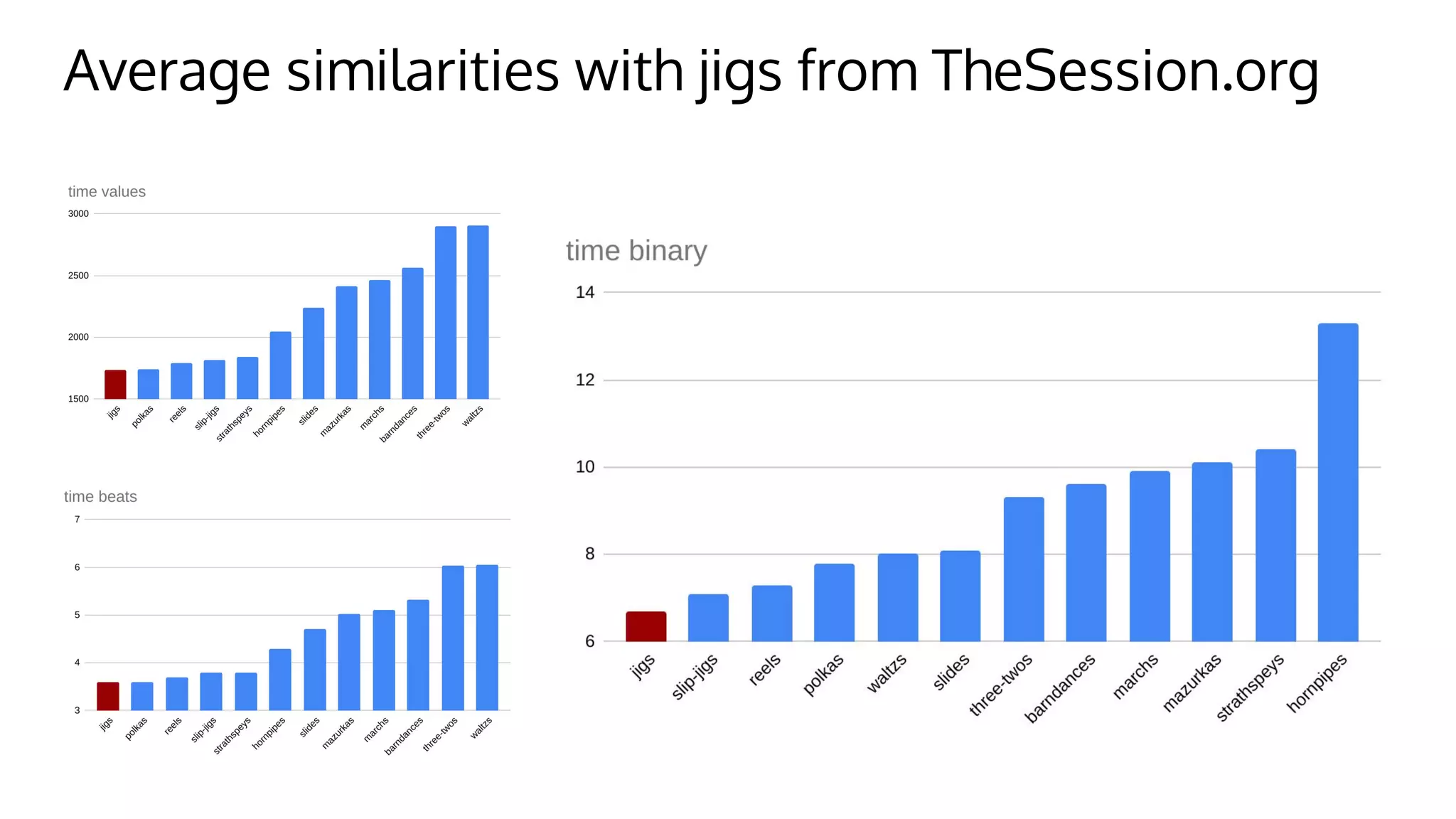

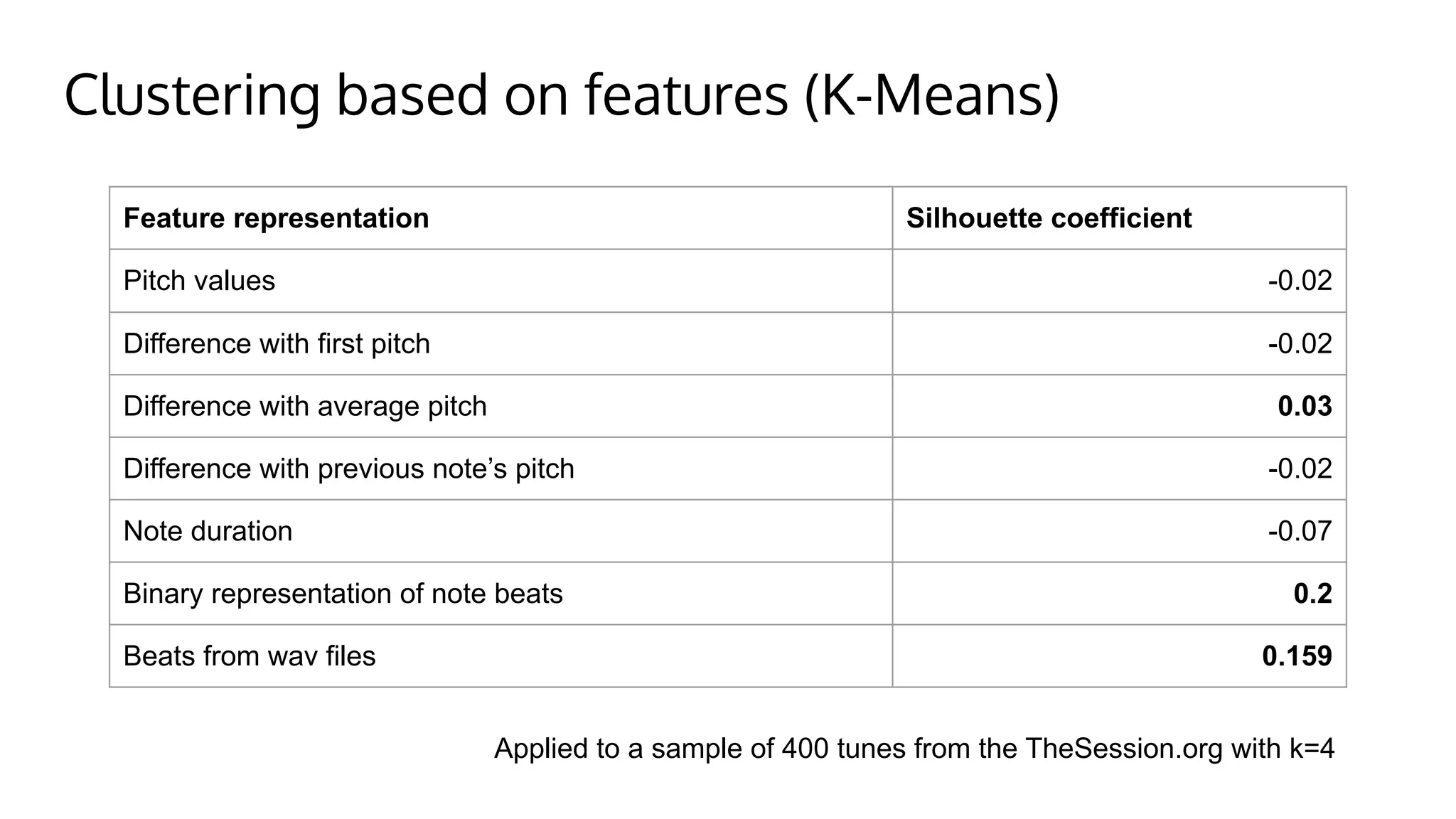

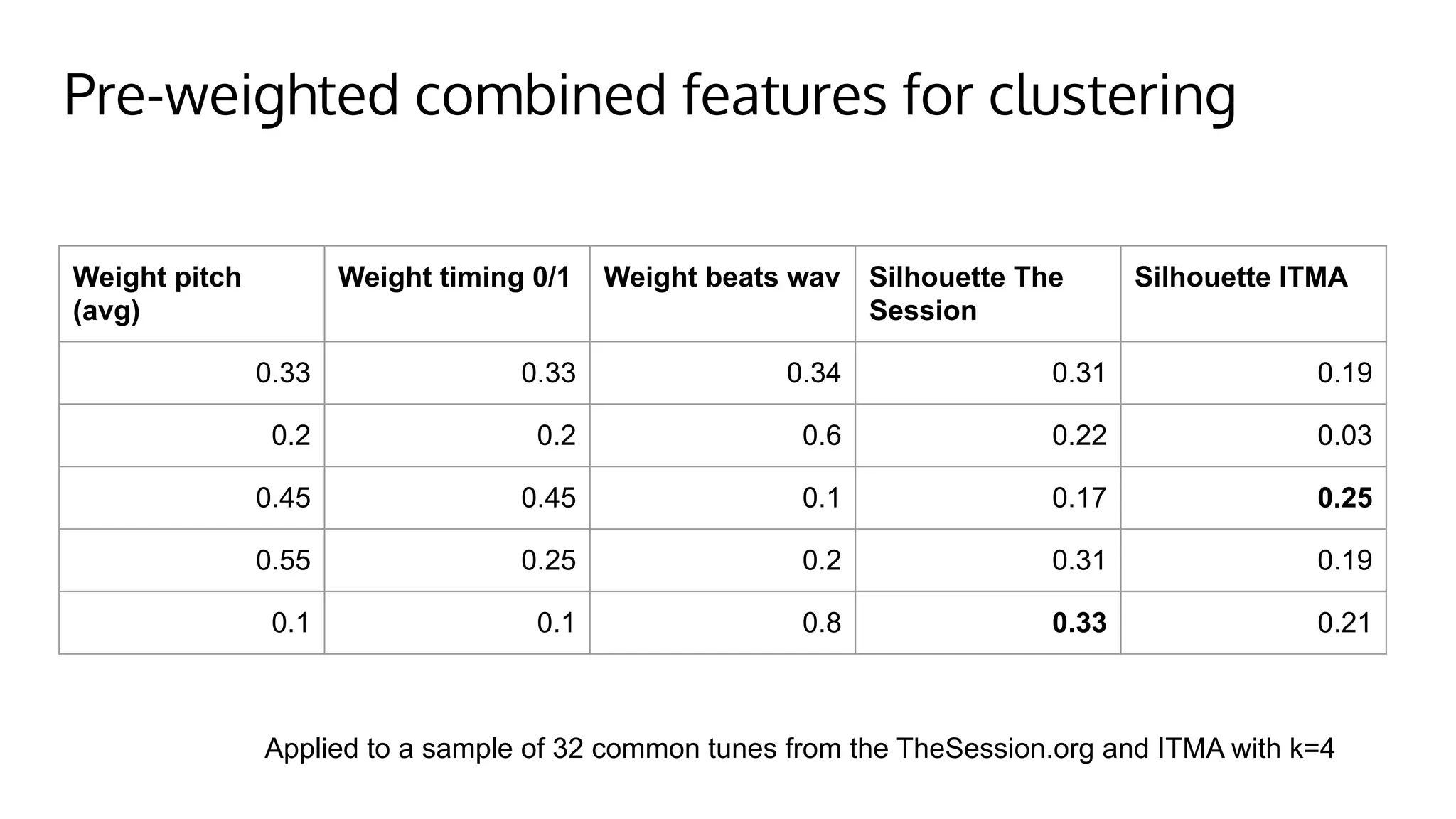

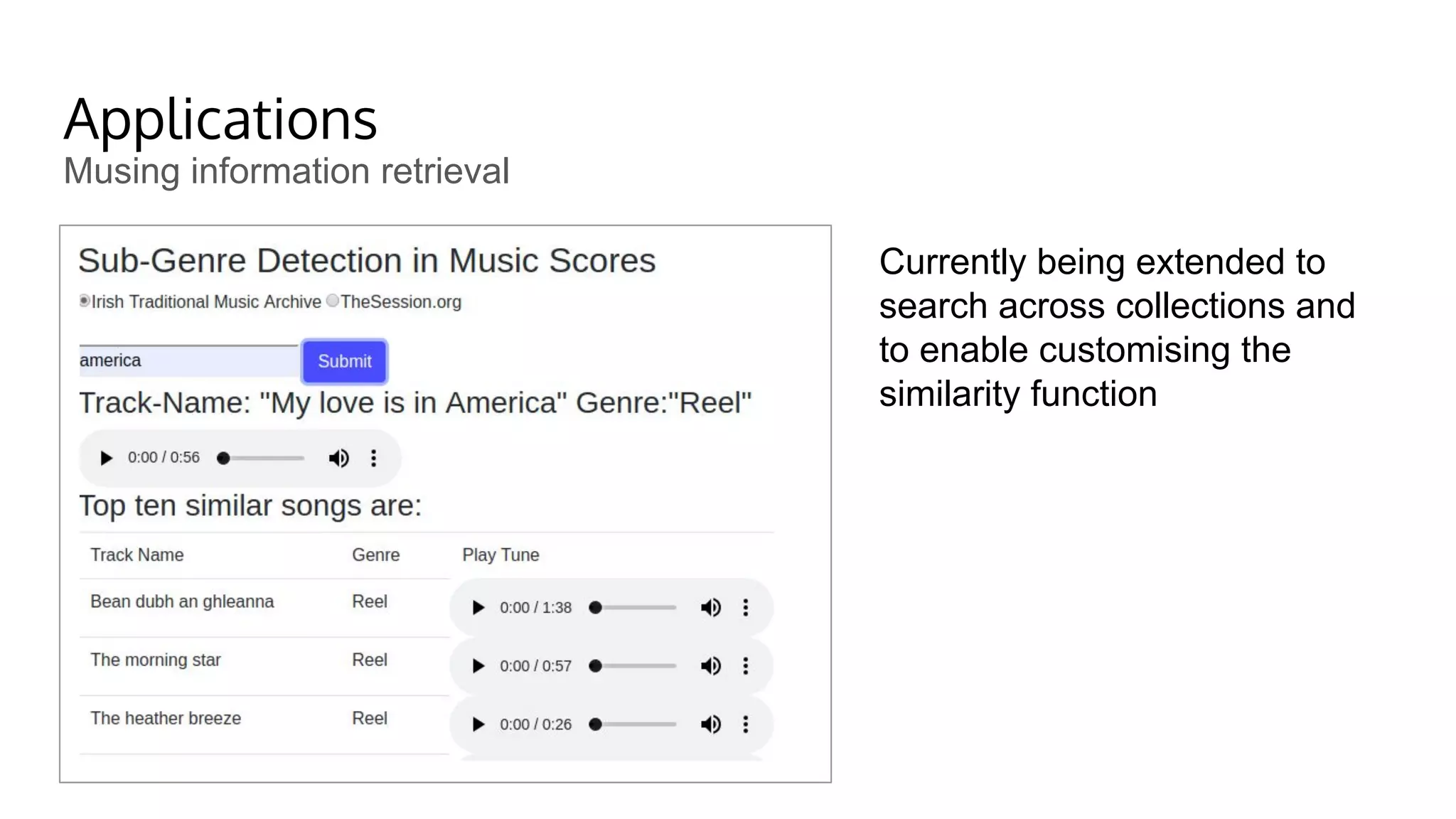

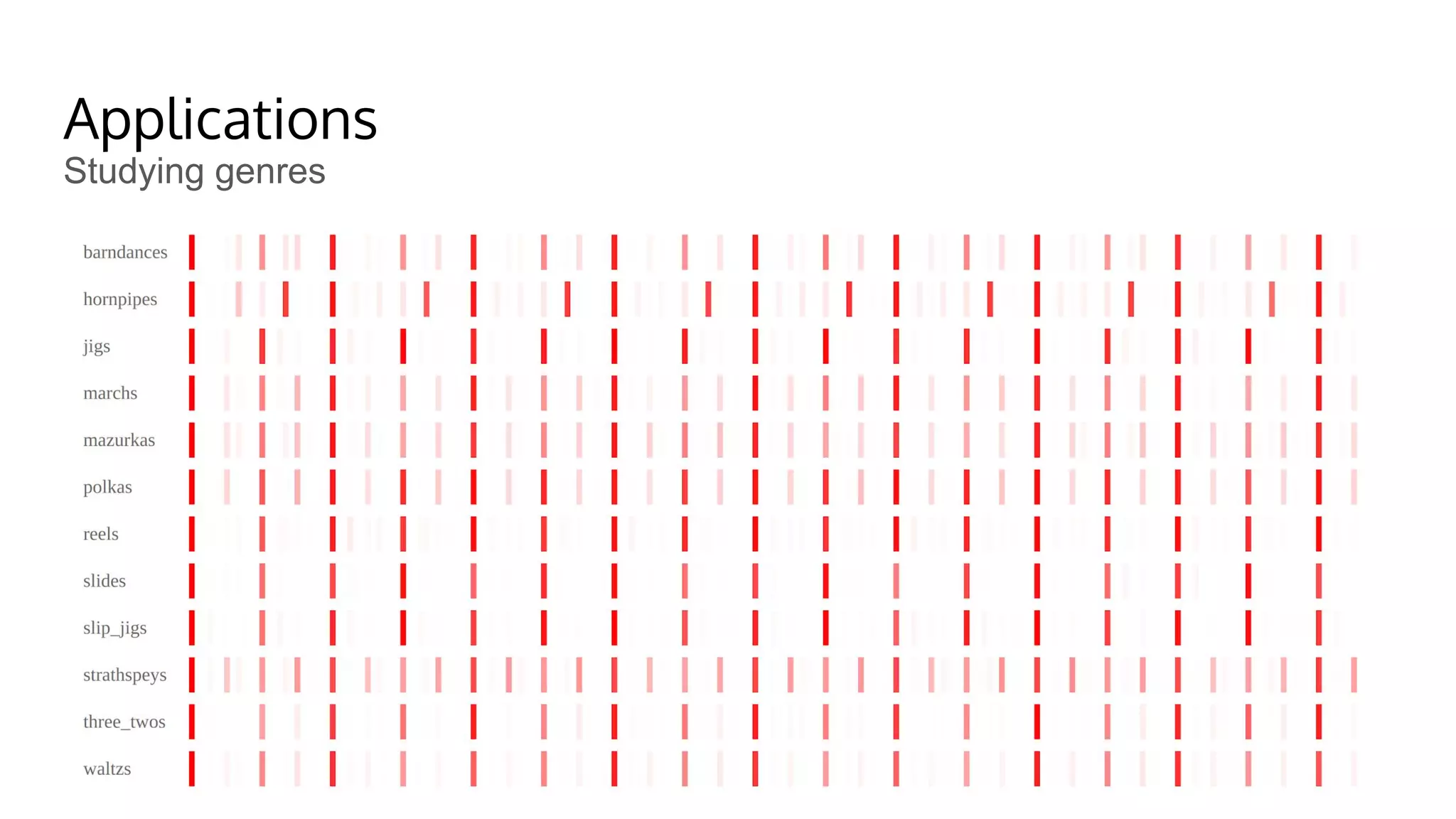

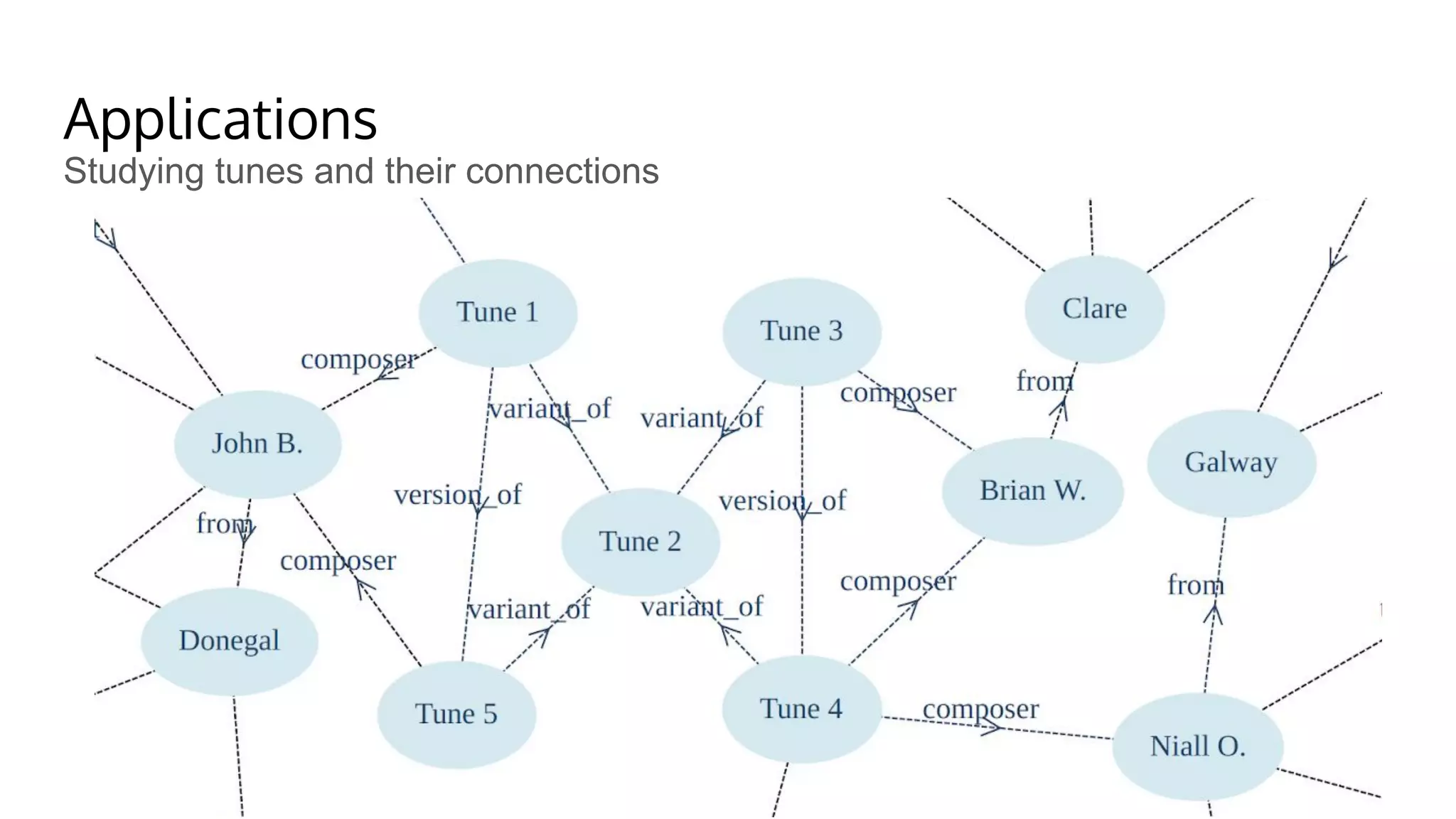

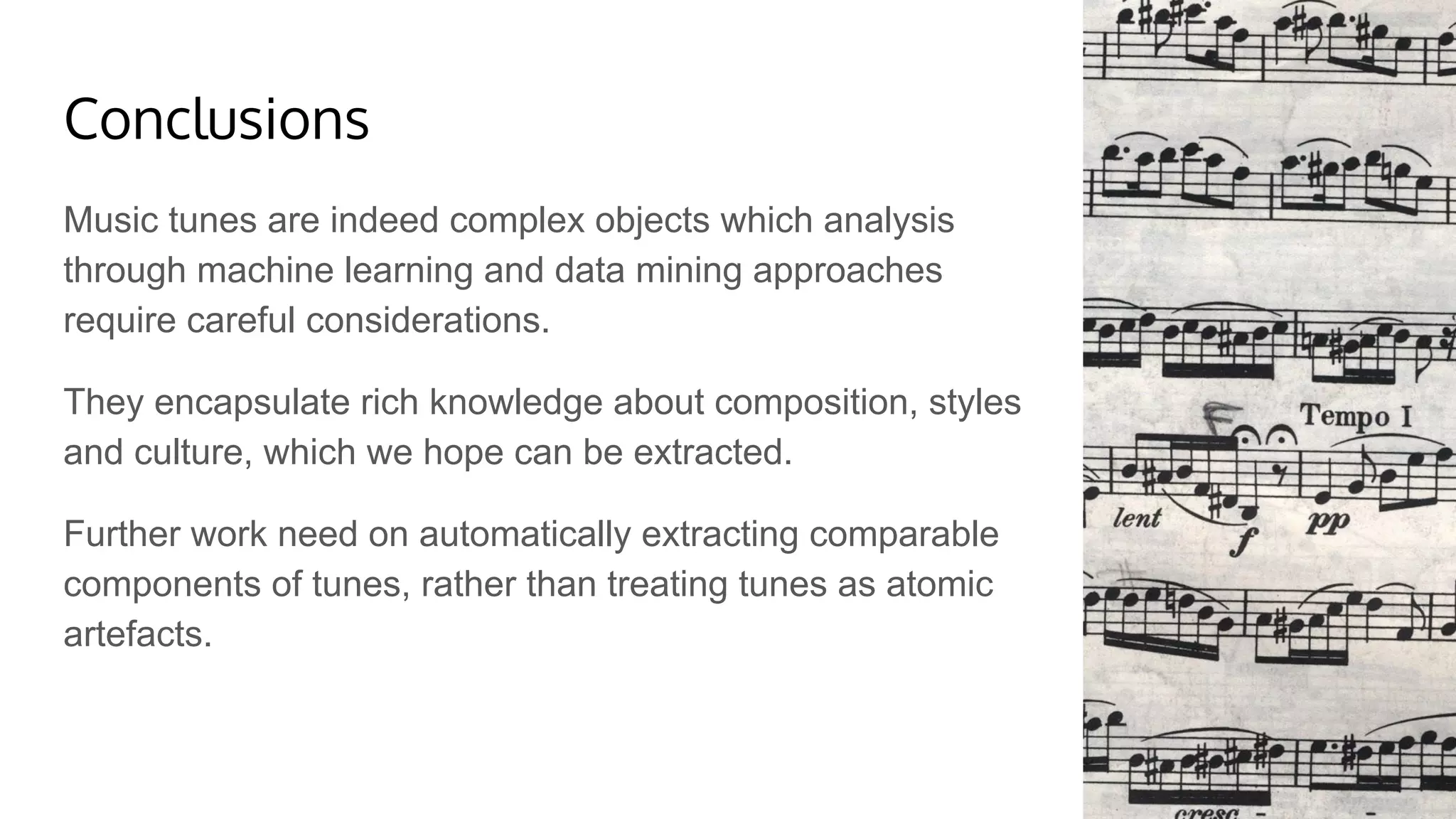

This document discusses an unsupervised learning approach to identifying sub-genres in music scores. It explores different ways of representing musical features such as pitch and timing as vectors that can be analyzed with clustering algorithms. When different feature representations were evaluated on a sample of folk tunes, the best results were obtained using a combined weighting of pitch, timing, and beats extracted from audio files. The approach shows potential for applications such as music information retrieval and the study of musical genres and of connections between tunes.

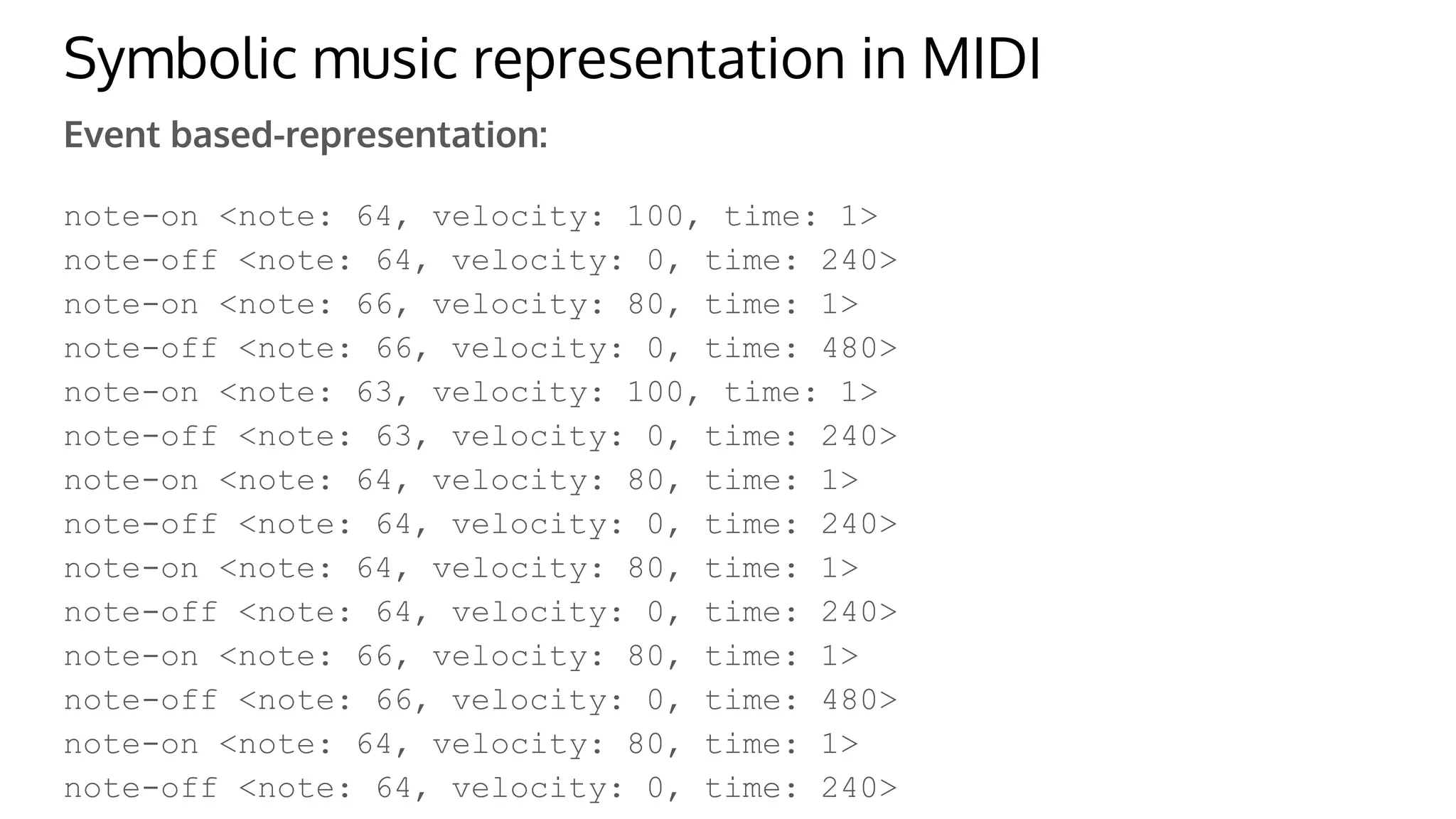

![Representing pitch features

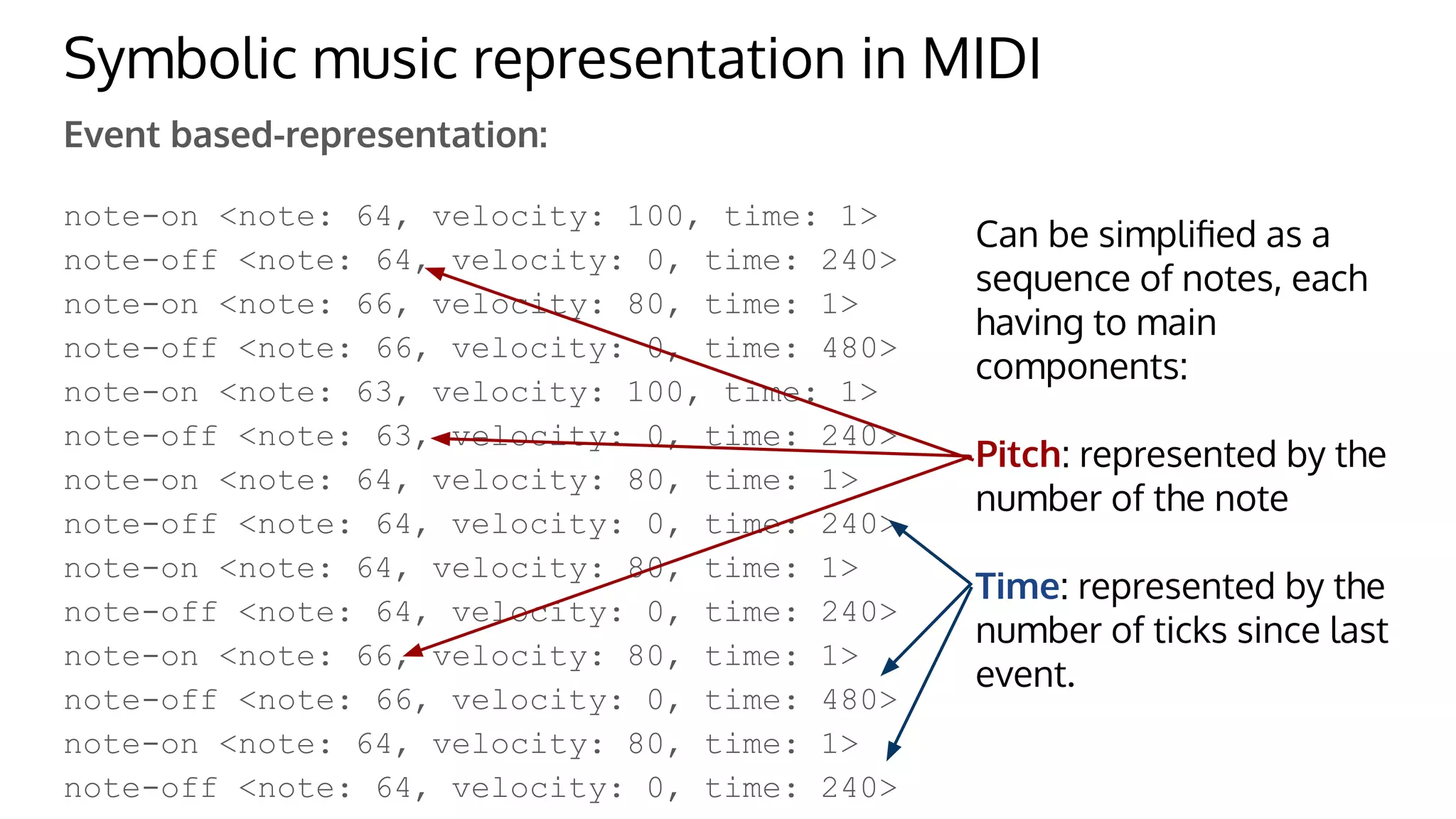

Basic approach: As a vector of note numbers

[64,66,63,64,64,66,64]

But, the same tune transposed would be completely different...

Vector of differences with the first note:

[0,+2,-1,0,0,+2,0]

Vector of differences with the average note:

[-0.43,+1.57,-1.43,-0.43,-0.43,+1.57,-0.43]

Vector of differences with the previous note:

[0,+2,-3,+1,0,+2,-2]](https://image.slidesharecdn.com/aics2019-200131140319/75/Unsupervised-learning-approach-for-identifying-sub-genres-in-music-scores-9-2048.jpg)
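The pitch representations on this slide can be reproduced with a few lines of Python. This is only a minimal sketch: the function names are hypothetical and not taken from the presentation. It assumes that the last vector on the slide, [0,+2,-3,+1,0,+2,-2], holds differences between consecutive notes (with 0 for the first note), which is what the numbers work out to.

```python
def relative_to_first(notes):
    """Difference of every note with the first note of the tune."""
    first = notes[0]
    return [n - first for n in notes]


def relative_to_mean(notes, ndigits=2):
    """Difference of every note with the average note, rounded for display."""
    mean = sum(notes) / len(notes)
    return [round(n - mean, ndigits) for n in notes]


def relative_to_previous(notes):
    """Difference of every note with the note before it (0 for the first note)."""
    return [0] + [b - a for a, b in zip(notes, notes[1:])]


notes = [64, 66, 63, 64, 64, 66, 64]   # note numbers from the slide
transposed = [n + 5 for n in notes]     # same tune, shifted up 5 semitones

print(relative_to_first(notes))
print(relative_to_mean(notes))
print(relative_to_previous(notes))
# The raw vectors differ, but the relative encodings of both versions agree.
print(relative_to_first(notes) == relative_to_first(transposed))
```

All three relative encodings give the same vector for the original and the transposed tune, which is the property the raw note-number vector lacks.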

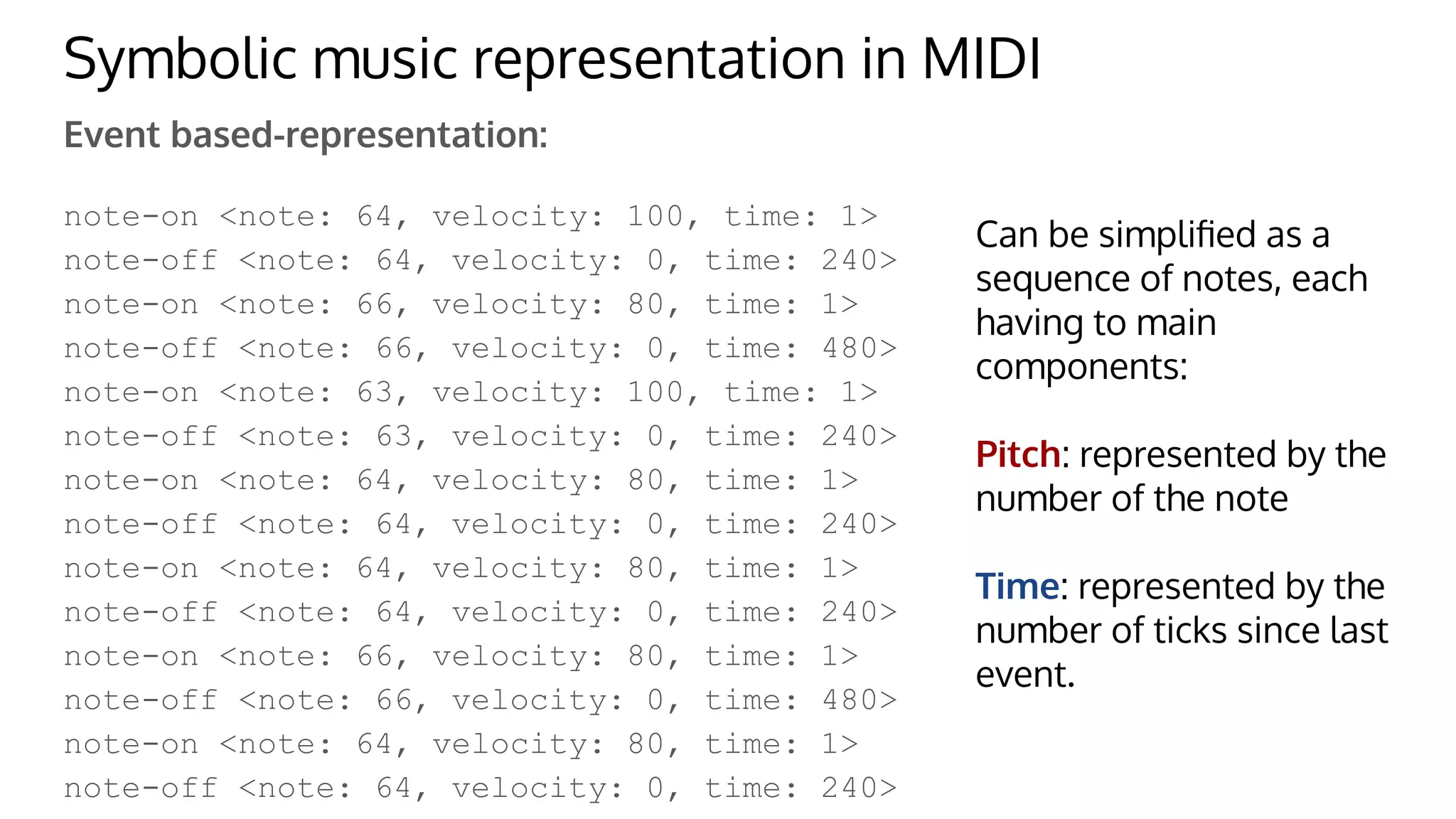

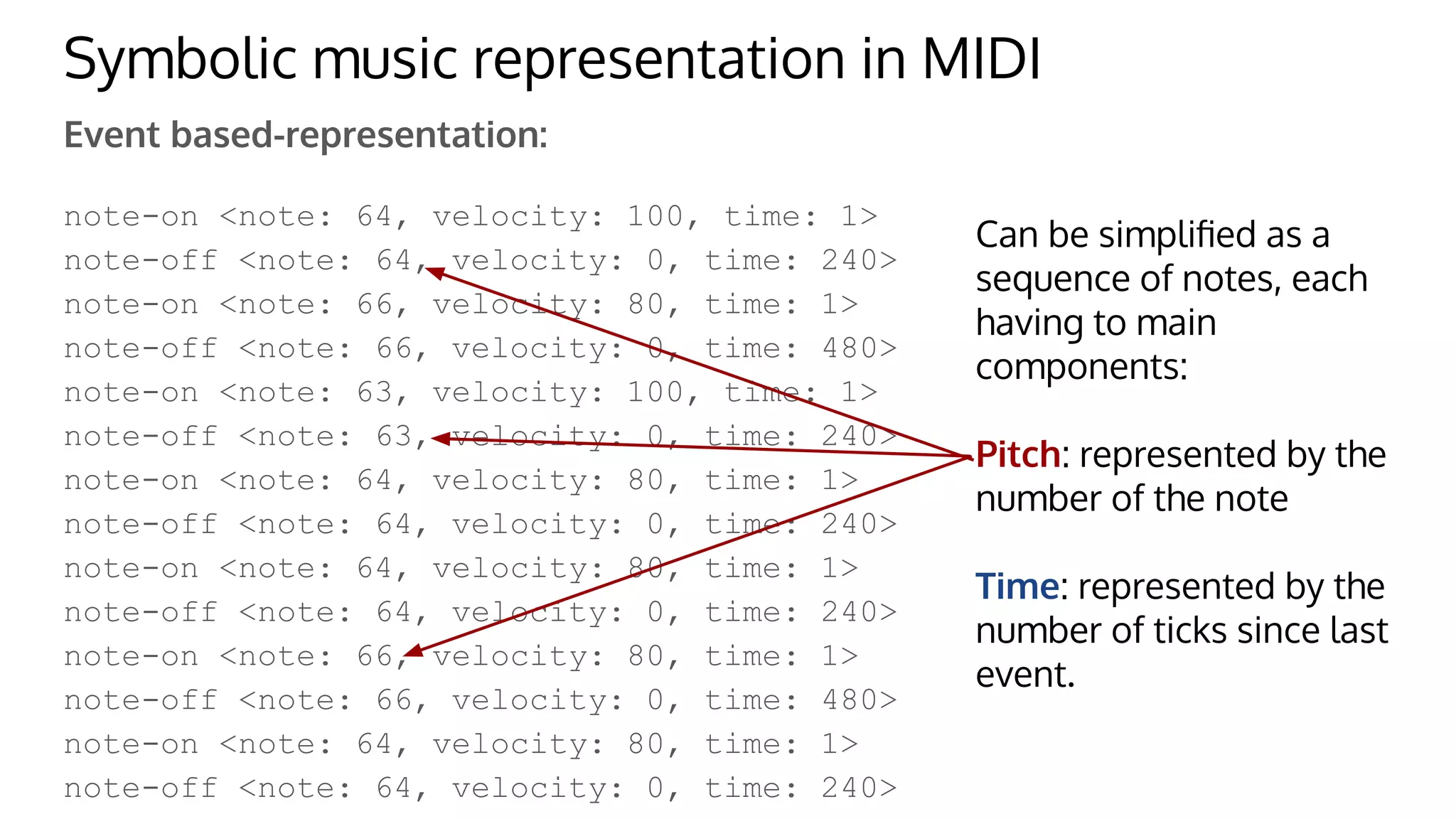

![Representing time features

Basic approach: Vector of durations

[240,480,240,240,240,480,240]

But this depends on the ticks per beat. Vector of durations in beats:

[0.5,1,0.5,0.5,0.5,1,0.5]

But this depends on the specific timing of the tune. Binary vector of note occurrence, dividing each beat into 24 possible locations (here reduced to 4):

[1,0,1,0,0,0,1,0,1,0,1,0,1,0,0,0,1,0]

Also, beats extracted from WAV files and other “spectral” features.](https://image.slidesharecdn.com/aics2019-200131140319/75/Unsupervised-learning-approach-for-identifying-sub-genres-in-music-scores-10-2048.jpg)
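The timing representations on this slide can be sketched the same way. The function names below are hypothetical. The value of 480 ticks per beat is an assumption inferred from the slide's numbers (240 ticks maps to 0.5 beats), and the grid uses 4 locations per beat as in the reduced example rather than the full 24.

```python
from fractions import Fraction


def durations_in_beats(ticks, ticks_per_beat):
    """Convert tick durations to beats, using exact fractions to avoid float drift."""
    return [Fraction(t, ticks_per_beat) for t in ticks]


def onset_grid(beats, slots_per_beat):
    """Binary vector with a 1 in every grid slot where a note starts."""
    total_slots = int(sum(beats) * slots_per_beat)
    grid = [0] * total_slots
    position = Fraction(0)  # running onset time, in beats
    for duration in beats:
        # Onsets that fall between grid slots are truncated to the earlier slot.
        grid[int(position * slots_per_beat)] = 1
        position += duration
    return grid


ticks = [240, 480, 240, 240, 240, 480, 240]   # durations from the slide
beats = durations_in_beats(ticks, ticks_per_beat=480)  # 480 is an assumed resolution

print([float(b) for b in beats])
print(onset_grid(beats, slots_per_beat=4))
```

Because the grid records where notes start rather than how long they last, two tunes with the same rhythm produce the same binary vector regardless of the tick resolution of the source file.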