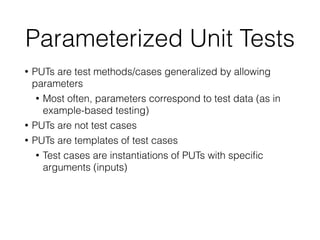

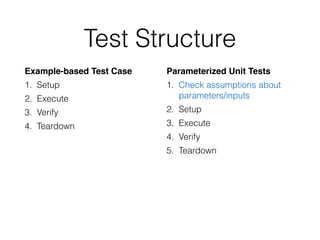

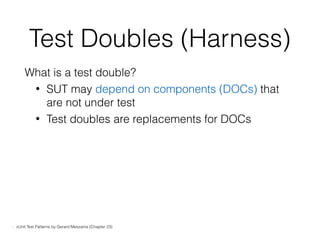

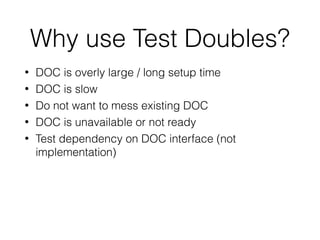

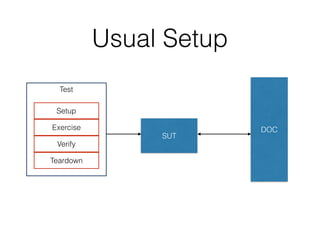

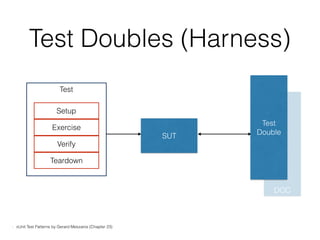

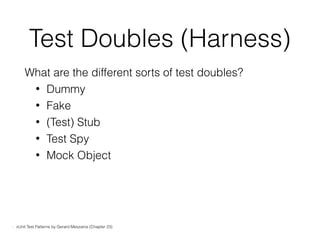

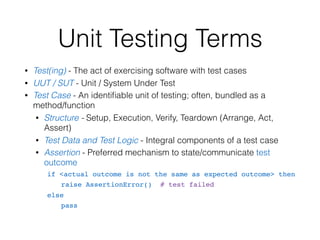

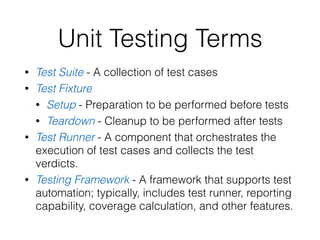

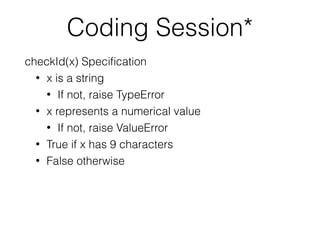

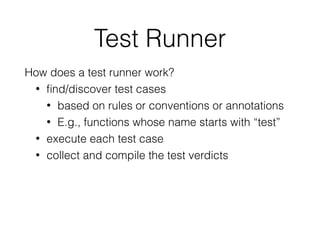

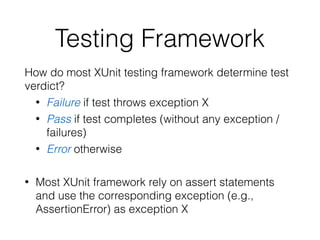

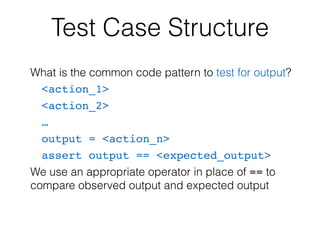

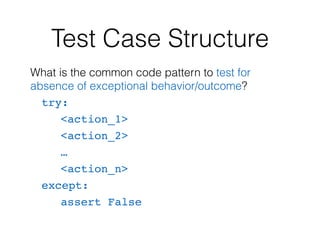

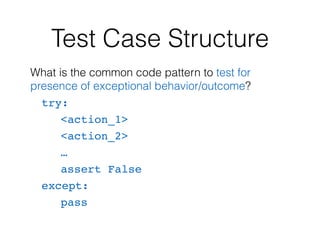

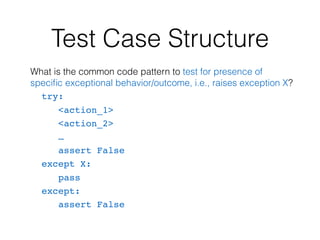

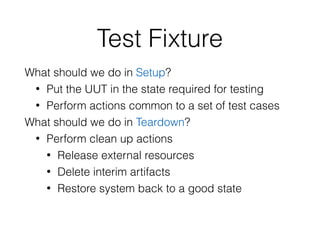

The document presents an in-depth overview of unit testing, covering essential terms, concepts, and structures involved in writing tests, including test cases, test runners, and testing frameworks across different programming languages. It discusses the use of assertions, setups, and teardowns, as well as the implementation of parameterized unit tests and test doubles. Additionally, it highlights common patterns and best practices for effective unit testing to ensure software reliability.

![Parameterized Unit Tests

void TestAdd() {

ArrayList a = new ArrayList(0);

object o = new object();

a.Add(o);

Assert.IsTrue(a[0] == o);

}

- Parameterized Unit Tests by Nikolai Tillmann and Wolfram Schulte [FSE’05] (Section 2)](https://image.slidesharecdn.com/unit-testing-170926175035/85/Unit-testing-4-Software-Testing-Techniques-CIS640-22-320.jpg)

![Parameterized Unit Tests

void TestAdd() {

ArrayList a = new ArrayList(0);

object o = new object();

a.Add(o);

Assert.IsTrue(a[0] == o);

}

void TestAdd(ArrayList a, object o) {

if (a != null) {

int i = a.Count;

a.Add(o);

Assert.IsTrue(a[i] == o);

}

}

- Parameterized Unit Tests by Nikolai Tillmann and Wolfram Schulte [FSE’05] (Section 2)](https://image.slidesharecdn.com/unit-testing-170926175035/85/Unit-testing-4-Software-Testing-Techniques-CIS640-23-320.jpg)
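The slide above generalizes the closed test `TestAdd()` into `TestAdd(ArrayList a, object o)`. As a rough sketch of what that buys, the parameterized version can be called once per input pair, with the original closed test as just one of the pairs. The names `ParameterizedAddDemo`, `AssertIsTrue`, and `RunAll` are hypothetical helpers introduced here so the example runs without a test framework; they are not part of the slides or of any real library.

```csharp
using System;
using System.Collections;

static class ParameterizedAddDemo
{
    // Hypothetical stand-in for a framework assertion such as Assert.IsTrue.
    static void AssertIsTrue(bool condition)
    {
        if (!condition) throw new InvalidOperationException("assertion failed");
    }

    // Parameterized test from the slide: adding o to any non-null list
    // must place o at the index that was the old Count.
    public static void TestAdd(ArrayList a, object o)
    {
        if (a != null)
        {
            int i = a.Count;
            a.Add(o);
            AssertIsTrue(a[i] == o);
        }
    }

    // Calls TestAdd once per (list, element) pair; returns the number of calls made.
    // Any failing assertion propagates as an exception.
    public static int RunAll()
    {
        object[][] inputs =
        {
            new object[] { new ArrayList(0), new object() }, // the original closed test
            new object[] { new ArrayList { 1, 2, 3 }, "x" }, // non-empty list
            new object[] { new ArrayList(), null },          // null element
            new object[] { null, new object() },             // null list: the guard skips the body
        };
        foreach (object[] input in inputs)
        {
            TestAdd((ArrayList)input[0], input[1]);
        }
        return inputs.Length;
    }

    public static void Main()
    {
        Console.WriteLine($"{RunAll()} inputs ran without a failed assertion");
    }
}
```

Note that the null-list input completes without checking anything: the `if` guard makes the test pass vacuously for that input, which a plain pass/fail report cannot distinguish from a real pass.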

![Parameterized Unit Tests

void TestAdd() {

ArrayList a = new ArrayList(0);

object o = new object();

a.Add(o);

Assert.IsTrue(a[0] == o);

}

void TestAdd(ArrayList a, object o) {

Assume.IsTrue(a != null);

int i = a.Count;

a.Add(o);

Assert.IsTrue(a[i] == o);

}

- Parameterized Unit Tests by Nikolai Tillmann and Wolfram Schulte [FSE’05] (Section 2)](https://image.slidesharecdn.com/unit-testing-170926175035/85/Unit-testing-4-Software-Testing-Techniques-CIS640-24-320.jpg)
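Replacing the `if` guard with `Assume.IsTrue(a != null)` turns the precondition into something a runner can observe: an input that violates the assumption can be reported as not applicable rather than as a pass. The sketch below illustrates one way this might work. The `Assume` and `Assert` classes, the `AssumptionViolated` exception, and the `Run` helper are all assumptions made for this example and do not reproduce the API of the paper's tooling or of any specific framework.

```csharp
using System;
using System.Collections;

// Hypothetical exception used to signal that an input is outside the test's precondition.
class AssumptionViolated : Exception { }

// Hypothetical stand-ins for the Assume/Assert helpers named on the slide.
static class Assume
{
    public static void IsTrue(bool condition)
    {
        if (!condition) throw new AssumptionViolated();
    }
}

static class Assert
{
    public static void IsTrue(bool condition)
    {
        if (!condition) throw new Exception("assertion failed");
    }
}

static class AssumeDemo
{
    // Parameterized test from the slide, with the precondition stated as an assumption.
    public static void TestAdd(ArrayList a, object o)
    {
        Assume.IsTrue(a != null);
        int i = a.Count;
        a.Add(o);
        Assert.IsTrue(a[i] == o);
    }

    // Runs the test on one input and classifies the outcome.
    public static string Run(ArrayList a, object o)
    {
        try { TestAdd(a, o); return "pass"; }
        catch (AssumptionViolated) { return "skip"; } // precondition not met
        catch (Exception) { return "fail"; }          // assertion or other error
    }

    public static void Main()
    {
        Console.WriteLine(Run(new ArrayList(0), new object())); // pass
        Console.WriteLine(Run(new ArrayList { 1, 2 }, "x"));    // pass
        Console.WriteLine(Run(null, new object()));             // skip
    }
}
```

With the `if` version, the null-list input would be counted as a pass; here it is reported separately, so the count of passing inputs reflects only inputs that were actually checked.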