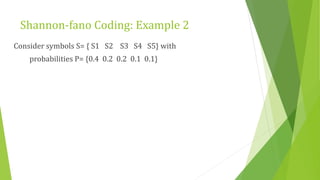

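This document provides an overview of information theory and coding. It discusses key concepts such as entropy, which measures the average information content (uncertainty) of a source in bits per symbol. It also covers the source coding theorem, which states that no lossless code can have an average codeword length below the source entropy. It then describes several techniques for lossless data compression, including Shannon-Fano coding and Huffman coding. Shannon-Fano coding sorts the symbols by decreasing probability. It then recursively splits the list into two groups whose total probabilities are as nearly equal as possible, appending 0 to the codes in one group and 1 to the codes in the other. Huffman coding builds a binary tree by repeatedly merging the two least probable symbols or subtrees. As a result, the most frequent symbols receive the shortest codes and the least frequent symbols receive the longest. Examples show how to construct codes with each technique.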
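The following is a minimal Python sketch of the three ideas above. It assumes that each symbol is given an integer frequency. Probabilities would work the same way, because only relative weights matter. The function names `entropy`, `shannon_fano`, `huffman` and `average_length` are hypothetical illustrations, and the frequencies in the example are made up, not taken from the original examples. The Shannon-Fano part uses the "split into two parts of nearly equal total weight" variant, and it resolves ties toward the earlier cut point. That tie rule is an assumption. The Huffman part breaks ties by insertion order, so other implementations may produce different codes with the same average length.

```python
import heapq
import math
from itertools import count


def entropy(weights):
    """Shannon entropy H = -sum(p * log2 p) in bits/symbol; weights may be counts or probabilities."""
    total = sum(weights.values())
    return -sum((w / total) * math.log2(w / total) for w in weights.values() if w > 0)


def average_length(weights, codes):
    """Expected codeword length in bits/symbol under the given weights."""
    total = sum(weights.values())
    return sum(w * len(codes[s]) for s, w in weights.items()) / total


def shannon_fano(weights):
    """Shannon-Fano (Fano split): sort by weight, cut where the two parts' totals are closest."""
    symbols = sorted(weights, key=weights.get, reverse=True)  # stable sort keeps ties in input order
    if len(symbols) == 1:
        return {symbols[0]: "0"}
    codes = {s: "" for s in symbols}

    def split(group):
        if len(group) < 2:
            return
        total = sum(weights[s] for s in group)
        running, best_i, best_diff = 0, 1, None
        for i in range(1, len(group)):
            running += weights[group[i - 1]]
            diff = abs(total - 2 * running)  # |upper part total - lower part total|
            if best_diff is None or diff < best_diff:  # strict '<': earlier cut wins ties (assumption)
                best_i, best_diff = i, diff
        upper, lower = group[:best_i], group[best_i:]
        for s in upper:
            codes[s] += "0"
        for s in lower:
            codes[s] += "1"
        split(upper)
        split(lower)

    split(symbols)
    return codes


def huffman(weights):
    """Huffman coding: repeatedly merge the two lightest subtrees into one node."""
    order = count()  # unique tie-breaker so heapq never compares the dicts
    heap = [(w, next(order), {s: ""}) for s, w in weights.items()]
    heapq.heapify(heap)
    if len(heap) <= 1:
        return {s: "0" for s in weights}
    while len(heap) > 1:
        w1, _, first = heapq.heappop(heap)   # lightest subtree
        w2, _, second = heapq.heappop(heap)  # next lightest subtree
        # Prepend one bit to every code in each merged subtree: 0 for the lighter, 1 for the other.
        merged = {s: "0" + c for s, c in first.items()}
        merged.update({s: "1" + c for s, c in second.items()})
        heapq.heappush(heap, (w1 + w2, next(order), merged))
    return heap[0][2]


if __name__ == "__main__":
    # Illustrative symbol frequencies (hypothetical, not from the original document).
    counts = {"A": 15, "B": 7, "C": 6, "D": 6, "E": 5}
    H = entropy(counts)
    for name, build in (("Shannon-Fano", shannon_fano), ("Huffman", huffman)):
        codes = build(counts)
        L = average_length(counts, codes)
        print(f"{name:12s} L = {L:.3f} bits/symbol  {dict(sorted(codes.items()))}")
    print(f"Entropy      H = {H:.3f} bits/symbol")
```

For these frequencies, the sketch gives about 2.282 bits/symbol for Shannon-Fano and 2.231 for Huffman, against an entropy of about 2.186. Both results fall within the source coding theorem's bound H ≤ L < H + 1. Huffman reaches the shorter average because it merges from the least probable symbols upward. Shannon-Fano instead splits from the top down, which does not always yield the shortest code.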