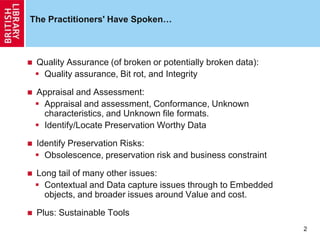

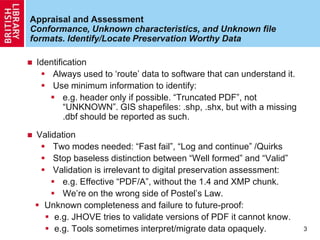

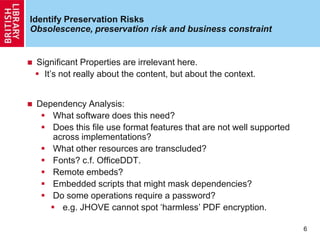

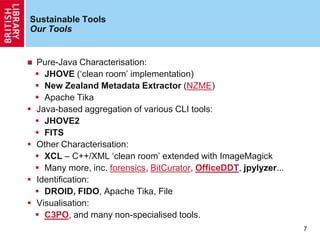

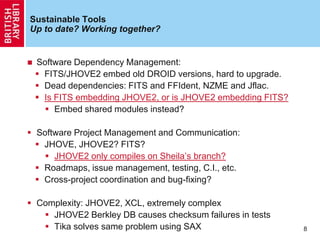

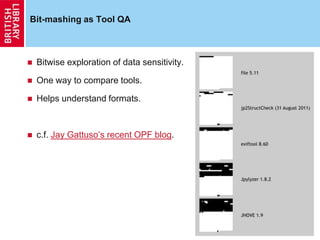

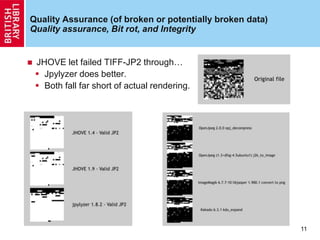

This document discusses issues in digital preservation characterization tools and recommends ways to improve them. It covers four needs: quality assurance of broken or damaged data, appraisal of unknown file formats, identification of preservation risks, and sustainable tool development. The specific issues it highlights are outdated dependencies, a lack of coordination among tools that do similar jobs, and complexity that makes tools difficult to maintain. The document recommends adopting shared test corpora and test frameworks, so that results from different tools can be compared and development efforts coordinated. Unifying characterization efforts and building on existing, sustainable projects could address many of the issues raised.