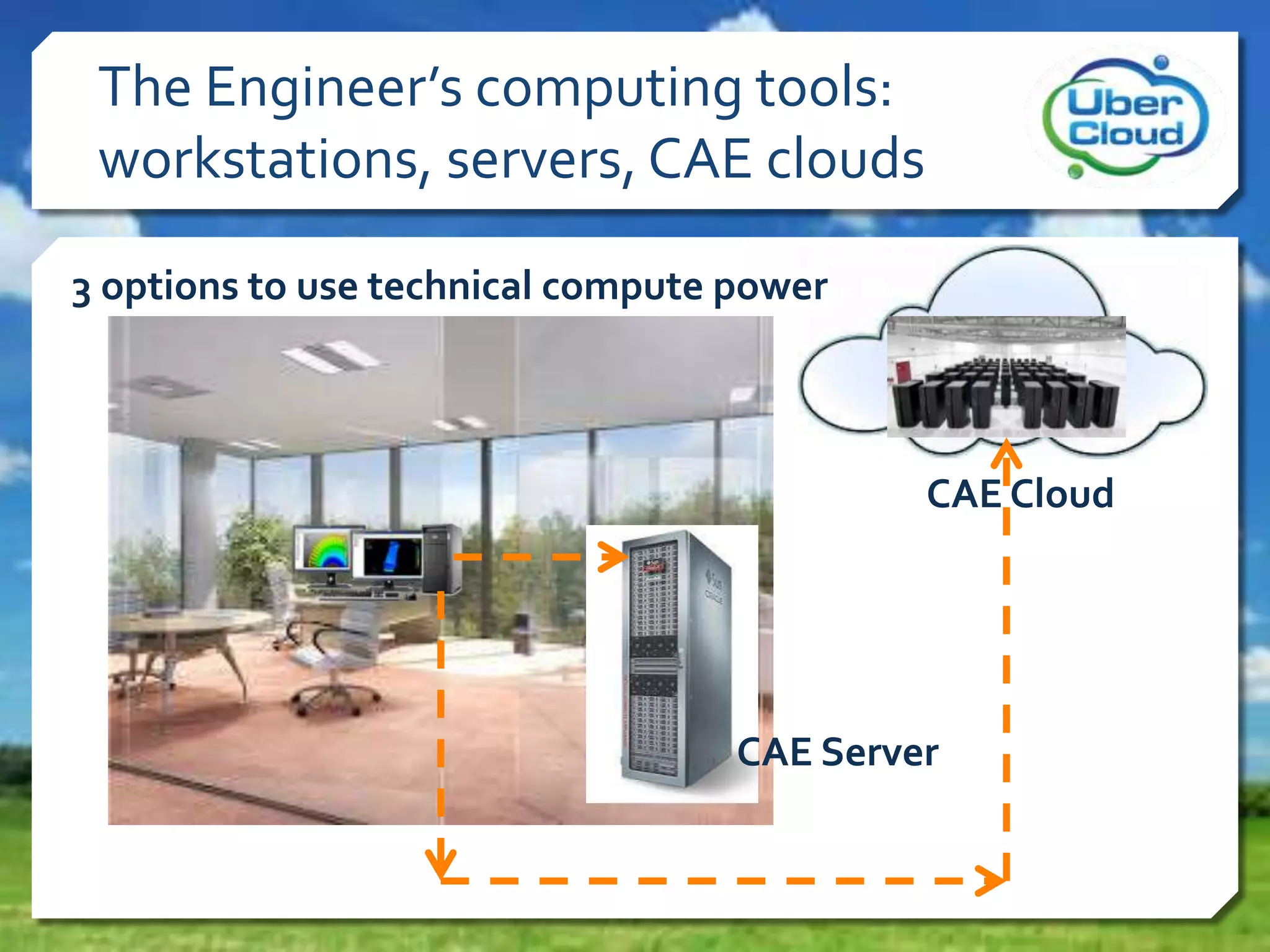

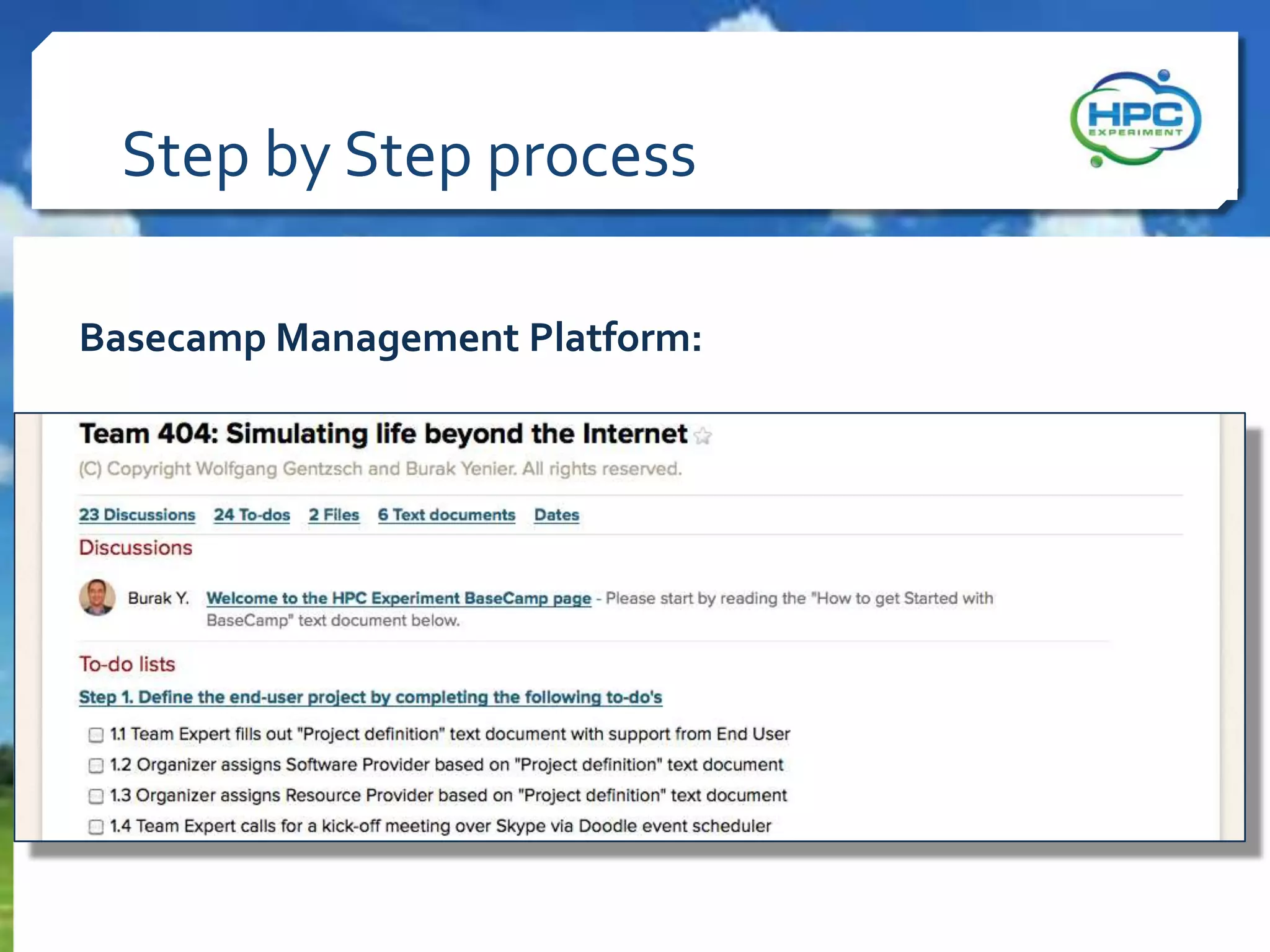

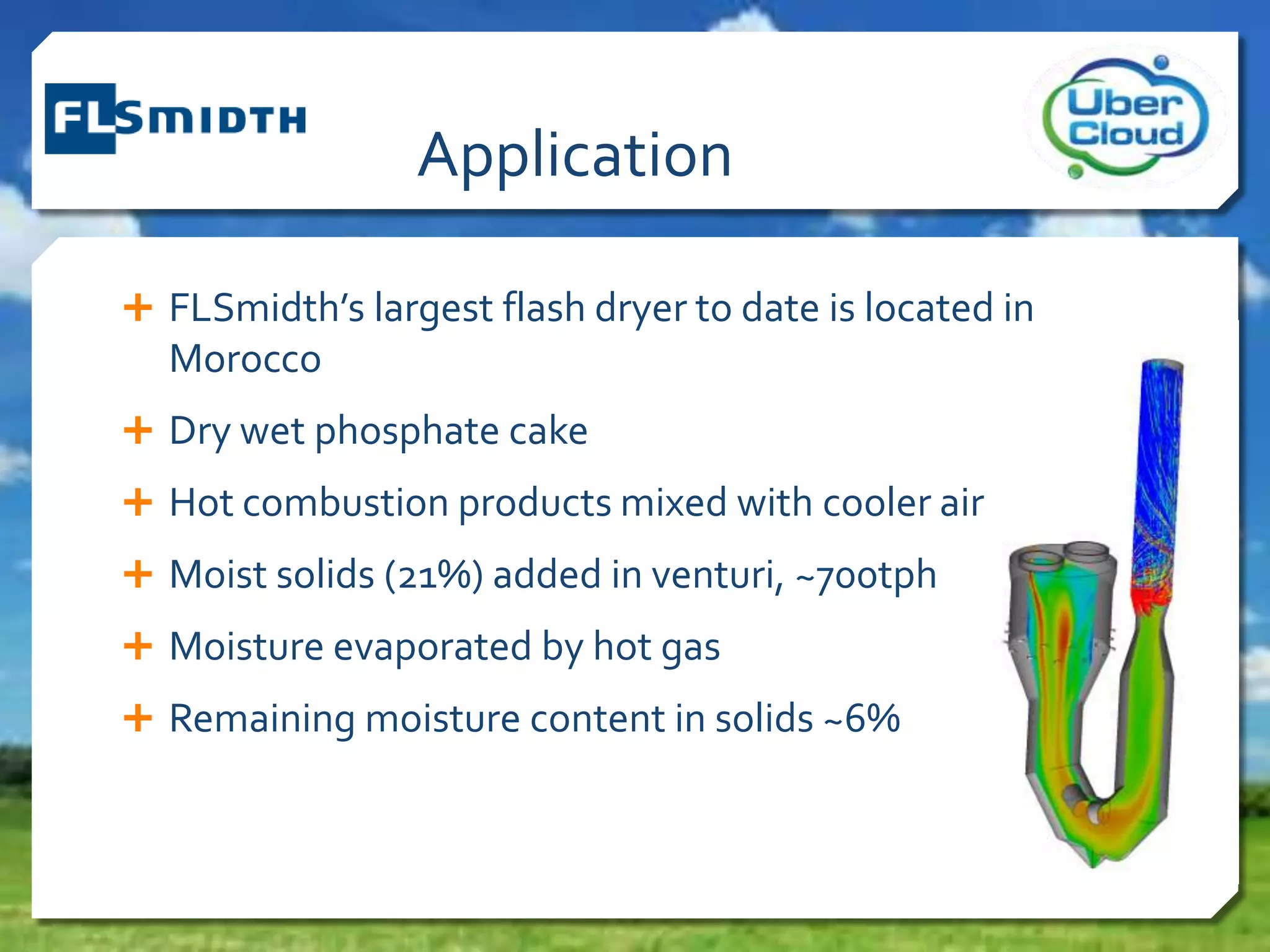

The UberCloud Experiment, launched in mid-2012, addresses challenges in technical computing for digital manufacturing and involves more than 1,300 participants and 55 cloud providers. It promotes the use of High Performance Computing (HPC) and tackles barriers such as complexity, data security, and software licensing. Participants share their experiences, and the lessons learned highlight the benefits of cloud HPC, including faster turnaround times and outsourced hardware management.