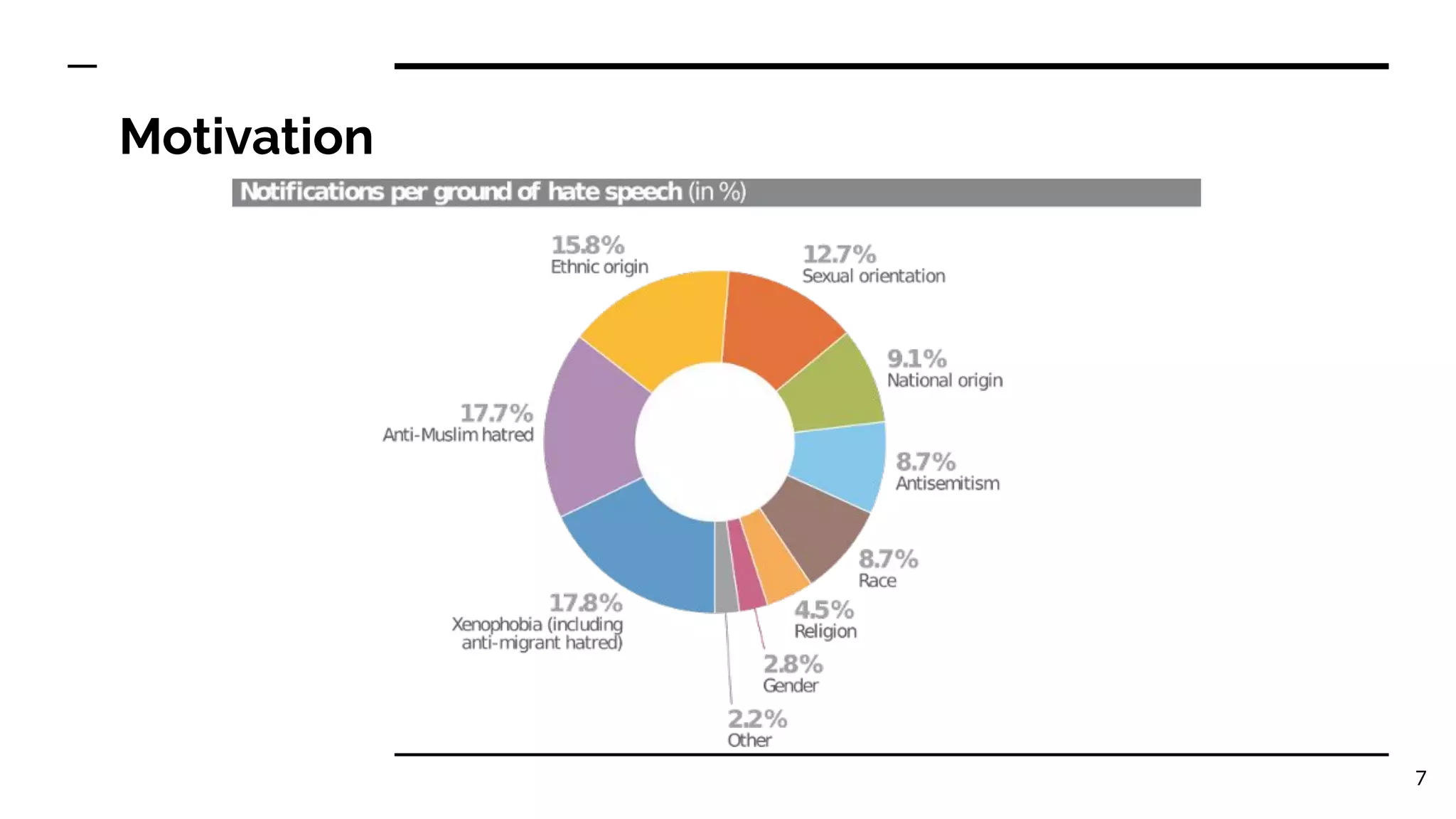

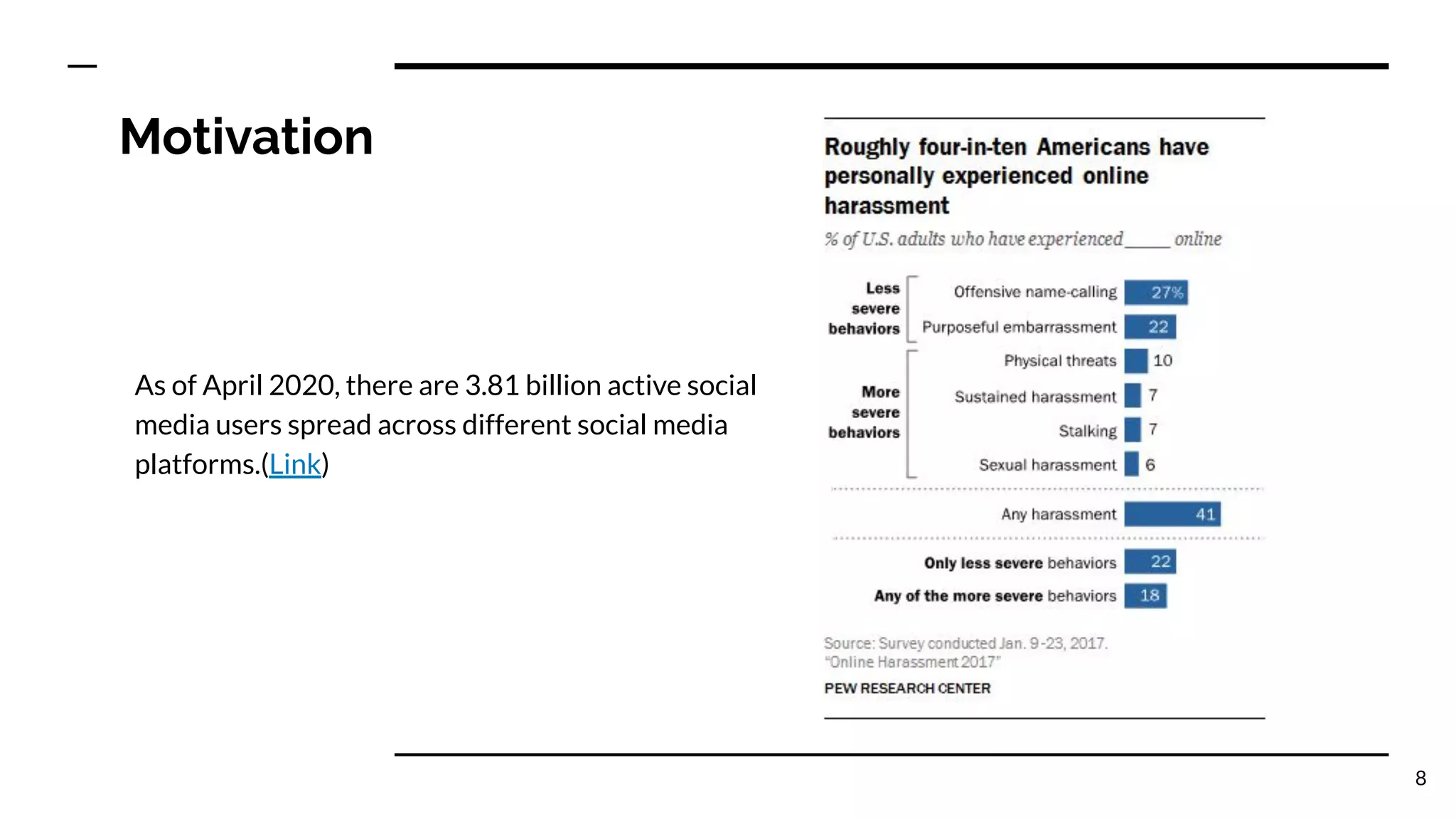

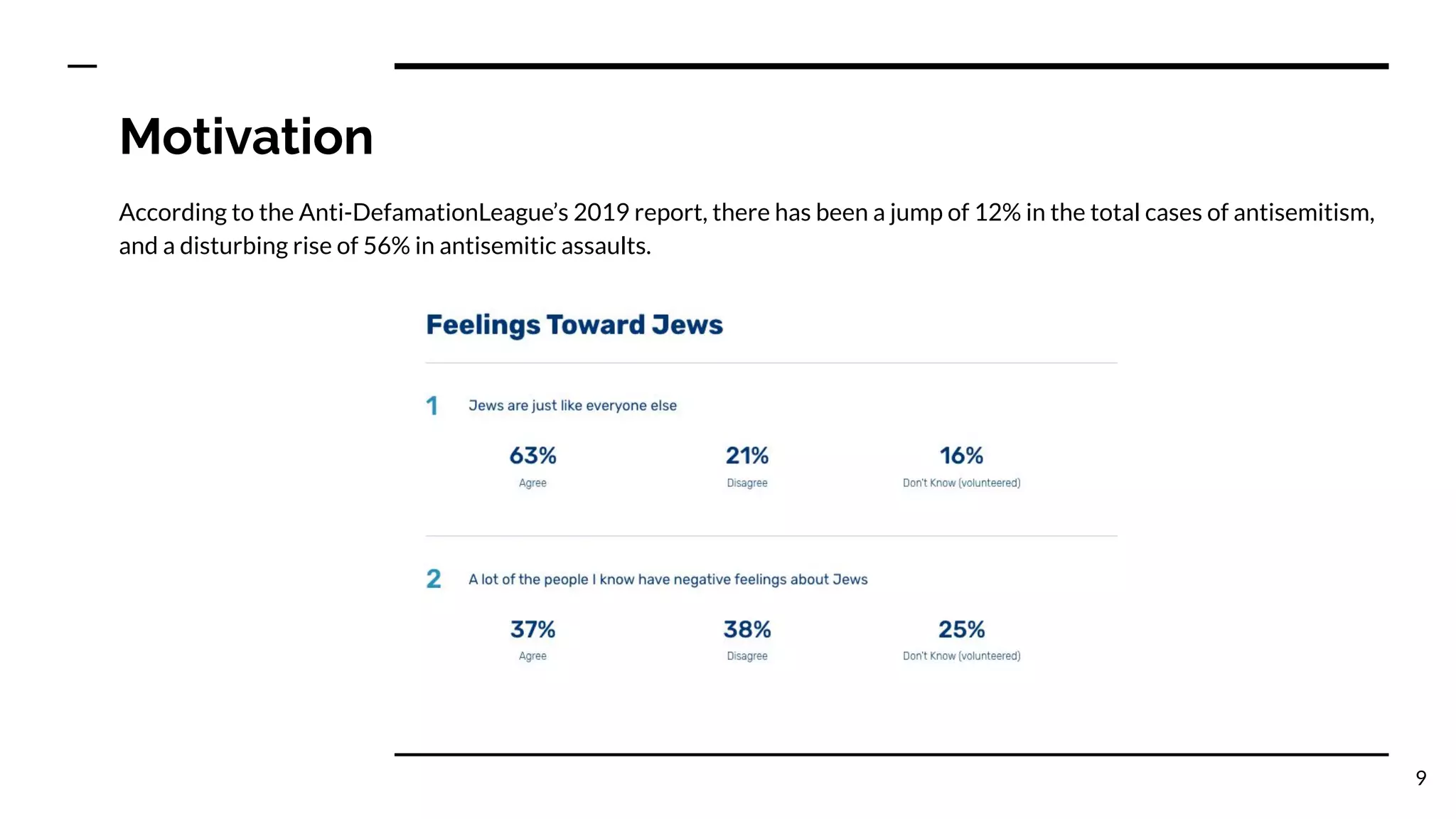

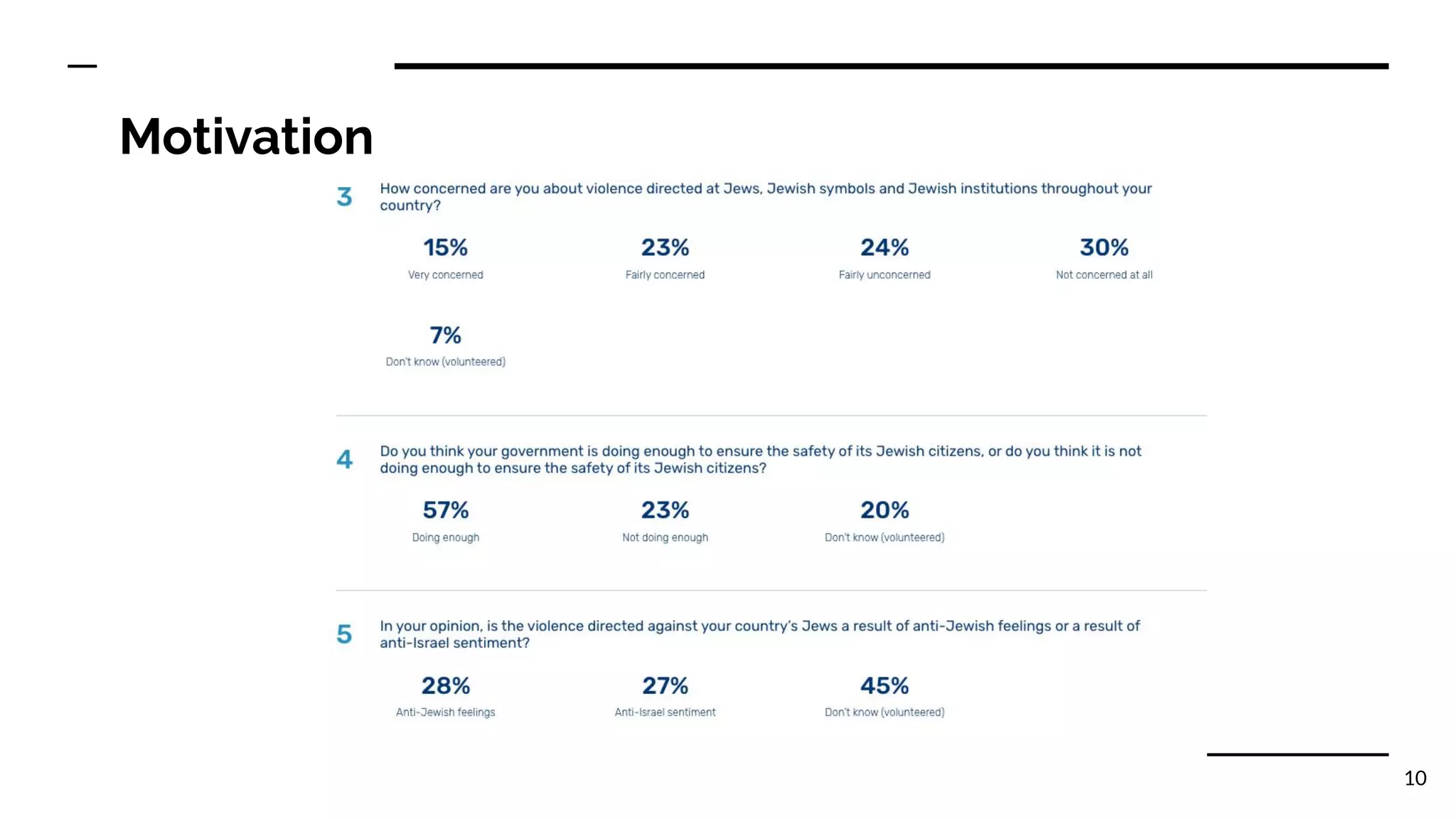

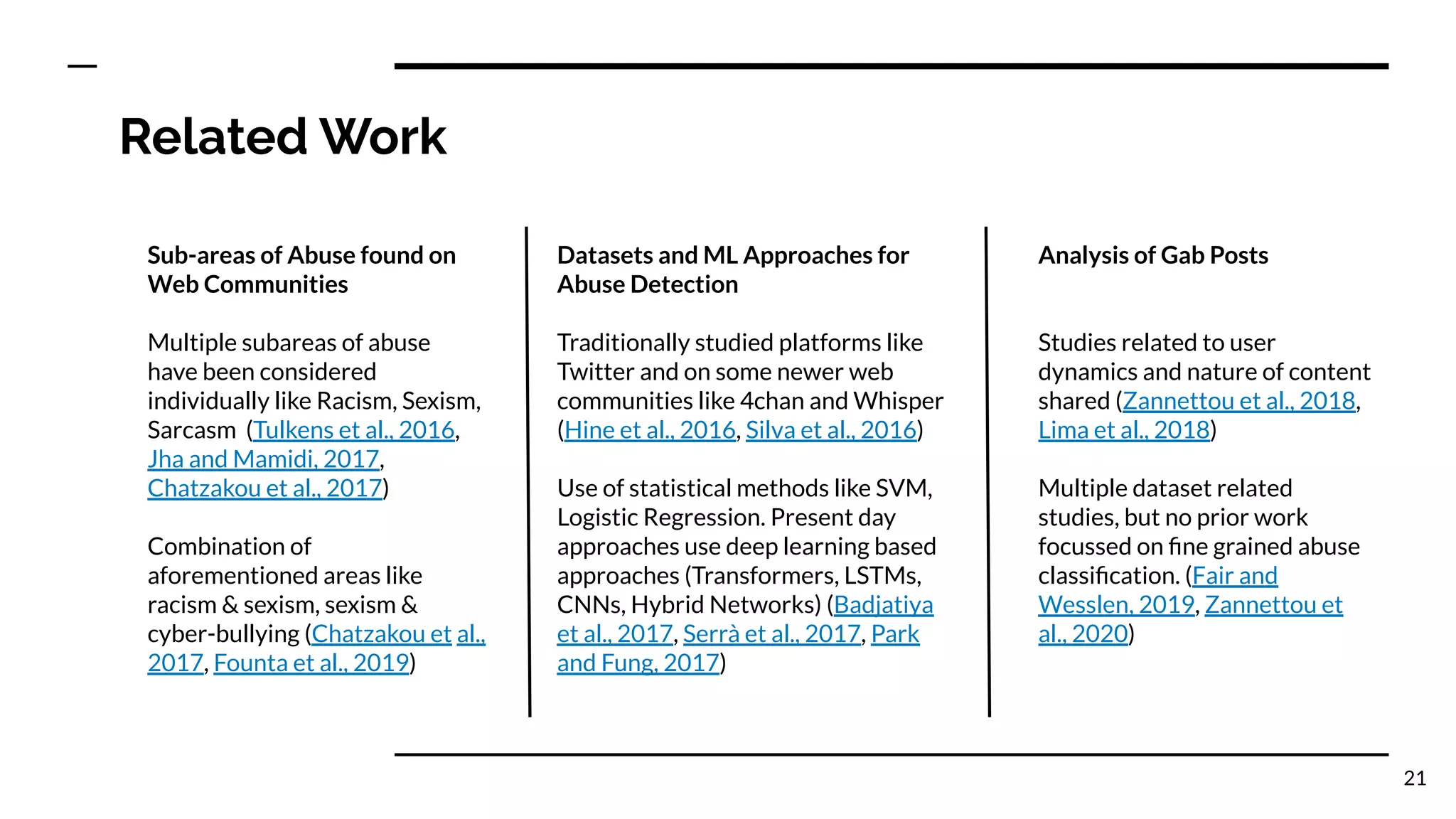

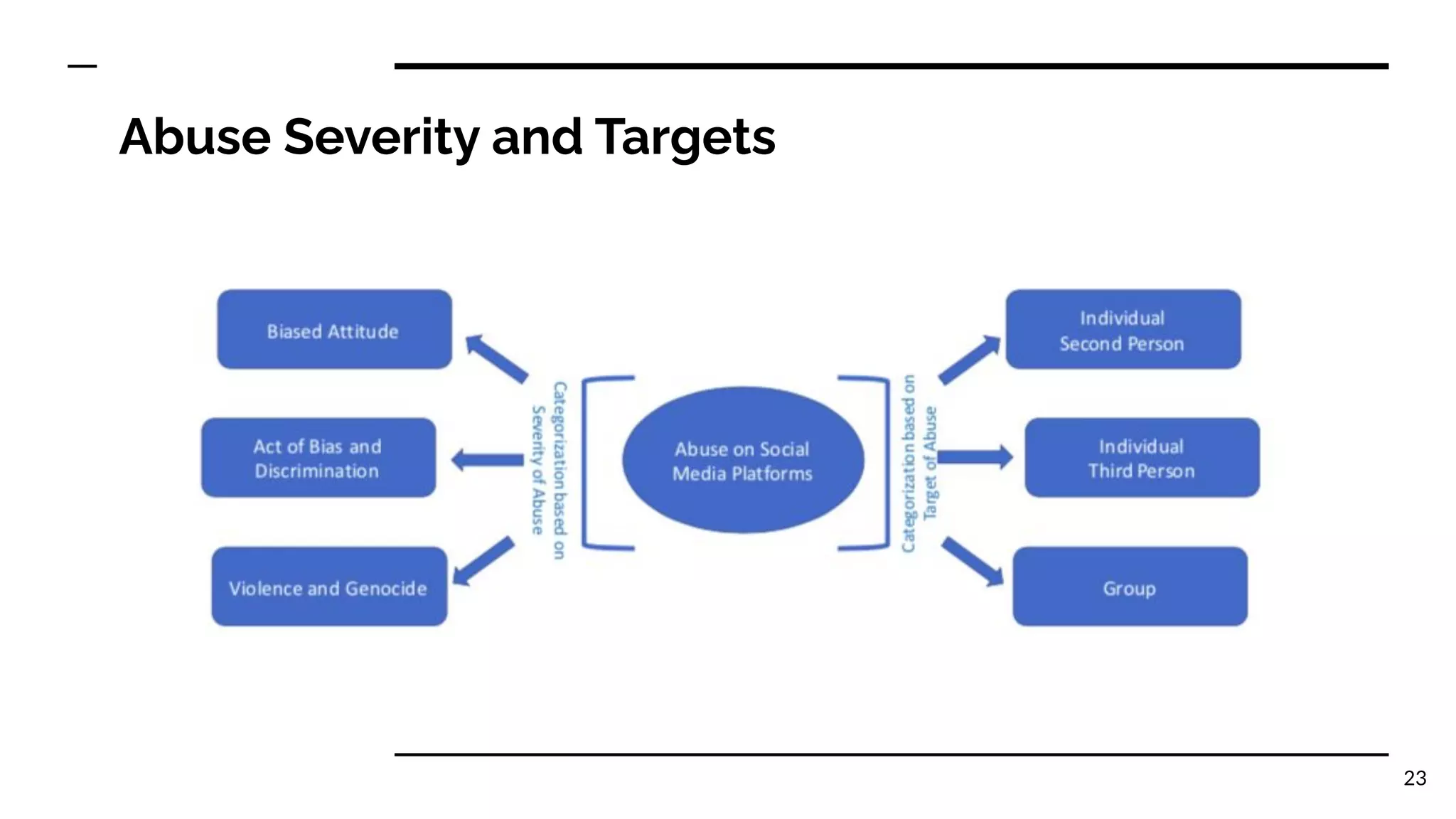

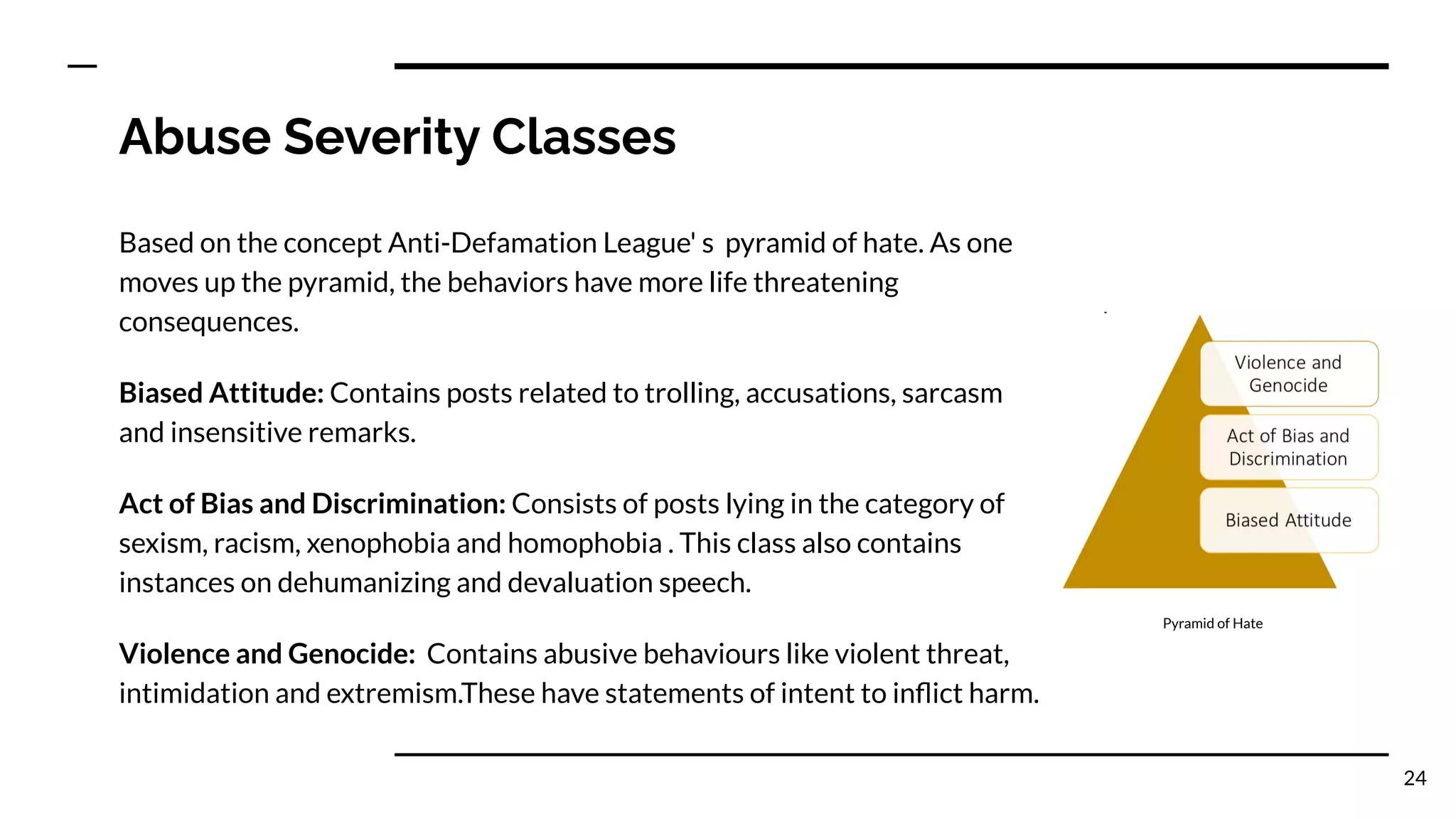

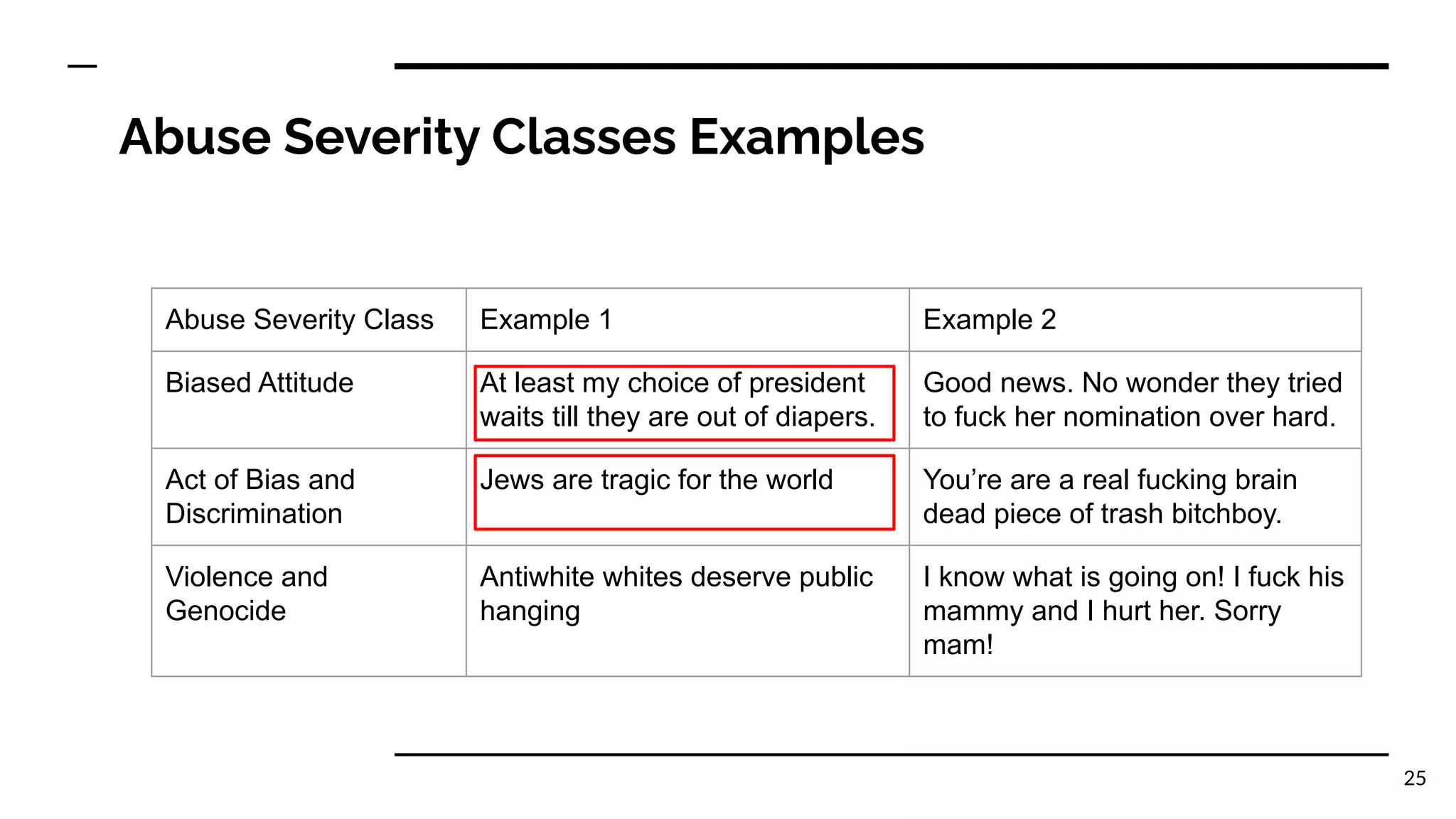

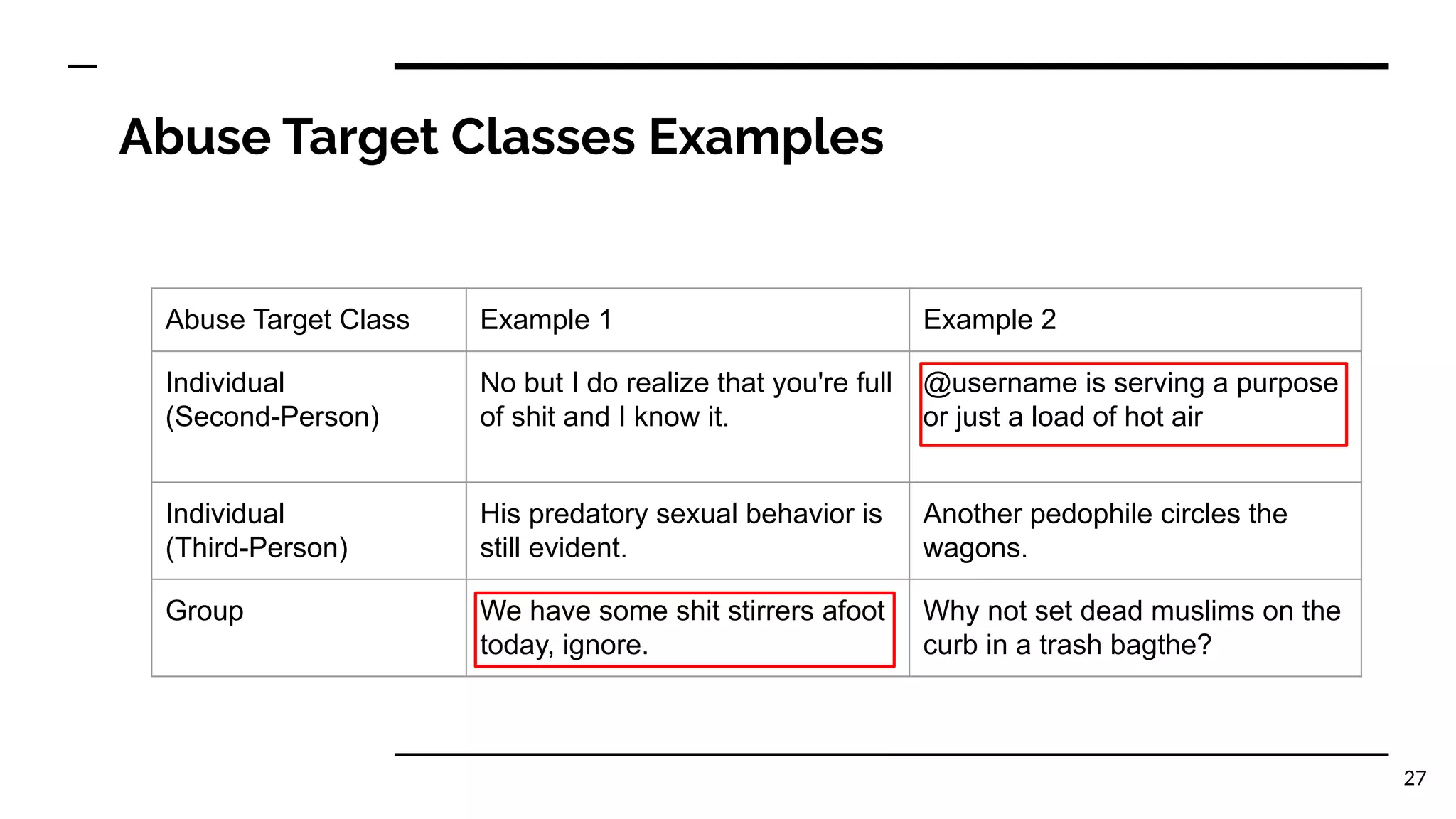

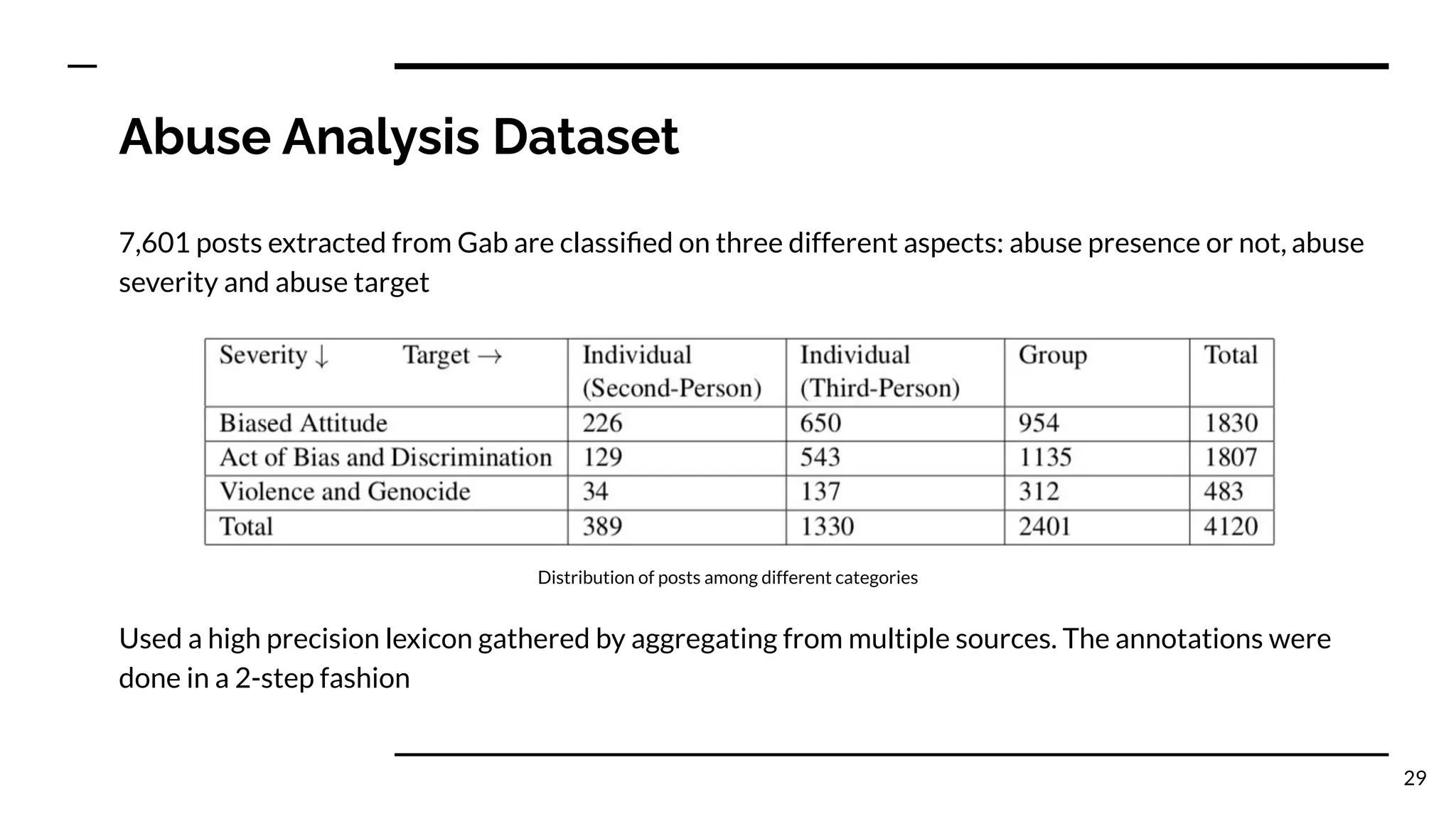

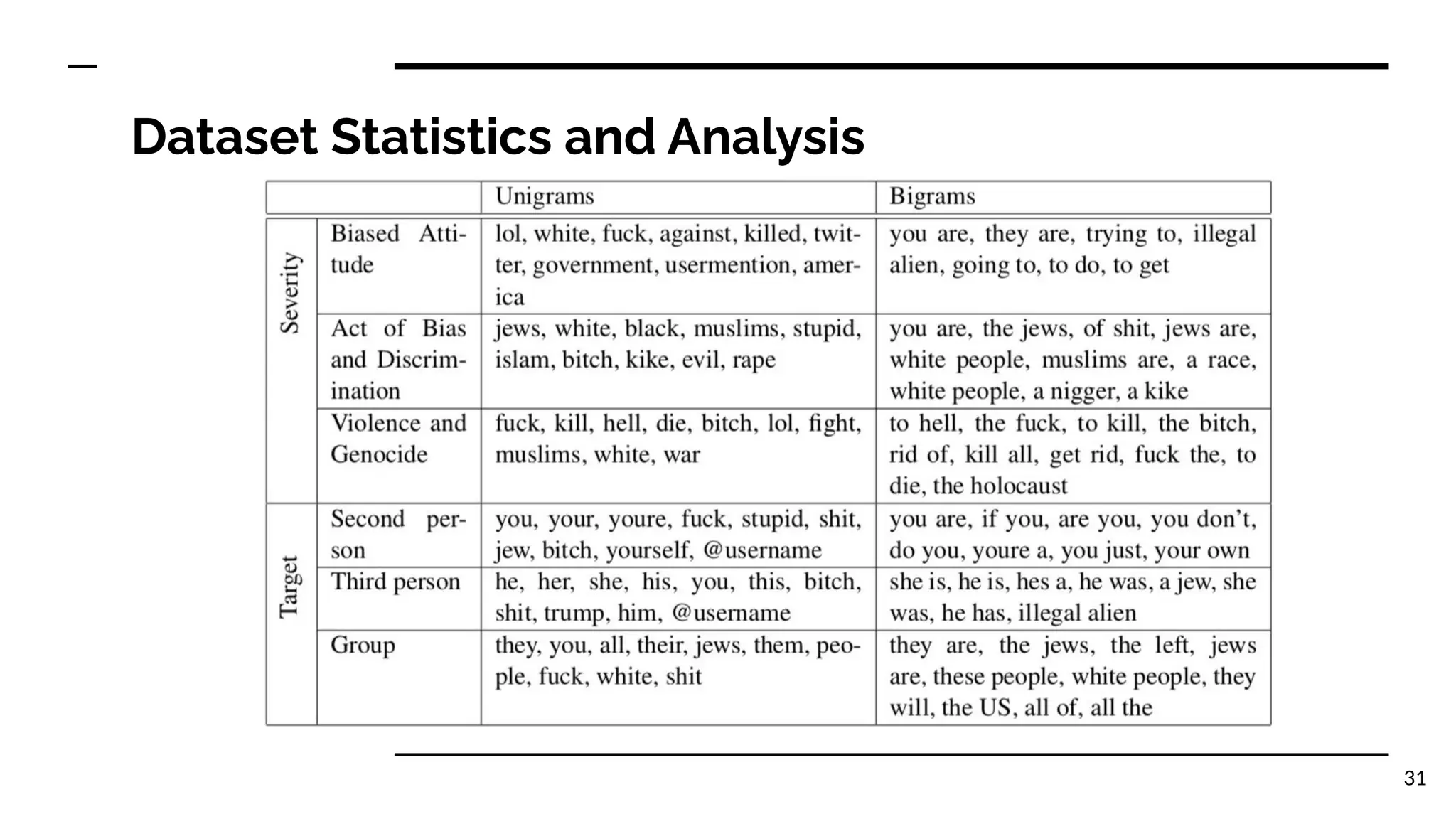

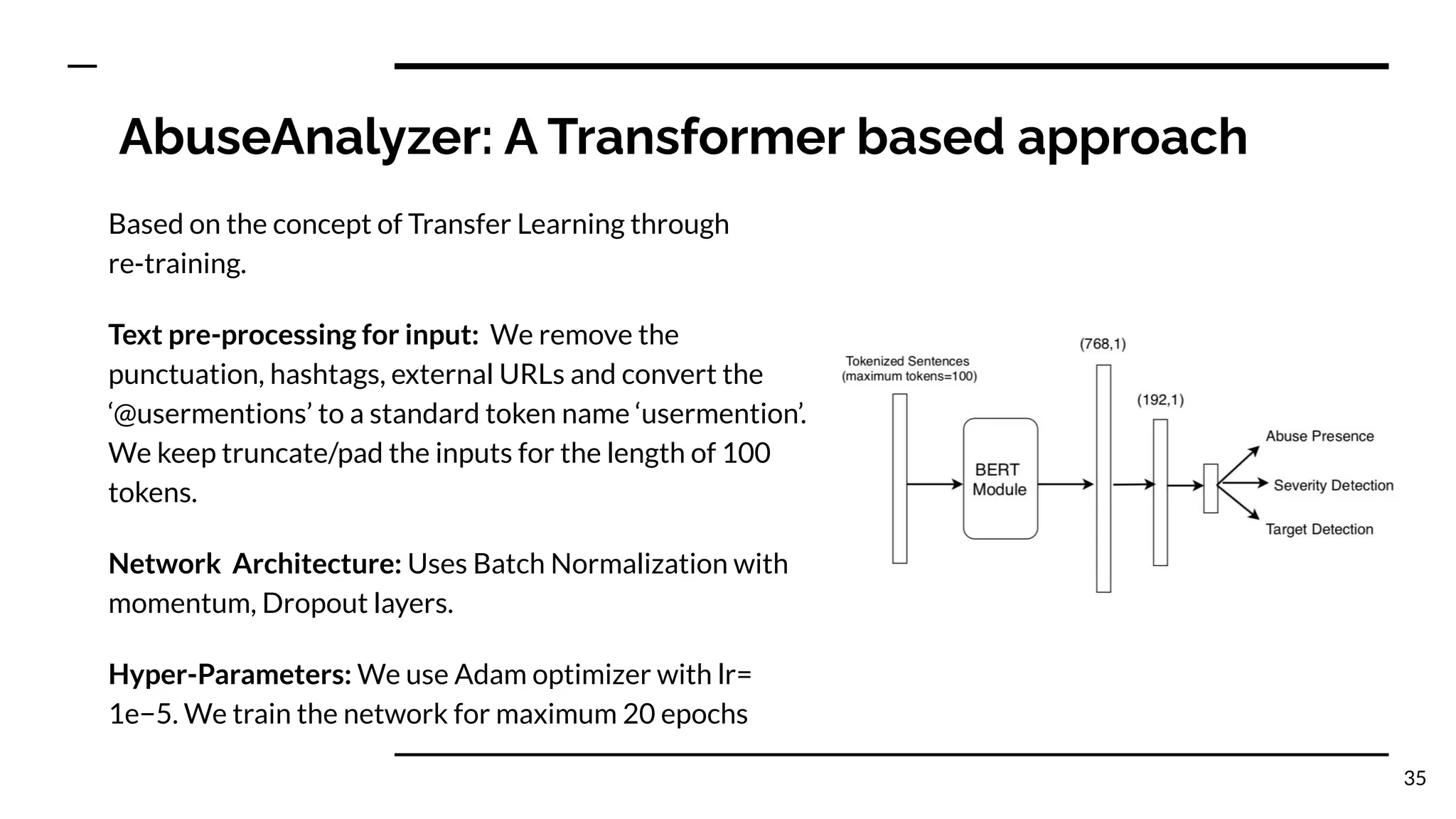

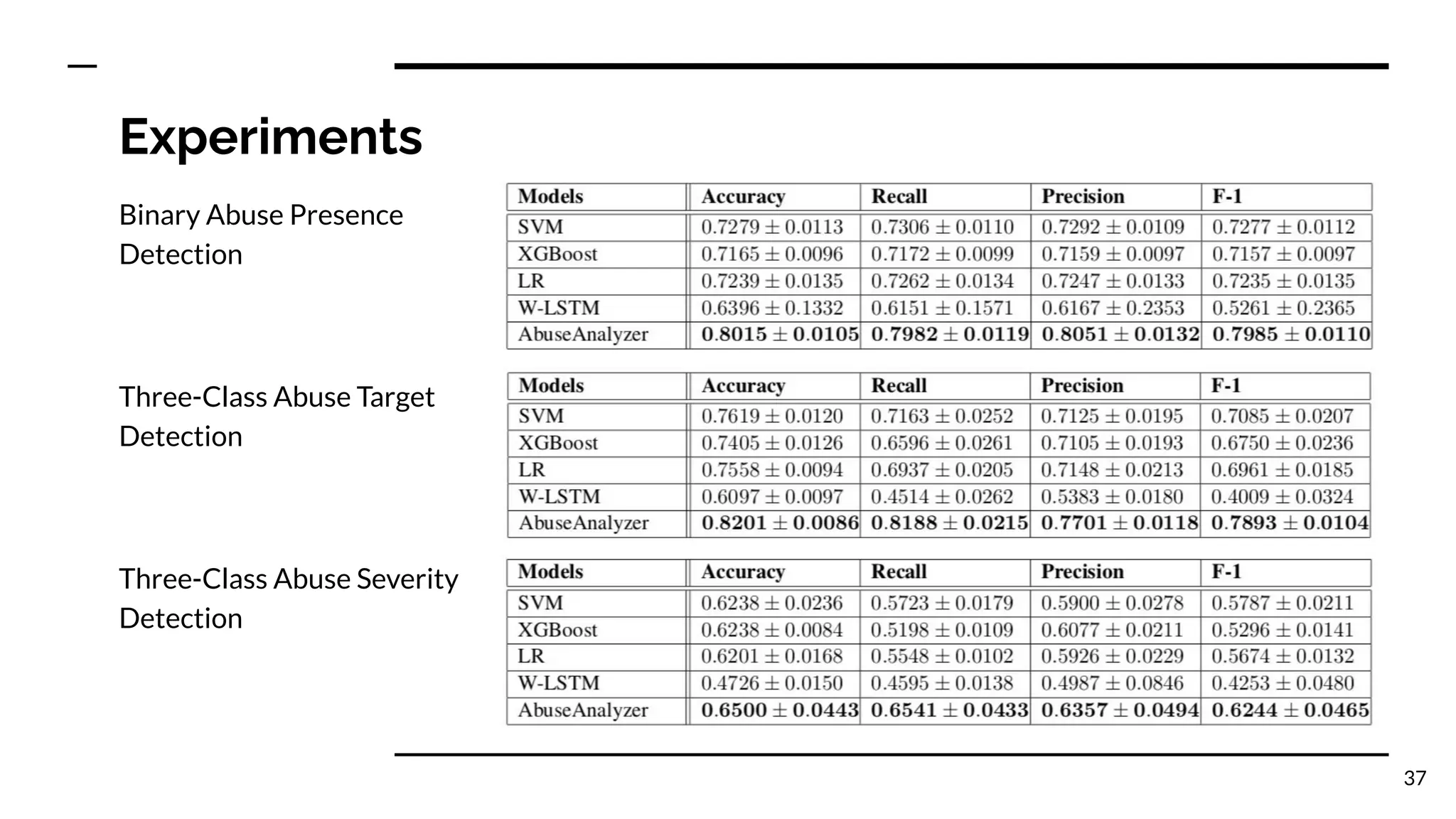

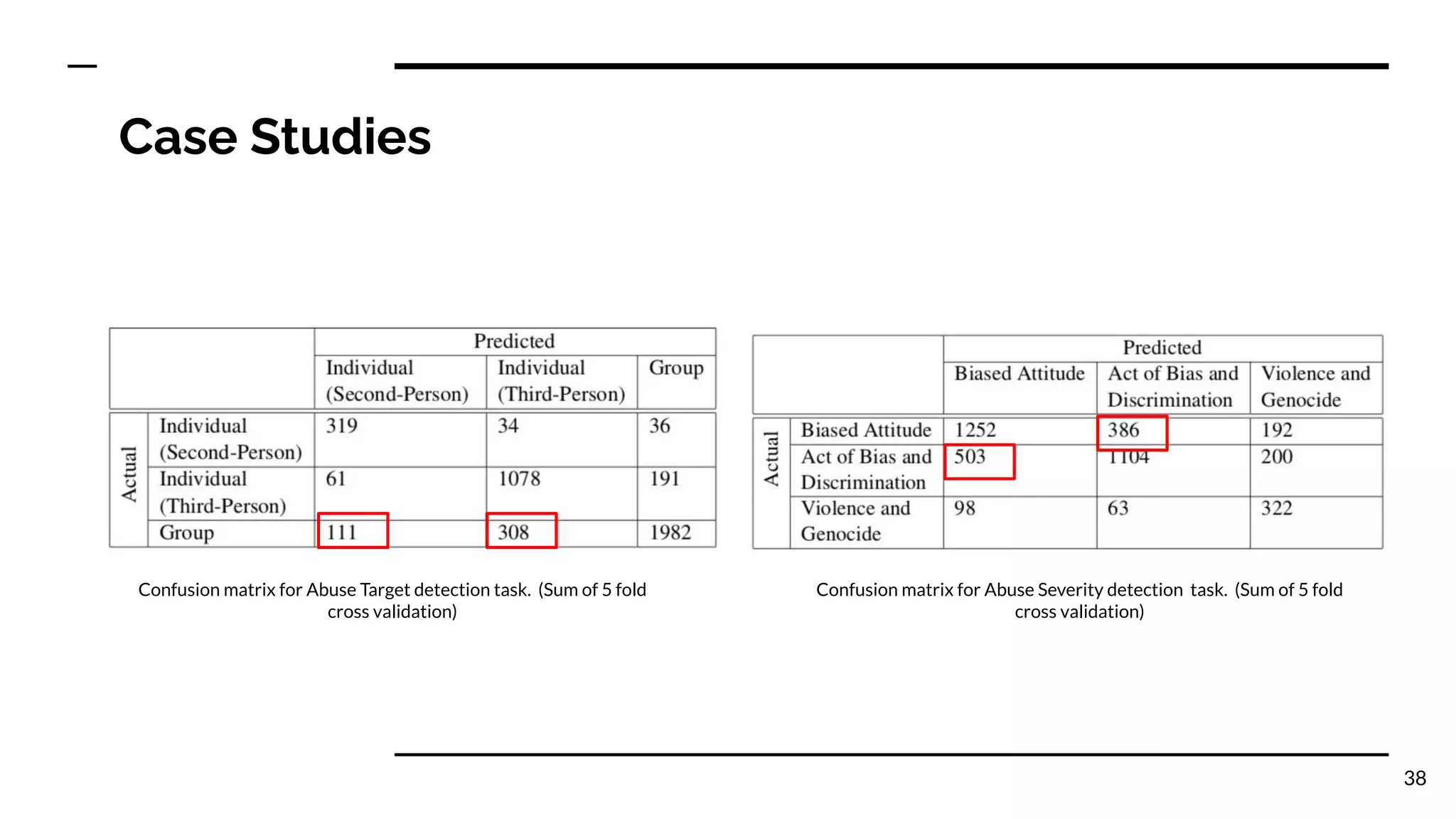

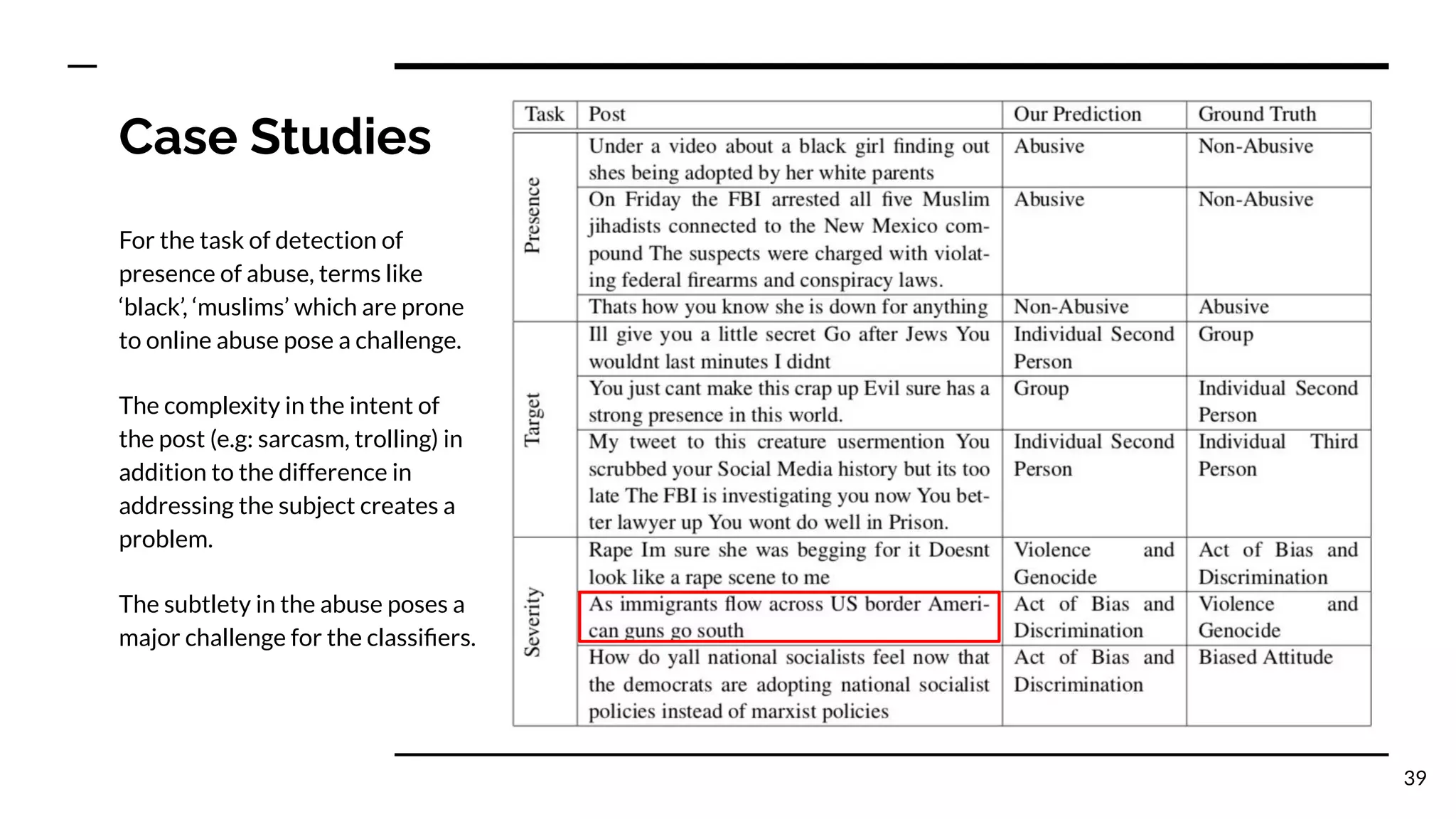

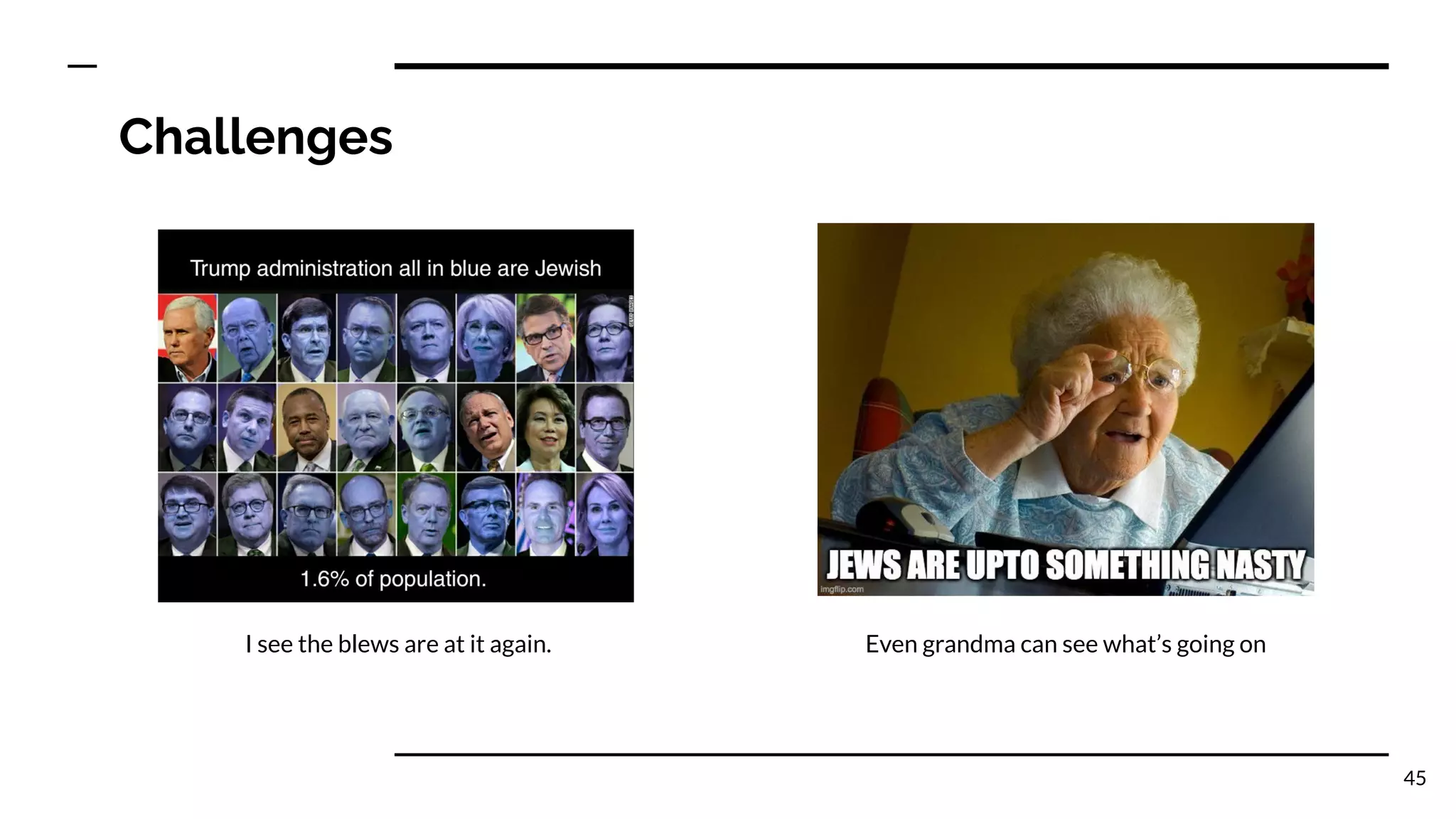

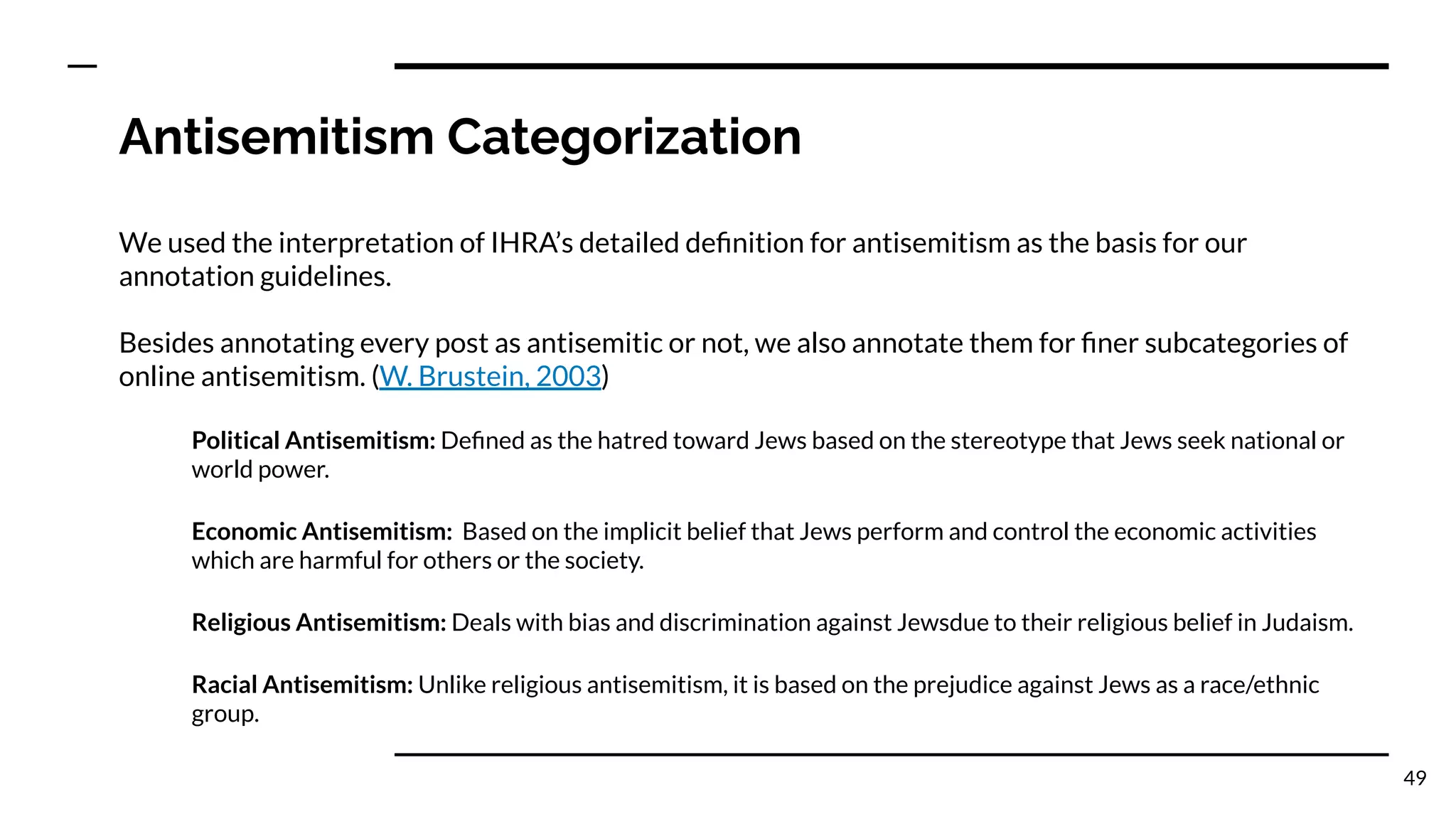

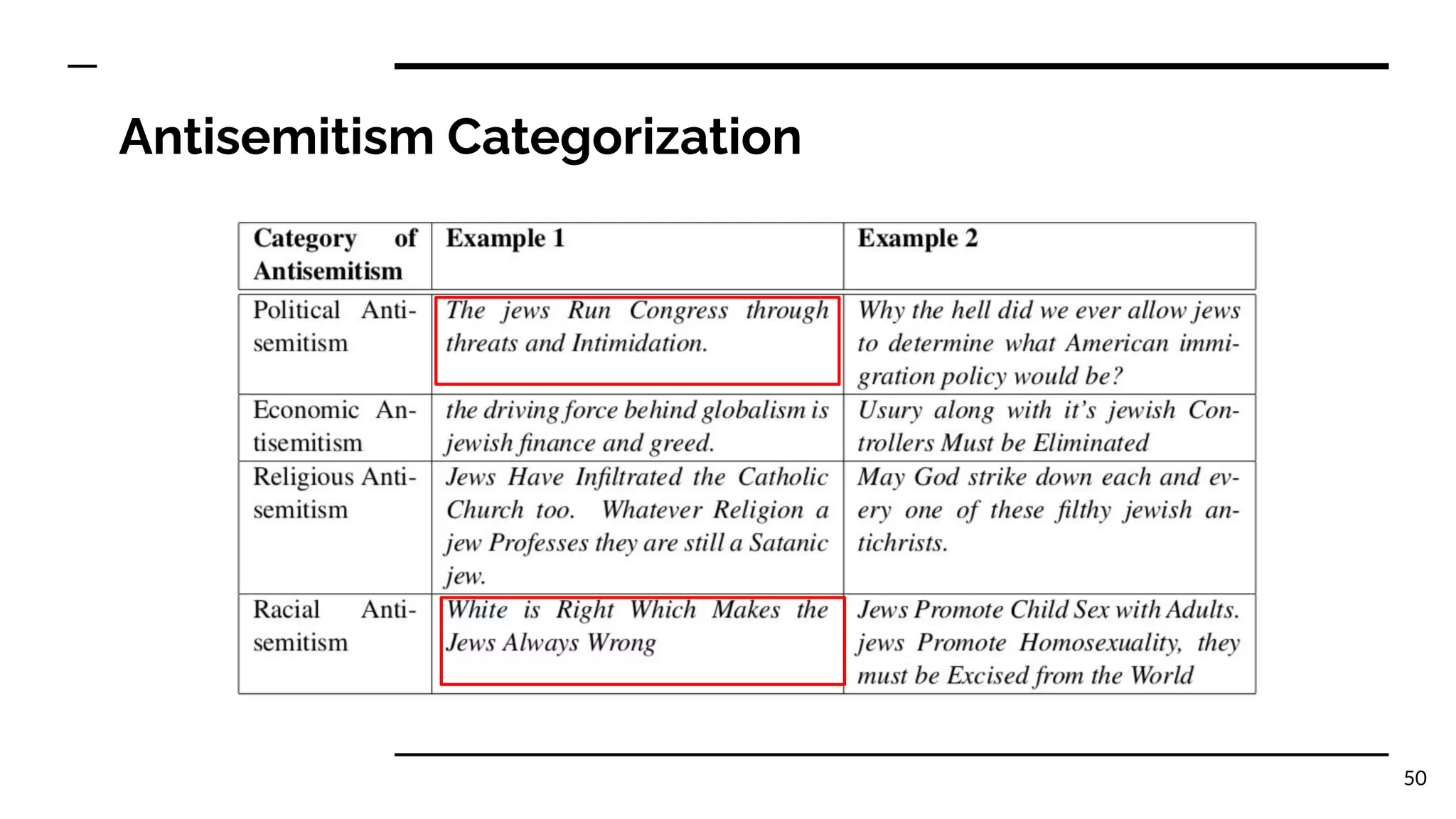

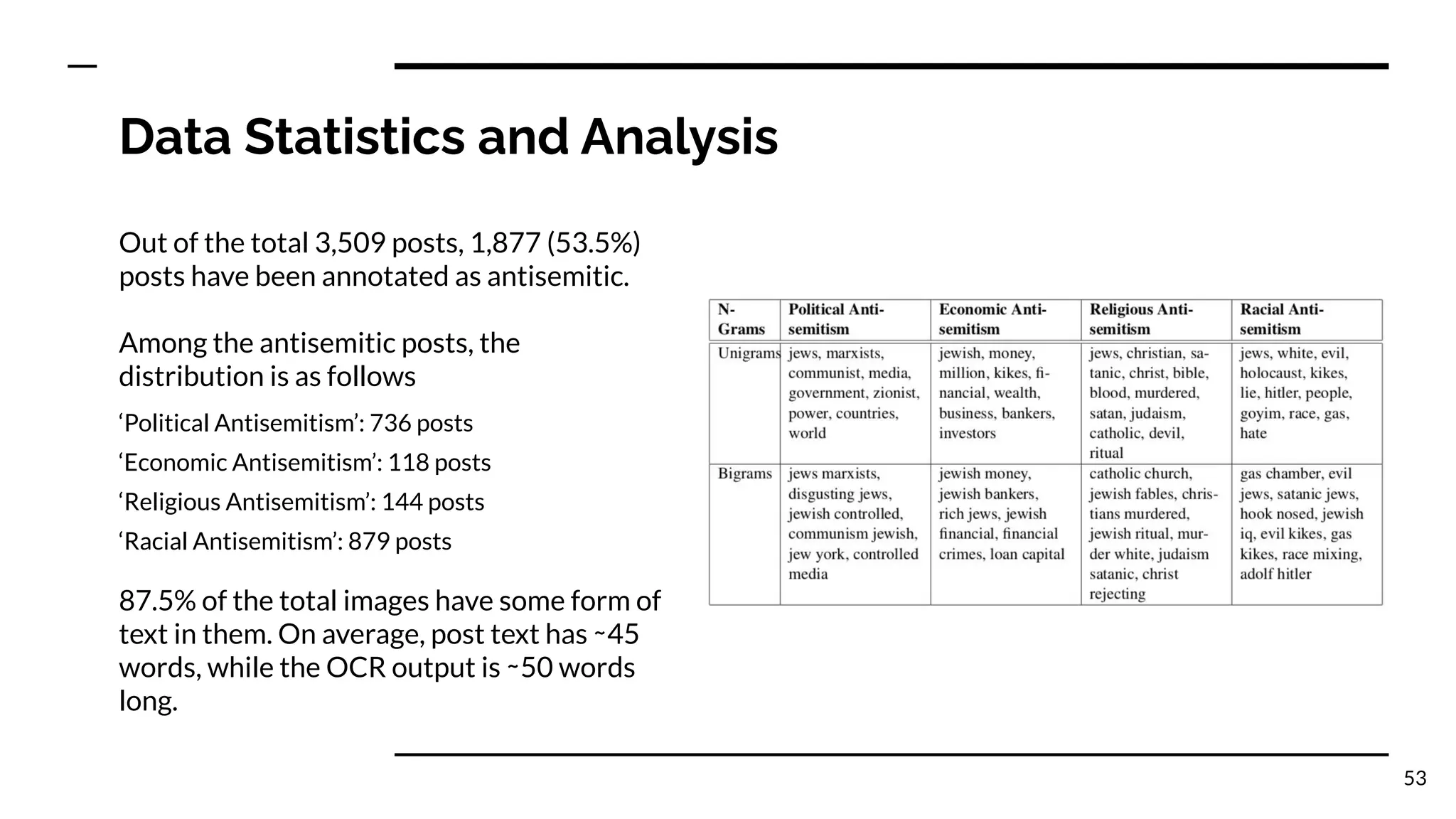

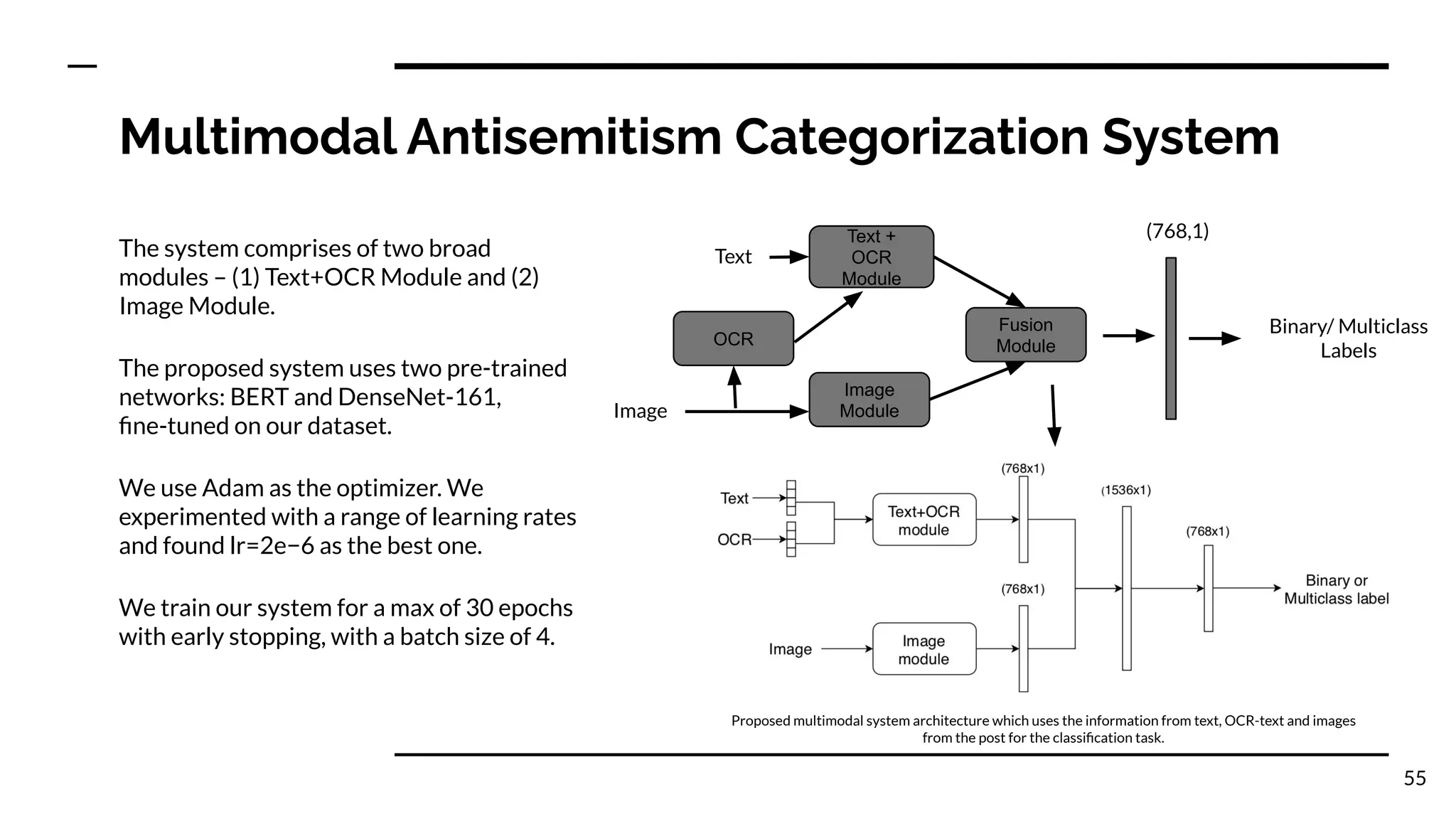

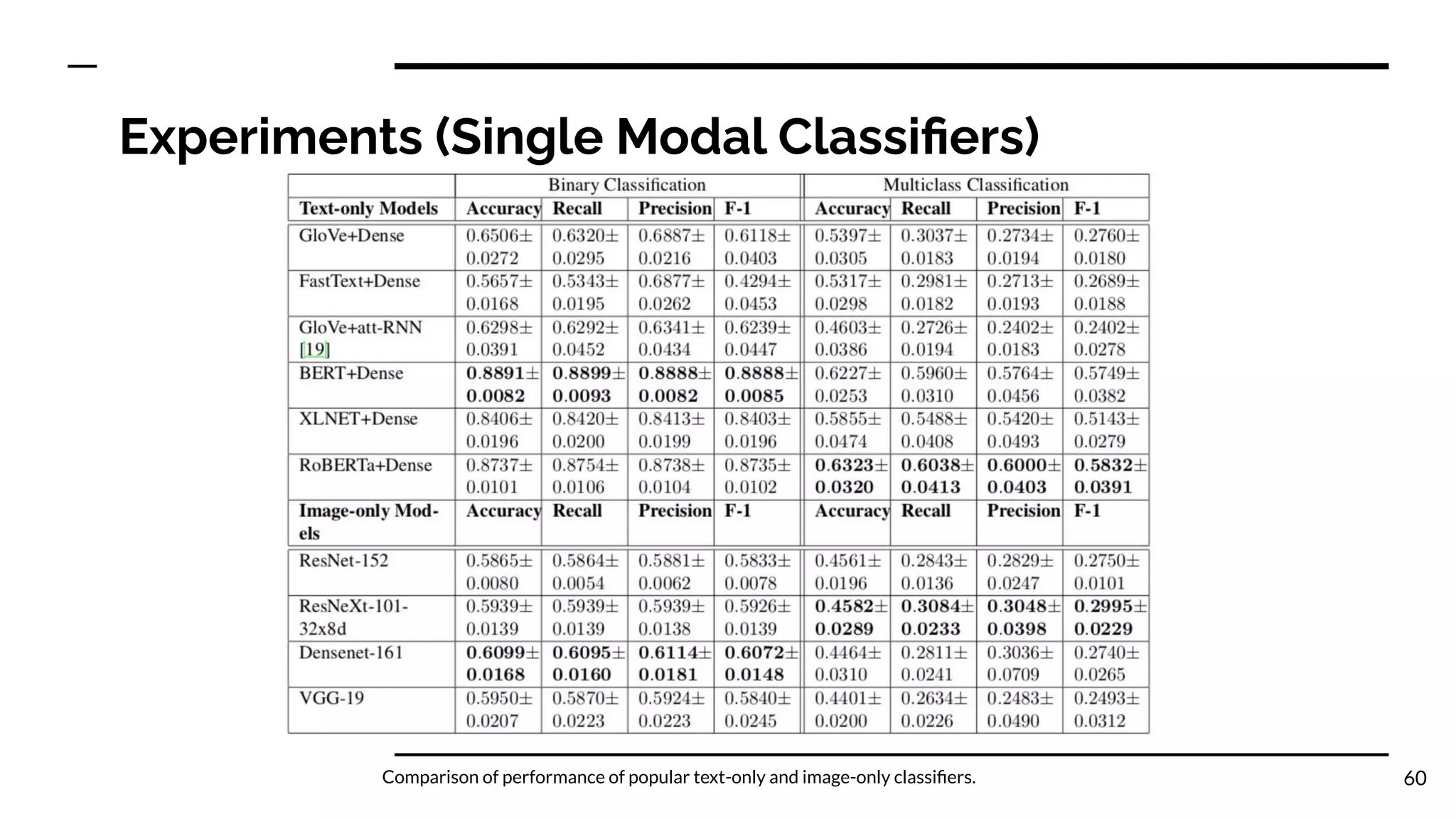

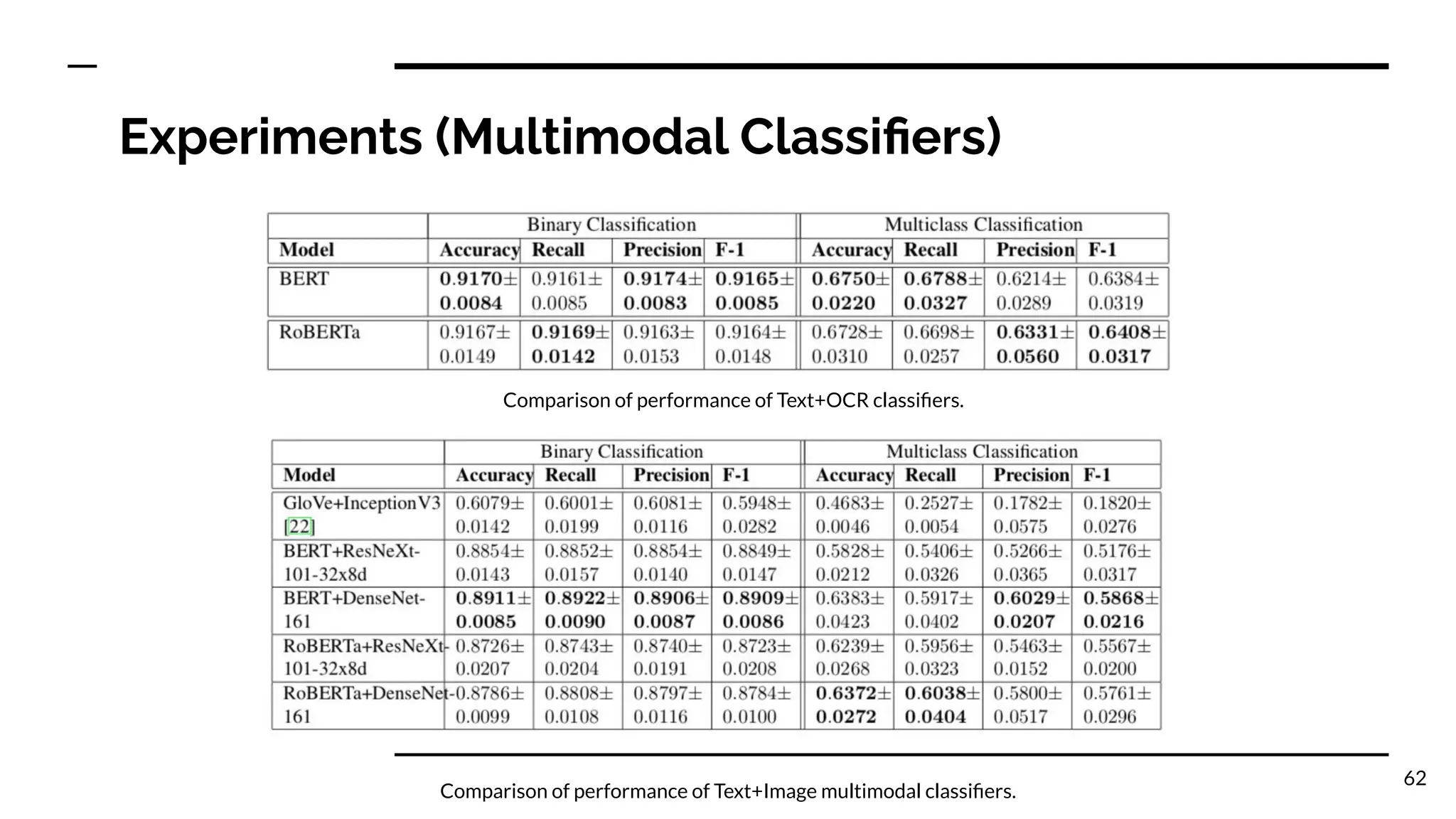

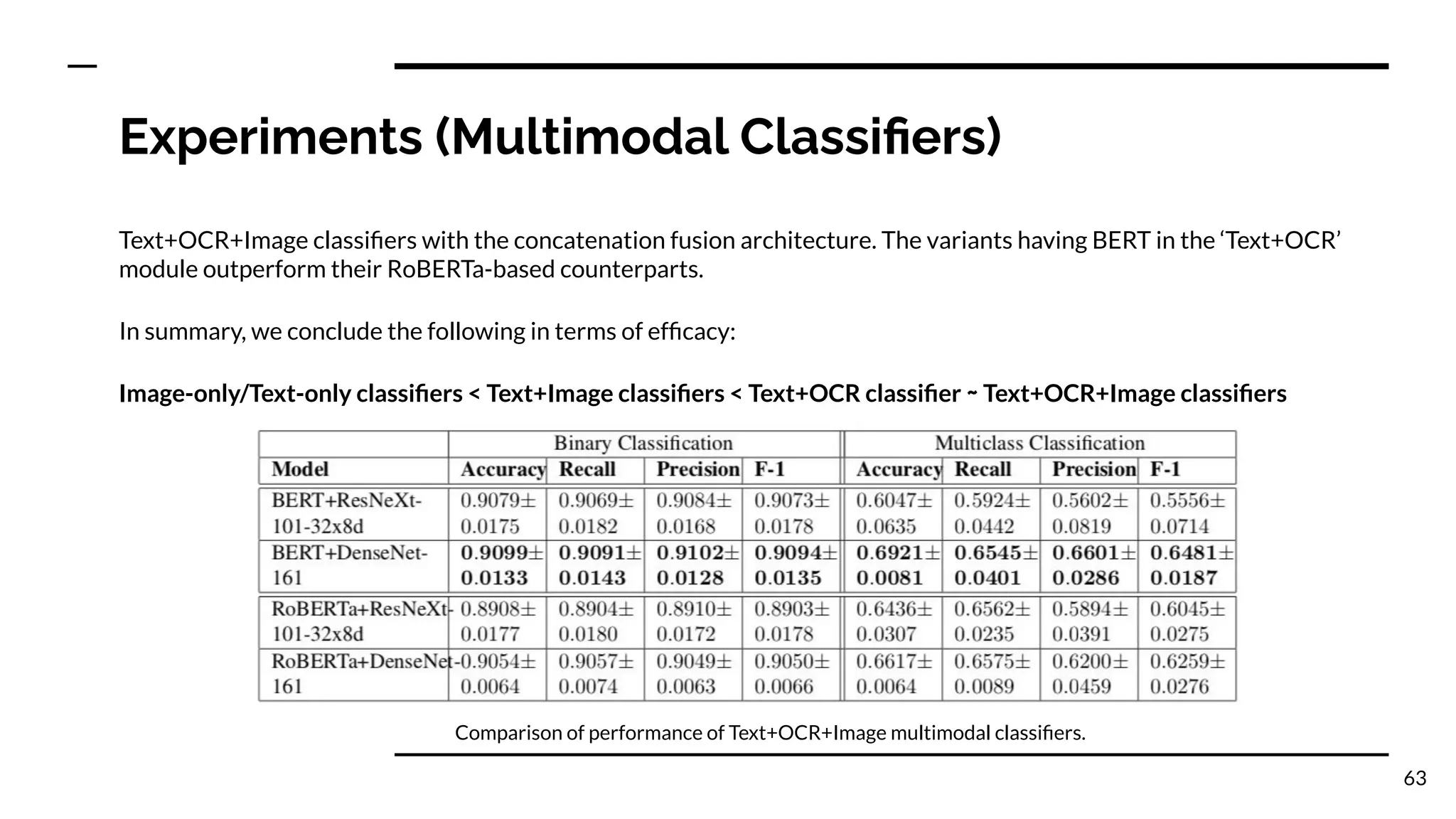

The document discusses online abuse and antisemitism, highlighting the rise in incidents on social media platforms, particularly Gab, which has become a significant venue for such behavior because of its lenient moderation policies. It outlines a proposed multimodal deep learning approach for detecting online antisemitism and abuse, including the analysis of text and image data collected from Gab posts. The work emphasizes the importance of understanding the severity of abuse and categorizing its types, and it addresses the challenges of detection and of applying machine learning in this field.