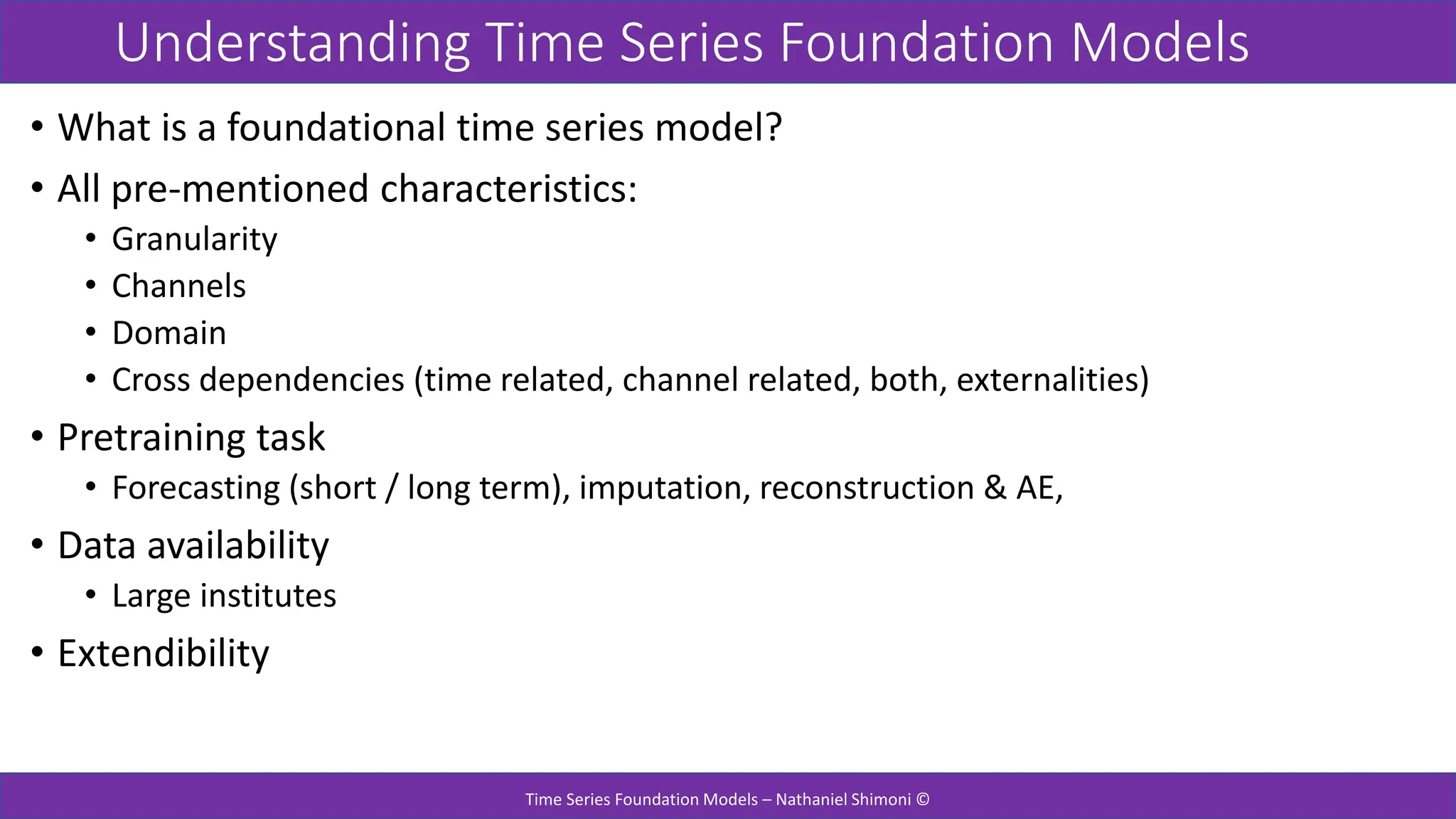

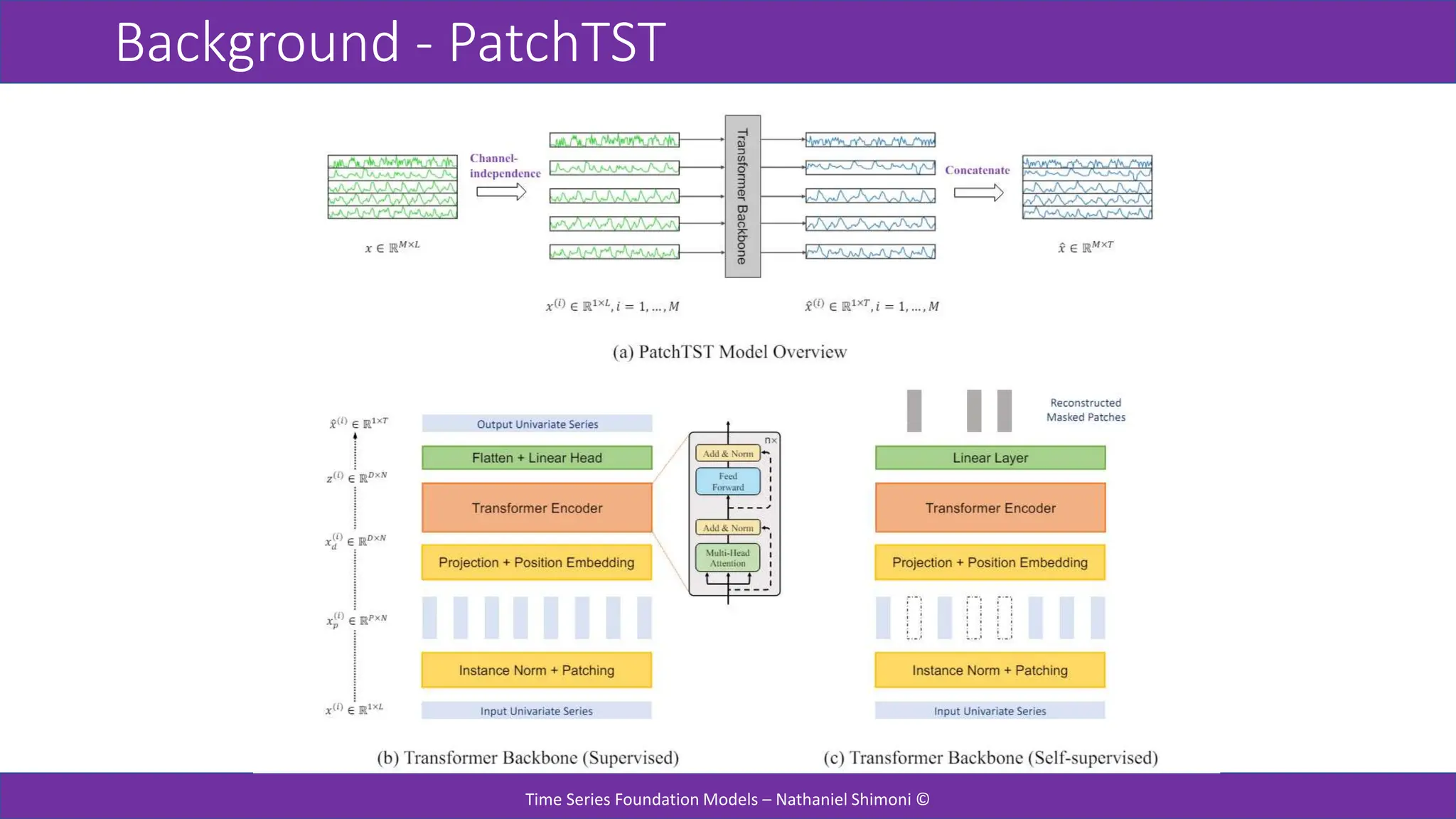

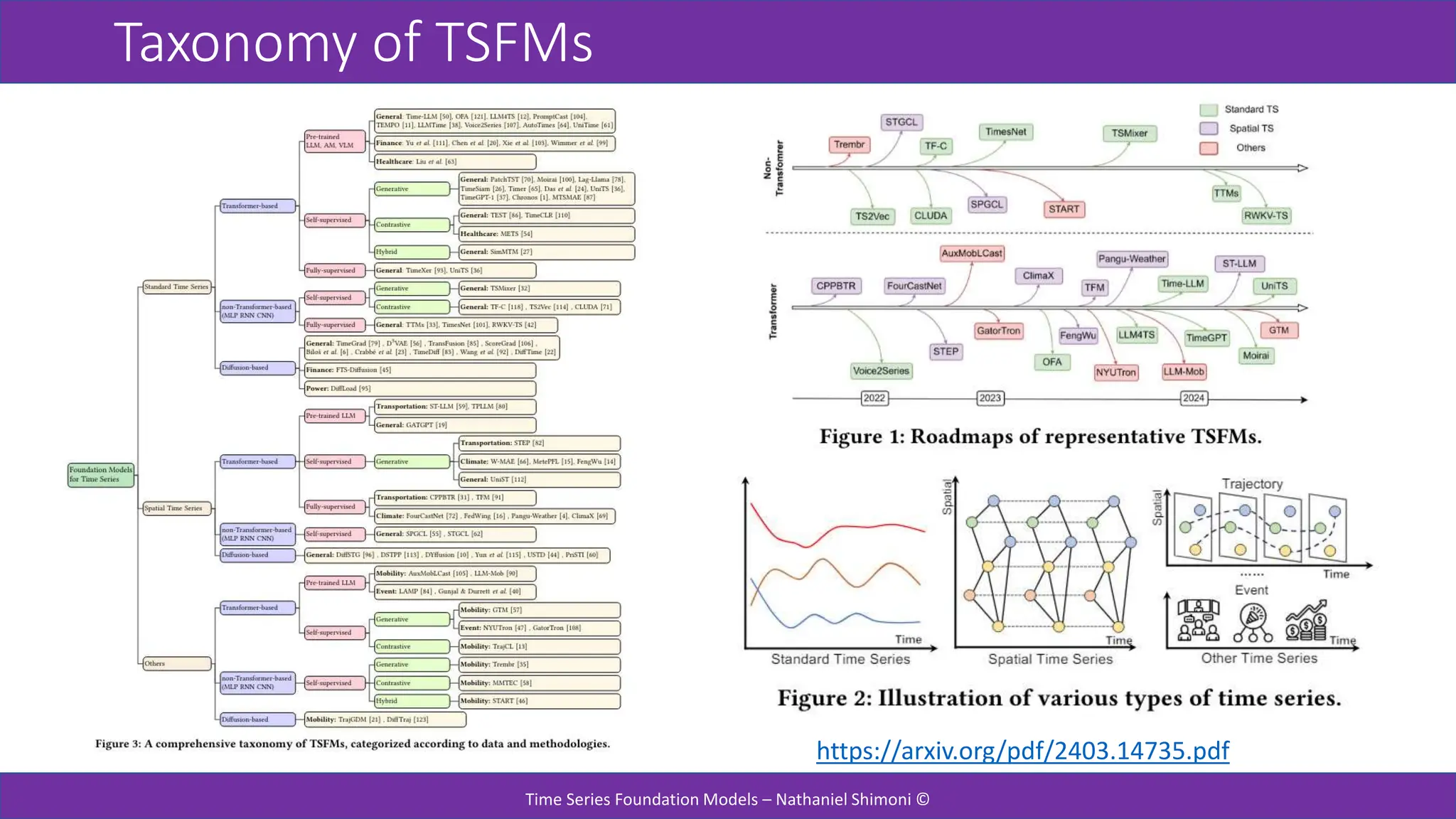

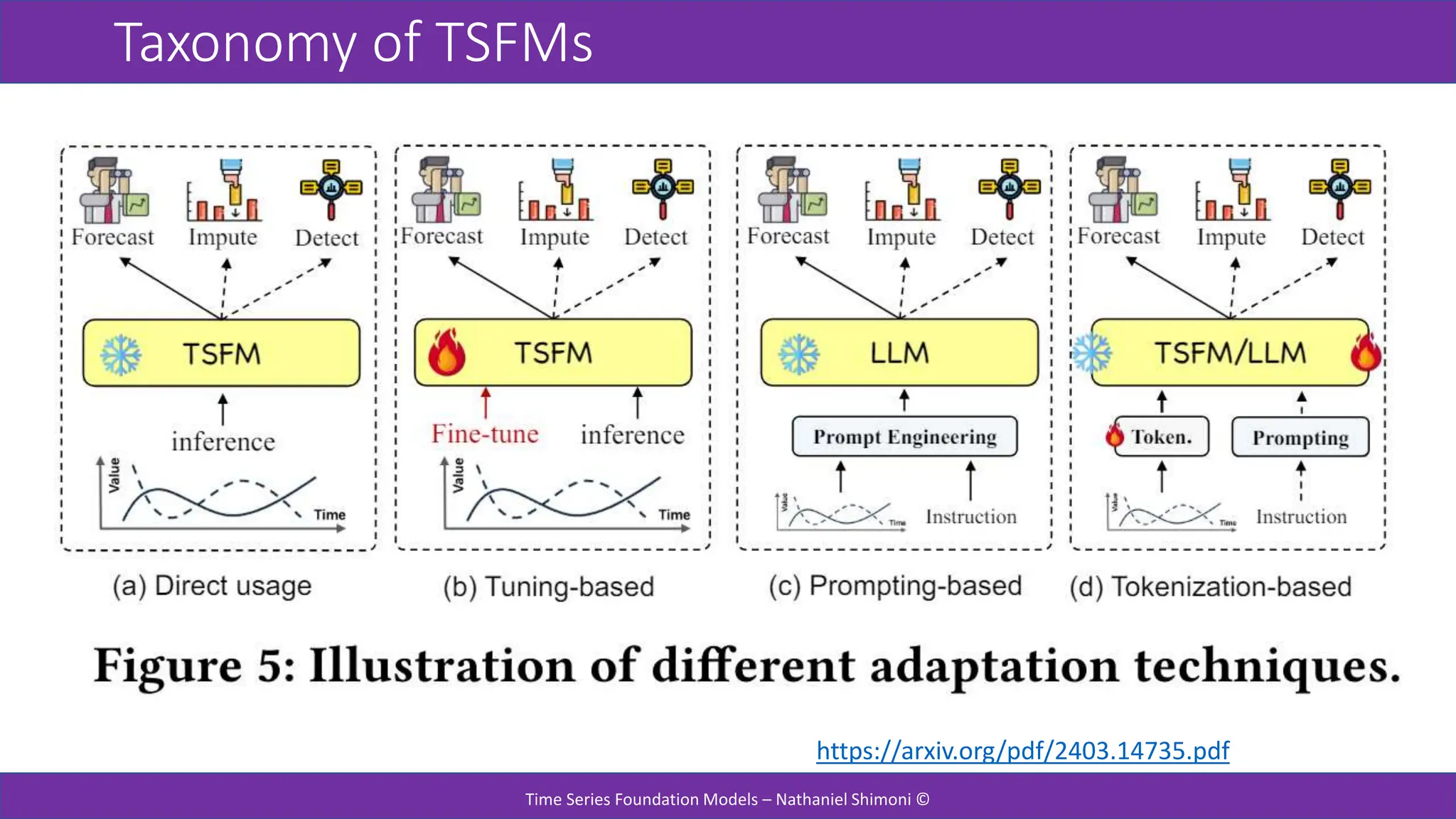

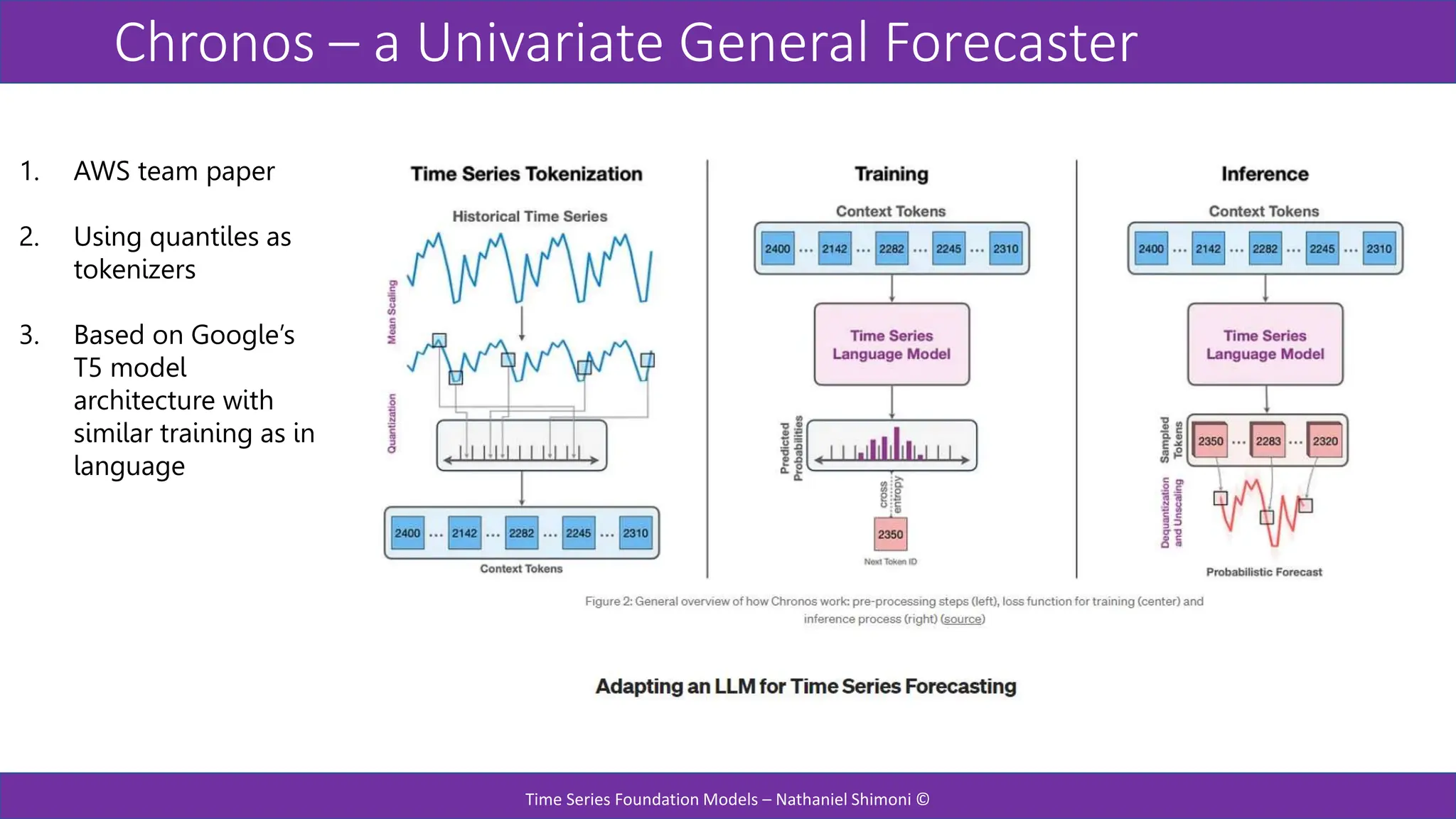

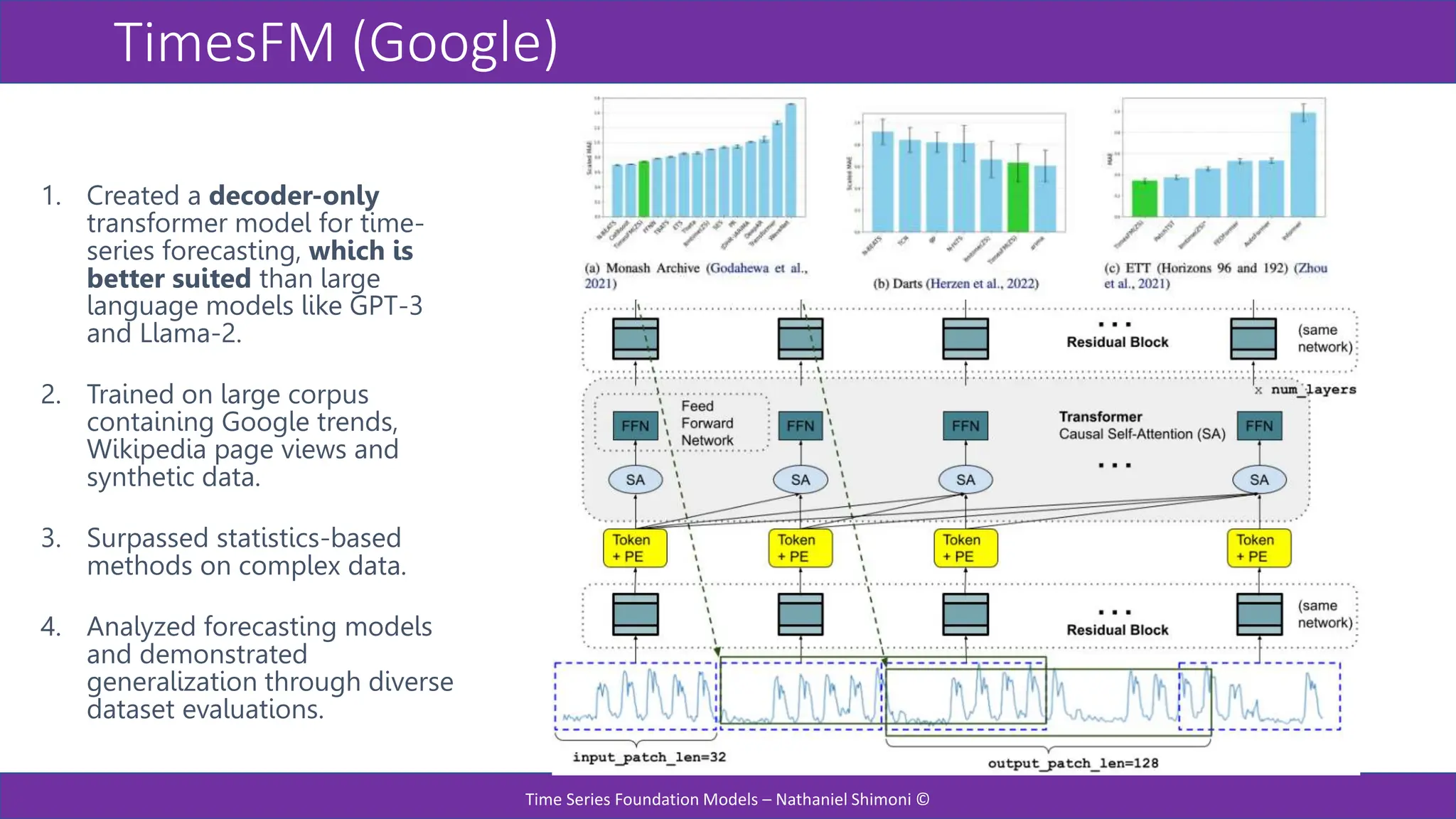

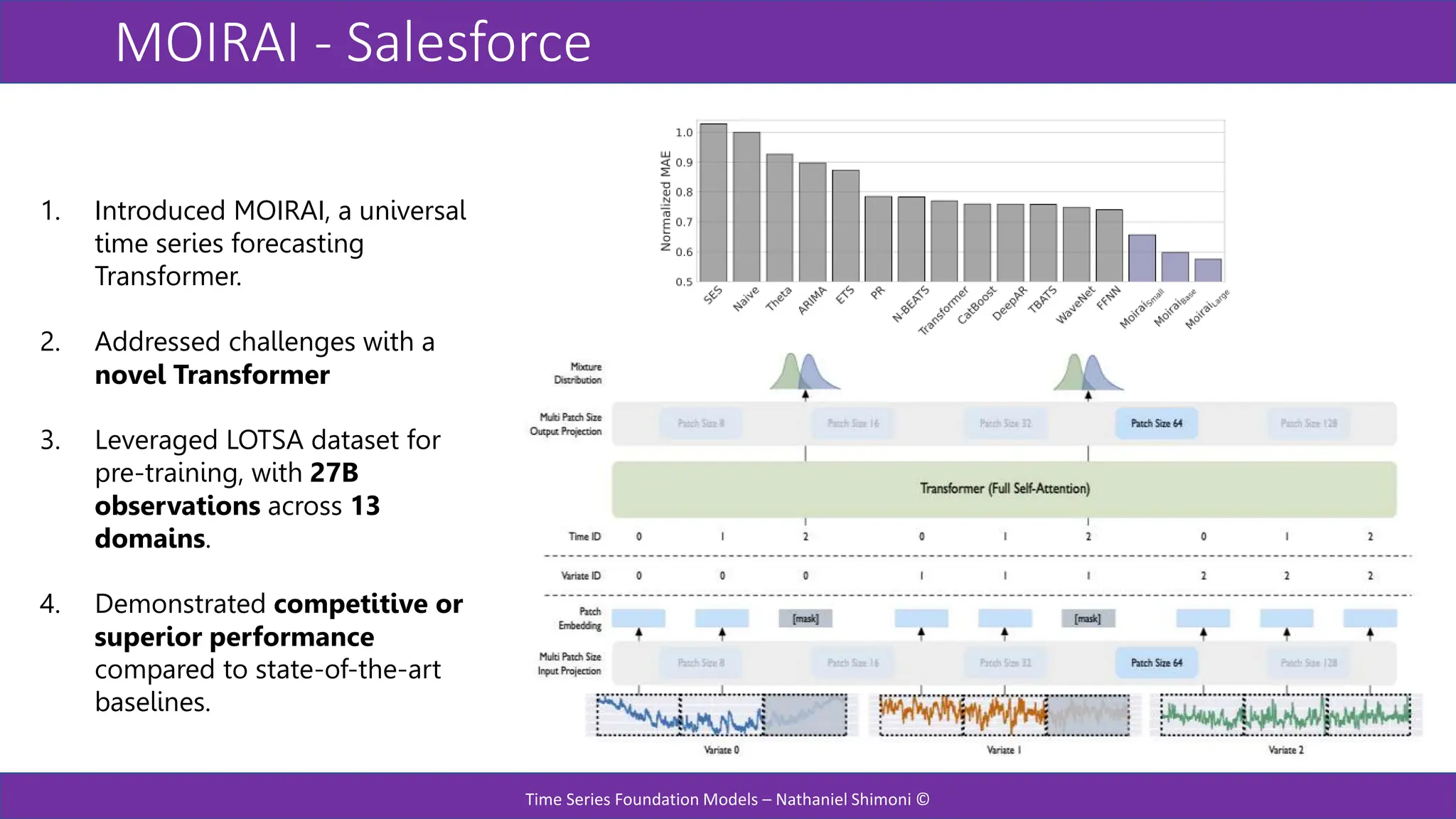

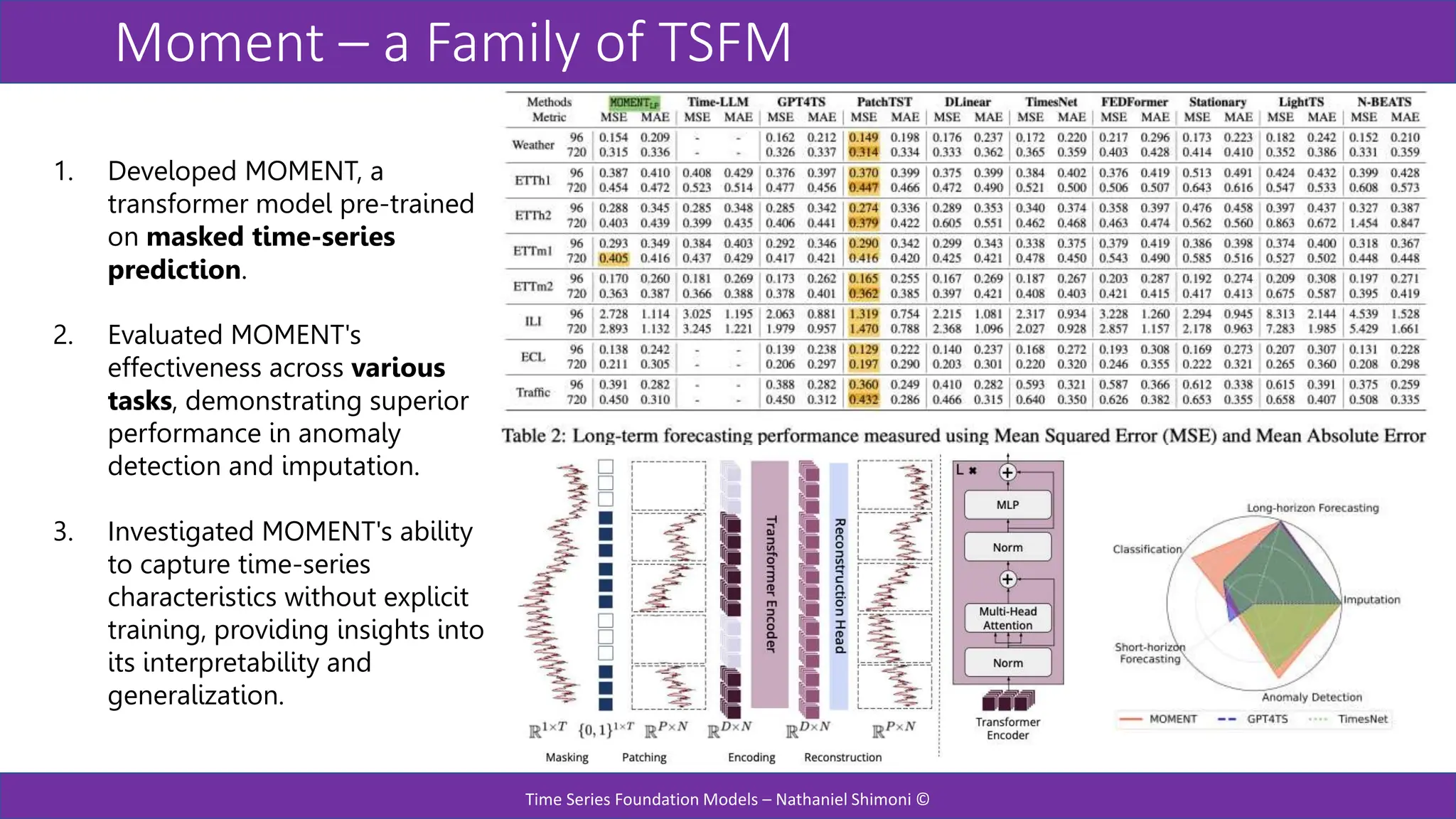

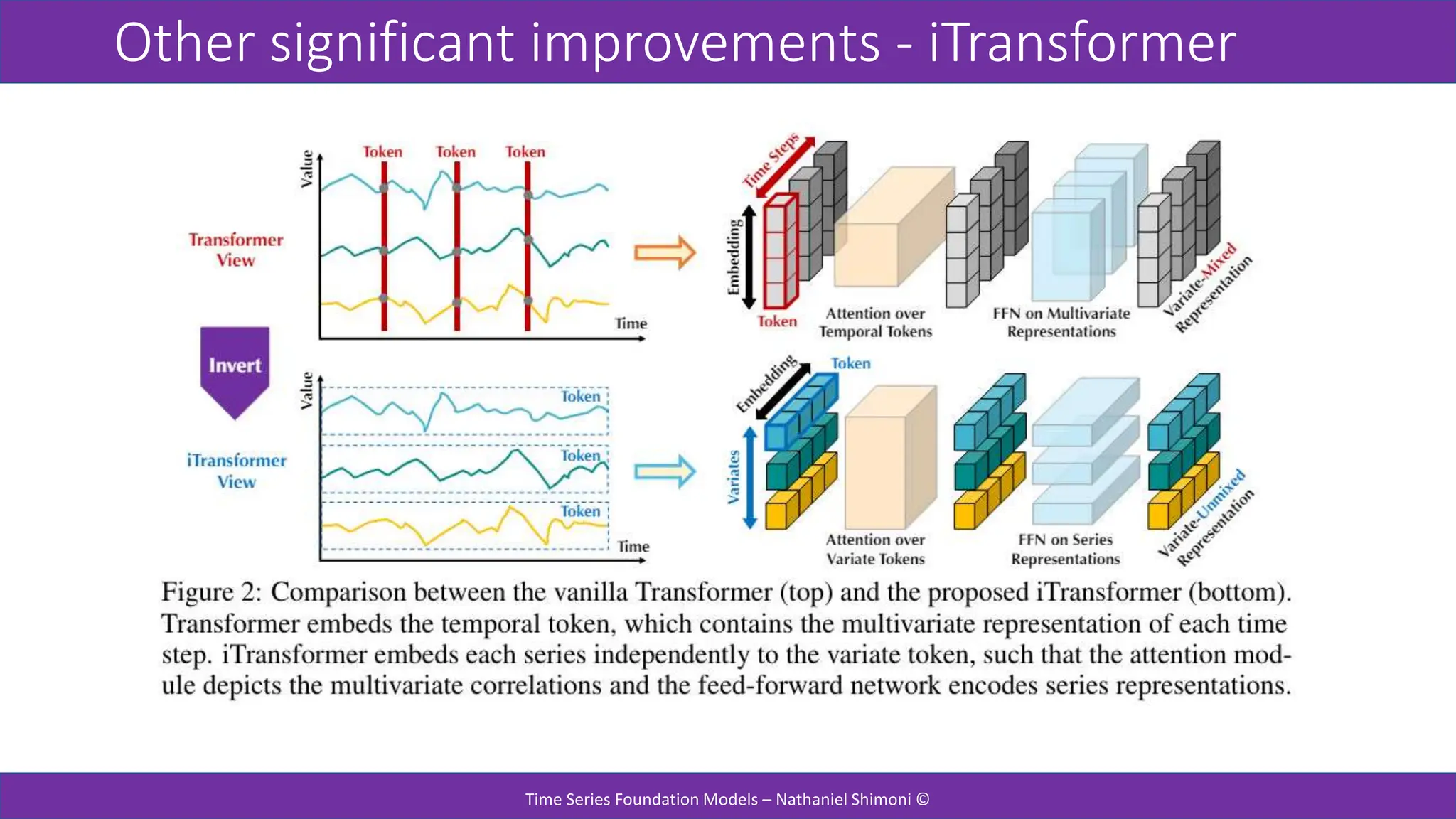

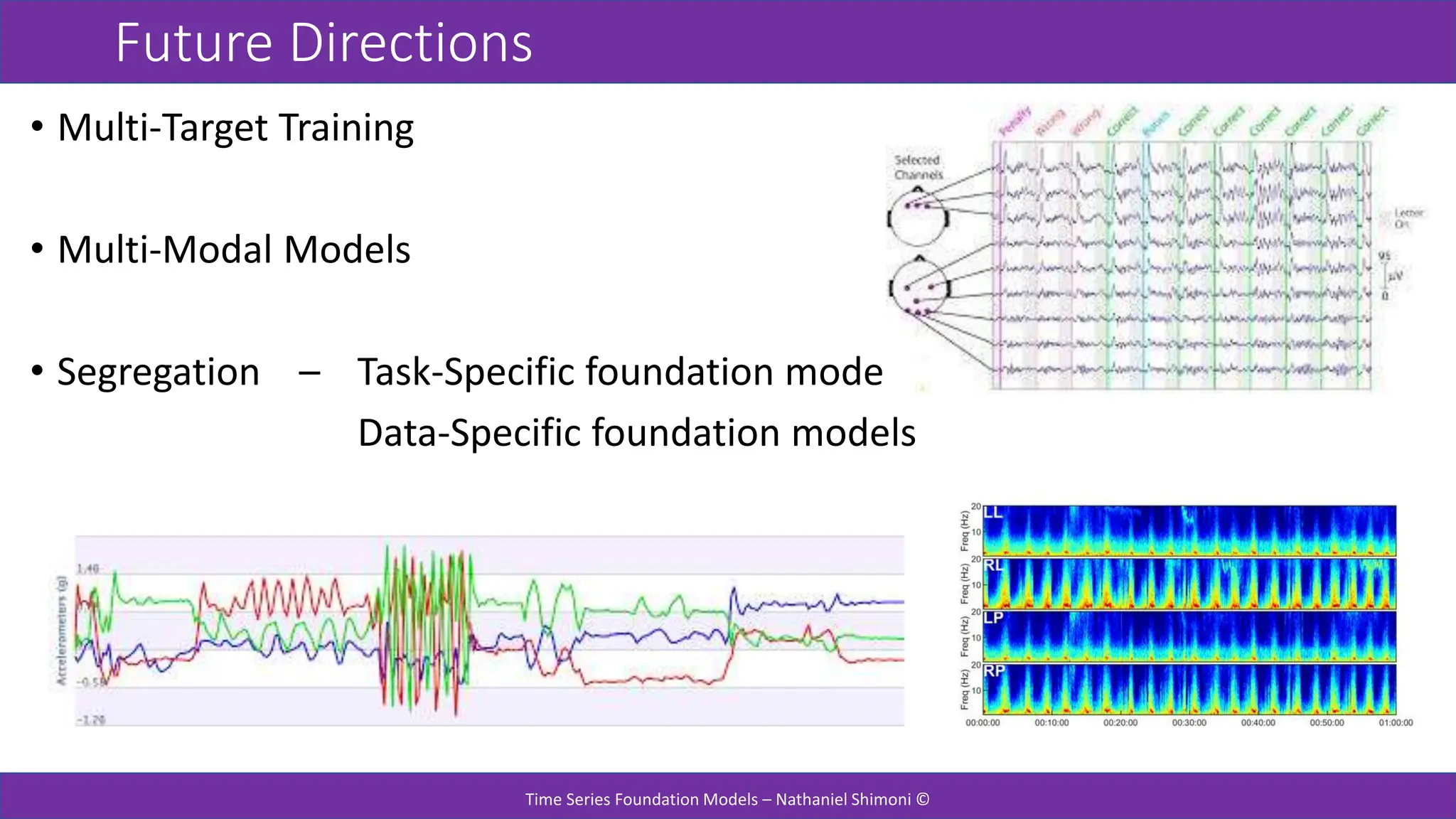

The document discusses time series foundation models (TSFMs), highlighting characteristics such as granularity, domain dependencies, and forecasting capabilities. It reviews notable TSFMs, including Chronos, TimesFM, Moirai, and MOMENT, noting their competitive performance against traditional methods and their innovative architectures. It also addresses future directions, including improvements in multi-target and multi-modal training.