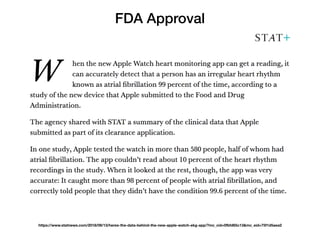

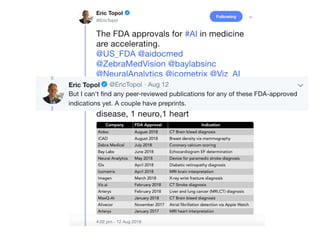

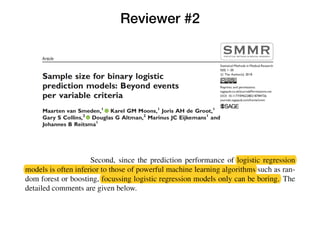

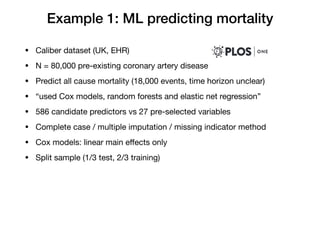

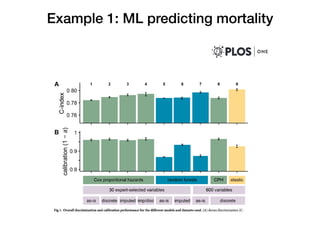

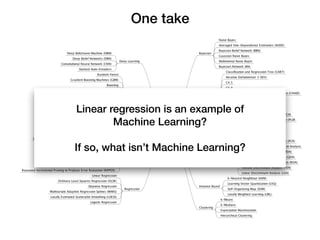

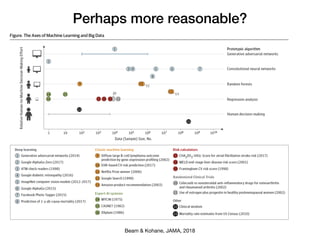

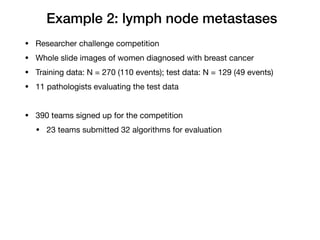

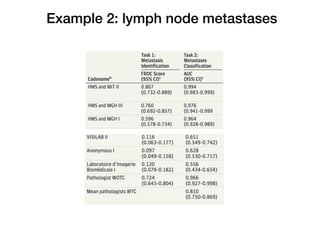

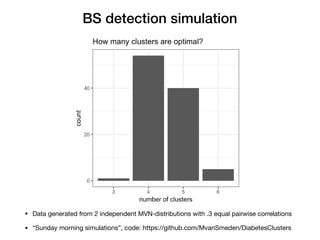

Maarten van Smeden discusses the role of machine learning (ML) and artificial intelligence (AI) in medicine, providing three illustrative examples: predicting mortality in patients with coronary artery disease, analyzing lymph node metastases in breast cancer, and clustering types of diabetes. He highlights the frequent confusion around what constitutes ML/AI, the misapplication of statistical principles in medical contexts, and the importance of involving statisticians in the evaluation of these technologies. The document concludes with a call for realistic simulations and collaboration between statisticians and computer scientists to improve the medical application of novel algorithms.