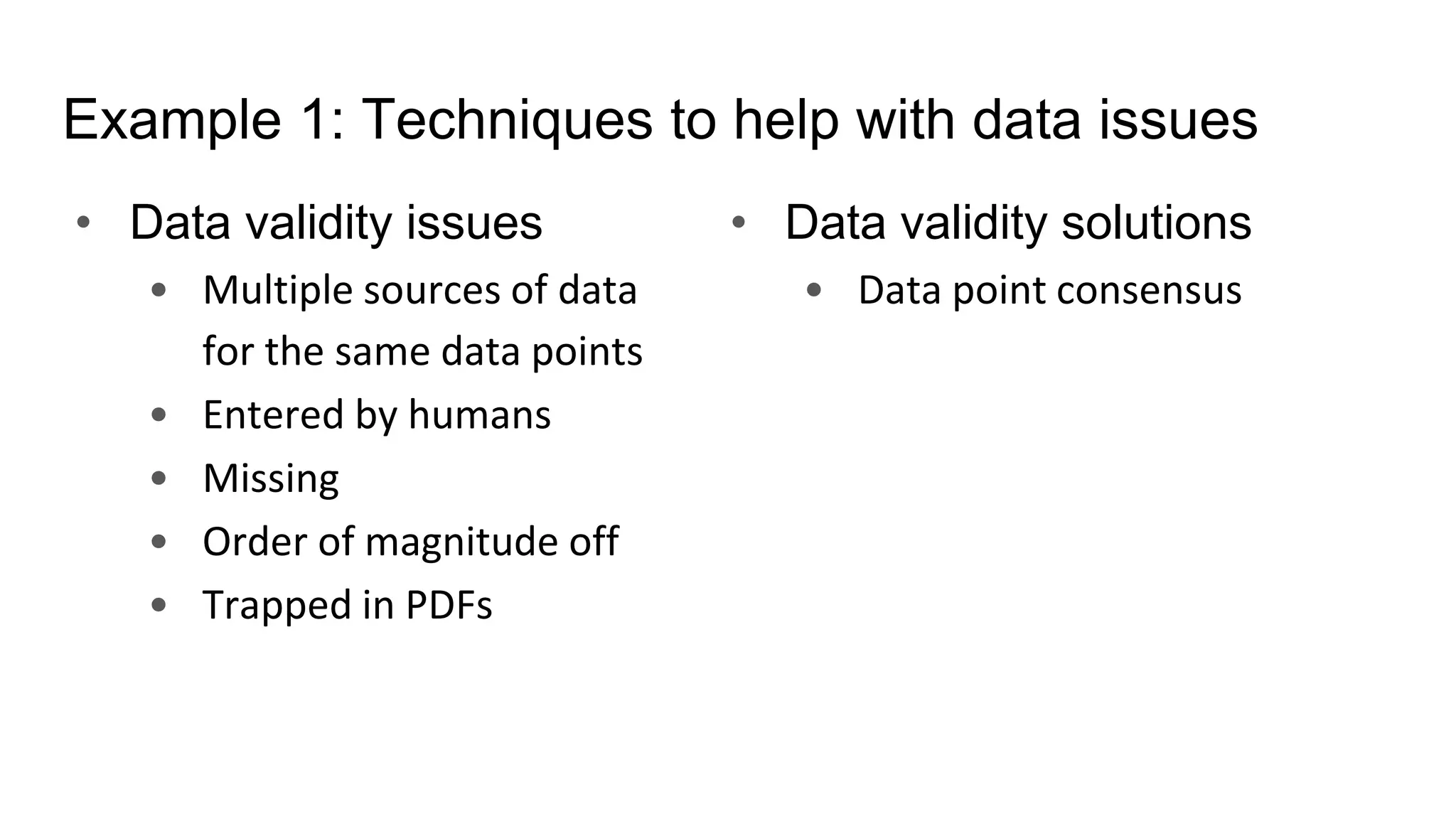

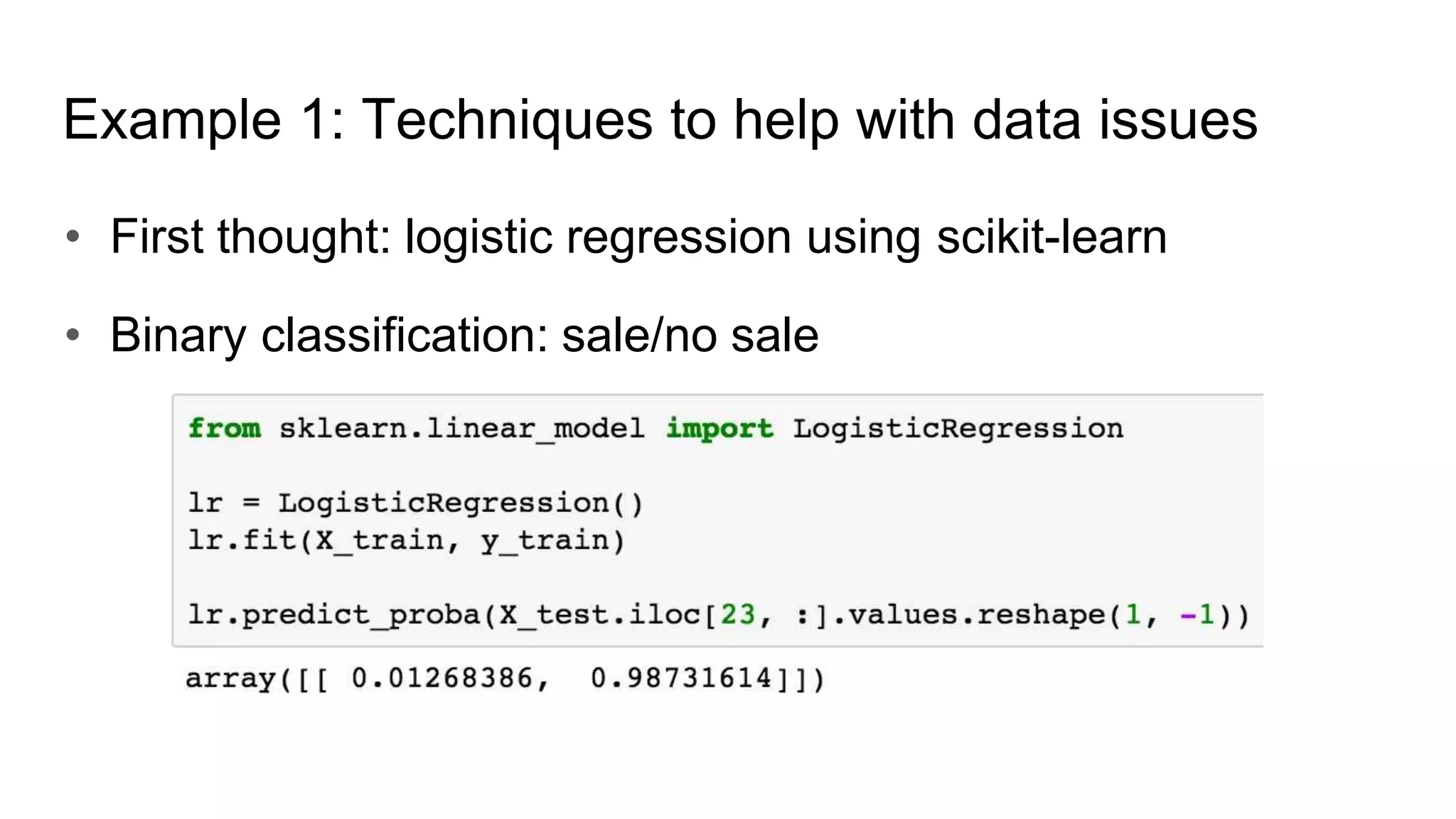

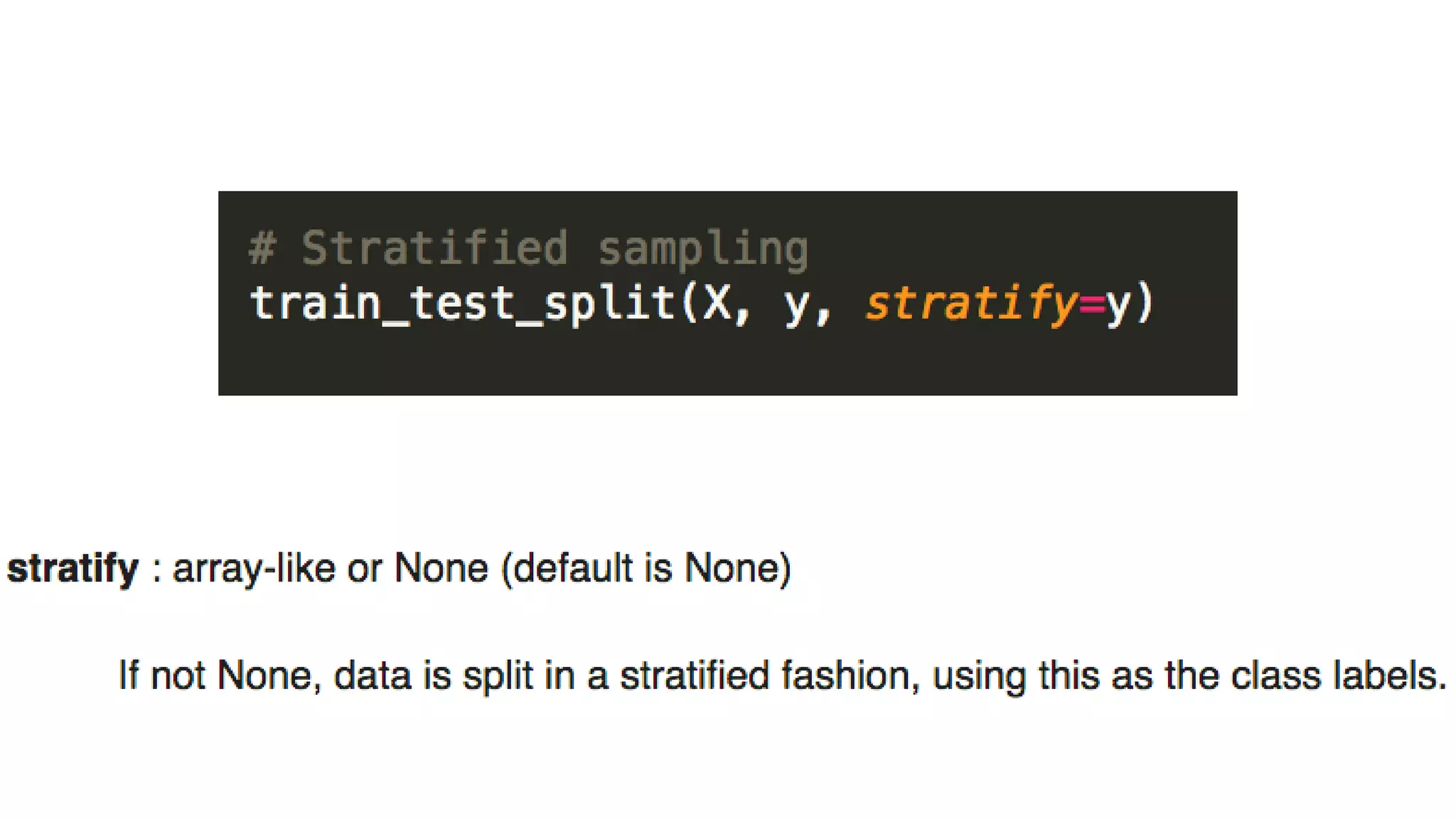

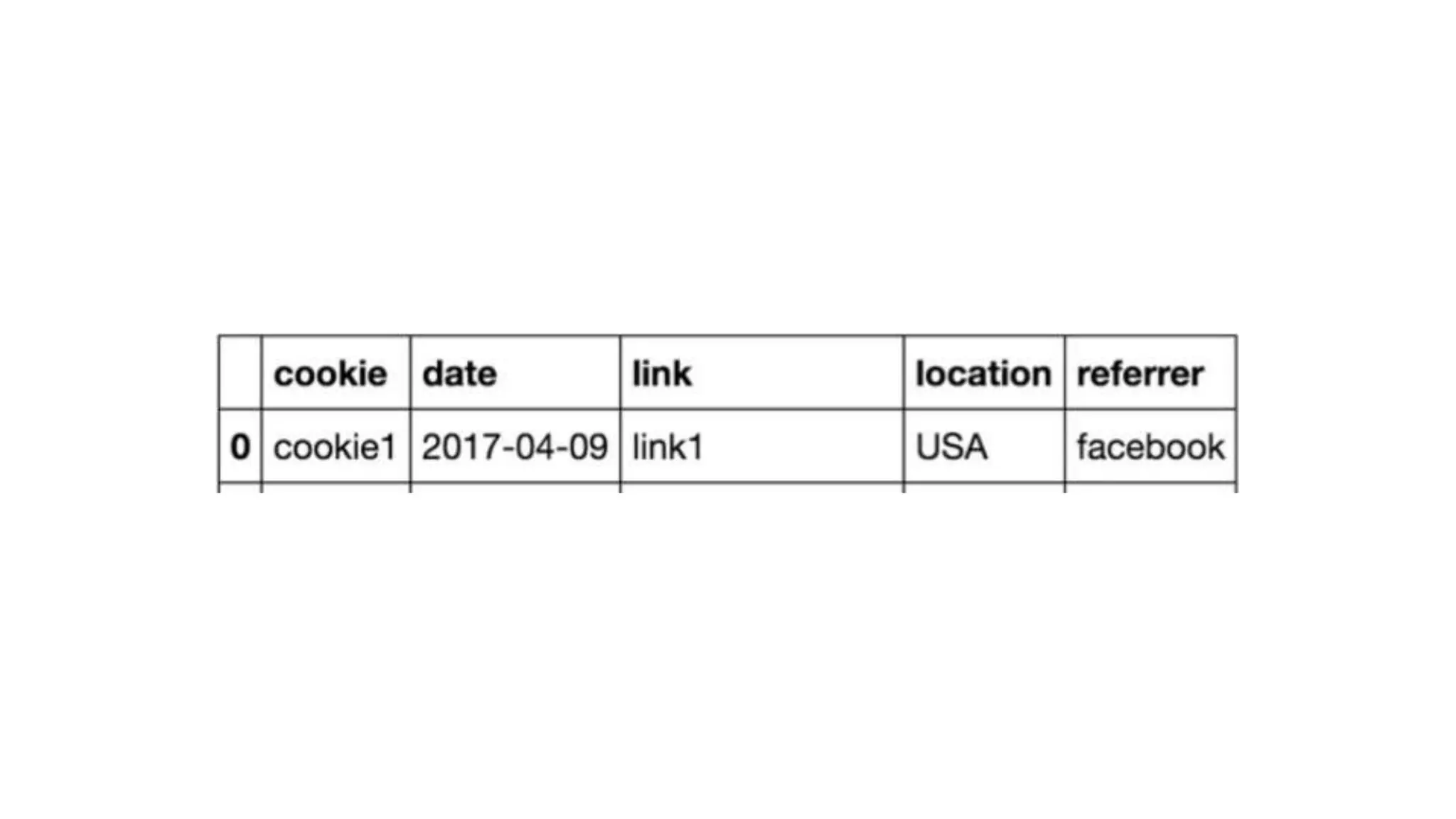

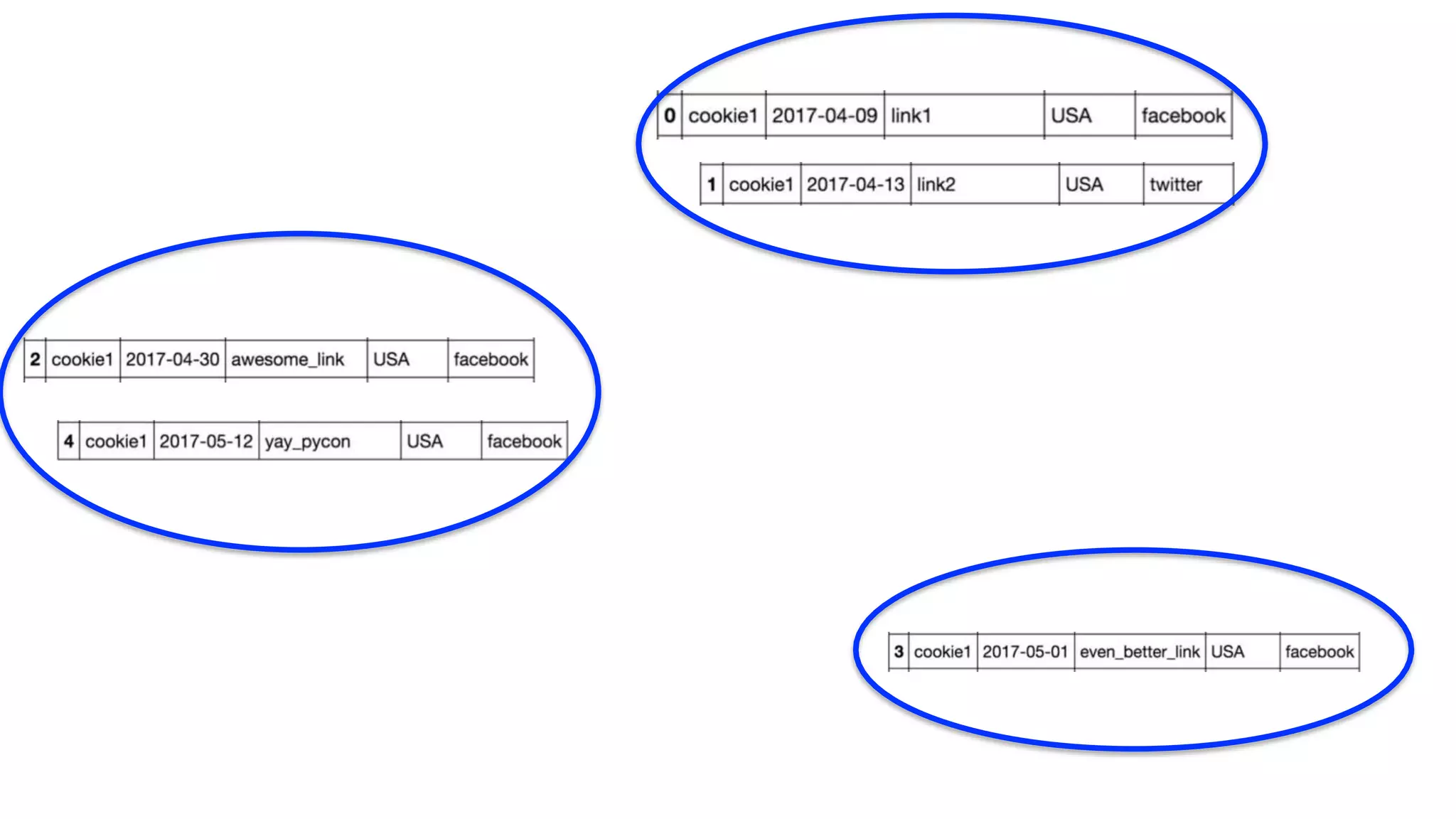

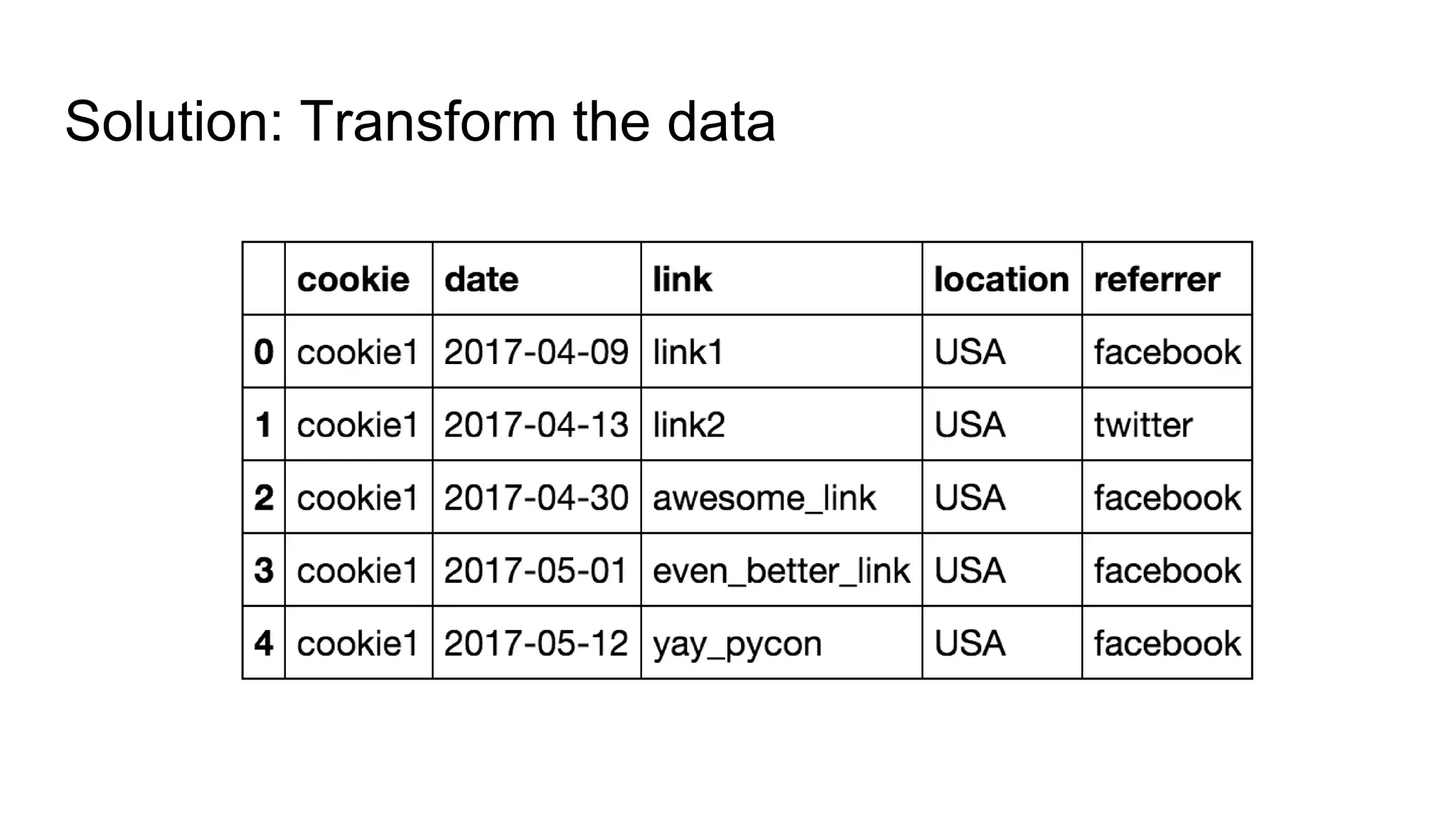

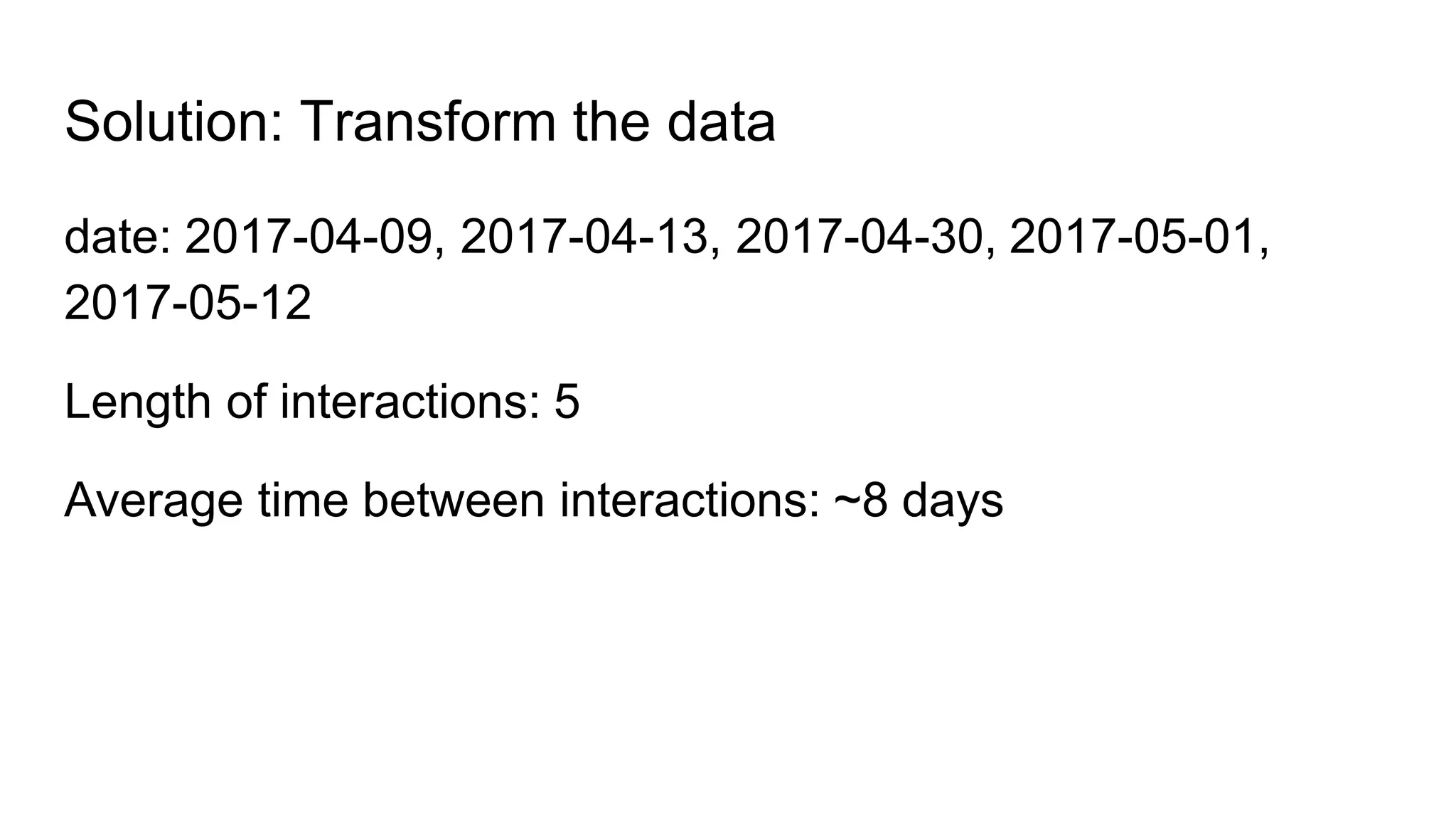

This talk discusses techniques for dealing with messy and imperfect data, illustrated with three examples: (1) commercial real estate data with validity issues, addressed through consensus, imputation, outlier removal and OCR; (2) click data with cookie issues, which required preprocessing followed by transformations such as sampling and clustering; (3) digital media data with access issues, which meant working with insufficient data through decomposition and by seeking out more data. The overall message: focus on the problem, identify the limitations of the data, and apply creative solutions such as reworking the data.
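Three of the real estate steps (consensus, outlier removal and imputation) fit in a short standard-library Python sketch. The talk does not say which rules it used, so everything below is an illustrative assumption: the property IDs and square-footage figures, taking the median across sources as the consensus, the median-absolute-deviation (MAD) outlier rule with threshold `k=3.0`, and median imputation. Outliers are removed before imputing so they cannot skew the fill value.

```python
from statistics import median

# Hypothetical data: square footage per property as reported by three sources.
# None means that source had no value for the property.
REPORTS = {
    "a": [12000, 12000, 12500],
    "b": [8000, None, 8100],
    "c": [None, None, None],
    "d": [9400, 9500, 9600],
    "e": [950000, 960000, None],  # implausible values, e.g. an entry error
}


def consensus(reports):
    """Collapse each property's reports to their median (None if all missing)."""
    result = {}
    for prop, values in reports.items():
        present = [v for v in values if v is not None]
        result[prop] = median(present) if present else None
    return result


def remove_outliers(values, k=3.0):
    """Drop known values more than k MADs from the median of known values.

    Missing (None) entries are kept so they can be imputed afterwards.
    Note: if the MAD is 0, only values equal to the median survive.
    """
    known = [v for v in values.values() if v is not None]
    center = median(known)
    mad = median([abs(v - center) for v in known])
    return {
        prop: v
        for prop, v in values.items()
        if v is None or abs(v - center) <= k * mad
    }


def impute(values):
    """Fill missing entries with the median of the observed values."""
    fill = median([v for v in values.values() if v is not None])
    return {prop: (fill if v is None else v) for prop, v in values.items()}


cleaned = impute(remove_outliers(consensus(REPORTS)))
print(cleaned)  # {'a': 12000, 'b': 8050.0, 'c': 9500, 'd': 9500}
```

Property `e` is dropped as an outlier, and `c`, which no source reported, receives the median of the remaining properties.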

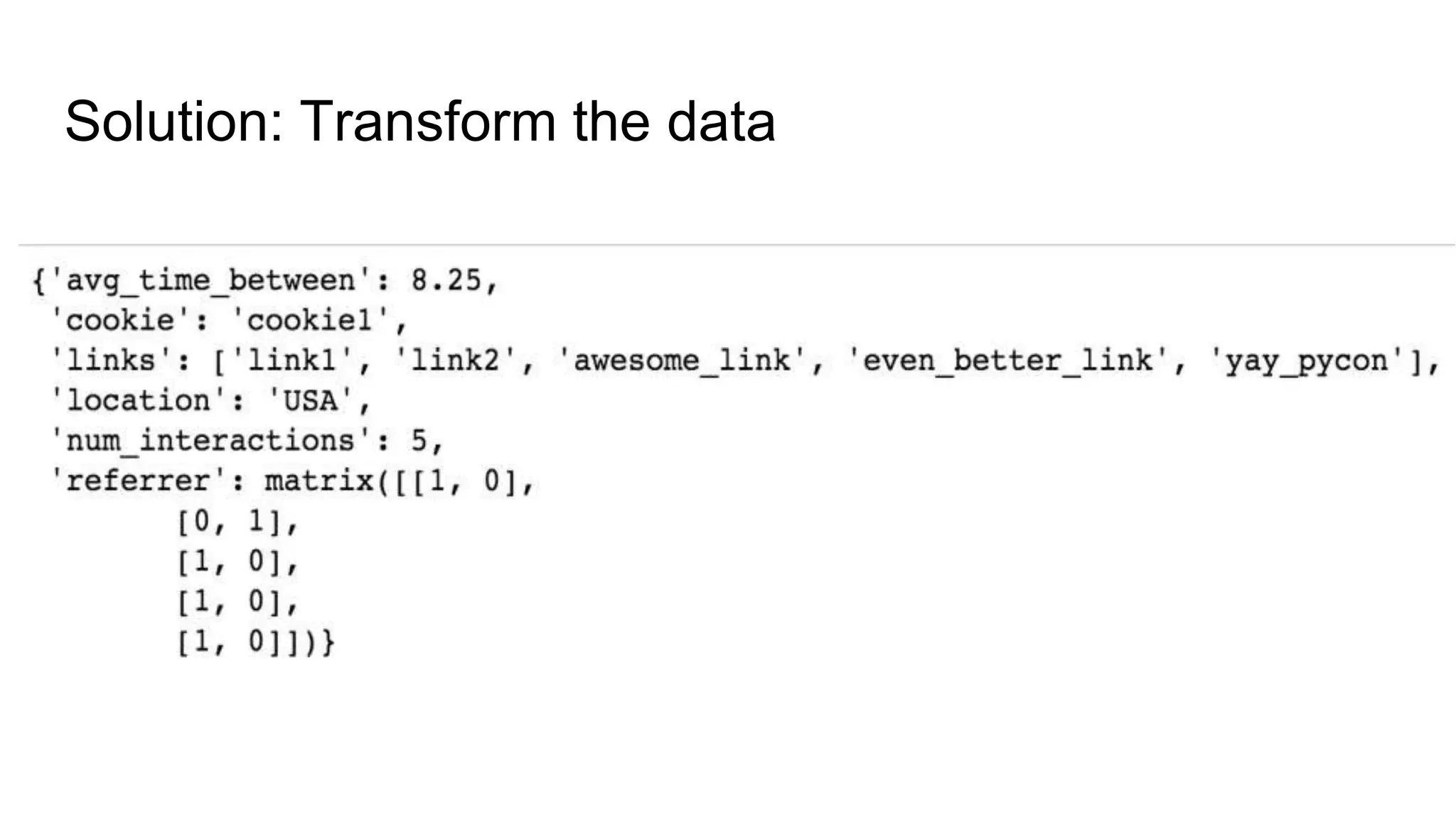

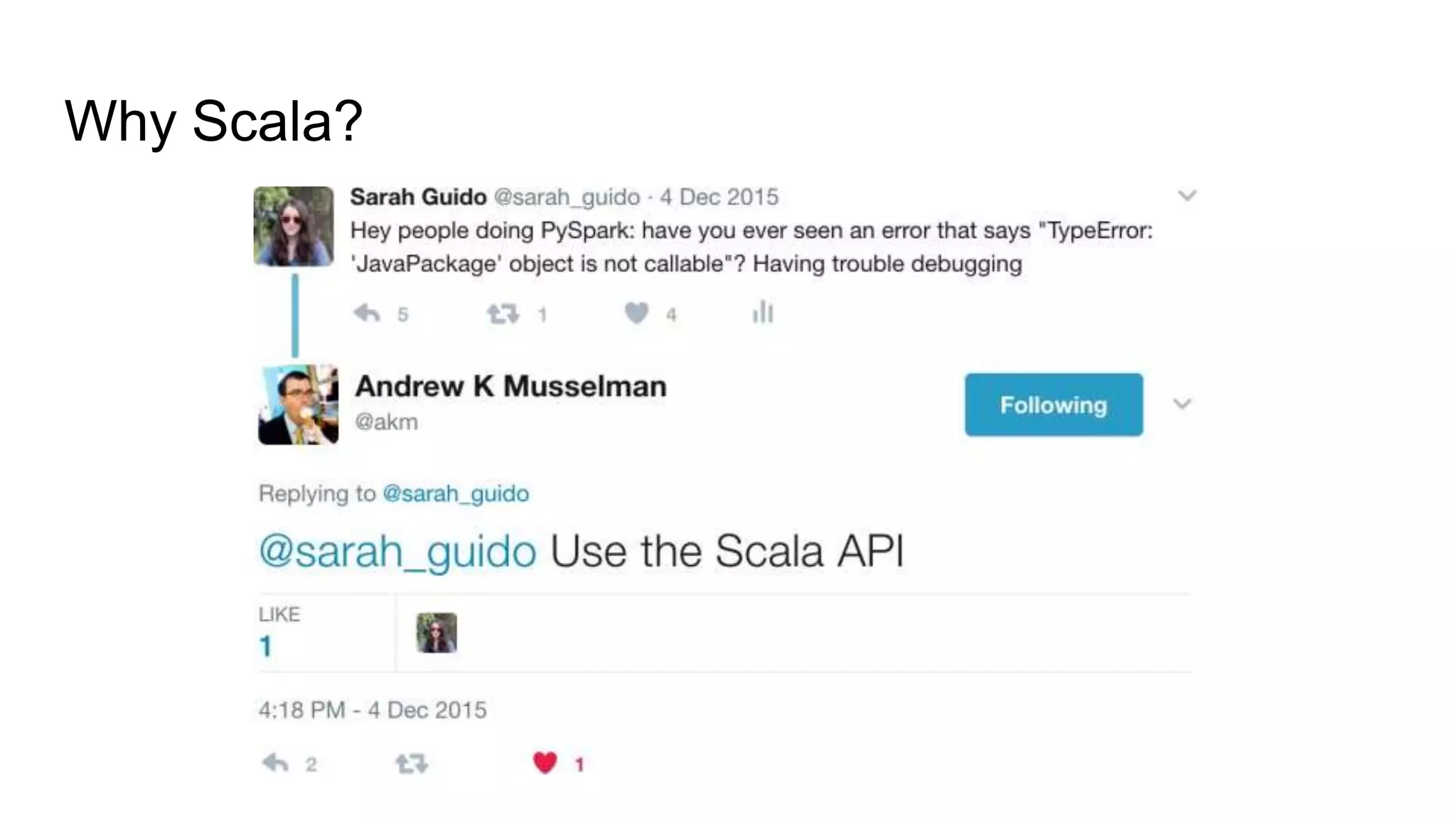

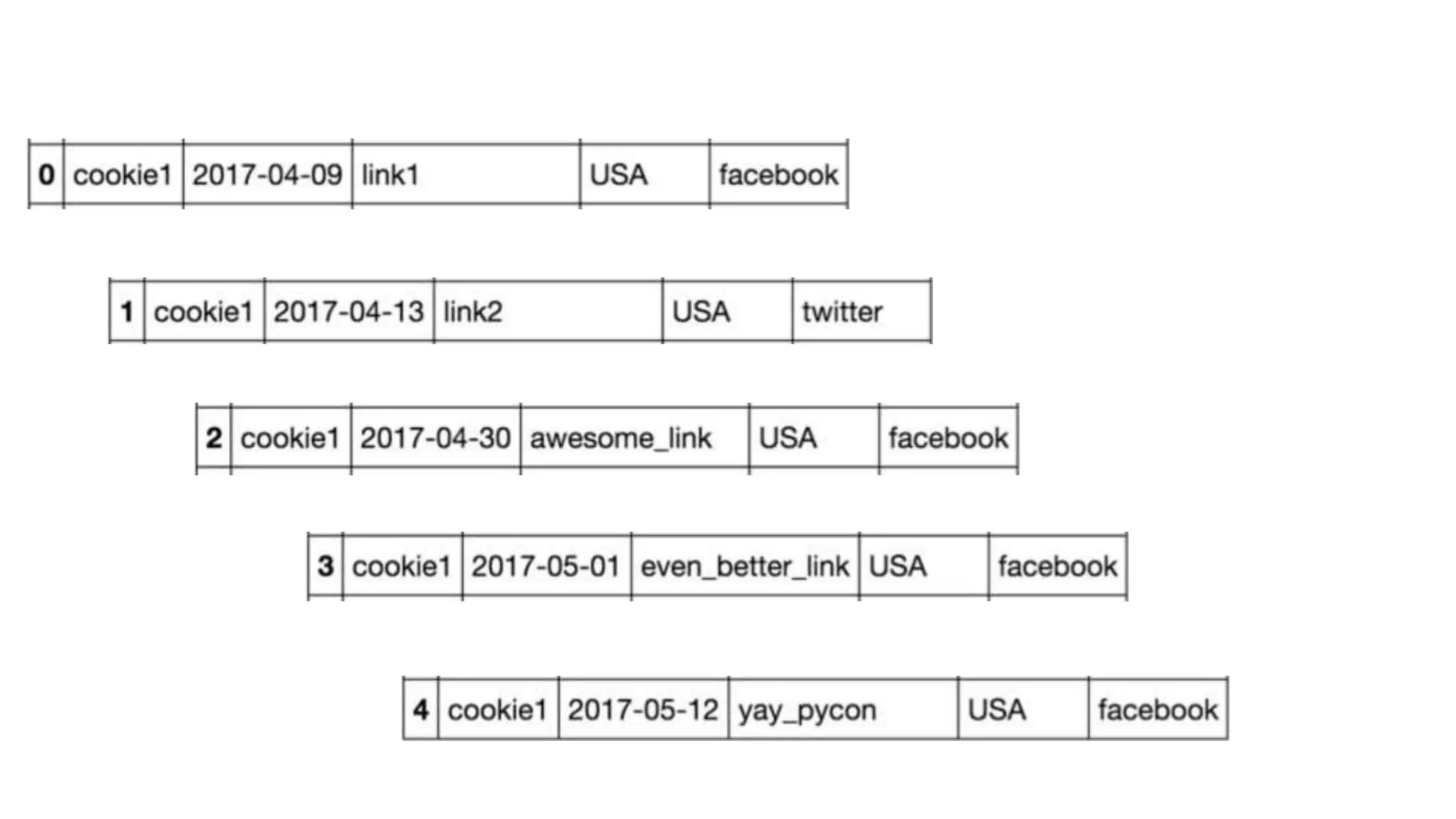

![Solution: Transform the data](https://image.slidesharecdn.com/datawranglingupload-180210191145/75/The-Wild-West-of-Data-Wrangling-PyTN-38-2048.jpg)

Solution: transform the data. For the referrer field (values: facebook, twitter), one-hot encode and transform to a matrix:

- Facebook: [1, 0]
- Twitter: [0, 1]
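The slide's encoding (facebook → [1, 0], twitter → [0, 1]) can be reproduced with a small standard-library sketch. The function name `one_hot`, the sample click log, and the choice to pass the column order explicitly are illustrative assumptions, not code from the talk.

```python
def one_hot(values, categories=None):
    """Encode categorical values as rows of 0/1 indicator columns.

    Columns follow `categories` if given, otherwise first-seen order.
    A value not listed in `categories` raises KeyError.
    """
    if categories is None:
        categories = list(dict.fromkeys(values))  # dedupe, keep first-seen order
    index = {cat: i for i, cat in enumerate(categories)}
    matrix = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        matrix.append(row)
    return categories, matrix


# Hypothetical click log: one referrer per click.
referrers = ["facebook", "twitter", "twitter", "facebook"]
columns, X = one_hot(referrers, categories=["facebook", "twitter"])
print(columns)  # ['facebook', 'twitter']
print(X)        # [[1, 0], [0, 1], [0, 1], [1, 0]]
```

Fixing the column order explicitly keeps the encoding stable across batches of clicks; for larger tables, `pandas.get_dummies` performs the same transformation.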