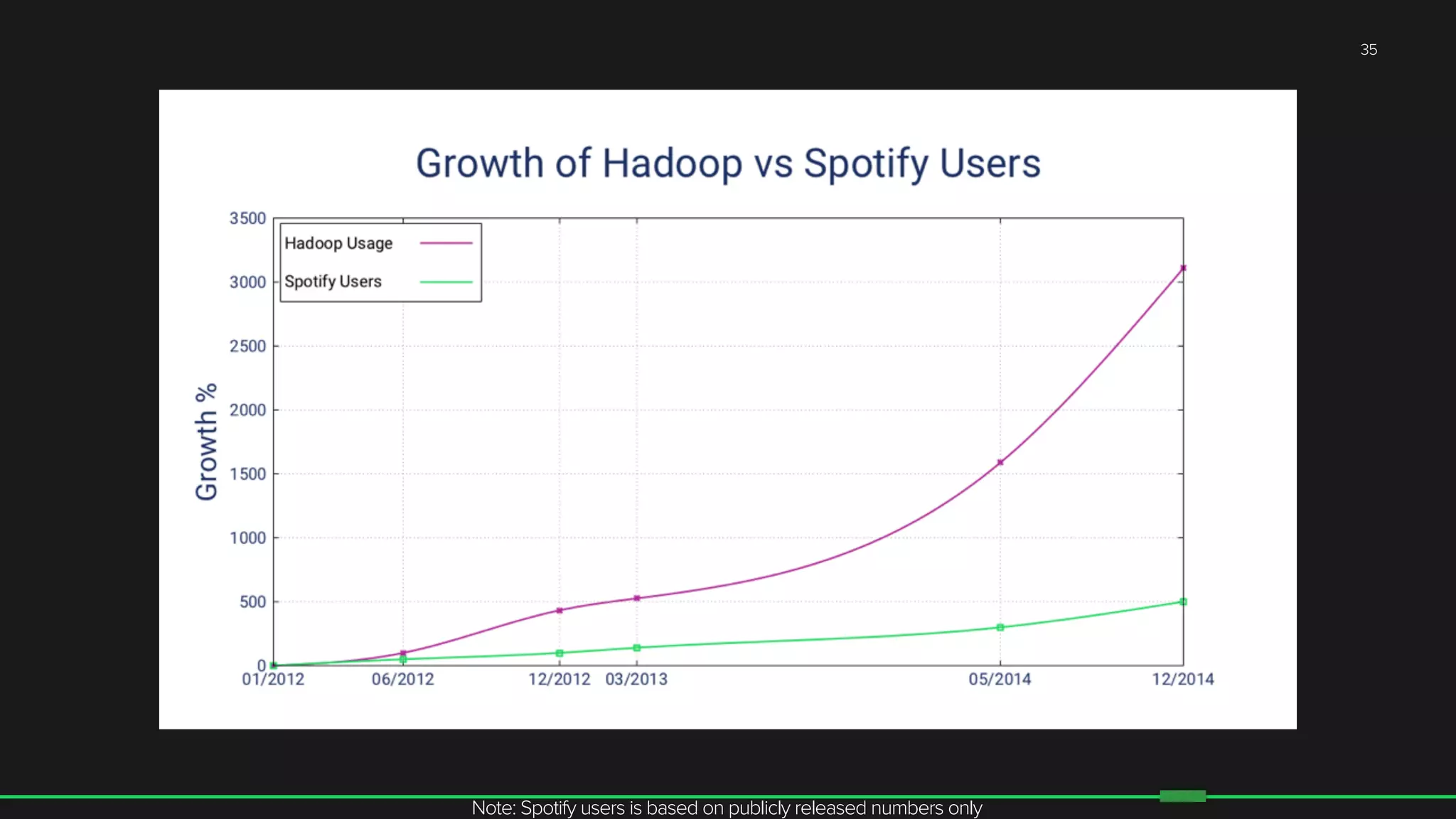

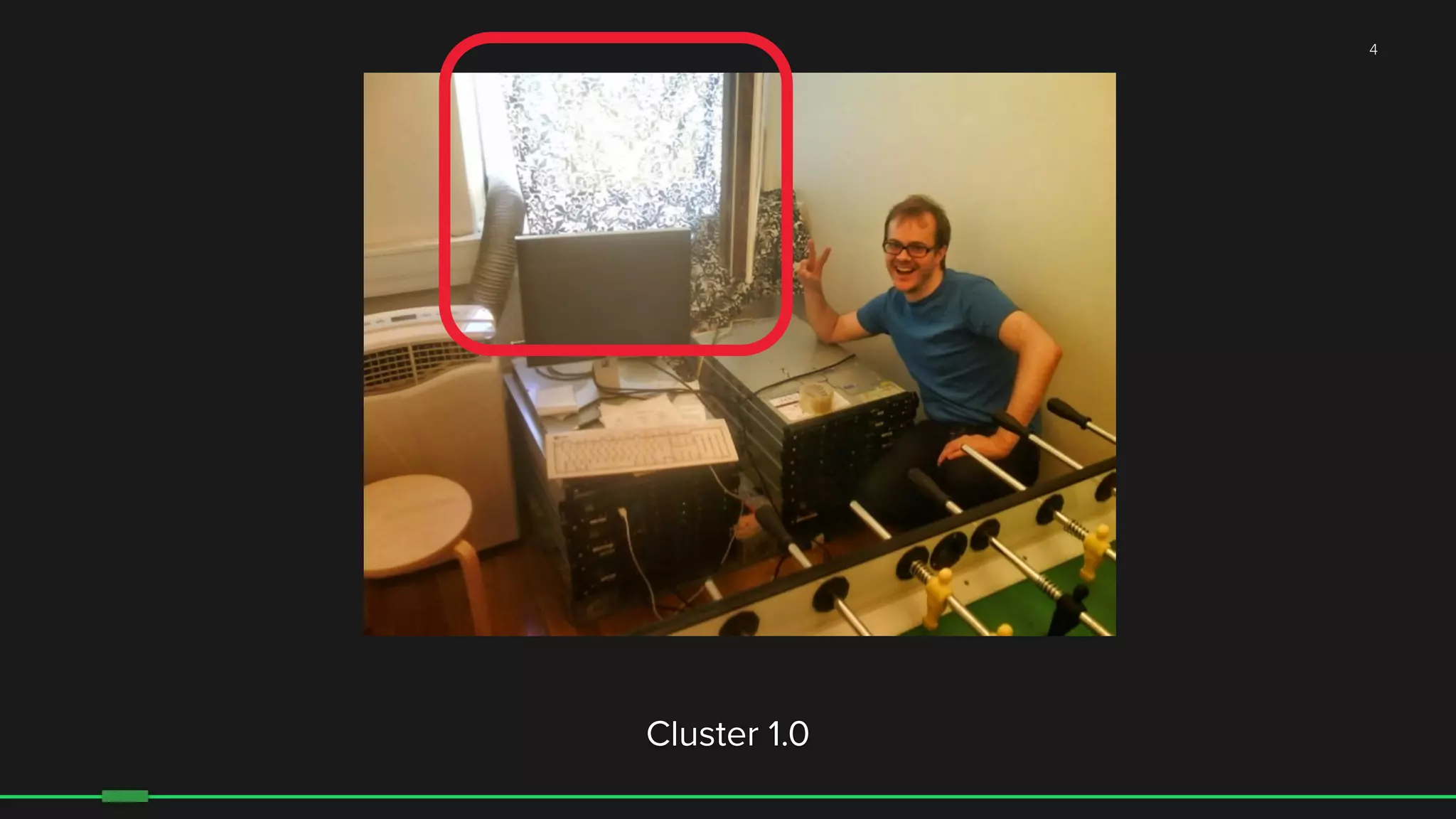

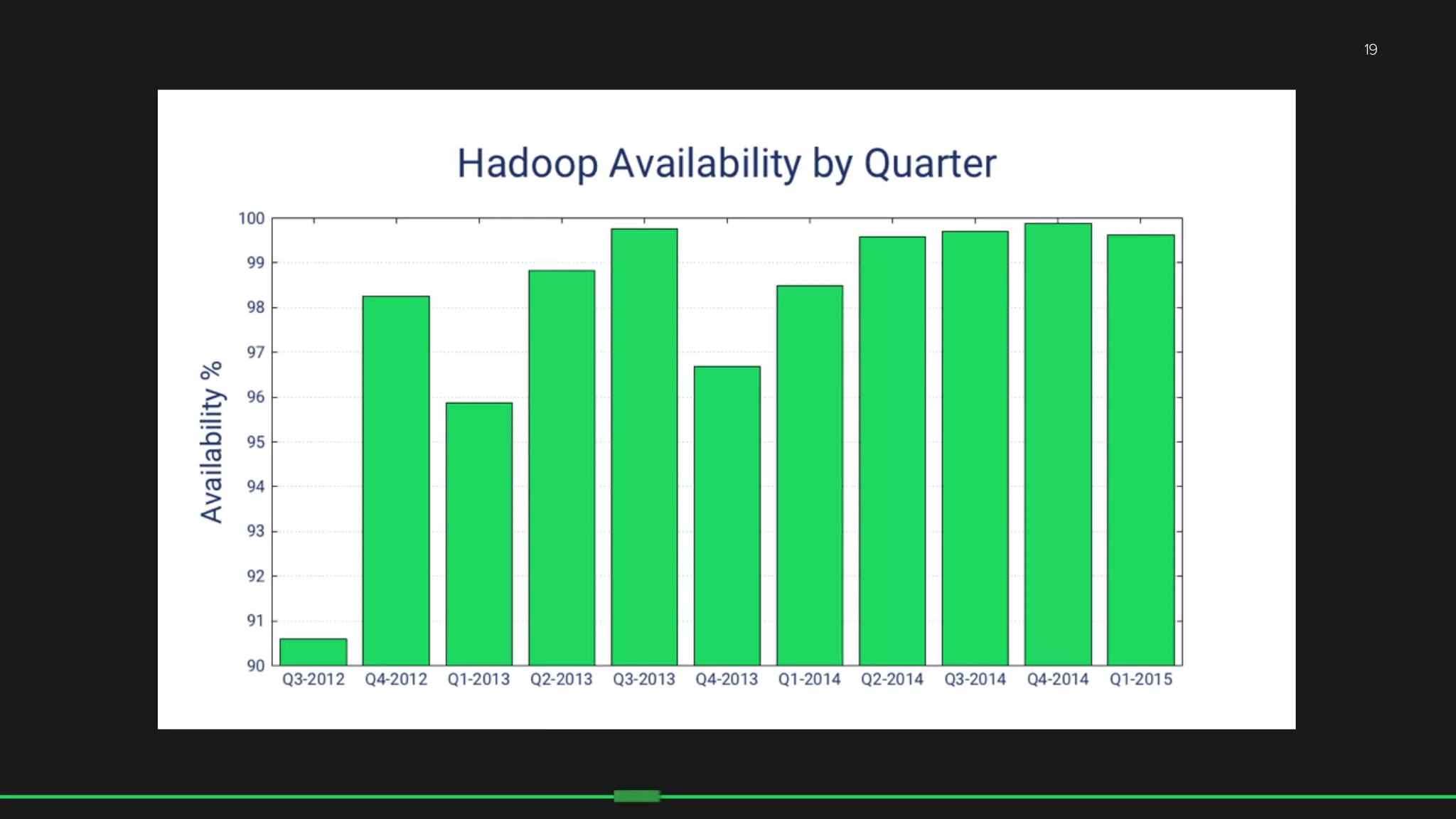

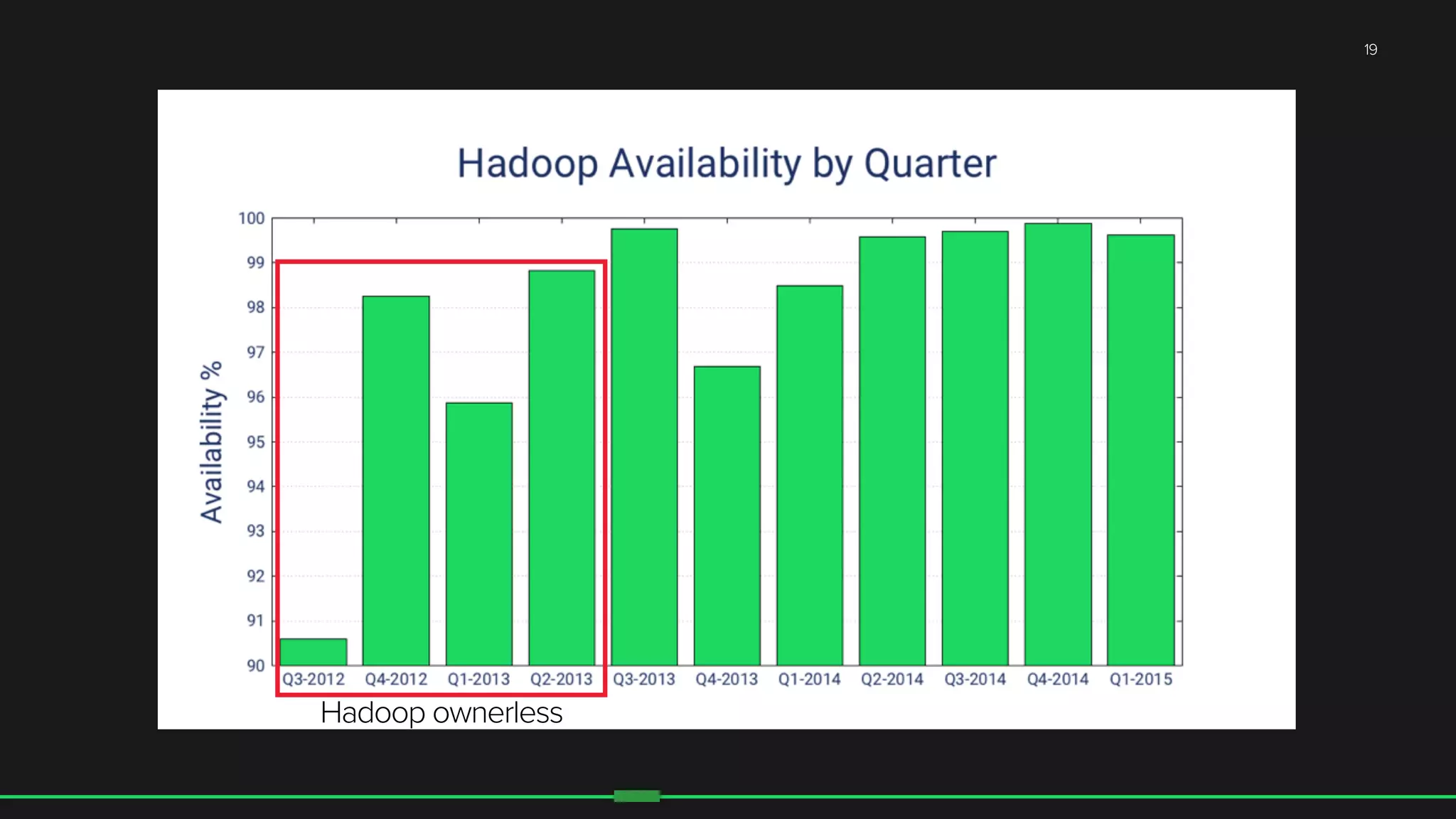

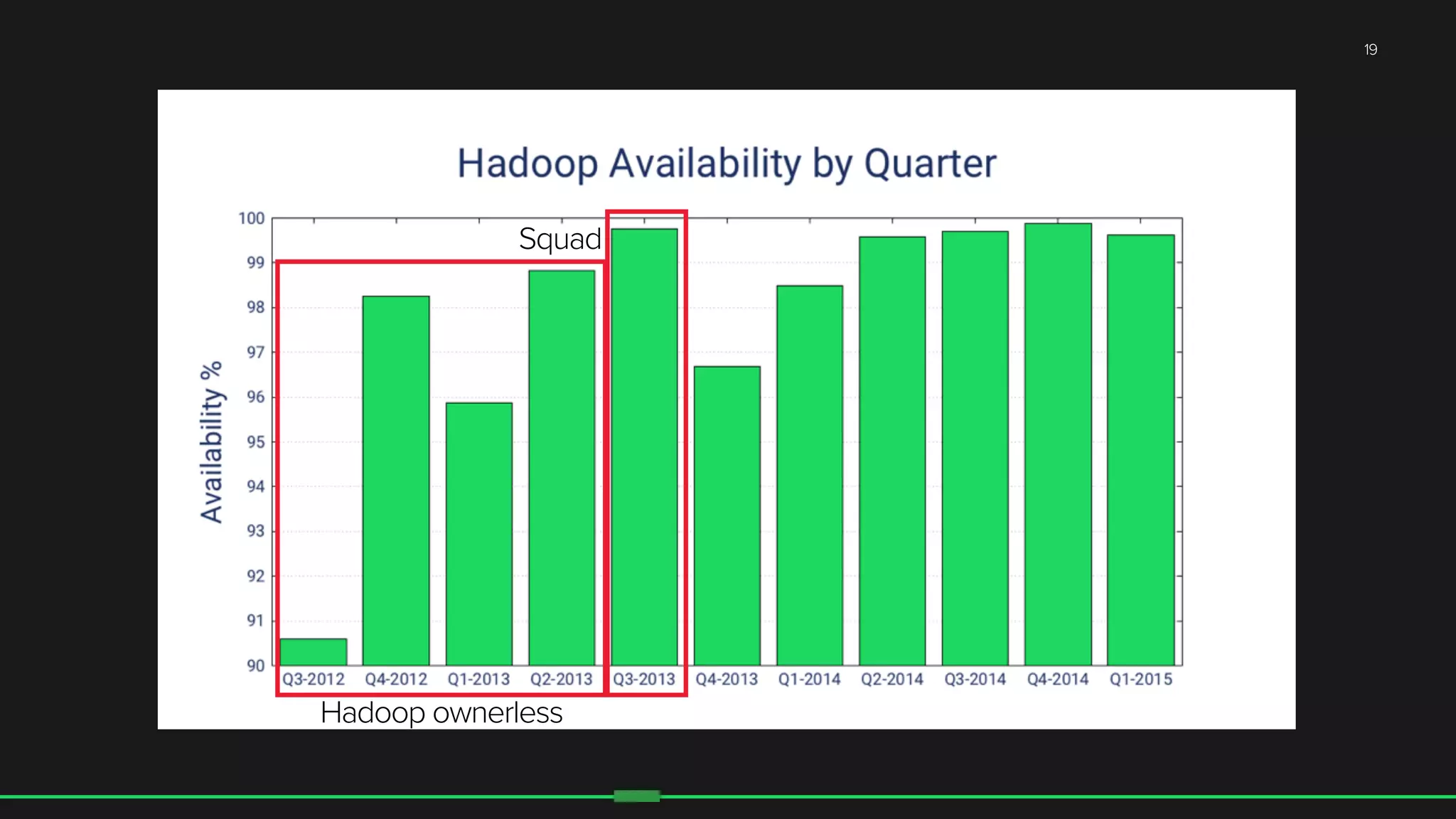

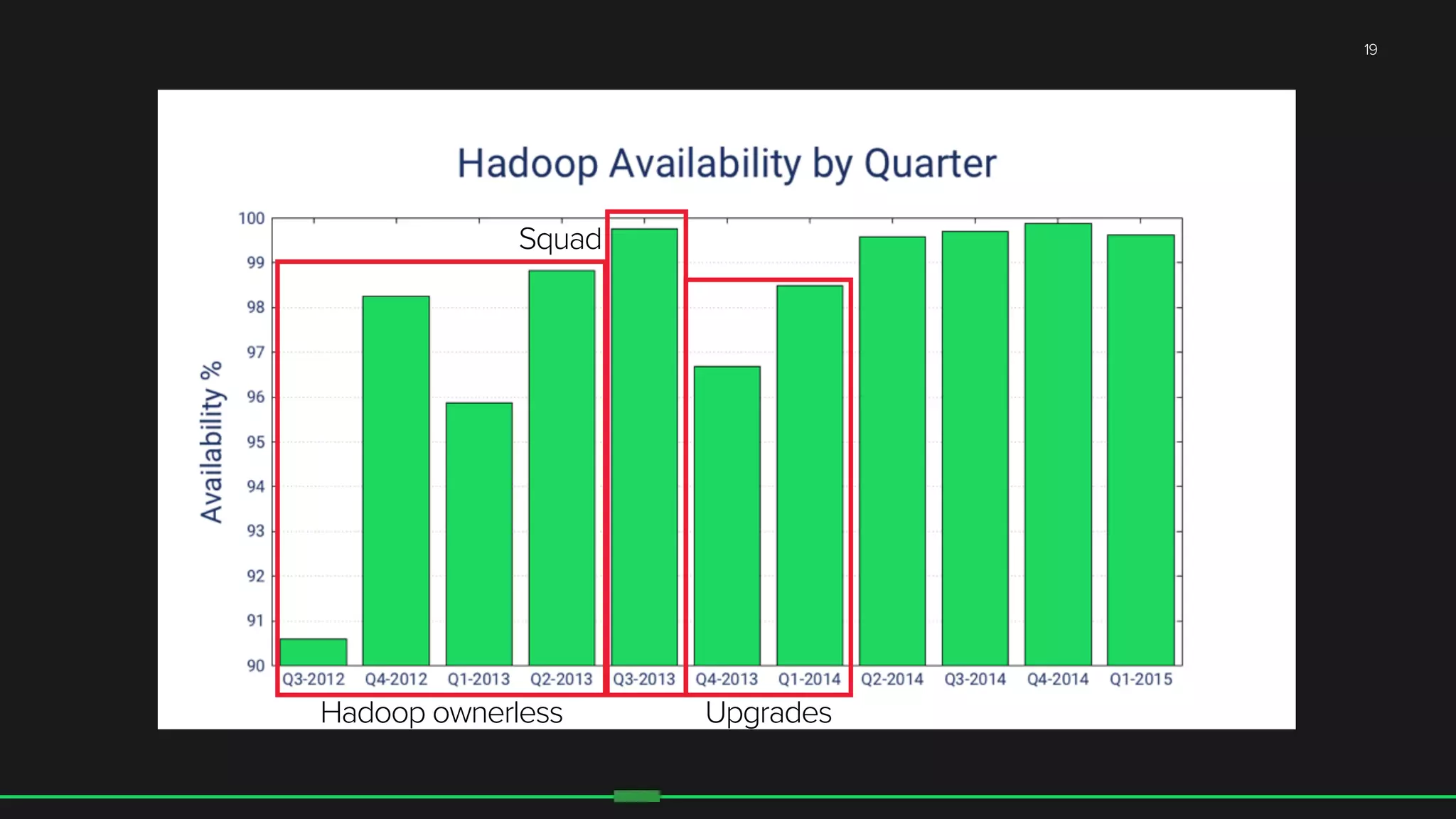

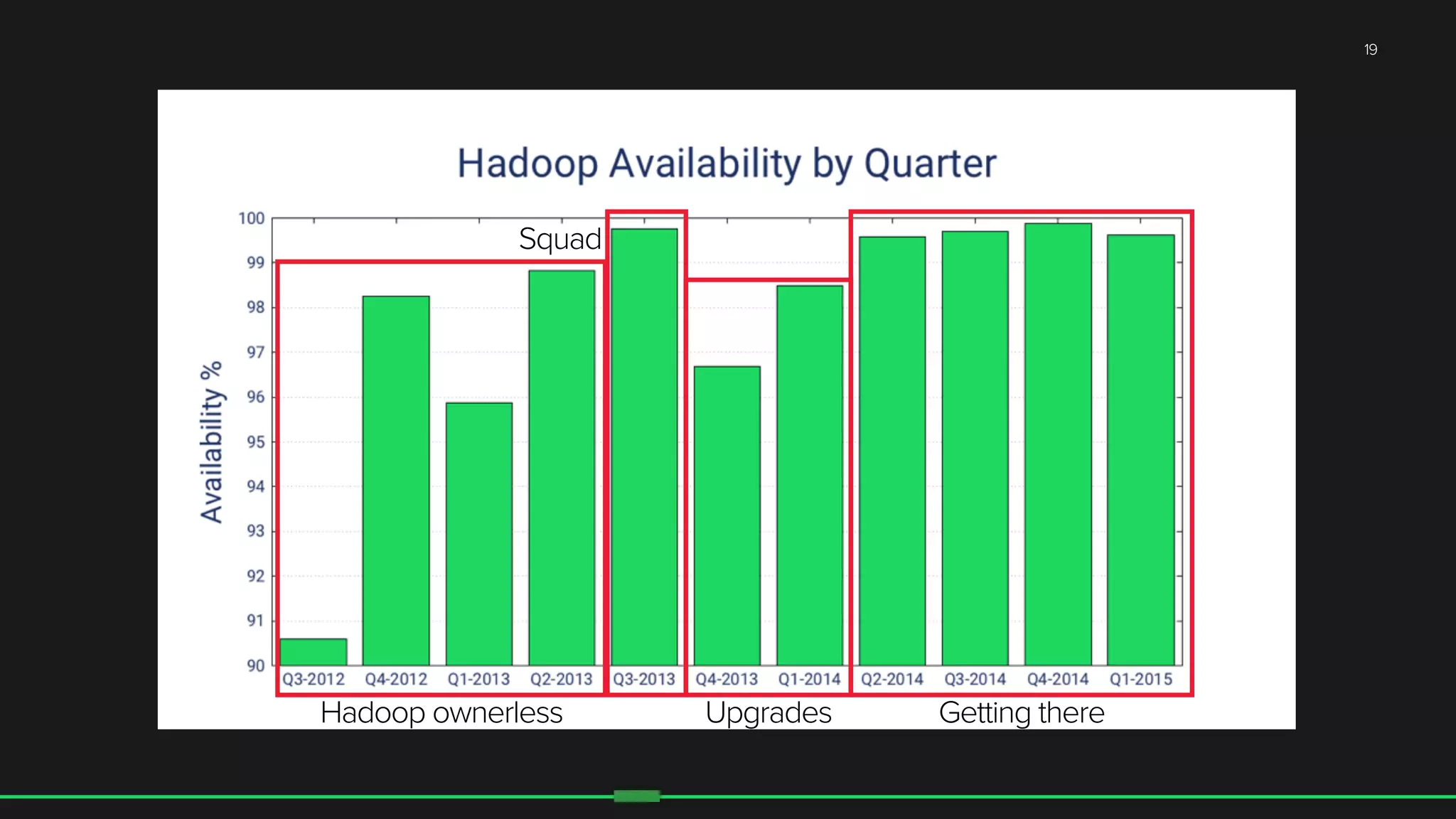

This presentation traces the evolution of Hadoop at Spotify from 2009 to 2015, covering the company's growth, the challenges it faced, and the improvements made to its data infrastructure. It describes the transition to a more reliable Hadoop setup backed by dedicated teams and automation tools, along with lessons learned from failures. It also emphasizes the role of user feedback and automation in scaling the company's data management systems.

![# sudo addgroup hadoop # sudo adduser --ingroup hadoop hdfs # sudo adduser --ingroup hadoop yarn # cp /tmp/configs/*.xml /etc/hadoop/conf/ # apt-get update … [hdfs@sj-hadoop-b20 ~] $ apt-get install hadoop-hdfs-datanode … [yarn@sj-hadoop-b20 ~] $ apt-get install hadoop-yarn-nodemanager](https://image.slidesharecdn.com/theevolutionofhadoopatspotify-throughfailuresandpain-150314153821-conversion-gate01/75/The-Evolution-of-Hadoop-at-Spotify-Through-Failures-and-Pain-18-2048.jpg)

![# sudo addgroup hadoop # sudo adduser --ingroup hadoop hdfs # sudo adduser --ingroup hadoop yarn # cp /tmp/configs/*.xml /etc/hadoop/conf/ # apt-get update … [hdfs@sj-hadoop-b20 ~] $ apt-get install hadoop-hdfs-datanode … [yarn@sj-hadoop-b20 ~] $ apt-get install hadoop-yarn-nodemanager](https://image.slidesharecdn.com/theevolutionofhadoopatspotify-throughfailuresandpain-150314153821-conversion-gate01/75/The-Evolution-of-Hadoop-at-Spotify-Through-Failures-and-Pain-19-2048.jpg)
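The manual steps shown on the two slides above could be gathered into a single script. What follows is only a minimal sketch under stated assumptions, not Spotify's actual tooling: the `run` helper, the `DRY_RUN` switch, and the `setup_worker` function name are hypothetical, the script assumes it runs as root on a Debian-style host, and the `-y` flag is added for non-interactive installs. The group, users, paths, and package names are taken from the slides.

```shell
#!/bin/sh
# Sketch only: collects the manual steps from the slides into one function.
# DRY_RUN, run() and setup_worker() are hypothetical, not part of any Hadoop tooling.

DRY_RUN="${DRY_RUN:-1}"   # default: print the commands instead of running them

# Print the command in dry-run mode; execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Provision one worker node with the group, users, configs and packages
# that appear on the slides. Assumes root privileges when DRY_RUN is not 1.
setup_worker() {
    run addgroup hadoop
    for user in hdfs yarn; do
        run adduser --ingroup hadoop "$user"
    done
    # Wrapped in "sh -c" so the glob is expanded only when the command really runs.
    run sh -c 'cp /tmp/configs/*.xml /etc/hadoop/conf/'
    run apt-get update
    run apt-get install -y hadoop-hdfs-datanode hadoop-yarn-nodemanager
}
```

Calling `setup_worker` with the default `DRY_RUN=1` only prints the plan, which makes it easy to review before setting `DRY_RUN=0` on a real host.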

![[data-sci@sj-edge-a1 ~] $ hdfs dfs -ls /data Found 3 items drwxr-xr-x - hdfs hadoop 0 2015-01-01 12:00 lake drwxr-xr-x - hdfs hadoop 0 2015-01-01 12:00 pond drwxr-xr-x - hdfs hadoop 0 2015-01-01 12:00 ocean [data-sci@sj-edge-a1 ~] $ hdfs dfs -ls /data/lake Found 1 items drwxr-xr-x - hdfs hadoop 1321451 2015-01-01 12:00 boats.txt [data-sci@sj-edge-a1 ~] $ hdfs dfs -cat /data/lake/boats.txt …](https://image.slidesharecdn.com/theevolutionofhadoopatspotify-throughfailuresandpain-150314153821-conversion-gate01/75/The-Evolution-of-Hadoop-at-Spotify-Through-Failures-and-Pain-21-2048.jpg)

![[data-sci@sj-edge-a1 ~] $ hdfs dfs -ls /data Found 3 items drwxr-xr-x - hdfs hadoop 0 2015-01-01 12:00 lake drwxr-xr-x - hdfs hadoop 0 2015-01-01 12:00 pond drwxr-xr-x - hdfs hadoop 0 2015-01-01 12:00 ocean [data-sci@sj-edge-a1 ~] $ hdfs dfs -ls /data/lake Found 1 items drwxr-xr-x - hdfs hadoop 1321451 2015-01-01 12:00 boats.txt [data-sci@sj-edge-a1 ~] $ hdfs dfs -cat /data/lake/boats.txt …](https://image.slidesharecdn.com/theevolutionofhadoopatspotify-throughfailuresandpain-150314153821-conversion-gate01/75/The-Evolution-of-Hadoop-at-Spotify-Through-Failures-and-Pain-22-2048.jpg)

![[data-sci@sj-edge-a1 ~] $ snakebite rm -R /team/disco/ CF/test-10/](https://image.slidesharecdn.com/theevolutionofhadoopatspotify-throughfailuresandpain-150314153821-conversion-gate01/75/The-Evolution-of-Hadoop-at-Spotify-Through-Failures-and-Pain-34-2048.jpg)

![Goodbye Data (1PB) [data-sci@sj-edge-a1 ~] $ snakebite rm -R /team/disco/ CF/test-10/ OK: Deleted /team/disco](https://image.slidesharecdn.com/theevolutionofhadoopatspotify-throughfailuresandpain-150314153821-conversion-gate01/75/The-Evolution-of-Hadoop-at-Spotify-Through-Failures-and-Pain-35-2048.jpg)
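The transcript of the last two slides appears to show the path split into two arguments (`/team/disco/` and `CF/test-10/`), so the recursive delete removed the whole team directory. One way to catch that class of mistake is to vet the arguments before handing them to the real tool. The following is a minimal sketch, not a feature of snakebite or HDFS: `check_rm_args`, `path_depth`, and the `MIN_DEPTH=3` policy are assumptions chosen for illustration.

```shell
#!/bin/sh
# Hypothetical guard, not part of snakebite or HDFS: vet the arguments of a
# recursive delete before passing them to the real command.

MIN_DEPTH=3   # assumed policy: never recursively delete anything above /a/b/c

# Print the number of non-empty components in a slash-separated path.
path_depth() {
    printf '%s\n' "$1" |
        awk -F/ '{ n = 0; for (i = 1; i <= NF; i++) if ($i != "") n++; print n }'
}

# Return 0 if every argument looks safe to delete recursively, 1 otherwise.
check_rm_args() {
    if [ "$#" -eq 0 ]; then
        echo "refusing: no path given" >&2
        return 1
    fi
    for p in "$@"; do
        case "$p" in
            /*) ;;   # absolute path, keep checking
            *)  echo "refusing: '$p' is not an absolute path (stray space?)" >&2
                return 1 ;;
        esac
        if [ "$(path_depth "$p")" -lt "$MIN_DEPTH" ]; then
            echo "refusing: '$p' is shallower than $MIN_DEPTH levels" >&2
            return 1
        fi
    done
    return 0
}
```

A wrapper could then run `check_rm_args "$@" && snakebite rm -R "$@"`. With the arguments from the slide, the check fails on `/team/disco/` because it is only two levels deep, while the intended single path `/team/disco/CF/test-10/` passes.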