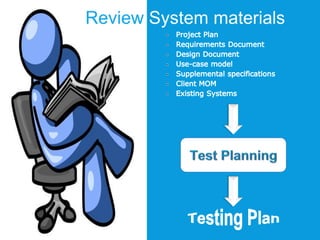

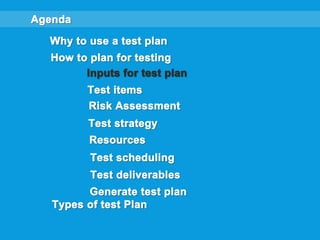

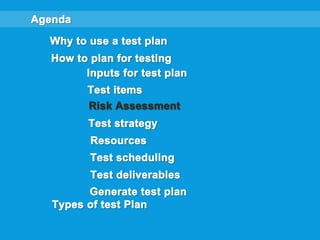

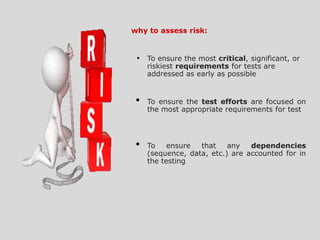

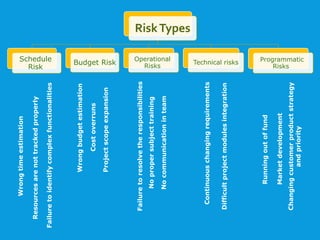

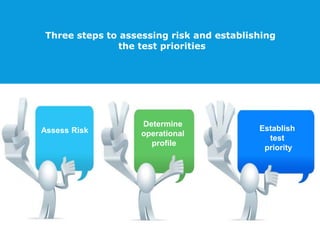

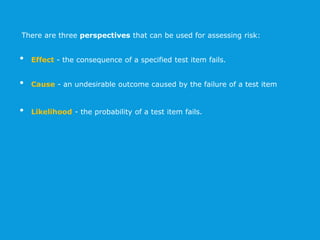

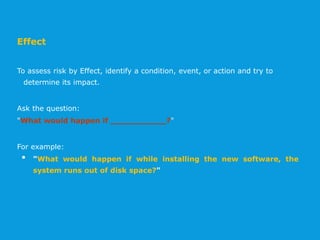

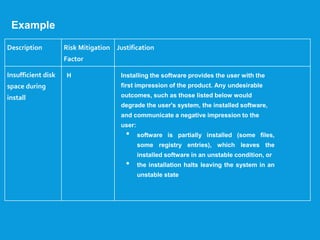

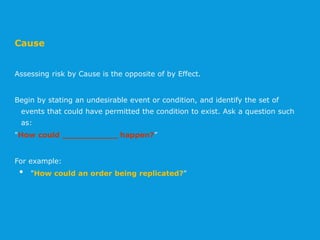

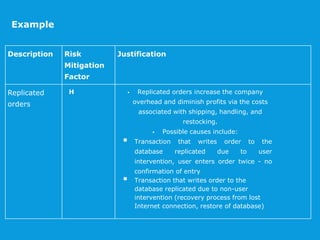

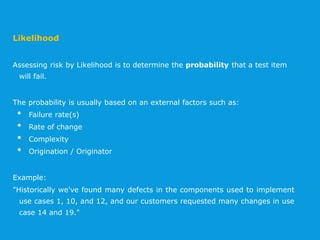

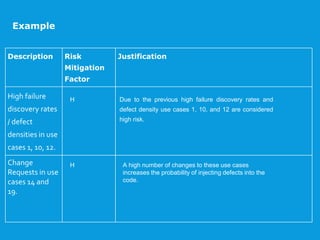

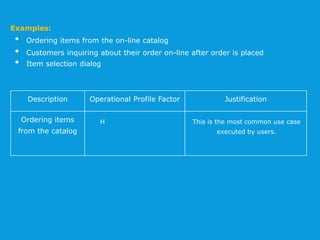

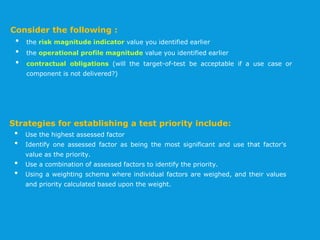

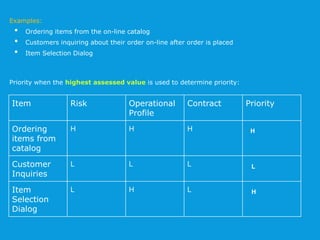

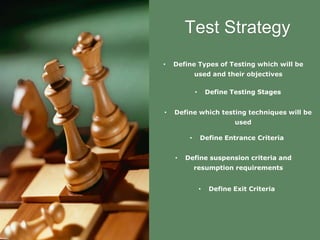

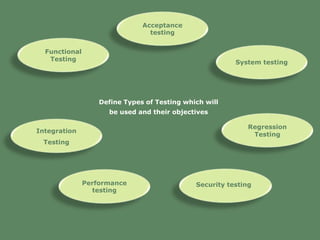

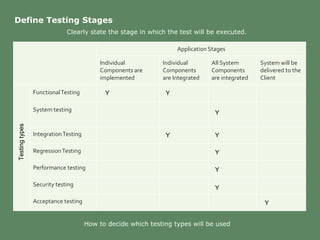

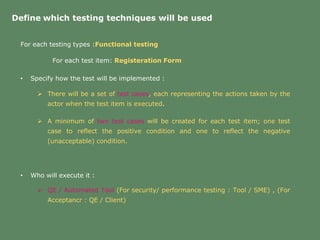

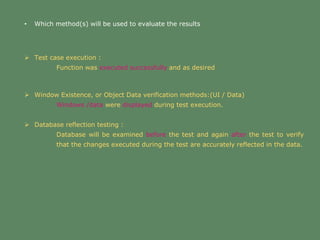

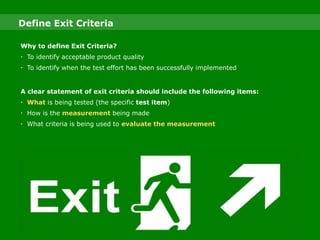

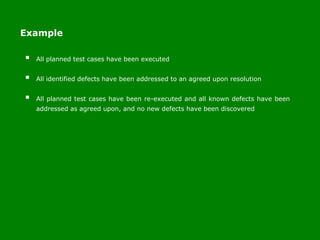

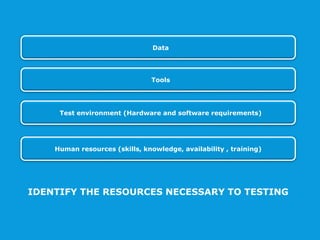

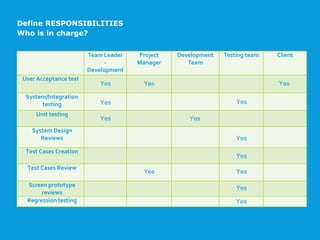

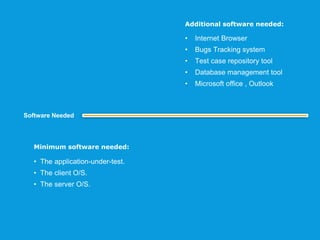

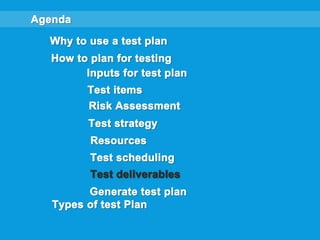

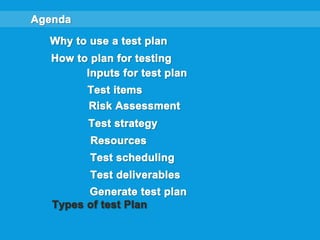

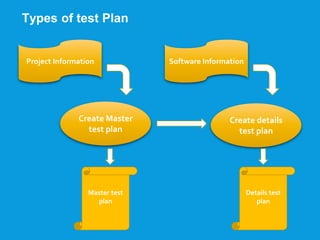

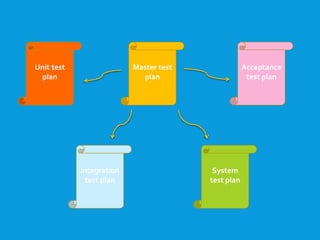

This document is a guide to planning software testing. It covers defining test items, assessing risks, setting test priorities, and choosing the types of testing to perform. It emphasizes identifying verifiable test items, understanding the relevant risk factors, and basing test priorities on operational profiles and contractual obligations. It also covers the testing resources, schedule, and documentation that effective test planning requires.
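
As a rough illustration of how priorities might be derived from an operational profile and contractual obligations, the sketch below ranks a few test items. Everything in it is an assumption made for illustration and is not taken from the slides: the `PlannedItem` fields, the 1 to 5 impact scale, the frequency-times-impact score, and the rule that contractual items are tested first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedItem:
    """A hypothetical test item; all fields are illustrative assumptions."""
    name: str
    usage_frequency: float  # share of operational use, 0.0 to 1.0
    failure_impact: int     # 1 (minor) to 5 (severe), an assumed scale
    contractual: bool       # True if a contract requires this item to be tested


def risk_score(item: PlannedItem) -> float:
    # Assumed scoring rule: how often the item is used times how bad a failure is.
    return item.usage_frequency * item.failure_impact


def prioritize(items: list[PlannedItem]) -> list[PlannedItem]:
    # Contractual items come first; within each group, higher risk score first.
    return sorted(items, key=lambda i: (not i.contractual, -risk_score(i)))


items = [
    PlannedItem("report export", usage_frequency=0.10, failure_impact=2, contractual=False),
    PlannedItem("login", usage_frequency=0.60, failure_impact=4, contractual=False),
    PlannedItem("audit log", usage_frequency=0.05, failure_impact=3, contractual=True),
]

ordered = [item.name for item in prioritize(items)]
print(ordered)
```

In this sketch the contractual item is ranked first even though its risk score is low, and the remaining items follow in order of usage-weighted impact. A real plan would likely use a richer risk model than a single product of two numbers.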