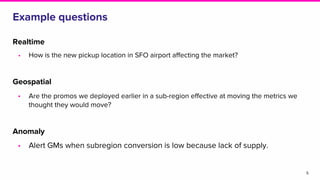

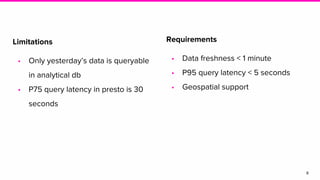

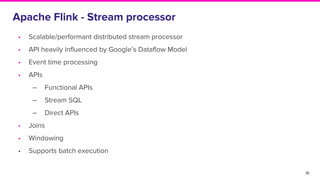

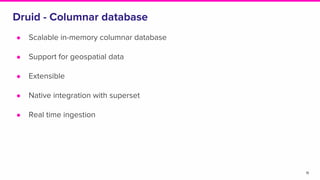

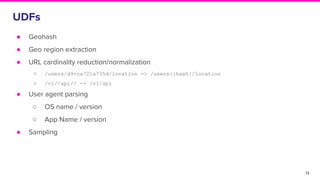

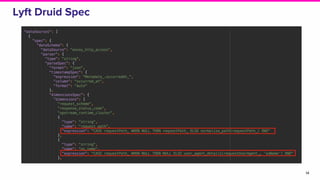

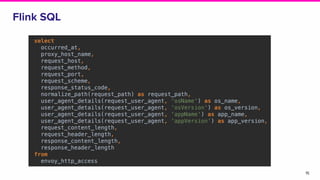

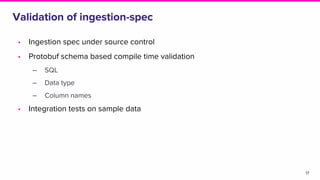

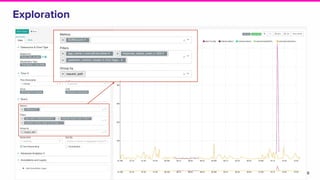

This document describes the streaming SQL and Druid architecture Lyft uses for real-time data processing and analysis. It covers use cases, access methods, and the technical framework built on Apache Flink and Druid, and it addresses the challenges and requirements around data freshness and query latency. The conclusion stresses the importance of an easy-to-use ingestion framework and the potential to extend its capabilities through Flink streaming SQL.