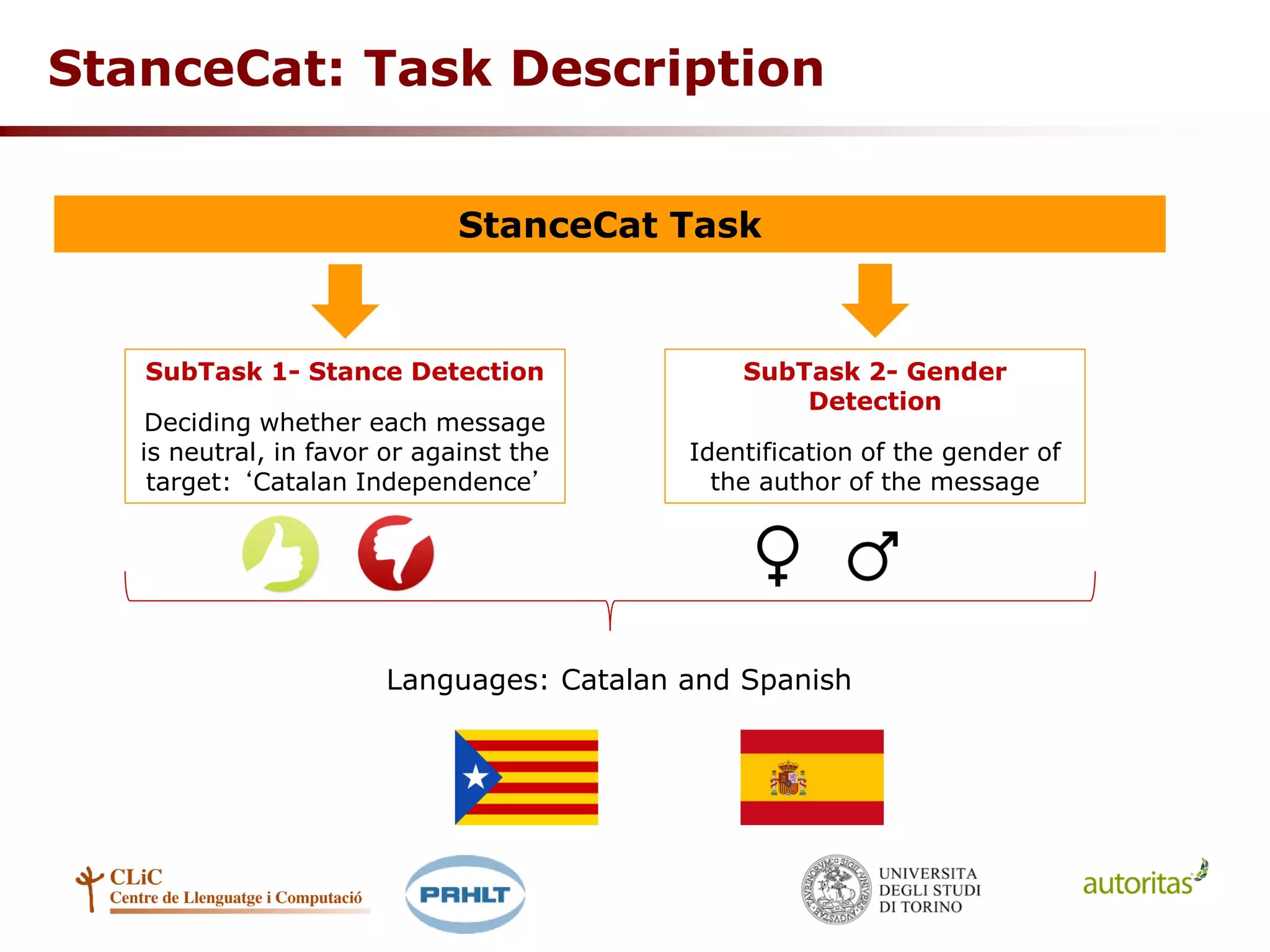

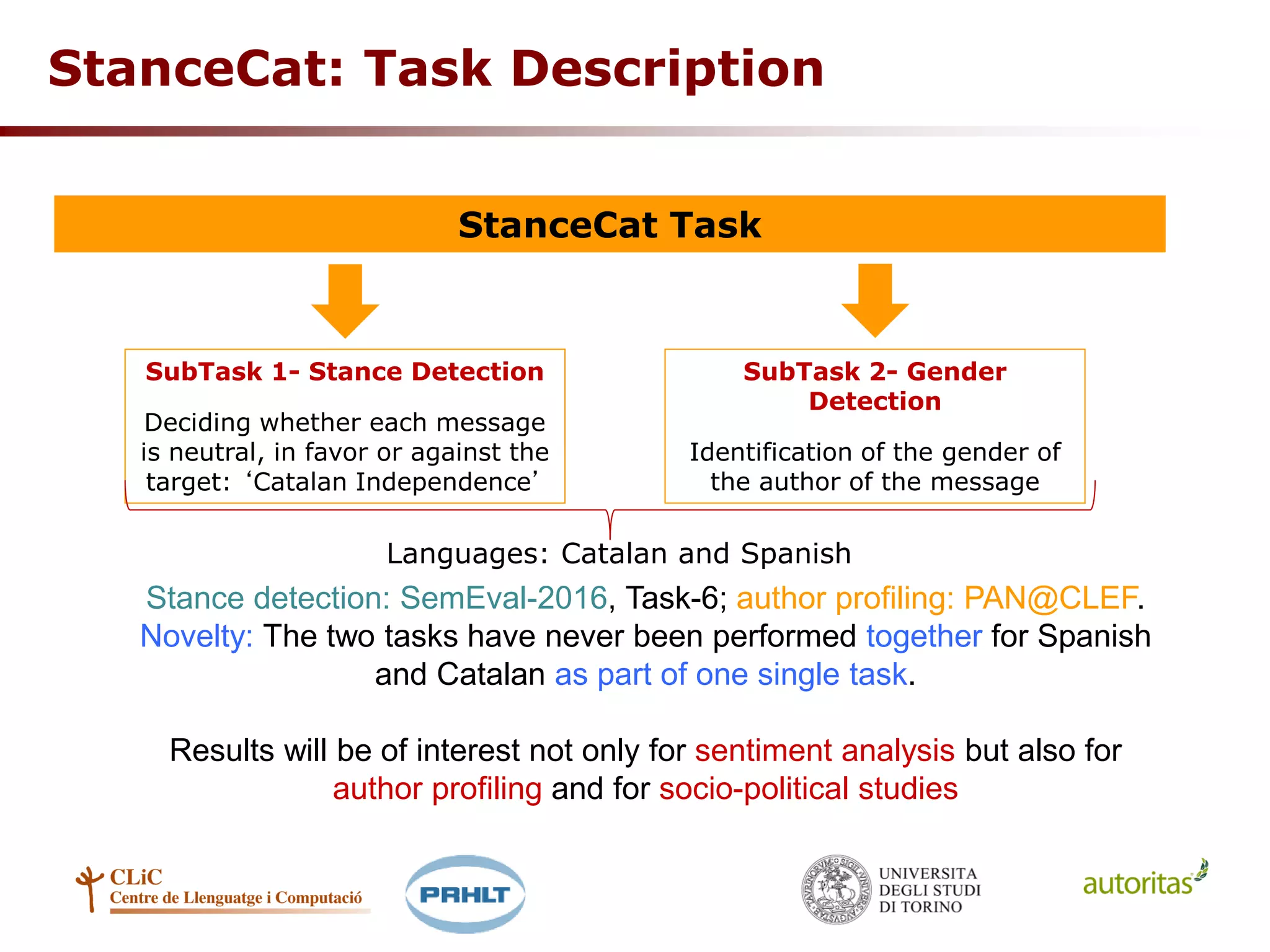

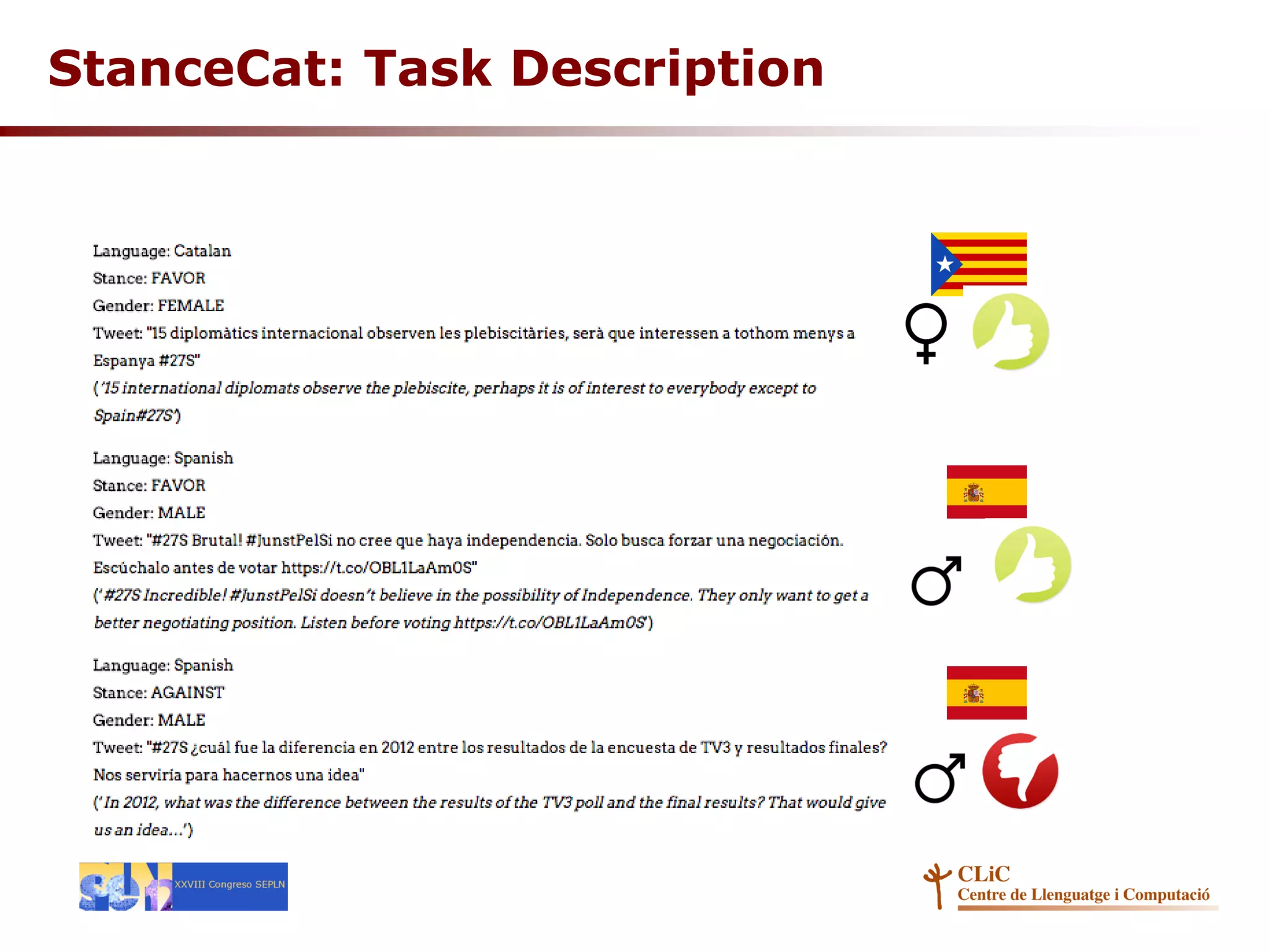

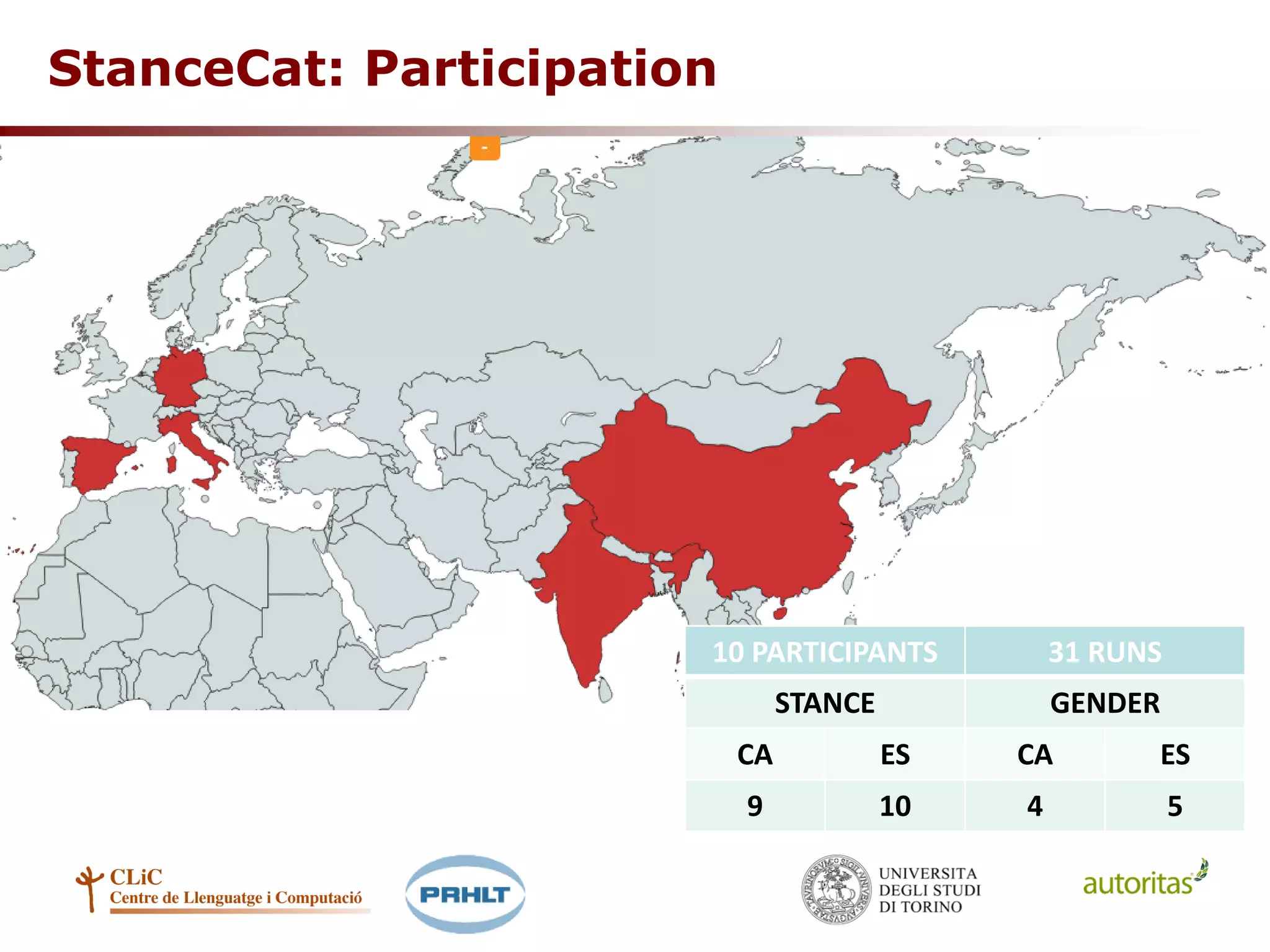

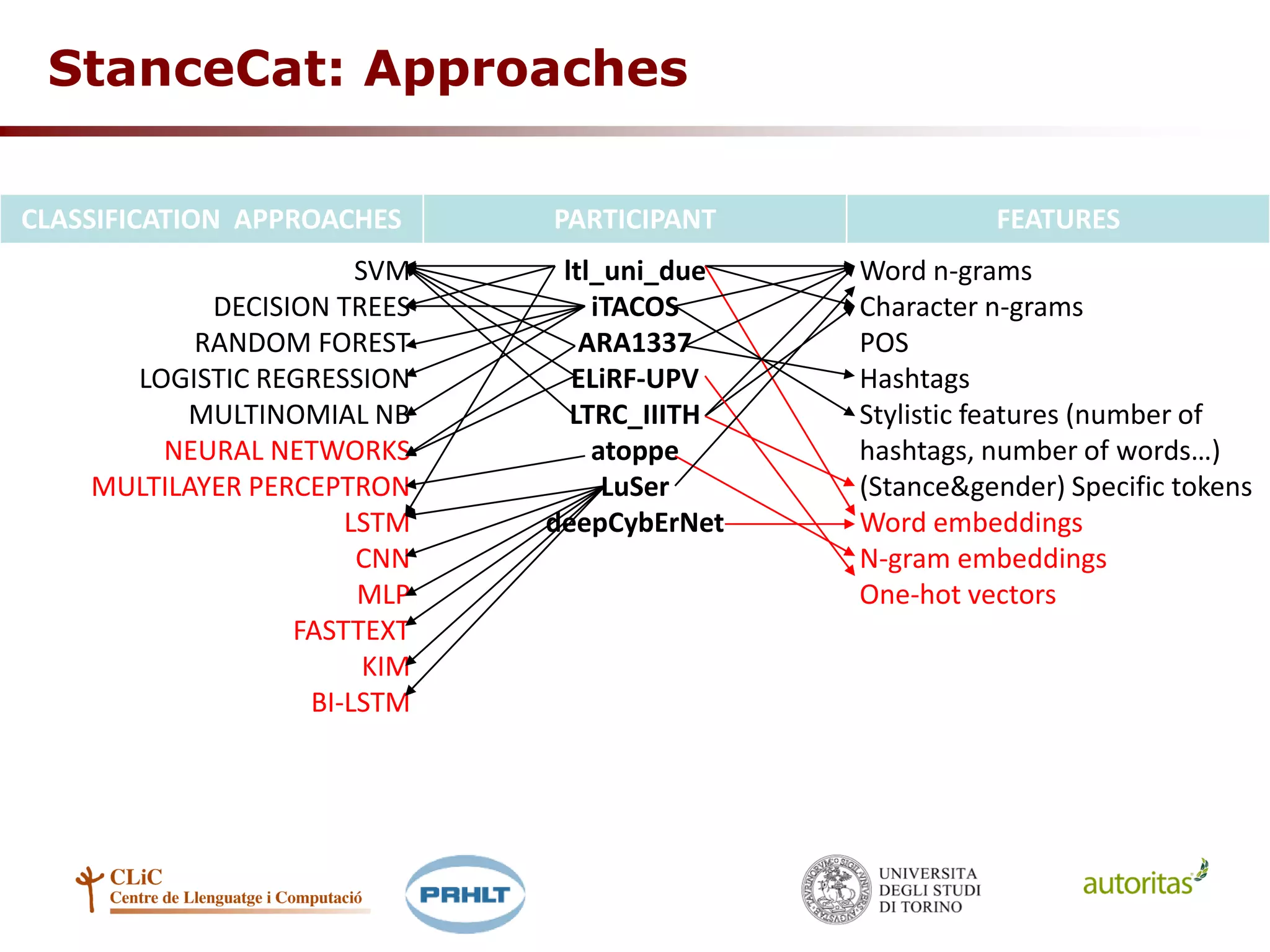

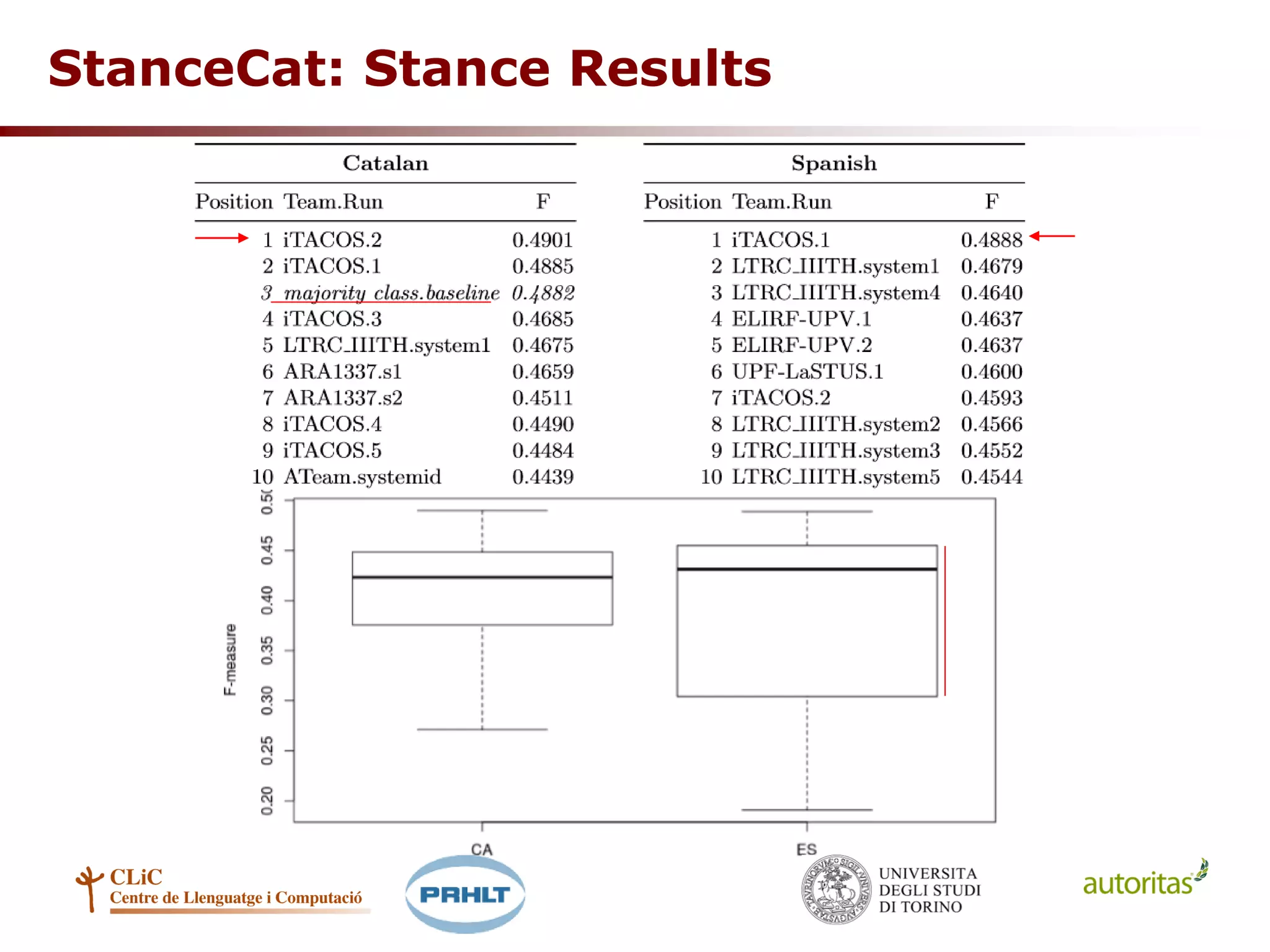

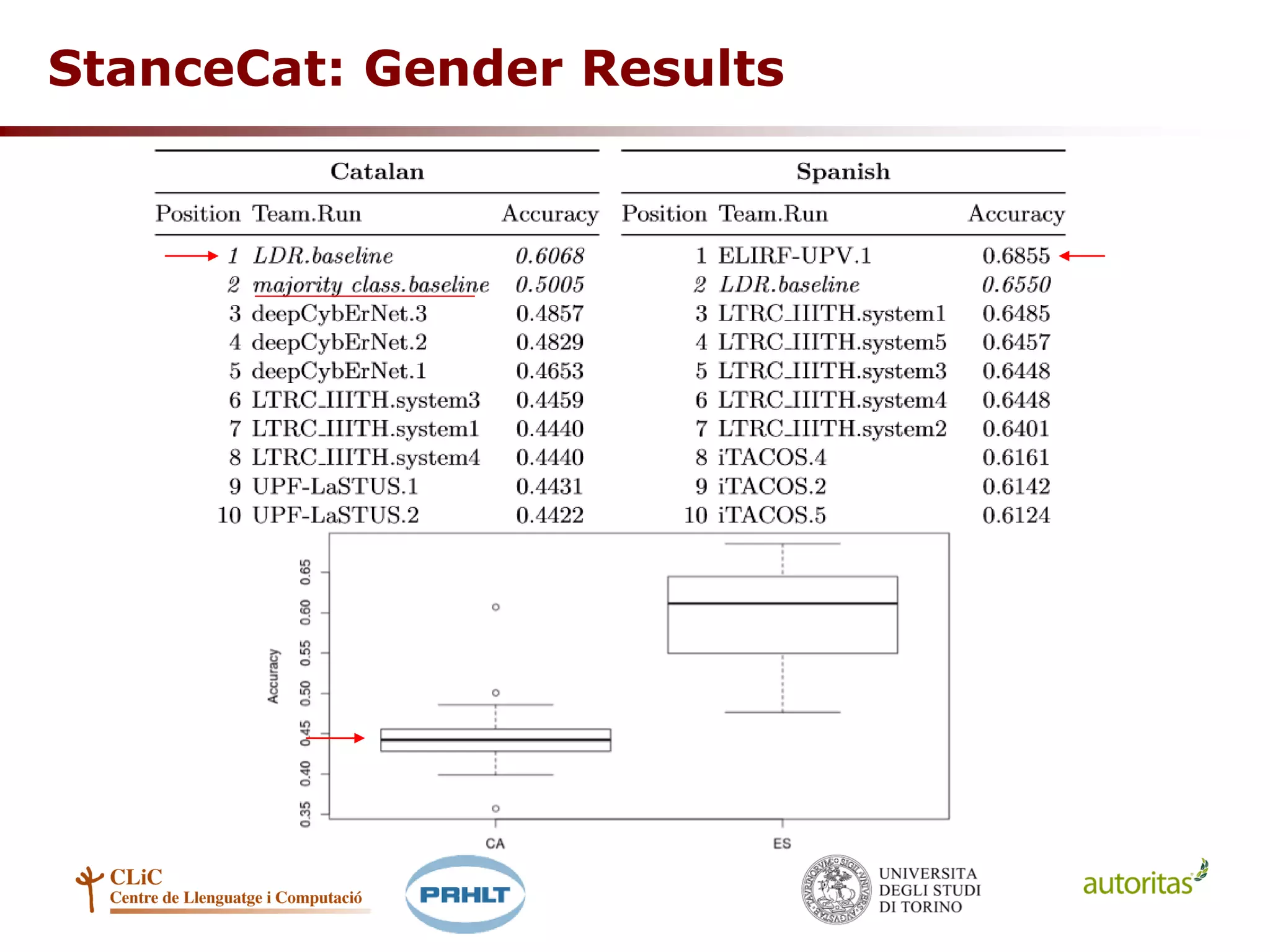

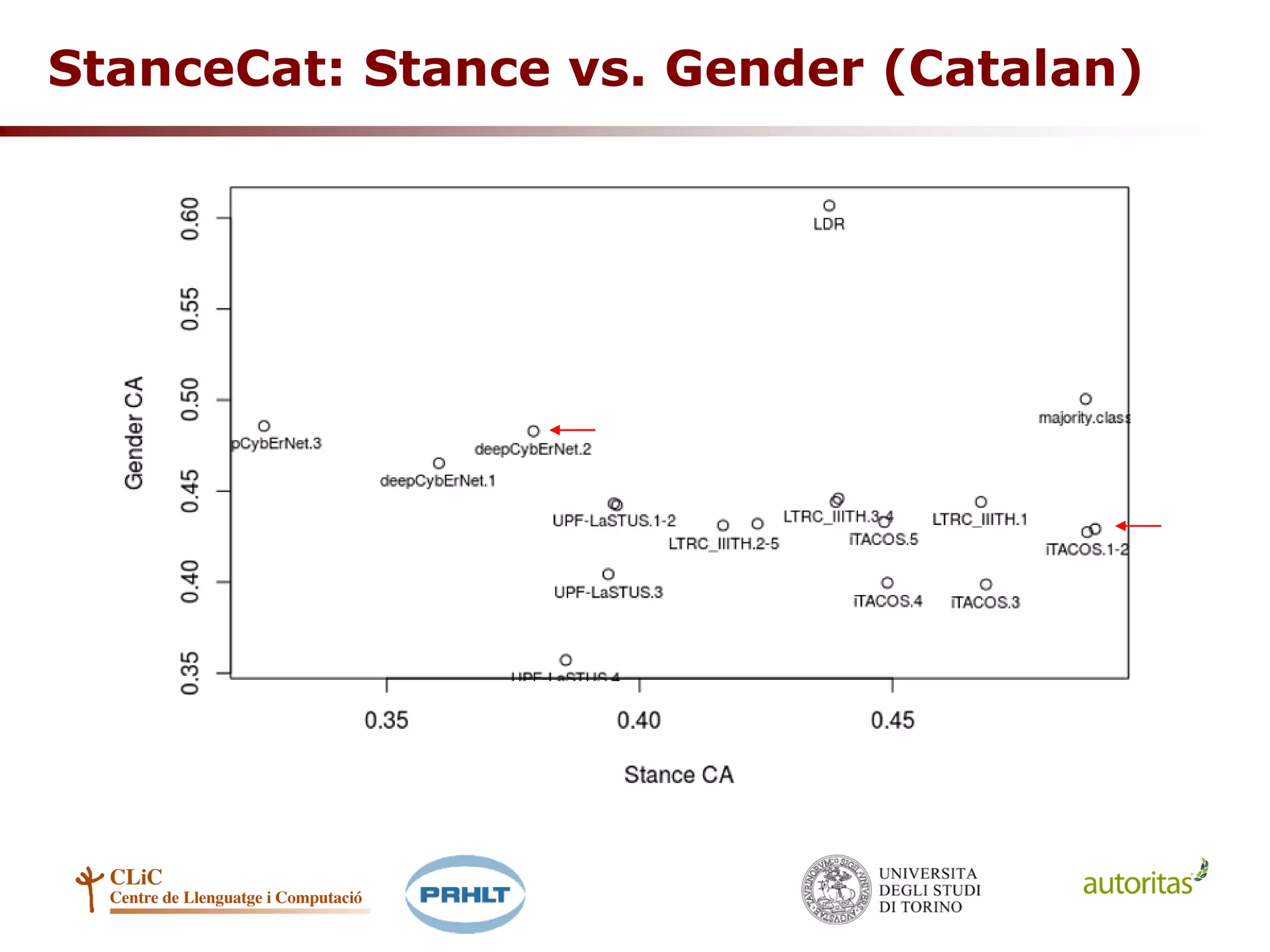

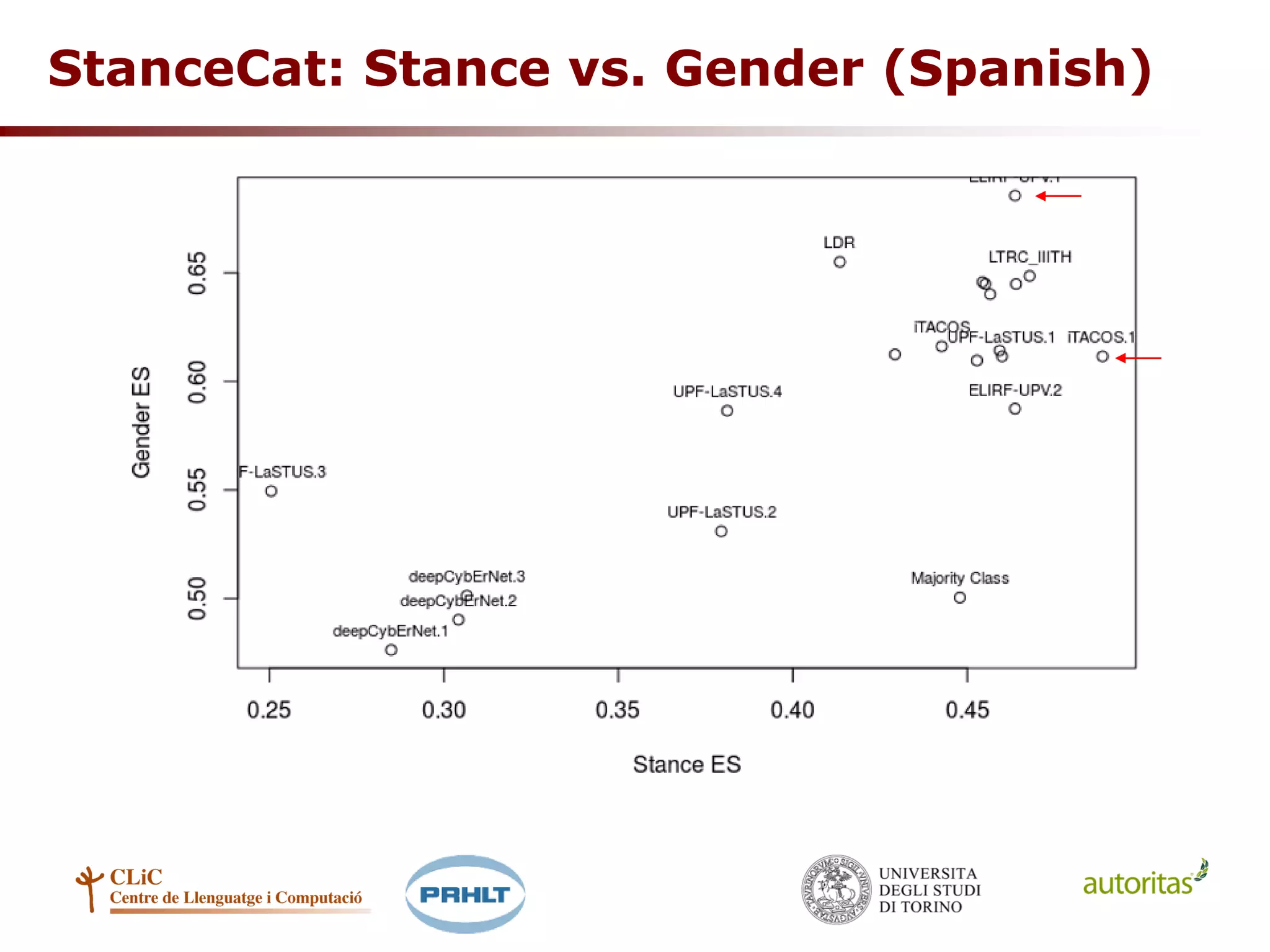

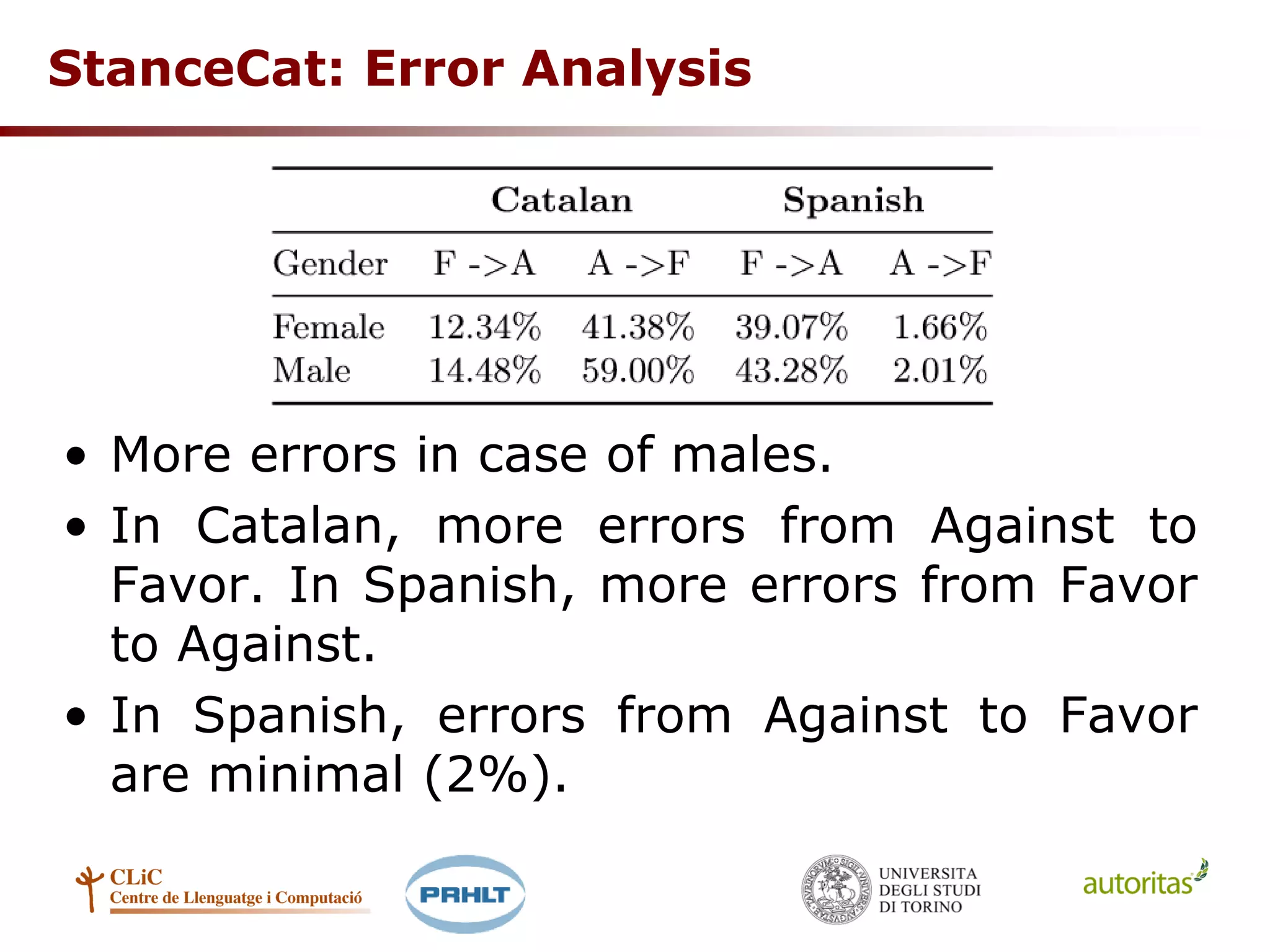

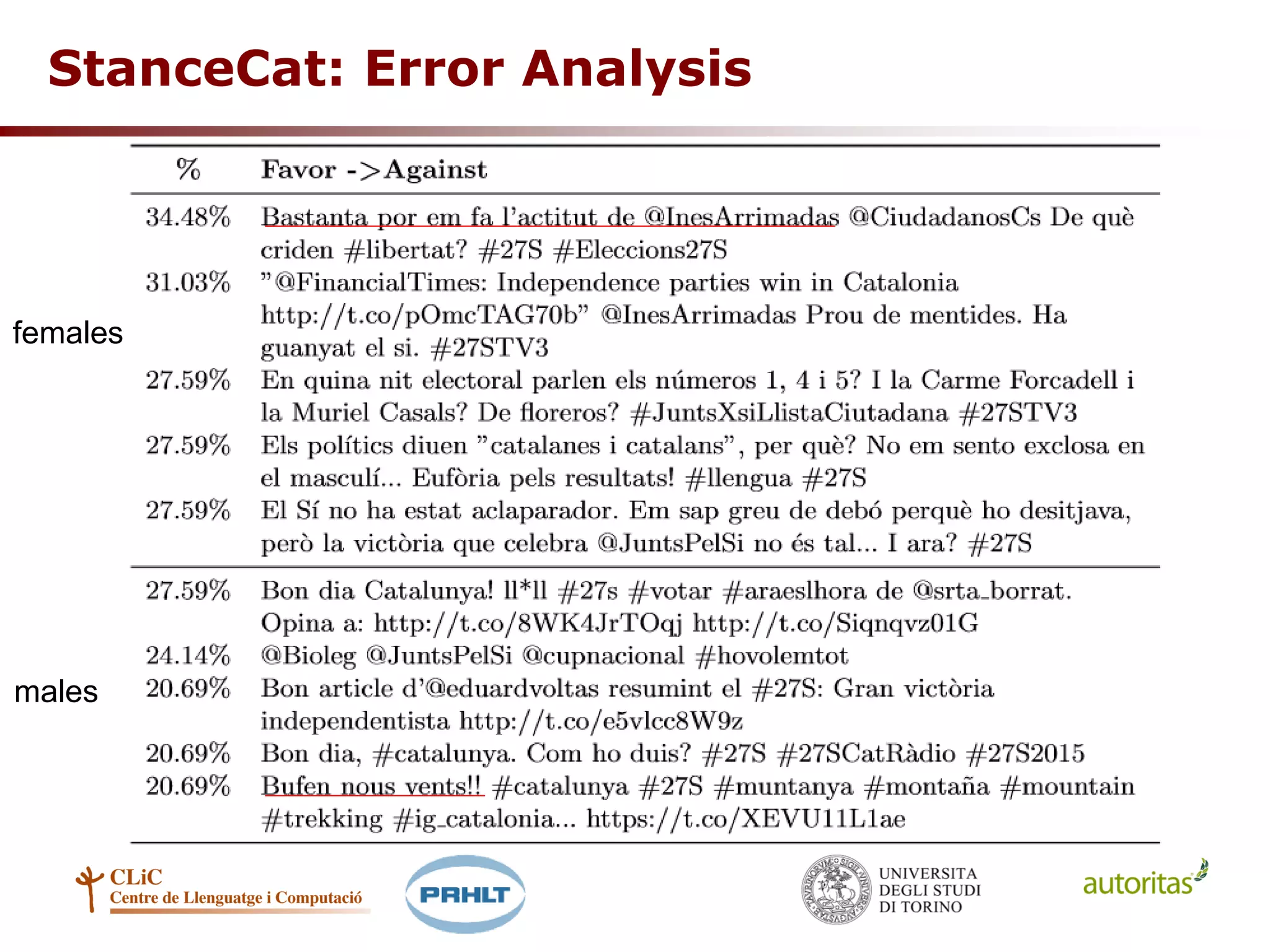

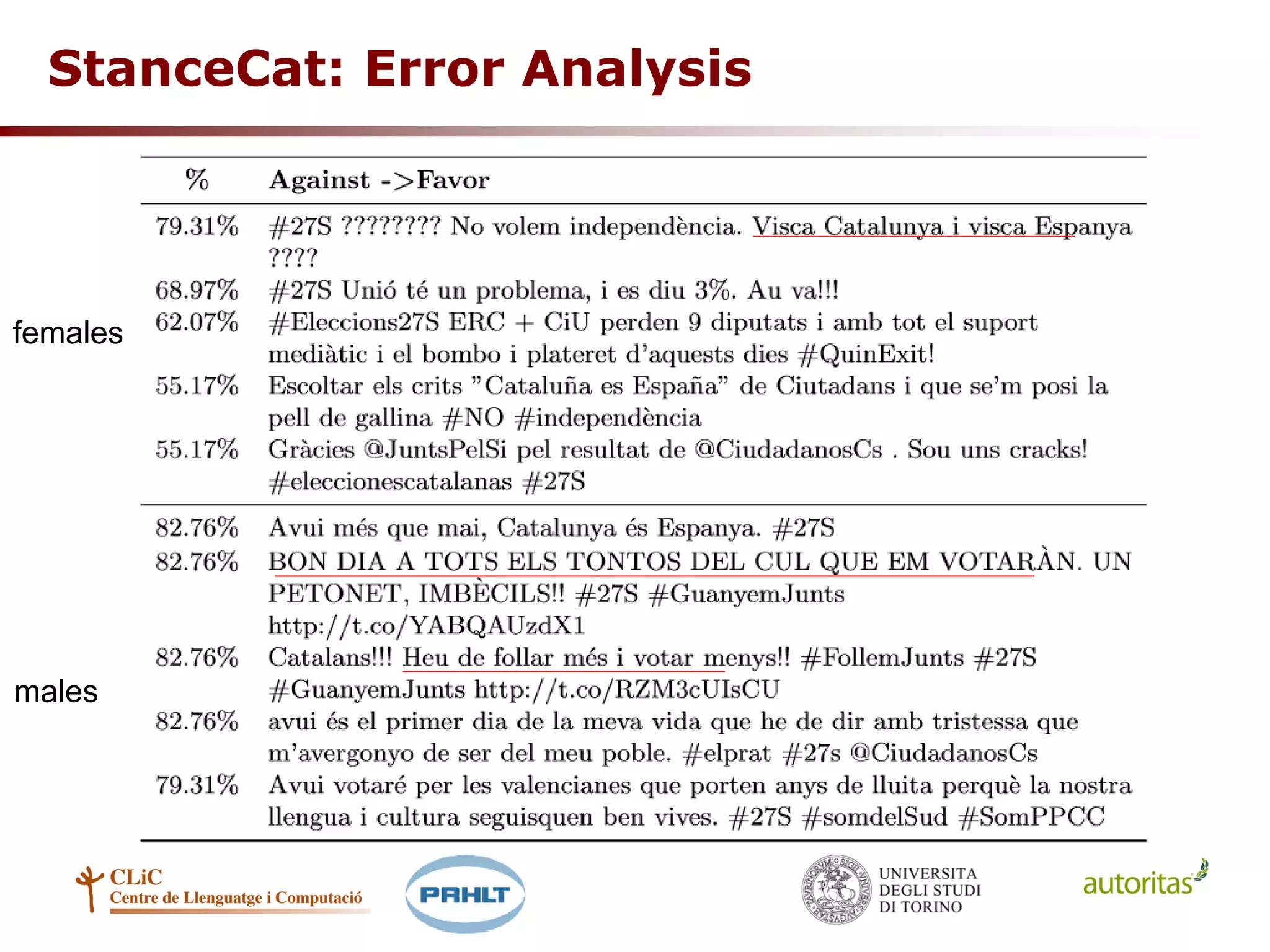

This document describes the StanceCat shared task on detecting stance and gender in tweets about Catalan independence. The task comprised stance detection (favor, against, neutral) and author gender detection on a corpus of 10,800 tweets in Catalan and Spanish annotated for both attributes. Ten teams participated, submitting 31 runs that applied classifiers such as SVMs and neural networks. Results showed F-measures below 50%, with more errors for male authors and more confusion between stance classes. The dataset was released to facilitate further research on the computational analysis of socio-political debates.

![StanceCat: Stance vs Sentiment

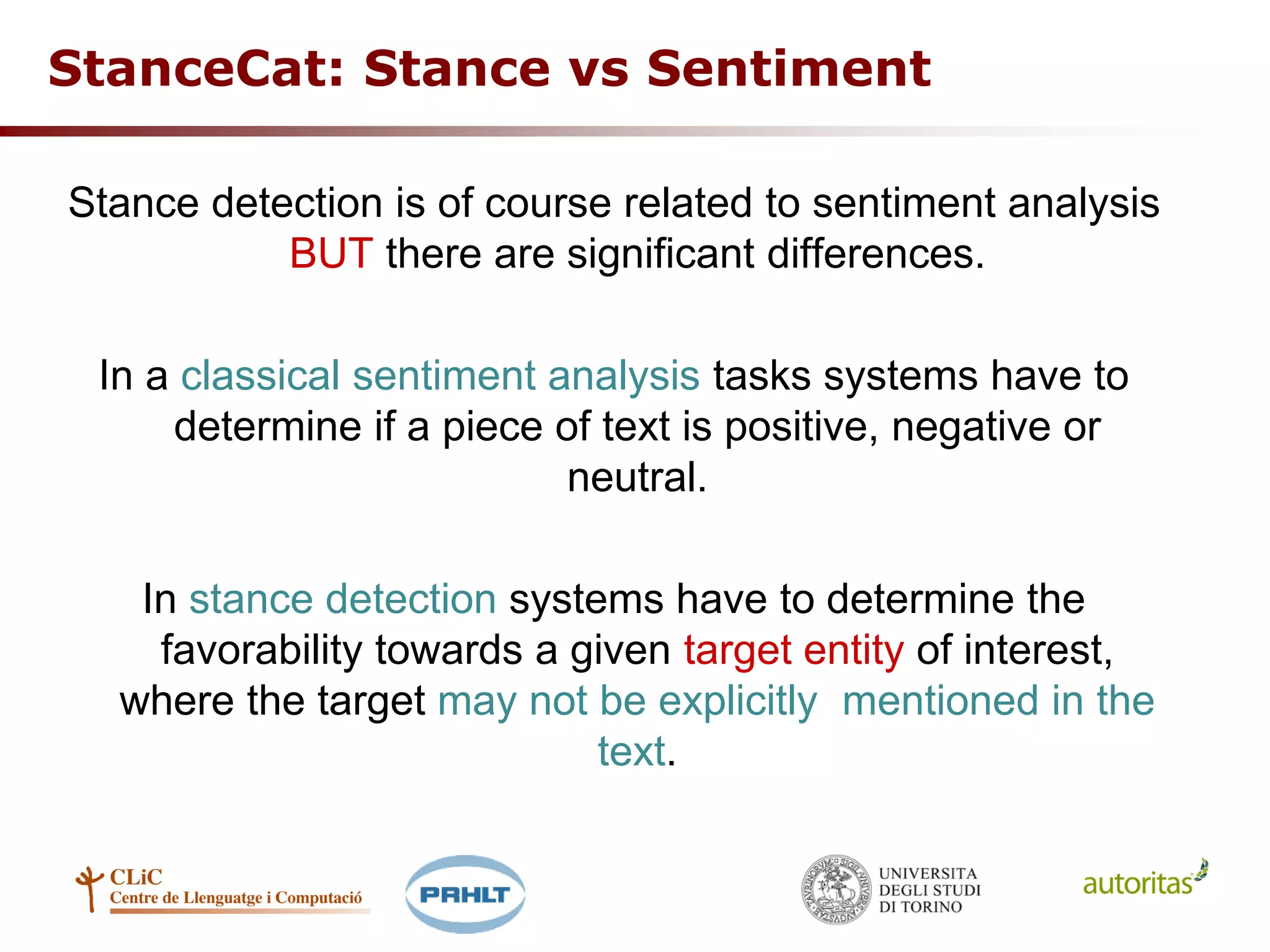

Example [source: training set of SemEval-2016 Task 6]

Support #independent #BernieSanders because he’s not a liar.

#POTUS #libcrib #democrats #tlot #republicans #WakeUpAmerica

#SemST.

• Target: Hillary Clinton [context: Party presidential primaries

for Democratic and Rapublican parties in US]

• The tweeter expresses a positive opinion towards an adversary of

the target (Sanders)

• We can infer that the tweeter expresses a negative stance towards

the target, i.e. she/he is likely unfavorable towards Hillary Clinton

• Important: tweet does not contain any explicit clue to find the target

• In many cases the stance must be inferred](https://image.slidesharecdn.com/stancetask-2017-170929092743/75/Stance-and-Gender-Detection-in-Tweets-on-Catalan-Independence-Ibereval-SEPLN-2017-9-2048.jpg)