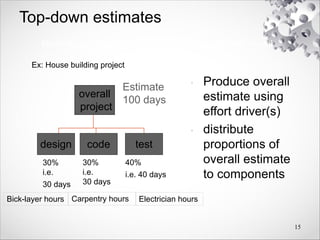

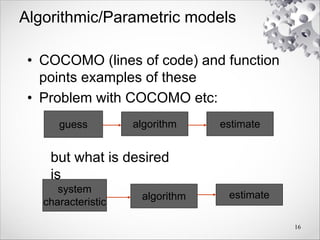

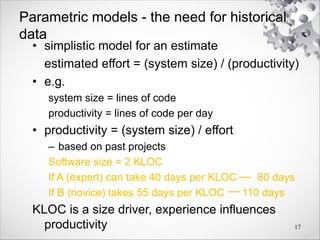

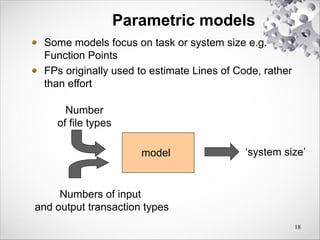

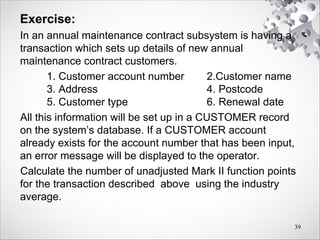

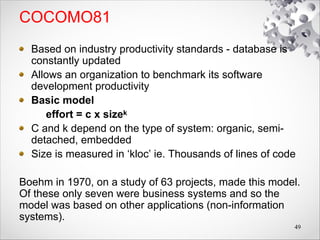

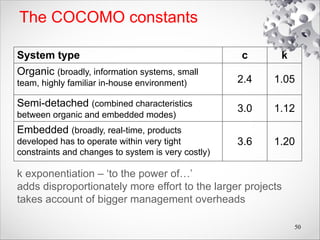

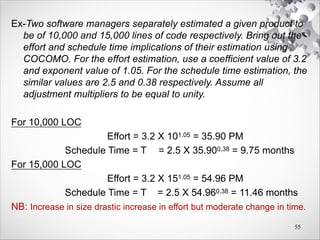

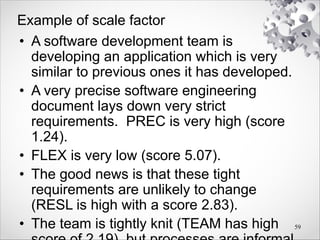

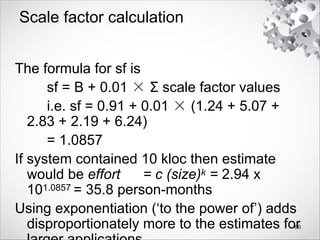

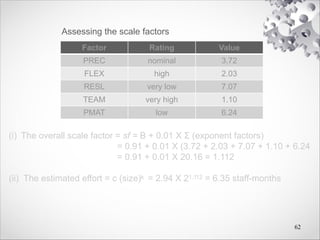

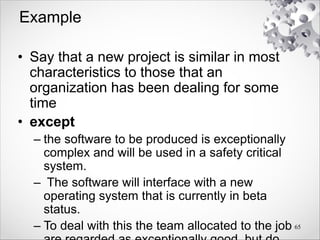

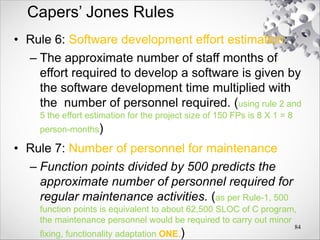

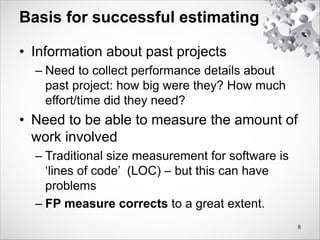

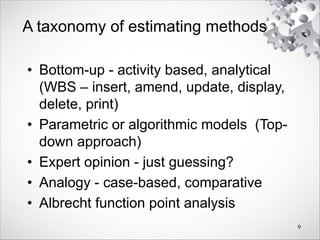

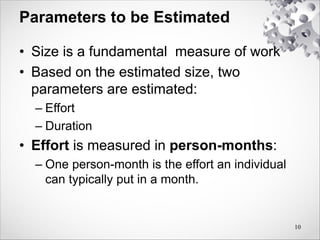

Chapter five discusses the complexities and challenges in software effort estimation, emphasizing the need for accurate predictions to meet project targets effectively. It highlights various factors influencing estimates, including political pressures, changing technologies, and individual project differences, while suggesting methods like bottom-up and top-down estimating approaches alongside function point analysis. Additionally, it addresses the importance of leveraging data from past projects to enhance the reliability and accuracy of future estimates.

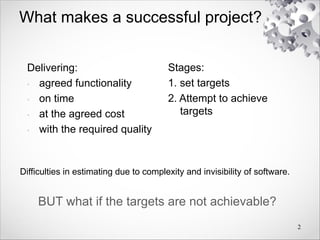

Bottom-up estimating

1. Break the project into smaller and smaller components
2. Stop when you get to what one person can do in one/two weeks
3. Estimate costs for the lowest level activities
4. At each higher level calculate the estimate by adding the estimates for lower levels

A procedural code-oriented approach

a) Envisage the number and type of modules in the final system
b) Estimate the SLOC of each individual module
c) Estimate the work content
d) Calculate the work-days effort

![Bottom-up estimating](https://image.slidesharecdn.com/05softwareeffortestimation-240318164058-83d66168/85/Software_effort_estimation-for-Software-engineering-pdf-14-320.jpg)
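
The two procedures listed above can be illustrated with a minimal Python sketch. Nothing in it comes from the slides beyond the steps themselves: the `Task` class, the `effort_from_sloc` function, the per-module complexity factor, the productivity rate, and all the numbers are hypothetical and chosen only to make the arithmetic visible. The first part rolls leaf estimates up a work breakdown tree (steps 1 to 4); the second converts per-module SLOC into work-days (steps a to d), assuming that "work content" means SLOC weighted by a difficulty factor.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Task:
    """A node in a work breakdown tree (hypothetical structure)."""
    name: str
    effort_days: float = 0.0                  # only meaningful for leaf activities
    children: list[Task] = field(default_factory=list)

    def estimate(self) -> float:
        # Step 3: a leaf activity carries its own estimate.
        if not self.children:
            return self.effort_days
        # Step 4: a higher level is the sum of the levels below it.
        return sum(child.estimate() for child in self.children)


def effort_from_sloc(modules: list[tuple[str, int, float]],
                     sloc_per_day: float) -> float:
    """Steps a) to d): modules -> SLOC -> work content -> work-days.

    Each module is (name, estimated SLOC, complexity factor). The complexity
    factor and the productivity rate are assumptions, not values from the slides.
    """
    if sloc_per_day <= 0:
        raise ValueError("sloc_per_day must be positive")
    # Step c: work content, here assumed to be SLOC weighted by difficulty.
    work_content = sum(sloc * factor for _, sloc, factor in modules)
    # Step d: divide by an assumed productivity rate to get work-days.
    return work_content / sloc_per_day


# Bottom-up: leaves are sized at roughly one to two person-weeks each.
project = Task("Project", children=[
    Task("Design", children=[Task("UI design", 5), Task("DB design", 8)]),
    Task("Build", children=[Task("Module A", 10), Task("Module B", 7)]),
])
print(project.estimate())                    # 30

# Code-oriented: two hypothetical modules at an assumed 50 SLOC per day.
modules = [("parser", 400, 1.0), ("report", 600, 1.5)]
print(effort_from_sloc(modules, 50))         # 26.0
```

In practice the productivity rate would be taken from data on past projects rather than assumed, which is where the reliability of this kind of estimate comes from.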