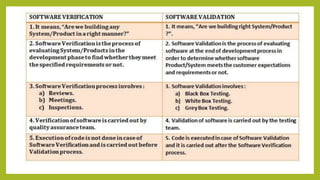

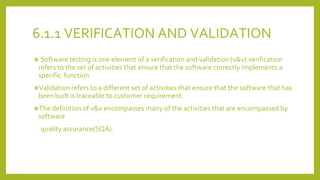

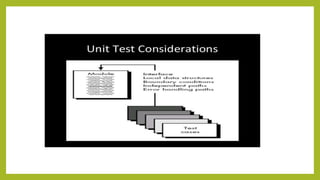

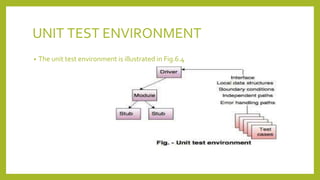

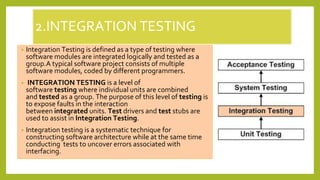

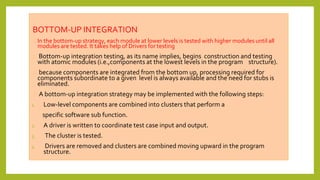

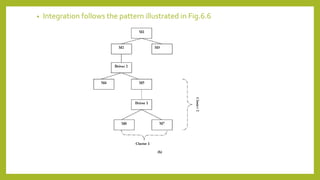

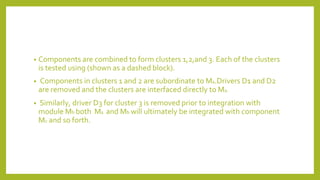

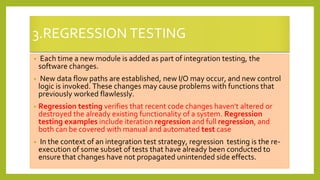

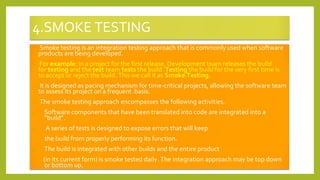

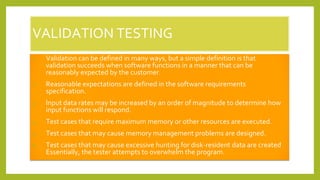

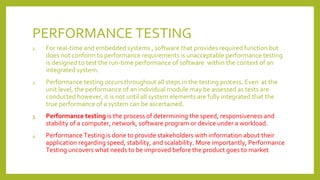

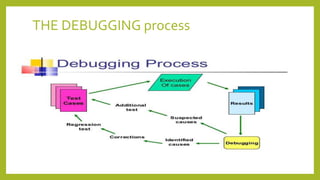

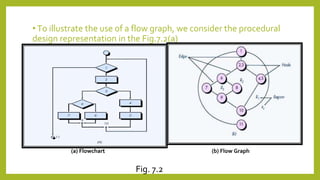

This document introduces software testing. It covers general characteristics of testing, such as conducting formal technical reviews and applying different testing techniques at different stages of development. It then distinguishes verification, which ensures that the software correctly implements each specified function, from validation, which ensures that the software is traceable to its requirements, and lists various software quality assurance activities. Next, it covers specific testing strategies: unit testing, integration testing, regression testing, smoke testing, validation testing, and performance testing. Finally, it describes the debugging process and three debugging approaches (brute force, backtracking, and cause elimination), which debugging tools can supplement.
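To make unit testing concrete, here is a minimal sketch using Python's standard `unittest` module. The function `apply_discount` is a hypothetical unit under test, not something taken from the slides. The tests exercise a typical value, the boundary values, and an invalid input, because unit tests commonly focus on boundary conditions and error handling.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: return price reduced by percent (0 to 100)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_value(self):
        # A 25% discount on 200.0 should give 150.0.
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_boundaries(self):
        # Boundary values: no discount and a full discount.
        self.assertEqual(apply_discount(80.0, 0), 80.0)
        self.assertEqual(apply_discount(80.0, 100), 0.0)

    def test_invalid_percent_rejected(self):
        # Out-of-range input must raise instead of returning a wrong price.
        with self.assertRaises(ValueError):
            apply_discount(50.0, 120)


if __name__ == "__main__":
    # Build and run the suite explicitly so the script does not call sys.exit().
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

If this suite is rerun after every code change, it also acts as a small regression test: any previously passing case that starts failing indicates that the change broke existing behavior.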