The document is a comprehensive guide on search engine optimization (SEO) authored by Aaron Matthew Wall, covering essential strategies and tools to improve website rankings. It includes sections on domain registration, keyword selection, page optimization, link building, and common SEO practices, alongside practical tips for internet marketing. The guide also warns about the pitfalls of SEO and emphasizes the importance of creating valuable, engaging content for better conversion rates.

![27

people who did not want to spend much money. What did I learn? Sell what your

customers want, when they want it.

I still displayed some of my $400 and up baseball cards, just to get the “oohs” and

“ahhs.” I learned that once you have a crowd, many people follow just to see what

is going on, and then you can make sales. I knew I would not sell these cards. So

what did I change?

I created a display case with stacks of all the major stars and local popular players.

Each card was $1.00. It did not matter if the card was worth twenty-five cents or

four dollars; I just put $1.00 on each of them. I kept up with who was doing well

and would buy up cards of players that were about to become popular. Buy-price

ranged from three cents to a quarter a card. Most of the cards I bought were

considered ‘junk’ by the sellers, yet I re-sold most within a month or two.

By taking the time to go through their junk and making ordering simple, I made

money. That $1 display case was a gold mine. Usually, that case sold more cards

than my good cases did. Also, I had very little invested—other than taking the time

to organize the cards.

So, I decided I would do this kid a favor and send him a free book on

marketing from my favorite author. When I asked him if he wanted a free

marketing book, he got angry, even though I was trying to help him. Instead

of considering my offer, he spouted off Eminem lyrics, telling me that “[he'd]

rather put out a mother****ing gospel record.”

All of his frustration, all of his anger, and all of his wasted time were unnecessary.

That is why search is powerful. You do not hunt for your customers; they hunt for

you. You pick the keywords, and the customer picks you. You not only sell what

your customers want, but you also sell it while they are actively looking for it.

The $1 baseball card case is a good analogy for effective search engine

optimization. Imagine the cards in that case as being web pages. It is great to rank

well for a term like SEO, but by ranking well for various small volume searches you

may be able to make far more money in the long run. Longer search phrases carry

more implied intent, so they are far more targeted and convert at a higher rate.

Having a social element to your site or business is like having the $400 baseball

card in the case. The people who reference the interesting parts of your site build

up your authority. Based on that authority, search engines learn to trust other parts

of your site and send visitors to those pages, as well.

You can think of web traffic like my baseball cards. Due to the vastness of the

market and market inefficiencies, there will always be many ways to make money.

If you learn how to spread ideas and build an audience, you can make a windfall of

profits.

Interactive Elements

Literature](https://image.slidesharecdn.com/seobook53-160322235603/85/Seo-Book-27-320.jpg)

![101

You have to be able to evaluate how competitive your market is, what resources

you have available, and whether you can compete in that market. A large reason

many websites fail is that they are too broad or unfocused. If the top sites in your

industry are Expedia, Orbitz, Travelocity, Hotels.com, and other well-known

properties, you need to have a large budget, create something fundamentally

innovative, or look for a better niche opportunity in which you can dominate.

WebmasterWorld has a useful theming thread here:

http://www.webmasterworld.com/forum34/68.htm

Keyword Density (KD)

Keyword density analyzers end up focusing people on something that is not

important. This causes some people to write content that looks like a robot wrote

it. That type of content will not inspire people to link to it and will not convert

well.

In March of 2005, Dr. Garcia, an information retrieval scientist, wrote an article

about keyword density. His conclusion was “this overall ratio [keyword density]

tells us nothing about:

• the relative distance between keywords in documents (proximity);

• where in a document the terms occur (distribution);

• the co-citation frequency between terms (co-occurrence);

• or the main theme, topic, and sub-topics (on-topic issues) of the

documents.

Thus, KD is divorced from content quality, semantics, and relevancy.”

Later on I will discuss how to structure page content, but it is important to know

that exact keyword density is not an important or useful measure of quality.
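
To make the point above concrete, here is a minimal Python sketch. The two sample sentences and the helper names (`tokenize`, `keyword_density`, `positions`) are made up for illustration. Both sentences have exactly the same keyword density, yet the keyword sits in very different places, which is the kind of information the ratio throws away.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def keyword_density(text, keyword):
    """Share of all words in the text that equal the keyword."""
    words = tokenize(text)
    return words.count(keyword.lower()) / len(words) if words else 0.0

def positions(text, keyword):
    """Word offsets at which the keyword occurs (its distribution)."""
    return [i for i, w in enumerate(tokenize(text)) if w == keyword.lower()]

# Hypothetical sample copy: same length, same number of keyword uses.
natural = "fresh cheese recipes for people who love to cook with cheese at home"
stuffed = "cheese cheese recipes for people who love to cook with food at home"

for label, text in (("natural", natural), ("stuffed", stuffed)):
    print(label, round(keyword_density(text, "cheese"), 3), positions(text, "cheese"))
```

Both lines report the same density, but only the position lists show that one sentence spreads the term out while the other repeats it back to back.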

Why Focusing on Keyword Density is a Waste of Time

About half of all search queries are unique. Many of the searches that bring visitors

to your sites are for keyword phrases you never would have guessed. If a site is not

well-established, most search traffic will be for long, multiword search phrases.

When webmasters start thinking about keyword density, many of them tend to

remove descriptive modifiers and other semantically-related terms. Since some of

those terms will no longer appear on the page, the “optimized” site no longer ranks

well for many queries.

People write, search, and use language in similar ways. Thus, if you write naturally,

you are going to be far better optimized for long-tail searches than some person

who wastes time on keyword density will be.

If the content sounds like it was designed for engines instead of people, then fewer

people are going to want to read it or link to it.](https://image.slidesharecdn.com/seobook53-160322235603/85/Seo-Book-101-320.jpg)

![184

There is significant effort being placed on looking for ways to move the PageRank

model to a model based upon trust and local communities.

Link Spam Detection Based on Mass Estimation

TrustRank mainly works to give a net boost to good, trusted links. Link Spam

Detection Based on Mass Estimation was a research paper aimed at killing the

effectiveness of low-quality links. Essentially the thesis of this paper was that you

could determine what percent of a site’s direct and indirect link popularity comes

from spammy locations and automate spam detection based on that.

The research paper is a bit complex, but many people have digested it. I posted on

it at http://www.seobook.com/archives/001342.shtml.

Due to the high cost of producing quality information versus the profitability and

scalability of spam, most pages on the web are spam. No matter what you do, if

you run a quality website, you are going to have some spammy websites link to you

and/or steal your content. Because my name is Aaron Wall, some idiots kept

posting links to my sites on their “wall clock” spam site.

The best way to fight this off is not to spend lots of time worrying about spammy

links, but to spend the extra time to build some links that could be trusted to offset

the effects of spammy links.

Algorithms like the spam mass estimation research are going to be based on

relative size. Since quality links typically have more PageRank (or authority by

whatever measure they choose to use) than most spam links, you can probably get

away with having 40 or 50 spammy links for every real, quality link.
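
The "relative size" idea can be illustrated with a toy calculation. This is not the algorithm from the research paper, only a rough sketch: the function name `spam_share` and the weights are assumptions, with one quality link arbitrarily counted as much as 50 spam links.

```python
def spam_share(links):
    """Fraction of total inbound link weight that comes from spammy sources.

    `links` is a list of (weight, is_spam) pairs. The weights stand in for
    PageRank or whatever authority measure an engine might use.
    """
    total = sum(weight for weight, _ in links)
    spam = sum(weight for weight, is_spam in links if is_spam)
    return spam / total if total else 0.0

# Hypothetical weights: one quality link counts as much as 50 spam links.
QUALITY_WEIGHT = 50.0
SPAM_WEIGHT = 1.0

one_quality = [(QUALITY_WEIGHT, False)] + [(SPAM_WEIGHT, True)] * 40
two_quality = [(QUALITY_WEIGHT, False)] * 2 + [(SPAM_WEIGHT, True)] * 40

print(round(spam_share(one_quality), 3))  # 40 of 90 units of weight are spam
print(round(spam_share(two_quality), 3))  # 40 of 140 units of weight are spam
```

Under these assumed weights, adding a single trusted link lowers the spammy share far more than removing a handful of spam links would.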

Another interesting bit mentioned in the research paper was that generally the web

follows power laws. The following quote might be as clear as mud, so I will clarify it shortly.

A number of recent publications propose link spam detection

methods. For instance, Fetterly et al. [Fetterly et al., 2004]

analyze the indegree and outdegree distributions of web pages.

Most web pages have in- and outdegrees that follow a power-

law distribution. Occasionally, however, search engines

encounter substantially more pages with the exact same in- or

outdegrees than what is predicted by the distribution formula.

The authors find that the vast majority of such outliers are spam

pages.

Indegrees and outdegrees above refer to link profiles, specifically to inbound links and

outbound links. Most spam generator software and bad spam techniques leave

obvious mathematical footprints.
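
One way to picture such a footprint is sketched below. It assumes synthetic data and a plain least-squares fit on a log-log scale. The function names and the threshold `ratio=5.0` are hypothetical, and real search engines almost certainly use something more sophisticated.

```python
import math

def fit_power_law(counts):
    """Least-squares fit of log(count) = a + b * log(degree).

    `counts` maps a degree (number of inbound links) to the number of
    pages that have exactly that degree. Returns (a, b).
    """
    xs = [math.log(d) for d in counts]
    ys = [math.log(c) for c in counts.values()]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b

def find_outliers(counts, ratio=5.0):
    """Degrees whose page count exceeds the fitted prediction by `ratio`."""
    a, b = fit_power_law(counts)
    return [d for d, c in sorted(counts.items())
            if c > ratio * math.exp(a + b * math.log(d))]

# Synthetic data: counts fall off as 1000 / degree**2, except that
# degree 7 is inflated, as if a page generator gave every page 7 links.
counts = {d: 1000.0 / d ** 2 for d in range(1, 21)}
counts[7] = 400.0

print(find_outliers(counts))  # [7]
```

The inflated degree stands out because far more pages share it than the fitted curve predicts, which is the pattern the quote describes.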

If you are using widely hyped and marketed spam site generator software, most of

it is likely going to be quickly discounted by link analysis algorithms since many](https://image.slidesharecdn.com/seobook53-160322235603/85/Seo-Book-184-320.jpg)

![230

The use of the above listed tools allows me to automate most of the link research

process while still allowing me to remain personal in the link request e-mails I

send out.

Example E-mail (sent to a personal e-mail if they have one available)

Hello <actual person>,

I have been browsing around photography sites

for a couple hours now and just ran across

yours…it’s awesome. [stroke their ego]

Wow! Your picture of that dove—blah blah

blah…bird photography is one of my blah blah

blah. [be specific and show you visited their site]

I took this pic of a flying mongoose here:

[look we are the same]

<optional>I have already added a link to your

site on my cool sites page.</optional> [Pay

them if you feel you need to—link or cash or whatever

other offer. If you feel a phone call is necessary, then

you may want to give them your number or call them

up versus sending an e-mail.]

[you or] Your site visitors may well be

interested in blah blah blah at my site blah

blah. [show them how linking to you benefits them]

If you like my site, please consider linking

to it however you like… [Let them know they have

choices—don’t come across as ordering them to do

something. Also, sometimes just asking for feedback

on an idea gives you all the potential upside of a link

request without any of the potential downside. If they

review your stuff and like it, they will probably link to

it. And by asking for their feedback instead of a link,

you show you value their opinion not just their link

popularity.]

or with this code. [make it easy for them]

I think my site matches well with the theme on

this page:

Either way, thanks for reading this and keep

posting the awesome photos. [reminder ego

stroke]

Thanks,

me

me at myemail.com

http://www.mywhateversite.com

my number](https://image.slidesharecdn.com/seobook53-160322235603/85/Seo-Book-230-320.jpg)

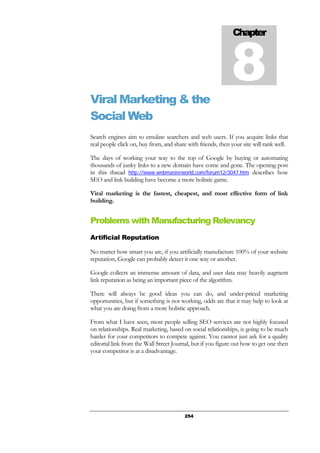

![312

Ads that are disabled from search syndication due to low relevancy and bid price

still appear in the Google contextual ad program, unless their quality score is too

low to appear there as well.

The whole point of quality-based minimum bids is to squeeze the margins out of

noisy low value ad campaigns.

Broad Match, Phrase Match, and Exact Match

Google AdWords and Yahoo! both have different levels of word matching. An ad

targeted to [search term] (with the brackets) will only show up on the search results

page for the query search term. This is called “exact match.” Yahoo!’s exact match is

a bit fuzzy, matching plurals and some common misspellings. Google’s exact

match is more precise, only matching the exact search query.

An ad targeted to “search term” (with the quotation marks) will show up on the

search results page for a query that has search term anywhere in it, in the same order.

This is called “phrase match.”

An ad targeted to search term (no quotation marks or brackets) will show up on the search

results page for any search query that has the words search and term in it. With

broad matching, synonyms to search term may also display your ad. If you decide to

start off with broad matching, you should view the synonyms to ensure none of

them are wasteful. This is especially true with acronyms or other terms with

multiple, well-known meanings.

Google offers all three levels of ad control. Yahoo! offers exact match and

groups phrase match and broad match in a category called advanced match.
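
The three matching levels can be modeled with a small function. This is a simplified sketch, not how either ad platform actually implements matching: the name `matches` is made up, and the model deliberately ignores the synonyms, plurals, and misspellings that broad match or Yahoo!'s fuzzier exact match may add.

```python
def matches(keyword, match_type, query):
    """Return True if an ad on `keyword` is eligible to show for `query`.

    Simplified model of exact, phrase, and broad match. It ignores
    synonyms, plurals, and misspellings, which real ad platforms may expand.
    """
    kw = keyword.lower().split()
    q = query.lower().split()
    if match_type == "exact":
        # The query must be the keyword and nothing else.
        return q == kw
    if match_type == "phrase":
        # The keyword words must appear together, in order, somewhere in the query.
        return any(q[i:i + len(kw)] == kw for i in range(len(q) - len(kw) + 1))
    if match_type == "broad":
        # Every keyword word must appear in the query, in any order.
        return all(word in q for word in kw)
    raise ValueError(f"unknown match type: {match_type}")

for query in ("eat cheese", "I love to eat cheese", "cheese eat"):
    print(query, [m for m in ("exact", "phrase", "broad")
                  if matches("eat cheese", m, query)])
```

Running it shows each query qualifying for progressively fewer match types as it drifts from the keyword: all three, then phrase and broad, then broad only.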

| Examples | Match Type | Will Show Results For | Will Not Show Results For |
| --- | --- | --- | --- |
| [eat cheese] | Exact | eat cheese | any other search |
| “eat cheese” | Google Phrase Match | eat cheese; I love to eat cheese; you eat cheese | cheese eat, or anything else that does not have both words together in the same order as the search term |
| eat cheese | Google Broad (Yahoo! Advanced Match is similar) | All above options and searches such as cheese eating; ate cheddar. | Expanded broad match may also show ads for the following unrelated search query after the original search. If I search for cheddar then search for knitting I might see a cheddar ad near the knitting search results. |

](https://image.slidesharecdn.com/seobook53-160322235603/85/Seo-Book-312-320.jpg)

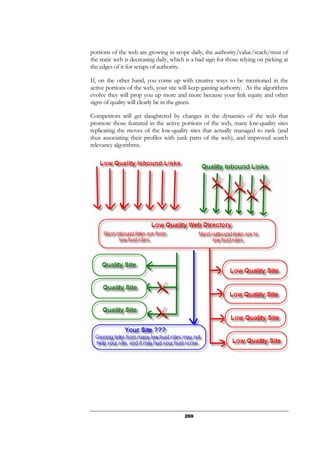

![326

[abc tires] $0.75 10.1% 2.32% $32.01

abc sports tire $0.74 12.4% 2.91% $25.29

abc tire co $0.59 15.1% 2.70% $21.73

[a b c tire company] $0.56 38.2% 11.11% $5.03

abc tire products $0.74 17.3% 22.22% $3.30

Misspellings $0.21 3.6% 1.82% $11.37

Adding modifiers will split up the traffic volumes to make some terms seem more

profitable than they are and make other similar permutations seem less profitable

than they are. The math from the above campaign was compiled over a year with

hundreds of thousands of ad impressions to help smooth out some of the

discrepancies.

You have to consider the cost of building and managing the account when building

out your keyword list, but I tend to like using various matching levels and modifiers

on my most important keywords.

Tools to Create Large Keyword Lists

Tools.SeoBook.com offers

• a free keyword list generator that allows you to create a list of keyword

permutations based on a list of core keywords and keyword modifiers

• a misspelling and typo generator tool

• a keyword list cleaner to filter out bad keywords
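
The general idea behind tools like these can be sketched in a few lines of Python. This is not the code behind the tools listed above. The function names and the typo rules (dropped letter, swapped neighbors) are assumptions chosen to keep the example short.

```python
from itertools import product

def keyword_permutations(core_keywords, modifiers):
    """Combine each core keyword with each modifier, before and after it."""
    phrases = []
    for core, mod in product(core_keywords, modifiers):
        phrases.append(f"{mod} {core}")
        phrases.append(f"{core} {mod}")
    return phrases

def typo_variants(word):
    """Simple typos: drop one letter, or swap two adjacent letters."""
    variants = set()
    for i in range(len(word)):
        variants.add(word[:i] + word[i + 1:])  # dropped letter
    for i in range(len(word) - 1):
        variants.add(word[:i] + word[i + 1] + word[i] + word[i + 2:])  # swapped pair
    variants.discard(word)  # swapping identical letters recreates the word
    return sorted(variants)

def clean(keywords, banned_words):
    """Drop any phrase that contains a banned word."""
    banned = {b.lower() for b in banned_words}
    return [k for k in keywords if not banned & set(k.lower().split())]

phrases = keyword_permutations(["abc tires"], ["cheap", "free"])
print(clean(phrases, ["free"]))
print(typo_variants("tire"))
```

Even two core keywords and a dozen modifiers produce a sizable list this way, which is why the cleaning step matters before uploading anything to an ad account.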

Pay-Per-Call

Google has started testing pay-per-call. For some businesses, pay-per-call will not

have much of an effect, but high-end local businesses (legal, loans, real estate, etc.)

will find their competitive landscape drastically different in the next year.

Google has also launched offline Google AdWords ad management software to

help advertisers manage their campaigns.

Pay-Per-Action Ads

Google offers ads that are distributed on a pay-per-action basis. Some of these ads

are regular text links that can be integrated directly into the page copy of the

publisher.

Google also allows you to set your regular bidding based on a maximum desired

cost per action, but this tool likely increases your cost per conversion, and most

advertisers should be able to do a better job manually managing their bids.](https://image.slidesharecdn.com/seobook53-160322235603/85/Seo-Book-326-320.jpg)
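
For anyone managing bids by hand against a cost-per-action target, the underlying arithmetic is simple. The sketch below uses made-up numbers and hypothetical function names. It only restates the standard relationship that cost per action equals cost per click divided by conversion rate, and is not a description of how Google's tool sets bids.

```python
def cost_per_action(cost_per_click, conversion_rate):
    """Average ad spend needed to produce one conversion."""
    if conversion_rate <= 0:
        raise ValueError("conversion rate must be positive")
    return cost_per_click / conversion_rate

def max_bid_for_target(target_cost_per_action, conversion_rate):
    """Highest cost per click that still meets the target cost per action."""
    return target_cost_per_action * conversion_rate

# Hypothetical numbers: $0.50 per click, 2% of clicks convert.
print(round(cost_per_action(0.50, 0.02), 2))

# To pay no more than $20.00 per conversion at that conversion rate:
print(round(max_bid_for_target(20.00, 0.02), 2))
```

With these example figures, the current cost per action works out to about $25, so the bid would need to fall to roughly $0.40 per click to hit a $20 target.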