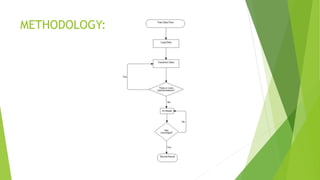

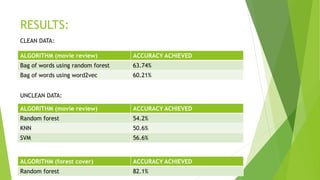

The document discusses sentiment analysis using machine learning, with a focus on natural language processing (NLP) methods. It compares several classifiers for sentiment analysis of movie reviews: Naive Bayes, Random Forest, Support Vector Machines (SVM), and k-nearest neighbors (KNN). The motivation is the need to process large volumes of unstructured social media data. Reported accuracy varies from one algorithm to another, which highlights both the difficulty of sentiment classification and how much the choice of method matters.
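
To make the classification step concrete, here is a minimal sketch of one of the algorithms named above, a multinomial Naive Bayes classifier with add-one (Laplace) smoothing, written in plain Python. It is not the document's own implementation. The toy reviews, the whitespace tokenizer, and the function names (`tokenize`, `train_naive_bayes`, `predict`) are all assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy reviews, invented for illustration only (not from the source).
TRAIN = [
    ("a great and moving film", "pos"),
    ("great acting and a wonderful story", "pos"),
    ("wonderful moving performance", "pos"),
    ("a boring and terrible film", "neg"),
    ("terrible acting and a dull story", "neg"),
    ("dull boring plot", "neg"),
]


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def train_naive_bayes(examples):
    """Count documents per label and word occurrences per label."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab


def predict(model, text):
    """Return the label with the highest log-posterior score."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in sorted(label_counts):
        # Log prior: fraction of training documents carrying this label.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            if word not in vocab:
                continue  # ignore words never seen in training
            # Add-one smoothing keeps unseen (label, word) pairs from zeroing the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train_naive_bayes(TRAIN)
print(predict(model, "a wonderful film"))
print(predict(model, "dull and boring"))
```

A real movie-review experiment would use a much larger labeled corpus, a held-out test set for measuring accuracy, and usually a library implementation. The other classifiers mentioned (Random Forest, SVM, KNN) would slot into the same train-then-predict structure.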