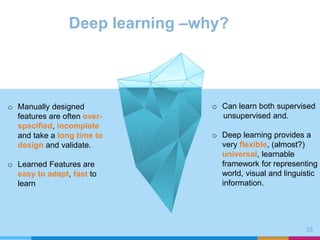

This document surveys approaches to text classification. It covers rule-based classification and statistical machine learning methods such as decision trees, k-nearest neighbors, naive Bayes, hidden Markov models, and support vector machines. It also covers more recent deep learning methods, including convolutional neural networks, recurrent neural networks, bidirectional LSTMs, and hierarchical attention networks, which classify text without manual feature engineering. For each method, the document gives examples of how it has been applied and notes its strengths and limitations.
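
To make the contrast between the first two families concrete, here is a minimal sketch of a rule-based classifier next to a multinomial naive Bayes classifier with add-one smoothing. It is an illustration under stated assumptions, not the document's own implementation: the training sentences, the `spam`/`ham` labels, the trigger-word list, and all function names are hypothetical.

```python
import math
from collections import Counter, defaultdict

# Toy, made-up training data (an assumption for illustration only).
TRAIN = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the project report", "ham"),
]

def rule_based(text):
    """Hand-written rule: label a message spam if it contains a trigger word."""
    triggers = {"free", "prize"}  # hypothetical keyword list
    return "spam" if triggers & set(text.lower().split()) else "ham"

def train_naive_bayes(examples):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        tokens = text.lower().split()
        class_counts[label] += 1
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab

def predict_naive_bayes(model, text):
    """Return the label with the highest log posterior (add-one smoothing)."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in class_counts:
        # Log prior of the class.
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for token in text.lower().split():
            if token not in vocab:
                continue  # ignore words never seen in training
            # Smoothed log likelihood of the word given the class.
            score += math.log(
                (word_counts[label][token] + 1) / (total_words + len(vocab))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train_naive_bayes(TRAIN)
```

On this toy data, `rule_based("free lunch at the meeting")` returns `"spam"` because of the single trigger word, while `predict_naive_bayes(model, "free lunch at the meeting")` returns `"ham"` because it weighs all known words together. This hints at why hand-written rules tend to be brittle, although a four-sentence corpus proves nothing about real-world accuracy.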