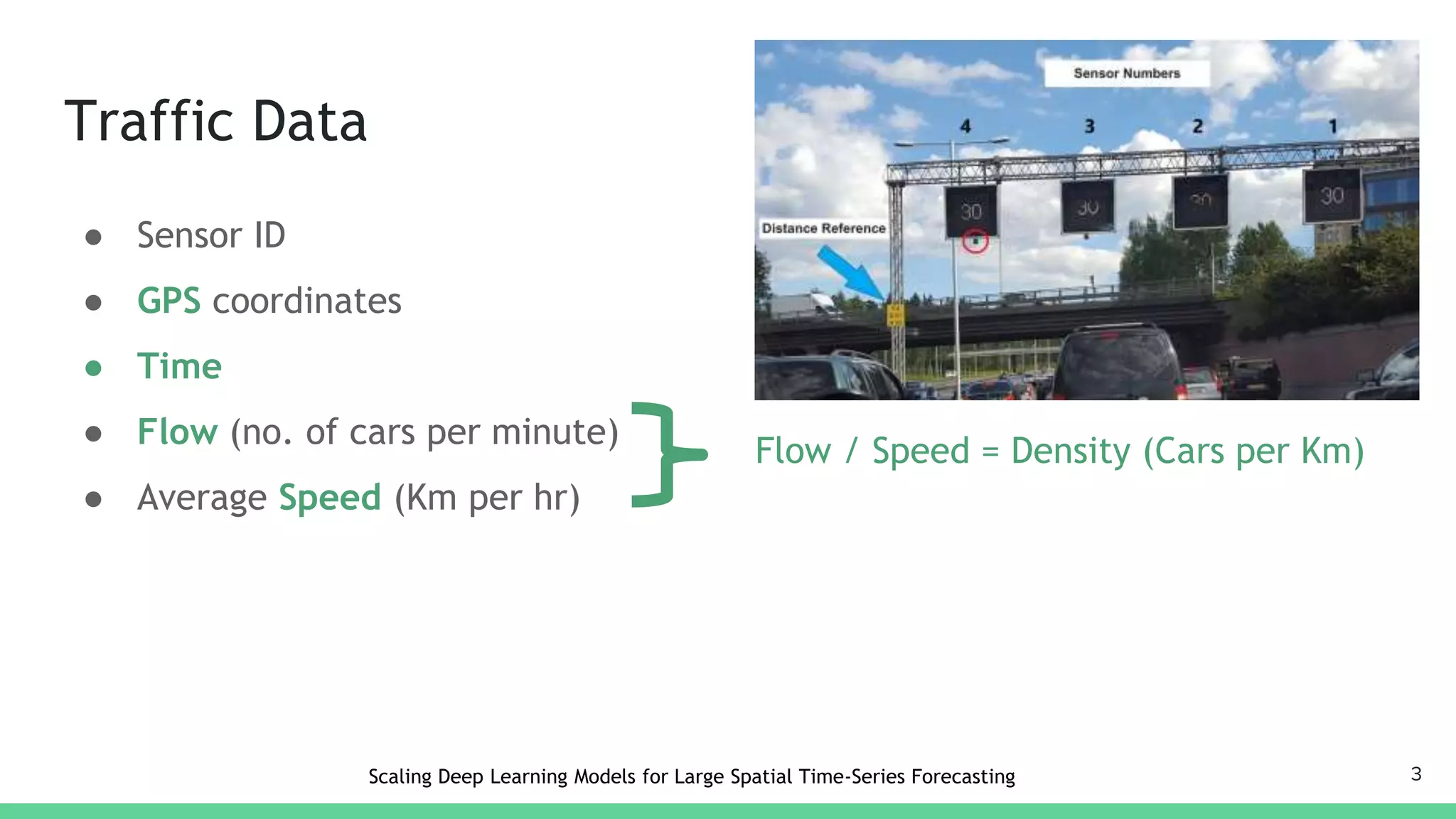

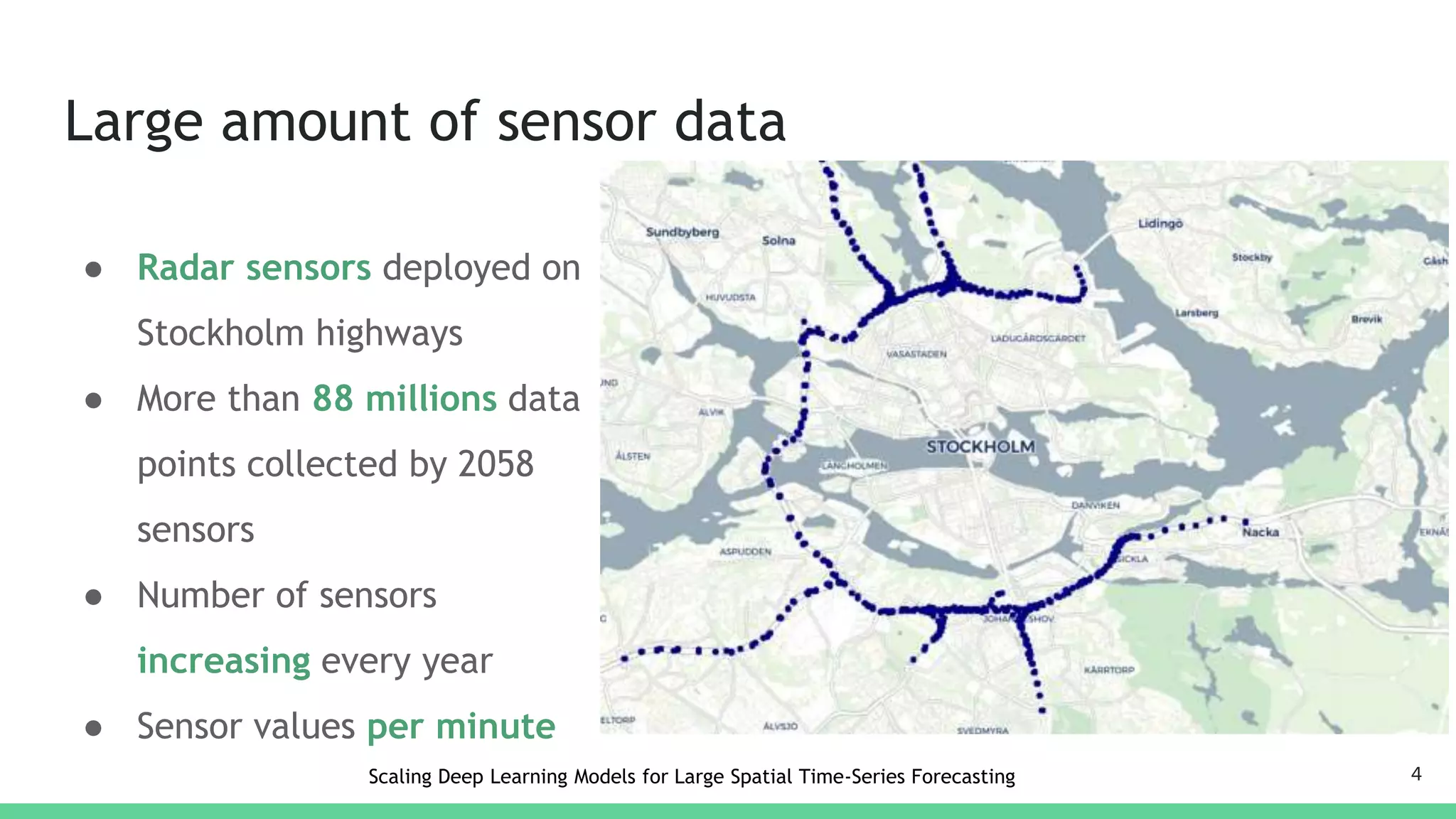

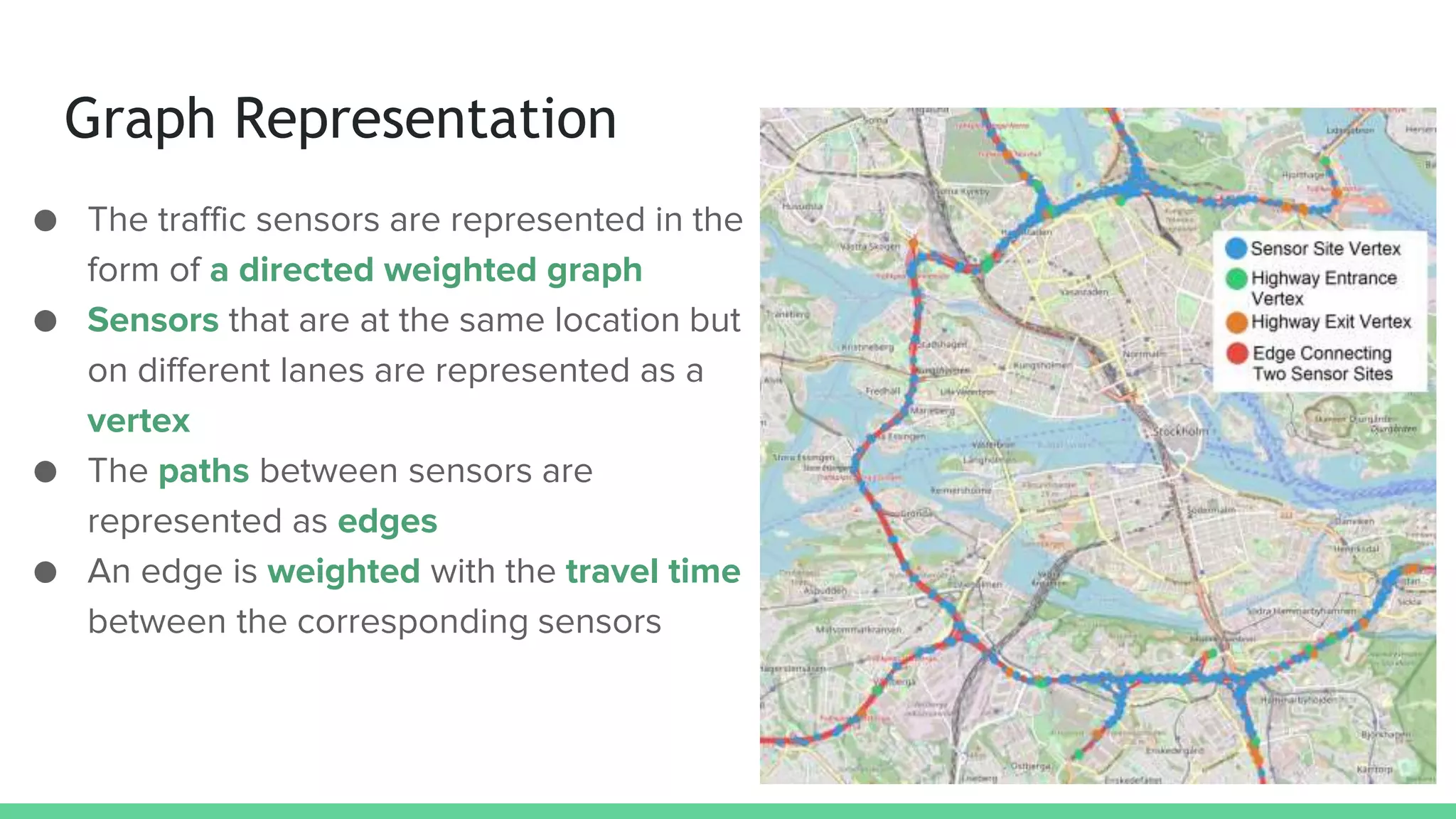

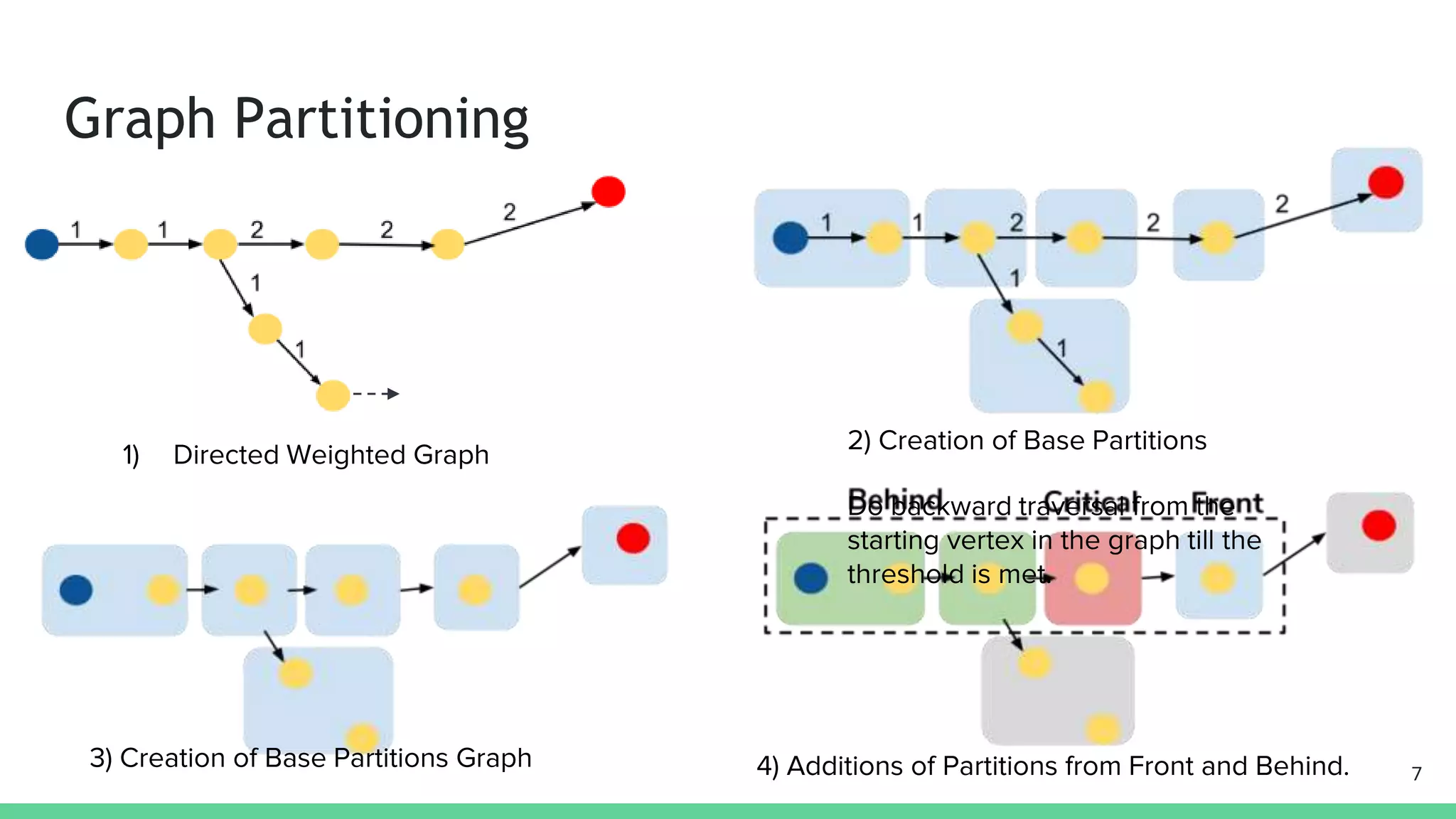

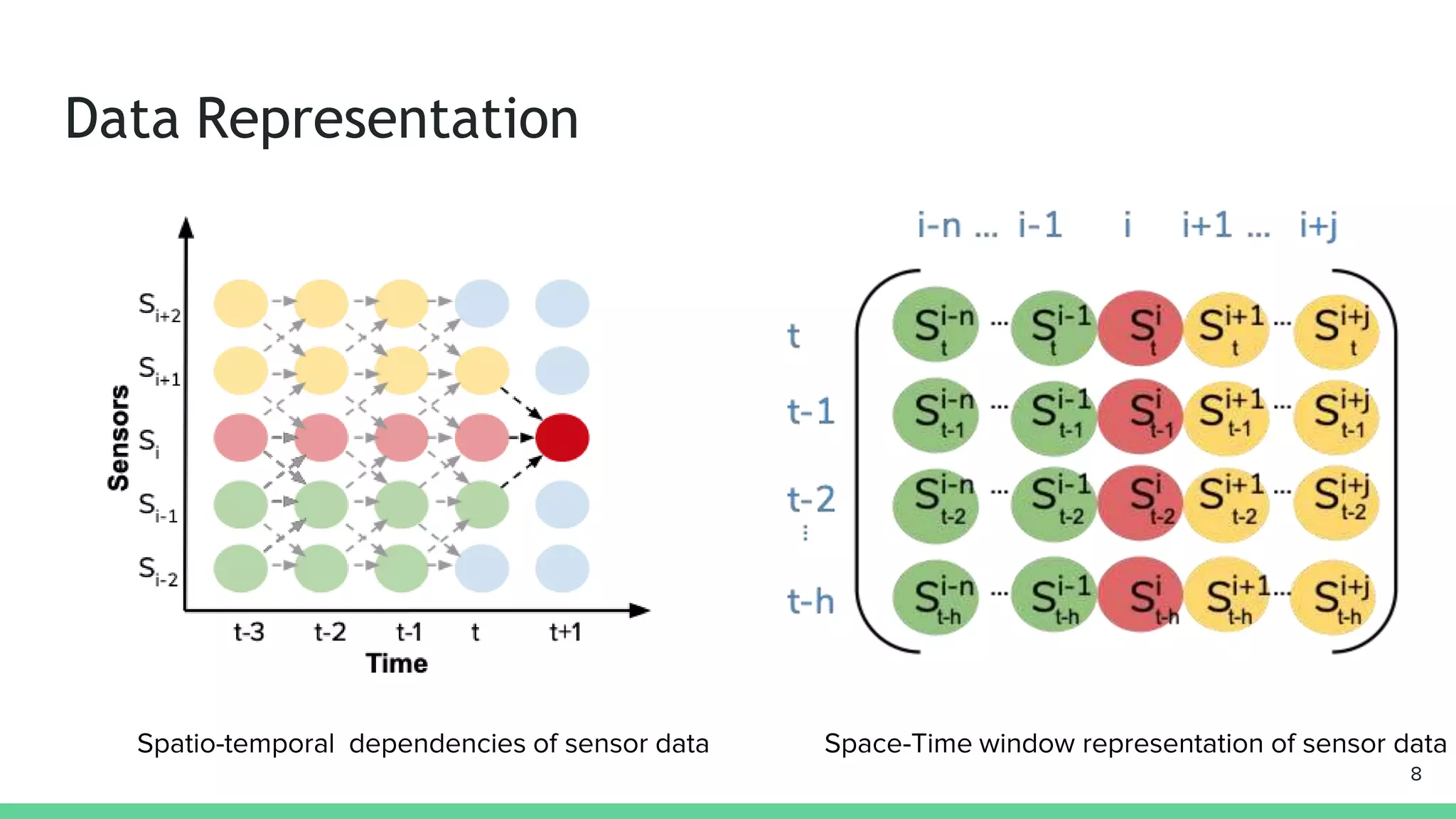

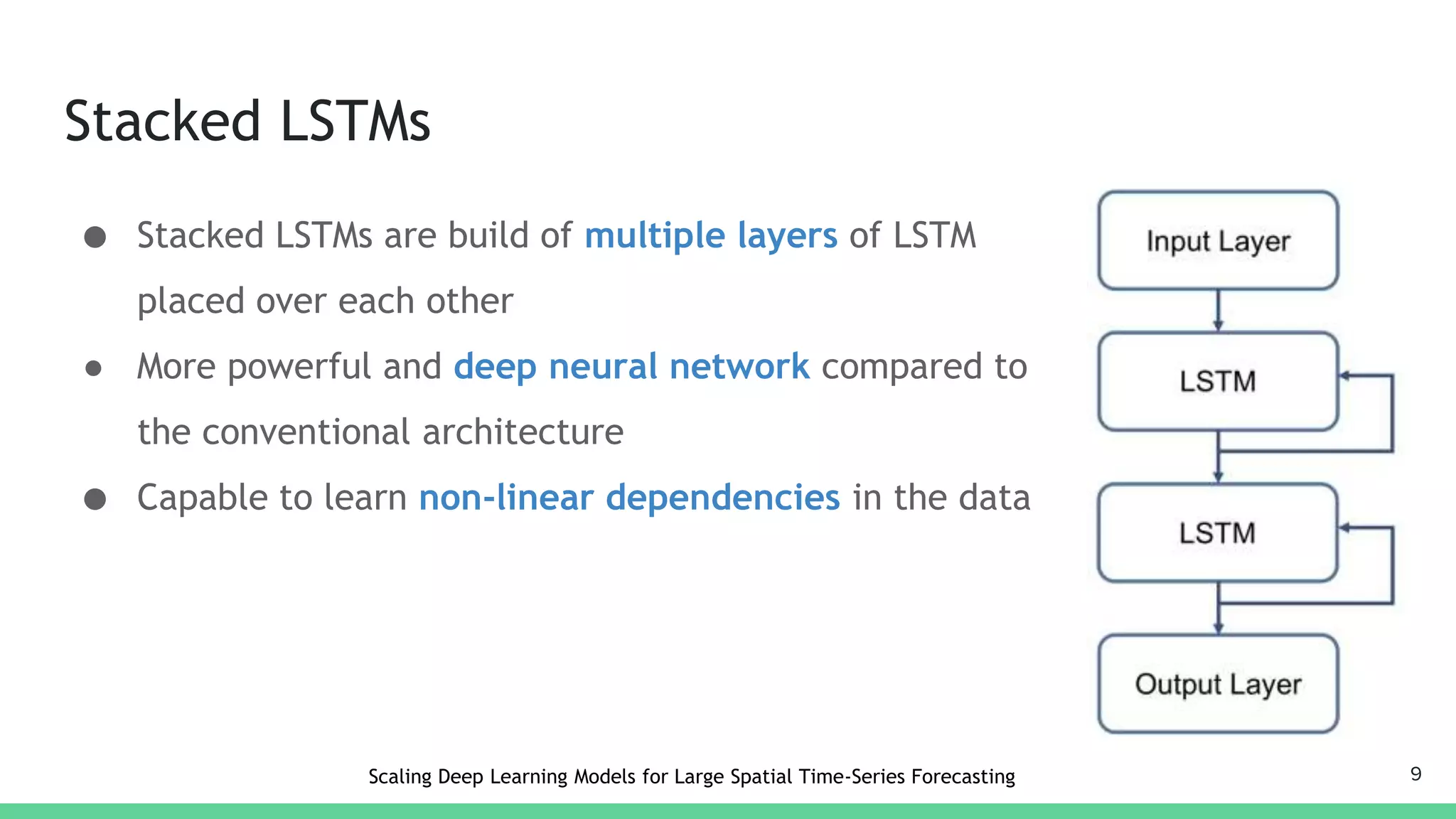

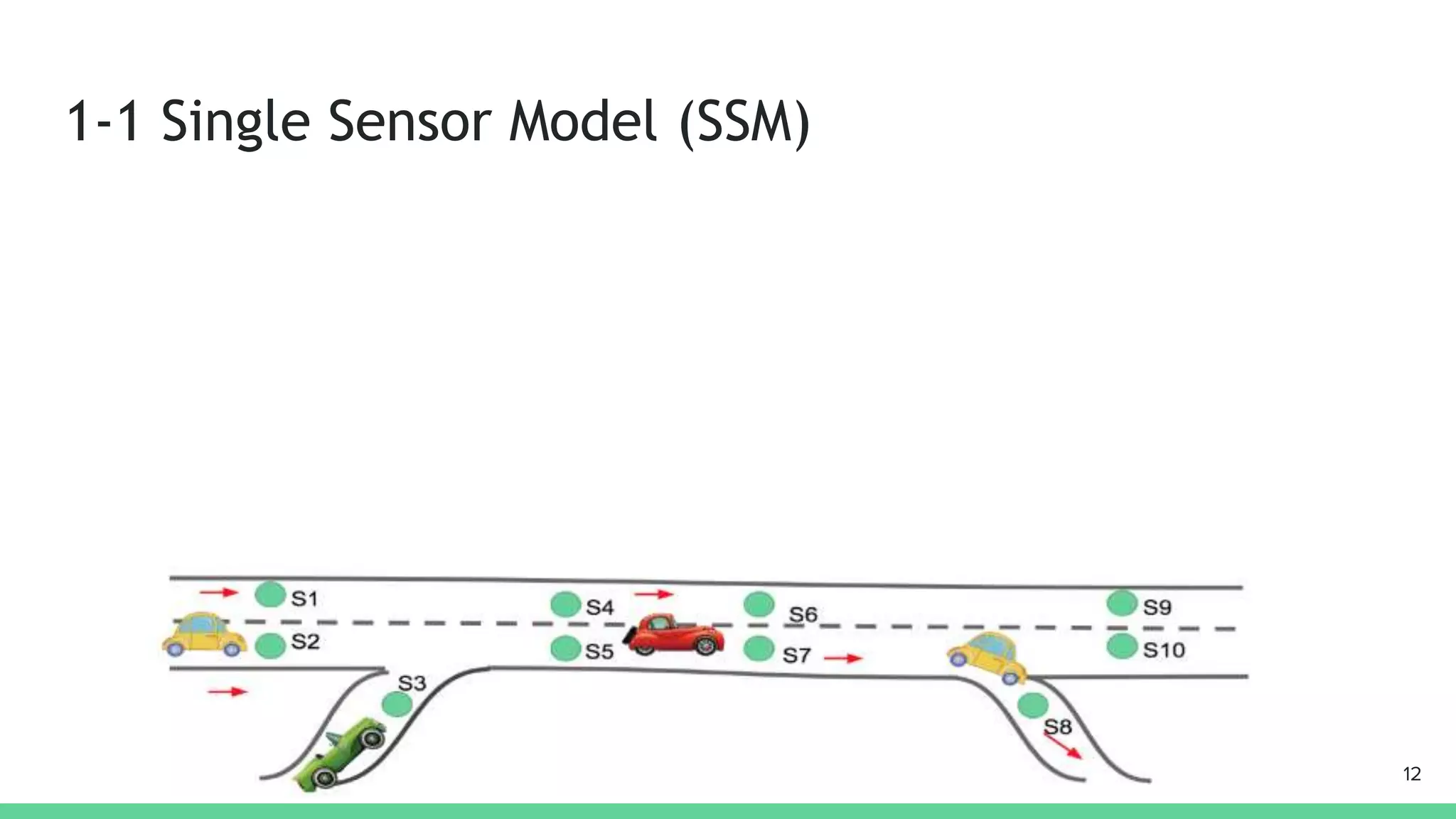

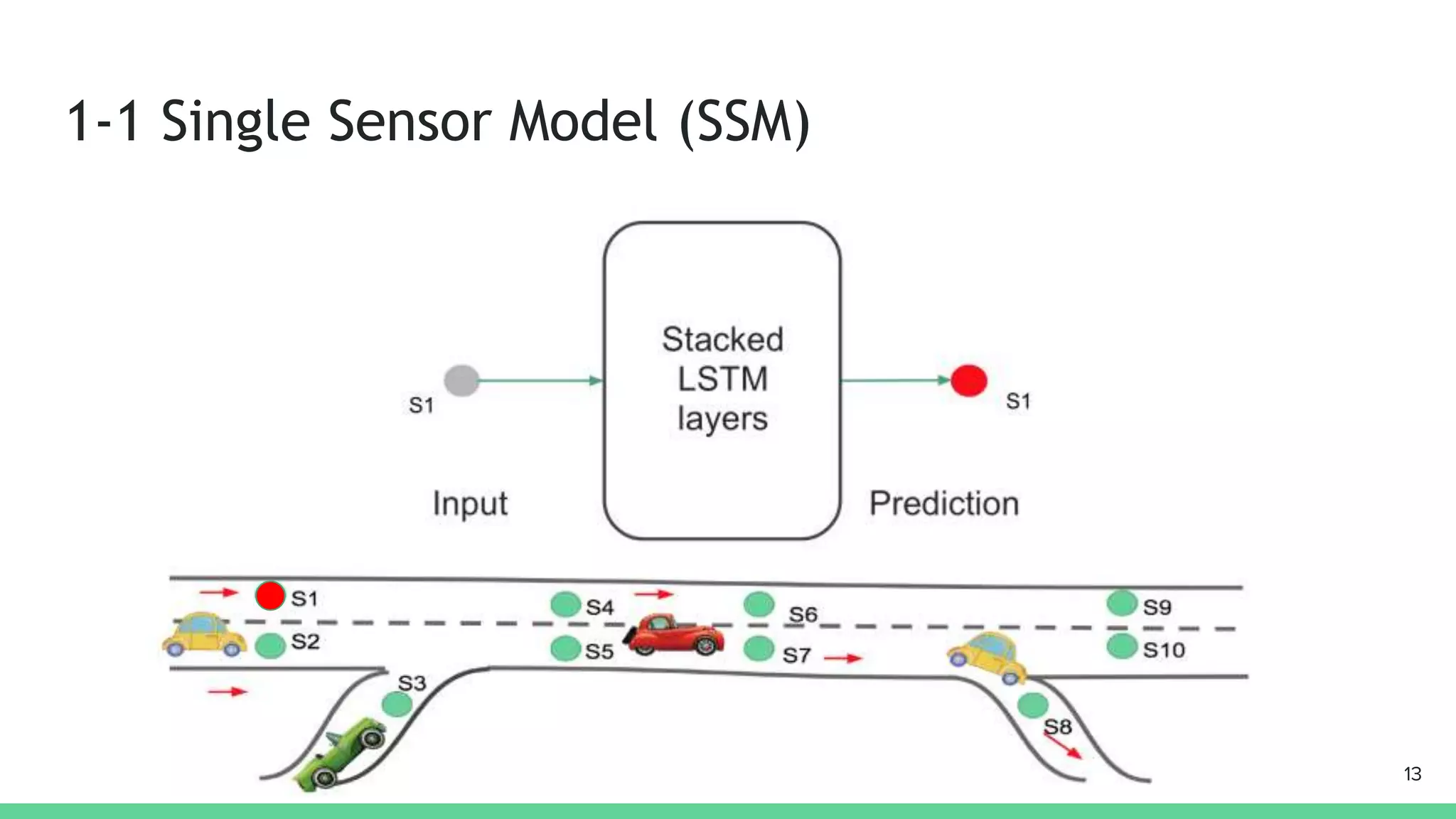

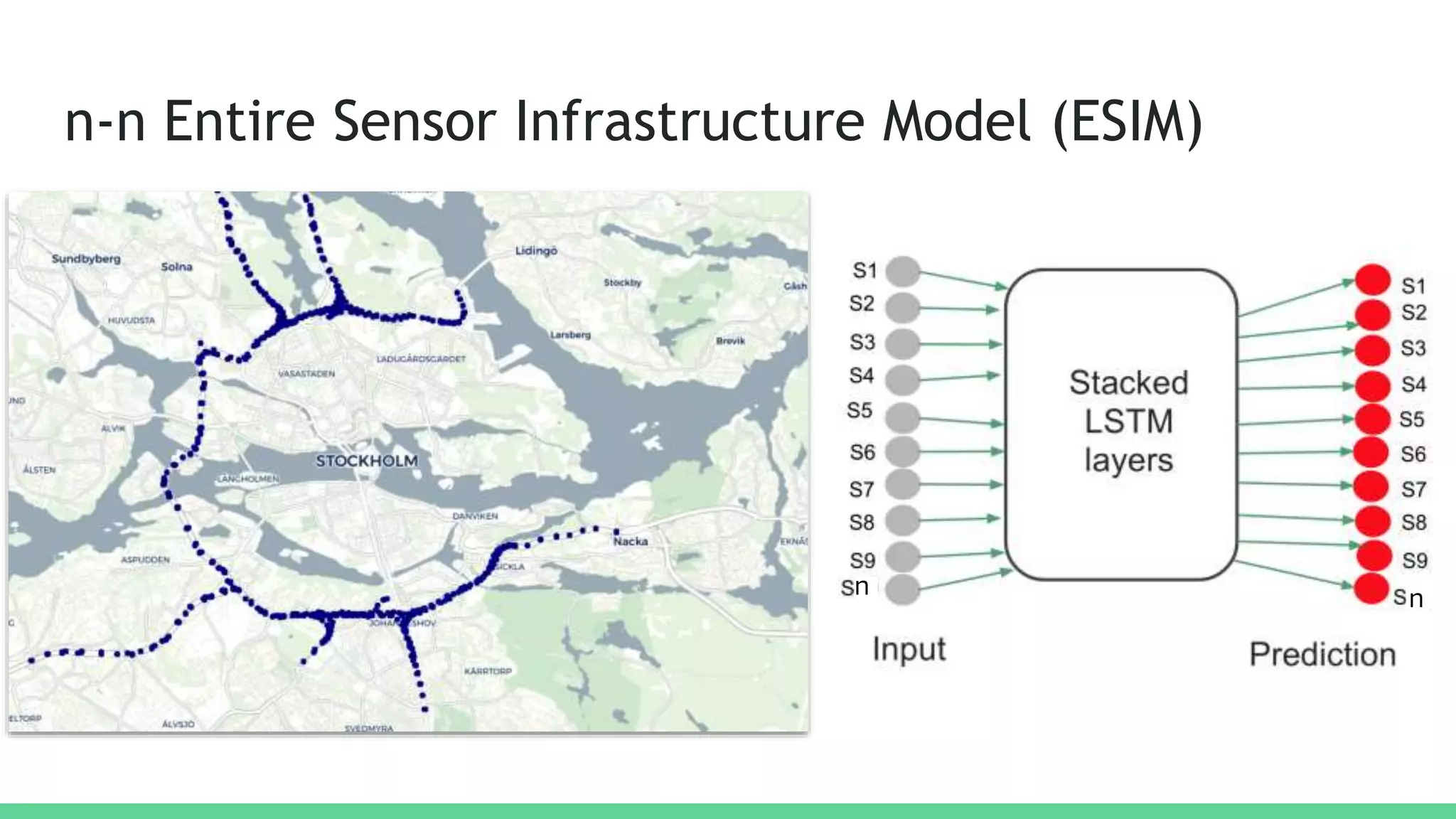

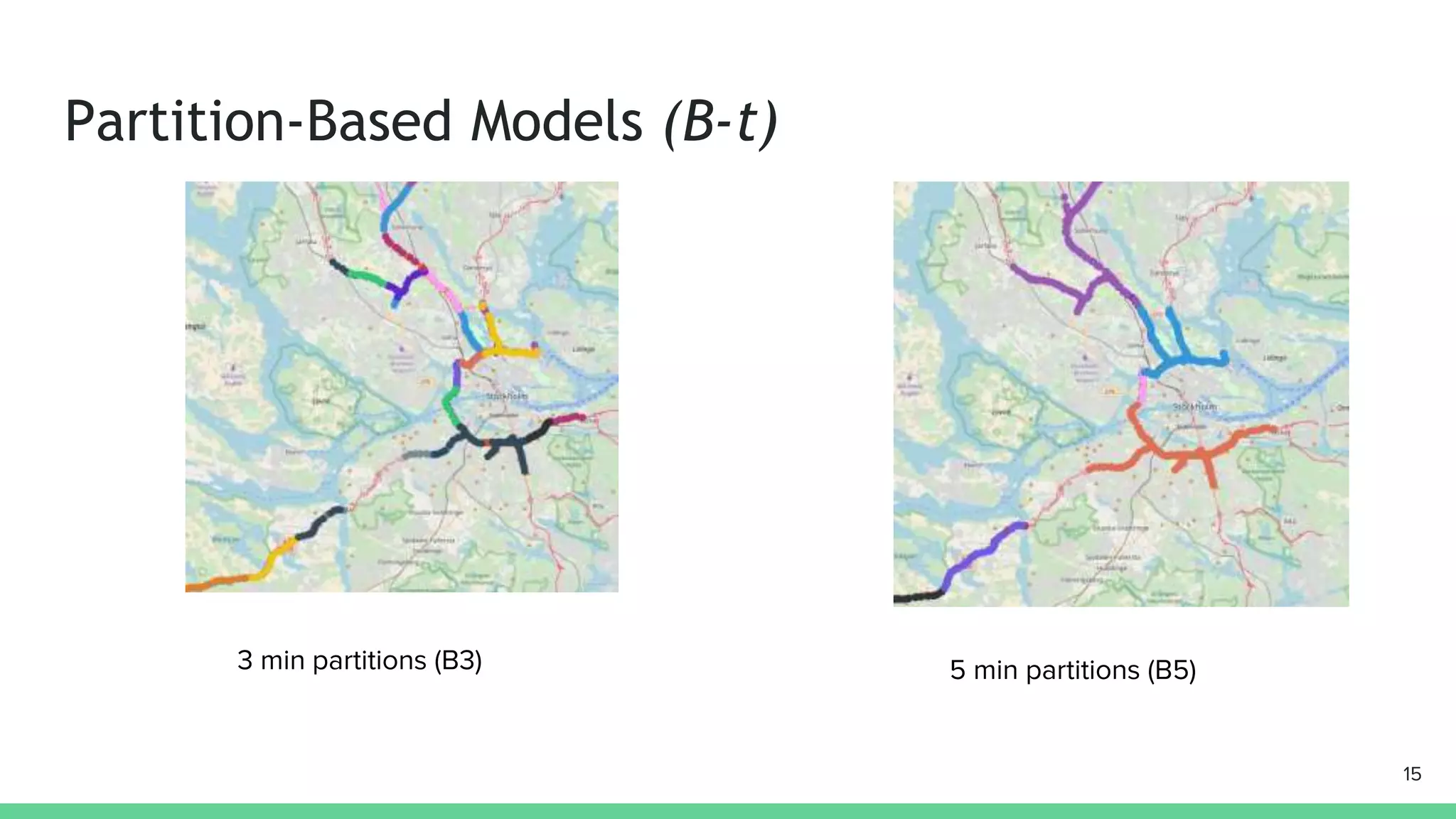

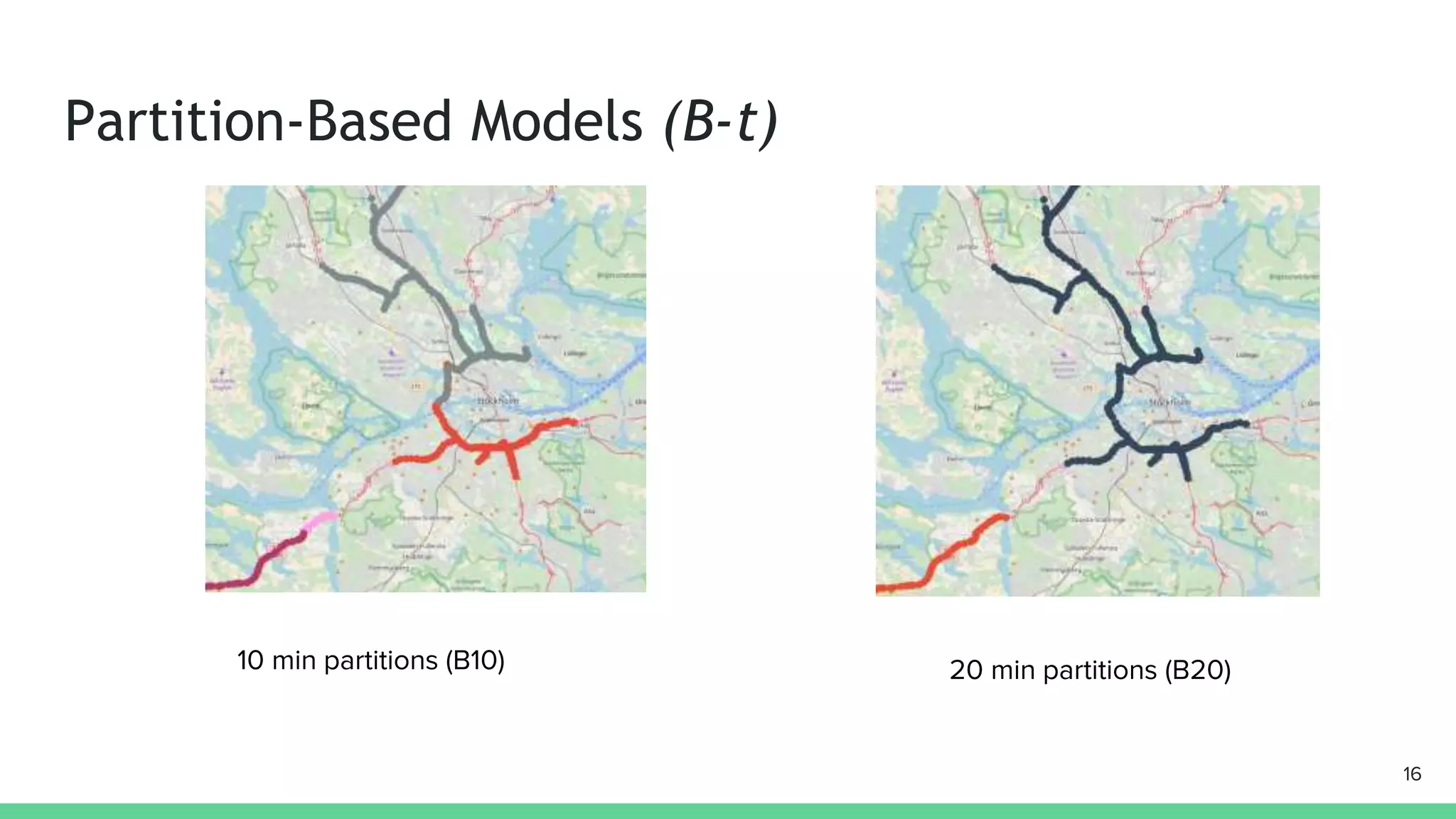

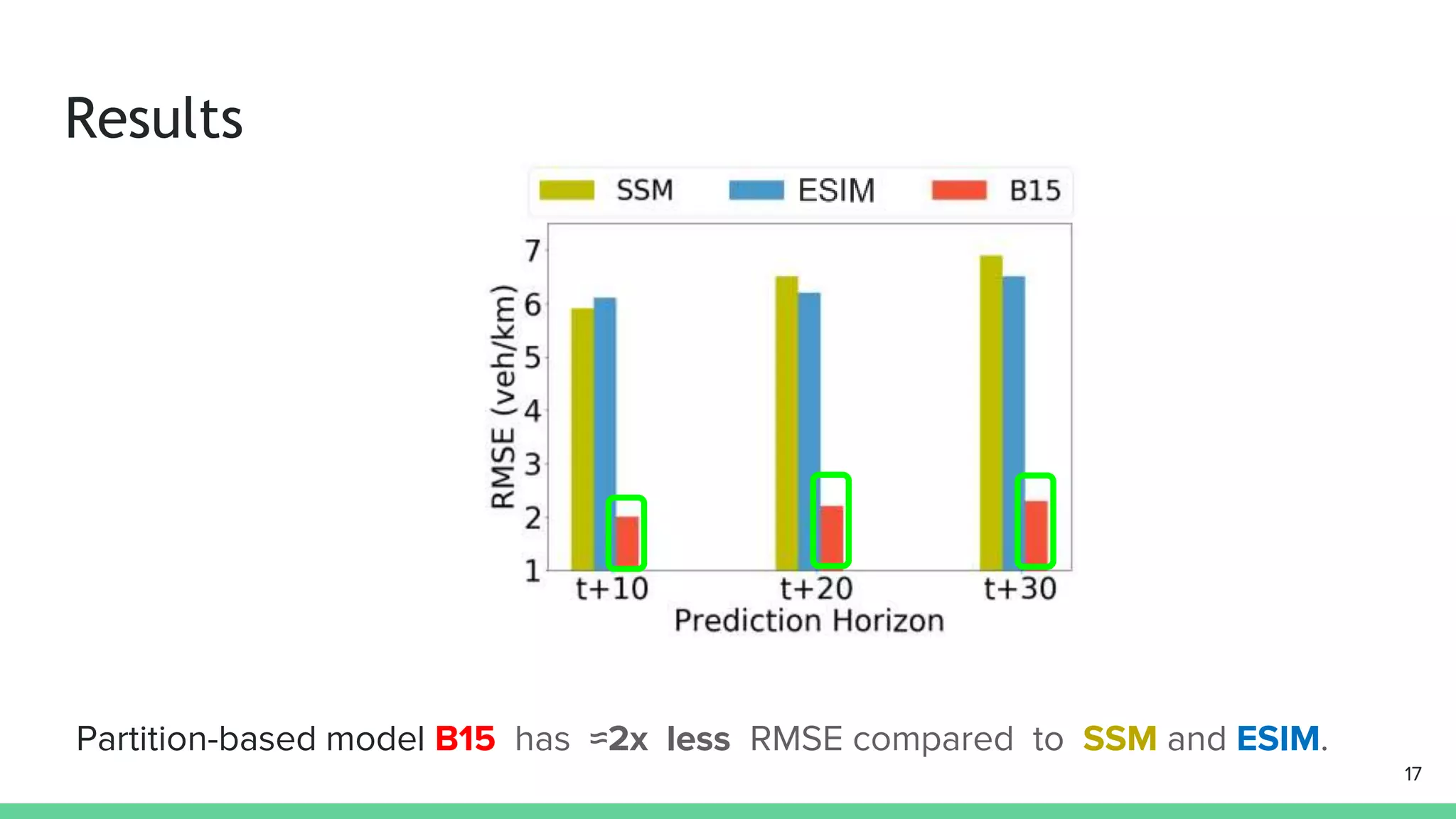

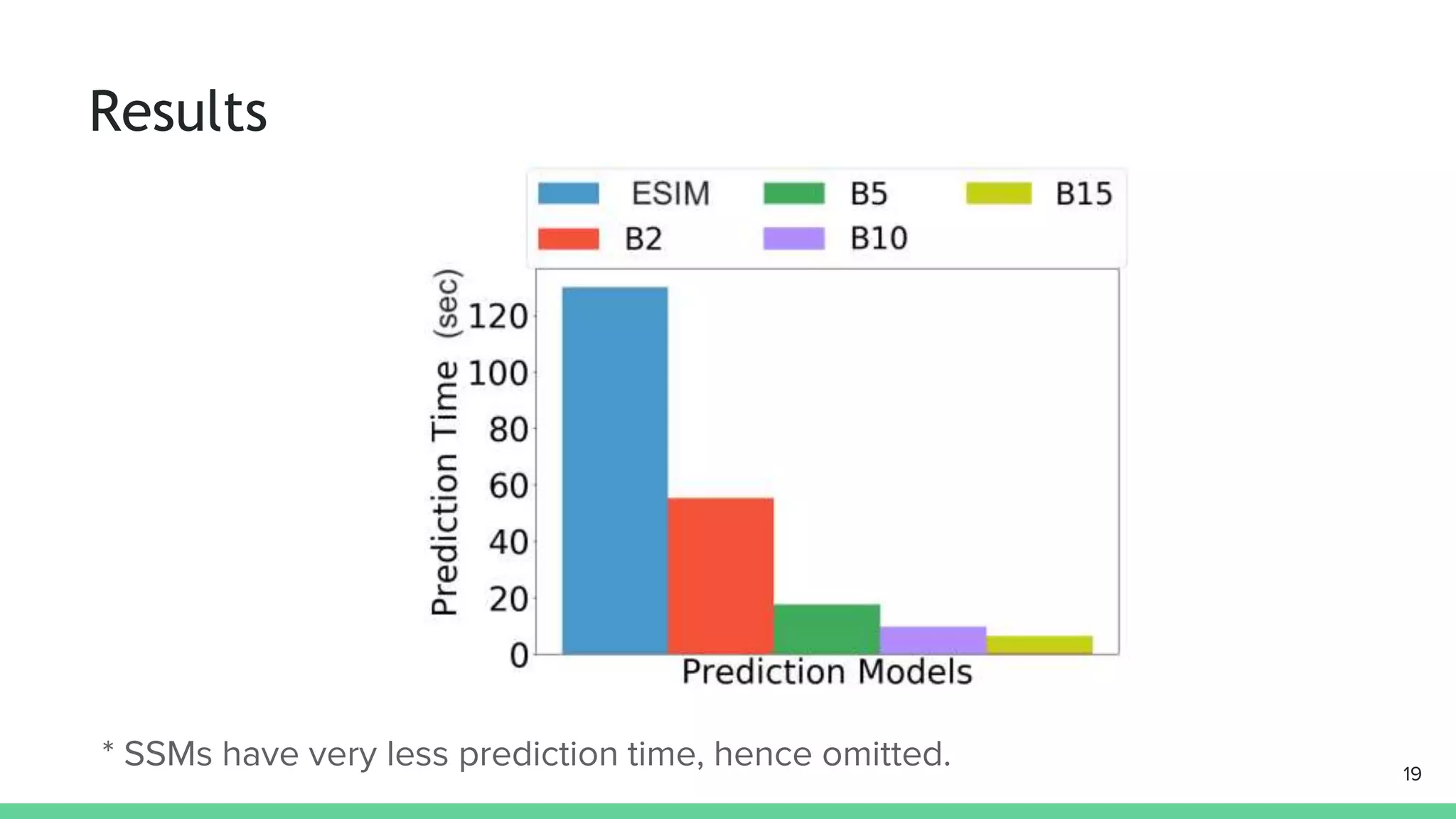

The document discusses the challenges of scaling deep learning models for spatial time-series forecasting, with emphasis on the computational cost of training deep neural networks. It proposes partitioning and distribution as strategies for managing large datasets from traffic sensors, and represents the sensor data as directed weighted graphs. The key research questions are how to partition the data while preserving dependencies and how to optimize forecast accuracy. The reported results show that partition-based models significantly reduce prediction errors compared to single-sensor models.
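
To make the graph representation and the partitioning question concrete, here is a minimal sketch in Python. It is not the method used in the document: the sensor names, the edge weights (read here as a hypothetical dependency strength between sensors), and the partitioning rule (drop weak edges, then group sensors that remain connected) are all assumptions chosen for illustration.

```python
from collections import defaultdict


def build_graph(edges):
    """Build a directed weighted graph as {source: {target: weight}}."""
    graph = defaultdict(dict)
    for src, dst, weight in edges:
        graph[src][dst] = weight
        graph.setdefault(dst, {})  # make sure sensors with no outgoing edge appear
    return dict(graph)


def partition(graph, min_weight):
    """Group sensors linked by edges with weight >= min_weight.

    Assumed rule for illustration: edge direction is ignored when grouping,
    so each partition is a connected component of the thresholded graph.
    """
    neighbors = {node: set() for node in graph}
    for src, targets in graph.items():
        for dst, weight in targets.items():
            if weight >= min_weight:
                neighbors[src].add(dst)
                neighbors[dst].add(src)

    seen, parts = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        seen.add(start)
        stack, component = [start], []
        while stack:  # depth-first traversal of one component
            node = stack.pop()
            component.append(node)
            for nxt in neighbors[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        parts.append(sorted(component))
    return parts


# Hypothetical sensors and dependency weights, invented for this example.
EDGES = [
    ("s1", "s2", 0.9),
    ("s2", "s3", 0.8),
    ("s3", "s4", 0.1),  # weak link, cut by the threshold below
    ("s4", "s5", 0.7),
]

partitions = partition(build_graph(EDGES), min_weight=0.5)
print(partitions)
```

With these invented weights, the weak link between `s3` and `s4` is cut, so the strongly dependent sensors stay together in two groups. Each group could then be assigned its own forecasting model and trained independently, which is the kind of trade-off between preserved dependencies and training cost that the research questions describe.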