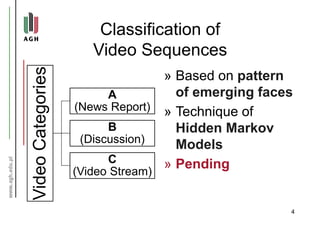

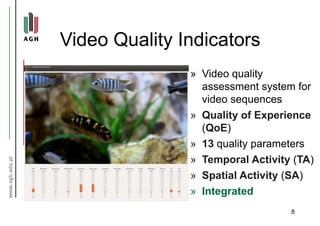

The document describes a video summarization framework whose components include shot boundary detection, video sequence classification, detection of talking-head shots, detection of day and night shots, and video quality indicators. It also covers event recognition, the indexing of more than 5,000 videos comprising over 27 million frames, the development of summarization components, and the evaluation of summarization algorithms with collaborators.
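
The summary does not say how the framework detects shot boundaries, so the following is only an illustrative sketch of one common approach: comparing intensity histograms of consecutive frames and flagging a hard cut when they differ sharply. The function names (`histogram`, `detect_shot_boundaries`), the frame representation (a flat sequence of grayscale values in 0..255), the bin count, and the threshold are all assumptions made for this example, not details taken from the document.

```python
from typing import List, Optional, Sequence

# Assumption: a frame is a flat sequence of grayscale intensities in 0..255.
Frame = Sequence[int]


def histogram(frame: Frame, bins: int = 16) -> List[float]:
    """Return a normalized intensity histogram for one frame."""
    counts = [0] * bins
    for pixel in frame:
        # Map 0..255 onto bin indices 0..bins-1.
        counts[min(pixel * bins // 256, bins - 1)] += 1
    total = len(frame) or 1  # avoid division by zero on an empty frame
    return [count / total for count in counts]


def detect_shot_boundaries(
    frames: Sequence[Frame],
    threshold: float = 0.5,  # hypothetical value; would need tuning on real video
    bins: int = 16,
) -> List[int]:
    """Return each index i at which frame i appears to start a new shot.

    Only abrupt cuts are considered; gradual transitions such as fades
    or dissolves would need a different test.
    """
    boundaries: List[int] = []
    previous: Optional[List[float]] = None
    for index, frame in enumerate(frames):
        current = histogram(frame, bins)
        if previous is not None:
            # L1 distance between normalized histograms lies in [0, 2].
            distance = sum(abs(a - b) for a, b in zip(previous, current))
            if distance > threshold:
                boundaries.append(index)
        previous = current
    return boundaries
```

As a usage example, calling `detect_shot_boundaries` on three uniformly dark frames followed by two uniformly bright frames reports a single boundary at index 3. Real footage would likely call for color histograms, an adaptive threshold, and separate handling of gradual transitions.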